Automatic vectorization of satellite images: one model - the first two places

Hello!

In this article I want to share with you the story of how the same model architecture brought two victories in machine learning competitions on the topcoder platform at once with a month interval.

The following competitions will be discussed:

- Urban 3d mapper - search houses on satellite imagery. The competition lasted 2 months, there were 54 participants and five prizes.

- Spacenet: road detection challenge - search for a graph of roads. The decision was also given 2 months, including 33 participants and five prize positions.

The article describes the general approaches to solving such problems and the peculiarities of implementation for specific contests.

For comfortable reading of the article, it is desirable to have a basic knowledge of convolutional neural networks and their training.

A little background

Last year, after watching several machine-training sessions, I had the idea to participate in competitions. My first contest ended with an unexpectedly high result for me, which added even more confidence and motivation. So, I quickly became interested in competitive machine learning, especially image processing tasks.

The first for me was a competition for counting sea lions in the Aleutian Islands. At that time, my knowledge was only enough to take other people's decisions as a basis, to understand them and try to improve them. This is how I recommend doing to anyone who wants to learn something and / or enter the competition. Reading books until blue spots without the practical use of knowledge is a bad idea. Thus, I trained the detector, which worked very well, and brought me the 13th place out of 385 participants, to which I was incredibly pleased. You can read more about the task in the next post by Artem .

The next was a competition for the classical classification of satellite images, in which I became acquainted with classical machine learning and many different architectures of neural networks. This time we worked in a team and finished in 7 positions out of 900 teams. Article based on the competition .

After that, I was ready to dig deeper and engage in more serious research, which played a role in my first victory in the machine learning competition - Carvana image segmentation challenge. You can read more about the decision in our interview in a blog post on kaggle . (And there is also a link to the source code)

A few words about sites and competitive machine learning

The leader among the platforms for the competition for machine learning is kaggle. But there are other platforms on which equally interesting contests take place with good prizes. In my case, both competitions were held on the topcoder platform. The differences between the platforms can be the subject of a long discussion, but now I want to focus your attention on some entertaining features that deserve special attention.

First, the time constraints on learning patterns and prediction. For Urban 3d - 7 days for training at p2.xlarge and 8 hours for prediction on the same instance. For Spacenet: road detection, a decent car with a 4 titan xp was allocated to the training, but there were similar limitations for the prediction. In my opinion, these are great limitations that allow you to apply the solutions to problems in real conditions, unlike many solutions that are on the top of kaggle. For example, to reproduce our solution in the competition Carvana image segmentation, you need either 20 GPUs and a week of time, as we did, or one Titan X and 90 days. Of course, it is impossible to apply in practice. Even the Carpathians agree with me .

- Secondly, at the end of the competition, to verify the decision, the organizers ask all of the top 10 public leaders to arrange the code in a docker container and hand over the containers. This is a controversial point, since the best and simplest solution can not get into the top places, but leave the competition customer. However, this completely eliminates cheating, which means better models come to victory more often.

Due to the fact that the topcoder platform is not so well-known and convenient, and also because of these restrictions, the number of participants in the competition is not very large, but there are motivated professionals with whom you can compete for one place.

Setting goals

The task of Urban 3d was to segment roofs of houses, at first glance, the classical task of semantic segmentation. But in fact, the task was for instance segmentation, that is, houses standing nearby should be defined as separate elements. And the metric was heavily penalized for "sticky houses". The metric was as follows:

We take all the found connected components and for each of them we look for in the markup the component with which the largest Jacquard index (intersection over union, the ratio of the intersection to the union), and if , the component is written to (true positive). All components for which no matching is found are written to . All components in the markup for which no components were found in the prediction are written in .

And the final metric is:

It turns out that for each stuck together building we get if this building in markup consists of two components and we are not lucky to exceed the threshold of 0.45 for none of them. (hereinafter in the picture in blue white yellow )

The task of Spacenet: road detection looked much more interesting. Customers came up with a metric that takes into account the connectivity of the resulting graph of roads, implemented it in java and python, described in detail in medium. [ 1 ], [ 2 ], [ 3 ]. This metric was intrigued, I wanted to apply not only the skills of learning networks, but also classical computer vision and graph theory.

As a result, it turned out that it is very unstable and sensitive to errors.

Urban 3d - house recognition on satellite imagery

First, a few words about markup, which could cause resentment. It was made poorly - often at home in the markup were where they actually did not appear. But this was soon reported to the organizers, and they promised to correct the mistakes in the final test. In fact, the problem markup is found in many tasks, so I didn’t fight it in the training set, the network should cope on its own. However, if there is an opportunity to correct something, it is better to do it.

We now turn to the input data of the problem:

- RGB images;

- DTM (digital terrain model);

- DSM (digital surface model).

If everything is clear with RGB, then DTM / DSM is something new. Roughly speaking, DSM is the elevation map of all objects on earth, and DTM is the elevation map without objects. From satellite images, they are obtained in very poor resolution, but the data is new and correlates with RGB (standard three-color image) rather weakly, so they had to be used. Therefore, the fourth channel in the network included (DSM-DTM) / 9. Subtract them to get the height of the objects and remove the height where the land is. Kostantu can be obtained in different ways, for example, take the 98th percentile (although I just took the average maximum). It is needed to bring the input in the same order as the RGB (nominated in the interval 0-1), so that not one of the channels dominates in the first convolution.

The first convolution deserves special attention. The fact is that in the past mainly self-made Unet-like architectures were used, which do not use pre-trained encoders. Read more about them here and here . But after the Carvana competition, everything changed. The architectures, in which the encoder is a network pretended for imagenet, appeared on the scene, you can read about it in detail in ternausnet . Also after this competition, the Linknet network with various resnet-like encoders became popular. That Linknet was refined and taken as the basis for solving the problem.

Encoder made resnet34, as it has great expressiveness and speed, and also requires a bit of video memory. I tried other encoders, but with resnet34 convergence was better. A review of neural network architectures is beyond the scope of this article, but you can read about them in the following good review .

A sequence of blocks was used as a decoder: 3x3 convolution, upsampling, 3x3 convolution. Hereinafter, “convolution 3x3” is a convolution operation with a 3x3 yard, pitch (stride) 1 and padding 1. Experiments have shown that upsampling works no worse than transposed convolution (which also generate unwanted artifacts ), but needs in a decreasing or incremental convolution of 1x1, which was on linknet, there is none at all, since the learning speed suited me. At the 3x3 convolution input, the signs from the corresponding resnet layer come through a skip connection, connected via the depth channel to the output of the previous layer. All this can be replaced with a simple operation +, since the inputs have the same dimension, sometimes I do.

The final network diagram (after all convolutions, except the first and last - relu):

Let's return to the first convolution. Only 4 channels, but with the pre-encoder encoder, the input dimension along the channel axis is 3. It was possible to go along several paths. Someone proposed to initialize with zeros, someone chose to rescale the available weights. I chose a different way.

To begin with, I taught 5 eras without 4 channels, as if warming up the weights of all the other layers. Then he completely reinitialized the first layer and started feeding 4 channels to the input. I did not change the learning rate and did not freeze the weight. Of course, it was worth freezing the weights of all layers, except the first. But this was a good convergence, and there was not enough time for additional experiments.

As a loss function (loss), a well-proven bundle was chosen: . In this competition, the weights were 0.5 / 0.5, but in the last two epochs, slightly more weight was given in bce. Visually, this slightly improved the result and the separability of the houses, about which the narration will continue. Usually, the dice coefficient tries to sharpen edges and give more confident predictions, and binary cross entropy holds it back.

Now let us recall that we are solving the problem of instance segmentation, and when binarizing along the threshold most of the houses will merge. Usually, where there is a lack of network abilities, tricks from classical computer vision are used, which was eventually used in this task. It was noticed that the network almost always predicts closer to the center of the house with a very high probability, and closer to the border its confidence falls.

But binarization on a high threshold will not give the desired result - at home they lose the effective area, and the metric is not very happy about it. Therefore, binarization was carried out on two thresholds, with the support in the form of the watershed algorithm. The algorithm, as it were, pours out water from the seeds, which is distributed over the levels of the same values. And where water from different seeds is found, a dividing line appears. Pixels obtained by binarization on a higher threshold were the watershed sided, and on the lower threshold the separation space. As a result, the area of houses was taken from a low threshold, and the separation - from a high. All houses less than 100 pixels (about 3 by 3 meters) were also removed, since on average they had a not very high intersection with marking.

As a result: one network for 4 folds (for correct validation on out of fold predictions), a resten34 pretreated encoder and an almost classical Unet-like decoder. Easy post-processing of the result by the watershed algorithm, learning tricks and some luck.

I came to the second position on the public leaderboard to the finish line, which was fine with me because of the uniform distribution of prizes (11-9-7). On a private was a new city, and I was transferred to 1 place.

Spacenet: road detection challenge - Road recognition and graph construction

By tradition, let's start with markup and data. Unfortunately, it was not without difficulties.

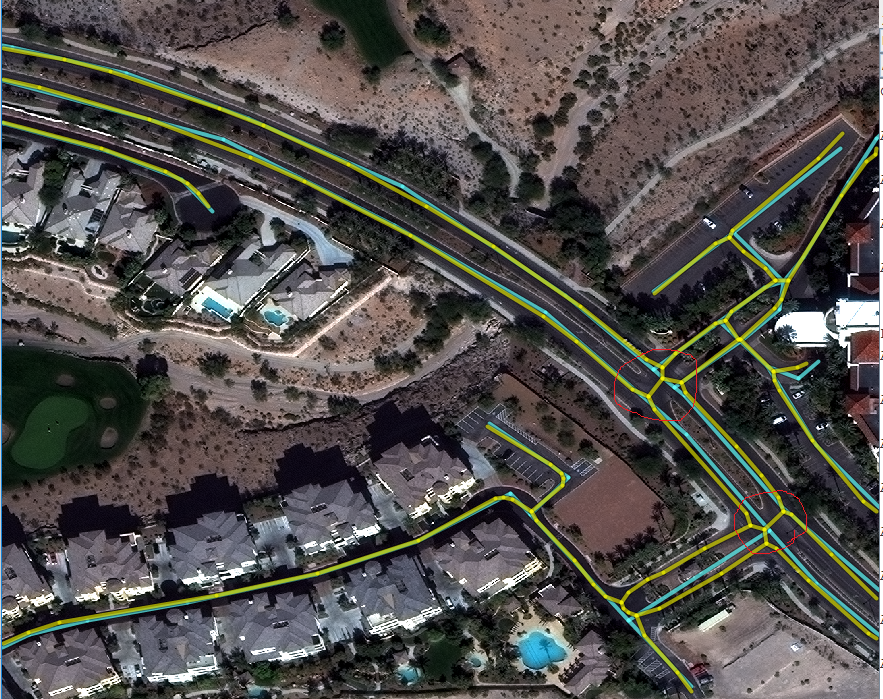

The organizers promised quality markup, but still there were serious flaws that greatly influenced the metric. For example, in the picture below, the highway lanes are not connected at the junction points, which immediately led to a 30% drop in the score, although in general the graph looks good. Here and further, blue is the markup, yellow is the predicted graph.

Now let's look at the data, which was very much in terms of volume, but not much in terms of meaning. The fact is that the organizers laid out everything that they have - MUL, MUL-PAN, PAN, RGB-PAN. I visually examined the data and decided to train only on RGB, because I did not find useful additional information in other channels. After all, the simpler the data from the point of variance, the easier it is to teach the model to generalize to them. RGB I normalized to mean min / max. To build the masks, the organizers suggested rendering the roads from geojson to the images; nothing had to be changed here. There were ideas on what to do with different widths of the road, depending on the type, but everything worked and so.

All the data I fed my past network, without changing anything in the architecture. At the basic postprocessing (about which later), the day after the start of participation in the competition, I received 610 / 620k (~ 6-8 places at the end of the competition). As usual, having banished training with different hyper parameters several times - it increased the number of training epochs and set weights 0.8 / 0.2 for loss. This gave the second base mark of 640k, only post-processing gave further improvements.

Building a graph from a pixel probability map is a nontrivial task. Fortunately, on the Internet, the very first link to google found the sknw package, which I managed to adapt to my needs. He receives a skeleton at the input, and at the output produces a multigraph - very convenient. It only remained to add vertices to where the road changes its direction, for which the Douglas-Pecker algorithm was used, or rather its implementation on opencv. Also, roads along the edges of the picture had a strong influence on skor. The fact is that if there is a part of the road on the edge of the picture, the algorithm will consider it expensive, but there may not be a road if the width of the real road is more than 2 times.

It would be correct to use neighboring tiles for this, but this created certain difficulties, since it was not clear whether they would be available at the testing stage. Plus, my lead in LB was already huge, so I took the path of least resistance and just left cutting off all the roads that are less than 2 pixels, which on average worked well. In addition, there were small improvements of the graph - remove short terminal edges, connect the edges, which lie almost in the same straight line and are close to each other, but all this did not give a significant increase in the metric.

Total: only RGB data (the most common in the world, that is, the solution should be easily scaled to other sources), the same network, the same tricks, only serious post-processing was added, which involved building a skeleton using a binary mask, transforming the skeleton into a graph, projecting the graph in segments, working with borders and some not very useful tricks.

About validation

In all competitions, it is very important to validate correctly so that in the end there is no insult. The fact is that simply achieving the highest possible result on a leaderboard is not always enough - it is also necessary that the solution be stable and work well not only on data from the public leaderboard, but also on others - which are hidden from the developer and even get the metric value on them does not seem possible. For validation, I use the standard k-fold cross validation technique. It lies in the fact that the training data set is divided into k parts (I have k = 4) and k models are trained as follows: each model learns on k-1 folds, and the remaining fold goes into validation, that is, we predict on those data that the model did not see. As a result, we get k sets of predictions that cover the entire set of training data, but none of these data has been seen by any model. These predictions are called OOF (out of fold predictions), you can select thresholds and experiment on them. In addition, they often leave a small part of the data in order to carry out a final check on them. But in the competition will be enough and LB.

Instead of conclusion

And now a little action and personal experiences. 6 hours before the end of the Spacenet: road detection challenge competition, the participant from 3-4 places, the gap from which was very large and the last days giveaways did not bring success, was able to rise to the second place. After another three hours, he was the first.

In addition, at the end of the competition, colleagues from neighboring sites reported a bug in the data set, which consisted in the fact that the multispectral data was slightly incomplete, and as a result, those who studied at RGB received undeservedly better results on the leaderboard, in the test such promised to fix it. Therefore, I expected to fall to several places below. With the announcement of the results, it was strongly delayed, since the models were retrained for a long time, it took the organizers more than a month. Now you imagine that joy and surprise when I discovered my name in the first line of LB.

In the end, I would like to express my gratitude to my colleagues in the competition (especially Selim and Viktor ) for sound competition and good advice, as well as to all those who helped me edit the text.

Thanks to all who read, and good luck in the competition!

PS: spacenet organizers have posted the code .

')

Source: https://habr.com/ru/post/348756/

All Articles