The data center cooling market is on the verge of significant changes

Cooling has always been expensive for data center owners. This matters even more with the emergence of high-density physical servers, which are actively used by providers of virtual servers (cloud services). A report from Global Market Insights shows that by 2024 the global market for data center cooling systems will reach $20 billion. This is a huge jump: in 2016 the figure was around $8 billion. The report also indicates that cooling systems account for about 40 percent of total energy consumption on average.
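To put the jump in perspective, the two market figures can be turned into an implied average annual growth rate. The sketch below is only illustrative arithmetic on the numbers quoted above; the helper name `cagr` is hypothetical, and the result is not a figure taken from the report itself.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures as quoted in the article, in billions of US dollars.
market_2016 = 8.0
market_2024 = 20.0

rate = cagr(market_2016, market_2024, 2024 - 2016)
print(f"Implied annual growth: {rate:.1%}")
```

Under these numbers the market would have to grow by roughly 12 percent every year for eight years.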

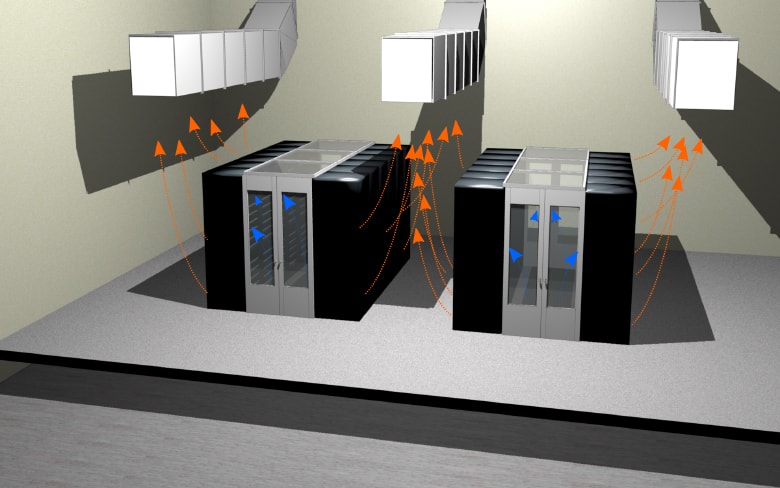

When deploying a data center, there have always been two main questions: how to use energy efficiently, and how to balance the power consumed by the equipment against the power used to cool it. The obsession with solving these problems has reached its peak, but a new difficulty is looming in the background. While Intel, AMD and other component manufacturers work on reducing the heat their hardware produces, server density continues to grow.

The average data center draws around 10 megawatts. However, rack density is increasing with the rise of high-performance computing (HPC) and the growing use of graphics processors. As a result, data center power consumption more and more often reaches 50 MW or more, which in turn means more heat from the running equipment.
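A rough sense of scale: if the 40 percent cooling share from the Global Market Insights report is applied to these power figures, the cooling load can be estimated directly. This is a back-of-the-envelope sketch, assuming the 10 MW and 50 MW figures describe total facility consumption and that the 40 percent average holds for both sizes; the function name `cooling_power_mw` is hypothetical.

```python
COOLING_SHARE = 0.40  # average share quoted from the report; an assumption for any given site

def cooling_power_mw(total_mw, cooling_share=COOLING_SHARE):
    """Estimate the power spent on cooling for a given total facility draw."""
    return total_mw * cooling_share

for total in (10, 50):
    cooling = cooling_power_mw(total)
    other = total - cooling  # everything that is not cooling
    print(f"{total} MW total: ~{cooling:.0f} MW for cooling, "
          f"{cooling / other:.2f} MW of cooling per MW of other load")
```

Under these assumptions, a 50 MW facility spends about as much on cooling alone as two average 10 MW data centers consume in total.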


Running data center equipment is a “hot” process. CPUs produce more and more heat every day, GPUs are no better and always run very hot, and a dozen DIMMs in a dual-socket server add to the heat as well.

The only equipment moving in the opposite direction is storage. The arrival of SSDs and the move away from drives spinning at 10,000 or 15,000 rpm was literally a salvation, because solid-state drives generate practically no heat, even when running at full capacity.

There is a clear logical connection in the data center ecosystem: the greater the server density, the more cooling you need. That does not mean turning your data center into a refrigerator, though. A vivid example is eBay's huge, cleverly designed data center in Phoenix, a city not known for cool summers: the cooling there holds comfortable temperatures, and you will not feel bone-chilling cold inside.

In their report, analysts from Global Market Insights noted that, along with traditional cooling methods, new technologies such as liquid cooling are also widely used. Liquid cooling is still more popular with ordinary users; data centers have been slower to adopt it for fear of leaks. Cost matters too: at data center scale, liquid cooling is more expensive than air cooling.

Nevertheless, analysts expect demand for liquid cooling to grow significantly over time, thanks to advanced cooling agents that can provide effective cooling while minimizing total carbon dioxide emissions. Liquid cools more efficiently than air, which is why the technology is often used in high-performance computers.

This cooling technology is expected to show the highest growth rates in data centers and server rooms, because it can provide specific conditions for each type of equipment even when different types sit close together. It will primarily target the server rooms of banks and the small data centers of organizations that rent Windows VPS servers. Given these visible advantages, it is safe to say that this area is set for growth and development.

Global Market Insights also predicts that IT and telecommunications will continue to dominate the data center cooling market as demand for storage and data availability increases day by day. The growing number of smartphones and Internet of Things devices drives the growth of edge networks, which, in turn, will require more equipment. And so we return to the same logical connection: any increase in equipment will require significant cooling.

Source: https://habr.com/ru/post/348636/
