Serverless application on AWS with CI/CD via Bitbucket Pipelines

This article covers deploying a Django application to the AWS cloud using Bitbucket Pipelines. If the topic interests you, read on.

Let's go!

Creating the application skeleton

The project is a typical Django application. The only difference is that the application settings are pulled from environment variables. The project repository is hosted on Bitbucket. To create a similar project, install the requirements from this list:

    zappa==0.45.1
    django-rest-swagger==2.1.2
    djangorestframework==3.7.3
    django-filter==1.1.0
    Django==2.0
    psycopg2==2.7.3.2
    django-storages==1.6.5

As you can see, this is a typical set of dependencies for building a REST API and connecting to PostgreSQL. Next, go through the usual steps of creating a Django application, then add the database connection and S3 static file settings to the project settings.


    import os  # already imported in the default Django settings.py

    STATICFILES_STORAGE = 'storages.backends.s3boto3.S3Boto3Storage'
    DEFAULT_FILE_STORAGE = 'storages.backends.s3boto3.S3Boto3Storage'

    #####################################
    # ENV VARIABLES
    #####################################
    RDS_DB_NAME = os.environ.get('RDS_DB_NAME')
    RDS_USERNAME = os.environ.get('RDS_USERNAME')
    RDS_PASSWORD = os.environ.get('RDS_PASSWORD')
    RDS_HOSTNAME = os.environ.get('RDS_HOSTNAME')
    RDS_PORT = os.environ.get('RDS_PORT')
    S3_BUCKET = os.environ.get('S3_BUCKET')
    #####################################

    ######################################
    # THESE SETTINGS CAN'T BE OVERRIDDEN #
    ######################################

    # Database
    # https://docs.djangoproject.com/en/2.0/ref/settings/#databases
    DATABASES = {
        'default': {
            'ENGINE': 'django.db.backends.postgresql_psycopg2',
            'NAME': RDS_DB_NAME,
            'USER': RDS_USERNAME,
            'PASSWORD': RDS_PASSWORD,
            'HOST': RDS_HOSTNAME,
            'PORT': RDS_PORT,
        }
    }

    # Static and media files are served straight from the S3 bucket.
    AWS_STORAGE_BUCKET_NAME = S3_BUCKET
    AWS_S3_CUSTOM_DOMAIN = '%s.s3.amazonaws.com' % AWS_STORAGE_BUCKET_NAME
    STATIC_ROOT = 'static'
    STATIC_URL = "https://%s/" % AWS_S3_CUSTOM_DOMAIN

Zappa is a framework that simplifies deploying WSGI applications on top of API Gateway and Lambda. Under the hood it has a CloudFormation template generator and an adapter that turns a Lambda event into a WSGI request, which lets you keep the classic application layout.

The final touch is adding the test dependencies:

    pytest==3.3.1
    pylint==1.8.1
    tox==2.9.1
    pytest-django==3.1.2
    docstringtest==0.3.0

Then add configuration files for tox, pylint and pytest.
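To make the idea of a Lambda-to-WSGI adapter more concrete, here is a minimal sketch of how an API Gateway proxy event could be translated into a WSGI call. This is not Zappa's actual code: the names `lambda_to_wsgi` and `hello_app` are made up for illustration, only the common event fields (`httpMethod`, `path`, `queryStringParameters`, `headers`, `body`) are handled, and details such as binary bodies are ignored.

```python
import io
import sys
from urllib.parse import urlencode


def lambda_to_wsgi(app, event):
    """Call a WSGI app with an API Gateway proxy-style event (illustrative only)."""
    body = (event.get("body") or "").encode("utf-8")
    environ = {
        "REQUEST_METHOD": event.get("httpMethod", "GET"),
        "SCRIPT_NAME": "",
        "PATH_INFO": event.get("path", "/"),
        "QUERY_STRING": urlencode(event.get("queryStringParameters") or {}),
        "SERVER_NAME": "lambda",
        "SERVER_PORT": "443",
        "SERVER_PROTOCOL": "HTTP/1.1",
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": "https",
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": False,
        "wsgi.multiprocess": False,
        "wsgi.run_once": False,
    }
    # Request headers become HTTP_* keys, as the WSGI convention requires.
    for name, value in (event.get("headers") or {}).items():
        key = name.upper().replace("-", "_")
        if key in ("CONTENT_TYPE", "CONTENT_LENGTH"):
            environ[key] = value
        else:
            environ["HTTP_" + key] = value

    captured = {}

    def start_response(status, headers, exc_info=None):
        # Remember what the application reported instead of writing to a socket.
        captured["status"] = status
        captured["headers"] = dict(headers)

    chunks = app(environ, start_response)
    try:
        payload = b"".join(chunks)
    finally:
        if hasattr(chunks, "close"):
            chunks.close()

    # Response shape used by an API Gateway proxy integration.
    return {
        "statusCode": int(captured["status"].split()[0]),
        "headers": captured["headers"],
        "body": payload.decode("utf-8"),
    }


def hello_app(environ, start_response):
    """Hypothetical stand-in for the Django WSGI application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    text = "%s %s?%s" % (
        environ["REQUEST_METHOD"],
        environ["PATH_INFO"],
        environ["QUERY_STRING"],
    )
    return [text.encode("utf-8")]
```

Because the application only ever sees a regular WSGI `environ`, Django itself does not need to know it is running inside Lambda.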

Zappa configuration

The Zappa configuration is a JSON or YAML file with a set of variables. In my setup it is stored in an S3 bucket whose name is hidden in the Pipelines settings, and it is copied each time an artifact is built.

Here is an example:

    {
        "dev1": {
            "environment_variables": {
                "RDS_DB_NAME": "dbname",
                "RDS_USERNAME": "user",
                "RDS_PASSWORD": "pass",
                "RDS_HOSTNAME": "host",
                "RDS_PORT": "5432",
                "S3_BUCKET": "s3-bucket"
            },
            "aws_region": "us-east-1",
            "django_settings": "sample.settings",
            "project_name": "serverless",
            "runtime": "python3.6",
            "s3_bucket": "app-bucket",
            "domain": "example.com",
            "certificate_arn": "<ACM certificate arn>"
        }
    }

Everything related to the project settings goes into environment_variables. See the Zappa documentation for details on the other keys.
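Since the Django settings read every value from environment_variables, a stage that forgets one of them only fails at runtime. A small check like the following sketch could catch that earlier. The helper name `missing_variables` and the `SAMPLE` document are my own illustration, not part of Zappa; the variable names are the ones used in the settings earlier in the article.

```python
import json

# Names read by the Django settings shown earlier in the article.
REQUIRED_VARS = (
    "RDS_DB_NAME", "RDS_USERNAME", "RDS_PASSWORD",
    "RDS_HOSTNAME", "RDS_PORT", "S3_BUCKET",
)


def missing_variables(settings_text, stage):
    """Return the required variables absent from a stage's environment_variables."""
    settings = json.loads(settings_text)
    declared = settings.get(stage, {}).get("environment_variables", {})
    return [name for name in REQUIRED_VARS if name not in declared]


# Hypothetical settings file in which S3_BUCKET was forgotten.
SAMPLE = """
{
  "dev1": {
    "environment_variables": {
      "RDS_DB_NAME": "dbname",
      "RDS_USERNAME": "user",
      "RDS_PASSWORD": "pass",
      "RDS_HOSTNAME": "host",
      "RDS_PORT": "5432"
    },
    "django_settings": "sample.settings"
  }
}
"""

print(missing_variables(SAMPLE, "dev1"))
```

In a real pipeline you would pass in the contents of zappa_settings.json and stop the build if the returned list is not empty.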

Bitbucket Pipelines Configuration

If you are not familiar with Bitbucket Pipelines, see my other articles. Here I will go through the pipeline configuration in detail. For CI/CD I use the following shell script:

    #!/bin/bash

    setup() {
        echo ------- SETUP -------
        apt-get update
        # required to install zip
        apt-get install -y zip
        pip install virtualenv
        virtualenv --python=python3 env
        source env/bin/activate
        pip install -r requirements.txt
        return $?
    }

    tests() {
        echo ------- TESTS -------
        # for tests
        pip install -r requirements-test.txt
        tox
        return $?
    }

    deploy() {
        echo ------- DEPLOY -------
        echo "$1"
        pip install awscli
        # fetch the Zappa settings from the bucket named in the CMDB variable
        aws s3 cp "s3://$CMDB/zappa_settings.json" .
        # update an existing stage, or deploy it if it does not exist yet
        zappa update "$1" || zappa deploy "$1"
        zappa certify "$1" --yes
        zappa manage "$1" "migrate --noinput"
        zappa manage "$1" "collectstatic --noinput"
        return $?
    }

    # call the tests function defined above, not the shell builtin `test`
    setup && tests && deploy "$1"

The script runs in the standard Bitbucket Pipelines container (image: python:3.6.1), which is Debian-based. Pipelines lets you use any image from Docker Hub, but adapting the script to it is up to you.
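If you would rather not depend on bash in your image, the deploy step can be sketched in Python as well. This is my own rough port, not something the project ships: the `run` parameter is a hypothetical hook that makes the logic testable without Zappa installed, and unlike the shell version this sketch stops at the first failing step. The `zappa` commands themselves are the ones from the script above.

```python
import subprocess


def run_command(args):
    """Run a command and return its exit code."""
    return subprocess.call(args)


def deploy(stage, run=run_command):
    """Update the stage, falling back to a first-time deploy, then run follow-ups.

    Returns the exit code of the first failing step, or 0 if all succeed.
    """
    # Same fallback as `zappa update ... || zappa deploy ...` in the shell script.
    if run(["zappa", "update", stage]) != 0:
        code = run(["zappa", "deploy", stage])
        if code != 0:
            return code

    follow_ups = [
        ["zappa", "certify", stage, "--yes"],
        ["zappa", "manage", stage, "migrate --noinput"],
        ["zappa", "manage", stage, "collectstatic --noinput"],
    ]
    for args in follow_ups:
        code = run(args)
        if code != 0:
            return code
    return 0
```

Calling `deploy("dev1")` would then play the role of `deploy dev1` in the shell script.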

The actual configuration of the pipeline is as follows:

    image: python:3.6.1

    pipelines:
      tags:
        release-*:
          - step:
              caches:
                - pip
              script:
                - ./ci.sh prod1
      branches:
        master:
          - step:
              caches:
                - pip
              script:
                - ./ci.sh dev1

Here image names the container, production is deployed from tags matching release-*, and the master branch is deployed to the dev1 environment. If you want, you can add your own test run for the remaining branches. It's simple.
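The routing in this configuration boils down to a small lookup from a tag or branch name to a Zappa stage. The sketch below spells it out; `stage_for` and the two tables are hypothetical names of mine, and `fnmatchcase` is only an approximation of how Bitbucket matches glob patterns.

```python
from fnmatch import fnmatchcase

# Mirrors the pipeline configuration: release-* tags go to prod1, master goes to dev1.
TAG_STAGES = {"release-*": "prod1"}
BRANCH_STAGES = {"master": "dev1"}


def stage_for(ref_type, ref_name):
    """Return the Zappa stage for a tag or branch, or None if nothing is deployed."""
    table = TAG_STAGES if ref_type == "tag" else BRANCH_STAGES
    for pattern, stage in table.items():
        if fnmatchcase(ref_name, pattern):
            return stage
    return None


print(stage_for("tag", "release-1.0"))
print(stage_for("branch", "master"))
print(stage_for("branch", "feature/login"))
```

Any ref that matches neither table, such as a feature branch, is simply not deployed.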

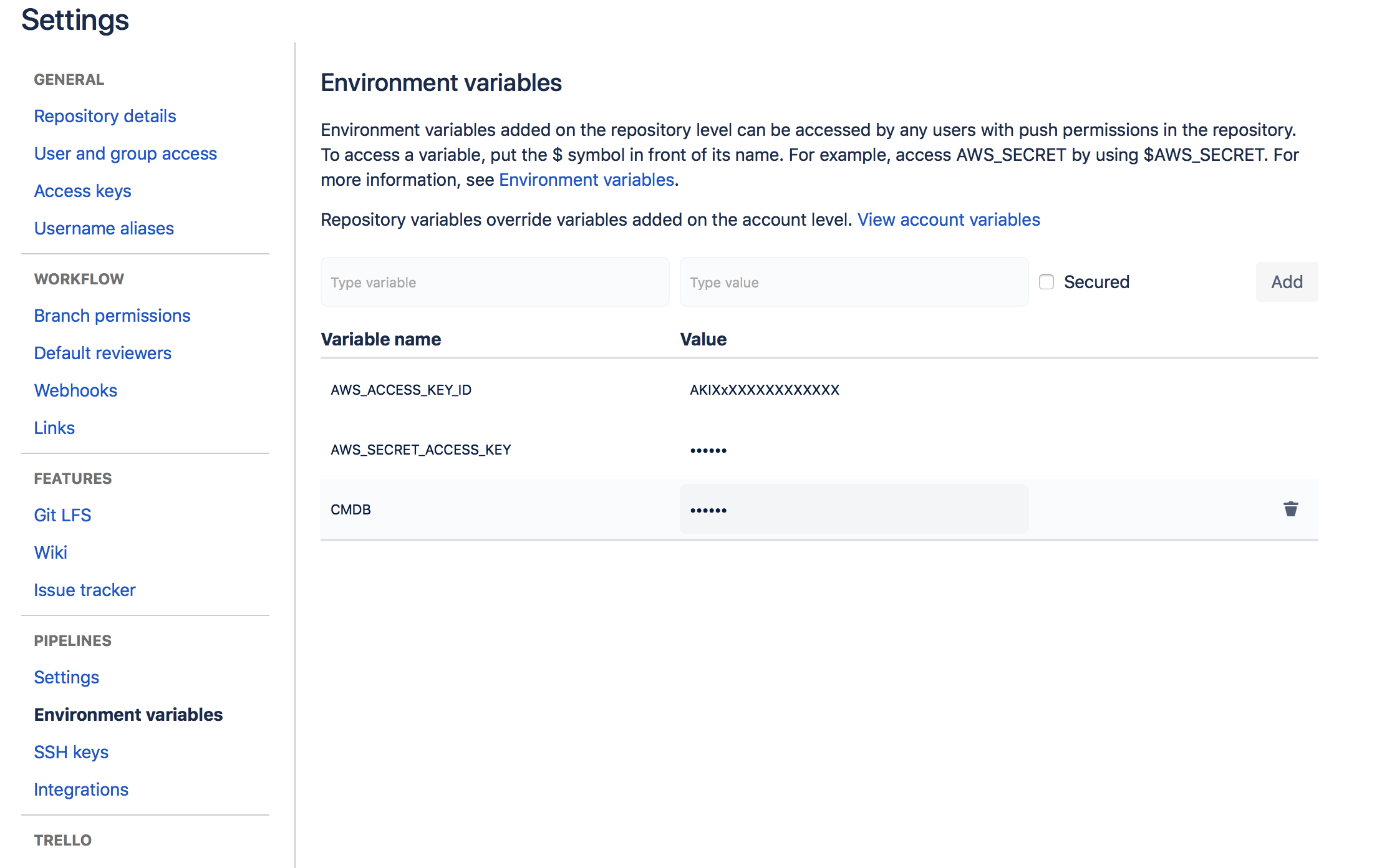

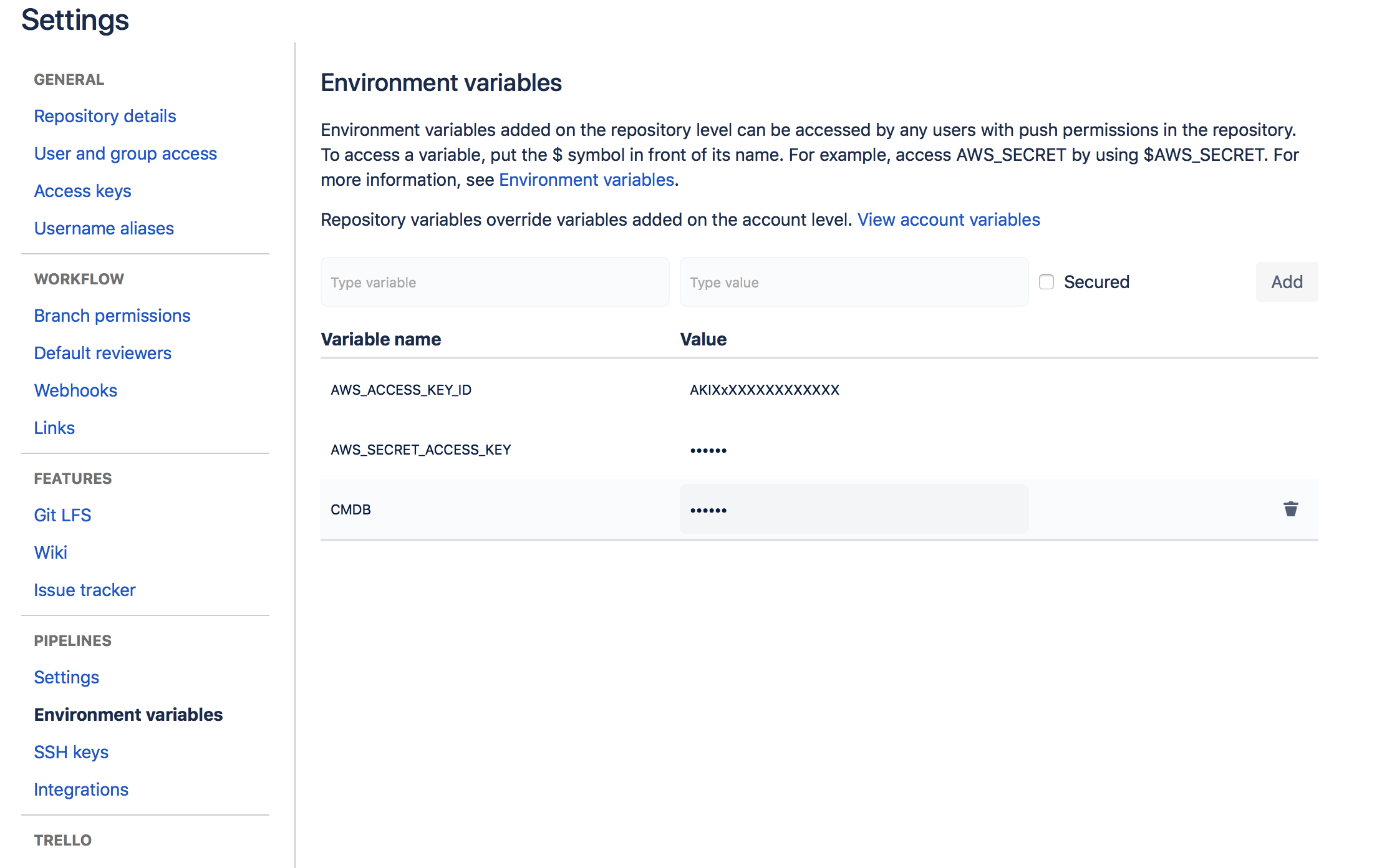

After adding the configuration files, all that remains is to configure Bitbucket itself. Enable Pipelines and set the environment variables.
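A missing variable tends to surface only halfway through a deploy, so a quick check at the start of the build can save time. The sketch below is an assumption about what such a check might look like: `CMDB` is the bucket variable used by the CI script, while `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` are the standard credential variables read by the AWS CLI, which I am assuming are how credentials reach the build. The function name `unset_variables` is hypothetical.

```python
import os

# CMDB comes from the CI script; the AWS_* names are the standard variables
# read by the AWS CLI and are an assumption about this particular setup.
EXPECTED = ("CMDB", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def unset_variables(environ=None, expected=EXPECTED):
    """Return the expected variable names that are missing or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in expected if not environ.get(name)]


# Example with an explicit mapping instead of the real environment.
print(unset_variables({"CMDB": "my-config-bucket"}))
```

In the pipeline you would call `unset_variables()` with no arguments and abort the build if the list is not empty.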

That's it. Now you can push to master, cut tags and ... well, hand the repository over to the developers; they know what to do with it.

Encrypting secrets, using CloudFront, and configuring RDS, ACM, IAM and Route 53 were left out, as they are beyond the scope of this article. Those interested can find all of this in the AWS documentation.

Once again the link to the repository

Source: https://habr.com/ru/post/348578/
