Writing scalable and maintainable servers with Node.js and TypeScript

For the last three years I have been developing servers on Node.js, and along the way I accumulated a code base that I decided to turn into a framework and release as open source.

The main features of the framework include:

- simple architecture without any js magic

- automatic serialization / deserialization of models (for example, it is not necessary to check whether the field came from the client, everything is checked automatically)

- ability to generate an API schema

- schema-based documentation generation

- generation of a fully typed SDK for the client (at the moment we are talking about the JS frontend)

In this article we will talk a little about Node.js and take a look at this framework.

If you are interested, read on.

What is the problem, and why reinvent the wheel?

Server development on Node.js has two main problems:

- lack of a mature community and infrastructure

- a fairly low barrier to entry

With the lack of infrastructure, everything is simple: Node.js is a rather young platform, so things that are quick and convenient to write on older platforms (for example, PHP or .NET) are difficult in Node.js.

What about the low barrier to entry? The main problem here is that with every turn of the hype wheel and every new library or framework, everyone tries to make things simpler. Simpler would seem to be better, but because these solutions are not always used by experienced developers, a cult of library and framework worship arises. Probably the most obvious example is express.

What is wrong with express? Nothing! Of course you can find flaws in it, but express is a tool, and a tool that you need to know how to use. Problems start when express, or any other framework, plays the leading role in your project and you are too tightly coupled to it.

For these reasons, when I began a new project, I started by writing a server "core". Later it was carried over to other projects, refined, and eventually became the Airship framework.

Basic concept

Starting to think through the architecture, I asked myself the question: "What does the server do?". At the highest level of abstraction, the server does three things:

- receives requests

- processes requests

- returns responses

So why complicate things? I decided that the architecture should look roughly like this: each request supported by our server has a model, request models are passed to request handlers, and handlers in turn return response models.

It is important to note that our code knows nothing about the network; it works only with instances of ordinary request and response classes. This not only gives us a more flexible architecture, but even lets us change the transport layer. For example, we can switch from HTTP to TCP without changing our code. Of course this is a very rare case, but the possibility shows how flexible the architecture is.
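To illustrate the idea, here is a minimal sketch in which one handler is served through two different transports. It does not use Airship: `SumRequest`, `SumResponse`, `handleSum`, `Transport` and both transport objects are hypothetical names invented for this example, and the handler is synchronous only to keep the sketch short.

```typescript
// Hypothetical request and response models: plain classes, no network code.
class SumRequest {
    readonly a: number
    readonly b: number

    constructor(a: number, b: number) {
        this.a = a
        this.b = b
    }
}

class SumResponse {
    readonly total: number

    constructor(total: number) {
        this.total = total
    }
}

// The handler sees only model instances, never sockets or HTTP objects.
function handleSum(request: SumRequest): SumResponse {
    return new SumResponse(request.a + request.b)
}

// A transport turns raw input into a request model
// and a response model back into raw output.
interface Transport {
    decode(raw: string): SumRequest
    encode(response: SumResponse): string
}

// JSON bodies, as an HTTP transport might deliver them.
const jsonTransport: Transport = {
    decode(raw) {
        const body = JSON.parse(raw)
        return new SumRequest(Number(body.a), Number(body.b))
    },
    encode(response) {
        return JSON.stringify({ total: response.total })
    }
}

// Space-separated text lines, as a raw TCP transport might deliver them.
const lineTransport: Transport = {
    decode(raw) {
        const parts = raw.trim().split(' ').map(Number)
        return new SumRequest(parts[0] ?? 0, parts[1] ?? 0)
    },
    encode(response) {
        return String(response.total)
    }
}

// The same handler is reused; only the transport changes.
function serve(transport: Transport, raw: string): string {
    return transport.encode(handleSum(transport.decode(raw)))
}

console.log(serve(jsonTransport, '{"a":1,"b":2}')) // {"total":3}
console.log(serve(lineTransport, '3 4')) // 7
```

Swapping the transport touches neither the models nor the handler, which is exactly the property described above.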

Models

Let's start with the models. What do we need from them? First of all, a simple way to serialize and deserialize them. Type validation during deserialization would not hurt either, since user requests are also models.

In the following description, I will omit some details that can be read in the documentation so as not to inflate the article.

Here's how it works:

    class Point {
        @serializable()
        readonly x: number

        @serializable()
        readonly y: number

        constructor(x: number, y: number) {
            this.x = x
            this.y = y
        }
    }

As you can see, the model is an ordinary class; the only difference is the use of the serializable decorator. With this decorator we mark the fields for the serializer.
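To make the role of such a decorator more concrete, here is a minimal sketch of a field registry with type checking on deserialization. It is an assumption about how a serializer of this kind could work, not Airship's actual implementation: `registerField`, `fieldRegistry`, `serialize` and `deserialize` are hypothetical names, and explicit `registerField` calls stand in for the decorator so that the sketch runs without any compiler flags.

```typescript
type FieldType = 'number' | 'string' | 'boolean'

// Maps a model class to the fields the serializer should handle.
const fieldRegistry = new Map<Function, Map<string, FieldType>>()

// Hypothetical stand-in for what a @serializable() decorator might record.
function registerField(model: Function, name: string, type: FieldType): void {
    const fields = fieldRegistry.get(model) ?? new Map<string, FieldType>()
    fields.set(name, type)
    fieldRegistry.set(model, fields)
}

class Point {
    readonly x: number
    readonly y: number

    constructor(x: number, y: number) {
        this.x = x
        this.y = y
    }
}

registerField(Point, 'x', 'number')
registerField(Point, 'y', 'number')

// Copies only the registered fields into a plain object.
function serialize(instance: object): Record<string, unknown> {
    const source = instance as Record<string, unknown>
    const result: Record<string, unknown> = {}
    const fields = fieldRegistry.get(instance.constructor)
    if (fields !== undefined) {
        fields.forEach((_type, name) => {
            result[name] = source[name]
        })
    }
    return result
}

// Builds an instance of the model, rejecting fields of the wrong type.
function deserialize<T extends object>(
    model: new (...args: any[]) => T,
    raw: Record<string, unknown>
): T {
    const instance = Object.create(model.prototype) as T
    const target = instance as Record<string, unknown>
    const fields = fieldRegistry.get(model)
    if (fields !== undefined) {
        fields.forEach((type, name) => {
            const value = raw[name]
            if (typeof value !== type) {
                throw new Error(`${name} must be ${type} instead of ${typeof value}`)
            }
            target[name] = value
        })
    }
    return instance
}

console.log(serialize(new Point(1, 2))) // { x: 1, y: 2 }
console.log(deserialize(Point, { x: 1, y: 2 }) instanceof Point) // true
```

A missing field is rejected as well, because `typeof undefined` is `'undefined'`, which never matches a registered type.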

Now we can serialize and deserialize our model:

    JSONSerializer.serialize(new Point(1, 2))
    // { "x": 1, "y": 2 }

    JSONSerializer.deserialize(Point, { x: 1, y: 2 })
    // Point { x: 1, y: 2 }

If we pass data of the wrong type, the serializer will throw an exception:

    JSONSerializer.deserialize(Point, { x: 1, y: "2" })
    // Error: y must be number instead of string

Request models

Requests are models too. The difference is that all requests inherit from ASRequest and use the @queryPath decorator to specify the request path:

    @queryPath('/getUser')
    class GetUserRequest extends ASRequest {
        @serializable()
        private userId: number

        constructor(userId: number) {
            super()

            this.userId = userId
        }
    }

Response models

Response models are written the same way, but inherit from ASResponse:

    class GetUserResponse extends ASResponse {
        @serializable()
        private user: User

        constructor(user: User) {
            super()

            this.user = user
        }
    }

Request handlers

Request handlers inherit from BaseRequestHandler and implement two methods:

    export class GetUserHandler extends BaseRequestHandler {
        // Processes the request and returns the response model
        public async handle(request: GetUserRequest): Promise<GetUserResponse> {
            return new GetUserResponse(new User(....))
        }

        // Tells the system which requests this handler can process
        public supports(request: Request): boolean {
            return request instanceof GetUserRequest
        }
    }

Since this approach makes it inconvenient to process several requests in one handler, there is a descendant of BaseRequestHandler called MultiRequestHandler that lets you process several requests:

    class UsersHandler extends MultiRequestHandler {
        // Declares which request this method handles
        @handles(GetUserRequest)
        public async handleGetUser(request: GetUserRequest): Promise<ASResponse> {
            // process GetUserRequest here
        }

        @handles(SaveUserRequest)
        public async handleSaveUser(request: SaveUserRequest): Promise<ASResponse> {
            // process SaveUserRequest here
        }
    }

Receiving requests

There is a base class, RequestsProvider, which describes a source of requests for the system:

    abstract class RequestsProvider {
        public abstract getRequests(
            callback: (
                request: ASRequest,
                answerRequest: (response: ASResponse) => void
            ) => void
        ): void
    }

The system calls the getRequests method, waits for requests, processes them, and passes the response to answerRequest.
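As an illustration of this contract, here is a minimal sketch of a provider that takes requests from code in the same process, which can be handy in tests. The `ASRequest`, `ASResponse` and `RequestsProvider` classes below are empty stand-ins so the sketch compiles on its own; `InMemoryRequestsProvider`, its `send` method, `PingRequest` and `PongResponse` are hypothetical names and not part of the framework.

```typescript
// Minimal stand-ins for the framework's base classes.
class ASRequest {}
class ASResponse {}

type RequestCallback = (
    request: ASRequest,
    answerRequest: (response: ASResponse) => void
) => void

abstract class RequestsProvider {
    public abstract getRequests(callback: RequestCallback): void
}

// Hypothetical provider that receives requests from in-process code.
class InMemoryRequestsProvider extends RequestsProvider {
    private callback: RequestCallback | null = null

    // Called once by the system to subscribe to incoming requests.
    public getRequests(callback: RequestCallback): void {
        this.callback = callback
    }

    // Pushes a request into the system and reports the response via onResponse.
    public send(request: ASRequest, onResponse: (response: ASResponse) => void): void {
        if (this.callback === null) {
            throw new Error('getRequests has not been called yet')
        }
        this.callback(request, onResponse)
    }
}

class PingRequest extends ASRequest {}

class PongResponse extends ASResponse {
    readonly text: string

    constructor(text: string) {
        super()
        this.text = text
    }
}

const provider = new InMemoryRequestsProvider()

// Plays the role of the server: subscribe, handle, answer.
provider.getRequests((request, answerRequest) => {
    const text = request instanceof PingRequest ? 'pong' : 'unknown request'
    answerRequest(new PongResponse(text))
})

const received: ASResponse[] = []
provider.send(new PingRequest(), response => {
    received.push(response)
})

console.log(received[0] instanceof PongResponse) // true
```

Because the provider is the only place that knows where requests come from, replacing it does not affect handlers or models.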

HttpRequestsProvider is implemented for receiving requests via HTTP. It works very simply: all requests arrive as POST, and the data arrives as JSON. Using it is also easy, just pass the port and the list of supported requests:

    new HttpRequestsProvider(
        logger,
        7000, // port
        // supported requests
        GetUserRequest,
        SaveUserRequest
    )

Putting it all together

The main server class is AirshipAPIServer, to which we pass the request handler and the request provider. Since AirshipAPIServer accepts only one handler, there is a handler manager that takes a list of handlers and calls the appropriate one. As a result, our server looks like this:

    const logger = new ConsoleLogger()

    const server = new AirshipAPIServer({
        requestsProvider: new HttpRequestsProvider(
            logger,
            7000,
            GetUserRequest,
            SaveUserRequest
        ),
        requestsHandler: new RequestHandlersManager([
            new GetUserHandler(),
            // assumed: a SaveUserHandler written the same way as GetUserHandler
            new SaveUserHandler()
        ])
    })

    server.start()

API schema generation

The API schema is a JSON document that describes all the models, requests and responses of our server. It can be generated with a dedicated utility, aschemegen.

First, you need to create a config that lists all our requests and responses:

    import {AirshipAPIServerConfig} from "airship-server"

    // The type annotation uses the same name as the import above
    const config: AirshipAPIServerConfig = {
        endpoints: [
            [TestRequest, TestResponse],
            [GetUserRequest, GetUserResponse]
        ]
    }

    export default config

After that we can run the utility, specifying the path to the config and the folder where the schema will be written:

    node_modules/.bin/aschemegen --o=/Users/altox/Desktop/test-server/scheme --c=/Users/altox/Desktop/test-server/build/config.js

Client SDK generation

Why do we need a schema? For example, we can generate a fully typed SDK for the frontend in TypeScript. The SDK consists of four files:

- API.ts - the main file with all the methods and the network code

- Models.ts - all the models

- Responses.ts - all the response models

- MethodsProps.ts - the interfaces describing the request parameters

Here is a piece of API.ts from a working project:

    /**
     * This is an automatically generated code (and probably compiled with TSC)
     * Generated at Sat Aug 19 2017 16:30:55 GMT+0300 (MSK)
     * Scheme version: 1
     */

    const API_PATH = '/api/'

    import * as Responses from './Responses'
    import * as MethodsProps from './MethodsProps'

    export default class AirshipApi {
        public async call(method: string, params: Object, responseType?: Function): Promise<any> {...}

        /**
         *
         *
         * @param {{
         *     appParams: (string),
         *     groupId: (number),
         *     name: (string),
         *     description: (string),
         *     startDate: (number),
         *     endDate: (number),
         *     type: (number),
         *     postId: (number),
         *     enableNotifications: (boolean),
         *     notificationCustomMessage: (string),
         *     prizes: (Prize[])
         * }} params
         *
         * @returns {Promise<SuccessResponse>}
         */
        public async addContest(params: MethodsProps.AddContestParams): Promise<Responses.SuccessResponse> {
            return this.call(
                'addContest',
                {
                    appParams: params.appParams,
                    groupId: params.groupId,
                    name: params.name,
                    description: params.description,
                    startDate: params.startDate,
                    endDate: params.endDate,
                    type: params.type,
                    postId: params.postId,
                    enableNotifications: params.enableNotifications,
                    notificationCustomMessage: params.notificationCustomMessage,
                    prizes: params.prizes ? params.prizes.map((v: any) => v ? v.serialize() : undefined) : undefined
                },
                Responses.SuccessResponse
            )
        }

        ...

All this code is generated automatically. It saves you from writing the client by hand and gives you hints for field names and their types for free, if your IDE supports it.

Generating the SDK is also easy: run the asdkgen utility and pass it the path to the schema and the path where the SDK should be placed:

    node_modules/.bin/asdkgen --s=/Users/altox/Desktop/test-server/scheme --o=/Users/altox/Desktop/test-server/sdk

Documentation generation

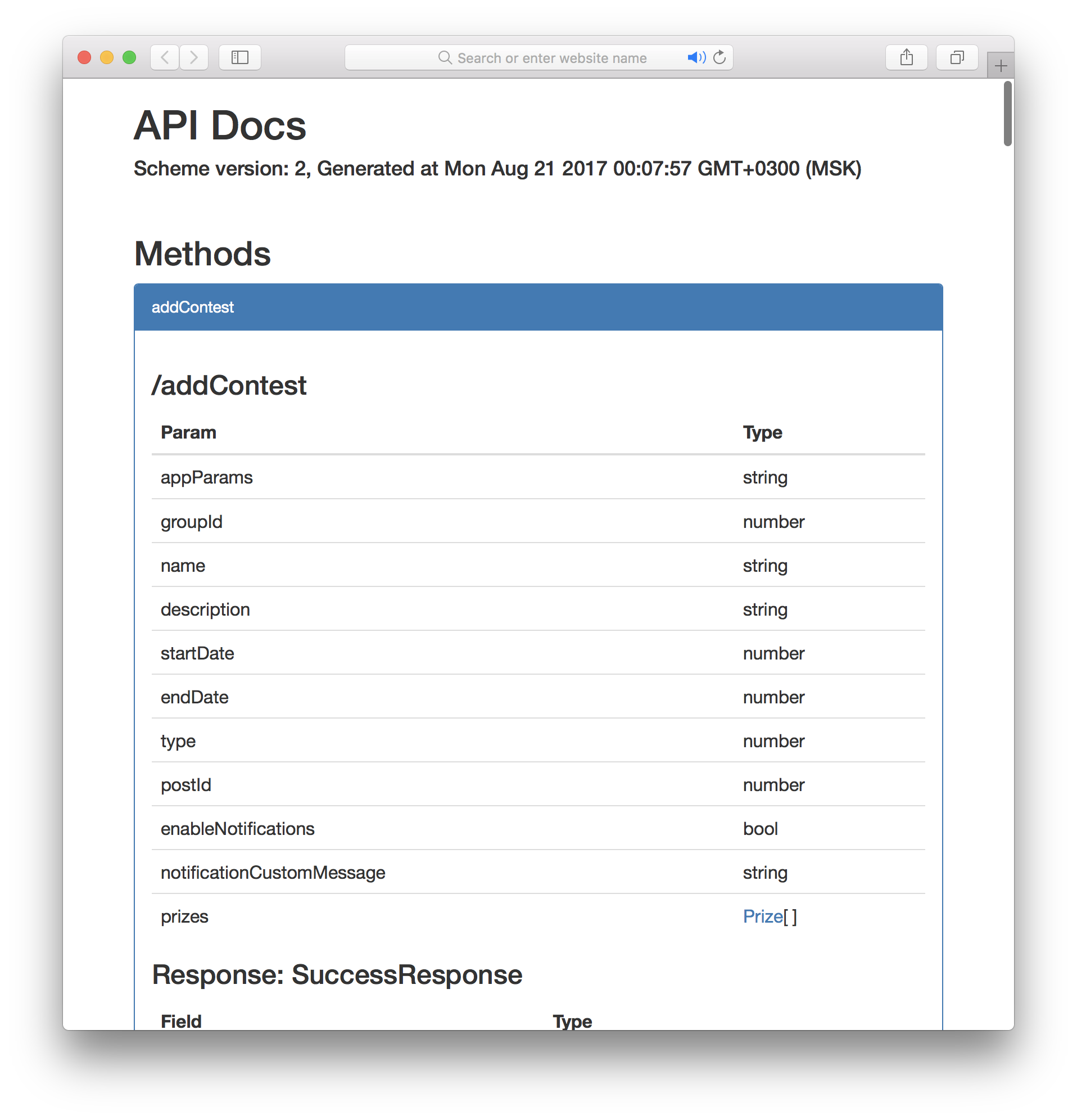

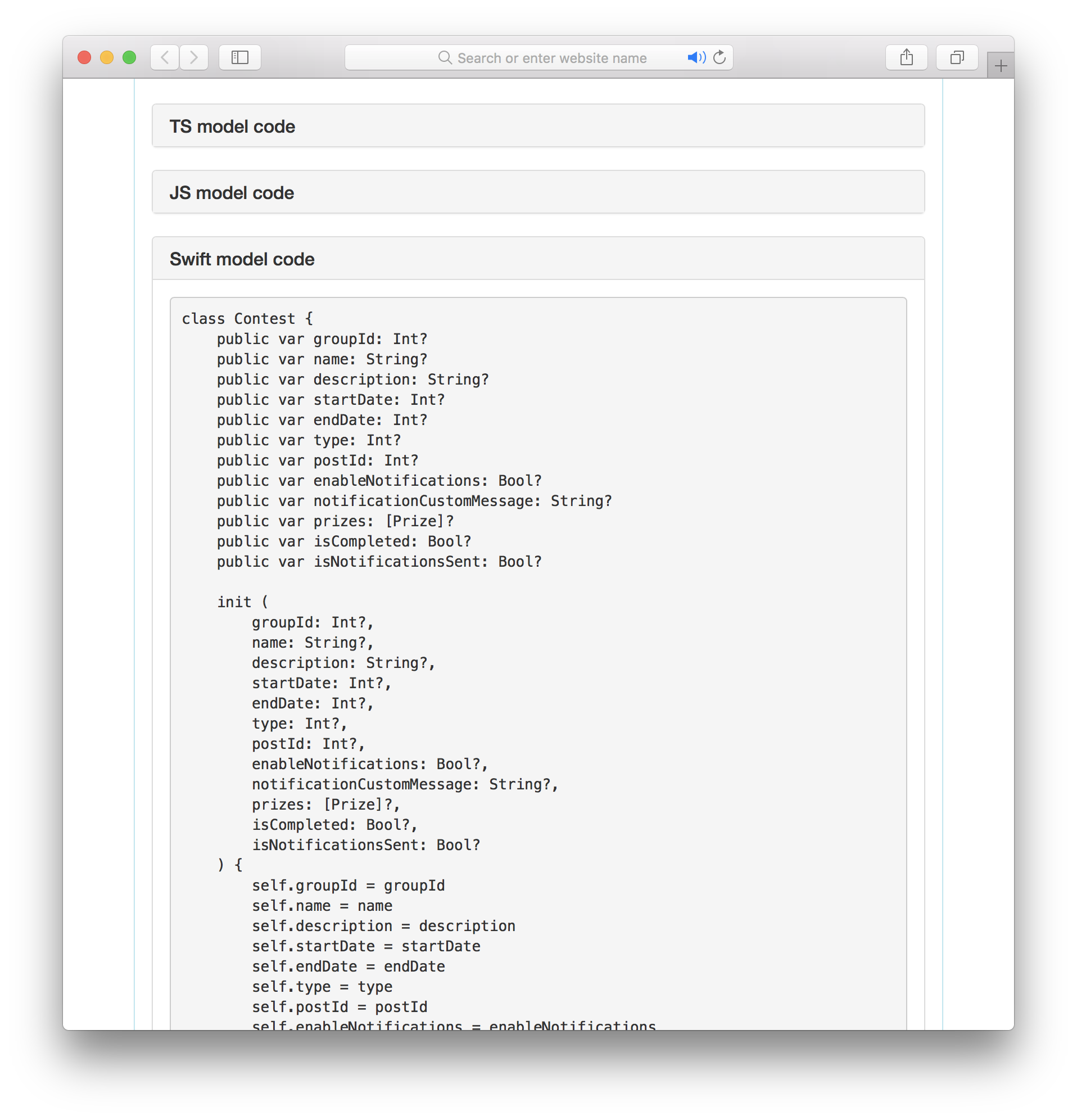

I did not stop at SDK generation and also wrote a documentation generator. The documentation is quite simple: plain HTML describing requests, models and responses. One interesting detail is that for each model there is generated code for JS, TS and Swift.

Conclusion

This solution has been used in production for a long time. It helps maintain old code, write new code, and avoid writing client code by hand.

To many, both the article and the framework itself may seem very obvious, and I understand that. Others may say that such solutions already exist and even point me to a link to such a project. In my defense, I can only say two things:

- either I did not find such solutions at the time, or they did not suit me

- reinventing the wheel is fun!

If you liked any of the above, welcome to GitHub.


Source: https://habr.com/ru/post/348564/
