Threats of the past and protocols of the future

As many readers of the Qrator Labs blog probably already know, DDoS attacks can target different layers of the network. In particular, an attacker who controls a large botnet can attack at L7 (the application layer) and try to mimic a normal user, while an attacker without one is limited to packet attacks (any kind that allows the source address to be spoofed at some stage) against the lower layers of transit networks.

Naturally, for both the first and the second task, attackers usually try to use an existing toolkit, just as a website developer does not write everything from scratch but uses common frameworks such as Joomla and Bootstrap. For example, for the past year and a half a popular framework for organizing attacks from the Internet of Things has been Mirai, whose source code was published openly by its authors back in October 2016 in an attempt to throw the FBI off their trail.


For packet attacks, the equivalent popular framework is the pktgen module built into Linux. It was not put there for this purpose, but for perfectly legitimate network testing and administration. However, as Isaac Asimov wrote, “an atom blaster is a good weapon, but it can point both ways.”

The peculiarity of pktgen is that out of the box it can generate only UDP traffic. That is enough for network testing, and the Linux kernel developers have no particular desire to make indiscriminate firing in both directions any easier. As a result, fighting DDoS attacks became (somewhat) simpler: a number of attackers set the correct ports in pktgen but paid no attention to the resulting IP protocol value, so a significant share of packet attacks on TCP services (for example, HTTPS on port 443/TCP) consisted of UDP datagrams. Such junk traffic used to be easy and cheap to discard far upstream (including with BGP Flowspec), and it never reached the “last mile” to the attacked resource.
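The filtering rule described above can be sketched in a few lines. This is only an illustration, assuming raw IPv4 packets as input; the `TCP_ONLY_PORTS` table, `should_drop` and the `build_ipv4` helper are hypothetical names made up for this example, not part of pktgen or Flowspec.

```python
import struct

IPPROTO_TCP = 6
IPPROTO_UDP = 17

# Hypothetical policy table: destination ports assumed to serve TCP only.
TCP_ONLY_PORTS = {80, 443}


def should_drop(packet: bytes) -> bool:
    """Return True for a UDP datagram aimed at a TCP-only port."""
    if len(packet) < 20:
        return True  # too short to hold an IPv4 header
    version_ihl = packet[0]
    if version_ihl >> 4 != 4:
        return False  # not IPv4; out of scope for this sketch
    header_len = (version_ihl & 0x0F) * 4
    protocol = packet[9]  # the IP protocol field the attackers overlooked
    if protocol != IPPROTO_UDP:
        return False
    if len(packet) < header_len + 8:
        return True  # truncated UDP header
    _src_port, dst_port = struct.unpack_from("!HH", packet, header_len)
    return dst_port in TCP_ONLY_PORTS


def build_ipv4(protocol: int, dst_port: int) -> bytes:
    """Hypothetical helper: a minimal IPv4 header plus a bare port pair."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45, 0, 28, 0, 0, 64, protocol, 0,
        bytes([192, 0, 2, 1]), bytes([198, 51, 100, 7]),
    )
    l4 = struct.pack("!HHHH", 40000, dst_port, 8, 0)
    return header + l4
```

With this rule, `should_drop(build_ipv4(IPPROTO_UDP, 443))` is true, while the same call with `IPPROTO_TCP` is not. The rule is cheap precisely because it looks only at the protocol field and the destination port, and that is also why it stops working once legitimate traffic uses UDP on port 443.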

But everything changes when Google arrives.

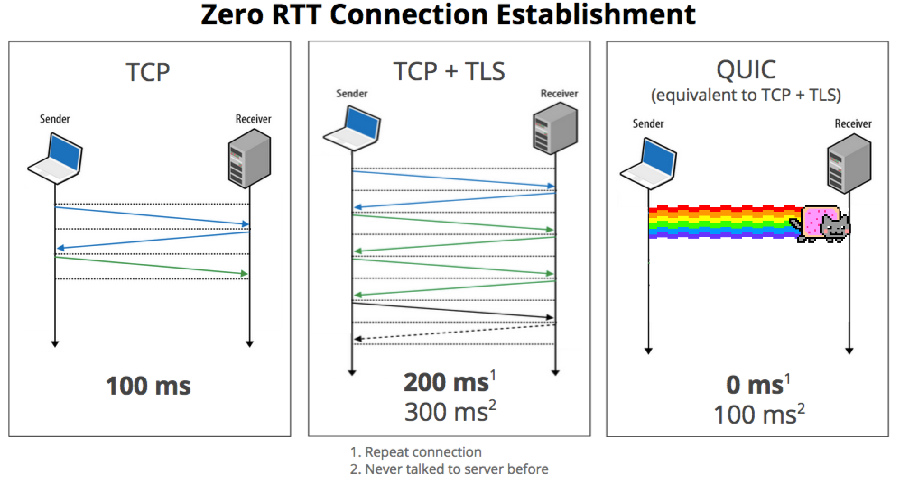

Consider the QUIC protocol, whose specification has been in development since 2013 as a replacement for TCP. QUIC multiplexes several data streams between two computers, runs on top of UDP, and includes encryption capabilities equivalent to TLS and SSL. It has lower connection and transmission latency than TCP. It tolerates the loss of some packets well by aligning cryptographic block boundaries with packet boundaries. The protocol supports forward error correction (FEC) at the packet level, but in practice it is disabled. © Wikipedia

Over the past few years Google has developed a set of protocols designed to replace the old World Wide Web protocol stack. QUIC runs on top of the well-known UDP transport protocol, and at the moment we see isolated cases of individual “enthusiasts” integrating QUIC into their projects. Google Chrome, like Google's application servers, already supports QUIC, so the enthusiasm is understandable. According to some telecom operators, the protocol, first released in 2013, already accounts for a larger share of their traffic than IPv6, which has been around since the 1990s.

However, at the moment almost all QUIC implementations are user-space programs, and from the point of view of the kernel, not to mention every transit device and Flowspec, this traffic is (drum roll) UDP on port 443.

On the one hand, this illustrates the weakness of an approach in which packet attacks are filtered on one set of equipment and higher-layer attacks on another (usually much weaker in aggregate). Building such solutions is clearly cheap and convenient, but they essentially freeze in place the historical state of affairs on the World Wide Web, and the very notion of a “historical state of affairs” sounds somewhat ridiculous for an industry that is only 25 years old. Imagine that tomorrow the situation changes: say, the business side comes and demands that the newfangled QUIC protocol be deployed because Google has promised to rank QUIC-enabled sites higher in search results. This has not happened yet, but it could well happen by 2020. After such a deployment, as in the old joke about the Kremlin plumbing, the whole system will need to be changed.

Two men are talking in prison:

- Are you in for a political offence or a criminal one?

- Political. I'm a plumber. I was called to the Kremlin, looked around and said: “The whole system needs to be changed!”


On the other hand, the QUIC protocol is designed quite sensibly. In particular, unlike many other UDP-based protocols such as DNS, it does not allow amplification attacks by design: it never sends amplified response traffic to requests from an unverified source, thanks to the handshake required by the specification.

The main task of a handshake is to verify, as simply and efficiently as possible, that requests really come from the IP address written in the source field of the IP packet. This avoids wasting the limited processing power of the server and the network on talking to a multitude of fake clients. It also prevents the server from being used as an intermediate link in an amplification attack, in which the server sends significantly more data to the victim's address (spoofed in the source field) than the attacker has to generate on the victim's behalf.

Adam Langley and company can serve as an example for everyone trying to create their own UDP-based protocols (hello, game developers!). In the current version of the QUIC draft, an entire section is devoted to the handshake. In particular, the first packet of a QUIC connection cannot be smaller than 1200 bytes, since that is how large the server's handshake response can be. Until the connection is established (that is, until the authenticity of the client's IP address is confirmed), a QUIC client cannot force the server to send more response bytes or generate more packets than the client itself has sent.
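The byte accounting behind that rule can be sketched as follows. This is a simplified model rather than code from any QUIC implementation: the `UnvalidatedPeer` class and its fields are hypothetical, and the multiplier is left as a parameter, set here to the 1:1 limit the text describes.

```python
from dataclasses import dataclass

MIN_INITIAL_SIZE = 1200  # minimum size of the first client packet, per the text


@dataclass
class UnvalidatedPeer:
    """Byte accounting for a client whose address is not yet confirmed."""

    amplification_factor: int = 1  # assumption: 1x, as described in the text
    bytes_received: int = 0
    bytes_sent: int = 0

    def on_datagram(self, size: int, is_first: bool) -> bool:
        """Count an incoming datagram; reject an undersized first packet."""
        if is_first and size < MIN_INITIAL_SIZE:
            return False
        self.bytes_received += size
        return True

    def may_send(self, size: int) -> bool:
        """True if sending `size` more bytes stays within the budget."""
        budget = self.amplification_factor * self.bytes_received
        return self.bytes_sent + size <= budget

    def on_send(self, size: int) -> None:
        """Record an outgoing datagram, refusing to exceed the budget."""
        if not self.may_send(size):
            raise ValueError("would exceed the anti-amplification budget")
        self.bytes_sent += size
```

A spoofed 100-byte first packet is rejected outright, and a valid 1200-byte one buys the server at most 1200 bytes of response until the address is confirmed. From the attacker's point of view the server is therefore no better a reflector than sending the traffic directly.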

Naturally, the handshake mechanism is itself a very convenient target for attackers. The famous SYN flood attack, for example, is aimed precisely at the three-way handshake in TCP. It is therefore important that any handshake is designed well and implemented without significant algorithmic complexity, ideally as close to the hardware as possible. In TCP, for example, this was not originally the case: effective SYN cookies were designed much later by the famous Daniel Bernstein and rely, in effect, on hacks, so that even legacy clients could work with the mechanism.
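The idea behind SYN cookies is that the server keeps no state for a half-open connection and instead encodes a keyed hash of the connection parameters into its reply. The sketch below shows only that idea. It is not the encoding used by Linux or by Bernstein's original design, and `SECRET`, `SLOT_SECONDS` and the function names are hypothetical.

```python
import hashlib
import hmac
import time

SECRET = b"example-secret"  # hypothetical server-side key
SLOT_SECONDS = 64           # hypothetical lifetime of one time slot


def _mac(src_ip: str, src_port: int, dst_ip: str, dst_port: int, slot: int) -> bytes:
    """32-bit keyed hash over the connection 4-tuple and a coarse time slot."""
    msg = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{slot}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).digest()[:4]


def make_cookie(src_ip, src_port, dst_ip, dst_port, now=None) -> int:
    """Value to send back in the reply; nothing is stored on the server."""
    slot = int(time.time() if now is None else now) // SLOT_SECONDS
    return int.from_bytes(_mac(src_ip, src_port, dst_ip, dst_port, slot), "big")


def check_cookie(cookie, src_ip, src_port, dst_ip, dst_port, now=None) -> bool:
    """Accept a cookie issued in the current or the previous time slot."""
    slot = int(time.time() if now is None else now) // SLOT_SECONDS
    if not 0 <= cookie < 2**32:
        return False
    candidate = cookie.to_bytes(4, "big")
    return any(
        hmac.compare_digest(candidate, _mac(src_ip, src_port, dst_ip, dst_port, s))
        for s in (slot, slot - 1)
    )
```

Only a client that actually receives the reply at its claimed address can echo the cookie back, so a flood of spoofed packets costs the server one hash each and no memory. The 32-bit size is chosen here because that is what fits into a TCP sequence number.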

Funnily enough, as the first part of this post shows, the QUIC protocol is already a target for attackers even before its full release. Therefore, when putting QUIC into production, it is crucial to make sure that the QUIC handshake is implemented as efficiently as possible and as close to the hardware as possible. Otherwise, attacks that the kernel used to handle (and Linux has supported SYN cookies for a very long time) will have to be handled by Nginx or, say, IIS. Needless to say, those are unlikely to cope.

Every year the network becomes more and more complicated. Everyone has to respond to this challenge, especially the engineers who develop and deploy new network protocols. During such deployments, security issues should be considered first and solved in advance, without waiting for the threat to materialize. Note that efficient support for QUIC at a level close to the hardware has yet to be provided.

It will be very difficult to protect a system built on top of the QUIC protocol until all such issues are discovered, analyzed and solved. The same goes for other newfangled things such as HTTP/2, DNS-over-TLS and even IPv6.

In the future, we will see even more interesting examples of attacks on next-generation protocols. We will certainly keep you informed.

Source: https://habr.com/ru/post/348414/
