Phone Keyboard vs. Keyword Algorithms vs. Protection of personal information

Language models

In virtual keyboards of mobile phones, word-hinting algorithms for the first letters entered and automatic correction of typos in them are commonly used. The function is necessary because typing on the phone is inconvenient. However, it often annoys users with its “stupidity”.

The hint algorithm is based on a language model that predicts the probability of the next word in the text relative to the previous words. Usually, the model is based on the statistics of n- grams - sequences of n words, which are often adjacent to each other. With this approach, only short common phrases are well guessed.

Neural networks do the job of predicting words better. For example, the neural network algorithm is able to understand that after the words " Linus is the best " the word " programmer " should go, and after " Shakespair is the best " - " writer ". The n- gram model for this problem most likely lacks statistics: even if the training texts contained information about Torvalds and Shakespeare, it was most likely not formulated exactly the same words in the same order.

')

Much is written about recurrent neural networks for language models. For example, using a simple TensorFlow tutorial , you can see under what conditions which words will be predicted.

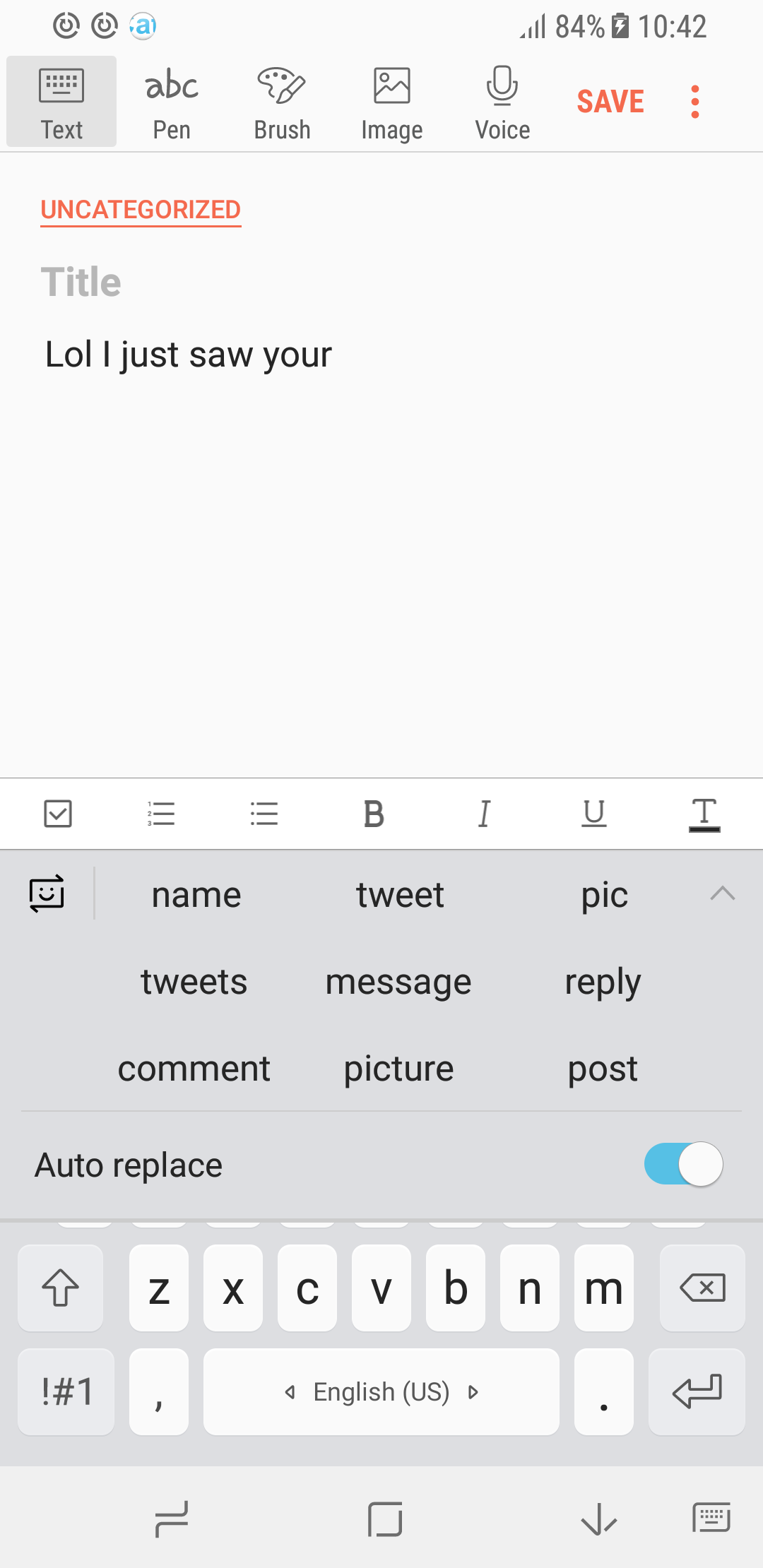

An important point: model predictions are highly dependent on the training set. The screenshots below show sample tips for casual, professional, and informal vocabulary.

| Ordinary model | Molecular biology | Informal vocabulary |

|---|---|---|

|  |  |

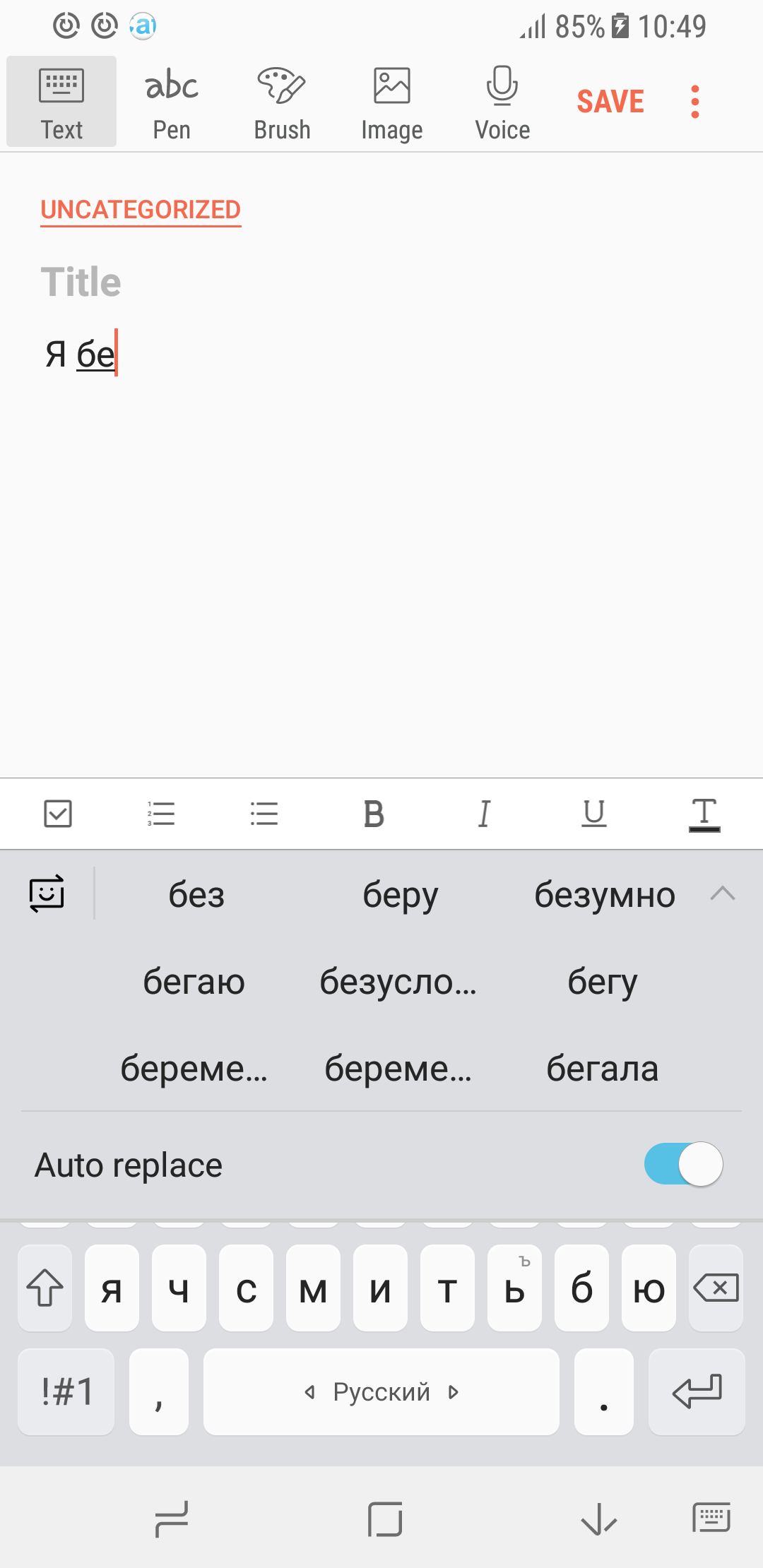

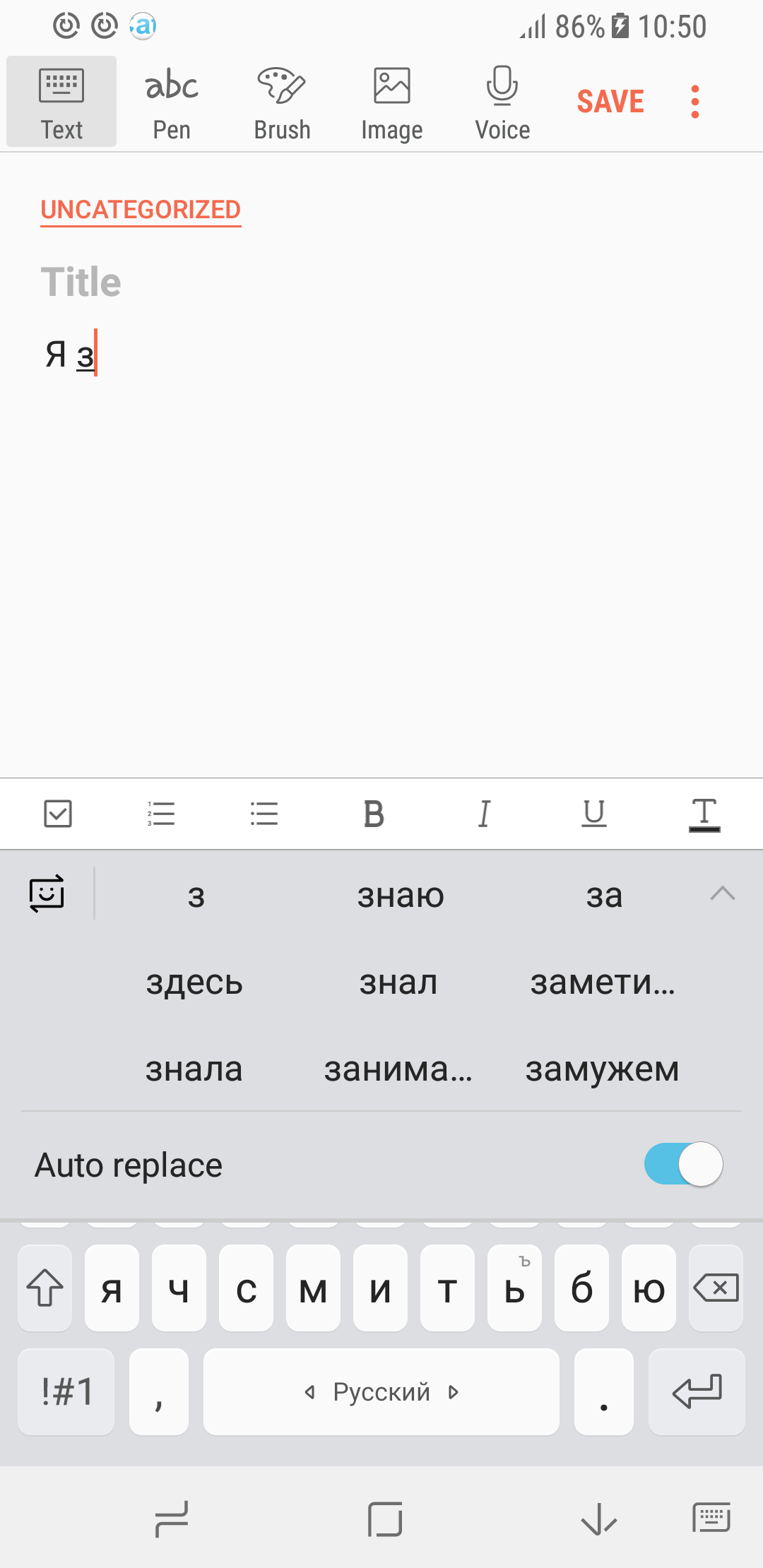

Another example. We collected a large amount of texts from forums and discussions on the Internet in Russian and taught them a recurrent neural network. From the obtained predictions of the language model, the portrait of a typical user of the Runet is well distinguished ... although, rather, it is a typical user.

| Case 1 | Case 2 |

|---|---|

|  |

How to train a model on user data if this data cannot be collected?

We formulate the problem. Texts available on the Internet are not exactly what is usually typed on the keyboard of a mobile device. Most often short messages are written from the phone in the spirit of:

- Hi, how are you? When will you arrive?

- We meet at 7 in Belarus.

- Buy bread.

- Ivan Vasilyevich! I do not have time to finish the work on time, as my computer broke down ...

- Uraaaaaa food to you.

The messages contain a lot of personal information, and there are hardly any users willing to provide their correspondence with the developers of language models “for experiments”.

But in order to prompt the users at the proper level of words, access to what they write is necessary; however, it is private information that cannot be transferred anywhere. It follows from this that an algorithm is needed for learning a language model that does not “peep” into user data.

To solve this problem, we turn to the distributed training of models. The main idea of the approach is as follows:

- The server learns the basic model on a large amount of data (books, articles, forums). This model is able to generate coherent smooth sentences, that is, in general, has learned a language. However, her “style of communication” is different from the style used in SMS and instant messengers, and the tips may be too literary.

- This model is a little training on the phone on the texts typed by the user. It is important that in this case the data is not sent to the server, and no one other than the user gets access to them. It is also worth noting that the additional training of the model takes less than three minutes (tested on the Samsung S8) and slightly affects the charge of the phone’s battery.

- Pre-trained models of different users are collected on the server and combined into one. There are various methods of combining models. In our approach, the weights of the models are simply averaged. Then the updated model is sent to users.

Collecting models from several users is important because each person knows many more words than he manages to type on his device, for example, in a month. Consequently, for a good modeling language needs information from many users.

Good. User data is not sent to the server. But after all, custom models are going. Is it possible to extract private information from these models? Maybe she is there, but in "encrypted" form?

The answer is no. First, the models are averaged over a large number of users. Accordingly, personal information characteristic of only one person is lost against the background of information common to a group of people. Secondly, when teaching neural networks, various methods are used to protect the model from retraining, aimed, inter alia, at minimizing the influence of atypical examples. Thus, the final model contains information about the “average” user.

For interested readers

More details, specific formulations, experiments, and so on were published in an article on arXiv , which will be presented at ICLR 2018. In addition, it presents mathematical evidence that the averaged general model protects well the data of each individual user.

Source: https://habr.com/ru/post/347822/

All Articles