Translating from human to bot language

It all started when I, like many others, wanted to write a bot. The bot was supposed to remind me of all the different things I constantly forget. The first scenario to implement was for the bot to remind me at 10 pm to read through everything I had written in my notebook during the day.

I started by looking for something ready-made. But everything I came across was geared toward meetings - when, with whom, where and for how long. On top of that, not all of them understood normal human language, and some did not even support recurring events. Inconvenient.

So I had to write my own. I decided right away that the bot would be very modular. The first thing I wrote was a simple bridge between Telegram and a unix socket. Then a shell script to throw arbitrary text into that socket. And a cron entry.

./pa_send.sh " !"

One-way communication was now in place. The other direction was not. Something was needed to parse human phrases. Here I made another important decision: I wanted to separate the parsing of phrases from the actual implementation. More precisely, I wanted a separate translator module that takes phrases in human language and produces phrases in bot language.

The first option was to use ChatScript as this translator. It is not quite meant for this - ChatScript is about "choosing an answer to a question", whereas I need "choosing a translation for a question" - but what stops me from using a tool for something other than its intended purpose? Besides ChatScript I looked at RiveScript, and in the end I settled on it.

$ ./human2pa.py aragaer , remind aragaer to " "

A few initial phrases were enough for me, so I moved on to other parts of the bot - in particular the "brain", which I decided to write in Common Lisp. The brain sends human speech to the translator and then works with phrases in the bot language. It then constructs a reply in the bot language, translates it into human language through the same translator, and gives the answer.

And then I found myself in a situation where I no longer really knew what worked and how. So the idea arose to somehow pin down the versions of the different modules in the bot. And while dealing with that, I ran up against the bot language.

The language I had been making up as I went along was inconvenient. Of course, its phrases could be used somehow ...

10 -

maki-uchi log 10

I began to think about how I could do something more convenient. For example, to make parsing easier:

maki-uchi report count: 10

If the colon is treated as part of the word (a special syntax of sorts), this could become something like a p-list:

:maki-uchi report :count 10

But then I suddenly realized that I could get the same p-list in Lisp in a much simpler way. All interaction between the modules of my bot goes through JSON, so I can translate from human speech straight into JSON. As an example of how all this should be parsed, I kept picturing Dialogflow. Unfortunately, it did not suit me: firstly, I want to implement this translation myself, and secondly ... there is the privacy issue. I would like my bot to be usable by people who do not trust cloud services, so that they can set it up and run it locally.

Can a neural network generate valid JSON?

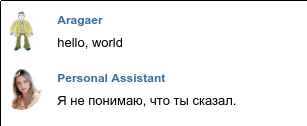

I decided to start with an experiment. Among all the phrases the bot must be able to receive, there is one from the translator itself: "I did not understand what the person was trying to say here." For that it would be enough to know how to turn an arbitrary string, for example "hello, world", into {"unknown": "hello, world"}

In RiveScript, this problem is solved trivially.

+ *
- {"unknown": "<star>"}

But I had come for full translation. So, after several days of reflection, my choice fell on nmt.

I wrote a script that took 39 words, which I had typed in without much thought, and assembled all possible combinations of one to four words. It then took 200 thousand of them, shuffled them, and sent 120 thousand to training and 40 thousand each to error calculation and to cross-validation. Then I set nmt to train on the whole thing.
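The generation step described above can be sketched roughly as follows. This is a minimal illustration, not the original script: the function names `make_splits` and `to_pair` are hypothetical, and it assumes that "combinations" means ordered sequences without repeated words and that the target side is the plain JSON wrapper from the previous section.

```python
import itertools
import json
import random


def make_splits(words, max_len=4, sample_size=200_000, seed=0):
    """Enumerate ordered word sequences of 1..max_len words, sample, and split.

    Assumption: sequences do not repeat a word (itertools.permutations).
    The split is 60/20/20, which gives 120k/40k/40k for a 200k sample.
    """
    phrases = [
        " ".join(combo)
        for n in range(1, max_len + 1)
        for combo in itertools.permutations(words, n)
    ]
    rng = random.Random(seed)
    # random.sample draws without replacement, so the three parts are disjoint.
    sample = rng.sample(phrases, sample_size)
    train_end = sample_size * 3 // 5
    dev_end = sample_size * 4 // 5
    return sample[:train_end], sample[train_end:dev_end], sample[dev_end:]


def to_pair(phrase):
    """Pair a source phrase with its {"unknown": ...} target line."""
    return phrase, json.dumps({"unknown": phrase})
```

With 39 words and up to four words per phrase this enumerates roughly two million phrases, which is comfortably more than the 200 thousand that get sampled.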

And it was ... a failure.

Firstly, from nmt's point of view hello and "hello are completely different words. Secondly, any run of non-whitespace characters counts as a separate word. My target line started to look like { "unknown" : " hello world test " }, with a bunch of spaces in various places. Even so, nmt consistently ... failed to emit the closing quote and brace. By trial and error I found that if the source phrase looks like hello world test END, then the output finally looks the way it should.

human last dog black END -> { "unknown" : " human last dog black " }

But any unfamiliar situation confused the network:

teach human rest green stack END -> { "unknown" : " teach human rest bag " }
stack white light green mirror END -> { "unknown" : " stack white light word " }

And this is just from adding a fifth word, one that was also in the dictionary. Trouble.

Perhaps the task itself was not quite the right one, so I decided to try again, this time with more meaningful phrases.

Digression: the timer

One of the potential scenarios for the bot was supposed to include such a dialogue:

- ? - , . -

So I need some mechanism for scheduling different events with different delays, and with regular repetition as well. I decided to write it in Go, simply to write something in Go. The code turned out to be quite simple - after all, I had already come across concrete implementations of such things before. Of course, a person will not talk to the timer directly, but the syntax of the timer commands seemed simple enough to be a good place to start.

The timer takes commands of three types:

- {"command": "add", "name": "", "what": < json>, "delay": , "repeat": } - repeat and delay are optional, the rest is mandatory. A non-zero repeat means that the event will be periodic.

- {"command": "modify", "name": "", "what": < json>, "delay": , "repeat": } - here repeat, delay and what are optional; if one is not specified, the corresponding parameter of the named event does not change.

- {"command": "cancel", "name": ""} - everything seems obvious here.
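To make the three command shapes concrete, here is a small sketch that builds them on the sending side. The helper `timer_command` is hypothetical (the timer itself is written in Go and its code is not shown here); the sketch only encodes the rules stated above about which fields are mandatory and which are optional.

```python
import json


def timer_command(command, name, what=None, delay=None, repeat=None):
    """Build one timer command as a JSON string (hypothetical helper).

    add:    name and what are mandatory, delay and repeat are optional.
    modify: name is mandatory; what, delay and repeat are optional.
    cancel: only name is used.
    """
    if command not in ("add", "modify", "cancel"):
        raise ValueError(f"unknown command: {command}")
    if command == "add" and what is None:
        raise ValueError("add requires 'what'")
    message = {"command": command, "name": name}
    if command != "cancel":
        # Omitted fields are left out entirely, so for modify the
        # corresponding parameter stays unchanged.
        for key, value in (("what", what), ("delay", delay), ("repeat", repeat)):
            if value is not None:
                message[key] = value
    return json.dumps(message)


print(timer_command("add", "hello", what={"say": "hello"}, delay=30, repeat=7))
print(timer_command("modify", "hello", repeat=10))
print(timer_command("cancel", "hello"))
```

The payload `{"say": "hello"}` is only a placeholder: the text says that "what" is some JSON value, not what it contains.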

Experiment number two

The phrases that needed to be translated looked like this:

print "hello" after 30 seconds every 7 seconds
modify "asdf" to print every 10 seconds
cancel "qwerty"

These phrases were supposed to map to the following commands:

{ "command": "add" , "name": "hello" , "delay": 30 , "repeat": 7 }
{ "command": "modify" , "name": "asdf" , "repeat": 10 }
{ "command": "cancel" , "name": "qwerty" }

Oops, I forgot about "what". It had little effect on the experiment, though.

First, I wrote a script that generated the training files:

- A random action is chosen - add, modify, or cancel

- Each command has several words that stand for it. For add, for example, these are print, emit, send

- For the modify command, the name is followed by "to" and one of the words corresponding to add

- For add or modify, delay and/or repeat are randomly present or absent. Modify must have at least one of the two

- The name field is a random string of characters

- The repeat and delay fields, if present, are random numbers (roughly 0 to 200)

- The dictionary contains the opening and closing curly brackets and the comma. Words that are field names in the JSON go into the dictionary together with their colons.
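The rules in the list above could be implemented along these lines. This is a sketch under assumptions rather than the original generator: `make_example` and `VERBS` are hypothetical names, only the add synonyms (print, emit, send) come from the text, and the verbs for modify and cancel are taken from the example phrases.

```python
import random
import string

VERBS = {
    "add": ["print", "emit", "send"],  # synonyms named in the text
    "modify": ["modify"],              # assumption: only the word from the example
    "cancel": ["cancel"],              # assumption: only the word from the example
}


def make_example(rng):
    """Return one (english, target) pair with space-separated tokens."""
    command = rng.choice(["add", "modify", "cancel"])
    name = "".join(rng.choice(string.ascii_lowercase)
                   for _ in range(rng.randint(3, 8)))
    english = [rng.choice(VERBS[command]), f'"{name}"']
    # Field names carry their colon, so each is a single dictionary word.
    target = ["{", '"command":', f'"{command}"', ",", '"name":', f'"{name}"']
    if command == "modify":
        english += ["to", rng.choice(VERBS["add"])]
    if command != "cancel":
        has_delay = rng.random() < 0.5
        has_repeat = rng.random() < 0.5
        if command == "modify" and not (has_delay or has_repeat):
            # modify must change at least one of the two fields
            if rng.random() < 0.5:
                has_delay = True
            else:
                has_repeat = True
        if has_delay:
            delay = rng.randint(0, 200)
            english += ["after", str(delay), "seconds"]
            target += [",", '"delay":', str(delay)]
        if has_repeat:
            repeat = rng.randint(0, 200)
            english += ["every", str(repeat), "seconds"]
            target += [",", '"repeat":', str(repeat)]
    target.append("}")
    return " ".join(english), " ".join(target)


rng = random.Random(0)
for _ in range(3):
    print(*make_example(rng), sep="  ->  ")
```

Because every token is separated by a space, the target line is still valid JSON and can be checked with a normal JSON parser.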

The result was so-so. I had to add the end-of-line marker to the English text again. If the values of the name field are included in the dictionary, the dictionary ends up as large as the number of lines in the training files. Moreover, these names do not overlap between the training set and the cross-validation set, so the results look poor. And in real translation, any word that was not in the dictionary comes back as <unk> anyway.

If the names are left out of the dictionary but the delay and repeat numbers are included, things are not so bad. The translation quality is about the same, but at least the numbers get "translated" properly.

Conclusions from the first two experiments

I realize that the main problem with this approach is that part of the phrase must be "translated", while part must be handled on an entirely different principle (in this case, not processed at all). This can be solved with an improved nmt, but unfortunately I have not found a simple tutorial on how to use it. Maybe I searched badly. More likely I don’t know what exactly to look for - I don’t understand machine learning and NLP, so I can’t even formulate a proper Google query. Mentally I return to Dialogflow again - there you can mark a part of the phrase that should be processed separately. I try searching for things like "neural network to extract data from sentence" and stumble upon sequence tagging.

I look at the examples and understand that this is exactly what I need.

Experiment three

I plan to do the "translation" in several steps. In the first step, I run the original sentence through the tagger. Then I build a new sentence in which every word whose tag differs from O (capital Latin letter O, for Other) is replaced by its tag.

source phrase: print "hello" after 30 seconds every 7 seconds

tags: O data_sched_name O data_sched_delay O O data_sched_repeat O

phrase with placeholders: print data_sched_name after data_sched_delay seconds every data_sched_repeat seconds

After that the phrase goes to nmt, which produces a translation, and then I substitute the original values back. Since I will be post-processing the output anyway, I give up on trying to generate JSON directly. Instead, I get a phrase like

command add name data_sched_name delay data_sched_delay repeat data_sched_repeat

Building JSON from this is a mere technicality.
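The two mechanical steps around nmt, replacing tagged words with placeholders and turning the flat translation back into a JSON-like structure, can be sketched as below. The function names `apply_tags` and `restore` are hypothetical, and the sketch assumes that every tagged value is a single token and that the translation is a flat sequence of key/value pairs, as in the example above. The tagger and nmt themselves are not called here.

```python
def apply_tags(words, tags):
    """Replace each word whose tag is not 'O' with the tag itself.

    Returns the placeholder sentence and a tag -> original word mapping.
    """
    slots = {}
    replaced = []
    for word, tag in zip(words, tags):
        if tag == "O":
            replaced.append(word)
        else:
            slots[tag] = word
            replaced.append(tag)
    return " ".join(replaced), slots


def restore(translation, slots):
    """Turn 'key value key value ...' into a dict, putting slot values back."""
    tokens = translation.split()
    result = {}
    for key, value in zip(tokens[0::2], tokens[1::2]):
        value = slots.get(value, value).strip('"')
        result[key] = int(value) if value.isdigit() else value
    return result


words = 'print "hello" after 30 seconds every 7 seconds'.split()
tags = "O data_sched_name O data_sched_delay O O data_sched_repeat O".split()
phrase, slots = apply_tags(words, tags)
print(phrase)
# The line below stands in for the nmt output for that phrase.
translated = ("command add name data_sched_name "
              "delay data_sched_delay repeat data_sched_repeat")
print(restore(translated, slots))
```

Keeping the slot values out of the translator's vocabulary is the point of the design: nmt only ever sees a small, closed set of words and placeholders.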

That is how the "translation" will work. But there is still training. Everything is about the same as before, except that now I generate a triple: English + tags + translation. The English + tags pair is needed to train the tagger, and the English-with-placeholders + translation pair is needed to train nmt.

The result is a victory.

Then I finally notice that I lost "what" in the previous experiment. I add it, making it equal to the name. The tagger does not need retraining; I retrain nmt ... and it works!

And now the final touch. I decided to add "please" to the English phrases - at the beginning, at the end, or not at all. If "please" is there, I want to see it in the resulting JSON in the "tone" field - it is useful for the bot to know when it is being addressed politely. A small edit in the code that generates training data, retrain the tagger and nmt. Works!

$ ./translate.py 'please start printing "hello" every 20 seconds after 50 seconds'
< nmt>
{'delay': 50, 'name': 'hello', 'tone': 'please', 'command': 'add', 'what': 'hello', 'repeat': 20}

Final conclusions

I could probably do without nmt: if I know the class of the phrase, I can construct the command myself from the data the tagger extracted from the source sentence. In theory, a classifier alone would be enough. I would then run into trouble with phrases like “Do not tell me what the weather will be like tomorrow in Moscow,” but that can be cured with proper retraining.

Another conclusion is that instead of writing complex scripts in pure Python to generate training data, I can use RiveScript to generate "roughly human" phrases, along with their tags. This will greatly simplify training and retraining.

The most important thing is that now I seem to know how to translate phrases into a language that my bot will understand.

The scripts that I used are available here. For the tagger to work, you need to download a dictionary of a couple of gigabytes (make glove in the sequence_tagging directory). I suspect you can do without it if you build such a dictionary yourself, but I do not know how yet.


Source: https://habr.com/ru/post/347496/
