Developing AI for a turn-based game on Node.js (part 2)

Hello again, friends!

Not so long ago, I shared with you my experience of using a neural network to solve the problem of choosing an action by a bot. To learn more about the essence of the problem, please read the first part of the article .

And I turn to the story of the next stage of work!

After an unsuccessful (but very useful) experience with the neural network, I began to study decision trees and game theory. In the course of studying the issue, I had a lot of ideas and concepts, but I stopped at the following:

')

The following is a recursive function describing this algorithm:

This is only a schematic representation of the recursive function, in fact in the construction of the tree there are nuances, which I will discuss later. I also implemented a function for writing data about the decision tree to a file so that later you can visually see how it looks.

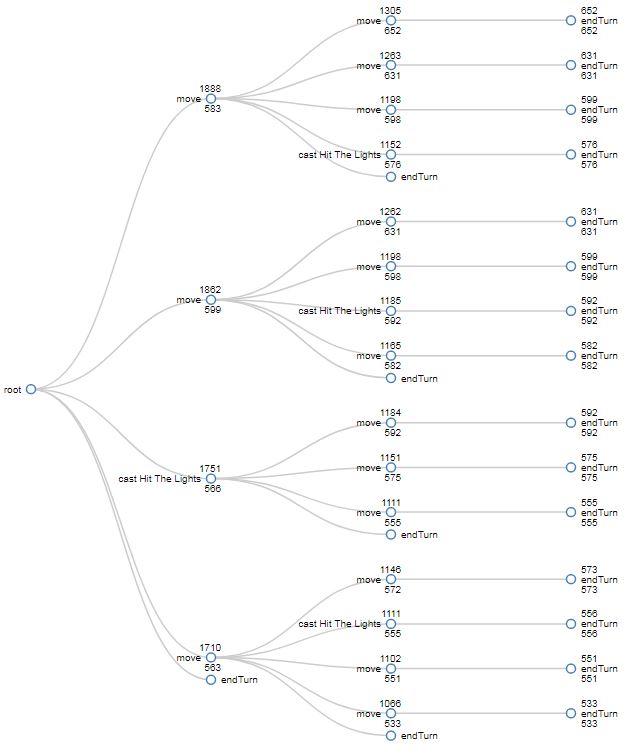

Decision tree (JSON file size: 4kb)

This tree is built for a move, where the character has already spent some energy and only 5 options are given to him: 3 moves to adjacent cells, use the Hit The Lights ability and end the turn. At each node you can see the name of the action, score - the number above and selfScore - the number below. You can easily see how the score is formed at the root level. Due to the fact that all arrays are sorted by score , the optimal solution is always on top. Consider another tree:

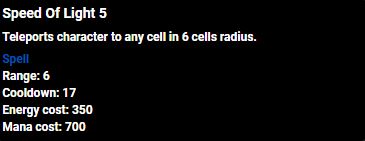

A tree with an open best solution (JSON file size: 169kb)

Unfortunately, I will not be able to show you the full tree for this case, since it will take about 80,000 pixels in height. Let's walk along the opened branch from right to left:

377 (endTurn) + 378 (move) + 373 (cast Defender Of The Faith) + 312 (cast Punishment Due) + 218 (move) = 1658

For the move action, the score value is 1660. The difference is 2 points due to the rounding of numbers to integer values when displayed on the screen. Let's look at the selfScore values for the first tree level: the move action with selfScore equal to 218 is not the largest among the neighboring nodes. The best action at this level is to use "Defender Of The Faith" ( selfScore = 244). However, the total score (1660 versus 1652) is still greater for the move action. It turns out that without thinking through the actions for the whole course, using the ability in this situation would not be so optimal.

Now let's take a closer look at the various aspects of this algorithm.

To assess the situation, use the following function:

This method runs over all the characters involved in the battle and charges a certain number of points for various parameters:

In order to evaluate the result of the execution of the bot action:

We will return to copying a bit later, but for now let's take a closer look at what the simulation is like using the example of one of the simplest mechanics abilities in the game - “Die By The Sword”.

Each ability in the game has a cast function that deals damage, heals, applies effects, and so on. Let's look at the cast function for “Die By The Sword” :

From the function it is clear that inside this function there are at least 3 operations of a random nature (determining damage, determining hit, determining critical impact), as well as utilitarian methods like adding sounds for playback on the client. Naturally, when it comes to predicting the consequences, we cannot use this function. Therefore, in addition to the cast function, each ability also has castSimulation :

For the simulation of movement actions, the same method is used - we simply cut off the unnecessary and replace random methods with a mathematical expectation.

After the basic algorithm was built, I saw for the first time that the bots ponder the course and take actions. It was very cool to see how they decide how to act in this or that situation. But then the following problem suddenly surfaced - sometimes they think oooooochen for a long time. So long that Socket.io crashes because the ping event doesn’t occur due to busy Node.js tree computing. It's time to do optimization.

The first thing that occurred to me was to make the construction of the tree asynchronous. Using the async library and the eachOf method , I converted all passes through the action lists to asynchronous, and all returns in callback 'and. But it only got worse: (The tree was built slowly one and a half times, and debugging the asynchronous construction of a deep tree is another quest ...

Then I began to experiment with process.nextTick , I tried to wrap different pieces of code in it, but did not notice any effect.

As a result, I came to the following scheme:

This solution turned out to be the best one I reviewed, but it is still blocking. The socket continues to fall with a long "thinking" course. If someone has any ideas about changing the architecture of building a tree and using the process.nextTick , I will be happy to help)

Another problem was that sometimes the bot thought for so long that I got an error like JavaScript heap out of memory . It is clear that there is an overflow of RAM, since the decision tree simply does not fit in the default memory space of 512 MB. You can, of course, expand the allotted space, but this is still wrong, it is better to try to meet the minimum. The weak point of my architecture was that I had to keep the states of all combat situations as I was building a tree. And since the objects before the simulation are completely copied, the memory simply chokes. The first thing I did was reduce the weight of the objects before building the tree. So, for example, there is a number of properties for the Character object, which do not participate at all in the simulation: inventory, an array of messages to the log, an array of sounds for playback, frame colors for characters, etc. Now, before building the tree, the object objects are lightened as follows:

Thus, I managed to reduce the size of the object of each character by more than 40%. But even this did not save me from memory overflow. I discussed the problem with a colleague and we found out that it does not make sense to keep a branch in memory after it returns us a list of actions. After all, we are only interested in the most successful solution, and the rest without the need. Now immediately after returning the result, the branch is simply deleted:

After that, I forgot about memory overflow forever.

Despite all the previous optimizations, thinking about the move still sometimes took about 30 seconds: (The next step was to add an additional usageLogic function for each ability, which described the acceptability of its use depending on the situation. For example, there is no point throwing healing on a character with maximum health, therefore Before building a list of actions involving such an ability, we will perform the following check:

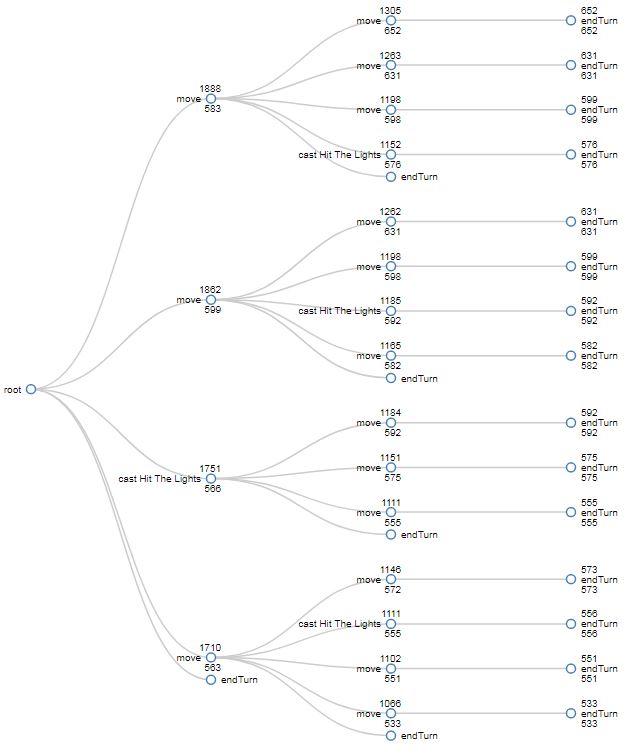

Thus, both bots have become smarter and the number of actions for selection has been reduced by several times. Nevertheless, there was one ability that spawned a large number of actions:

The ability "Speed Of Light" allows you to heat your character to a position within a radius of 6 cells around him.

It turns out that in the worst case, this ability generates 35 actions. The decision tree here is very wide. The solution came naturally after I completed the usageLogic functions for abilities. After all, you can also eliminate unsuccessful points for moving. Above, I have already mentioned the scales of positions and the function calculatePositionWeight . So, at the stage of building a list of cells available for movement, you can evaluate the profitability of each and eliminate the weakest positions:

I applied this approach to both normal movement and abilities that move a character ( “Speed Of Light” ).

Even with this optimization, there are times when thinking is delayed.

The “I don't wanna Stop” ability recovers 850 energy.

The ability to use this ability increases the thinking time by 2 or even 3 times, because after any action, you can almost completely restore energy and start thinking over the course again.

In order to avoid such “long” thoughts, I introduced a threshold time for building a tree. At the moment it is 3 seconds. Before building a new branch with options, I check with this time. , , . . 2000 , Heroku «FREE» 700 . , 3 . , , , , , .

So, I was able to solve the problem with the help of a decision tree. Let's try to understand the advantages and disadvantages of this approach.

Benefits :

Disadvantages :

, . . , . , :

110, , « », , , . «». «» , «» . .

, . , .

PS . , . , .

Not so long ago, I shared with you my experience of using a neural network to solve the problem of choosing an action by a bot. To learn more about the essence of the problem, please read the first part of the article .

And I turn to the story of the next stage of work!

Decision tree

After an unsuccessful (but very useful) experience with the neural network, I began to study decision trees and game theory. In the course of studying the issue, I had a lot of ideas and concepts, but I stopped at the following:

')

- A bot already has a set of actions (nodes) at the very first level of the tree. These are the actions among which the bot will later select the best for the current game situation.

- The bot makes a "simulation" of each action, i.e. trying to commit it "in the mind." The result of such an action will be a change in the game situation, in other words, the transition of the environment to another state.

- The new state is passed to the function of assessing the situation, the result of which is a certain value - score action.

- After calculating the score, the bot generates a list of actions that can be performed from a new virtual situation. Then we recursively repeat points 2 and 3 until the bot can do something other than complete the move.

- When scores are calculated for all actions at one level of the tree, the maximum score returns to the parent level of the tree and is added to the score of the action that generated the child's list of actions. Thus, score values seem to “float” from the extreme branches of the tree to the roots.

- When the tree walk is completed, every action that a bot can do at the moment will have a score . Now we just have to choose the action with the maximum score value and apply it.

The following is a recursive function describing this algorithm:

Lot of code

function buildActionBranch (situation) { var actionList = createActionList(situation); /* createActionList , . actionList (newSituation). */ for(var i = 0; i < actionList.length; i++){ if(actionList[i].type !== "endTurn") { /* , */ actionList[i].branch = buildActionBranch(actionList[i].newSituation); /* selfScore - , score , */ actionList[i].score = actionList[i].selfScore + actionList[i].branch[0].score; /* actionList[i].branch[0] - score */ } else { actionList[i].score = actionList[i].selfScore; } } /* score */ actionList.sort(function (a, b) { if (a.score <= b.score) { return 1; } else if (a.score > b.score) { return -1; } }); /* actionList branch , */ return actionList; } This is only a schematic representation of the recursive function, in fact in the construction of the tree there are nuances, which I will discuss later. I also implemented a function for writing data about the decision tree to a file so that later you can visually see how it looks.

Decision tree (JSON file size: 4kb)

This tree is built for a move, where the character has already spent some energy and only 5 options are given to him: 3 moves to adjacent cells, use the Hit The Lights ability and end the turn. At each node you can see the name of the action, score - the number above and selfScore - the number below. You can easily see how the score is formed at the root level. Due to the fact that all arrays are sorted by score , the optimal solution is always on top. Consider another tree:

A tree with an open best solution (JSON file size: 169kb)

Unfortunately, I will not be able to show you the full tree for this case, since it will take about 80,000 pixels in height. Let's walk along the opened branch from right to left:

377 (endTurn) + 378 (move) + 373 (cast Defender Of The Faith) + 312 (cast Punishment Due) + 218 (move) = 1658

For the move action, the score value is 1660. The difference is 2 points due to the rounding of numbers to integer values when displayed on the screen. Let's look at the selfScore values for the first tree level: the move action with selfScore equal to 218 is not the largest among the neighboring nodes. The best action at this level is to use "Defender Of The Faith" ( selfScore = 244). However, the total score (1660 versus 1652) is still greater for the move action. It turns out that without thinking through the actions for the whole course, using the ability in this situation would not be so optimal.

Now let's take a closer look at the various aspects of this algorithm.

Assessment of the situation

To assess the situation, use the following function:

Lot of code

function situationCost (activeChar, myTeam, enemyTeam, wallPositions){ var score = 0; var effectScores = 0; // score += activeChar.curHealth / activeChar.maxHealth * 110; score += activeChar.curMana / activeChar.maxMana * 55; var positionWeights = arenaService.calculatePositionWeight(activeChar.position, activeChar, myTeam.characters, enemyTeam.characters, arenaService.getOptimalRange(activeChar), wallPositions); score += positionWeights[0] * 250 + Math.random(); score += positionWeights[1] * 125 + Math.random(); for(var j = 0; j < activeChar.buffs.length; j++){ if(activeChar.buffs[j].score) { effectScores = activeChar.buffs[j].score(activeChar, myTeam.characters, enemyTeam.characters, wallPositions); score += this.calculateEffectScore(effectScores, activeChar.buffs[j].name); } } for(j = 0; j < activeChar.debuffs.length; j++){ if(activeChar.debuffs[j].score) { effectScores = activeChar.debuffs[j].score(activeChar, myTeam.characters, enemyTeam.characters, wallPositions); score -= this.calculateEffectScore(effectScores, activeChar.debuffs[j].name); } } //myTeam - for(var i = 0; i < myTeam.characters.length; i++){ if(myTeam.characters[i].id !== activeChar.id) { var ally = myTeam.characters[i]; score += ally.curHealth / ally.maxHealth * 100; score += ally.curMana / ally.maxMana * 50; for(j = 0; j < ally.buffs.length; j++){ if(ally.buffs[j].score) { effectScores = ally.buffs[j].score(ally, myTeam.characters, enemyTeam.characters, wallPositions); score += this.calculateEffectScore(effectScores, ally.buffs[j].name); } } for(j = 0; j < ally.debuffs.length; j++){ if(ally.debuffs[j].score) { effectScores = ally.debuffs[j].score(ally, myTeam.characters, enemyTeam.characters, wallPositions); score -= this.calculateEffectScore(effectScores, ally.debuffs[j].name); } } } } //enemyTeam - for(i = 0; i < enemyTeam.characters.length; i++){ var enemy = enemyTeam.characters[i]; score -= Math.exp(enemy.curHealth / enemy.maxHealth * 3) * 15 - 200; score -= enemy.curMana / enemy.maxMana * 50; for(j = 0; j < enemy.buffs.length; j++){ if(enemy.buffs[j].score) { effectScores = enemy.buffs[j].score(enemy, enemyTeam.characters, myTeam.characters, wallPositions); score -= this.calculateEffectScore(effectScores, enemy.buffs[j].name); } } for(j = 0; j < enemy.debuffs.length; j++){ if(enemy.debuffs[j].score) { effectScores = enemy.debuffs[j].score(enemy, enemyTeam.characters, myTeam.characters, wallPositions); score += this.calculateEffectScore(effectScores, enemy.debuffs[j].name); } } } return score; } This method runs over all the characters involved in the battle and charges a certain number of points for various parameters:

- Health . The higher the percentage of allied health, the more points. Moreover, the dependence is linear:

score += activeChar.curHealth / activeChar.maxHealth * 110;

But when assessing the health of the characters of the enemy, points are accrued according to the following formula:score -= Math.exp(enemy.curHealth / enemy.maxHealth * 3) * 15 - 200;

The graph of the above function in the range from 0 to 1 on the axis of the current health

With the full health of opponents from the total number of points are subtracted about the same values, which are added to the allies. However, the lower the health of a particular character, the more points this situation will pick up. At low health points will no longer be deducted, but added. This is done so that the bots focus their efforts on destroying a single target, instead of “smearing” the damage on the enemy team. - Mana (resource for the use of abilities). There are already no complicated functions, everything is linear for both allies and opponents.

- Position. I have already casually mentioned the assessment of the position of the character on the battlefield.

It consists of two parts: attacking and defensive. The best attacking position is 3 enemy characters within sight and at the optimum distance to attack. The best defensive position is 2 allies within sight and at the optimum distance for using support abilities. Positioning points are awarded only for the active character, since changing the positions of other characters will still affect the value of the corresponding indicators for the active one. - Effects. The most difficult part of the evaluation function. Bot needs to determine

how much one effect imposed on a character is more important than another. For this, each effect has a score function. For example, buff (buff)

“Cauterization” gradually restores a character’s health, but burns his mana. For this effect, the score function looks like this:Lot of codescore: function(owner, myTeam, enemyTeam, walls) { var buffer = {}; /* buffer - , */ for (var i = 0; i < myTeam.length; i++) { if (myTeam[i].id === this.casterId) buffer = myTeam[i]; } /* */ var heal = (this.variant * 80) * (1 + buffer.spellPower); /* , */ heal = arenaService.calculateExpectedHeal(heal, buffer); /* */ var positionWeights = arenaService.calculatePositionWeight(owner.position, owner, myTeam, enemyTeam, arenaService.getOptimalRange(owner), walls); return { effectScore: heal / 10, leftScore: this.left * 5, offensivePositionScore: 0, defensivePositionScore: - positionWeights[1] * 25, healthScore: - owner.curHealth / owner.maxHealth * 25, manaScore: owner.curMana / owner.maxMana * 15 }; }

So, the score function for the effect returns an object with 6 properties:- effectScore. The modifier of the numerical indicators of the effect itself: the size of the damage caused, the healing received, the increase or decrease in the characteristics, etc.

- leftScore. Time modifier effect.

- offensivePositionScore. Modifier attacking position.

- defensivePositionScore. Defensive position modifier.

- healthScore. The amount of health modifier effect holder.

- manaScore. Mana modifier for the effect owner.

In the situation assessment function, all these modifiers are summed up. This amount will be an indicator of the "quality" of this effect.

For all these modifiers about the effect of "Cauterization", we can draw the following conclusions:- The more health he recovers, the higher the amount.

- The more he will hold on the character, the higher the amount.

- The location of the opponents does not affect the amount.

- The further we are from the allies, the higher the amount. Since there is no one to rely on and be safe.

- The less health the character has, the higher the amount.

- The more mana left in the character, the higher the amount. After all, we do not want this effect to completely leave us without mana, if it is so small.

Action simulation

In order to evaluate the result of the execution of the bot action:

- Fully copies the state of the environment so that all its further actions do not change the original state.

- Performs a simulation of an action (moving or using an ability)

- Evaluates the new state of the environment using the evaluation function

We will return to copying a bit later, but for now let's take a closer look at what the simulation is like using the example of one of the simplest mechanics abilities in the game - “Die By The Sword”.

Each ability in the game has a cast function that deals damage, heals, applies effects, and so on. Let's look at the cast function for “Die By The Sword” :

Lot of code

cast : function (caster, target, myTeam, enemyTeam, walls) { /* */ caster.spendEnergy(this.energyCost()); caster.spendMana(this.manaCost()); /* , */ this.cd = this.cooldown(); /* */ if(caster.checkHit()){ /* */ var physDamage = randomService.randomInt(caster.minDamage * (1 + this.variant * 0.35), caster.maxDamage * (1 + this.variant * 0.35)); /* */ var critical = caster.checkCrit(); if(critical){ physDamage = caster.applyCrit(physDamage); } /* */ physDamage = target.applyResistance(physDamage, false); /* , */ caster.soundBuffer.push(this.name); /* physDamage */ target.takeDamage(physDamage, caster, {name: this.name, icon: this.icon(), role: this.role()}, true, true, critical, myTeam, enemyTeam); } else { /* , */ caster.afterMiss(target.charName, {name: this.name, icon: this.icon(), role: this.role()}, myTeam, enemyTeam); } /* , */ caster.afterCast(this.name, myTeam, enemyTeam); } From the function it is clear that inside this function there are at least 3 operations of a random nature (determining damage, determining hit, determining critical impact), as well as utilitarian methods like adding sounds for playback on the client. Naturally, when it comes to predicting the consequences, we cannot use this function. Therefore, in addition to the cast function, each ability also has castSimulation :

/* */ caster.spendEnergy(this.energyCost()); caster.spendMana(this.manaCost()); /* , */ this.cd = this.cooldown(); /* - */ var physDamage = (caster.minDamage * (1 + this.variant * 0.35) + caster.maxDamage * (1 + this.variant * 0.35)) / 2; /* */ physDamage = caster.hitChance * ((1 - caster.critChance) * physDamage + caster.critChance * (1.5 + caster.critChance) * physDamage); physDamage = target.applyResistance(physDamage, false); /* "" */ target.takeDamageSimulation(physDamage, caster, true, true, myTeam, enemyTeam); caster.afterCastSimulation(this.name); For the simulation of movement actions, the same method is used - we simply cut off the unnecessary and replace random methods with a mathematical expectation.

Optimization

After the basic algorithm was built, I saw for the first time that the bots ponder the course and take actions. It was very cool to see how they decide how to act in this or that situation. But then the following problem suddenly surfaced - sometimes they think oooooochen for a long time. So long that Socket.io crashes because the ping event doesn’t occur due to busy Node.js tree computing. It's time to do optimization.

1) Release of flow

The first thing that occurred to me was to make the construction of the tree asynchronous. Using the async library and the eachOf method , I converted all passes through the action lists to asynchronous, and all returns in callback 'and. But it only got worse: (The tree was built slowly one and a half times, and debugging the asynchronous construction of a deep tree is another quest ...

Then I began to experiment with process.nextTick , I tried to wrap different pieces of code in it, but did not notice any effect.

As a result, I came to the following scheme:

- For the very first list of actions, we asynchronously start building branches.

- For all nested branches, the tree will be synchronized.

Lot of code

/* - " " */ function buildActionBranchAsync(myTeam, enemyTeam, activeCharId, wallPositions, cb){ var self = this; /* , */ var actionList = self.createActionList(myTeam, enemyTeam, activeCharId, wallPositions); async.eachOf(actionList, function(actionInList, index, cb){ /* */ process.nextTick(function() { if(actionInList.type != "endTurn" ) { /* */ actionInList.branch = self.buildActionBranchSync(actionInList.myTeamState, actionInList.enemyTeamState, actionInList.activeCharId, wallPositions); if(actionInList.branch && actionInList.branch[0]) { actionInList.score = actionInList.selfScore + actionInList.branch[0].score; } else { actionInList.score = actionInList.selfScore; } } else { actionInList.score = actionInList.selfScore; } cb(null, null); }); }, function(err, temp){ if(err){ return console.error(err); } actionList.sort(function (a, b) { if (a.score <= b.score) { return 1; } else if (a.score > b.score) { return -1; } }); cb(actionList); }) } This solution turned out to be the best one I reviewed, but it is still blocking. The socket continues to fall with a long "thinking" course. If someone has any ideas about changing the architecture of building a tree and using the process.nextTick , I will be happy to help)

2) Memory release

Another problem was that sometimes the bot thought for so long that I got an error like JavaScript heap out of memory . It is clear that there is an overflow of RAM, since the decision tree simply does not fit in the default memory space of 512 MB. You can, of course, expand the allotted space, but this is still wrong, it is better to try to meet the minimum. The weak point of my architecture was that I had to keep the states of all combat situations as I was building a tree. And since the objects before the simulation are completely copied, the memory simply chokes. The first thing I did was reduce the weight of the objects before building the tree. So, for example, there is a number of properties for the Character object, which do not participate at all in the simulation: inventory, an array of messages to the log, an array of sounds for playback, frame colors for characters, etc. Now, before building the tree, the object objects are lightened as follows:

Lot of code

function lightWeightTeamBeforeSimulation(team){ delete team.teamName; delete team.lead; for(var i = 0; i < team.characters.length; i++){ var char = team.characters[i]; delete char.battleTextBuffer; delete char.logBuffer; delete char.soundBuffer; delete char.battleColor; delete char.charName; delete char.gender; delete char.isBot; delete char.portrait; delete char.race; delete char.role; delete char.state; delete char.calcParamsByPoint; delete char.calcItem; delete char.updateMods; delete char.removeRandomBuff; delete char.removeRandomDebuff; delete char.removeAllDebuffs; delete char.removeRandomDOT; delete char.stealRandomBuff; delete char.afterDealingDamage; delete char.afterDamageTaken; delete char.afterMiss; delete char.removeImmobilization; delete char.afterCast; delete char.getSize; for(var j = 0; j < char.abilities.length; j++){ var ability = char.abilities[j]; delete ability.cast; delete ability.icon; delete ability.role; } for(j = 0; j < char.buffs.length; j++){ var effect = char.buffs[j]; delete effect.icon; delete effect.role; delete effect.apply; } for(j = 0; j < char.debuffs.length; j++){ var effect = char.debuffs[j]; delete effect.icon; delete effect.role; delete effect.apply; } } return team; } Thus, I managed to reduce the size of the object of each character by more than 40%. But even this did not save me from memory overflow. I discussed the problem with a colleague and we found out that it does not make sense to keep a branch in memory after it returns us a list of actions. After all, we are only interested in the most successful solution, and the rest without the need. Now immediately after returning the result, the branch is simply deleted:

Lot of code

function buildActionBranchSync: function(myTeam, enemyTeam, activeCharId, wallPositions){ var actionList = this.createActionList(myTeam, enemyTeam, activeCharId, wallPositions); for(var z = 0; z < actionList.length; z++){ /* ... */ actionList[z].branch = this.buildActionBranchSync(actionList[z].myTeamState, actionList[z].enemyTeamState, actionList[z].activeCharId, wallPositions); actionList[z].score = actionList[z].selfScore + actionList[z].branch[0].score; delete actionList[z].branch; /* */ /* ... */ } /* sort actionList */ return actionList; } After that, I forgot about memory overflow forever.

3) Reduction of the list of actions

Despite all the previous optimizations, thinking about the move still sometimes took about 30 seconds: (The next step was to add an additional usageLogic function for each ability, which described the acceptability of its use depending on the situation. For example, there is no point throwing healing on a character with maximum health, therefore Before building a list of actions involving such an ability, we will perform the following check:

usageLogic: function(target) { /* 60% */ return target.curHealth < target.maxHealth * 0.6; } Thus, both bots have become smarter and the number of actions for selection has been reduced by several times. Nevertheless, there was one ability that spawned a large number of actions:

The ability "Speed Of Light" allows you to heat your character to a position within a radius of 6 cells around him.

It turns out that in the worst case, this ability generates 35 actions. The decision tree here is very wide. The solution came naturally after I completed the usageLogic functions for abilities. After all, you can also eliminate unsuccessful points for moving. Above, I have already mentioned the scales of positions and the function calculatePositionWeight . So, at the stage of building a list of cells available for movement, you can evaluate the profitability of each and eliminate the weakest positions:

Lot of code

/* ... */ var bestMovePoints = []; /* , */ var movePoints = arenaService.findMovePoints(myTeam, enemyTeam, activeChar, false, wallPositions); /* */ for(var i = 0; i < movePoints.length; i++){ var weights = arenaService.calculatePositionWeight(movePoints[i], activeChar, myTeam.characters, enemyTeam.characters, arenaService.getOptimalRange(activeChar), wallPositions); /* (weights[0]) , (weights[1]) */ bestMovePoints.push({ point: movePoints[i], weightScore: weights[0] * 6 + weights[1] * 4 }) } /* */ bestMovePoints.sort(function (a, b) { if (a.weightScore <= b.weightScore) { return 1; } else if (a.weightScore > b.weightScore) { return -1; } }); /* 3 */ bestMovePoints = bestMovePoints.slice(0, 3); for(j = 0; j < bestMovePoints.length; j++){ /* */ } I applied this approach to both normal movement and abilities that move a character ( “Speed Of Light” ).

4) Thinking threshold

Even with this optimization, there are times when thinking is delayed.

The “I don't wanna Stop” ability recovers 850 energy.

The ability to use this ability increases the thinking time by 2 or even 3 times, because after any action, you can almost completely restore energy and start thinking over the course again.

In order to avoid such “long” thoughts, I introduced a threshold time for building a tree. At the moment it is 3 seconds. Before building a new branch with options, I check with this time. , , . . 2000 , Heroku «FREE» 700 . , 3 . , , , , , .

Conclusion

So, I was able to solve the problem with the help of a decision tree. Let's try to understand the advantages and disadvantages of this approach.

Benefits :

- You can track the entire "train of thought" bot and find errors

- Fine-tuning each ability, effect, and rating points allows you to control the balance of combat

- No need to collect data for the training sample, and also save the sample in the database

- No need to store the neural network model in the database

- Easily add new abilities without having to adapt AI to them.

Disadvantages :

- For each action, the tree must be built from scratch, which is very expensive for server resources.

- Bots are not trained, they will repeat the same mistakes over and over again.

- You need to debug and configure the internal decision-making algorithm.

, . . , . , :

score += activeChar.curHealth / activeChar.maxHealth * 110; 110, , « », , , . «». «» , «» . .

, . , .

PS . , . , .

Source: https://habr.com/ru/post/347330/

All Articles