IT infrastructure of Navalny's headquarters and signature collection: preparing for the collection, the “Navalny 20!8” site

Series of publications about collecting signatures

1. Introduction, the “Navalny 20!8” site, preparation for the collection

2. Hardware and networks, video surveillance

3. Reaper 2018: a system for collecting signatures

4. Project Management

Introduction

This is a story about how the IT infrastructure of Alexei Navalny's regional headquarters and the system for collecting signatures in support of his nomination as a presidential candidate in Russia are organized.

It is also a story about how a small team used free software and inexpensive components to build a complex system for collecting signatures across the whole country. The project contains no complicated technical solutions, but it has many important details that cannot be foreseen from typical IT development experience.


For convenience, the material is divided into four posts, which are best read sequentially.

This is a technical article, but many of the issues discussed here cannot be understood without minimal knowledge of the current political context, so that context is described to the extent necessary. If for some reason you are afraid of the word “Navalny” (it will appear several times) or of any mention of democratic institutions, just do not read this text. Political questions will not be discussed in the comments.

Campaign goal

Alexei Navalny's registration as a presidential candidate.

Tasks assigned to the IT department

(in chronological order):

- Pre-registering everyone who is ready to sign for the nomination of our candidate;

- Keeping the network of headquarters throughout Russia running;

- Creating a system to collect 315,000 ideal signatures.

Historical and political context

If you are not backed by a parliamentary party, you need to collect signatures to take part in the elections. This is a barrier procedure used to keep “unapproved” candidates out of the elections.

Unlimited grounds for refusing registration are built into the collection rules themselves:

- The collection of signatures is strictly limited in time;

- By law, only a small percentage of the required number of signatures is allowed to be defective; signatures cannot be submitted with a comfortable margin;

- We cannot verify signatures on our side, since the voters' data must match the FMS database, which is accessible only to state bodies;

- The handwriting expert who checks signatures for the CEC may reject any signature and bears no legal liability for an error;

- The verification scheme itself implies a significant percentage of false positives (the Bayes' theorem paradox as a barrier to elections).

We have already encountered this in Novosibirsk when we collected signatures for the elections to the Legislative Assembly.

To collect signatures in Novosibirsk, we created the Reaper system. It was geared toward collecting signatures “in the field” and at campaign cubes (street stands), managed the collectors' routes, kept track of all signature sheets and let us rank signatures by the results of various checks.

Collectors in Novosibirsk brought in more than 16,000 signatures, of which we selected and submitted the best 11,722. Despite the rigorous selection, the working group of the election commission found many “invalid signatures”, and the election commission refused to register the candidates. For more information on the absurd reasons why signatures are considered invalid, read here.

The new system was based on the accumulated experience of collecting signatures and their subsequent protection in the election commission.

Features of the new signature collection

For the nomination of a presidential candidate, the conditions for collecting signatures are even stricter:

- No more than 315 thousand signatures may be submitted;

- At least 300 thousand signatures must be recognized as valid;

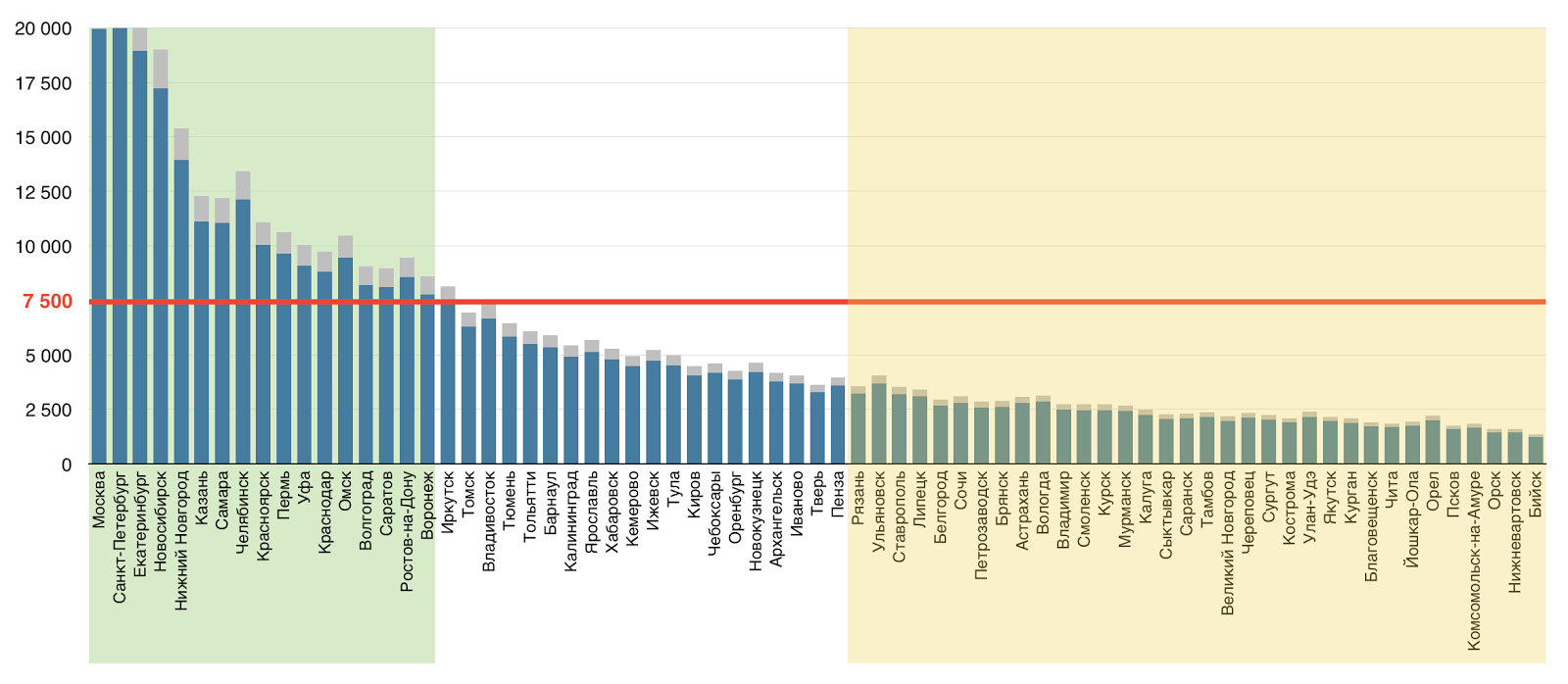

- No more than 7,500 signatures are counted from any one region;

- The short collection period (from December 27 to January 31) includes the long New Year holidays, when many people go away.

Given the previous experience and new requirements, we adopted the following basic principles.

All-Russian Staff Network

Because of the regional quotas, it was impossible to work only in, say, the ten largest cities. 315 thousand signatures could be collected only by covering at least 40 cities. In sparsely populated regions, collecting signatures is harder, so in practice a successful collection required opening headquarters in most regions of the country.

The forecast of the number of signatures by the time the collection is successfully completed shows that in large cities the number of people willing to sign would significantly exceed the regional quotas. Moscow (127 thousand) and St. Petersburg (63 thousand) did not fit on the screen.
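The arithmetic behind these limits is easy to check. The sketch below uses only the figures quoted above (315,000 submitted, 300,000 valid, 7,500 per region); the variable names are ours, chosen for illustration.

```python
import math

MAX_SUBMITTED = 315_000   # signatures that may be handed over
MIN_VALID = 300_000       # signatures that must be recognized as valid
REGION_CAP = 7_500        # signatures counted from a single region

# Share of submitted signatures that may be rejected before registration fails.
allowed_defect_share = (MAX_SUBMITTED - MIN_VALID) / MAX_SUBMITTED

# Fewest regions that could supply the full quota if each one reached its cap.
min_regions = math.ceil(MAX_SUBMITTED / REGION_CAP)

print(f"allowed defects: {allowed_defect_share:.2%}")
print(f"minimum regions: {min_regions}")
```

In other words, the margin for defects is just under 5%, and even in the best case the full quota cannot be filled from fewer than 42 regions.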

Signature collection only at headquarters

To collect door to door, we would have had to hire several thousand collectors. Anyone who has ever worked with paid collectors (or, for example, sociology students) knows that not all of them are equally careful about procedure and not all resist the temptation to simply “draw” a signature or two. Careless filling leads to a high defect rate, and “drawn” signatures are such a common problem that the CEC provides for verification by a handwriting expert. Even having a handwriting expert on staff and demonstratively filing several police reports cannot fully protect a headquarters from forgers (we checked). Moreover, a collector may forge signatures not only out of malice but, on the contrary, to "help the headquarters."

We knew that if we collected "in the field," "toxic collectors" would certainly be planted among us, as happened in Novosibirsk. Toxic collectors deliberately make mistakes in voter data (for example, they replace one digit in the passport number). Their task is to push the number of invalid signatures above the limit, after which the electoral commission refuses registration. In Novosibirsk, we spent a lot of effort weeding out toxic signatures. When collecting across the whole country, that is impossible.

Only in permanent headquarters was it possible to ensure sufficient quality of signatures, conditions for filling in signature sheets accurately, and the safety of the sheets.

Multistage verification of signatures

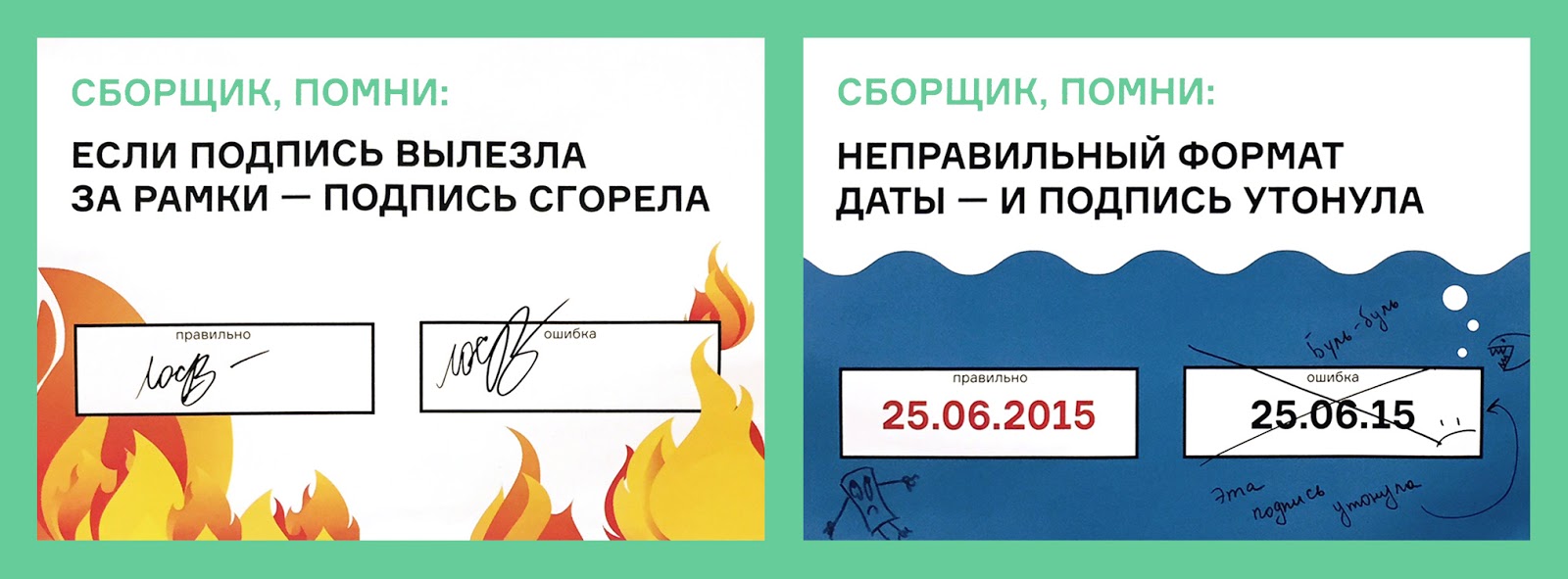

Ideal signatures are a mathematical abstraction. Real signature collection is a complicated and difficult process. Even honest and well-trained collectors make mistakes, and under time pressure, administrative pressure and provocations there will be even more defects.

We have a lot of data on how errors appear. In our experience, signature sheets collected entirely honestly will still contain about 10% of signatures that the electoral commission deems invalid.

We had to submit not just good signatures but signatures that the electoral commission would accept. This required several verification stages and a ranking mechanism, so that we could select and submit only those signatures most likely to pass the commission's checks, however absurd we might find them.
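The selection step can be sketched as follows. This is an illustration under our own assumptions, not the actual Reaper code: the `Signature` fields and the single numeric `score` are hypothetical stand-ins for the results of the verification stages.

```python
from dataclasses import dataclass

REGION_CAP = 7_500     # per-region limit quoted in the text
TOTAL_CAP = 315_000    # overall submission limit quoted in the text


@dataclass
class Signature:
    sig_id: int
    region: str
    score: float  # hypothetical: higher means more likely to pass the checks


def select_for_submission(signatures, region_cap=REGION_CAP, total_cap=TOTAL_CAP):
    """Pick the best-scored signatures without exceeding either limit."""
    taken_per_region = {}
    selected = []
    for sig in sorted(signatures, key=lambda s: s.score, reverse=True):
        if len(selected) >= total_cap:
            break
        if taken_per_region.get(sig.region, 0) >= region_cap:
            continue  # this region's quota is already full
        taken_per_region[sig.region] = taken_per_region.get(sig.region, 0) + 1
        selected.append(sig)
    return selected
```

A greedy pass over the sorted list is enough here because the only constraints are the two caps; a weaker signature from an under-filled region is preferred over a stronger one from a region that is already full.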

Passport scan for each signature

Without a scan, the entire responsibility for the quality of a signature lies with the collector. If he accidentally or intentionally made a mistake in the passport number, we would never know.

From experience we found that errors in copying passport data onto the signature sheet and data entry errors alone easily exhaust the permitted 5% limit, even when signatures are collected in comfortable conditions by conscientious collectors.

With a scan of the document, we could run several independent verification stages on each signature and make corrections.

In addition, our lawyers were preparing to fight for every signature in court. Last time there was a large category of rejected signatures about which we knew for certain that the signature matched the passport but had been checked against an outdated and error-ridden database. A single database and the availability of scans would let the lawyers automate the preparation of complaints in such cases.

Of course, a passport could only be scanned at a headquarters; otherwise it would have been impossible to ensure adequate security of personal data.

Synchronization with electronic database

All operations with signatures and signature sheets, all statuses and movements, had to be reflected in the electronic database. The signature collection system was meant to control every stage of the collection and detect errors. Only this way could we maintain order (and peace of mind) when working with hundreds of thousands of physical objects.

What has been done in the new version of the system

- So that we had somewhere to collect signatures, we deployed a network of regional headquarters. The IT infrastructure of the headquarters consists of several physical servers, a number of virtual machines, 70 routers, 230 cameras and 189 equipped workstations. Internally, the system is used by more than 250 people simultaneously.

- To bring several hundred thousand people to the headquarters in a short period of time, we started registering voters on the 20!8 site in advance, where they confirmed their data beforehand.

- To reduce the number of errors, we built a system that supports independent checks of how each signature sheet is filled in. The system consists of several web applications and a mobile application for two platforms.

- To get data into the system, we assembled (and partly manufactured) a set of equipment for scanning passports, designed a scheme for the secure transmission of personal data, and rolled it out in all headquarters.

- To make address formatting correct from the electoral commission's point of view, we set up search over the FIAS database and, together with the lawyers, tinkered with it extensively to account for all the requirements of the law.

- To (partially) protect the headquarters and have additional arguments in court, we set up a round-the-clock video surveillance and recording system.

- To test the infrastructure and procedures, refine the data and prepare the headquarters for the collection, we ran a large preliminary voter verification, which 81,750 people went through.

- We designed the signature sheet, a logistics system for sheets at the headquarters, and a physical storage and quick-access system for the central headquarters.

The main technologies of our web applications

Primary backend language: Python.

Front-end: JavaScript, jQuery, React, D3.js.

Frameworks: Django (6 applications), aiohttp (1 application).

Databases: PostgreSQL, Redis and others.

Full-text search: Sphinx.

HTTP server: Nginx, Varnish.

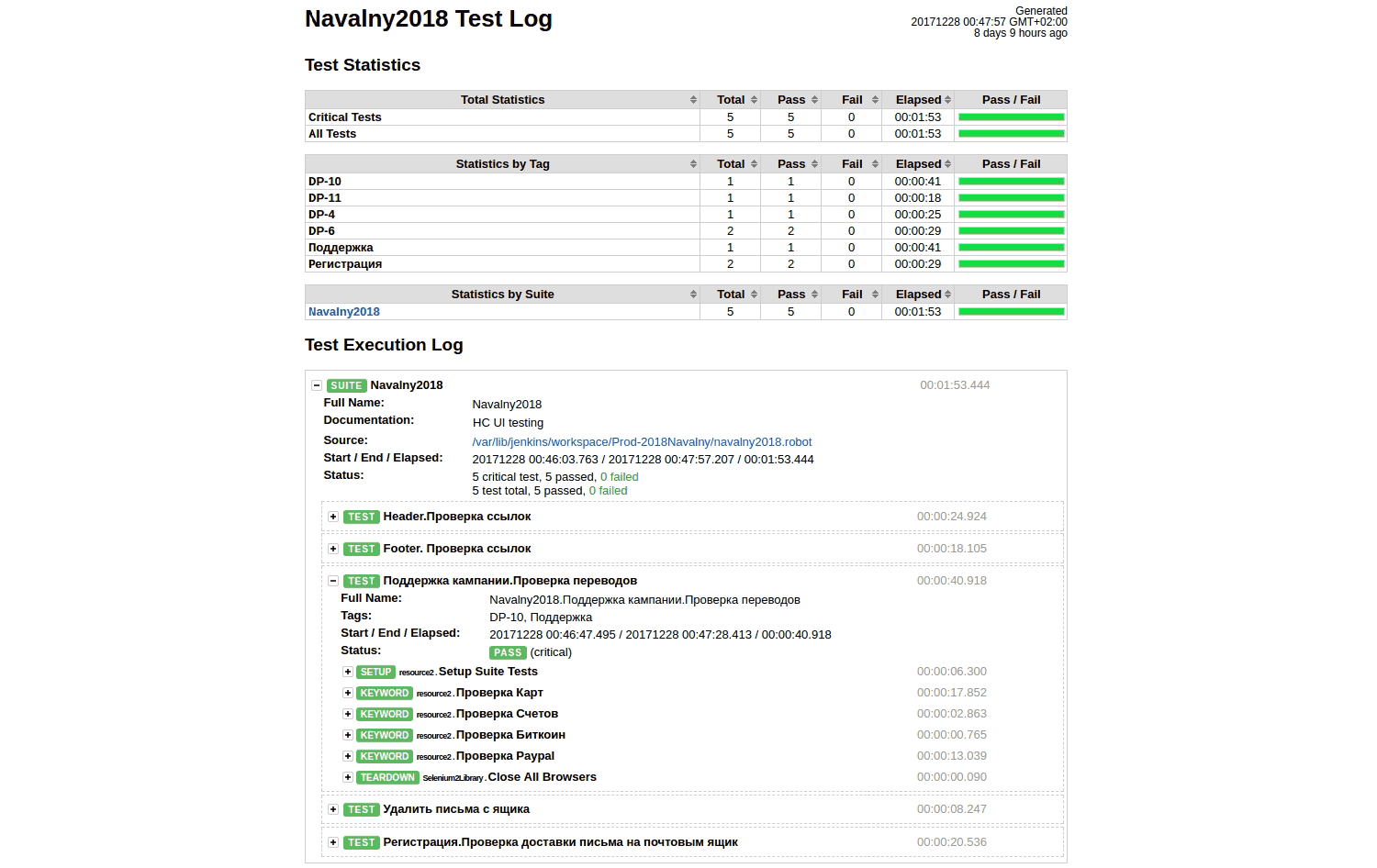

Testing: Jenkins, Browserstack, RobotFramework, Locust.

Monitoring: Zabbix, Elasticsearch, Kibana, Sentry.

Deploy: Ansible and other tools.

Server configuration management: Chef.

Part One: the “Navalny 20!8” site

We had to bring several hundred thousand people to the headquarters in a very limited period of time. To do this, we started registering supporters on the very first day of the campaign. Recruiting and registering supporters is one of the main tasks of the “Navalny 20!8” site, so there is a registration form on almost every page.

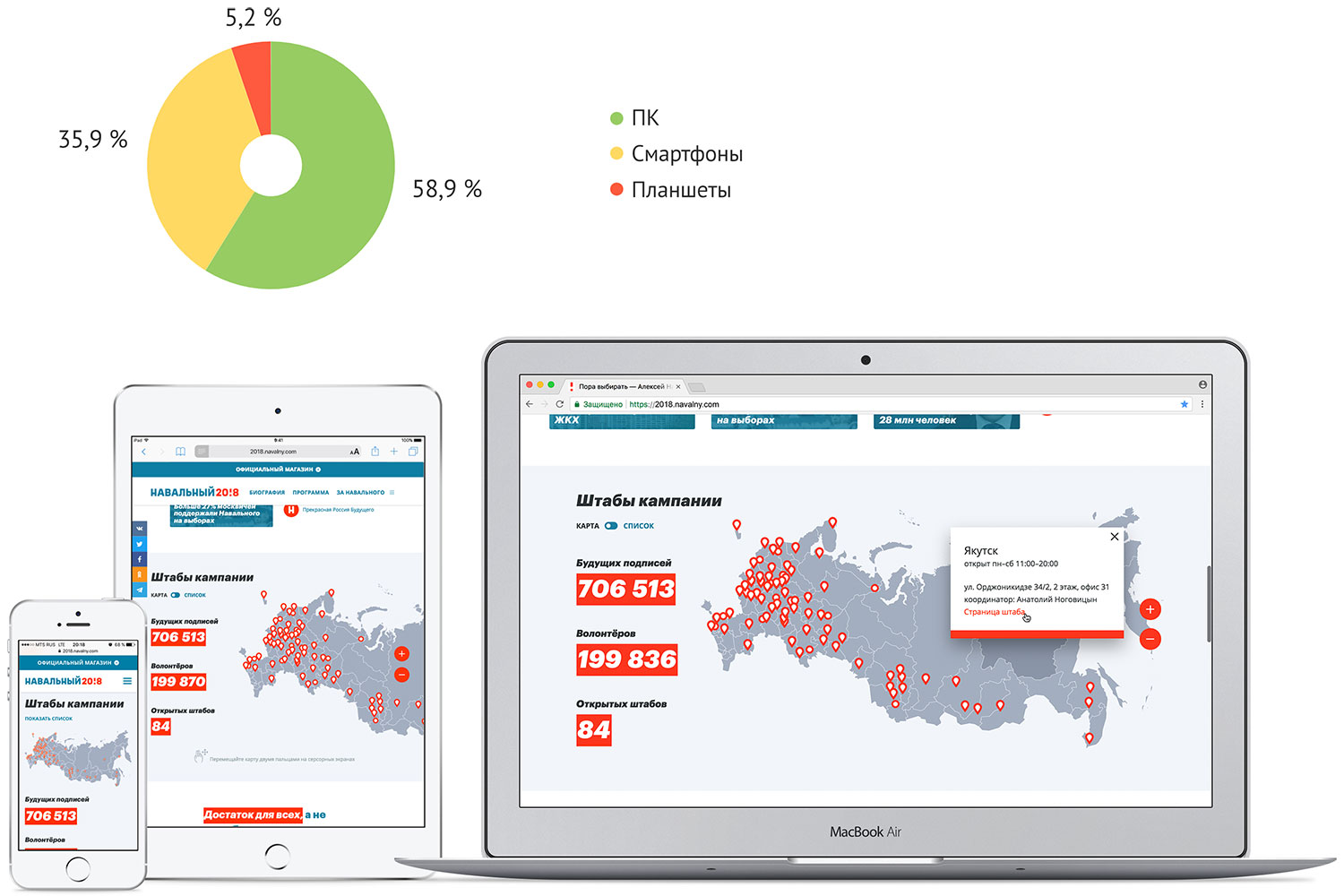

Since all this is needed for more than pretty numbers, it was important for us to know that registered supporters are real people rather than bots, to be able to contact them, and to understand which city they are registered in (to predict how the regional quotas would be filled). Registration on the site was therefore fairly involved and required confirming a phone number. So as not to deceive ourselves or others, we counted as potential signatories only people who filled out the questionnaire and confirmed their phone number. That is why the main page shows not a million or more (the total number of registrations) but only 706,513 “future signatures”.

From a web development standpoint it is quite an ordinary product. The site is built with Python + Django + PostgreSQL, using the standard ORM and the standard admin. Over a year and a half the site went through several updates: sections were added, the registration form changed, and texts and images on the pages changed. We tried not to complicate the design so that pages could be assembled from standard blocks; as a result, some sections went from idea to launch in three days.

Roughly half of the visitors to any modern site come from mobile devices. We tried to make the site convenient for everyone, so the layouts were drawn and adjusted to display correctly at any screen width, starting from 320px.

Headquarters Map

The only complex interactive element that visitors see is the map of Russia with the headquarters marked on it. When the number of headquarters exceeded 50, the map became hard to navigate because the markers crowd together in the European part of the country. The map was originally conceived as a purely decorative element but unexpectedly acquired functionality, so for those who have already appreciated the nationwide scale of the campaign and just want to find their city, we made a list mode.

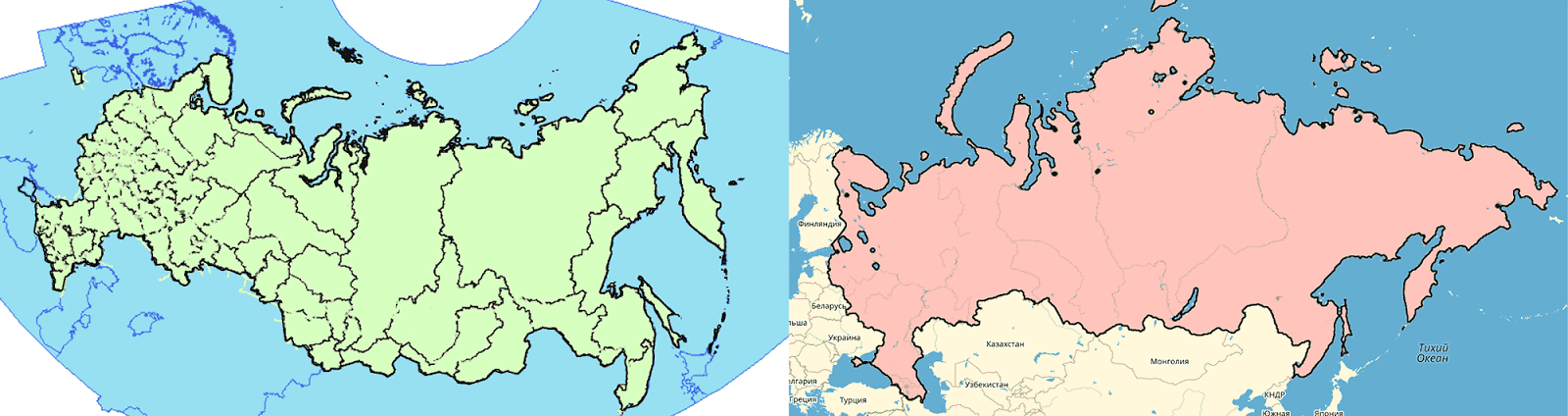

The map is built with the beautiful and versatile d3.js library. We decided to write our own script rather than use standard Google Maps or Yandex.Maps because of the map projection. There are many ways to unfold the Earth's ellipsoid onto a plane. In the Mercator projection, objects are strongly stretched toward northern latitudes, while we need more space in the areas where the major cities are concentrated. Besides, Russia looks rather strange in the Mercator projection. We chose Albers (Albers-Siberia), a conic projection more familiar from geography textbooks.

Russia of a healthy person (Albers conic projection) and Russia of a smoker (Mercator projection)
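For readers who want to see what the conic projection does, here is a small sketch of the standard Albers equal-area conic formulas on a unit sphere. The site itself uses d3.js; this Python version is only an illustration, and the default parameters (central meridian 105°E, standard parallels 52°N and 64°N) are an assumption about a typical Siberia-centred setup, not values taken from the site's code.

```python
import math


def albers_project(lon_deg, lat_deg, lon0=105.0, lat0=0.0, par1=52.0, par2=64.0):
    """Albers equal-area conic projection on a unit sphere.

    Returns (x, y) with the origin at (lon0, lat0). The defaults are
    assumed, illustrative parameters.
    """
    lam, phi = math.radians(lon_deg), math.radians(lat_deg)
    lam0, phi0 = math.radians(lon0), math.radians(lat0)
    phi1, phi2 = math.radians(par1), math.radians(par2)

    n = (math.sin(phi1) + math.sin(phi2)) / 2          # cone constant
    c = math.cos(phi1) ** 2 + 2 * n * math.sin(phi1)
    rho = math.sqrt(c - 2 * n * math.sin(phi)) / n     # radius for this latitude
    rho0 = math.sqrt(c - 2 * n * math.sin(phi0)) / n   # radius for the origin
    theta = n * (lam - lam0)                           # angle from the central meridian

    return rho * math.sin(theta), rho0 - rho * math.cos(theta)


moscow = albers_project(37.62, 55.75)
vladivostok = albers_project(131.89, 43.12)
```

Parallels become arcs of concentric circles and meridians become straight lines converging toward the pole, which is why the northern regions are not stretched the way they are in Mercator.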

Content Management

The editorial part of the site is of little interest. It uses the usual Django admin panel with minimal customization. With limited development resources, it is more cost-effective to teach a few admin users the standard tool than to spend time building a truly convenient one.

Some solutions that make the editor's life easier were taken from other projects. One example is a client-side typographer for texts. Ours is convenient because it plugs easily into any text or string input field. The state of auto-typography (on/off) is stored as a non-printable character at the end of the line and does not depend on the backend.
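The idea of keeping the on/off state inside the string itself can be sketched like this. The real typographer runs on the client side; this Python version only illustrates the principle, and the choice of a zero-width space as the marker is our assumption, since the text does not say which character is actually used.

```python
# Assumption: a zero-width space marks "auto-typography on".
MARKER = "\u200b"


def set_typography(line: str, enabled: bool) -> str:
    """Return the line with the trailing marker added or removed."""
    bare = line.rstrip(MARKER)
    return bare + MARKER if enabled else bare


def typography_enabled(line: str) -> bool:
    """The flag travels with the text, so no extra backend field is needed."""
    return line.endswith(MARKER)
```

Because the flag is part of the stored value, any field that can hold a string can carry it without schema changes.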

To work with the complex content of posts and news, we use a block editor that is also used on many other projects.

Blocks come in different types, and each project has its own set. Each block contains content and may contain settings. Block data is stored in the database as JSON, and markup inside a text block is stored in Markdown format.

For display, the blocks are converted to the required format: HTML for a post, plain text for indexing, RSS or XML for Yandex.Dzen, JSON for the mobile application, and so on. This gives a predictable result on any device, even with fairly complex content formatting.

The first version was based on the Sir Trevor code. Later, when maintaining Sir Trevor's spaghetti code became too hard, the editor was rewritten in React.
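The storage and rendering scheme described above can be illustrated roughly as follows. The block types, field names and markup are hypothetical, since the real set of blocks is not described here, and Markdown inside text blocks is deliberately left unparsed to keep the sketch short.

```python
import html
import json

# Hypothetical stored value: a JSON list of blocks with content and settings.
stored = json.dumps([
    {"type": "heading", "content": "Headquarters opened"},
    {"type": "text", "content": "We opened a new headquarters."},
    {"type": "image", "content": "hq.png", "settings": {"caption": "Opening day"}},
])


def render_html(block):
    """HTML for the post page."""
    kind, content = block["type"], html.escape(block["content"])
    if kind == "heading":
        return f"<h2>{content}</h2>"
    if kind == "image":
        caption = html.escape(block.get("settings", {}).get("caption", ""))
        return f'<figure><img src="{content}"><figcaption>{caption}</figcaption></figure>'
    return f"<p>{content}</p>"


def render_text(block):
    """Plain text for the search index: an image contributes only its caption."""
    if block["type"] == "image":
        return block.get("settings", {}).get("caption", "")
    return block["content"]


blocks = json.loads(stored)
page_html = "\n".join(render_html(b) for b in blocks)
index_text = " ".join(render_text(b) for b in blocks)
```

Each output format gets its own small renderer over the same stored blocks, which is what makes the result predictable across devices.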

Analytics

The most interesting things, from a technical point of view, happen in the site's admin panel. From there we watched the stream of registrations.

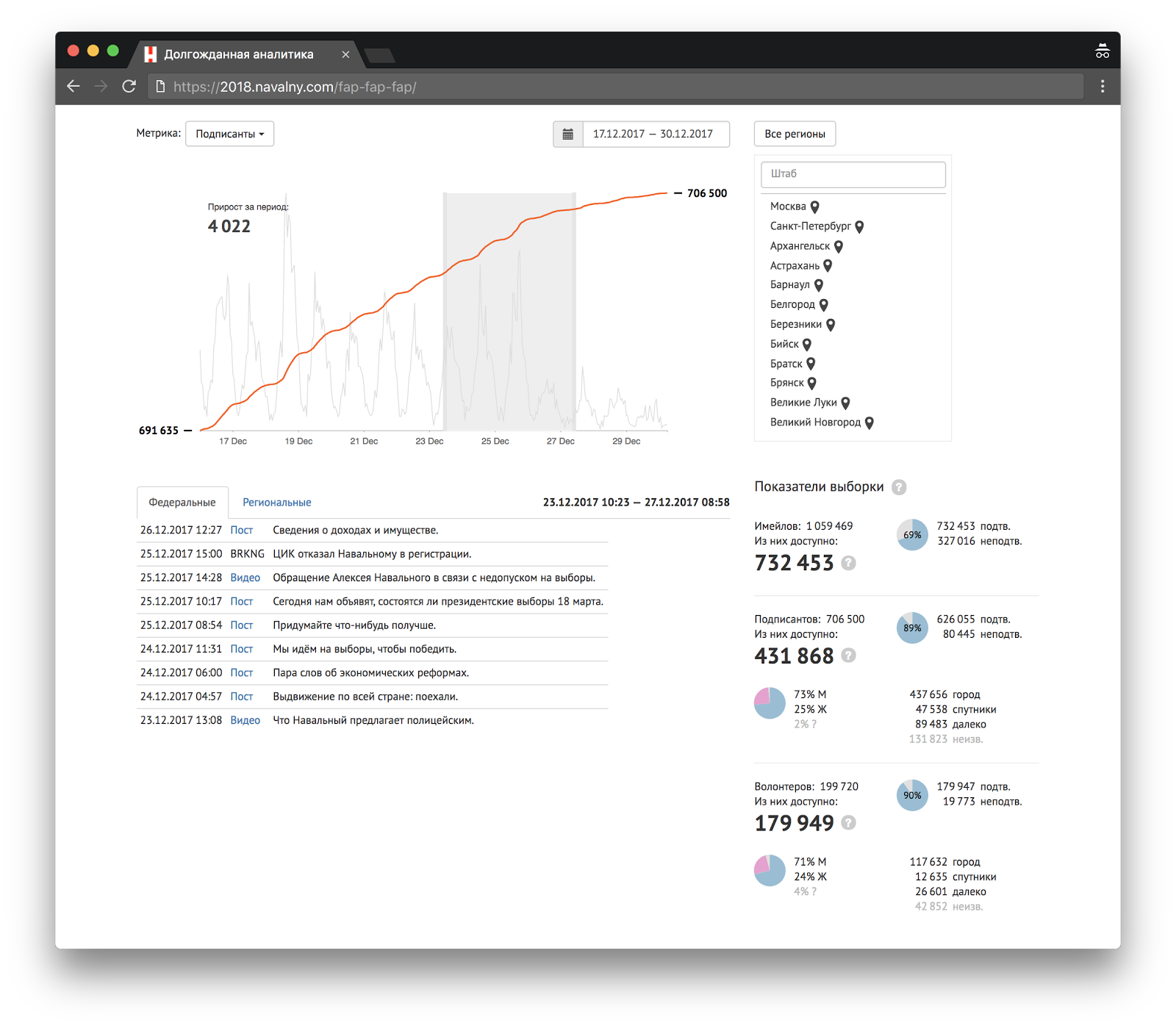

At first the analytics were quite primitive: graphs of the number of registrations of different types over time. But we wanted to see the dynamics by region and track the impact of various events on the number of registrations. That is how the long-awaited analytics screen appeared.

This screen contains summary information for the entire lifetime of the site, a graph for a selected period and a list of events for that period. You can select a peak on the graph and try to understand which event caused it. Most often it is the publication of another investigation video on Navalny's YouTube channel. The biggest increase in signatures came from videos about the machinations of regional officials.

The graph is built with d3.js, and filtering of events by time and headquarters is implemented with the Crossfilter library. This solution makes it possible to work on the client side, without interface lag, with registration data covering more than a year in 1-hour increments. At the moment that is 12 megabytes of data (1.3 MB gzipped).
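The data shape behind such a screen can be sketched in a few lines. This is not Crossfilter's API (Crossfilter is a JavaScript library); it is a Python illustration of the same idea, pre-aggregating registrations into hourly buckets per headquarters and then filtering the buckets. All names are ours.

```python
from collections import Counter
from datetime import datetime


def hour_bucket(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its hour."""
    return ts.replace(minute=0, second=0, microsecond=0)


def aggregate(registrations):
    """Count registrations per (hour, headquarters) pair."""
    return Counter((hour_bucket(ts), hq) for ts, hq in registrations)


def total(counts, start, end, hq=None):
    """Sum registrations with start <= hour < end, optionally for one headquarters."""
    return sum(
        n for (hour, name), n in counts.items()
        if start <= hour < end and (hq is None or name == hq)
    )
```

With hourly buckets, a year of data is under nine thousand rows per headquarters, which is why the whole set can be shipped to the browser and filtered there.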

A small text report with key indicators of the registration base and success for the previous day was automatically sent daily to all project participants.

City and region

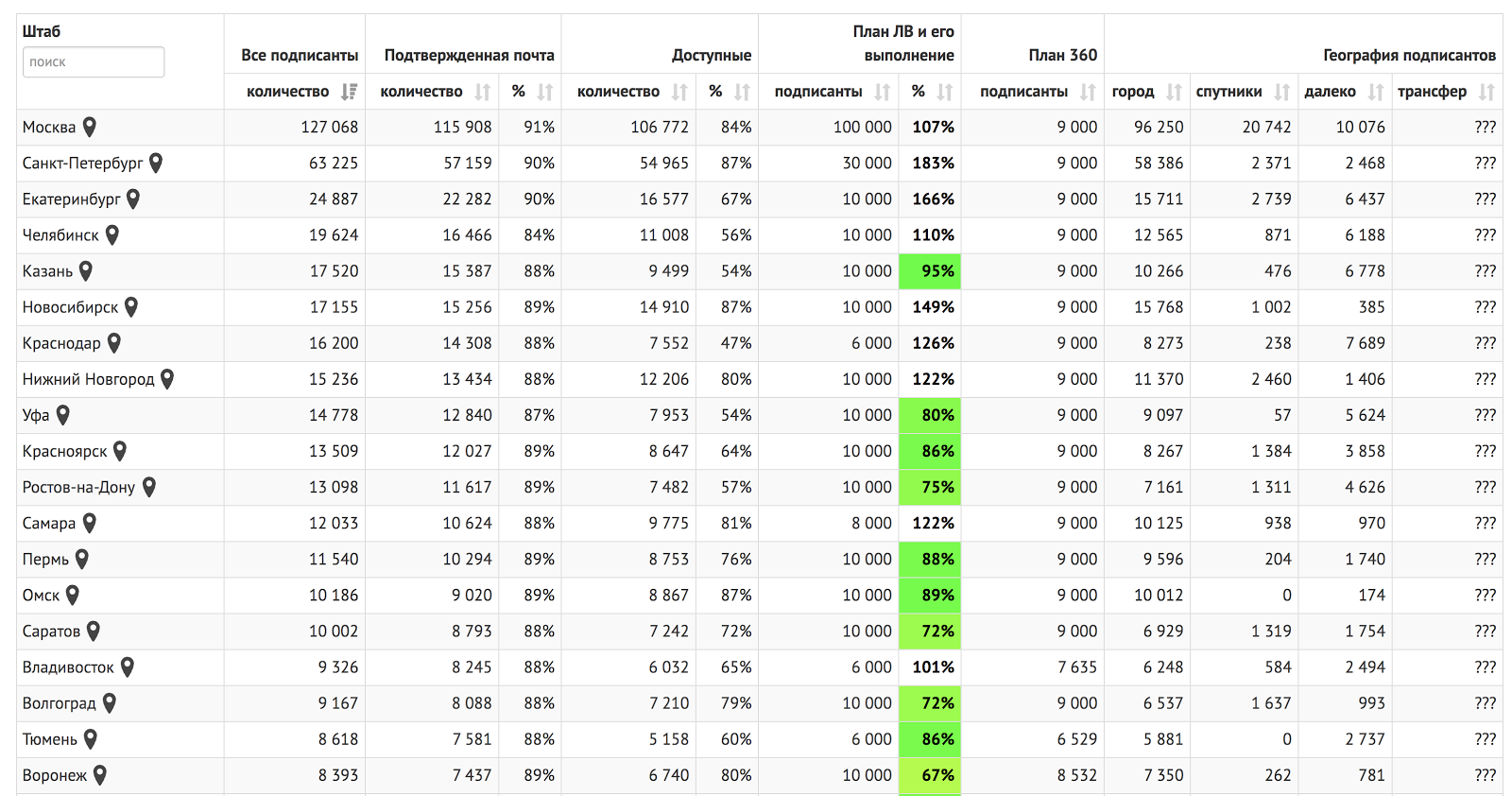

We also have a huge table listing, for each region of Russia, the main indicators of readiness for collecting signatures.

The numbers in this table refused to add up at first. The sum over cities was significantly less than the number of registrations. It turned out that, when filling out the questionnaire on the site, people surprisingly often misspell the name of their city or use non-standard names:

- Moscow: 2.5% errors and 579 spellings;

- St. Petersburg: 12.6% errors and 767 spellings;

- Komsomolsk-on-Amur: more than 20% errors and abbreviations, 75 variants.

An incorrect estimate of the number of supporters could lead to incorrect planning of the network of headquarters and of campaign events. We had to figure out how to turn the city name typed by a user into the standard name of a region. We did not want to use the KLADR or FIAS autocompletion mechanisms for such a simple form. So we took a list of the 700 largest cities in Russia, added a list of typical spellings ("St. Petersburg", "n-sk") and built a fuzzy search over them, ranked by Levenshtein distance (a measure of the difference between two character sequences).
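A minimal sketch of this approach is shown below. The Levenshtein function is the textbook dynamic-programming version; the city list, the alias table and the distance threshold are small illustrative assumptions, not the real data.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(
                prev[j] + 1,               # deletion
                cur[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        prev = cur
    return prev[-1]


# Hypothetical sample data; the real list had about 700 cities.
CITIES = ["Moscow", "Saint Petersburg", "Novosibirsk", "Komsomolsk-on-Amur"]
ALIASES = {
    "spb": "Saint Petersburg",
    "st. petersburg": "Saint Petersburg",
    "n-sk": "Novosibirsk",
}


def normalize_city(user_input: str, max_distance: int = 3):
    """Map free-form input to a standard city name, or None if nothing is close."""
    query = user_input.strip().lower()
    if query in ALIASES:
        return ALIASES[query]
    best = min(CITIES, key=lambda city: levenshtein(query, city.lower()))
    return best if levenshtein(query, best.lower()) <= max_distance else None
```

Known abbreviations are resolved through the alias table first, because an abbreviation such as "spb" is far from the full name in edit distance and would otherwise be rejected.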

We assigned each city on the list to one of three categories by distance to the nearest headquarters: the headquarters is in the city, the headquarters is close (same urban agglomeration), or the headquarters is far away. The distance to a headquarters was taken into account when estimating how many people would come and sign at the right moment. In the analytics, we counted separately all potential signatories and the “available” ones (confirmed email, lives in a city with a headquarters or nearby).

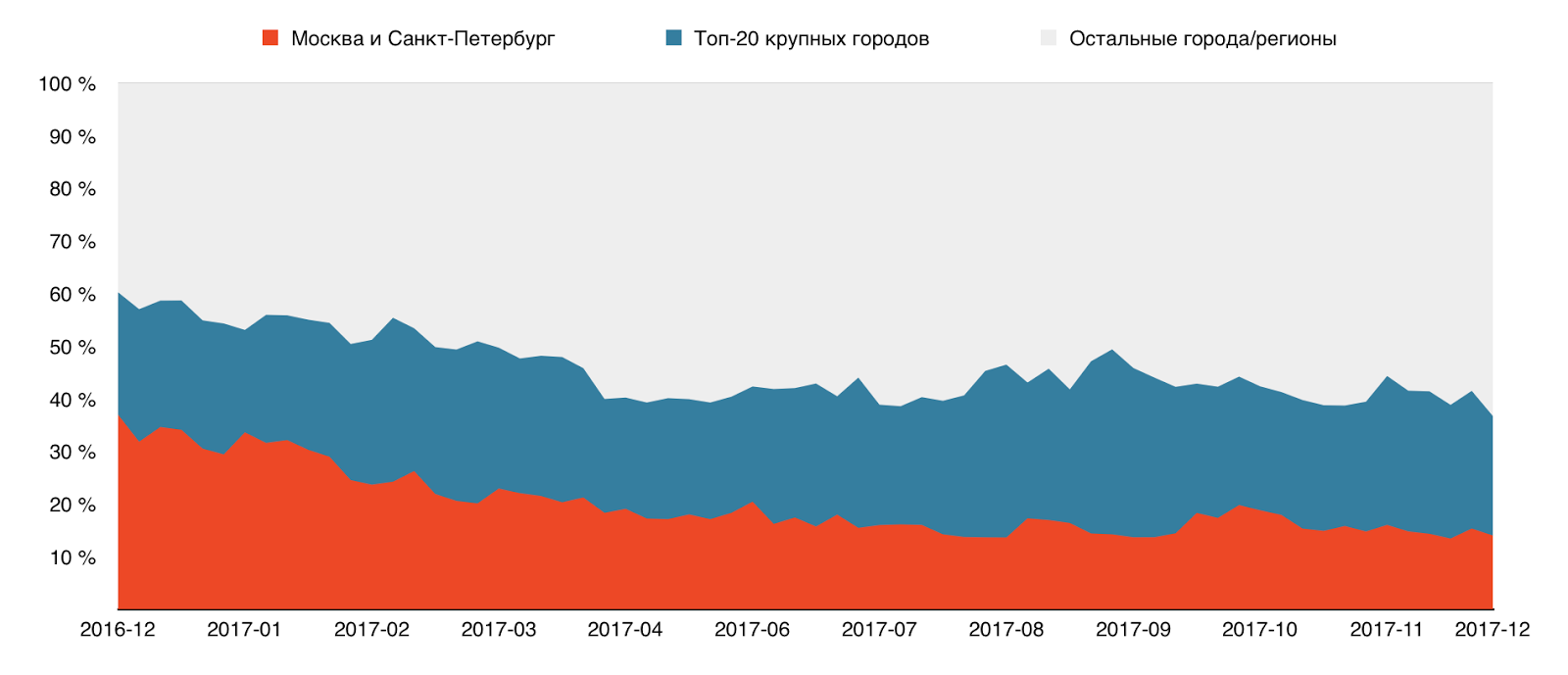

This chart shows how the campaign over time became more and more regional. The share of new registrations from Moscow and St. Petersburg has decreased from 35% to 15%.

SMS and mail

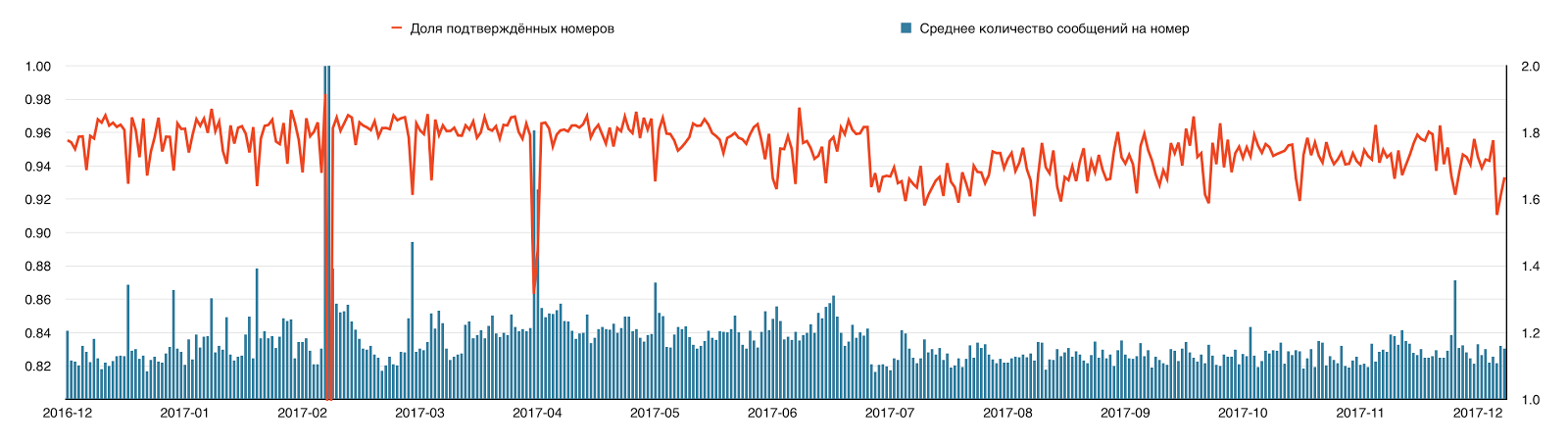

Another technical difficulty was sending SMS and email. Gateways do not deliver messages very reliably, especially to foreign numbers. But we wanted the cleanest and most reliable base of supporters, so the second part of the registration form required confirming the phone number via SMS. For reliable delivery we rotated three gateways: if a message was not delivered, the second attempt went through another gateway. In addition, individual gateways could be switched off when there were failures on their side. SMS code deliverability is one of the indicators we monitor.

The graph clearly shows that the gateways failed twice. The share of delivered SMS dropped sharply on February 21 and April 17-18 because of a message queue failure. On July 15 we changed the layout of the registration form, which is also noticeable on the graph.
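The gateway rotation can be sketched as follows. This is a simplified illustration: here a failure is reported immediately as an exception, whereas real SMS gateways usually report non-delivery later through a delivery status, and the gateway clients themselves are hypothetical callables.

```python
class GatewayError(Exception):
    """Raised by a gateway client when a message could not be sent."""


def send_with_rotation(gateways, phone, text, disabled=frozenset()):
    """Try each enabled gateway in order; return the name of the one that worked.

    `gateways` is a list of (name, send) pairs, where send(phone, text)
    raises GatewayError on failure. Returns None if every gateway failed.
    """
    for name, send in gateways:
        if name in disabled:
            continue  # switched off by an operator
        try:
            send(phone, text)
            return name
        except GatewayError:
            continue  # fall through to the next gateway
    return None
```

The `disabled` set corresponds to switching a gateway off manually during an outage, and the returned name can feed the per-gateway deliverability statistics.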

We send a large volume of email to a base of more than 700 thousand addresses. Some people are subscribed to the news, others need to be notified about an event. In addition, each address must be confirmed under double opt-in rules (the first email contains a link that the recipient must click to confirm the subscription). At the beginning of the campaign we used the ActiveCampaign service, but it is expensive and incredibly slow. When the base passed 300 thousand contacts, it became impossible to work with. So we wrote our own CRM / mailing service, which lets us build newsletters and email sequences for the audience segments we need. Mailgun is now used to deliver the email.
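One common way to implement the confirmation link for double opt-in is a signed token, sketched below. This is an assumption for illustration only: the text does not describe how the confirmation links in our service are generated, and the secret, the URL and the function names are hypothetical.

```python
import hashlib
import hmac
from urllib.parse import urlencode

SECRET_KEY = b"replace-with-a-real-secret"    # hypothetical setting
CONFIRM_URL = "https://example.org/confirm"   # hypothetical endpoint


def make_token(email: str) -> str:
    """HMAC of the normalized address; cannot be forged without the secret."""
    normalized = email.strip().lower().encode("utf-8")
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()


def confirmation_link(email: str) -> str:
    """Link placed in the first email."""
    return f"{CONFIRM_URL}?{urlencode({'email': email, 'token': make_token(email)})}"


def is_confirmed(email: str, token: str) -> bool:
    """Check the token from a clicked link in constant time."""
    return hmac.compare_digest(make_token(email), token)
```

A signed token avoids storing a pending-confirmation record for every address, which matters when the base contains hundreds of thousands of contacts.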

Delayed task queues

Sending email or SMS through a third-party API is a time-consuming operation. Such operations must be performed asynchronously so as not to slow down the user interface or put the whole application under load. Initially, all asynchronous tasks ran through Celery with Redis as the broker. Each email or SMS created a task in the Celery queue, and a free worker then processed it. But this approach proved unreliable and too resource-intensive.

Once, more than 10 thousand registrations arrived within an hour (no, we were not shown on TV, it was the “+1” campaign). Ten Celery workers could not cope, and users began to notice a significant delay in receiving SMS and email.

After this incident, we abandoned Celery in favor of the simplest possible PostgreSQL-based queue. Tasks from the queue were picked up by daemons written in Python, one for each message delivery channel. Every 10 seconds a daemon took a batch of tasks from the queue and sent the data to the delivery API in a single request. Grouping tasks drastically reduced server load, and using a homemade queue greatly simplified debugging and monitoring.
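The batch-draining idea looks roughly like this. To keep the sketch runnable anywhere, sqlite3 from the standard library stands in for PostgreSQL; the table, the column names and the batch size are hypothetical.

```python
import sqlite3

# sqlite3 is a stand-in for PostgreSQL; the schema is illustrative.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE tasks (id INTEGER PRIMARY KEY, channel TEXT, "
    "recipient TEXT, body TEXT, done INTEGER DEFAULT 0)"
)


def enqueue(channel, recipient, body):
    """Called from the web request: a cheap insert instead of an API call."""
    db.execute(
        "INSERT INTO tasks (channel, recipient, body) VALUES (?, ?, ?)",
        (channel, recipient, body),
    )
    db.commit()


def drain_once(channel, send_batch, batch_size=500):
    """Take one batch of pending tasks for a channel and send them together."""
    rows = db.execute(
        "SELECT id, recipient, body FROM tasks "
        "WHERE channel = ? AND done = 0 ORDER BY id LIMIT ?",
        (channel, batch_size),
    ).fetchall()
    if not rows:
        return 0
    send_batch([(recipient, body) for _, recipient, body in rows])
    db.executemany("UPDATE tasks SET done = 1 WHERE id = ?", [(row[0],) for row in rows])
    db.commit()
    return len(rows)
```

A daemon for a channel would then call `drain_once` in a loop with a 10-second sleep. With one daemon per channel no row locking is needed; if several workers shared a channel in PostgreSQL, `SELECT ... FOR UPDATE SKIP LOCKED` would be one way to keep them from taking the same tasks.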

Celery turned out to be too complex a tool for our task. It needs careful tuning and monitoring through external utilities such as Flower, which itself consumes a lot of resources. On other projects we try to use a simpler solution: RQ + Redis.

Comparison of the complexity of RQ and Celery from an article about working with asynchronous tasks.

Development process

What does building the “Navalny 20!8” site look like from the developers' point of view? We do not adhere to any single methodology but borrow approaches from different ones. For example, managers set tasks in Trello with a structure similar to a kanban board, and the developers apply some extreme programming practices.

Approximately half of the team is located in the Moscow office, and the rest work remotely. Moscow employees can participate in campaign campaigns in order

Most of the project participants work on it full-time, but individual tasks were done by developers temporarily borrowed from other projects, or even by volunteers. For example, a volunteer named Ilya built almost the entire headquarters map for the main page.

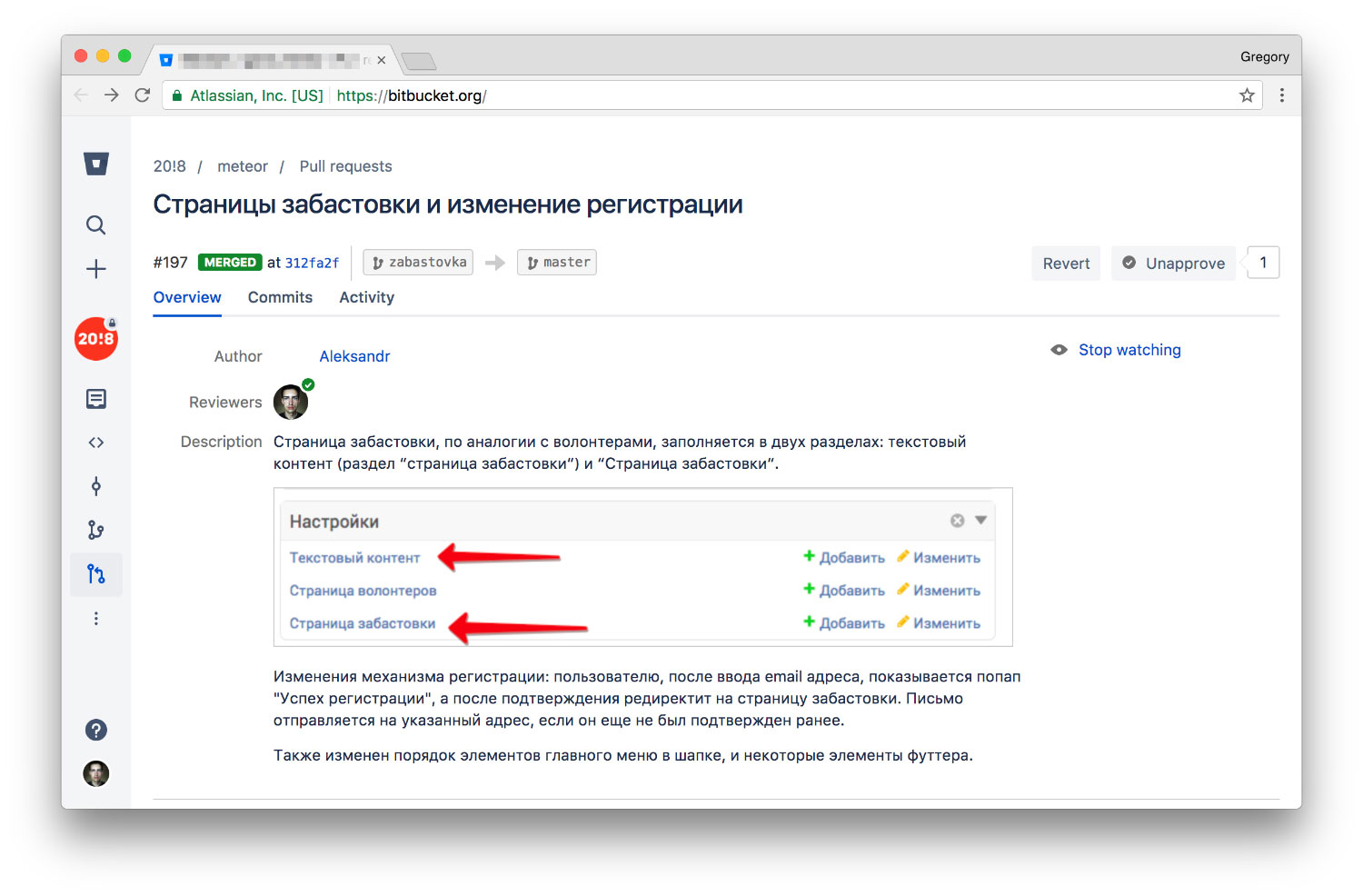

The source code is stored in a git repository on the Bitbucket platform. For each serious new task a separate branch is created, alongside the staging and develop branches.

Updates are scripted in a Makefile: the «make update» command runs the update steps, ending with the uwsgi processes.

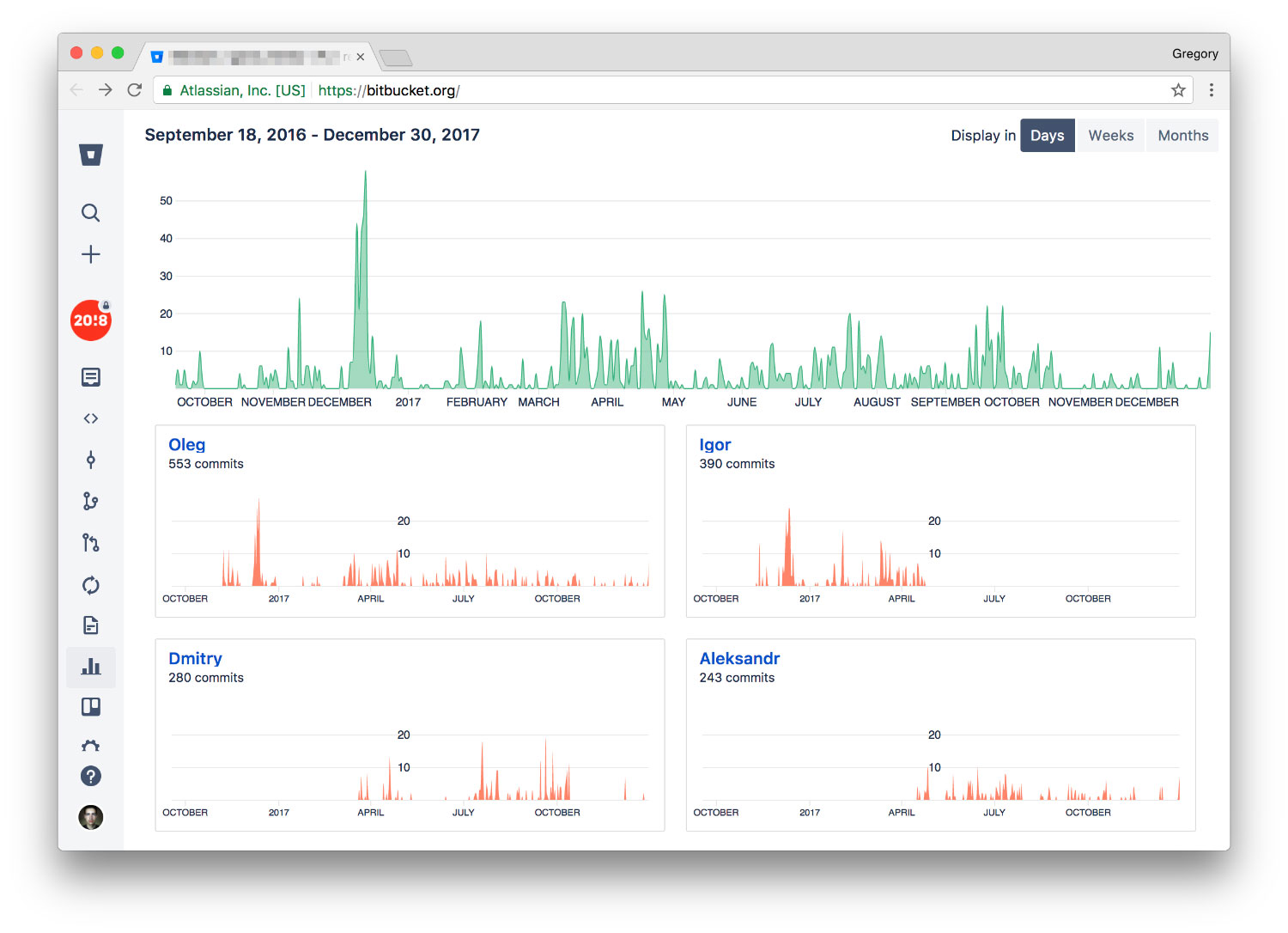


About design

(- ), . .

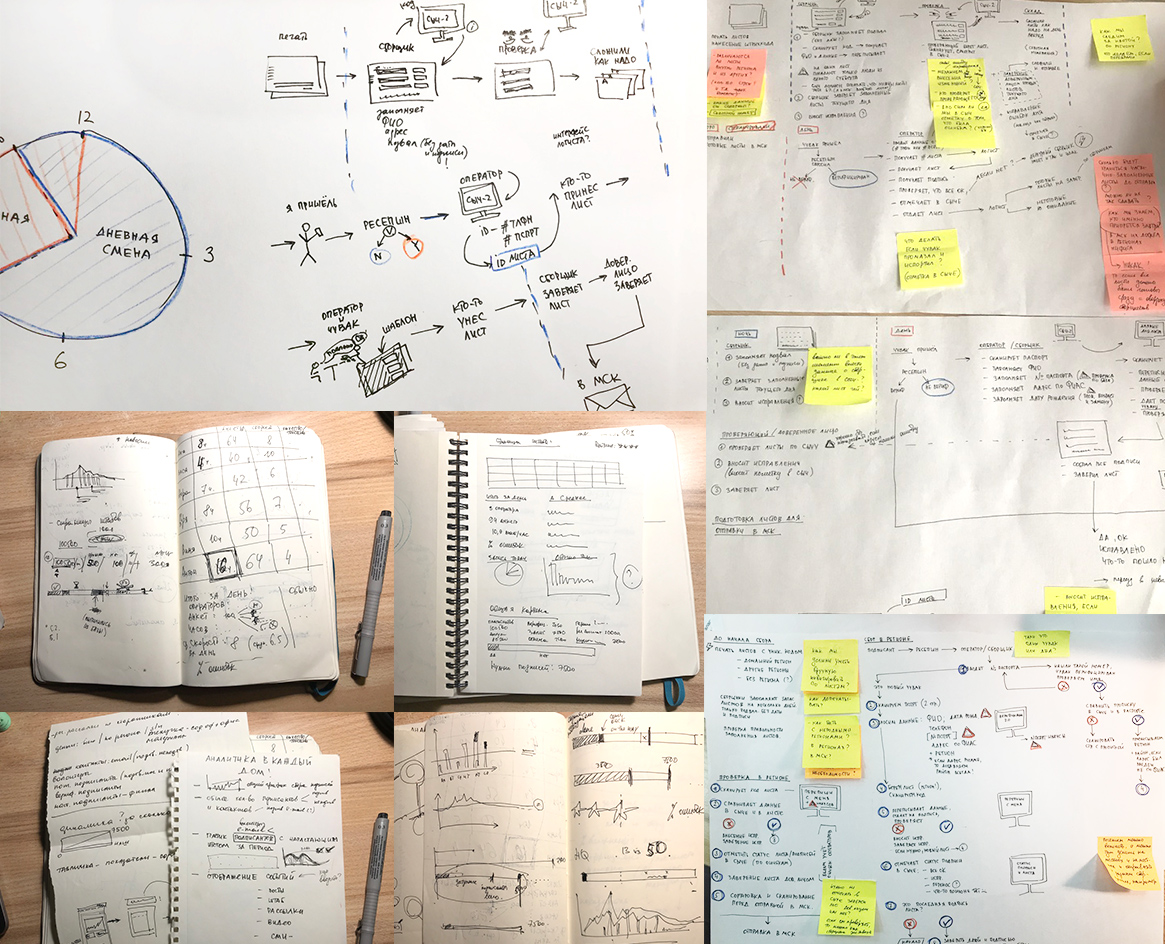

IT- :

1) «» ( , , );

2) , ( ) ( , , ).

*, , , , .

IT-c — , , , -, ( Sketch).

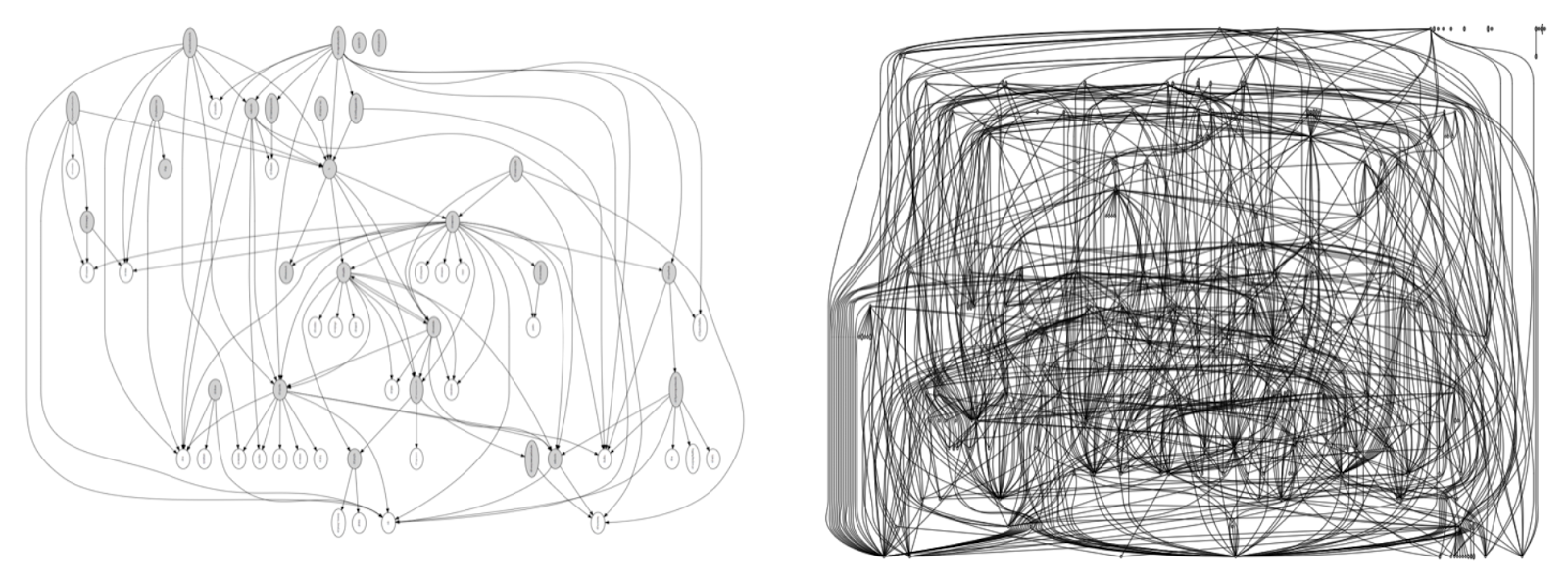

, , . draw.io, . . «» .

marvelapp.com , , . .

. , , ( , ) , .

, , , , . ( , ) .

. , .

, 6 — «» .

IT- — , MVP . , (-, ), . -, .

. - .

Testing

Automated tests are written with RobotFramework and run on a Jenkins CI server.

SMS confirmation is tested with a GSM device with a SIM card and Asterisk.

The “Navalny 20!8” site is served through edge servers, and server configuration is managed with Chef.

The database uses a master-slave setup.

Varnish caches responses by URL.

The edge servers handle SSL. Static files such as JavaScript, JSON and fingerprinted assets (for example, style.28fa1c7b1761.css) are cached on the edge servers.

Conclusion


Source: https://habr.com/ru/post/347312/
