Making WebAssembly even faster: the new streaming and tiering compiler in Firefox

About the author: Lin Clark is a developer in the Mozilla Developer Relations group. She works with JavaScript, WebAssembly, Rust, and Servo, and also draws code cartoons.

People call WebAssembly a game changer because it makes code on the web run faster. Some of these speedups are already implemented, while others are still to come.

One of these techniques is streaming compilation, where the browser compiles the code while it is still being downloaded. Until now, this was only a potential speedup. With the release of Firefox 58, it becomes a reality.


Firefox 58 also includes a new two-tier compiler. The new baseline compiler compiles code 10–15 times faster than the optimizing compiler.

Together, these two changes mean that we compile code faster than it arrives from the network.

On a desktop, we compile 30-60 MB of WebAssembly code per second. This is faster than the network delivers the packets.

If you use Firefox Nightly or Beta, you can try this out on your own device. Even on an average mobile device, we compile at 8 MB/s, which is faster than the download speed on most mobile networks.

In other words, the code starts executing as soon as the download completes.
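As a rough illustration of why it matters that compile throughput exceeds network throughput, the sketch below models load time with and without overlapping download and compilation. The module size and connection speed are hypothetical. Only the 30 MB/s compile rate comes from the figures above, and the model ignores per-packet latency and decoding overhead.

```typescript
/** Seconds until compiled code is ready when compilation waits for the full download. */
function sequentialSeconds(sizeMB: number, networkMBps: number, compileMBps: number): number {
  return sizeMB / networkMBps + sizeMB / compileMBps;
}

/** Seconds until compiled code is ready when compilation overlaps with the download. */
function streamingSeconds(sizeMB: number, networkMBps: number, compileMBps: number): number {
  // The slower of the two stages dominates the total.
  return Math.max(sizeMB / networkMBps, sizeMB / compileMBps);
}

// Hypothetical 12 MB module on a hypothetical 6 MB/s connection,
// compiled at 30 MB/s (the low end of the desktop figure).
const moduleSizeMB = 12;
const networkRateMBps = 6;
const compileRateMBps = 30;

// 2 s of download followed by 0.4 s of compilation
console.log(sequentialSeconds(moduleSizeMB, networkRateMBps, compileRateMBps));
// compilation hides behind the 2 s download
console.log(streamingSeconds(moduleSizeMB, networkRateMBps, compileRateMBps));
```

In this simplified model, whenever the compiler is faster than the network, the streaming total equals the download time alone.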

Why is it important?

Web performance advocates are critical of sites that ship large amounts of JavaScript, because it slows down page loads.

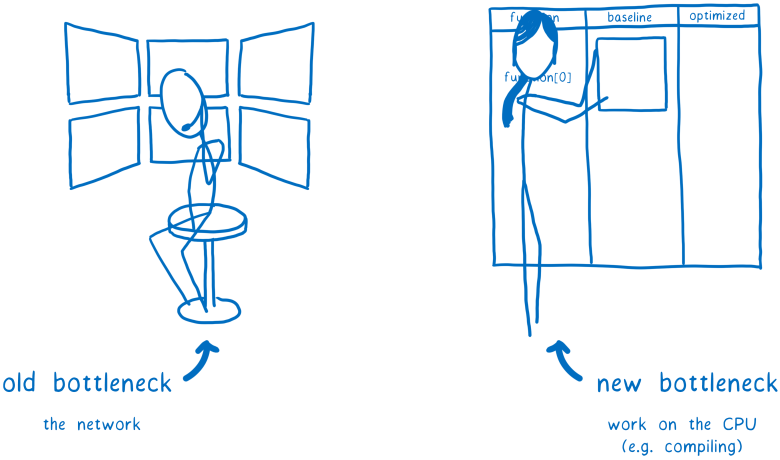

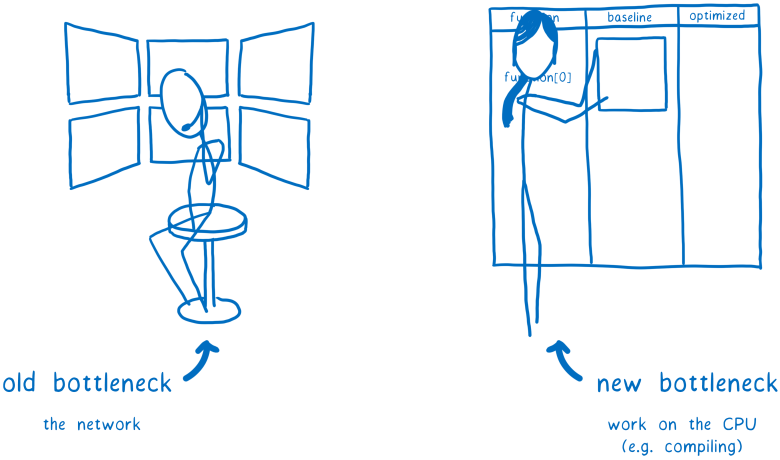

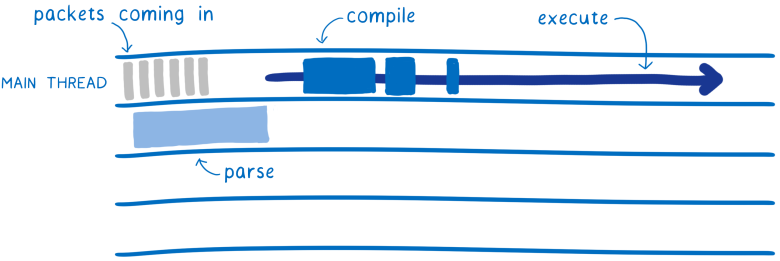

One of the main reasons for this slowdown is parse and compile time. As Steve Souders observed, the bottleneck for web performance used to be the network. Now it is the CPU, and specifically the main thread.

So we want to move as much work as possible off the main thread. We also want the page to become interactive as early as possible, so we want to keep the CPU busy the whole time. Better still is to reduce the amount of CPU work altogether.

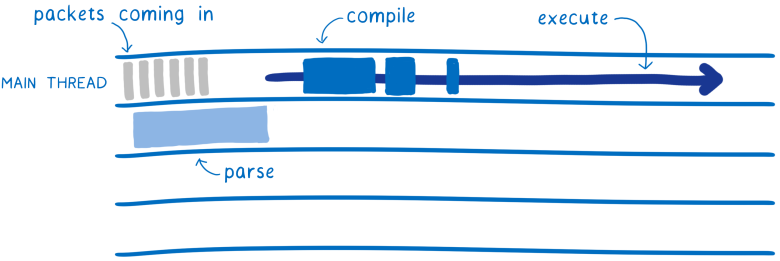

Some of these goals are achievable with JavaScript. You can parse files off the main thread as they arrive. But they still have to be parsed, which is a lot of work, and you have to wait until parsing finishes before compilation can start. And for compilation you are back on the main thread, because JS is usually compiled lazily, on the fly.

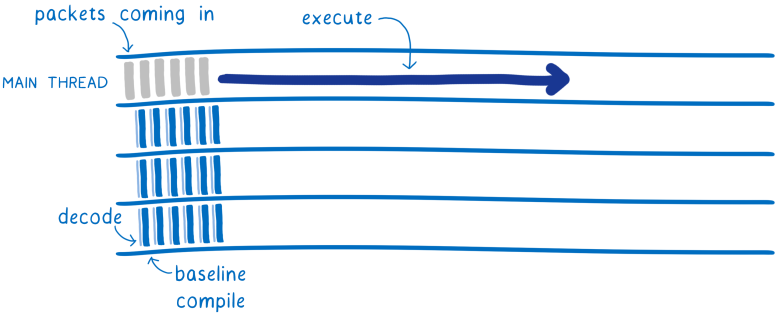

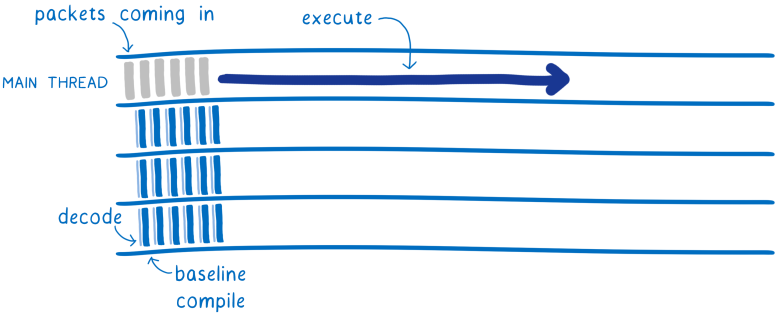

With WebAssembly, there is less work to begin with. Decoding WebAssembly is much simpler and faster than parsing JavaScript. And both decoding and compilation can be split across multiple threads.

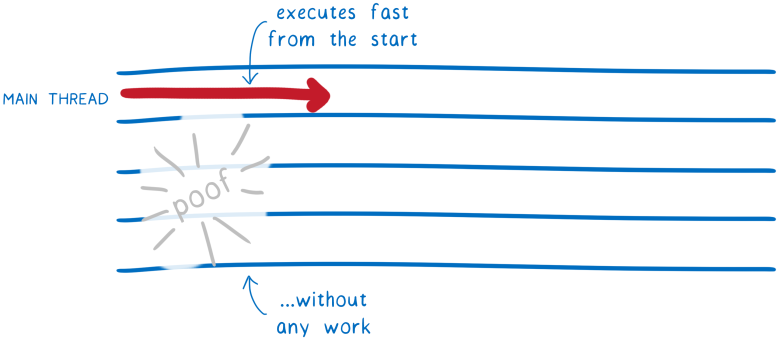

This means that several threads perform the baseline compilation, which makes it significantly faster. Once it finishes, the baseline-compiled code can start running on the main thread. There is no need to pause for compilation, as there is with JS.

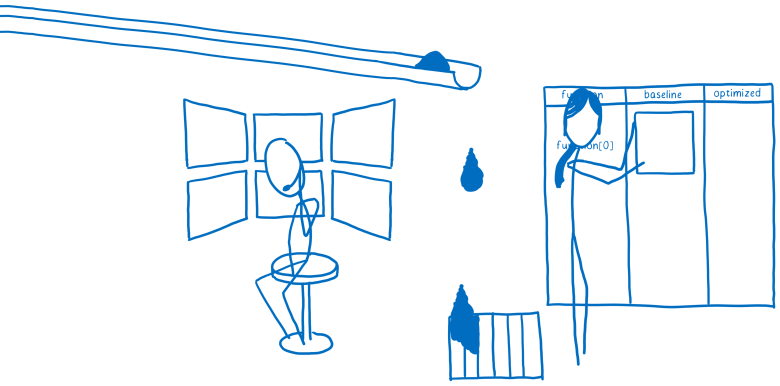

While the baseline-compiled code runs on the main thread, other threads work on a more optimized version. When the optimized version is ready, it replaces the baseline version, and the code runs even faster.

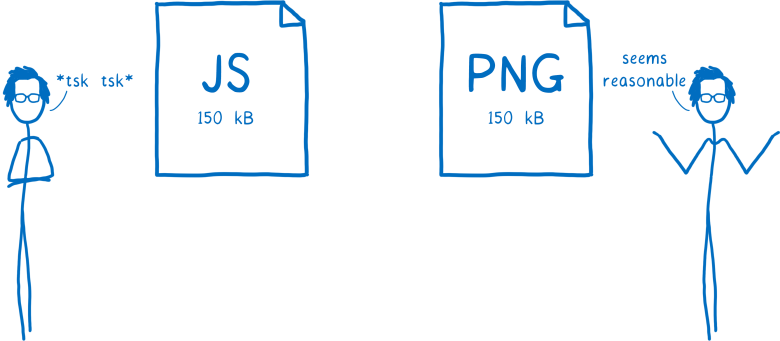

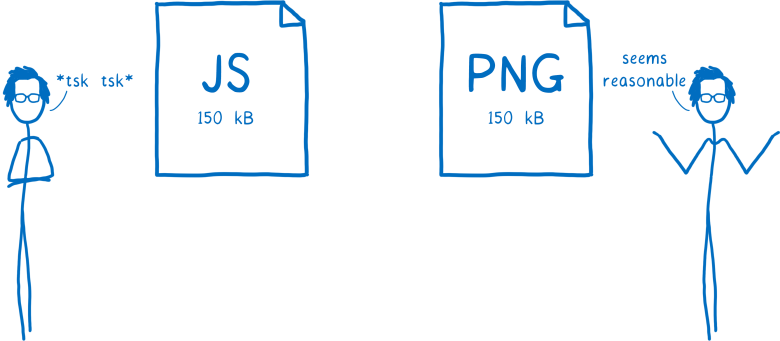

This makes the cost of loading WebAssembly more like decoding an image than loading JavaScript. Think about it: performance advocates object to scripts larger than 150 KB, but images of the same size draw no such complaints.

This is because loading images is much faster, as Addy Osmani explained in the article “The Cost of JavaScript.” And decoding an image does not block the main thread, as Alex Russell explained in the article “Can You Afford It?: Real-world Web Performance Budgets.”

This does not mean that WebAssembly files will be as big as images. Early WebAssembly tools did produce large files, but that is because they had to include a significant portion of the runtime. There is now active work on reducing file size. For example, Emscripten has a “shrinking initiative.” In Rust, you can already get quite small files by using the wasm32-unknown-unknown target. And there are tools like wasm-gc and wasm-snip for optimizing further.

What it does mean is that WebAssembly files will load much faster than the equivalent JavaScript.

This is a big deal. As Yehuda Katz noted, this is a real game changer.

So let's see how the new compiler works.

Streaming compilation: starting compilation earlier

The sooner you start compiling the code, the sooner you finish. That is what streaming compilation does: it starts compiling the .wasm file as early as possible.

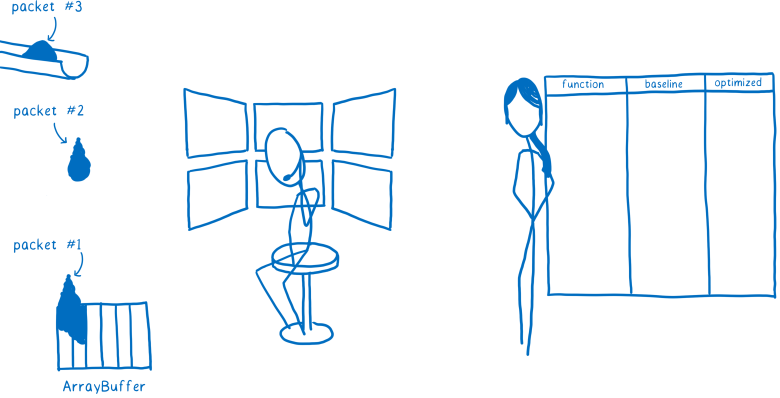

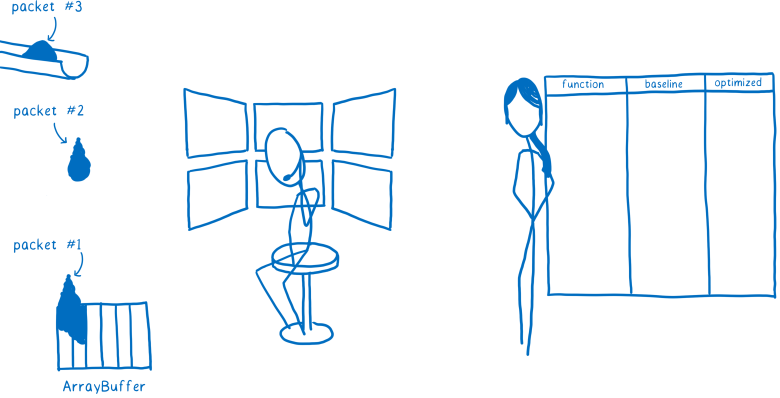

When you download a file, it does not arrive in one piece. Instead, it arrives as a series of packets.

Previously, every packet of the .wasm file had to be downloaded first, and then the browser’s network layer put the whole file into an ArrayBuffer.

That ArrayBuffer was then handed over to the Web VM (also known as the JS engine). Only at that point did the WebAssembly compiler start compiling.

But there is no good reason to keep the compiler waiting. It is technically possible to compile WebAssembly line by line. This means compilation can start as soon as the first chunk arrives.

This is exactly what our new compiler does, taking advantage of WebAssembly's streaming API.

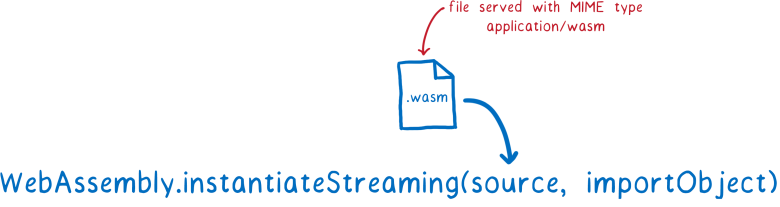
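A minimal sketch of the two loading paths, assuming a browser (or another runtime) that provides `fetch`, `Response`, and the WebAssembly JavaScript API. The helper names and the URL in the usage comment are hypothetical.

```typescript
// Older path: wait for the whole download, then hand one ArrayBuffer to the engine.
async function instantiateFromBuffer(
  response: Response,
  imports: WebAssembly.Imports = {},
): Promise<WebAssembly.Instance> {
  const bytes = await response.arrayBuffer(); // resolves only after the last packet
  const { instance } = await WebAssembly.instantiate(bytes, imports);
  return instance;
}

// Streaming path: the engine receives the Response itself,
// so it can start compiling before the download has finished.
async function instantiateFromStream(
  response: Response | Promise<Response>,
  imports: WebAssembly.Imports = {},
): Promise<WebAssembly.Instance> {
  const { instance } = await WebAssembly.instantiateStreaming(response, imports);
  return instance;
}

// Usage in a page (placeholder URL):
// const instance = await instantiateFromStream(fetch("/hypothetical/module.wasm"));
```

Note that `WebAssembly.instantiateStreaming` expects the response to be served with the `application/wasm` MIME type.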

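If you pass a Response object to WebAssembly.instantiateStreaming, chunks of the file go to the WebAssembly engine as soon as they arrive. The compiler can then start working on the first chunk while the next one is still being downloaded.

Besides downloading and compiling in parallel, there is another advantage.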

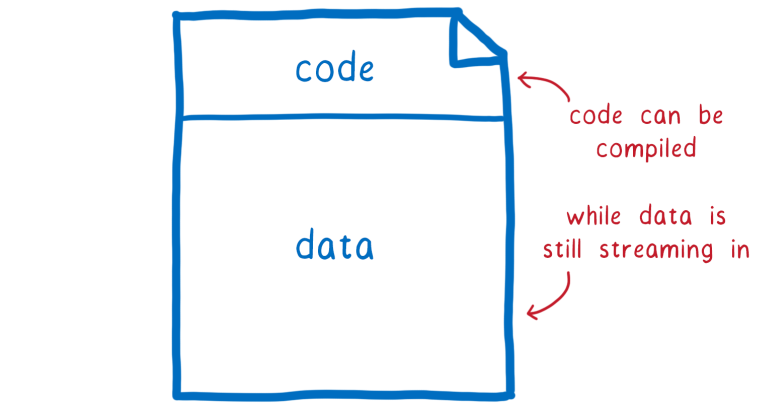

The code section of a .wasm module comes before any data (which goes into the module's memory object). So with streaming compilation, the code is compiled while the module's data is still downloading. If your module needs a lot of data, the memory object can be several megabytes in size, and streaming compilation gives a significant performance gain.
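The ordering can be checked by walking the section headers of a binary. The sketch below assumes the standard WebAssembly binary layout (an 8-byte header, then sections that each start with a one-byte id and a LEB128-encoded size, with id 10 for code and id 11 for data). The function names and the tiny hand-assembled module are illustrative only.

```typescript
/** Reads an unsigned LEB128 integer; returns [value, offset after it]. */
function readU32Leb(bytes: Uint8Array, start: number): [number, number] {
  let result = 0;
  let shift = 0;
  let offset = start;
  for (;;) {
    if (offset >= bytes.length) throw new Error("unexpected end of input");
    const byte = bytes[offset++];
    result |= (byte & 0x7f) << shift;
    if ((byte & 0x80) === 0) break;
    shift += 7;
  }
  return [result >>> 0, offset];
}

/** Lists the section ids of a .wasm binary in file order. */
function listSectionIds(bytes: Uint8Array): number[] {
  const ids: number[] = [];
  let offset = 8; // skip the 4-byte magic number and the 4-byte version
  while (offset < bytes.length) {
    const id = bytes[offset++];
    const [size, bodyStart] = readU32Leb(bytes, offset);
    ids.push(id);
    offset = bodyStart + size; // skip the section body
  }
  return ids;
}

// Illustrative module: one empty function, one memory, one byte of data.
const sampleModule = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,       // "\0asm", version 1
  0x01, 0x04, 0x01, 0x60, 0x00, 0x00,                   // type section (id 1)
  0x03, 0x02, 0x01, 0x00,                               // function section (id 3)
  0x05, 0x03, 0x01, 0x00, 0x01,                         // memory section (id 5)
  0x0a, 0x04, 0x01, 0x02, 0x00, 0x0b,                   // code section (id 10)
  0x0b, 0x07, 0x01, 0x00, 0x41, 0x00, 0x0b, 0x01, 0x2a, // data section (id 11)
]);

console.log(listSectionIds(sampleModule)); // [1, 3, 5, 10, 11]
```

Because the code section (10) precedes the data section (11), a streaming compiler has all the function bodies before the first data byte arrives.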

With streaming compilation, compilation starts earlier. But we can also make the compilation itself faster.

Tier 1 baseline compiler: compiling code faster

If you want code to run fast, you have to optimize it. But performing those optimizations takes time during compilation, which makes compilation itself slower. So there is a tradeoff.

But with two compilers, you can have both fast compilation and optimized code. The first compiles quickly without many optimizations. The second compiles more slowly but produces more optimized code.

This is called a tiered compiler. When code first arrives, it is compiled by the Tier 1 (or baseline) compiler. Once the baseline-compiled code starts running, the Tier 2 compiler goes over the code again and prepares a more optimized version in the background.

When the optimized version is ready, it replaces the baseline version, which makes the code run faster.
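The swap can be pictured with a toy model: callers go through a slot that initially points at baseline code and is later repointed at optimized code. This is only a conceptual sketch with hypothetical names (`TieredFunction`, `tierUp`), and plain functions stand in for machine code. It says nothing about how Firefox actually replaces compiled code.

```typescript
type CompiledCode = (x: number) => number;

/** A function slot that starts with baseline code and can be upgraded later. */
class TieredFunction {
  private current: CompiledCode;
  tier: "baseline" | "optimized" = "baseline";

  constructor(baseline: CompiledCode) {
    this.current = baseline;
  }

  /** Callers always go through the slot, so they pick up the newest tier. */
  call(x: number): number {
    return this.current(x);
  }

  /** Invoked once the background optimizing compile has finished. */
  tierUp(optimized: CompiledCode): void {
    this.current = optimized;
    this.tier = "optimized";
  }
}

// Stand-ins for generated code: same result, different amount of work.
const baselineSumTo: CompiledCode = (n) => {
  let total = 0;
  for (let i = 1; i <= n; i++) total += i;
  return total;
};
const optimizedSumTo: CompiledCode = (n) => (n * (n + 1)) / 2;

const sumTo = new TieredFunction(baselineSumTo);
const resultBefore = sumTo.call(100); // served by the baseline version
sumTo.tierUp(optimizedSumTo);         // swap behind the same entry point
const resultAfter = sumTo.call(100);  // served by the optimized version
console.log(resultBefore, resultAfter, sumTo.tier);
```

The point of the indirection is that execution never has to stop: the results are identical before and after the swap, and only the speed changes.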

JavaScript engines have long used tiered compilers. However, JS engines use the Tier 2 (optimizing) compiler only for hot code, that is, code that is called often.

In contrast, the WebAssembly Tier 2 compiler eagerly does a full recompilation, optimizing all of the code in the module. In the future, we may add more options to control how eager or lazy the optimization should be.

The baseline compiler saves a lot of time at startup. It compiles code 10–15 times faster than the optimizing compiler. And the code it produces runs, in our case, only two times slower.
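To make the tradeoff concrete, here is a small worked calculation that compares using each compiler on its own. The 10x compile speedup and the 2x slowdown come from the figures above. The 3-second optimizing compile and the 0.5-second workload are made-up numbers, and all names are hypothetical.

```typescript
interface CompilerTier {
  compileSeconds: number; // time to compile the whole module
  slowdown: number;       // run time relative to optimized code (1 = optimized)
}

/** Compile time plus the time to run a workload that takes `optimizedRunSeconds` when optimized. */
function secondsUntilDone(tier: CompilerTier, optimizedRunSeconds: number): number {
  return tier.compileSeconds + optimizedRunSeconds * tier.slowdown;
}

/** Amount of optimized-speed work at which both tiers, used alone, finish at the same time. */
function breakEvenRunSeconds(baseline: CompilerTier, optimizing: CompilerTier): number {
  return (
    (optimizing.compileSeconds - baseline.compileSeconds) /
    (baseline.slowdown - optimizing.slowdown)
  );
}

const optimizingTier: CompilerTier = { compileSeconds: 3.0, slowdown: 1 };
const baselineTier: CompilerTier = { compileSeconds: 3.0 / 10, slowdown: 2 };

console.log(secondsUntilDone(baselineTier, 0.5));   // 0.3 s compile + 1.0 s run
console.log(secondsUntilDone(optimizingTier, 0.5)); // 3.0 s compile + 0.5 s run
console.log(breakEvenRunSeconds(baselineTier, optimizingTier)); // roughly 2.7 s of work
```

Under these assumptions the baseline compiler wins for any startup work shorter than the break-even point, which is why running it first and optimizing in the background captures the benefits of both.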

This means your code runs reasonably fast even in the first moments, when only the unoptimized baseline version is running.

Parallelization: making it all even faster

In the article on Firefox Quantum, I explained coarse-grained and fine-grained parallelization. We use both to compile WebAssembly.

I mentioned earlier that the optimizing compiler runs in the background, which frees the main thread to execute code. The baseline-compiled version can run while the optimizing compiler does its recompilation.

But on most computers, that still leaves most of the cores idle. To make the best use of all the cores, both compilers use fine-grained parallelization to split up the work.

The unit of parallelization is the function. Each function can be compiled independently, on a separate core. This is so fine-grained, in fact, that we actually have to batch the functions into larger groups. These groups are sent to different cores.
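The batching idea can be sketched as follows. The size threshold and the round-robin assignment are assumptions chosen for illustration, since the text does not say how Firefox sizes or schedules its groups. All names are hypothetical.

```typescript
/** Groups consecutive functions (by index) into batches of at least `minBatchBytes`. */
function batchFunctions(bodySizes: number[], minBatchBytes: number): number[][] {
  const batches: number[][] = [];
  let current: number[] = [];
  let currentBytes = 0;
  bodySizes.forEach((size, index) => {
    current.push(index);
    currentBytes += size;
    if (currentBytes >= minBatchBytes) {
      batches.push(current);
      current = [];
      currentBytes = 0;
    }
  });
  if (current.length > 0) batches.push(current); // leftover functions form a final batch
  return batches;
}

/** Hands batches to `coreCount` cores in round-robin order. */
function assignToCores(batches: number[][], coreCount: number): number[][][] {
  const perCore: number[][][] = Array.from({ length: coreCount }, (): number[][] => []);
  batches.forEach((batch, i) => perCore[i % coreCount].push(batch));
  return perCore;
}

// Seven function bodies with hypothetical sizes in bytes.
const functionSizes = [40, 10, 200, 30, 30, 30, 5];
const functionBatches = batchFunctions(functionSizes, 64);
console.log(JSON.stringify(functionBatches));                   // [[0,1,2],[3,4,5],[6]]
console.log(JSON.stringify(assignToCores(functionBatches, 2))); // [[[0,1,2],[6]],[[3,4,5]]]
```

Batching keeps the per-task scheduling overhead from outweighing the compile work for very small functions.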

... and then skipping all that work with full caching (in the future)

Currently, decoding and compilation are repeated every time you reload the page. But if you have the same .wasm file, it should compile to the same machine code.

So most of the time this work could be skipped. That is what we plan to do in the future. We will decode and compile on the first page load and then store the resulting machine code in the HTTP cache. Then, when this URL is requested again, the precompiled machine code will be served right away.

This makes the load time disappear for subsequent page loads.
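Conceptually, this is a compile-once lookup keyed by URL. The sketch below is a toy in-memory model with hypothetical names. The real feature is planned to live in the browser's HTTP cache, which page scripts cannot access this way.

```typescript
interface MachineCode {
  sourceUrl: string;
  byteLength: number;
}

/** Remembers compiled output per URL so that later loads skip decoding and compilation. */
class CompiledCodeCache {
  private entries = new Map<string, MachineCode>();
  compileCount = 0;

  getOrCompile(url: string, compile: (url: string) => MachineCode): MachineCode {
    const cached = this.entries.get(url);
    if (cached !== undefined) return cached; // cache hit: no compile work at all
    this.compileCount++;
    const code = compile(url);
    this.entries.set(url, code);
    return code;
  }
}

// Stand-in for decoding plus compilation of a downloaded module.
const fakeCompile = (url: string): MachineCode => ({ sourceUrl: url, byteLength: 1024 });

const codeCache = new CompiledCodeCache();
const firstLoad = codeCache.getOrCompile("/hypothetical/module.wasm", fakeCompile);
const secondLoad = codeCache.getOrCompile("/hypothetical/module.wasm", fakeCompile);
console.log(codeCache.compileCount, firstLoad === secondLoad);
```

The second request returns the stored result without invoking the compile step again.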

The groundwork for this feature is already in place. In Firefox 58, we cache JavaScript bytecode this way. We just need to extend that support to .wasm files.

People call WebAssembly a factor that changes the rules of the game, because this technology speeds up code execution on the web. Some of the accelerations are already implemented , while others will appear later.

One of the techniques is stream compilation, when the browser compiles code while it is being loaded. Until now, this technology was considered only as a potential acceleration option. But with the release of Firefox 58, it will become a reality.

')

Firefox 58 also includes a two-level compiler. The new base compiler compiles code 10–15 times faster than the optimizing compiler.

Together, these two changes mean that we compile the code faster than it comes from the network.

On the desktop, we compile 30-60 MB of WebAssembly code per second. This is faster than the network delivering packets .

If you have Firefox Nightly or Beta, you can try out the technology on your own device. Even on an average mobile device, compilation is performed at 8 MB / s - this is faster than the download speed in most mobile networks.

In other words, the execution of the code begins immediately after the download is completed.

Why is it important?

Web productivity lawyers are critical of a large number of javascript sites, because it slows down the loading of web pages.

One of the main reasons for this slowdown is the parse and compile time. As Steve Sauders observed , the bottleneck of web productivity used to be a network, and now a CPU, namely the main thread of execution.

So we want to take out as much work as possible from the main thread. We also want to ensure the interactivity of the page as early as possible, so we use the CPU all the time. And it is better to reduce the load on the CPU.

Some of these goals are achievable with JavaScript. You can parse files outside the main stream after receiving them. But they still have to disassemble, and this is a lot of work. And you need to wait until the end of the parsing before starting the compilation. And for compilation, you return to the main thread, because it usually happens lazy JS compilation on the fly.

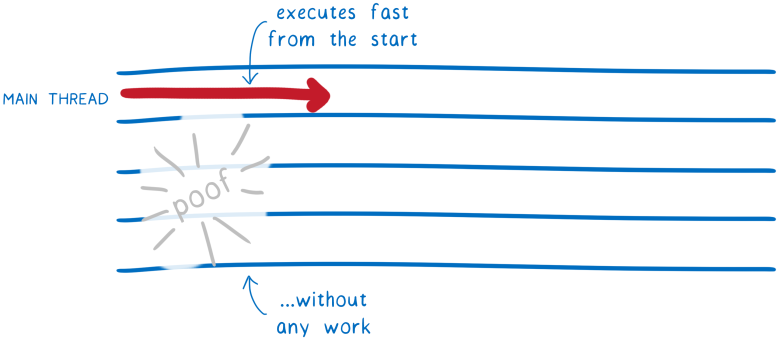

When using WebAssembly from the very beginning less work. Decoding WebAssembly is much simpler and faster than parsing JavaScript. And these decoding and compilation can be broken down into several streams.

This means that several threads will perform a basic compilation, significantly speeding it up. At the end of the process, the precompiled code starts to run in the main thread. No need to stop waiting for compilation, as in the case of JS.

Although the precompiled code runs in the main thread, other threads are currently working on an optimized version. When an optimized version is ready, it replaces the preliminary version - and the code runs even faster.

This makes loading WebAssembly more like decoding an image than loading a JavaScript. Think about it ... lawyers of productivity are hostile to the scripts that meet more than 150 KB, but images of this size are not satisfactory.

This is because loading images is much faster, as Eddie Osmani explained in the article “The Price of JavaScript .” And decoding an image does not block the main stream, as Alex Russell explained in the article “ Can you afford it? A budget for web productivity in the real world . ”

This does not mean that WebAssembly files will be as big as the images. Although the first versions of WebAssembly tools do create large files, but this is because a significant portion of the runtime has to be included there. Now there is an active work to reduce their size. For example, in the Emscripten there is a “ squeeze initiative ”. In Rust, you can still get quite small files by applying the target wasm32-unknown-unknown. And there are tools like wasm-gc and wasm-snip for even more optimization.

This means that WebAssembly files will load much faster than equivalent JavaScript.

It is very important. As Yehuda Katz noted , this is a factor that really changes the rules of the game.

So let's see how the new compiler works.

Stream Compilation: Early Start of Compilation

The sooner you start compiling the code, the sooner you finish it. This is what streaming compilation does ... starting compiling a .wasm file as early as possible.

When you download a file, it does not come in one piece. Instead, it comes in a series of packages.

Previously, you had to download all the packages of the .wasm file, then the browser’s network layer placed it in ArrayBuffer.

Then this ArrayBuffer was transferred to the Web VM (aka JS engine). At this point, the WebAssembly compiler would start compiling.

But there is no good reason to leave the compiler on hold. It is technically possible to compile WebAssembly line by line. This means that the process can be started after the arrival of the first fragment.

This is exactly what our compiler does, which takes advantage of the streaming WebAssembly programming interfaces.

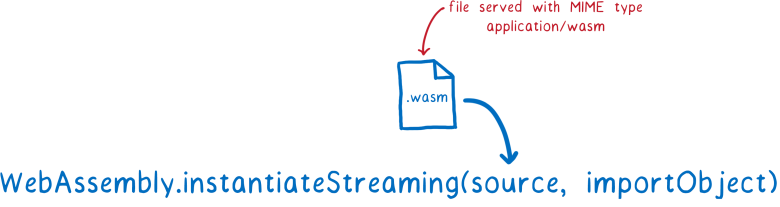

If you pass the Response object to

WebAssembly.instantiateStreaming , new code fragments will be sent to the WebAssembly engine immediately after downloading. Then the compiler can start working on the first fragment while the next one is still being downloaded.

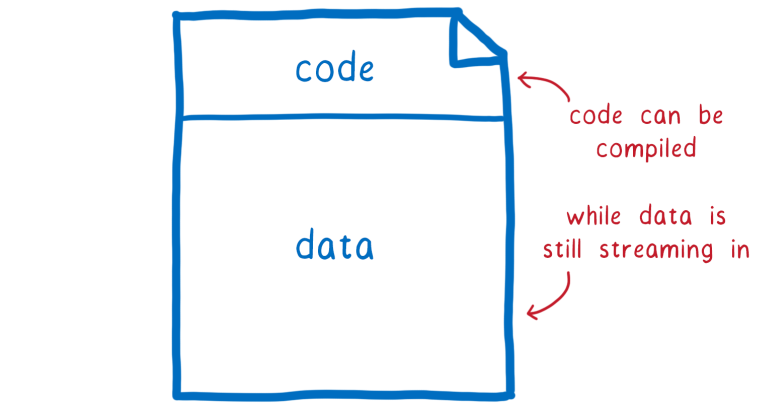

In addition to simultaneously loading and compiling code, there is another advantage.

The code section of the .wasm module comes at the very beginning, before the rest of the data (which is placed in the module's memory object). So in the case of streaming compilation, the code is compiled while the module data has not yet been fully downloaded. If your module requires a lot of data, then the memory object can be several megabytes in size, and streaming compilation will give a significant performance increase.

With streaming compilation, the compilation process starts earlier. But we can also make it faster.

Basic compiler level 1: compile acceleration

If you want fast code work, then you need to optimize it. But it takes time to perform these optimizations during compilation, which slows down the compilation itself. So this is a definite compromise.

But if you use two compilers, you can get the benefits of both quick compilation and optimized code. The first one quickly compiles without any special optimizations, and the second one works more slowly, but produces more optimized code.

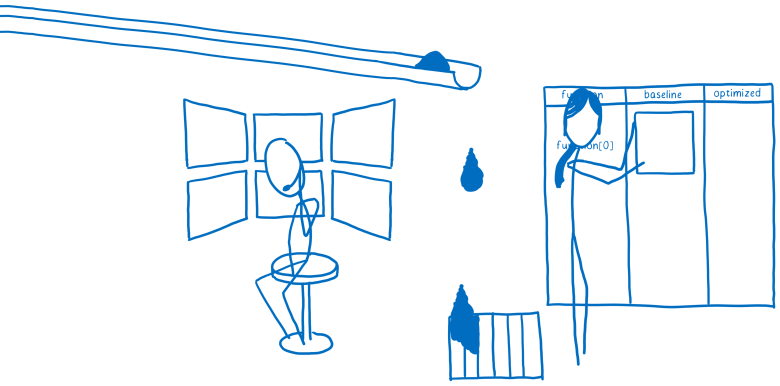

This is called a multi-level compiler. When the code initially arrives, it is compiled by a Level 1 compiler (or the base compiler). After the code compiled by the base compiler starts up, the Level 2 compiler processes the code again and prepares a more optimized version in the background.

Upon completion of the process, the basic version of the code is replaced by an optimized version. This speeds up code execution.

JavaScript engines have long used multi-level compilers. However, the JS engines use a Level 2 compiler (i.e., optimizing) only for hot code ... which is often called for execution.

In contrast, in WebAssembly, a Level 2 compiler will eagerly perform a complete recompilation, optimizing all module code. In the future, we can add more options to control how greedy or lazy optimization should be.

The base compiler saves a lot of time on loading. It works 10–15 times faster than an optimizing compiler. And the compiled code in our case works only two times slower.

This means that your code will work quickly enough even in the first moments when only the basic non-optimized version works.

Parallelization: even faster acceleration

In an article on Firefox Quantum, I explained the options for coarse and fine-tuned parallelization. We use both types to compile WebAssembly.

I mentioned earlier that the optimizing compiler runs in the background, freeing the main thread for code execution. The basic compiled version can work while the optimizing compiler performs its own recompilation.

But on most computers, in this case, most of the cores will remain unloaded. In order to optimally use all cores, both compilers use fine-tuned parallelization to separate work.

The unit of parallelization is a function. Each function can be compiled independently, on a separate core. In fact, it is so finely tuned that in reality we have to group these functions into larger groups of functions. These groups are sent to different kernels.

... and then skipping all this work due to full caching (in the future)

Currently, decoding and compilation are repeated every time you reload the page. But if you have the same .wasm file, then it must be compiled into the same machine code.

So almost always this work can be skipped. That is what we will do in the future. Decoding and compilation will be performed when the page is first loaded, and the resulting machine code will be stored in the HTTP cache. Then, when requesting this URL, the precompiled machine code will be issued immediately.

So the load time will disappear altogether on subsequent page loads.

The foundation for this feature has already been laid. In Firefox 58, we thus cache JavaScript bytecode. You only need to extend this feature to support .wasm files.

Source: https://habr.com/ru/post/347158/
