How dtraceasm works in JMH

The latest version of the Java Microbenchmark Harness (JMH) ships a new profiler, dtraceasm: the long-awaited port of perfasm to Mac OS X, which can display an assembly-level profile of a Java benchmark.

A survey showed that not everyone understands how it is even possible, in principle, to take a Java method as input and produce a listing of the compiled method with its hottest instructions, their distribution, and a small summary like "another 5% of the time was spent by the virtual machine in Symbol::as_C_string(char*, int)".

While porting perfasm, it turned out that in fact™ nothing about it is very complicated, and I wanted to explain how such a profiler works.

To follow the article, it is highly desirable to be familiar with JMH, for example by looking through its usage samples.

Introduction

What should such a profiler do?

For a Java benchmark, it must show exactly where most of the CPU time is spent at the level of the generated code.

There is usually a great deal of generated code, so the profiler has to be precise enough that we do not need to hunt through its output for the information we need.

For example, for a method that computes a logarithm:

    @Benchmark
    public double log(double x) {
        return Math.log(x);
    }

dtraceasm or perfasm will show a profile like the one in the screenshot, blaming the fstpl instruction for everything. Because modern processors are heavily pipelined, such a profile can be skewed, and it often makes sense to look not only at the instruction reported as hot but also at the one before it. Here that is fyl2x, which actually computes the logarithm.

In essence, such a profiler is very similar to perf annotate, except that it can work with JIT-compiled Java code.

What for?

And why would you need an *asm profiler if you are not writing your own JIT compiler? Not least out of curiosity, of course, because it helps answer the following questions very quickly:

- What did my method actually compile into? (Of course, you can dig through the PrintAssembly output and find the right place, or use JITWatch, but this is usually less convenient.)

- Which optimizations can the JIT compiler perform and which can it not? Can it be tricked or confused?

- How does the generated code change if you switch the garbage collector?

- How much does your own perception of reality ("Math.sqrt will definitely be the slow part of this method") differ from harsh reality?

- Why does code written one way run faster than the same code written slightly differently?

Beyond curiosity, it is useful to be able to answer the same questions when you decide to optimize a small hot spot, for example your thread-safe queue or a highly specialized class.

And if you use a tool, it is useful to understand at least roughly how it works inside, so that you do not treat it as magic and have an idea of its capabilities and limitations.

PrintAssembly

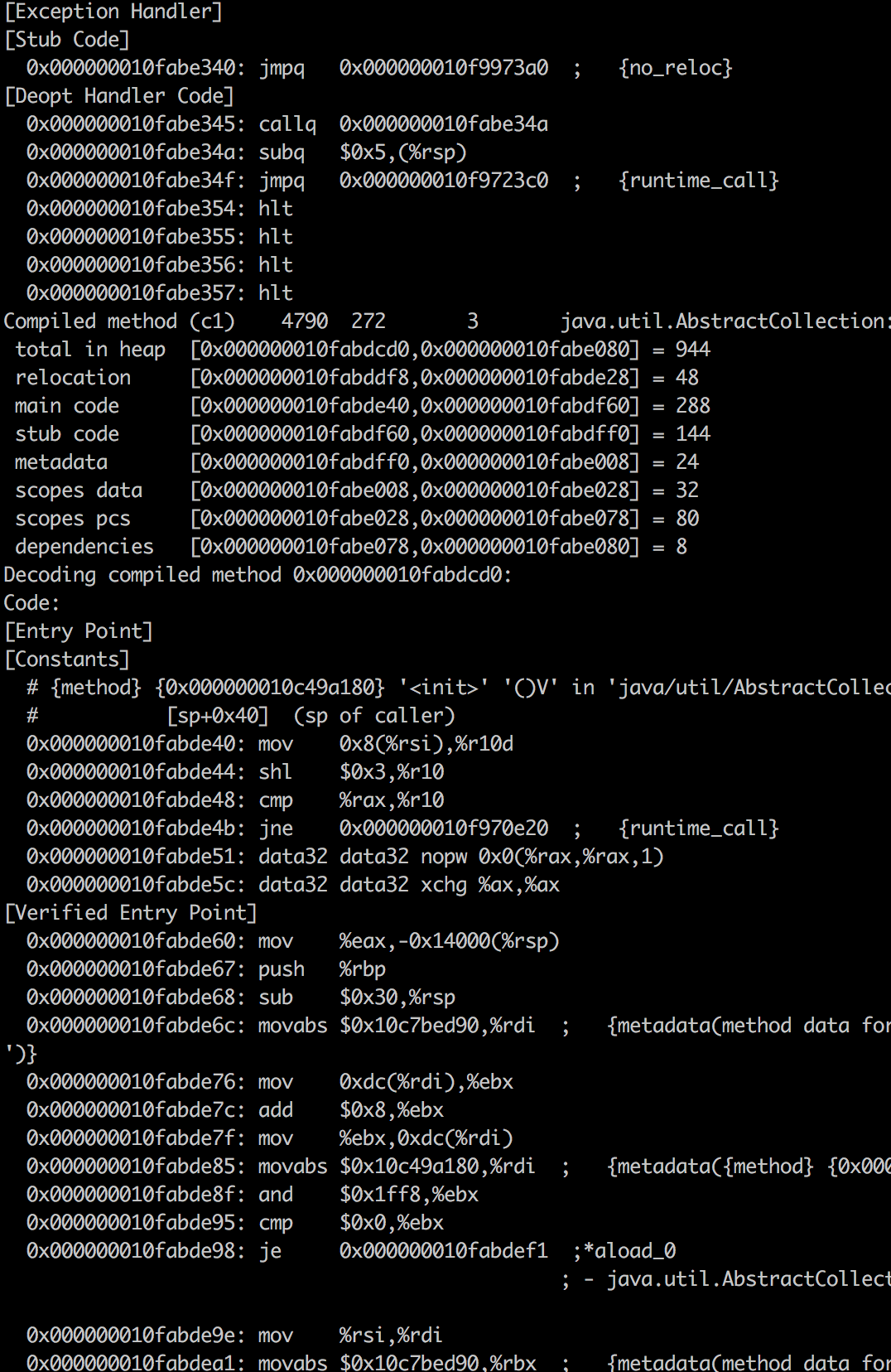

To build a profile over the generated code, you first need to get that generated code from somewhere. Fortunately, everything has already been invented for us: the virtual machine (from here on I mean only HotSpot) can print all compiled code to stdout. You just need to enable the corresponding flag (-XX:+PrintAssembly, a diagnostic option that also requires -XX:+UnlockDiagnosticVMOptions) and put a special disassembler library (hsdis) into $JAVA_HOME. There are plenty of instructions on the Internet on how to do this; usually you do not need to build anything yourself and can simply download a prebuilt disassembler for your platform.

PrintAssembly is useful, but not the most convenient tool. Its output has a well-known format and is even annotated with comments saying which bytecode instruction the current line belongs to, which method is currently inlined, or which register holds an argument. However, the output is measured in megabytes and contains every version of the compiled method (C1 compiler, C2 compiler, the version after deoptimization, GOTO 1), so finding what you need in it is usually extremely difficult.

The profiler has to point to the exact place in this huge output where the hottest part of the benchmark lives. To write such a profiler, we need three things from this output: which method each instruction belongs to, the instruction's address in memory and, optionally, the disassembler's comments.

DTrace

DTrace is a dynamic tracing framework supported on Solaris, FreeBSD, Mac OS X and, in part, Linux. It consists of a kernel module that implements the core functionality and of client programs written in a special language called D (not to be confused with the other D language). The client program declares which events it is interested in; the kernel module compiles the program into a special bytecode, does some preparatory work, and runs the program whenever those events occur. The D language is safe and deliberately restrictive: for example, a program cannot enter an infinite loop or crash the application, so D programs can be executed directly in the kernel. The framework itself is very powerful and lets you do many interesting and non-trivial things that are beyond the scope of this article; I will only cover the functionality needed for dtraceasm.

dtraceasm uses the profile-n probe of the profile provider, which is not tied to any particular event but simply invokes the user program at fixed intervals.

The mechanism is simple: the kernel registers a timer with the given frequency, periodically interrupts whatever is currently running on the CPU, and calls our DTrace script from the interrupt handler.

The script itself looks like this:

    profile-1001
    /arg1/
    {
        printf("%d 0x%lx %d", pid, arg1, timestamp);
        ufunc(arg1)
    }

You can read it like this: "1001 times per second, if the interrupted process was running in userspace, print the pid of the current process, its PC, the current timestamp, and the name of the function being executed (together with the library name)."
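To make the data flow concrete, here is a minimal sketch of how a consumer might parse one line printed by this script. The exact line layout (three whitespace-separated fields followed by an optional symbol) and all names such as DTraceSampleParser and Sample are assumptions made for illustration; this is not the parser JMH actually uses.

```java
import java.util.Optional;

public class DTraceSampleParser {
    /** One profiling sample: which process was running, at which PC, and when. */
    record Sample(long pid, long pc, long timestamp, Optional<String> symbol) {}

    /**
     * Parses a line assumed to look like
     *   1234 0x10f3a2b40 987654321 libjvm.dylib`Symbol::as_C_string
     * where the trailing symbol is optional. The layout is an assumption.
     */
    static Sample parse(String line) {
        // Limit 4 keeps a symbol that itself contains spaces in one piece.
        String[] parts = line.trim().split("\\s+", 4);
        if (parts.length < 3 || !parts[1].startsWith("0x")) {
            throw new IllegalArgumentException("Unexpected sample line: " + line);
        }
        long pid = Long.parseLong(parts[0]);
        // Addresses are unsigned, so parse the hex digits as an unsigned long.
        long pc = Long.parseUnsignedLong(parts[1].substring(2), 16);
        long timestamp = Long.parseLong(parts[2]);
        Optional<String> symbol = parts.length == 4
                ? Optional.of(parts[3])
                : Optional.empty();
        return new Sample(pid, pc, timestamp, symbol);
    }

    public static void main(String[] args) {
        // Hypothetical input lines, not captured from a real run.
        System.out.println(parse("1234 0x10f3a2b40 987654321 libjvm.dylib`Symbol::as_C_string"));
        System.out.println(parse("1234 0x7fff0000 987655320"));
    }
}
```

Keeping the symbol optional matters later: samples without a resolved name are exactly the ones that have to be matched against the PrintAssembly output.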

The PC ( program counter ) is a special register containing the address of the instruction that is currently being executed. But where does the name of the method come from?

Since the kernel knows everything about loaded libraries, executable files, their symbols (functions are symbols too) and the addresses at which they are loaded, it can use this knowledge to build an index of the form "instruction address -> library -> specific function". That is, knowing the value of the PC, you can find out which function it belongs to.

Example

In the library lib.so, the function foo() starts at offset 1024, the next function bar() starts at offset 2048, and the library itself is loaded into the process at address 1048576. If the current value of the PC lies in the interval [1048576 + 1024, 1048576 + 2048), then foo() from lib.so is being executed.

But if the code was generated dynamically (which is exactly what JIT compilers do) and there is no symbol information for it, the kernel will not find a function name.
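The lookup from the example can be sketched with a sorted map: find the last symbol that starts at or before the PC. This is only an illustration of the idea, not how the kernel implements it; the class name SymbolIndexSketch and the library size of 4096 bytes are assumptions introduced here.

```java
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

public class SymbolIndexSketch {
    private final String library;
    private final long base;   // address at which the library is loaded
    private final long size;   // size of the mapping (assumed for the example)
    private final TreeMap<Long, String> symbolsByOffset = new TreeMap<>();

    SymbolIndexSketch(String library, long base, long size) {
        this.library = library;
        this.base = base;
        this.size = size;
    }

    void addSymbol(long offset, String name) {
        symbolsByOffset.put(offset, name);
    }

    /** Returns "library`symbol" for a PC inside the library, or empty otherwise. */
    Optional<String> resolve(long pc) {
        if (pc < base || pc >= base + size) {
            return Optional.empty(); // e.g. JIT-compiled code: no symbols known
        }
        // The owning symbol is the one with the greatest start offset <= pc - base.
        Map.Entry<Long, String> entry = symbolsByOffset.floorEntry(pc - base);
        return entry == null
                ? Optional.empty()
                : Optional.of(library + "`" + entry.getValue());
    }

    public static void main(String[] args) {
        SymbolIndexSketch index = new SymbolIndexSketch("lib.so", 1048576, 4096);
        index.addSymbol(1024, "foo");
        index.addSymbol(2048, "bar");

        System.out.println(index.resolve(1048576 + 1500)); // inside foo
        System.out.println(index.resolve(1048576 + 2048)); // first byte of bar
        System.out.println(index.resolve(0x7f0000000000L)); // outside the library
    }
}
```

The last lookup returns an empty result, which mirrors what happens for JIT-compiled code: the address is valid, but no loaded library claims it.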

Just add water

So how do we now get an annotated assembly listing of the benchmark?

The benchmark is launched in a separate JVM with the PrintAssembly flag, and immediately after it starts, a DTrace script is launched that writes its results to a file.

With this data in hand, only the following steps remain:

- The DTrace output is filtered by the pid and by the time window of the measured benchmark iterations.

- Lines without symbol names are matched against instruction addresses from the PrintAssembly output, which gives them a string representation of the form inc %r10d plus an optional comment from the disassembler.

- The result is aggregated into a profile in which identical lines are collapsed and a frequency counter is incremented for each of them.

- With the help of some heuristics, contiguous regions of hot instructions are located in the profile. For example, if the instructions in a region add up to 10% of the total profile and adding neighboring instructions increases the region's weight only slightly, we can assume that this region is worth looking at.

- Native methods go into a separate profile of "hot methods", also sorted by frequency.

- The result is nicely formatted and printed to the user's console.
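The filtering, matching, aggregation and region steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not JMH's actual code: the record names, the fixed thresholds minHits and maxGap, and the sample data are all hypothetical, and the region heuristic here is a much cruder one than what a real profiler would use.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class HotProfileSketch {
    record Sample(long pid, long pc, long timestamp) {}
    record AsmLine(long address, String text) {}
    record Region(int first, int last, long hits) {}

    /** Keeps samples of one pid inside the measurement window and counts hits per address. */
    static Map<Long, Long> countHits(List<Sample> samples, long pid, long from, long to) {
        Map<Long, Long> hits = new HashMap<>();
        for (Sample s : samples) {
            if (s.pid() == pid && s.timestamp() >= from && s.timestamp() <= to) {
                hits.merge(s.pc(), 1L, Long::sum);
            }
        }
        return hits;
    }

    /** Joins hit counts with the listing: one counter per disassembled line, in listing order. */
    static long[] hitsPerLine(List<AsmLine> listing, Map<Long, Long> hits) {
        long[] perLine = new long[listing.size()];
        for (int i = 0; i < perLine.length; i++) {
            perLine[i] = hits.getOrDefault(listing.get(i).address(), 0L);
        }
        return perLine;
    }

    /** Groups hot lines that lie at most maxGap lines apart into contiguous regions. */
    static List<Region> hotRegions(long[] perLine, long minHits, int maxGap) {
        List<Region> regions = new ArrayList<>();
        int start = -1;
        int last = -1;
        for (int i = 0; i < perLine.length; i++) {
            if (perLine[i] < minHits) continue;
            if (start >= 0 && i - last > maxGap) {
                regions.add(region(perLine, start, last)); // too far away: close the region
                start = -1;
            }
            if (start < 0) start = i;
            last = i;
        }
        if (start >= 0) regions.add(region(perLine, start, last));
        return regions;
    }

    private static Region region(long[] perLine, int first, int last) {
        long sum = 0;
        for (int i = first; i <= last; i++) sum += perLine[i];
        return new Region(first, last, sum);
    }

    public static void main(String[] args) {
        List<Sample> samples = List.of(
                new Sample(42, 0x1000, 10), new Sample(42, 0x1004, 11),
                new Sample(42, 0x1004, 12),
                new Sample(7, 0x1004, 12),   // another process: filtered out
                new Sample(42, 0x1000, 1));  // before the window (warmup): filtered out
        List<AsmLine> listing = List.of(
                new AsmLine(0x1000, "mov %rax,%rbx"),
                new AsmLine(0x1004, "inc %r10d"),
                new AsmLine(0x1008, "ret"));

        long[] perLine = hitsPerLine(listing, countHits(samples, 42, 5, 20));
        for (Region r : hotRegions(perLine, 1, 1)) {
            for (int i = r.first(); i <= r.last(); i++) {
                AsmLine line = listing.get(i);
                System.out.printf("%3d  0x%x: %s%n", perLine[i], line.address(), line.text());
            }
        }
    }
}
```

Working with a per-line counter array in listing order keeps the region search independent of actual addresses, so variable-length instructions do not complicate the notion of "neighboring".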

The problem that the PrintAssembly output contains code compiled by both C1 and C2 resolves itself: after the warmup iterations, only one version of the compiled code ends up in the hot profile (unless there are constant recompilations in steady state). In addition, *asm profilers filter out events from warmup iterations, and native methods (internals of the JVM itself, native calls and so on) end up in the list of top hot methods.

NB: as far as the Mac OS X port is concerned, "only" the part that collects PC values with DTrace had to be written; the rest of the result-processing infrastructure in JMH had existed since perfasm, and the author of this article (me) did not have to touch it.

Conclusion

By combining a few simple tools you get a fairly powerful profiler, which to an unprepared developer can look like a black box. Now you know how it actually works and that there is no magic or rocket science in it (and you can easily work out how, for example, perfasm works).

Special thanks to Alexey Shipilyov for proofreading the article, catching its inaccuracies, and making sure that I bring knowledge to the masses rather than nonsense :)

Source: https://habr.com/ru/post/347124/