Analyze it. Mista.ru

What, How, Why

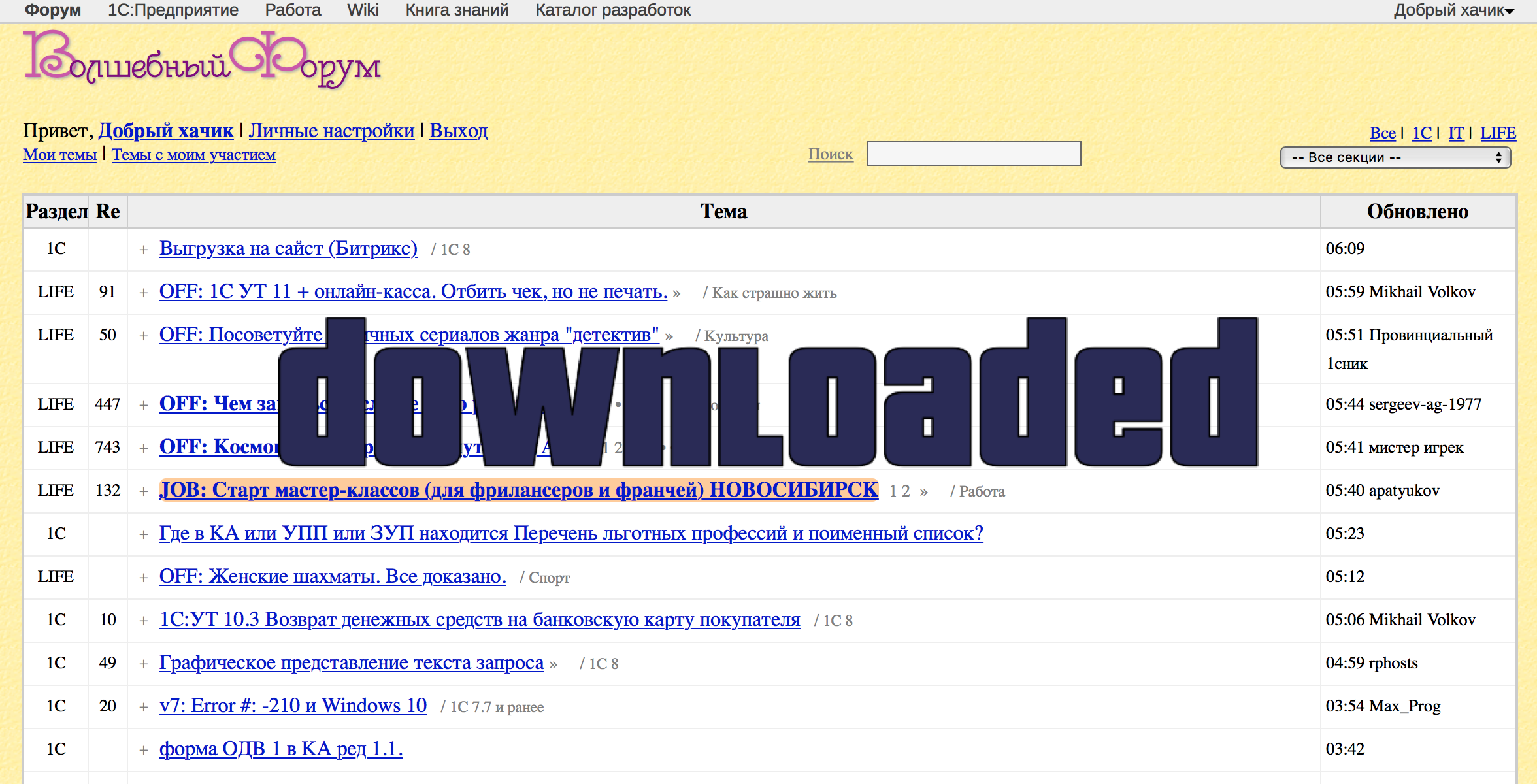

The Mista.ru forum is one of the oldest and most active forums devoted to 1C. The first message is dated 2000, and at the moment the counter has passed over 800,000, and the number of messages is over 16,000,000. The forum was so popular that it was even tried to be “mirrored” because it contained a good base of 1C questions and answers, due to What forum admins added "protection from downloading". This article will describe how you can download this (and probably any other) forum in a relatively short time using the Google Cloud Platform.

Intro

After my previous mini-project to get an interesting (in my opinion) data set , I needed another interesting task, at the solution of which I could train my skills as a data engineer. As a goal, I chose the Mista.ru adinesnegov forum and did it for several reasons at once. First of all, this is a rather old forum and for many years it has accumulated millions of messages on various topics. Secondly, he is one of the most popular among 1C programmers (at least 6-7 years ago) and the activity on it is quite high. Thirdly, 99.99% of forum users are supporters of the current president, and the concentration of “stupid patriots” just rolls over, and this in turn gives hope for a very interesting analytics, such as the frequency of using the famous word "###", "Putin" or "Putin ###". And fourthly, thanks to the “protection”, the task of downloading the forum became a bit more interesting.

Grabbing script

The first attempts to approach the task with the tools already available, which I had left from the previous project, failed. After 20 requests for GET requests, the forum stopped responding. In the web backend is not strong, but I suspect that frequent requests from one SP were tracked and everything that was not similar to requests from a regular user was banned. A bunch of downloaded downloads and site grabbers ran across the same rake and went to the trash. I needed a fresh idea.

And she was found. In the partially completed Ruby-on-Rails course, I heard that there are automated testing tools that allow you to encode user behavior and use these emulation scripts for test cases. Remembering the word Selenium and using Google, I quickly found some small examples, with a single nuance - they were in python, and in my arsenal there was only 1C and R. However, given that python is considered one of the easiest to learn, after a couple of hours I could already login to the forum:

import selenium from selenium import webdriver from selenium.webdriver.common.keys import Keys from selenium.webdriver.support.ui import WebDriverWait driver = webdriver.Chrome("/usr/local/bin/chromedriver") base_url = 'http://www.forum.mista.ru/' driver.get(base_url) username = " " password = "11" uname = driver.find_element_by_name("user_name") uname.send_keys(username.decode('utf-8')) passw = driver.find_element_by_name("user_password") passw.send_keys(password) submit_button = driver.find_element_by_class_name("sendbutton").click() And still (rather long I have to say) the time appeared such a class MistaDownloader, which allowed immediately after initialization to log in on the forum and download forum threads by its number:

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 import base64 import selenium from selenium import webdriver from selenium.webdriver.common.keys import Keys from selenium.webdriver.support.ui import WebDriverWait from bs4 import BeautifulSoup from urllib import quote import sys import codecs import binascii import os import datetime import subprocess import syslog reload(sys) sys.setdefaultencoding("utf-8") syslog.syslog("MistaDownloader class loaded.") print ("MistaDownloader class loaded.") class MistaDownloader: def print_to_log(self, message): syslog.syslog(message) print (message) def __init__(self): self.print_to_log("MistaDownloader initializing...") self.driver = webdriver.Chrome("/usr/local/bin/chromedriver") self.base_url = 'http://www.forum.mista.ru/' self.folder = '//home/gomista/files' if not os.path.exists(self.folder): os.makedirs(self.folder) self.print_to_log("MistaDownloader initialized.") def authenticate(self): self.driver.get(self.base_url) username = " " password = "11" uname = self.driver.find_element_by_name("user_name") uname.send_keys(username.decode('utf-8')) passw = self.driver.find_element_by_name("user_password") passw.send_keys(password) submit_button = self.driver.find_element_by_class_name("sendbutton") .click() self.print_to_log("Authentication done.") def download_by_id(self, topic_id): self.print_to_log("ID: " + topic_id) def write_source_to_file(topic_id, page_number, page_url, page_source, folder): filename = folder + '/' + '{0:0>7}'.format(topic_id) + '_' + '{0:0>2}'.format(page_number) + '_' + binascii.hexlify(page_url) + '.txt' file = open(filename,'w') page_source_to_save = page_source.replace('\t', ' ') .replace('\n', ' ') .replace('\r', ' ') res = '%s\t%s\t%s\t%s' % (topic_id, page_number, page_url, page_source_to_save) file.write(res) file.close() page_number = 1 current_url = '%s%s%s%s%s' % (self.base_url, 'topic.php?id=', topic_id, '&page=', page_number) self.print_to_log('getting page: ' + current_url) self.driver.set_page_load_timeout(240) try: self.driver.get(current_url) self.print_to_log('done') html = self.driver.page_source write_source_to_file(topic_id, page_number, current_url, html, self.folder) soup = BeautifulSoup(html, "lxml") pages_tag = soup.find('span', { 'class' : 'pages' }) additional_pages = set() if pages_tag: pages_tag = pages_tag.findAll('a', attrs = {'data-page' : True}) if pages_tag: for page_tag in pages_tag: additional_pages.add(page_tag['data-page']) additional_pages = list(sorted(additional_pages)) for additional_page in additional_pages: current_url = '%s%s%s%s%s' % (self.base_url, 'topic.php?id=', topic_id, '&page=', additional_page) self.print_to_log('getting page: '+current_url) self.driver.set_page_load_timeout(240) self.driver.get(current_url) self.print_to_log('done') html = self.driver.page_source write_source_to_file(topic_id, additional_page, current_url, html, self.folder) except Exception as e: write_source_to_file(topic_id, page_number, current_url, 'ERRORERRORERROR', self.folder) The download of the pages itself could be done with the following lines:

do = MistaDownloader() do.authenticate() topic_id = 1 do.download_by_id(topic_id) ... topic_id = 99 do.download_by_id(topic_id) Then I wanted this script to run not only on the desktop, but also on a server without a graphical interface. For this, with the help of Google, I released the next version of the script, which with the help of an emulator could be run anywhere:

from xvfbwrapper import Xvfb from mista_downloader import MistaDownloader vdisplay = Xvfb() vdisplay.start() do = MistaDownloader() ... vdisplay.stop() And it seemed that it was enough to start the cycle from 1 to 1,000,000 (all new branches are now being created with an index of slightly more than 811 thousand). However ... Preliminary measurements suggested that it would take about 2-3 weeks to smoke while the entire forum was in the form of files on the hard disk. Yes, you could go along a proven path and run in several streams on your own laptop, but recently viewed Google Cloud Platform for Data Engineers courses suggested a new idea.

Parallel do

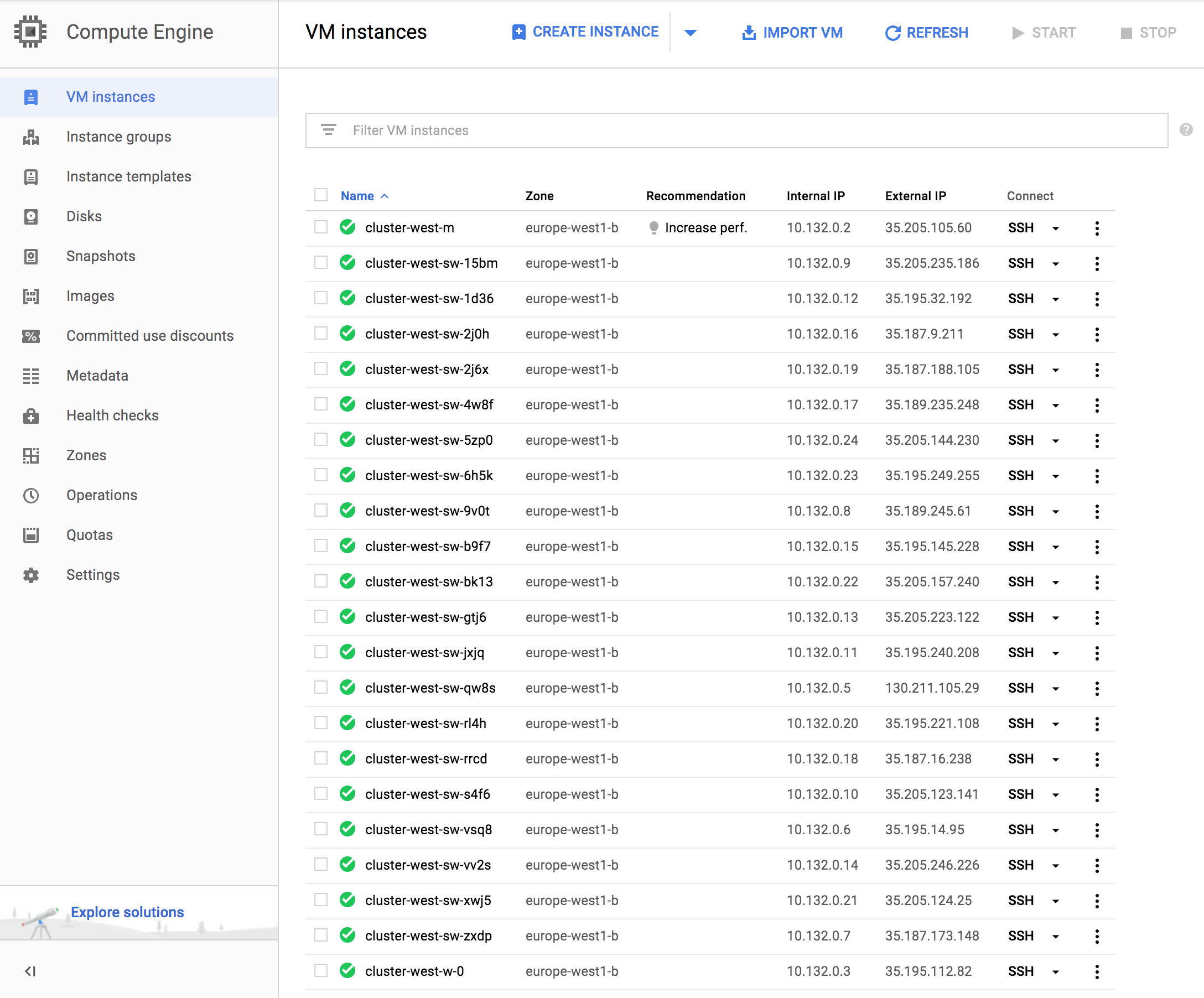

Google Cloud Platform, allows you to create a virtual server in less than a minute and delete it immediately after it copes with the task. Those. In accordance with the idea, I did not need to search for one powerful server and make it work for a week, it was easier for me to rent 20 simple servers for example for 10 hours. And since I was watching courses on Hadoop, HDFS, MapReduce and so on, I decided to make my own cluster with blackjack and nodes.

The essence of the cluster - the central server (master) contains a list of links (namely, forum forum IDs) that need to be downloaded. Work servers (Nodes) connect to the Wizard, receive a batch of IDs, download them, add the received files to the GCS (Google Cloud Storage) storage, notify the Wizard that the task is completed and receive a new batch of IDs.

The Google Cloud Platform has a pretty handy thing - Google Cloud Shell. This is a small free virtual machine that is always available through the browser and with its help you can manage the entire cloud. For almost every action through the web interface there is a similar command that can be executed using the Google Cloud Shell. All scripts further in the article will be run through it.

Using the following script, I created a virtual machine named cserver :

#! /bin/bash gcloud compute --project "new-mista-project" instances create cserver \ --zone "europe-west1-b" \ --machine-type "n1-standard-2" \ --subnet "default" \ --metadata-from-file startup-script=startupscript_server.sh \ --metadata "ssh-keys=gomista:ssh-rsa key-key-key gomista" \ --maintenance-policy "MIGRATE" \ --service-account "1007343160266-compute@developer.gserviceaccount.com" \ --scopes "https://www.googleapis.com/auth/cloud-platform" \ --min-cpu-platform "Automatic" \ --image "image-mista-node" \ --image-project "new-mista-project" \ --boot-disk-size "10" \ --boot-disk-type "pd-standard" \ --boot-disk-device-name cserver gcloud compute instances add-tags cserver --tags 'mysql-server' --zone \ "europe-west1-b" This is a server of typen1-standard-2, which in fact means 2CPUs 7.5Gb. As a hard disk - 10Gb Standard Disk. By the way, the pleasure is48.95 per month estimated. Effective hourly rate $0.067 (730 hours per month)48.95 per month estimated. Effective hourly rate $0.067 (730 hours per month), i.e. an hour of using such a server will cost you 4 rubles, and a day less than $ 100.

The server was created on the basis of a Ubuntu 16.04 LTS image that I had prepared in advance, with installed libraries and applications. In fact, the server could be created from bare Ubuntu, and the command to install all the necessary programs in the parameter - --metadata-from-file , for example, type sudo apt-get install xvfb x11-xkb-utils -y . The transferred command or script would start immediately after the creation of the VM and install everything necessary. But since I knew that I would still create / delete this car many times, I decided to spend the extra 10 minutes and prepare the image (by GCP). Also at the time of the creation of the server, my start startupscript_server.sh run startupscript_server.sh , which copied my repo and added a script to crontab, which distributed tasks to the Nodes.

I used this script to create the Nodes:

#! /bin/bash gcloud compute --project "new-mista-project" instances create $1 \ --zone $2 \ --machine-type "n1-standard-1" \ --subnet "default" \ --metadata-from-file startup-script=startupscript.sh \ --metadata "ssh-keys=gomista:ssh-rsa key-keykey gomista" \ --no-restart-on-failure \ --maintenance-policy "TERMINATE" \ --preemptible \ --service-account "1007343160266-compute@developer.gserviceaccount.com" \ --scopes "https://www.googleapis.com/auth/cloud-platform" \ --min-cpu-platform "Automatic" \ --image "image-mista-node" \ --image-project "new-mista-project" \ --boot-disk-size "10" \ --boot-disk-type "pd-standard" \ --boot-disk-device-name $1 gcloud compute instances add-tags $1 --tags 'mysql-client' --zone $2 As Noda, there was ann1-standard-1server (1CPUs 3.75Gb). The main difference between Noda and the Master is the--preemptible. Creating a server with this flag means that this server can be forcibly disabled by Google at any time and that the maximum server time is 24 hours. If you rent such a server and agree to such conditions, then Google will drop the price for it from 24.67 per month to 7.70 per month (hourly $ 0.011), which is slightly more than 15 rubles per day. And since I just needed a couple of dozens of "for batch jobs and fault-tolerant workloads" machines, preemptible machines became a good option. By the way, adding tags to servers, I thus added Firewall Rules to them (which I had previously attached to these tags).

Create 20 virtual machines with one team and get them ready for work in 1 minute? You are welcome:

#! /bin/bash ./create_node_west.sh node01 europe-west1-b & ./create_node_west.sh node02 europe-west1-b & ./create_node_west.sh node03 europe-west1-b & ./create_node_west.sh node04 europe-west1-b & ./create_node_west.sh node05 europe-west1-b & ./create_node_west.sh node06 europe-west1-b & ./create_node_west.sh node07 europe-west2-b & ./create_node_west.sh node08 europe-west2-b & ./create_node_west.sh node09 europe-west2-b & ./create_node_west.sh node10 europe-west2-b & ./create_node_west.sh node11 europe-west2-b & ./create_node_west.sh node12 europe-west2-b & ./create_node_west.sh node13 europe-west2-b & ./create_node_west.sh node14 europe-west3-b & ./create_node_west.sh node15 europe-west3-b & ./create_node_west.sh node16 europe-west3-b & ./create_node_west.sh node17 europe-west3-b & ./create_node_west.sh node18 europe-west3-b & ./create_node_west.sh node19 europe-west3-b & ./create_node_west.sh node20 europe-west3-b & Blackjack and nodes

And now how it all worked.

After the start of the Wizard, I ran a script that installed MySQL and created the necessary tables (by the way, the first muscle experience is also the first):

#! /bin/bash sudo apt-get install mysql-server -y sudo sed -i -e "s/bind-address/#bind-address/g" \ /etc/mysql/mysql.conf.d/mysqld.cnf sudo service mysql restart echo "Please enter root user MySQL password!" read rootpasswd mysql -uroot -p${rootpasswd} \ -e "CREATE DATABASE mistadb DEFAULT CHARACTER SET utf8;" mysql -uroot -p${rootpasswd} \ -e "CREATE USER 'gomista'@'%' IDENTIFIED BY 'gomista';" mysql -uroot -p${rootpasswd} \ -e "GRANT ALL PRIVILEGES ON *.* TO 'gomista'@'%' WITH GRANT OPTION;" mysql -uroot -p${rootpasswd} -e "FLUSH PRIVILEGES;" mysql -ugomista -pgomista -e "CREATE TABLE server_statuses ( node_name VARCHAR(50) PRIMARY KEY, node_ip VARCHAR(15) NOT NULL, node_status TINYINT(50), updated_at TIMESTAMP );" mistadb mysql -ugomista -pgomista -e "CREATE TABLE server_commands ( id INT(6) UNSIGNED AUTO_INCREMENT PRIMARY KEY, node_name VARCHAR(50) NOT NULL, node_ip VARCHAR(15) NOT NULL, job_id VARCHAR(70), n_links INT(50), received TINYINT(50), updated_at TIMESTAMP, CONSTRAINT node_name_unique UNIQUE (node_name, job_id) );" mistadb mysql -ugomista -pgomista -e "CREATE TABLE links ( job_id VARCHAR(70), link VARCHAR(200), status TINYINT(50), updated_at TIMESTAMP, INDEX(job_id), INDEX(link), CONSTRAINT node_name_unique UNIQUE (job_id, link) );" mistadb python -c "exec(\"import sys\\nfor i in range(1,1000000): \ print ('\t'+'{0:0>6}'.format(str(i))+'\t0')\")" > numbers mysql -ugomista -pgomista -e "LOAD DATA LOCAL INFILE 'numbers' \ INTO TABLE links;" mistadb rm numbers Numbers from 1 to 999999 were stored in thelinkstable, information on whether the number was processed or not (status 0 - not processed, 1 - in operation, 2 - processed), the identifier of the job that performs or performs the processing of one or another number. In theserver_commandstable, the jobs that the Master distributed to the Nodes (received 0 — issued, received 1 — received, received 2 — completed). In theserver_statusestable, theserver_statusesstatuses (node_status 0 is free, node_status 1 is busy).

Every 5 seconds the following script was launched on the Master:

#!/usr/bin/env python import mysql.connector import socket import uuid import datetime import sys if len(sys.argv) == 2: number_of_links = int(sys.argv[1]) else: number_of_links = 500 cnx = mysql.connector.connect(user='gomista', password='gomista', host='cserver', database='mistadb') cursor = cnx.cursor() select_servers =("SELECT server_statuses.node_name, server_statuses.node_status," "server_commands.received " "FROM server_statuses " "LEFT JOIN server_commands " "ON server_statuses.node_name = server_commands.node_name and " "server_commands.received <> 2 " "WHERE server_statuses.node_status = %(node_status)s and " "server_commands.received IS NULL LIMIT 1") server_status = {'node_status': 0} cursor.execute(select_servers, server_status) row = cursor.fetchone() if row: node_name = row[0] print (node_name) cursor = cnx.cursor() new_job_id = node_name + "_" + datetime.datetime.now().strftime("%Y%m%d_%H%M%S") + "_" + str(uuid.uuid4()) received = 0 add_job_command = ("INSERT INTO server_commands " "(node_name, node_ip, job_id, n_links, received) " "VALUES (%(node_name)s, %(node_ip)s, %(job_id)s, %(n_links)s, %(received)s) " "ON DUPLICATE KEY UPDATE node_ip = %(node_ip)s, " "n_links = %(n_links)s, received = %(received)s, updated_at=now();" "UPDATE links " "SET job_id = %(job_id)s " "WHERE job_id = '' AND status = 0 LIMIT %(n_links)s;") server_job = {'node_name': str(node_name), 'node_ip': str(node_name), 'job_id': new_job_id, 'n_links': number_of_links, 'received': received} for result in cursor.execute(add_job_command, server_job, multi=True): pass cnx.commit() cursor.close() cnx.close() else: print ("no available worker found") This script checked theserver_statusestable for the presence of available servers, and if it was found, created a job for it in theserver_commandstable and bound a certain number of numbers from thelinkstable to the job.

The nodes, in turn, added the following script to their crontab at the time of start, which was executed every minute:

#!/usr/bin/env python from xvfbwrapper import Xvfb from cserver_connector import CserverConnector from mista_downloader import MistaDownloader import sys import subprocess import syslog import datetime MAX_PROCESS_TIME = 40 # min def print_to_log(message): syslog.syslog(message) print (message) def get_process_time_in_min(connector): mod_time = datetime.datetime.fromtimestamp(connector.modified_time) current_time = datetime.datetime.now() delta = current_time - mod_time delta_min = delta.seconds / 60 return delta_min print_to_log("Creating connector...") connector = CserverConnector() print_to_log("Connector created. Working: "+str(connector.working)) if connector.working: process_time_in_min = get_process_time_in_min(connector) if process_time_in_min > MAX_PROCESS_TIME: print_to_log("Canceling job...") connector.cancel_job() connector.remove_file_flag() print_to_log("Updating 0 status on cserver...") connector.update_status_on_cserver(0) print_to_log("Exiting...") exit(0) else: connector.update_status_on_cserver(1) print_to_log("Exiting...") exit(0) else: print_to_log("Updating 0 status on cserver...") connector.update_status_on_cserver(0) print_to_log("Getting new job...") connector.get_new_job() if not connector.job_id: print_to_log("No assigned jobs found...") print_to_log("Exiting...") exit(0) print_to_log("Job found: "+connector.job_id) connector.create_file_flag() print_to_log("Updating 1 status on cserver...") connector.update_status_on_cserver(1) print_to_log("Getting list of links...") connector.get_links_list() print_to_log("Starting Xvfb...") vdisplay = Xvfb() vdisplay.start() print_to_log("Creating MistaDownloader...") try: do = MistaDownloader() except Exception as e: print_to_log(str(e)) raise print_to_log("Do authenticate...") do.authenticate() folder = do.folder print_to_log("Downloading links in loop...") for link in connector.links_list: do.download_by_id(link[0].lstrip('0')) vdisplay.stop() print_to_log("Moving files to GS...") move_command = "gsutil -m mv " + folder + "/* gs://mistabucket-west/files" subprocess.call([move_command], shell=True) print_to_log("Updating links on finish...") connector.update_links_on_finish() connector.remove_file_flag() print_to_log("Updating 0 status on cserver...") connector.update_status_on_cserver(0) print_to_log("Exiting...") This script was connected to theserver_commandsand looked at theserver_commandstable. If he found the task, then by the number he received the list of numbers, started the download and informed the Master that he had taken the job to work and that he was now busy. After the download was completed, Nod updated theserver_statusestables (he said that he was free now),server_commands(said he had completed the task), andlinksnoted which numbers he downloaded. The master, in his turn, as soon as he saw that Nod was free, he threw a new job for him into the tasks with still unprocessed numbers, so that in less than a minute Nod would pick up this task and set to work ...

All communication between Noda and the Master brought to a separate class CServerConnector :

#!/usr/bin/env python import mysql.connector import socket import os import syslog class CserverConnector: def print_to_log(self, message): syslog.syslog(message) print (message) def __init__(self): self.hostname = socket.gethostname() self.file_flag = '/home/gomista/WORKING' self.mysql_connector = mysql.connector.connect(user='gomista', password='gomista', host='cserver', database='mistadb', buffered=True) self.working = os.path.isfile(self.file_flag) if self.working: self.modified_time = os.path.getmtime(self.file_flag) with open(self.file_flag, 'r') as f: self.job_id = f.readline().strip() else: self.modified_time = None self.job_id = None self.n_links = 0 self.links_list = list() def create_file_flag(self): file_flag_to_write = open(self.file_flag, "w") file_flag_to_write.write(self.job_id) file_flag_to_write.close() def update_file_flag(self): self.create_file_flag() def remove_file_flag(self): if os.path.isfile(self.file_flag): os.remove(self.file_flag) def update_status_on_cserver(self, status): cursor = self.mysql_connector.cursor() add_server_status_query = ("INSERT INTO server_statuses " "(node_name, node_ip, node_status) " "VALUES (%(node_name)s, %(node_ip)s, %(node_status)s)" "ON DUPLICATE KEY UPDATE node_ip = %(node_ip)s, " "node_status = %(node_status)s, updated_at=now()") server_status = {'node_name': self.hostname, 'node_ip': self.hostname, 'node_status': status} cursor.execute(add_server_status_query, server_status) self.mysql_connector.commit() cursor.close() def get_new_job(self): cursor = self.mysql_connector.cursor() get_command_query = ("SELECT node_name, job_id, n_links " "FROM server_commands " "WHERE node_name = %(node_name)s AND job_id <> '' AND " "received = %(node_name)s LIMIT 1") server_status = {'node_name': self.hostname, 'node_ip': self.hostname, 'received': 0} cursor.execute(get_command_query, server_status) row = cursor.fetchone() if row: node_name = str(row[0]) self.job_id = str(row[1]) self.n_links = str(row[2]) else: self.job_id = None self.n_links = 0 cursor.close() def get_links_list(self): self.print_to_log("Getting coursor and prepare the query...") cursor = self.mysql_connector.cursor() update_job_command_query = ("UPDATE server_commands " "SET received = 1 " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} self.print_to_log("Executing query 1.") cursor.execute(update_job_command_query, server_job) self.print_to_log("Commiting query 1.") self.mysql_connector.commit() update_links_query = ("UPDATE links " "SET status = 1 " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} self.print_to_log("Executing query 2. with job_id: "+self.job_id) try: cursor.execute(update_links_query, server_job) except Exception as e: self.print_to_log(str(e)) self.print_to_log(cursor.statement) raise self.print_to_log("Commiting query 2.") self.mysql_connector.commit() cursor.close() cursor = self.mysql_connector.cursor() get_links_query = ("SELECT link FROM links WHERE job_id = %(job_id)s") self.print_to_log("Executing query 3.") cursor.execute(get_links_query, {'job_id': self.job_id}) for (link) in cursor: self.links_list.append(link) cursor.close() self.print_to_log("Finished.") def update_links_on_finish(self): cursor = self.mysql_connector.cursor() update_job_command_query = ("UPDATE server_commands " "SET received = 2 " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} cursor.execute(update_job_command_query, server_job) self.mysql_connector.commit() update_links_query = ("UPDATE links " "SET status = 2 " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} cursor.execute(update_links_query, server_job) self.mysql_connector.commit() cursor.close() def cancel_job(self): cursor = self.mysql_connector.cursor() update_job_command_query = ("DELETE FROM server_commands " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} cursor.execute(update_job_command_query, server_job) self.mysql_connector.commit() update_links_query = ("UPDATE links " "SET status = 0, job_id = '' " "WHERE job_id = %(job_id)s;") server_job = {'job_id': self.job_id} cursor.execute(update_links_query, server_job) self.mysql_connector.commit() cursor.close() Here are the tables in the process of work:

gomista@cserver:~/mista$ ./get_mysql_status.sh NODES: +-----------+---------+-------------+---------------------+ | node_name | node_ip | node_status | updated_at | +-----------+---------+-------------+---------------------+ | node01 | node01 | 1 | 2018-01-05 08:07:02 | | node02 | node02 | 1 | 2018-01-05 08:07:02 | | node03 | node03 | 1 | 2018-01-05 08:07:02 | | node04 | node04 | 1 | 2018-01-05 08:07:02 | | node05 | node05 | 1 | 2018-01-05 08:07:02 | | node06 | node06 | 1 | 2018-01-05 08:07:02 | | node07 | node07 | 1 | 2018-01-05 08:07:03 | | node08 | node08 | 1 | 2018-01-05 08:07:03 | | node09 | node09 | 1 | 2018-01-05 08:07:03 | | node10 | node10 | 1 | 2018-01-05 08:07:03 | | node11 | node11 | 0 | 2018-01-05 08:07:17 | | node12 | node12 | 1 | 2018-01-05 08:07:03 | | node13 | node13 | 1 | 2018-01-05 08:07:03 | | node14 | node14 | 1 | 2018-01-05 08:07:03 | | node15 | node15 | 1 | 2018-01-05 08:07:03 | | node16 | node16 | 1 | 2018-01-05 08:07:03 | | node17 | node17 | 0 | 2018-01-05 08:07:15 | | node18 | node18 | 1 | 2018-01-05 08:07:03 | | node19 | node19 | 1 | 2018-01-05 08:07:03 | | node20 | node20 | 1 | 2018-01-05 08:07:04 | | node21 | node21 | 1 | 2018-01-05 08:07:03 | | node22 | node22 | 1 | 2018-01-05 08:07:03 | +-----------+---------+-------------+---------------------+ JOBS DONE: +-----------+-----------+ | node_name | jobs_done | +-----------+-----------+ | node08 | 5 | | node10 | 5 | | node11 | 6 | | node07 | 6 | | node09 | 7 | | node22 | 8 | | node13 | 9 | | node06 | 9 | | node20 | 10 | | node17 | 10 | | node14 | 10 | | node21 | 10 | | node12 | 10 | | node18 | 11 | | node15 | 11 | | node02 | 12 | | node19 | 12 | | node04 | 12 | | node01 | 12 | | node05 | 13 | | node03 | 13 | | node16 | 15 | +-----------+-----------+ COMMANDS TO BE DONE: +-----+-----------+---------+-------+---------+----------+---------------------+ | id | node_name | node_ip | job_id| n_links | received | updated_at | +-----+-----------+---------+-------+---------+----------+---------------------+ | 266 | node17 | node17 | ad062 | 500 | 0 | 2018-01-05 08:07:17 | | 267 | node11 | node11 | ab531 | 500 | 0 | 2018-01-05 08:07:22 | +-----+-----------+---------+-------+---------+----------+---------------------+ COMMANDS IN PROGRESS: +-----+-----------+---------+-------+---------+----------+---------------------+ | id | node_name | node_ip | job_id| n_links | received | updated_at | +-----+-----------+---------+-------+---------+----------+---------------------+ | 244 | node14 | node14 | 5e1b6 | 500 | 1 | 2018-01-05 07:55:04 | | 245 | node06 | node06 | d0235 | 500 | 1 | 2018-01-05 07:56:02 | | 246 | node13 | node13 | c82fd | 500 | 1 | 2018-01-05 07:56:04 | | 249 | node09 | node09 | 1d553 | 500 | 1 | 2018-01-05 07:59:04 | | 250 | node12 | node12 | 9176f | 500 | 1 | 2018-01-05 07:59:03 | | 251 | node22 | node22 | 1c8ae | 500 | 1 | 2018-01-05 08:00:03 | | 252 | node15 | node15 | 3ca50 | 500 | 1 | 2018-01-05 08:01:04 | | 253 | node18 | node18 | 8836c | 500 | 1 | 2018-01-05 08:01:03 | | 254 | node21 | node21 | 091f7 | 500 | 1 | 2018-01-05 08:01:03 | | 255 | node16 | node16 | 6475d | 500 | 1 | 2018-01-05 08:02:04 | | 256 | node19 | node19 | 489b3 | 500 | 1 | 2018-01-05 08:03:04 | | 257 | node04 | node04 | 9fd5a | 500 | 1 | 2018-01-05 08:03:02 | | 258 | node05 | node05 | 49b9f | 500 | 1 | 2018-01-05 08:03:02 | | 259 | node01 | node01 | 579a7 | 500 | 1 | 2018-01-05 08:04:02 | | 260 | node20 | node20 | d3fe8 | 500 | 1 | 2018-01-05 08:05:03 | | 261 | node08 | node08 | ff1c7 | 500 | 1 | 2018-01-05 08:05:04 | | 262 | node03 | node03 | b1165 | 500 | 1 | 2018-01-05 08:06:02 | | 263 | node02 | node02 | 7d138 | 500 | 1 | 2018-01-05 08:06:02 | | 264 | node10 | node10 | 4ad65 | 500 | 1 | 2018-01-05 08:06:04 | | 265 | node07 | node07 | e6501 | 500 | 1 | 2018-01-05 08:06:04 | +-----+-----------+---------+-------+---------+----------+---------------------+ LINKS TO BE PROCESSED: +-------------+ | count(link) | +-------------+ | 881999 | +-------------+ LINKS IN PROCESS: +-------------+ | count(link) | +-------------+ | 10000 | +-------------+ LINKS DONE +-------------+ | count(link) | +-------------+ | 108000 | +-------------+ gomista@cserver:~/mista$ The whole process took about 20 hours. Who cares, in money it is: $ 0.067 20 hours + $ 0.011 20 hours * 20 cars or 5.74 in the currency of the enemy or about 350 in our native rubles. The spent 20 hours and 350 rubles bore fruit in the form of an over million files weighing 30GB.

The list of users has been downloaded by this script. In fact, this is simply a search of user pages from 1 to 200,000. In fact, there are fewer users, however, for some reason, users with an ID above 100,000 were found, so it was decided to download with a margin:

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 import base64 import selenium from selenium import webdriver from selenium.webdriver.common.keys import Keys from selenium.webdriver.support.ui import WebDriverWait from bs4 import BeautifulSoup from urllib import quote from xvfbwrapper import Xvfb import sys import codecs import binascii import os import datetime print(sys.getdefaultencoding()) reload(sys) sys.setdefaultencoding("utf-8") print(sys.getdefaultencoding()) if len(sys.argv) == 3: N_START = int(sys.argv[1]) N_END = int(sys.argv[2]) else: N_START = 1 N_END = 2 URL_TO_SAVE = 'users' if not os.path.exists(URL_TO_SAVE): os.makedirs(URL_TO_SAVE) vdisplay = Xvfb() vdisplay.start() def _convert(param): if isinstance(param, str): return param.decode('utf-8') else: return param def get_driver(): url = "http://www.forum.mista.ru/index.php" driver = webdriver.Chrome() return driver def authenticate(url, driver): driver.get(url) username = " " password = "11" uname = driver.find_element_by_name("user_name") uname.send_keys(_convert(username)) passw = driver.find_element_by_name("user_password") passw.send_keys(password) submit_button = driver.find_element_by_class_name("sendbutton").click() url_edit = "http://www.forum.mista.ru/users.php?action=edit" driver.get(url_edit) a = driver.find_element_by_xpath("//a[@href='#tab3']") a.click() topics = driver.find_element_by_name("topics_per_page") topics.clear() topics.send_keys(99) section = driver.find_element_by_name("column_forum") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_replies") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("show_topic_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_author") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_updated") if not section.is_selected(): section.click() submit_button = driver.find_element_by_name("Submit").click() base_url = 'http://www.forum.mista.ru/' print("getting driver") driver = get_driver() print("logging") authenticate(base_url, driver) def save_user_page(page, driver, n=0): links_list = list() print('getting page: '+page) driver.get(page) print('done') html = driver.page_source.replace('\t', ' ').replace('\n', ' ') .replace('\r', ' ') filename = URL_TO_SAVE + '/' + '{0:0>10}'.format(n) + '_' + binascii.hexlify(page) + '.txt' file = open(filename,'w') file.write(html + '\n') file.close() limit = 100 for i in range(N_START, N_END): current_url = 'http://www.forum.mista.ru/users.php?id=' + str(i) n = i save_user_page(current_url, driver, n) vdisplay.stop() ( ) .

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 import base64 import selenium from selenium import webdriver from selenium.webdriver.common.keys import Keys from selenium.webdriver.support.ui import WebDriverWait from bs4 import BeautifulSoup from urllib import quote from xvfbwrapper import Xvfb import sys import codecs import binascii import os import datetime print(sys.getdefaultencoding()) reload(sys) sys.setdefaultencoding("utf-8") print(sys.getdefaultencoding()) URL_TO_SAVE = 'links_backward' if not os.path.exists(URL_TO_SAVE): os.makedirs(URL_TO_SAVE) vdisplay = Xvfb() vdisplay.start() def _convert(param): if isinstance(param, str): return param.decode('utf-8') else: return param def get_driver(): url = "http://www.forum.mista.ru/index.php" driver = webdriver.Chrome() return driver def authenticate(url, driver): driver.get(url) username = " " password = "11" uname = driver.find_element_by_name("user_name") uname.send_keys(_convert(username)) passw = driver.find_element_by_name("user_password") passw.send_keys(password) submit_button = driver.find_element_by_class_name("sendbutton").click() url_edit = "http://www.forum.mista.ru/users.php?action=edit" driver.get(url_edit) a = driver.find_element_by_xpath("//a[@href='#tab3']") a.click() topics = driver.find_element_by_name("topics_per_page") topics.clear() topics.send_keys(99) section = driver.find_element_by_name("column_forum") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_replies") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("show_topic_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_author") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_updated") if not section.is_selected(): section.click() submit_button = driver.find_element_by_name("Submit").click() base_url = 'http://www.forum.mista.ru/' print("getting driver") driver = get_driver() print("logging") authenticate(base_url, driver) def save_links_list_on_page(page, driver, n=0): links_list = list() print('getting page: '+page) driver.get(page) print('done') html = driver.page_source soup = BeautifulSoup(html, "lxml") tr_list = soup.findAll('tr', attrs = {'data-topic_id' : True}) for tr_element in tr_list: topic_id = tr_element['data-topic_id'] tr_element_1 = tr_element.findAll('td', { 'class' : 'cc' }) section = tr_element_1[0].getText().replace('\t', '') length = tr_element_1[1].getText().replace('\t', '') tr_element_2 = tr_element.find('a', { 'class' : 'agb' }) title = tr_element_2.getText().replace('\t', '') tr_element_2_1 = tr_element.find('a', { 'class' : 'userlink' }) user_link = tr_element_2_1['href'] user_name = tr_element_2_1.getText().replace('\t', '') tr_element_2_2 = tr_element.find('a', { 'class' : 'sectionlink' }) subsection = '' if tr_element_2_2: subsection = tr_element_2_2.getText().replace('\t', '') classes = ' '.join(tr_element_2['class']).replace('\t', '') link = tr_element_2['href'].replace('\t', '') tr_element_3 = tr_element.find('a', { 'class' : 'sectionlink-gray' }) link_attributes = '%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s' % (topic_id, link, section, subsection, length, title, user_link, user_name, classes) links_list.append(link_attributes) next_link_tag = soup.find('a', text='<<') if next_link_tag: nex_page_link = base_url+next_link_tag['href'] else: nex_page_link = '' filename = URL_TO_SAVE + '/' + '{0:0>10}'.format(n) + '_' + binascii.hexlify(page) + '.txt' file = open(filename,'w') for link in links_list: file.write(link + '\n') file.close() return nex_page_link current_url = 'http://www.forum.mista.ru/index.php?id=30309&after=2000/07/06_14:17:00' n = 1 while current_url != '': current_url = save_links_list_on_page(current_url, driver, n) n += 1 print('next page to process: '+current_url) vdisplay.stop() #!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 import base64 import selenium from selenium import webdriver from selenium.webdriver.common.keys import Keys from selenium.webdriver.support.ui import WebDriverWait from bs4 import BeautifulSoup from urllib import quote from xvfbwrapper import Xvfb import sys import codecs import binascii import os import datetime import re from dateutil import parser from pytz import timezone reload(sys) sys.setdefaultencoding("utf-8") URL_TO_SAVE = 'users_bans' if not os.path.exists(URL_TO_SAVE): os.makedirs(URL_TO_SAVE) vdisplay = Xvfb() vdisplay.start() def get_empty_ban(): empty_ban = {} empty_ban['ban_user_id'] = '' empty_ban['ban_type'] = '' empty_ban['ban_user_ip'] = '' empty_ban['ban_section'] = '' empty_ban['ban_subsection'] = '' empty_ban['ban_date'] = '' empty_ban['ban_end_date'] = '' empty_ban['ban_moderator_id'] = '' empty_ban['ban_reason'] = '' empty_ban['ban_topic_id'] = '' return empty_ban def get_list_of_users_on_page(source): tz = timezone('Europe/Moscow') fmt = '%Y-%m-%d %H:%M:%S %Z%z' def parse_message_time(str_message_time): message_time = parser.parse(str_message_time) message_time = tz.localize(message_time) return message_time.strftime(fmt) soup = BeautifulSoup(source, "html.parser") table = soup.find('table', { 'bgcolor' : '#CCCCCC' }) tr_elements = table.findAll('tr', { 'class' : 'active' }) ban_list = list() for user_element in tr_elements: ban = get_empty_ban() tds = user_element.findAll('td') ban['ban_user_id'] = tds[0].find('a')['data-user_id'] ban['ban_type'] = tds[1].getText() ban['ban_user_ip'] = tds[2].getText() ban['ban_section'] = tds[3].getText() ban['ban_subsection'] = tds[4].getText() ban['ban_date'] = parse_message_time(tds[8].getText()) ban['ban_end_date'] = parse_message_time(tds[5].getText()) ban['ban_moderator_id'] = tds[7].find('a')['href'].split('=')[1] ban['ban_reason'] = tds[9].getText() ban['ban_topic_id'] = tds[10].getText() print ('%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s' % ( ban['ban_user_id'].encode('utf-8') , ban['ban_type'].encode('utf-8') , ban['ban_user_ip'].encode('utf-8') , ban['ban_section'].encode('utf-8') , ban['ban_subsection'].encode('utf-8') , ban['ban_date'].encode('utf-8') , ban['ban_end_date'].encode('utf-8') , ban['ban_moderator_id'].encode('utf-8') , ban['ban_reason'].encode('utf-8') , ban['ban_topic_id'])) ban_list.append(ban) return ban_list def _convert(param): if isinstance(param, str): return param.decode('utf-8') else: return param def get_driver(): url = "http://www.forum.mista.ru/index.php" driver = webdriver.Chrome() return driver def authenticate(url, driver): driver.get(url) username = " " password = "11" uname = driver.find_element_by_name("user_name") uname.send_keys(_convert(username)) passw = driver.find_element_by_name("user_password") passw.send_keys(password) submit_button = driver.find_element_by_class_name("sendbutton").click() url_edit = "http://www.forum.mista.ru/users.php?action=edit" driver.get(url_edit) a = driver.find_element_by_xpath("//a[@href='#tab3']") a.click() topics = driver.find_element_by_name("topics_per_page") topics.clear() topics.send_keys(99) section = driver.find_element_by_name("column_forum") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_replies") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("show_topic_section") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_author") if not section.is_selected(): section.click() section = driver.find_element_by_name("column_updated") if not section.is_selected(): section.click() submit_button = driver.find_element_by_name("Submit").click() base_url = 'http://www.forum.mista.ru/' driver = get_driver() authenticate(base_url, driver) def save_links_list_on_page(page, driver, n=0): driver.get(page) ban_list = get_list_of_users_on_page(driver.page_source) html = driver.page_source.replace('\t', ' ').replace('\n', ' ') .replace('\r', ' ').encode('utf-8') soup = BeautifulSoup(html, "html.parser") noindex_tag = soup.find('noindex') a_tag =noindex_tag.findAll('a') if len(a_tag) == 1: link_to_return = '' else: link_to_return = base_url+a_tag[1]['href'] return link_to_return current_url = 'http://www.forum.mista.ru/ban_list.php' n = 1 while current_url != '': current_url = save_links_list_on_page(current_url, driver, n) n += 1 if current_url == '' or current_url == 'http://www.forum.mista.ru/ban_list.php?next=': break vdisplay.stop() 4 — , - , .

" ", . 1 20 data . Google Cloud Storage , - , . , , , GCS .

, :

cat * > combo :

-bash: /bin/cat: Argument list too long , :

for f in *.txt; do (cat "${f}") >> ~/combo; done; , - . , , :

echo * | xargs paste -s -d \n > ~/foo.txt — . .

:

- 30

- 500 200 ( )

- 100

- 500

.

Parsing stage

:

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 from bs4 import BeautifulSoup from dateutil import parser from pytz import timezone import datetime import re import sys reload(sys) sys.setdefaultencoding("utf-8") def get_empty_message(): message = {} message['message_id'] = '' message['message_date_time'] = '' message['message_user_id'] = '' message['message_user_name'] = '' message['message_user_id'] = '' message['message_user_class'] = '' message['message_text'] = '' message['message_reply_to'] = '' message['message_external_links'] = '' return message def get_list_of_messages_on_page(source): tz = timezone('Europe/Moscow') fmt = '%Y-%m-%d %H:%M:%S %Z%z' def parse_message_time(str_message_time): str_message_time = '-'.join(str_message_time.split('-')[-2:]) message_time = parser.parse(str_message_time) message_time = tz.localize(message_time) return message_time.strftime(fmt) soup = BeautifulSoup(source, "html.parser") message_elements = soup.findAll('tr', id=re.compile("^message_\d+")) message_list = list() for message_element in message_elements: message = {} # message info message['message_id'] = int(re.sub("message_", "", message_element['id'])) message_date_time = message_element .find('div', { 'class' : 'message-info' }) .getText().replace(u'\xa0', u'') message['message_date_time'] = parse_message_time(message_date_time) # message user info user_info_element = message_element .find('td', id=re.compile("^tduser\d+")) .find('a', attrs = {'data-user_id' : True}) if user_info_element: message['message_user_id'] = user_info_element['data-user_id'] message['message_user_name'] = user_info_element['data-user_name'] .replace('\t','') message['message_user_id'] = user_info_element['href'] .replace('users.php?id=','') message['message_user_class'] = ' '.join(user_info_element['class']) .replace('\t','') else: user_info_element = message_element .find('span', { 'class' : 'anonym-user' }) message['message_user_id'] = '' message['message_user_name'] = user_info_element.getText() .replace('\t','') message['message_user_id'] = '' message['message_user_class'] = 'anonym-user' # message content message_text_element = message_element .find('div', { 'class' : 'message-text' }) if not message_text_element: message['message_text'] = '' message['message_reply_to'] = '' message['message_external_links'] = '' else: answer_link_elements = message_text_element .findAll('a', { 'class' : 'answer-link' }) for answer_link_element in answer_link_elements: answer_link_element.decompose() inner_link_elements = message_text_element .findAll('a', { 'class' : 'interlink', 'data-rel' : re.compile("^#\d+") }) inner_link_list = list() for inner_link_element in inner_link_elements: inner_link_list.append(inner_link_element.extract().getText() .encode('utf-8')) other_link_elements = message_text_element.findAll('a') other_link_list = list() for other_link_element in other_link_elements: other_link = other_link_element.extract()['href'] other_link_list.append(other_link.encode('utf-8')) message_text = message_text_element.getText() message_text = message_text.replace('\t',' ').replace('\n',' ') .replace('()','').strip() message['message_text'] = message_text message['message_reply_to'] = ' '.join(inner_link_list) message['message_external_links'] = ' '.join(other_link_list) message_list.append(message) return message_list if __name__ == "__main__": for line in sys.stdin: try: topic_id, page_number, link, source = line.strip().split('\t') except Exception as e: continue if source == '<html xmlns="http://www.w3.org/1999/xhtml"><head></head><body> .</body></html>': message_list = list() message = get_empty_message() message_list.append(message) else: try: message_list = get_list_of_messages_on_page(source) except Exception as e: message_list = list() message = get_empty_message() message['message_user_name'] = 'ERRORERRORERROR' message['message_text'] = str(e) message_list.append(message) for message in message_list: # 12 columns print ('%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s' % ( topic_id , page_number , link , message['message_id'] , message['message_date_time'].encode('utf-8') , message['message_user_name'].encode('utf-8') , message['message_user_id'].encode('utf-8') , message['message_user_class'].encode('utf-8') , message['message_text'].encode('utf-8') , message['message_reply_to'].encode('utf-8') , message['message_external_links'].encode('utf-8'))) , , tsv. BeautifulSoup. , , . .

:

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 from bs4 import BeautifulSoup from dateutil import parser from pytz import timezone import datetime import re import sys reload(sys) sys.setdefaultencoding("utf-8") def get_empty_user(): empty_user = {} empty_user['user_id'] = '' empty_user['user_name'] = '' empty_user['user_full_name'] = '' empty_user['user_email'] = '' empty_user['user_contacts'] = '' empty_user['user_url'] = '' empty_user['user_city_country'] = '' empty_user['user_dob'] = '' empty_user['user_timezone'] = '' empty_user['user_gender'] = '' empty_user['user_position'] = '' empty_user['user_achievement'] = '' empty_user['user_interests'] = '' empty_user['user_forum_role'] = '' empty_user['user_registration'] = '' empty_user['user_messages'] = '' empty_user['user_topics'] = '' return empty_user months_dic = {} months_dic[''] = 'January' months_dic[''] = 'February' months_dic[''] = 'March' months_dic[''] = 'April' months_dic[''] = 'May' months_dic[''] = 'June' months_dic[''] = 'July' months_dic[''] = 'August' months_dic[''] = 'September' months_dic[''] = 'October' months_dic[''] = 'November' months_dic[''] = 'December' def get_user_info_on_page(source): fmt = '%Y-%m-%d' def parse_str_date(str_date): if str_date == '': return ''; try: date_str = str_date.split(';')[0] if date_str[4:5] == ' ': dd = '01' mm = 'January' yy = date_str[:4] date = parser.parse(' '.join([dd, mm, yy])) else: dd, mm, yy = date_str.split(' ') date = parser.parse(' '.join([dd, months_dic[mm], yy])) return date.strftime(fmt) except Exception as e: return '' user = get_empty_user() soup = BeautifulSoup(source, "html.parser") id_tag = soup.find('a', href=re.compile('index.php\?user_id=\d+')) if id_tag: user['user_id'] = id_tag['href'].split('=')[1].encode('utf-8').strip() main_table = soup.findAll('table', { 'class' : 'table' }) main_table = main_table[len(main_table)-1] main_table_rows = main_table.findAll('tr') user['user_name'] = main_table_rows[0].findAll('td')[1].getText() .encode('utf-8').strip() user['user_full_name'] = main_table_rows[1].findAll('td')[1].getText() .encode('utf-8').strip() user['user_email'] = main_table_rows[3].findAll('td')[1].getText() .encode('utf-8').strip() user['user_contacts'] = main_table_rows[4].findAll('td')[1].getText() .encode('utf-8').strip() user['user_url'] = main_table_rows[5].findAll('td')[1].getText() .encode('utf-8').strip() user['user_city_country'] = main_table_rows[6].findAll('td')[1].getText() .encode('utf-8').strip() str_dob = main_table_rows[7].findAll('td')[1].getText().encode('utf-8').strip() user['user_dob'] = parse_str_date(str_dob) user['user_timezone'] = main_table_rows[8].findAll('td')[1].getText() .encode('utf-8').strip() user['user_gender'] = main_table_rows[9].findAll('td')[1].getText() .encode('utf-8').strip() user['user_position'] = main_table_rows[10].findAll('td')[1].getText() .encode('utf-8').strip() user['user_achievement'] = main_table_rows[11].findAll('td')[1].getText() .encode('utf-8').strip() user['user_interests'] = main_table_rows[12].findAll('td')[1].getText() .encode('utf-8').strip() user['user_forum_role'] = main_table_rows[13].findAll('td')[1].getText() .encode('utf-8').strip() str_reg = main_table_rows[14].findAll('td')[1].getText() .encode('utf-8').strip() user['user_registration'] = parse_str_date(str_reg) user['user_messages'] = main_table_rows[15].findAll('td')[1].getText() .encode('utf-8').strip() user['user_topics'] = main_table_rows[16].findAll('td')[1].getText() .encode('utf-8').strip() return user if __name__ == "__main__": for line in sys.stdin: try: source = line.strip() except Exception as e: continue if source == '' or source == '<html xmlns="http://www.w3.org/1999/xhtml"><head></head><body> : id .</body></html>': user = get_empty_user() else: user = get_empty_user() try: user = get_user_info_on_page(source) except Exception as e: user = get_empty_user() user['user_name'] = 'ERRORERRORERROR' user['user_full_name'] = str(e) print ('%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s' % ( user['user_id'] , user['user_name'] , user['user_full_name'] , user['user_email'] , user['user_contacts'] , user['user_url'] , user['user_city_country'] , user['user_dob'] , user['user_timezone'] , user['user_gender'] , user['user_position'] , user['user_achievement'] , user['user_interests'] , user['user_forum_role'] , user['user_registration'] , user['user_messages'] , user['user_topics'])) .

Hadoop and distributed computing

Hadoop MapReduce. MapReduce WordCount , , . GCP , distributed computing .

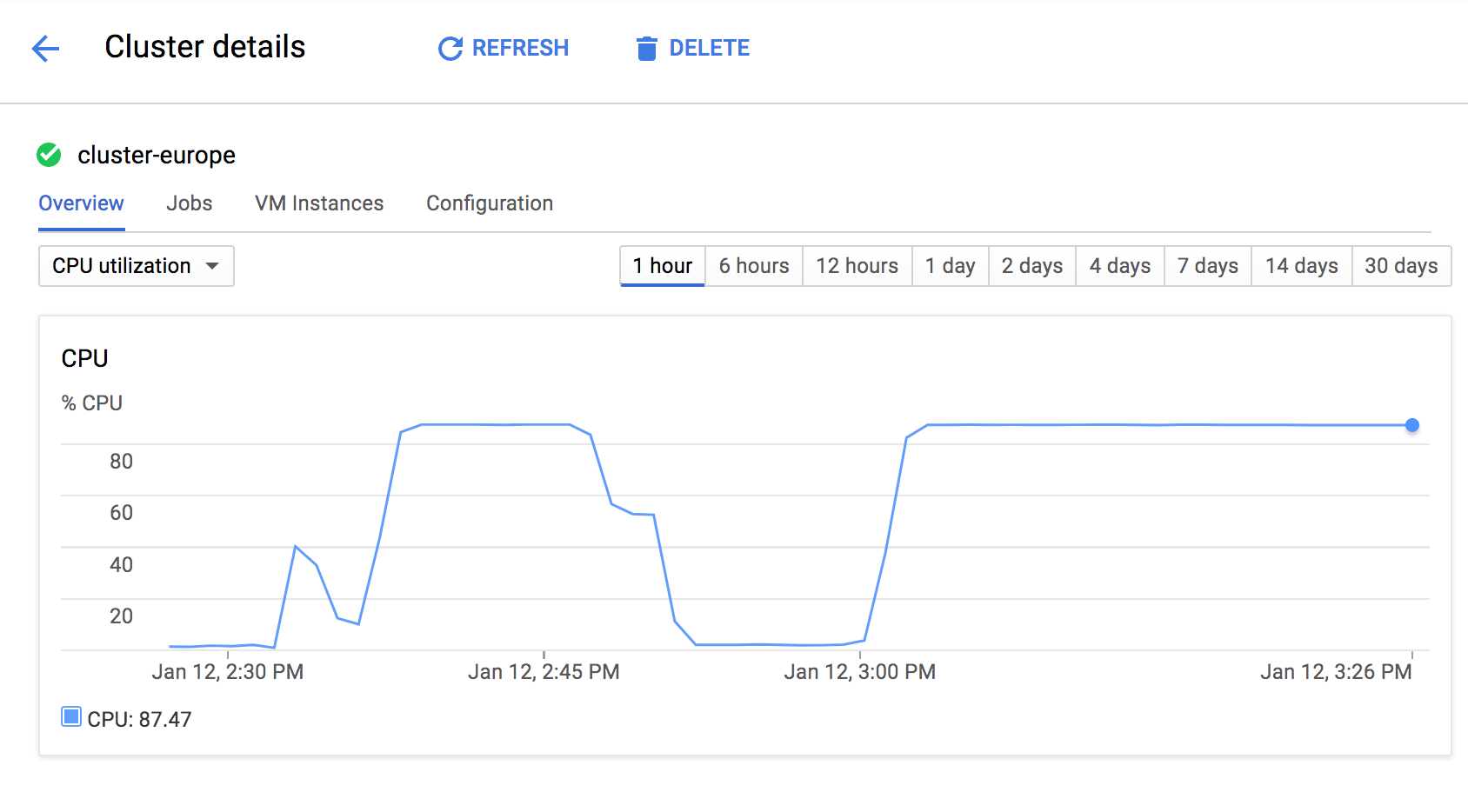

GCP DataProc. , ( High Availability) . 2-3 , (Hadoop, HDFS, Spark etc). . — job . , Hadoop c Google Storage Platform HDSF (.. HDFS ). — , job , . . Those. 2 5 20 30 . , , ?

20 n1-standard-1 (1CPUs 3.75Gb), . :

#! /bin/bash gcloud dataproc clusters create cluster-west \ --region europe-west1 \ --zone europe-west1-b \ --bucket mistabucket-west \ --subnet default \ --master-machine-type n1-standard-1 \ --master-boot-disk-size 100 \ --num-workers 20 \ --worker-machine-type n1-standard-1 \ --worker-boot-disk-size 100 \ --scopes 'https://www.googleapis.com/auth/cloud-platform' \ --project new-mista-project \ --initialization-actions 'gs://mistabucket-europe/install_environment.sh' preemptible , hdfs .3 ( , -).

, Google Cloud Shell:

#!/bin/bash PARSER_FILE="gs://mistabucket-west/mapper_parser.py" IN_DIR="gs://mistabucket-west/grabbing_results_html/messages_big" OUT_DIR="gs://mistabucket-west/output_mid" gsutil rm -r ${OUT_DIR} > /dev/null gsutil cp mapper_parser.py ${PARSER_FILE} gcloud dataproc jobs submit hadoop \ --cluster cluster-west \ --region europe-west1 \ --jar file:///usr/lib/hadoop-mapreduce/hadoop-streaming.jar \ -- \ -D mapred.jab.name="Parsing mista pages job 1 (parsing)" \ -files ${PARSER_FILE} \ -mapper mapper_parser.py \ -reducer 'cat' \ -input ${IN_DIR} \ -output ${OUT_DIR} IN_DIR="gs://mistabucket-west/output_mid" OUT_DIR="gs://mistabucket-west/grabbing_results_tsv/messages_dirty" gsutil rm -r ${OUT_DIR} > /dev/null gcloud dataproc jobs submit hadoop \ --cluster cluster-west \ --region europe-west1 \ --jar file:///usr/lib/hadoop-mapreduce/hadoop-streaming.jar \ -- \ -D mapred.jab.name="Parsing mista pages job 2 (sorting)" \ -mapper 'cat' \ -reducer 'sort -k1,1n -k2,2n -k4,4n' \ -input ${IN_DIR} \ -output ${OUT_DIR} gsutil rm -r ${IN_DIR} > /dev/null , — .

, :

#!/bin/bash IN_DIR="gs://mistabucket-west/grabbing_results_html/messages" OUT_DIR="gs://mistabucket-west/output_mid" OUT_LOG=out.$(date +"%s%6N").log gsutil rm -r ${OUT_DIR} > /dev/null hadoop jar /usr/lib/hadoop-mapreduce/hadoop-streaming.jar \ -D mapred.jab.name="Parsing mista pages job 1 (parsing)" \ -files mapper_parser.py \ -mapper mapper_parser.py \ -reducer 'cat' \ -input ${IN_DIR} \ -output ${OUT_DIR} > /dev/null 2>${OUT_LOG} IN_DIR="gs://mistabucket-west/output_mid" OUT_DIR="gs://mistabucket-west/grabbing_results_tsv/messages_dirty" gsutil rm -r ${OUT_DIR} > /dev/null hadoop jar /usr/lib/hadoop-mapreduce/hadoop-streaming.jar \ -D mapred.jab.name="Parsing mista pages job 2 (sorting)" \ -mapper 'cat' \ -reducer 'sort -k1,1n -k2,2n -k4,4n' \ -input ${IN_DIR} \ -output ${OUT_DIR} gsutil rm -r ${IN_DIR} > /dev/null - .

:

:

CPU[######################################################################93.8%] Mem[|||||||||||||||||||||||||||||||||||||||||||#********** 2112/3711MB] Swp[ 0/0MB] PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command 10054 yarn 20 0 60432 36780 8224 R 25.0 1.0 3:04.25 python /hadoop... 10182 yarn 20 0 64908 41096 8156 R 25.0 1.1 2:28.96 python /hadoop... 10257 yarn 20 0 70280 46392 8288 R 25.0 1.2 2:17.99 python /hadoop... 10982 igab 20 0 25244 4376 2940 R 16.7 0.1 0:00.02 htop -C 10015 yarn 20 0 2420M 394M 26584 S 8.3 10.6 0:01.21 /usr/lib/jvm/j... 10039 yarn 20 0 2420M 394M 26584 R 8.3 10.6 0:00.85 /usr/lib/jvm/j... 10008 yarn 20 0 2420M 394M 26584 S 8.3 10.6 0:13.12 /usr/lib/jvm/j... 1 root 20 0 29640 5872 3104 S 0.0 0.2 0:06.54 /sbin/init 144 root 20 0 41524 6392 4012 S 0.0 0.2 0:00.40 /lib/systemd/s... 147 root 20 0 40808 3300 2772 S 0.0 0.1 0:00.07 /lib/systemd/s... 259 root 20 0 25400 7856 892 S 0.0 0.2 0:00.00 dhclient -v -p... 314 root 20 0 37080 2728 2296 S 0.0 0.1 0:00.00 /sbin/rpcbind ... 323 statd 20 0 37280 3060 2468 S 0.0 0.1 0:00.00 /sbin/rpc.stat... 337 root 20 0 23356 204 4 S 0.0 0.0 0:00.00 /usr/sbin/rpc.... 338 root 20 0 27476 2732 2500 S 0.0 0.1 0:00.01 /usr/sbin/cron... 355 root 20 0 252M 3344 2700 S 0.0 0.1 0:00.02 /usr/sbin/rsys... 356 root 20 0 252M 3344 2700 S 0.0 0.1 0:00.00 /usr/sbin/rsys... 357 root 20 0 252M 3344 2700 S 0.0 0.1 0:00.06 /usr/sbin/rsys... 339 root 20 0 252M 3344 2700 S 0.0 0.1 0:00.09 /usr/sbin/rsys... 343 messagebu 20 0 42120 3300 2920 S 0.0 0.1 0:00.27 /usr/bin/dbus-... 377 root 20 0 19856 2564 2296 S 0.0 0.1 0:00.11 /lib/systemd/s... 379 root 20 0 4256 1624 1480 S 0.0 0.0 0:00.00 /usr/sbin/acpi... 386 root 20 0 14416 1936 1784 S 0.0 0.1 0:00.00 /sbin/agetty -... 387 root 20 0 14236 2040 1888 S 0.0 0.1 0:00.00 /sbin/agetty -... 391 ntp 20 0 29168 4028 3452 S 0.0 0.1 0:00.62 /usr/sbin/ntpd... 425 root 20 0 55184 5400 4724 S 0.0 0.1 0:00.00 /usr/sbin/sshd... 431 root 20 0 53908 19460 7224 S 0.0 0.5 0:00.99 /usr/bin/pytho... 434 root 20 0 53484 19304 7224 S 0.0 0.5 0:00.43 /usr/bin/pytho... 435 root 20 0 55556 19192 7124 S 0.0 0.5 0:00.77 /usr/bin/pytho... 4 :

gomista@cluster-west-m:~/mista/mapreduce$ ./parser_pages_run.sh CommandException: No URLs matched: gs://mistabucket-west/output_mid 13/01/19 02:23:22 INFO gcs.GoogleHadoopFileSystemBase: GHFS version: 1.6.2-ha... packageJobJar: [] [/usr/lib/hadoop-mapreduce/hadoop-streaming-2.8.2.jar] /tmp... 13/01/19 02:23:22 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:23:23 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:23:25 INFO mapred.FileInputFormat: Total input files to process :... 13/01/19 02:23:25 INFO mapreduce.JobSubmitter: number of splits:238 13/01/19 02:23:25 INFO mapreduce.JobSubmitter: Submitting tokens for job: job... 13/01/19 02:23:26 INFO impl.YarnClientImpl: Submitted application application... 13/01/19 02:23:26 INFO mapreduce.Job: The url to track the job: http://cluste... 13/01/19 02:23:26 INFO mapreduce.Job: Running job: job_1516327218763_0003 13/01/19 02:23:36 INFO mapreduce.Job: Job job_1516327218763_0003 running in u... 13/01/19 02:23:36 INFO mapreduce.Job: map 0% reduce 0% ... 13/01/19 02:56:09 INFO mapreduce.Job: map 10% reduce 0% ... 13/01/19 05:52:02 INFO mapreduce.Job: map 90% reduce 0% ... 13/01/19 06:06:53 INFO mapreduce.Job: map 98% reduce 0% ... 13/01/19 06:14:38 INFO mapreduce.Job: map 99% reduce 30% 13/01/19 06:14:47 INFO mapreduce.Job: map 100% reduce 30% ... 13/01/19 06:22:58 INFO mapreduce.Job: map 100% reduce 99% 13/01/19 06:23:09 INFO mapreduce.Job: map 100% reduce 100% 13/01/19 06:23:14 INFO mapreduce.Job: Job job_1516327218763_0003 completed su... 13/01/19 06:23:14 INFO mapreduce.Job: Counters: 55 File System Counters FILE: Number of bytes read=6430372113 FILE: Number of bytes written=12899232930 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 GS: Number of bytes read=31919556146 GS: Number of bytes written=6347504555 GS: Number of read operations=0 GS: Number of large read operations=0 GS: Number of write operations=0 HDFS: Number of bytes read=28798 HDFS: Number of bytes written=0 HDFS: Number of read operations=238 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Killed map tasks=10 Launched map tasks=248 Launched reduce tasks=22 Rack-local map tasks=248 Total time spent by all maps in occupied slots (ms)=3439064804 Total time spent by all reduces in occupied slots (ms)=144019192 Total time spent by all map tasks (ms)=859766201 Total time spent by all reduce tasks (ms)=18002399 Total vcore-milliseconds taken by all map tasks=859766201 Total vcore-milliseconds taken by all reduce tasks=18002399 Total megabyte-milliseconds taken by all map tasks=880400589824 Total megabyte-milliseconds taken by all reduce tasks=36868913152 Map-Reduce Framework Map input records=1062462 Map output records=16818747 Map output bytes=6372056899 Map output materialized bytes=6430403397 Input split bytes=28798 Combine input records=0 Combine output records=0 Reduce input groups=957787 Reduce shuffle bytes=6430403397 Reduce input records=16818747 Reduce output records=16818747 Spilled Records=33637494 Shuffled Maps =5236 Failed Shuffles=0 Merged Map outputs=5236 GC time elapsed (ms)=188467 CPU time spent (ms)=277329350 Physical memory (bytes) snapshot=115141345280 Virtual memory (bytes) snapshot=682591932416 Total committed heap usage (bytes)=89168744448 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Input Format Counters Bytes Read=31919556146 File Output Format Counters Bytes Written=6347504555 13/01/19 06:23:14 INFO streaming.StreamJob: Output directory: gs://mistabucke... 13/01/19 06:23:17 INFO gcs.GoogleHadoopFileSystemBase: GHFS version: 1.6.2-ha... packageJobJar: [] [/usr/lib/hadoop-mapreduce/hadoop-streaming-2.8.2.jar] /tmp... 13/01/19 06:23:17 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 06:23:18 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 06:23:19 INFO mapred.FileInputFormat: Total input files to process :... 13/01/19 06:23:19 INFO mapreduce.JobSubmitter: number of splits:198 13/01/19 06:23:20 INFO mapreduce.JobSubmitter: Submitting tokens for job: job... 13/01/19 06:23:20 INFO impl.YarnClientImpl: Submitted application application... 13/01/19 06:23:20 INFO mapreduce.Job: The url to track the job: http://cluste... 13/01/19 06:23:20 INFO mapreduce.Job: Running job: job_1516327218763_0004 13/01/19 06:23:29 INFO mapreduce.Job: Job job_1516327218763_0004 running in u... 13/01/19 06:23:29 INFO mapreduce.Job: map 0% reduce 0% ... 13/01/19 06:23:56 INFO mapreduce.Job: map 19% reduce 0% ... 13/01/19 06:24:48 INFO mapreduce.Job: map 93% reduce 0% 13/01/19 06:24:49 INFO mapreduce.Job: map 97% reduce 0% 13/01/19 06:24:52 INFO mapreduce.Job: map 99% reduce 0% 13/01/19 06:24:57 INFO mapreduce.Job: map 100% reduce 0% ... 13/01/19 06:25:15 INFO mapreduce.Job: map 100% reduce 100% 13/01/19 06:26:00 INFO mapreduce.Job: Job job_1516327218763_0004 completed su... 13/01/19 06:26:00 INFO mapreduce.Job: Counters: 55 File System Counters FILE: Number of bytes read=6430372113 FILE: Number of bytes written=12893310310 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 GS: Number of bytes read=6348254123 GS: Number of bytes written=6347504555 GS: Number of read operations=0 GS: Number of large read operations=0 GS: Number of write operations=0 HDFS: Number of bytes read=18810 HDFS: Number of bytes written=0 HDFS: Number of read operations=198 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Killed map tasks=2 Launched map tasks=199 Launched reduce tasks=22 Rack-local map tasks=199 Total time spent by all maps in occupied slots (ms)=17328576 Total time spent by all reduces in occupied slots (ms)=5680336 Total time spent by all map tasks (ms)=4332144 Total time spent by all reduce tasks (ms)=710042 Total vcore-milliseconds taken by all map tasks=4332144 Total vcore-milliseconds taken by all reduce tasks=710042 Total megabyte-milliseconds taken by all map tasks=4436115456 Total megabyte-milliseconds taken by all reduce tasks=1454166016 Map-Reduce Framework Map input records=16818747 Map output records=16818747 Map output bytes=6372056899 Map output materialized bytes=6430398117 Input split bytes=18810 Combine input records=0 Combine output records=0 Reduce input groups=957787 Reduce shuffle bytes=6430398117 Reduce input records=16818747 Reduce output records=16818747 Spilled Records=33637494 Shuffled Maps =4356 Failed Shuffles=0 Merged Map outputs=4356 GC time elapsed (ms)=104202 CPU time spent (ms)=849850 Physical memory (bytes) snapshot=99081195520 Virtual memory (bytes) snapshot=580507611136 Total committed heap usage (bytes)=78608355328 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Input Format Counters Bytes Read=6348254123 File Output Format Counters Bytes Written=6347504555 13/01/19 06:26:00 INFO streaming.StreamJob: Output directory: gs://mistabucke... , , , 4 20 4 . html/xml BeautifulSoup , . , BeautifulSoup lxml etree . . :

#!/usr/bin/env python # -*- coding: utf-8 -*- # export PYTHONIOENCODING=UTF-8 from bs4 import BeautifulSoup from dateutil import parser as parserDate from pytz import timezone import datetime import re import sys from lxml import etree from io import StringIO, BytesIO reload(sys) sys.setdefaultencoding("utf-8") def get_empty_message(): message = {} message['message_id'] = '' message['message_date_time'] = '' message['message_user_id'] = '' message['message_user_name'] = '' message['message_user_link'] = '' message['message_user_class'] = '' message['message_text'] = '' message['message_reply_to'] = '' message['message_external_links'] = '' return message def get_list_of_messages_on_page(source): tz = timezone('Europe/Moscow') fmt = '%Y-%m-%d %H:%M:%S %Z%z' def parse_message_time(str_message_time): str_message_time = '-'.join(str_message_time.split('-')[-2:]) message_time = parserDate.parse(str_message_time) message_time = tz.localize(message_time) return message_time.strftime(fmt) parser = etree.HTMLParser() tree = etree.parse(StringIO(source.decode('utf-8')), parser) message_elements = tree.xpath("//tr[starts-with(@id, 'message_')]") message_list = list() for message_element in message_elements: message = get_empty_message() message['message_id'] = int(re.sub("message_", "", message_element.attrib['id'])) message_date_time = message_element .xpath(".//div[@class = 'message-info']//text()")[0] .replace(u'\xa0', u'') message['message_date_time'] = parse_message_time(message_date_time) user_info_elements = message_element .xpath(".//td[starts-with(@id, 'tduser')]/a") if len(user_info_elements) == 1: user_info_element = user_info_elements[0] message['message_user_id'] = user_info_element.attrib['data-user_id'] message['message_user_name'] = user_info_element.attrib['data-user_name'] .replace('\t','') message['message_user_link'] = user_info_element.attrib['href'] message['message_user_class'] = user_info_element.attrib['class'] .replace('\t','') else: user_info_element = message_element .xpath(".//span[@class = 'anonym-user']/text()")[0] message['message_user_id'] = '' message['message_user_name'] = user_info_element.replace('\t','') message['message_user_link'] = '' message['message_user_class'] = 'anonym-user' message_text_elements = message_element .xpath(".//div[@class = 'message-text']") if len(message_text_elements) == 1: message_text_element = message_text_elements[0] message_text = message_text_element.xpath("table/tbody/tr/td/text()") + message_text_element.xpath("text()") message['message_text'] = ''.join(message_text).replace('\t',' ') .replace('\n',' ').replace('( )','') .replace('()','').strip() #answer_link_elements = message_text_element .xpath(".//a[@class='answer-link interlink']") inner_link_elements = message_text_element .xpath(".//a[@class='interlink']") inner_link_list = list() for inner_link_element in inner_link_elements: inner_link_list.append(inner_link_element.attrib['href'] .replace('#','')) other_link_elements = message_text_element .xpath(".//a[not(@class='interlink') and not(@class='answer-link interlink')]") other_link_list = list() for other_link_element in other_link_elements: other_link_list.append(other_link_element.attrib['href']) message['message_reply_to'] = ' '.join(inner_link_list) message['message_external_links'] = ' '.join(other_link_list) else: message['message_text'] = '' message['message_user_name'] = 'HIDDEN MESSAGE' message['message_reply_to'] = '' message['message_external_links'] = '' message_list.append(message) return message_list if __name__ == "__main__": for line in sys.stdin: try: topic_id, page_number, link, source = line.strip().split('\t') except Exception as e: continue if source == '<html xmlns="http://www.w3.org/1999/xhtml"><head></head><body> .</body></html>': message_list = list() message = get_empty_message() message_list.append(message) else: try: message_list = get_list_of_messages_on_page(source) except Exception as e: message_list = list() message = get_empty_message() message['message_user_name'] = 'ERRORERRORERROR' message['message_text'] = str(e) message_list.append(message) for message in message_list: # 12 columns print ('%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s' % ( topic_id , page_number , link , message['message_id'] , message['message_date_time'] , message['message_user_name'] , message['message_user_id'] , message['message_user_class'] , message['message_text'] , message['message_reply_to'] Hadoop Streaming job . 12 :

gomista@cluster-west-m:~/mista/mapreduce$ ./parser_pages_lxml_run.sh Removing gs://mistabucket-west/output_mid/#1516326888923617... Removing gs://mistabucket-west/output_mid/_temporary/#1516326889037377... Removing gs://mistabucket-west/output_mid/_temporary/1/#1516326889036731... / [3 objects] Operation completed over 3 objects. 13/01/19 02:03:41 INFO gcs.GoogleHadoopFileSystemBase: GHFS version: 1.6.2-ha... packageJobJar: [] [/usr/lib/hadoop-mapreduce/hadoop-streaming-2.8.2.jar] /tmp... 13/01/19 02:03:42 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:03:42 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:03:46 INFO mapred.FileInputFormat: Total input files to process :... 13/01/19 02:03:48 INFO mapreduce.JobSubmitter: number of splits:238 13/01/19 02:03:48 INFO mapreduce.JobSubmitter: Submitting tokens for job: job... 13/01/19 02:03:49 INFO impl.YarnClientImpl: Submitted application application... 13/01/19 02:03:49 INFO mapreduce.Job: The url to track the job: http://cluste... 13/01/19 02:03:49 INFO mapreduce.Job: Running job: job_1516327218763_0001 13/01/19 02:04:07 INFO mapreduce.Job: Job job_1516327218763_0001 running in u... 13/01/19 02:04:07 INFO mapreduce.Job: map 0% reduce 0% ... 13/01/19 02:05:24 INFO mapreduce.Job: map 9% reduce 0% 13/01/19 02:05:30 INFO mapreduce.Job: map 10% reduce 0% ... 13/01/19 02:13:04 INFO mapreduce.Job: map 90% reduce 0% ... 13/01/19 02:14:12 INFO mapreduce.Job: map 99% reduce 0% 13/01/19 02:14:22 INFO mapreduce.Job: map 100% reduce 0% 13/01/19 02:14:29 INFO mapreduce.Job: map 100% reduce 2% ... 13/01/19 02:14:52 INFO mapreduce.Job: map 100% reduce 99% 13/01/19 02:14:53 INFO mapreduce.Job: map 100% reduce 100% 13/01/19 02:15:00 INFO mapreduce.Job: Job job_1516327218763_0001 completed su... 13/01/19 02:15:01 INFO mapreduce.Job: Counters: 56 File System Counters FILE: Number of bytes read=6193967355 FILE: Number of bytes written=12426341774 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 GS: Number of bytes read=31919556146 GS: Number of bytes written=6110532053 GS: Number of read operations=0 GS: Number of large read operations=0 GS: Number of write operations=0 HDFS: Number of bytes read=28798 HDFS: Number of bytes written=0 HDFS: Number of read operations=238 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Killed map tasks=8 Killed reduce tasks=1 Launched map tasks=246 Launched reduce tasks=23 Rack-local map tasks=246 Total time spent by all maps in occupied slots (ms)=143665988 Total time spent by all reduces in occupied slots (ms)=5776576 Total time spent by all map tasks (ms)=35916497 Total time spent by all reduce tasks (ms)=722072 Total vcore-milliseconds taken by all map tasks=35916497 Total vcore-milliseconds taken by all reduce tasks=722072 Total megabyte-milliseconds taken by all map tasks=36778492928 Total megabyte-milliseconds taken by all reduce tasks=1478803456 Map-Reduce Framework Map input records=1062462 Map output records=16828105 Map output bytes=6135355287 Map output materialized bytes=6193998639 Input split bytes=28798 Combine input records=0 Combine output records=0 Reduce input groups=957787 Reduce shuffle bytes=6193998639 Reduce input records=16828105 Reduce output records=16828105 Spilled Records=33656210 Shuffled Maps =5236 Failed Shuffles=0 Merged Map outputs=5236 GC time elapsed (ms)=120521 CPU time spent (ms)=10644810 Physical memory (bytes) snapshot=111635165184 Virtual memory (bytes) snapshot=682604023808 Total committed heap usage (bytes)=89146138624 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Input Format Counters Bytes Read=31919556146 File Output Format Counters Bytes Written=6110532053 13/01/19 02:15:01 INFO streaming.StreamJob: Output directory: gs://mistabucke... packageJobJar: [] [/usr/lib/hadoop-mapreduce/hadoop-streaming-2.8.2.jar] /tmp... 13/01/19 02:15:05 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:15:06 INFO client.RMProxy: Connecting to ResourceManager at clust... 13/01/19 02:15:06 INFO gcs.GoogleHadoopFileSystemBase: GHFS version: 1.6.2-ha... 13/01/19 02:15:08 INFO mapred.FileInputFormat: Total input files to process :... 13/01/19 02:15:08 INFO mapreduce.JobSubmitter: number of splits:199 13/01/19 02:15:08 INFO mapreduce.JobSubmitter: Submitting tokens for job: job... 13/01/19 02:15:09 INFO impl.YarnClientImpl: Submitted application application... 13/01/19 02:15:09 INFO mapreduce.Job: The url to track the job: http://cluste... 13/01/19 02:15:09 INFO mapreduce.Job: Running job: job_1516327218763_0002 13/01/19 02:15:19 INFO mapreduce.Job: Job job_1516327218763_0002 running in u... 13/01/19 02:15:19 INFO mapreduce.Job: map 0% reduce 0% ... 13/01/19 02:15:48 INFO mapreduce.Job: map 17% reduce 0% ... 13/01/19 02:16:43 INFO mapreduce.Job: map 91% reduce 0% ... 13/01/19 02:17:11 INFO mapreduce.Job: map 100% reduce 100% 13/01/19 02:17:28 INFO mapreduce.Job: Job job_1516327218763_0002 completed su... 13/01/19 02:17:29 INFO mapreduce.Job: Counters: 55 File System Counters FILE: Number of bytes read=6193967355 FILE: Number of bytes written=12420328221 FILE: Number of read operations=0 FILE: Number of large read operations=0 FILE: Number of write operations=0 GS: Number of bytes read=6111257045 GS: Number of bytes written=6110532053 GS: Number of read operations=0 GS: Number of large read operations=0 GS: Number of write operations=0 HDFS: Number of bytes read=18905 HDFS: Number of bytes written=0 HDFS: Number of read operations=199 HDFS: Number of large read operations=0 HDFS: Number of write operations=0 Job Counters Killed map tasks=2 Launched map tasks=200 Launched reduce tasks=22 Rack-local map tasks=200 Total time spent by all maps in occupied slots (ms)=18822720 Total time spent by all reduces in occupied slots (ms)=5538912 Total time spent by all map tasks (ms)=4705680 Total time spent by all reduce tasks (ms)=692364 Total vcore-milliseconds taken by all map tasks=4705680 Total vcore-milliseconds taken by all reduce tasks=692364 Total megabyte-milliseconds taken by all map tasks=4818616320 Total megabyte-milliseconds taken by all reduce tasks=1417961472 Map-Reduce Framework Map input records=16828105 Map output records=16828105 Map output bytes=6135355287 Map output materialized bytes=6193993491 Input split bytes=18905 Combine input records=0 Combine output records=0 Reduce input groups=957787 Reduce shuffle bytes=6193993491 Reduce input records=16828105 Reduce output records=16828105 Spilled Records=33656210 Shuffled Maps =4378 Failed Shuffles=0 Merged Map outputs=4378 GC time elapsed (ms)=116848 CPU time spent (ms)=914030 Physical memory (bytes) snapshot=98494140416 Virtual memory (bytes) snapshot=583041585152 Total committed heap usage (bytes)=78180368384 Shuffle Errors BAD_ID=0 CONNECTION=0 IO_ERROR=0 WRONG_LENGTH=0 WRONG_MAP=0 WRONG_REDUCE=0 File Input Format Counters Bytes Read=6111257045 File Output Format Counters Bytes Written=6110532053 13/01/19 02:17:29 INFO streaming.StreamJob: Output directory: gs://mistabucke... , 20 ...

, Spark job. ( ) , :

from pyspark import SparkContext, SparkConf sc =SparkContext() def clear_user_id(line): line[6]=line[6].replace('users.php?id=','') return line rdd = sc.textFile("gs://mistabucket-west/grabbing_results_tsv/messages_dirty/*") \ .map(lambda line: str(line.encode('utf-8')).split("\t")) \ .filter(lambda line: line[3]<>'') \ .map(lambda line: clear_user_id(line)) \ .sortBy(lambda line: (int(line[0]), int(line[1]), int(line[3]))) \ .repartition(100) \ .map(lambda line: '\t'.join(line)) \ .saveAsTextFile("gs://mistabucket-west/grabbing_results_tsv/messages") Shell :

#!/bin/bash OUT_DIR="gs://mistabucket-west/grabbing_results_tsv/messages" gsutil -m rm -r ${OUT_DIR} > /dev/null gcloud dataproc jobs submit pyspark \ file:///home/gomista/mista/pyspark/clear_messages.py \ --cluster cluster-west \ --region europe-west1 20 2 .

Making a sense

( -) Hive.

:

#!/bin/bash gcloud dataproc jobs submit hive \ --cluster cluster-west \ --region europe-west1 \ --file $1 :

CREATE EXTERNAL TABLE IF NOT EXISTS messages (topic_id INT, page_number INT, link STRING, message_id INT, message_date_time TIMESTAMP, message_user_name STRING, message_user_id STRING, message_user_class ARRAY<STRING>, message_text STRING, message_reply_to ARRAY<STRING>, message_external_links ARRAY<STRING>) COMMENT 'Data contains users messages' ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' COLLECTION ITEMS TERMINATED BY ' ' STORED AS TEXTFILE LOCATION 'gs://mistabucket-west/grabbing_results_tsv/messages'; :

SELECT COUNT(*) FROM messages; Result:

+-----------+--+ | c0 | +-----------+--+ | 16576543 | +-----------+--+ 1 row selected (51.307 seconds) " " :

SELECT DATE_FORMAT(message_date_time,'yyyy') AS year, COUNT(message_id) AS total_messages FROM messages GROUP BY DATE_FORMAT(message_date_time,'yyyy') ORDER BY year; Result:

+-------+-----------------+--+ | year | total_messages | +-------+-----------------+--+ | 2000 | 3412 | | 2001 | 4900 | | 2003 | 5854 | | 2004 | 248704 | | 2005 | 834114 | | 2006 | 1319828 | | 2007 | 1077894 | | 2008 | 1071649 | | 2009 | 1650880 | | 2010 | 1704566 | | 2011 | 1821790 | | 2012 | 2041743 | | 2013 | 1471078 | | 2014 | 1218941 | | 2015 | 834300 | | 2016 | 726237 | | 2017 | 529598 | | 2018 | 11055 | +-------+-----------------+--+ 18 rows selected (100.316 seconds) . , , 1 8.0. . 2007-2008 . , , 2009 35 . " " , " , " , " " . ( ) , , 2012 .

. 2011 , 2012, , . ? For the same reason. , 2012 , "" . - , , - " ", "" ( , ). — 2013 . , , , , , , .

2014 , . ? Right. . 1 , . ( 10 ) " ", , - " " " ", .

— : 500 2017 ( 10 ). , 5% , , , , . :

gomista@cluster-west-m:~$ cat data4 | python bar_chart.py -A # each ∎ represents a count of 665. total 531754 201701 [ 48446] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201702 [ 39747] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201703 [ 49150] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201704 [ 42766] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201705 [ 47175] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201706 [ 43566] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201707 [ 45087] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201708 [ 40789] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201709 [ 40850] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201710 [ 45386] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201711 [ 43041] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201712 [ 43595] ∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎∎ 201801 [ 2156] ∎ , , 50 100 . , , , 20 5.

— SparkSQL. SparkSQL, , Hive , hive spark-sql gcloud dataproc jobs submit hive . , :

2000 3412 2001 4900 2003 5854 2004 248704 2005 834114 2006 1319828 2007 1077894 2008 1071649 2009 1650880 2010 1704566 2011 1821790 2012 2041743 2013 1471078 2014 1218941 2015 834300 2016 726237 2017 529598 2018 11055 Time taken: 22.113 seconds, Fetched 18 row(s) — 22 SparkSQL 100 Hive. , in-memory computation MapReduce.

, — BigQuery. Google Cloud Storage, Standard SQL . BigQuery SQL . — ( , GCS) ( ). 1 10 — .

, , ( ) , BigQuery supports loading and exporting nested and repeated data in the form of JSON and Avro files . Those. message_user_class , message_reply_to , message_external_links , , . 5 :

#!/bin/bash bq load \ --field_delimiter "\t" \ --quote '' \ --source_format CSV mistadataset.messages \ gs://mistabucket-west/grabbing_results_tsv/messages/part-* \ topic_id:INTEGER,\ page_number:INTEGER,\ link:STRING,\ message_id:INTEGER,\ message_date_time:TIMESTAMP,\ message_user_name:STRING,\ message_user_id:STRING,\ message_user_class:STRING,\ message_text:STRING,\ message_reply_to:STRING,\ message_external_links:STRING :

gomista@cluster-west-m:~$ bq show mistadataset.messages Table new-mista-project:mistadataset.messages Last modified Schema Total Rows Total Bytes ----------------- ----------------------------------- ------------ ------------ 20 Jan 06:31:57 |- topic_id: integer 16576543 6013007928 |- page_number: integer |- link: string |- message_id: integer |- message_date_time: timestamp |- message_user_name: string |- message_user_id: string |- message_user_class: string |- message_text: string |- message_reply_to: string |- message_external_links: string , :

gomista@cluster-west-m:~$ bq query 'SELECT COUNT(*) FROM mistadataset.messages' Waiting on bqjob_r3390fd1a48d7adbf_000001611c99d989_1 ... (0s) Current status: D +----------+ | f0_ | +----------+ | 16576543 | +----------+ , :