How many participants can be in a WebRTC call?

Almost every business loves conferencing, especially video conferencing. Voximplant helps businesses with exactly that: video conferencing works well for us, in both regular and HD quality (for example, see the article Video Conferencing). Right now our conferences run on a peer-to-peer architecture, but soon we will tell you about a client-server solution with all the bells and whistles. In the meantime, we suggest looking at approaches to building server-based conferences with WebRTC: we have prepared a translation of a recent article from the BlogGeek.me blog. Its author is Tsahi Levent-Levi, an independent WebRTC expert, analyst and entrepreneur; in the past, Tsahi worked on VoIP and 3G projects as a developer, marketer and technical director. In short, he knows what he is talking about.

How many participants can be in a WebRTC call?

As many as you want. You can cram anywhere from one to a million participants into a WebRTC call.

Imagine that you have been asked to build a group video call, and the obvious technology choice is WebRTC. There are almost no alternatives for this task, and WebRTC also offers the best price-performance ratio. But a question arises: how many users can fit into a single WebRTC call?

At least once a week, someone says that WebRTC is a peer-to-peer technology and is hardly suitable for a large number of participants... In fact, WebRTC is great for large group calls.


Today, the main building block for group calls in WebRTC is the SFU (Selective Forwarding Unit, translator's note): a media router that receives media streams from all participants in a session and decides where to send them.

In this article I want to go over some approaches and decisions you can use when building WebRTC applications that must support large video sessions.

Analyze the complexity

The first step is to analyze the complexity of our particular case. In WebRTC (and in real-time video communications in general) it all comes down to speeds and streams:

- Speed: the resolution and bitrate we expect to achieve in our service.

- Streams: the number of streams in a session.

Let's start with an example.

Suppose you want to launch a group calling service for enterprises, maybe even a worldwide one. People will be grouped into sessions. You plan to limit group sessions to 4 people. I know that you want more, but for now I am trying to keep things simple.

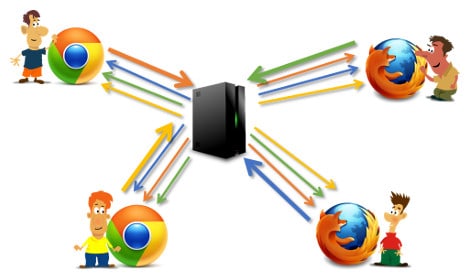

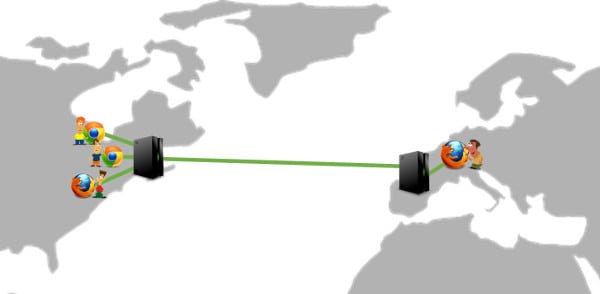

The picture above shows what a conference for 4 people should look like.

Magic squares: 720p

If the conference layout looks like a magic square (4 video tiles of the same size, translator's note), the following logic applies.

You want high-quality video. Well, everyone does. So you expect all participants to send 720p video and to use WQHD monitors (2560x1440). Suppose this requires a bitrate of 1.5 Mb/s (I am being modest here; the value may well be higher). Then:

- each session participant sends 1.5 Mb/s and receives 3 streams of 1.5 Mb/s each;

- with 4 participants, the media server has to receive 6 Mb/s and send 18 Mb/s.

As a table, it looks like this:

| Parameter | Value |
| --- | --- |
| Resolution | 720p |
| Bitrate | 1.5 Mb/s |
| Participant sends | 1.5 Mb/s (1 stream) |
| Participant receives | 4.5 Mb/s (3 streams) |
| SFU sends | 18 Mb/s (12 streams) |
| SFU receives | 6 Mb/s (4 streams) |
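The arithmetic behind this table can be sketched in a few lines of Python. This is only an illustration: the function name `grid_call_load` and the dictionary keys are made up for this example, and it assumes that every participant sends exactly one stream at a fixed bitrate and that the SFU forwards it unchanged to everyone else.

```python
def grid_call_load(participants: int, bitrate_mbps: float) -> dict:
    """Estimate the load of a call where everyone sees everyone at the same bitrate."""
    # Assumption: one upstream per participant, forwarded as-is to all the others.
    streams_in = participants
    streams_out = participants * (participants - 1)
    return {
        "participant_sends_mbps": bitrate_mbps,
        "participant_receives_mbps": bitrate_mbps * (participants - 1),
        "sfu_streams_in": streams_in,
        "sfu_streams_out": streams_out,
        "sfu_receives_mbps": bitrate_mbps * streams_in,
        "sfu_sends_mbps": bitrate_mbps * streams_out,
    }


# The 4-person 720p example from the text.
load = grid_call_load(participants=4, bitrate_mbps=1.5)
for key, value in load.items():
    print(f"{key}: {value}")
```

Changing `bitrate_mbps` or `participants` shows how quickly the outgoing side grows: the number of forwarded streams is quadratic in the number of participants.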

Magic squares: VGA

If a lower resolution is enough, you can use VGA (640x480, translator's note) and even limit the bitrate to 600 Kb/s:

| Parameter | Value |
| --- | --- |
| Resolution | VGA |
| Bitrate | 600 Kb/s |
| Participant sends | 0.6 Mb/s (1 stream) |
| Participant receives | 1.8 Mb/s (3 streams) |
| SFU sends | 7.2 Mb/s (12 streams) |
| SFU receives | 2.4 Mb/s (4 streams) |

You will probably want to avoid upscaling VGA to fit large displays: it can look awful, especially on 4K displays.

A rough estimate shows that you can fit about 3 VGA conferences “for the price” of one 720p conference (18 / 7.2 = 2.5).

In the spirit of Hangouts

But what if the conference should look different? One main participant and small videos of other participants:

I call it “in the spirit of Hangouts” because the Hangouts app is well known for this layout and was the first to use it without offering any other options.

In this case we use simulcast: every participant sends high-quality video, and the SFU decides which stream to use as the main one, forwarding it at a higher resolution and the others at a lower one.

You decide to use 720p, because after a couple of experiments you realize that lower resolutions do not look good when scaled up to large displays. As a result, you have:

- each session participant sends 2.2 Mb/s (1.5 Mb/s for the 720p stream plus roughly 700 Kb/s for the lower resolutions that are simulcast alongside it);

- each session participant receives 1.5 Mb/s from the main participant and 2 additional streams of ~300 Kb/s each for the small videos;

- with 4 participants, the media server has to receive 8.8 Mb/s and send 8.4 Mb/s.

| Parameter | Value |
| --- | --- |
| Resolution | 720p max (simulcast) |
| Bitrate | 150 Kb/s - 1.5 Mb/s |
| Participant sends | 2.2 Mb/s (1 stream) |
| Participant receives | 1.5 Mb/s (1 stream) + 0.3 Mb/s each (2 streams) |
| SFU sends | 8.4 Mb/s (12 streams) |
| SFU receives | 8.8 Mb/s (4 streams) |
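The same kind of back-of-the-envelope calculation works for the simulcast layout. The sketch below is an illustration only: `simulcast_call_load` is a made-up helper, and it assumes that each viewer gets one main stream plus a thumbnail of every other remote participant, with the bitrates taken from the example above.

```python
def simulcast_call_load(
    participants: int,
    uplink_mbps: float = 2.2,   # everything one participant sends (all simulcast layers)
    main_mbps: float = 1.5,     # bitrate of the large main video
    thumb_mbps: float = 0.3,    # bitrate of each small video
) -> dict:
    """Estimate SFU load for a 'one big video plus thumbnails' layout."""
    # Each viewer sees one main stream and a thumbnail of everyone else
    # except themselves and the main speaker.
    thumbnails = participants - 2
    participant_receives = main_mbps + thumbnails * thumb_mbps
    return {
        "participant_receives_mbps": participant_receives,
        "sfu_receives_mbps": participants * uplink_mbps,
        "sfu_sends_mbps": participants * participant_receives,
    }


load = simulcast_call_load(participants=4)
for key, value in load.items():
    print(f"{key}: {value:.1f}")
```

Note that, unlike in the magic-square case, the SFU's incoming traffic is higher than in the plain 720p layout while its outgoing traffic is much lower.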

What we learned:

Different configurations of group calls with the same number of participants exert a different load on the media server.

It was not stated explicitly, but simulcast (which we used in the Hangouts example) works great and improves the efficiency and quality of group calls.

Taking 3 scenarios of a 4-way video call (one stream from each participant, translator's note), we got the following SFU load:

| | Magic squares: 720p | Magic squares: VGA | In the spirit of Hangouts |
| --- | --- | --- | --- |
| SFU sends | 18 Mb/s | 7.2 Mb/s | 8.4 Mb/s |
| SFU receives | 6 Mb/s | 2.4 Mb/s | 8.8 Mb/s |

Homework: imagine that we want to run a session with 2 streams (one per participant, translator's note) that is broadcast to 100 people over WebRTC. Calculate the number of streams and the bandwidth you will need on the server.

How many active users can be in a WebRTC call?

Hard question.

If you use an MCU (Multipoint Control Unit, translator's note), you will get as many participants in a call as your MCU can handle.

If you use an SFU, it depends on 3 parameters:

- the complexity and performance of your media server;

- the power of the client devices available to you;

- how your infrastructure is designed and how you cascade (connect several media servers to each other, translator's note).

Let's look at these parameters now.

One scenario, different implementations

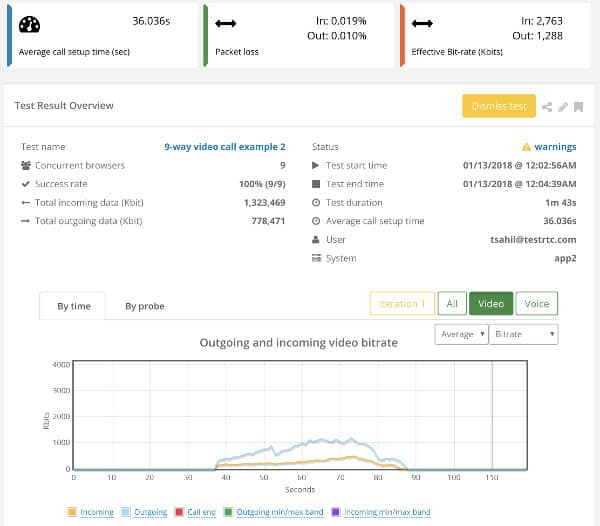

Once you get to 8-10 participants in one call, things become difficult. Here is an example from a publicly available service that I want to share.

Scenario:

- 9 participants in one session, with a “magic square” layout;

- I use testRTC to bring the users into the session, so everything is automated;

- the test runs for 1 minute, after which the session is forcibly terminated, because this is a demo;

- the demo takes into account that there are 9 people on the screen, so the resolution for everyone is reduced to VGA, yet it still ends up using 1.3 Mb/s for it;

- as a result, each browser receives about 10 Mb/s to process.

In this case, the media server decided how to limit and measure traffic.

And here is another service with an online demo, running the same scenario:

Here the average incoming bitrate for each browser is only 2.7 Mb/s, almost a quarter of the bitrate in the first service.

One scenario. Different implementations.

What about popular services?

What about popular video conferencing services using SFU? What are their limitations on the number of participants?

Here is what I managed to find:

- Google Hangouts - up to 25 participants per session. It used to be 10. When I ran the first workshop for my WebRTC training, I ran into this limit, which forced me to use other services;

- Hangouts Meet - maximum 50 people;

- Houseparty - limited to 8 participants;

- Skype - 25 members;

- appear.in - their PRO account supports up to 12 people in the room;

- Amazon Chime - 16 participants in the desktop version and up to 8 participants in the iOS version (Android is not currently supported);

- Atlassian Stride and Jitsi Meet - up to 50 participants.

Does this mean that you cannot exceed the threshold of 50 participants?

In my opinion, there is a relationship between the size of the conference and its complexity:

CPaaS size limit

CPaaS platforms that support video and group calls often limit the size of conferences. In most cases, the limit is an arbitrary number of participants that the vendor has tested. As we have seen, such limits only hold for certain scenarios, and there is no guarantee that your use case is among them.

For CPaaS platforms, these limits range from 10 to 100 participants per session. Usually, if you exceed the limit, the additional participants can join in view-only mode.

Key points

Here is what to remember:

- the larger the conference, the harder it is to implement and optimize;

- the browser is forced to run a lot of decoders, which is very resource intensive;

- mobile devices (especially old ones) cannot withstand such a load. Test on the oldest and weakest devices you plan to support, and only then decide on the maximum supported conference size;

- you can configure the SFU so that it does not send all incoming streams to all participants but selects what to send. For example, send the audio stream of only one participant to everyone else, or send only the 4 loudest audio streams.
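The “4 loudest audio streams” rule from the last point can be illustrated with a small sketch. It is not taken from any particular SFU: the function `loudest_streams` and the idea of a per-participant audio level between 0 and 1 are assumptions made for this example.

```python
import heapq


def loudest_streams(audio_levels: dict[str, float], limit: int = 4) -> list[str]:
    """Return the ids of the `limit` loudest participants, loudest first.

    `audio_levels` maps a participant id to its current audio level
    (a hypothetical 0.0-1.0 value that the media server measures).
    """
    return heapq.nlargest(limit, audio_levels, key=audio_levels.get)


levels = {"alice": 0.9, "bob": 0.1, "carol": 0.5, "dave": 0.7, "erin": 0.3}
# Only these streams would be forwarded to the other participants.
print(loudest_streams(levels, limit=3))
```

A real media server would also need to smooth the levels over time, so that the forwarded set does not flip on every short noise.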

Measure your media server

Measurements and media servers are what I have been busy with lately at testRTC. We played around a bit with Kurento earlier and plan to tinker with other media servers as well. In every project I take part in, I get asked the same question:

How many sessions / participants / streams can we fit on one media server?

Keeping in mind the speeds and streams discussed above, the answer depends very, very much on what you are doing.

If you are targeting group calls where every participant is active, you should count on 100-500 participants per media server. The exact number depends on the machine the media server runs on and the bitrate you need.

If you are aiming to broadcast one person to a large audience, using WebRTC to reduce latency, then the number of participants will be around 200-1000 people. Maybe more.

Big or small machines?

Another important point is the machine that will host the media server. Will it be a beefy server, or will you be comfortable with something smaller?

Using large, serious machines lets you serve more people and sessions on one server, so the complexity of your solution will be lower. On the other hand, if something fails (media servers do that, yes), it will affect a large number of users. And when you need to update the media server (and you definitely will), the process can be costly or very complicated.

The larger the server, the more cores it has. Because of this, the media server has to be multithreaded, which in turn makes it harder to build, debug and fix.

Using smaller servers means that you will run into scaling problems earlier, and solving them will require more elaborate algorithms and heuristics. You will also have more edge cases in load balancing.

Measure in streams, bandwidth or CPU?

How do you determine whether your media server is running at full capacity? How do you decide whether to place a new session on a new, unoccupied machine or on one that is already in use? And if you pick a machine that is already in use and new participants start joining the active session, will there be enough room for everyone?

These are not easy questions.

I have seen 3 different metrics used to decide that it is time to bring in other servers. Here they are:

CPU - when CPU utilization reaches a certain percentage, the machine is considered “full”. This works best with small servers, where the CPU is one of the first resources to run out.

Bandwidth - an SFU generates a lot of network traffic. If you use large machines, you most likely will not hit the CPU limit, but you will consume a lot of bandwidth. So you can determine the remaining capacity by monitoring bandwidth.

Streams - the difficulty with CPU and bandwidth is that the number of sessions and streams they support can vary depending on conditions. Your scaling strategy may not cope with that, and you will want more control over the calculation. This leads you to stop measuring load by CPU or bandwidth and instead build balancing rules based on the number of streams the server can handle.
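To make the three metrics concrete, here is a minimal sketch of a “is this server full?” check. Everything in it is hypothetical: the class names, the fields, and especially the threshold values, which you would have to replace with numbers from your own measurements.

```python
from dataclasses import dataclass


@dataclass
class ServerLoad:
    cpu_percent: float
    bandwidth_mbps: float
    streams: int


@dataclass
class Limits:
    # Placeholder thresholds, not recommendations.
    cpu_percent: float = 70.0
    bandwidth_mbps: float = 800.0
    streams: int = 500


def exhausted_metrics(load: ServerLoad, limits: Limits) -> list[str]:
    """Return the names of the metrics that have reached their limit."""
    checks = {
        "cpu": (load.cpu_percent, limits.cpu_percent),
        "bandwidth": (load.bandwidth_mbps, limits.bandwidth_mbps),
        "streams": (load.streams, limits.streams),
    }
    return [name for name, (value, limit) in checks.items() if value >= limit]


def has_room(load: ServerLoad, limits: Limits) -> bool:
    """A server accepts new sessions only while no metric is exhausted."""
    return not exhausted_metrics(load, limits)


big_machine = ServerLoad(cpu_percent=35.0, bandwidth_mbps=850.0, streams=120)
print(exhausted_metrics(big_machine, Limits()))
print(has_room(big_machine, Limits()))
```

The sketch checks all three metrics at once; in practice you may rely on just one of them, as described above.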


The main challenge is that whichever method you choose, you have to do the measurements yourself. I have seen many people start using testRTC when faced with this task.

Cascading one session

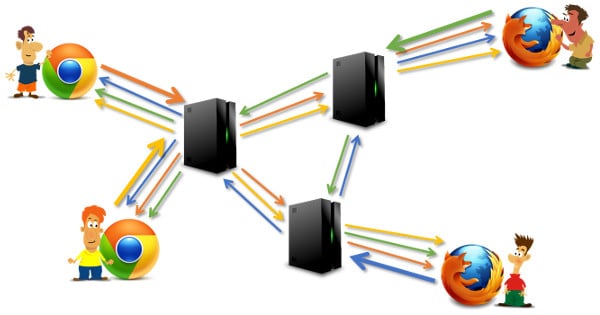

Cascading is the process of connecting one media server to another. The picture shows what I mean:

We have a 4-way group video call that is spread across 3 media servers. These servers route video and voice between each other so that the media streams reach everyone. Why would you need this?

#1 - Geographical distribution

When you run a global service built on SFUs, the very first question for each new session is: which SFU do you use? In which data center? Since we want our media servers as close to the users as possible, we either know about the new session in advance and understand where to place it, or decide using a reasonable heuristic such as geolocation: choose the data center closest to the user who created the conference.

Suppose four people take part in the call: three from New York and one from France. What happens if the guy from France is the first to join the group call?

The media server will be located in France, and 3 of the 4 participants will be far away from it. Not the best approach...

One of the solutions for such a conference is to distribute it between servers that are close to each of the participants:

Servers will need more resources to service the session, but we will have more control over the media routes, so that we can better optimize them. This will improve the quality of the media in the session.
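As an illustration of the geolocation idea, the sketch below assigns every participant to the nearest data center by great-circle distance. The data center list, the coordinates and the participant names are invented for the example; a production service would more likely rely on measured latency than on raw distance.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical data centers: name -> (latitude, longitude).
DATA_CENTERS = {
    "new-york": (40.71, -74.01),
    "paris": (48.86, 2.35),
}


def distance_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points (haversine formula)."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))


def nearest_data_center(location: tuple[float, float]) -> str:
    """Pick the data center with the smallest distance to the given location."""
    return min(DATA_CENTERS, key=lambda name: distance_km(location, DATA_CENTERS[name]))


# Three participants around New York and one in France, as in the example.
participants = {
    "ann": (40.73, -73.99),
    "bob": (40.65, -73.95),
    "carl": (40.80, -73.96),
    "denis": (45.76, 4.84),
}
assignment = {name: nearest_data_center(loc) for name, loc in participants.items()}
print(assignment)
```

With cascading, the two chosen media servers would then exchange streams with each other instead of forcing three participants to cross the ocean.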

#2 - Flexible allocation

Suppose that up to 100 participants can connect to one media server, and each group call can have up to 10 participants. Ideally, we do not want to host more than 10 sessions on one media server, so that every session has room to fill up.

But what if I tell you that the average call has only 2 participants? The distribution then looks roughly like this:

Most of the server resources are wasted. How can we fix this?

- Force people to fill their calls to the maximum in advance. This is not something you would want to do.

- Take a risk and assume that if the server is 50% used, the remaining capacity will be enough for the current conferences to grow. You still waste resources, but fewer of them. There will also be edge cases in which the server no longer has the resources for a conference to grow.

- Move sessions between media servers in an attempt to “defragment” the servers. This is as awful as it sounds (and probably just as bad for the users).

- Cascade sessions, that is, allow them to grow across different machines.

That last option is what cascading is about: reserve part of a media server's resources for hosting sessions that spill over from other media servers.
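A toy placement routine shows the cascading option in action. It is a simplified sketch, not the algorithm of any real media server: `place_participant` and the `servers` structure are invented here, each participant is assumed to cost one unit of capacity, and the inter-server link itself is not accounted for.

```python
def server_load(sessions: dict[str, int]) -> int:
    """Total number of participants currently connected to one server."""
    return sum(sessions.values())


def place_participant(session_id: str, servers: dict[str, dict[str, int]], capacity: int = 100) -> str:
    """Place one more participant of `session_id` and return the chosen server.

    `servers` maps a server name to {session id: participant count}.
    Servers already hosting the session are preferred; if they are full,
    the session is cascaded to the least loaded server that has room.
    """
    hosting = [name for name, sessions in servers.items() if session_id in sessions]
    others = sorted(
        (name for name in servers if name not in hosting),
        key=lambda name: server_load(servers[name]),
    )
    for name in hosting + others:
        if server_load(servers[name]) < capacity:
            servers[name][session_id] = servers[name].get(session_id, 0) + 1
            return name
    raise RuntimeError("no media server has free capacity")


# Tiny capacity to make the spill-over visible.
servers = {"sfu-a": {"call-1": 2}, "sfu-b": {}}
print(place_participant("call-1", servers, capacity=3))  # still fits on sfu-a
print(place_participant("call-1", servers, capacity=3))  # sfu-a is full, cascades to sfu-b
```

Because a session can grow onto another machine, servers can be packed much more tightly than the “10 sessions per server” rule allows.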

#3 - Huge conferences

If you want to create a conference with more participants than one media server can handle, cascading is your only choice.

If your media server is designed for 100 participants and you want conferences of up to 5,000 participants, you need cascading. It is not easy, which is why there are few such solutions, but it is definitely possible.

Keep in mind that in such large conferences, the media stream will not be bidirectional. You will have fewer participants sending media and more participants who only receive media. For the case of pure Broadcast, I have already described scaling problems on the Red5 Pro blog.

Conclusion

We have covered many topics in this article. Here is a list of what you need to do to determine the maximum number of users in your WebRTC calls:

- Whatever conference size you have in mind, it can be achieved with WebRTC.

  - It will be a matter of cost and of fit with the business model (which will either pay off or not).

  - The larger the conference, the harder it is to get right and the more “variables in the equation” there are.

- Estimate the complexity of what you need to support.

  - Count the incoming and outgoing streams for each device and media server.

  - Decide on the video quality (resolution and bitrate) for each stream.

- Decide which media server you will use.

  - Choose the machine that will run the media server.

  - Measure before you scale.

  - Verify that the load grows linearly with server resources.

- Decide which load metrics you will use: CPU, bandwidth, streams or something else.

- How does cascading fit into the big picture?

  - Better geolocation support.

  - Help with resource fragmentation in the cloud infrastructure.

  - Or growing conferences beyond the capabilities of a single media server.

So, what is the size of your WebRTC conferences?

Source: https://habr.com/ru/post/346924/
