Robots in the office: what you can do in 3 days instead of 6 months

It all started when our chat bot needed access to the HR system, so that it could look up employee contacts on requests like “Find someone who can fix the picnic”. In a corporate environment this goes as follows: we go to the automation department, say that we need data from the HRMS, and get the logical answer:

- Write a specification, and we will provide an API and an integration server in 6 months.

- Guys, come on! We need it fast!

- Then write a specification and a memo to the head of department, and as an URGENT job we can fit it into 3 months.

And we had 3 days. So we took another route: we asked the HR officers to add our bot to the employee list and give it access to the system. After that, the bot did what a human in its place would do.

As a result, the old joke that if programmers came to power, the entire parliament could be replaced with a simple bash script turned out to be not much of a joke. Our robots are, of course, not the most future-proof architecture, but they work. Below I'll explain what they do and where such deadlines come from.

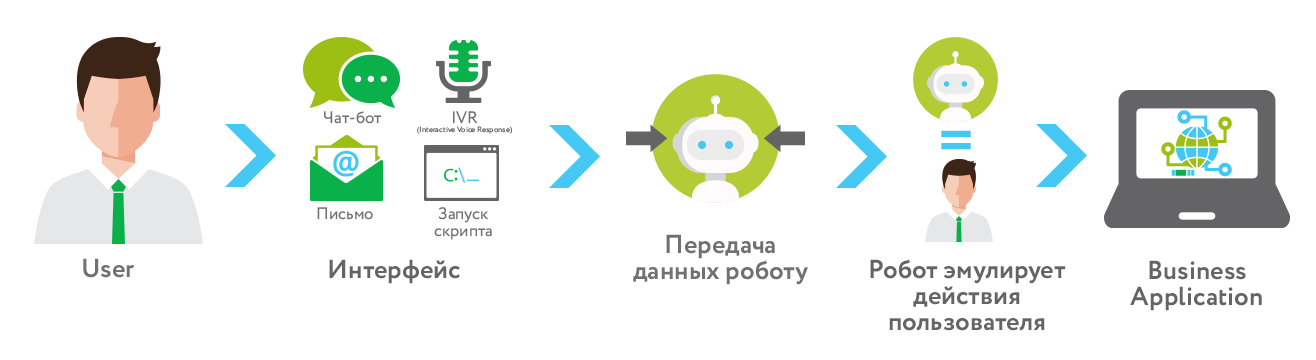

So, the core idea is very simple: you take a robot that simulates the actions of a user. For example, if we need access to the HR system, there is no need to build an integration server or obtain an API. You just teach the robot to press the necessary buttons and parse the responses. Fortunately, almost all modern interfaces are very robot-friendly: Microsoft software exposes accessibility names for its controls everywhere, along with hotkeys and recognizable icons. We simply teach the robot to press the right buttons in the right order. Automating a process this way, much like recording a macro in Word or an action in Photoshop, takes about an hour; the remaining 90% of the effort goes into testing and exception handling.
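To make the "press the right buttons in the right order" idea concrete, here is a minimal sketch. It does not drive a real application: `FakeScreen`, `find_contact`, the control names (`SearchBox`, `Search`, `ResultGrid`) and the directory entry are all hypothetical stand-ins for a window that a real robot would reach through a UI automation tool by the controls' accessibility names. The `ControlNotFound` check hints at where the exception handling goes, for example when an update removes a control the script relies on.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


class ControlNotFound(Exception):
    """The screen no longer has a control the script relies on (e.g. after an update)."""


@dataclass
class FakeScreen:
    """Hypothetical in-memory stand-in for an application window.

    A real robot would locate controls by their accessibility names through a
    UI automation tool; here a control is just a key in a dict.
    """
    controls: Dict[str, Optional[str]]
    directory: Dict[str, str] = field(default_factory=dict)  # hypothetical HR data

    def _require(self, name: str) -> None:
        if name not in self.controls:
            raise ControlNotFound(name)

    def type_text(self, name: str, text: str) -> None:
        self._require(name)
        self.controls[name] = text

    def press(self, name: str) -> None:
        self._require(name)
        if name == "Search":  # the window's own reaction to the button
            query = self.controls.get("SearchBox") or ""
            self.controls["ResultGrid"] = self.directory.get(query, "")

    def read(self, name: str) -> Optional[str]:
        self._require(name)
        return self.controls[name]


def find_contact(screen: FakeScreen, surname: str) -> Optional[str]:
    """Replay what a person would do: type a name, press Search, read the grid."""
    screen.type_text("SearchBox", surname)
    screen.press("Search")
    return screen.read("ResultGrid")


screen = FakeScreen(
    controls={"SearchBox": "", "Search": None, "ResultGrid": ""},
    directory={"Ivanov": "Ivan Ivanov, ext. 1234"},
)
print(find_contact(screen, "Ivanov"))  # Ivan Ivanov, ext. 1234
```

The happy path is three lines. Most of the real work lies in checks like `_require` and in deciding what to do when they fail.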


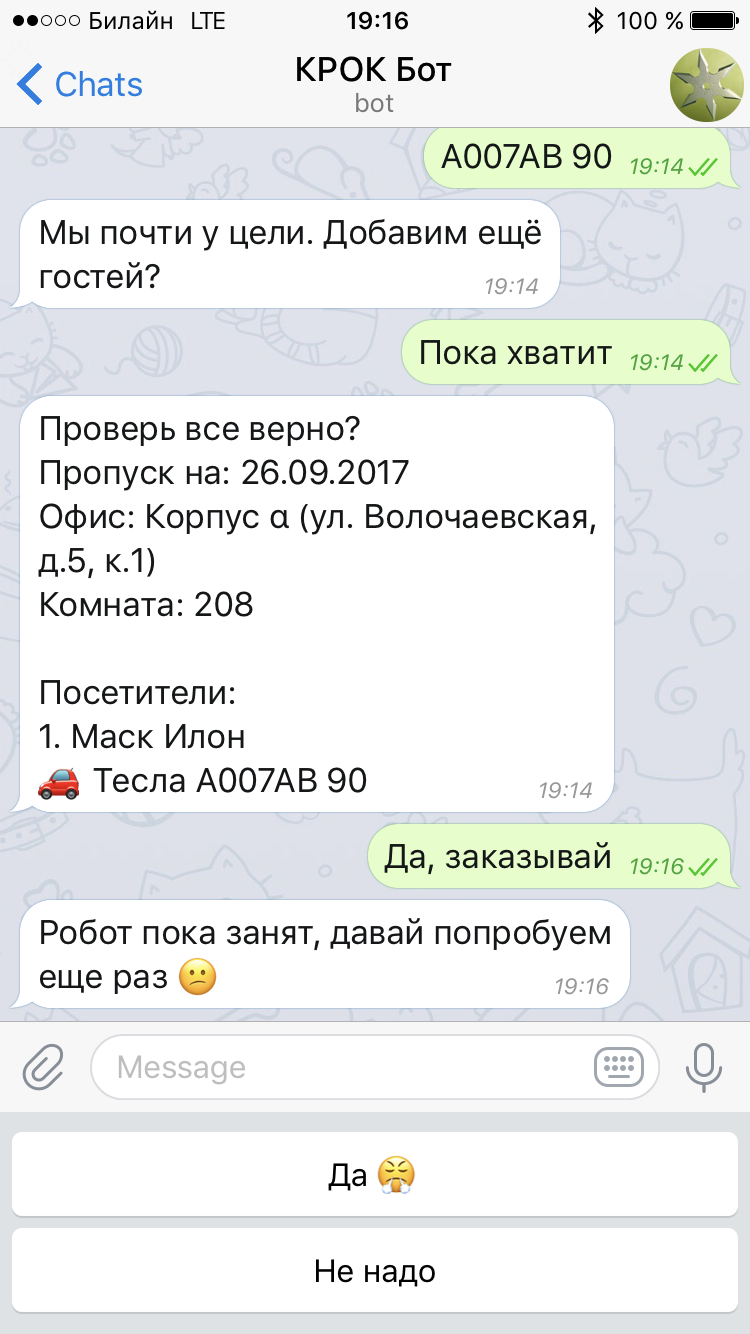

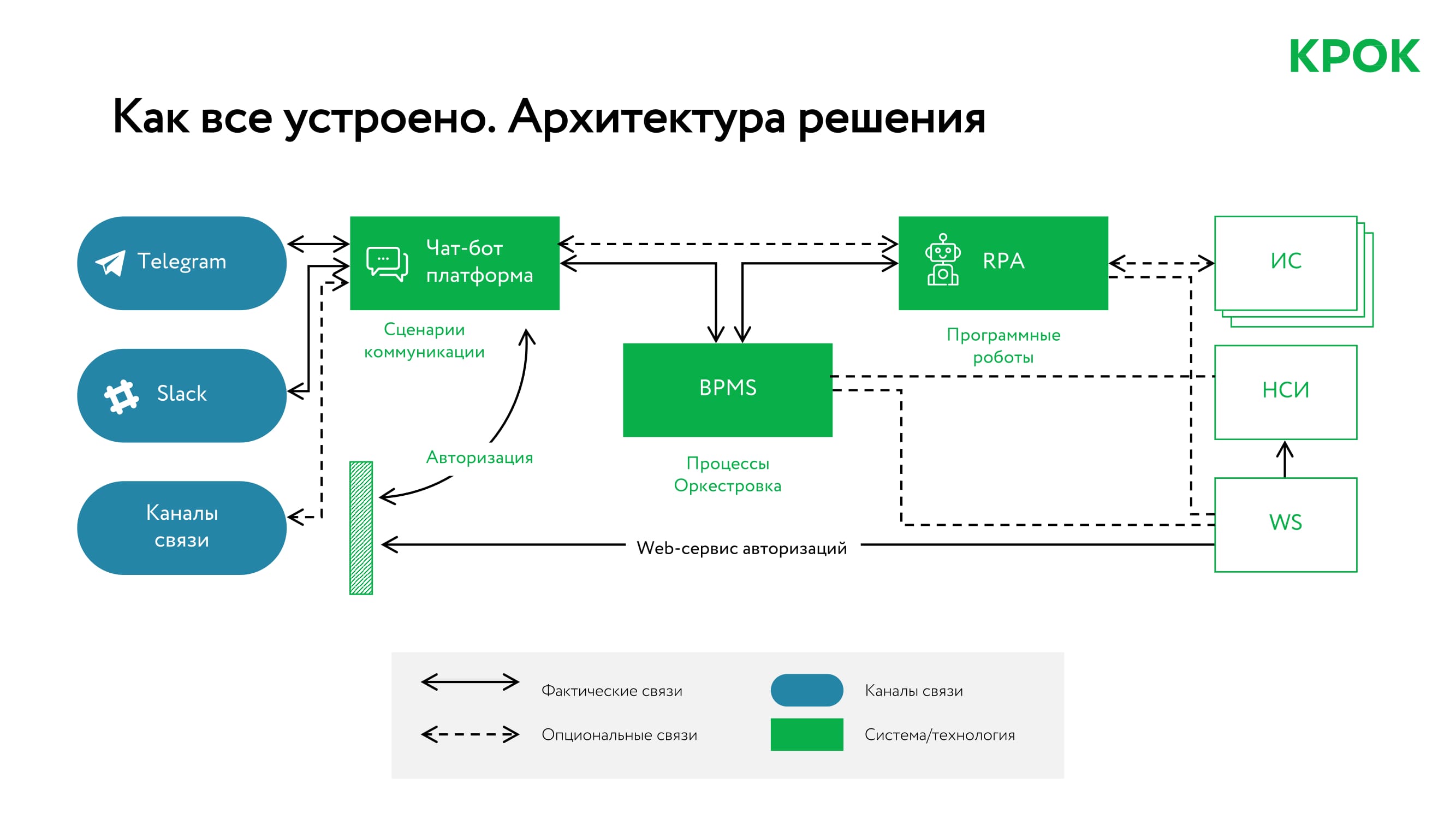

The user presses a button in the interface, the data is collected, and the robot starts. A button is needed as a separate interface (frontend) because, for example, a chat bot cannot take a screenshot by itself. But the chat bot can tell the user about the button, since users are accustomed to addressing all their questions to the chat bot as the main interface. The chat bot can also launch robots itself.

In general, the user sets a task through one of the interfaces, for example a query to the employee database via the chat bot. The interface passes the validated data to the robot handler, that is, it runs a script with the given parameters. The handler emulates the user, performs the actions in the production system, and returns the result to the interface.

In other words:

- There are interfaces that launch robots: chat bots, buttons, scripts, events and timers, or the actions of other robots.

- There are robot actions: receiving and transforming data.

- And there is output of the result to the user: posting it back to the chat bot, sending a file by mail, and so on.
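The three parts above (launch interface, robot action, output) can be sketched as a small dispatch table. This is a minimal illustration under assumptions, not production code. `Task`, `lookup_employee`, `reply_in_chat` and the sample directory entry are hypothetical, and the "action" is a dictionary lookup standing in for a robot that would actually drive the HR system's UI.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Task:
    source: str   # launch interface: "chatbot", "button", "timer", another robot...
    user: str     # the person on whose behalf the robot acts
    params: dict


def lookup_employee(params: dict) -> str:
    """Robot action (hypothetical): stands in for emulating a user in the HR system."""
    directory = {"picnic": "Anna Petrova, ext. 2001"}
    return directory.get(params["topic"], "nobody found")


def reply_in_chat(user: str, result: str) -> str:
    """Output channel (hypothetical): format the answer for the chat bot."""
    return f"@{user}: {result}"


ACTIONS: Dict[str, Callable[[dict], str]] = {"lookup_employee": lookup_employee}
OUTPUTS: Dict[str, Callable[[str, str], str]] = {"chat": reply_in_chat}


def run(task: Task, action: str, output: str) -> str:
    """Launch interface -> robot action -> output back to the user."""
    result = ACTIONS[action](task.params)
    return OUTPUTS[output](task.user, result)


print(run(Task("chatbot", "ivanov", {"topic": "picnic"}), "lookup_employee", "chat"))
# @ivanov: Anna Petrova, ext. 2001
```

Keeping actions and outputs in separate tables means a new trigger (a timer, another robot) or a new channel (a file sent by mail) can be added without touching the action itself.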

Here is an example of how the robot logs in to the system and orders a pass:

Let me cool your enthusiasm a bit

Yes, it is very fast. Yes, it is very cheap compared with full automation through a built-in API or an integration server. But there are plenty of drawbacks:

- The robot emulates a user of some system, which means it needs its own workstation with that system installed. So if the system is licensed per user or per machine, you need an additional license.

- The robot works much faster than a human, but through human interfaces. Roughly speaking, what a person does in 8 hours, the robot does in 4 minutes. But that is not the 2 seconds of an API integration: the robot has to wait for screens to load and render completely. So under bulk requests the robot server can become fully occupied. You then need several machines, which means an orchestrator appears, and more licenses are needed to run several threads in parallel.

- A robot that emulates user actions is sensitive to major system updates. If the interface changes significantly, the bot has to be reconfigured (which takes 3 to 10 working hours).

- The robot cannot act on its own behalf (log in as itself); each time, it acts on behalf of a specific user.
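The speed figures from the list above (8 hours by hand, 4 minutes by robot, 2 seconds via API) can be turned into a rough capacity estimate. The ratios come straight from the text. `robots_needed` is a hypothetical helper, and the jobs-per-hour inputs in the example are assumptions. It gives only a steady-state lower bound and ignores bursts and queueing delays.

```python
import math

# Figures quoted in the text above.
HUMAN_MINUTES = 8 * 60   # a working day of manual work
ROBOT_MINUTES = 4        # the same work through the robot
API_SECONDS = 2          # the same work through an API integration

robot_vs_human = HUMAN_MINUTES / ROBOT_MINUTES    # robot is ~120x faster than a person
api_vs_robot = ROBOT_MINUTES * 60 / API_SECONDS   # API is ~120x faster than the robot


def robots_needed(jobs_per_hour: float, minutes_per_job: float) -> int:
    """Lower bound on single-tasking robots for a steady job stream (ignores bursts)."""
    busy_minutes = jobs_per_hour * minutes_per_job
    return max(1, math.ceil(busy_minutes / 60))


print(robot_vs_human, api_vs_robot)   # 120.0 120.0
print(robots_needed(40, 4))           # 3
```

The robot sits roughly in the middle on a logarithmic scale: about two orders of magnitude faster than a person and about two orders slower than an API. That gap is why bulk requests quickly call for more robots, more licenses and an orchestrator.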

The last point deserves a separate explanation. When we got to the HR system, we ran into certain problems. We had to create a bot account with the same rights as an HR officer, so that it could use the HRMS and Outlook with mail, contacts and calendars. When it came to the idea of a robot working in production, everyone went into ideological shock and stupor.

The rules are simple: each employee's rights are defined in the Human Resource Management System, and access is granted from there. A robot cannot be entered into the HRMS, because it is “Human”. Yet account and contact data exist only there. To work here, an employee has to be a human. So for the alpha, we took a developer's account and ran the robot on his behalf, which automatically meant that all of the robot's screw-ups were attributed to him. For the beta, we came up with a more interesting hack: we registered a contractor (consisting of this one robot) and put it into the system together with a responsible developer. However, if the robot does something wrong, someone would somehow have to take it to court. And I would be charged with negligence and failure to properly vet the counterparty.

One more drawback: yes, the robot can make mistakes. Our policy is no irreversible actions without a human reviewing them. The robot could prepare all the documents for a business trip and book it by itself, but we do not allow the booking. It puts all the documents on a network share for an employee of the business travel department, and that person is the one who spends the company's money. We don't want to send the entire two-thousand-strong office to Anadyr and pay for it because of some rare bug.
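The "no irreversible actions without a person looking" policy boils down to a gate in front of the one step that spends money. Below is a minimal sketch of that gate. `TripRequest`, `prepare_documents`, `approve` and `book` are hypothetical names, and a real booking step would of course call the travel system instead of flipping a flag.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TripRequest:
    employee: str
    destination: str
    documents: List[str] = field(default_factory=list)
    approved_by: Optional[str] = None   # set only by a human reviewer
    booked: bool = False


def prepare_documents(req: TripRequest) -> TripRequest:
    """Reversible step: the robot drafts everything on its own."""
    req.documents = [
        f"travel order: {req.employee}",
        f"ticket request: {req.destination}",
    ]
    return req


def approve(req: TripRequest, reviewer: str) -> TripRequest:
    """Called by a person from the travel department after checking the drafts."""
    req.approved_by = reviewer
    return req


def book(req: TripRequest) -> TripRequest:
    """Irreversible step (spends company money): refused without human approval."""
    if req.approved_by is None:
        raise PermissionError("booking requires a human reviewer")
    req.booked = True
    return req
```

The robot is free to call `prepare_documents` as often as it likes. Only a path that goes through `approve` can reach a successful `book`.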

Hence our first law of robotics: the robot does everything on behalf of a person.

What happened next

We came up with a dozen more applications for bots: reporting through the chat bot, uploading primary documents via Telegram for entry into 1C, dialog-driven form filling, business trip reports, mass generation of documents for a report with distribution to all participants, and so on.

It turned out that almost all of this could be done in 2 days, which we demonstrated, subject to the limitations described above.

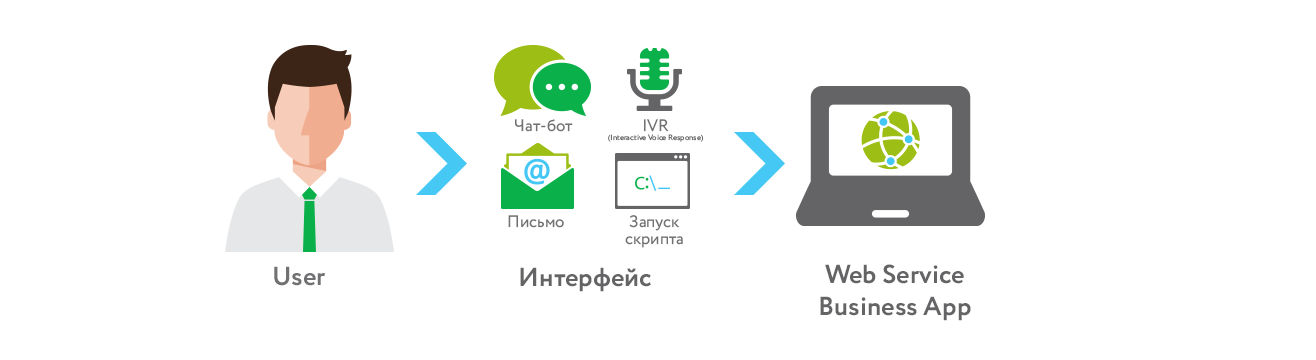

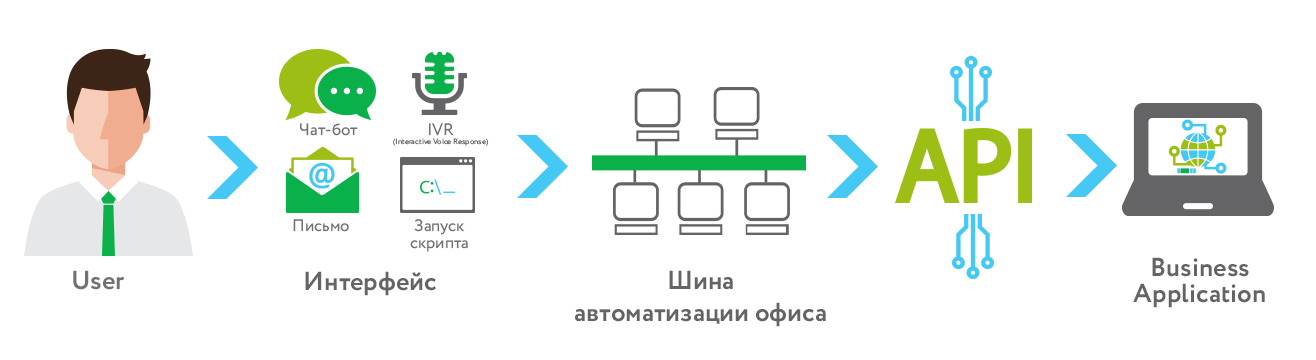

The logic is this: once everything works, you can measure how much time the robot saves and whether anyone actually uses it. If the task has to be done “with feeling, with sense, with arrangement” and the customer has plenty of time, you can of course hand it over to the automation department, where the heavy-duty folks will do everything neatly and without haste. Since the user-facing interfaces stay the same, the user does not even notice the transition. If you need to quickly automate something like printing badges and certificates (which used to cost our colleagues from the events team two nights), the robot simply does it, and that's that.

The evolution is as follows:

1. Prototype or non-critical task

2. Production operation

3. Business-critical service

And then a dangerous myth was born. When our top managers saw how it works, they let slip a remark about the process along the lines of “zero labor”. It is not zero. Yes, a quick prototype can be done in two days. Proper debugging takes two weeks. But after that, everything that works should be replaced with API-based or other systemic integration rather than user emulation.

Also, the robot always acts on behalf of someone. In our case, it acts on behalf of the user authenticated in the chat bot. We don't let anyone from outside use it, so the robot cannot order anything in another person's name. This is a hard check built into every layer, from the interface to the middleware. The correctness of the data entered into a form is checked by the bots themselves: the interface bot (chat bot) checks the input logic (a trip cannot start before the current date), and the executing bot (user emulator) checks the integrity of the incoming data.
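The split between the two bots can be sketched as two validation functions, each of which also repeats the "only on behalf of the authenticated user" check. This is an illustration under assumptions. The field names (`on_behalf_of`, `destination`, `start`, `end`) and the functions `chatbot_check` and `executor_check` are hypothetical, chosen only to mirror the trip example in the text.

```python
from datetime import date


class ValidationError(ValueError):
    """Raised when a request is rejected at either layer."""


REQUIRED_FIELDS = {"on_behalf_of", "destination", "start", "end"}


def chatbot_check(authenticated_user: str, request: dict, today: date) -> dict:
    """Interface bot: checks identity and input logic before anything is launched."""
    if request.get("on_behalf_of") != authenticated_user:
        raise ValidationError("the robot may act only for the authenticated user")
    if request["start"] < today:
        raise ValidationError("a trip cannot start before the current date")
    return request


def executor_check(authenticated_user: str, request: dict) -> dict:
    """Executing bot: re-checks identity and data integrity before emulating the user."""
    missing = REQUIRED_FIELDS - set(request)
    if missing:
        raise ValidationError(f"missing fields: {sorted(missing)}")
    if request["on_behalf_of"] != authenticated_user:
        raise ValidationError("the robot may act only for the authenticated user")
    if request["end"] < request["start"]:
        raise ValidationError("a trip cannot end before it starts")
    return request
```

Repeating the identity check in the executor means that even a request which bypasses the chat bot cannot make the robot act in someone else's name.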

The first deployment was in the training department, and for them it was real magic. The staff upload the list of participants (sometimes as many as 200 people) into the BPM system, and within 5-6 minutes the robot does the following:

- Orders passes for everyone.

- Lays out badges from the template and prepares certificates.

- Calculates catering (meals, coffee breaks) across all known suppliers. In the current implementation there is only one supplier, because technically we have just one (the choice is made before the bot gets involved).

- Prepares handouts and sends them to the printer or to the secretaries. Notifies everyone who may need to know, for example security, training room engineers, sound engineers, and so on.

- Sets up a meeting and sends all participants an invitation, a map to the venue, the contact person, and the event program.

- Sends everyone a reminder one day before the event.
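Here is a rough sketch of how a subset of these steps could be chained, with each function standing in for one separate service. Every name here is hypothetical (`order_passes`, `make_badges`, `run_event` and so on), and catering and invitations are omitted for brevity. Isolating failures so that one broken step does not stop the others is also an assumption of this sketch, not something the text states.

```python
from datetime import date, timedelta
from typing import Callable, Dict, List


# Each function stands in for one separate service (hypothetical names).
def order_passes(event: dict) -> List[str]:
    return [f"pass for {p}" for p in event["participants"]]


def make_badges(event: dict) -> List[str]:
    return [f"badge: {p}, {event['title']}" for p in event["participants"]]


def make_certificates(event: dict) -> List[str]:
    return [f"certificate: {p}" for p in event["participants"]]


def notify_services(event: dict) -> List[str]:
    services = ["security", "training room engineers", "sound engineers"]
    return [f"{s}: {event['title']} on {event['date']}" for s in services]


def schedule_reminder(event: dict) -> date:
    return event["date"] - timedelta(days=1)   # one day before the event


STEPS: Dict[str, Callable[[dict], object]] = {
    "passes": order_passes,
    "badges": make_badges,
    "certificates": make_certificates,
    "notifications": notify_services,
    "reminder": schedule_reminder,
}


def run_event(event: dict) -> Dict[str, object]:
    """Run every step; a failing step is recorded, the others still run."""
    results: Dict[str, object] = {}
    for name, step in STEPS.items():
        try:
            results[name] = step(event)
        except Exception as exc:
            results[name] = exc
    return results
```

Because each step is a separate function with the same input, any one of them can later be moved from user emulation to a proper integration without changing the others.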

Each task is a separate microservice. Admittedly, all this is hard to maintain, but as the system evolves, these microservices will move from emulating a user on a terminal server to more advanced automation. That will also take load off the robot, which matters, since we have only 4 threads.

In operation, this part has proven itself very well. The technology stack is:

The hardest part is the orchestrator that distributes work across threads. We have a large office of almost 2,000 people. The orchestrator is needed to control robots, assign tasks to them, schedule those tasks, monitor the robots' work, manage task queues, distribute tasks among robots, and so on. Technically, it has to be a separate entity, and you also need a terminal access server so that different tasks run in different bot sessions. During testing there was a lot of frustration because the robot was busy: it is single-tasking. If one robot cannot keep up and execution time is critical, you need to add a second robot to help (which costs an additional license). If execution time is NOT critical, the task can be queued (using the orchestrator), and when the robot becomes free, it picks up the queued task.
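The queueing behaviour described above can be sketched as a priority queue in front of a fixed pool of single-tasking robots. This is a simplified simulation, not a real orchestrator product. `Job` and `Orchestrator` are hypothetical names, all jobs are assumed to be submitted at minute 0, and time-critical jobs simply jump ahead of the rest while other jobs are served first-in, first-out.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count
from typing import Dict, List, Tuple


@dataclass(order=True)
class Job:
    priority: int                      # 0 = time-critical, 1 = can wait
    seq: int                           # submission order, keeps FIFO within a priority
    name: str = field(compare=False)
    minutes: int = field(compare=False)


class Orchestrator:
    """Hands queued jobs to single-tasking robots as they become free."""

    def __init__(self, robots: int = 4):   # the text mentions 4 threads
        self.free_at: List[int] = [0] * robots   # minute at which each robot is next free
        self.queue: List[Job] = []
        self._seq = count()

    def submit(self, name: str, minutes: int, critical: bool = False) -> None:
        heapq.heappush(self.queue, Job(0 if critical else 1, next(self._seq), name, minutes))

    def run(self) -> Dict[str, Tuple[int, int, int]]:
        """Simulate the queue: {job name: (robot index, start minute, end minute)}."""
        schedule: Dict[str, Tuple[int, int, int]] = {}
        while self.queue:
            job = heapq.heappop(self.queue)
            robot = min(range(len(self.free_at)), key=self.free_at.__getitem__)
            start = self.free_at[robot]
            self.free_at[robot] = start + job.minutes
            schedule[job.name] = (robot, start, start + job.minutes)
        return schedule


o = Orchestrator(robots=2)
o.submit("report", 5)
o.submit("badges", 4)
o.submit("pass", 1, critical=True)
print(o.run())
```

With two robots, the critical `pass` job starts first even though it was submitted last, and `badges` waits for the first robot that frees up. Adding a robot (and a license) shortens the queue. Marking more jobs as critical only reorders it.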

The upshot is that people are already pointing at our developers and saying these are the very people who will bring about massive staff cuts. We are a little afraid we'll get beaten up, but we're proud. And we keep retelling the joke about the government.

Links

- What chat bots can do in the office

- What IVR can do

- My email: digital@croc.ru

Source: https://habr.com/ru/post/346720/
