Heisenbug 2018 Piter conference announcement. Make Heisenbug, not war!

Conference: Heisenbug 2018 Piter

Date: May 17-18, 2018

Location: St. Petersburg, Park Inn by Radisson Pulkovskaya Hotel

The fourth Heisenbug is officially happening. Six months pass between two Heisenbugs. That is a long time: enough for you to learn to live with what you heard, and for us to improve the conference.

Analysis of feedback from participants

Before writing this announcement, I opened the participant feedback and read through 292 responses. The main theme of half of them is the need (or lack thereof) for talks on manual testing. This is what I would like to discuss with you.

An earlier Heisenbug was criticized for having turned into some kind of Autobug. I hope no one will be offended if I quote one anonymized review on this topic, a reference specimen as if straight from the Chamber of Weights and Measures:

“In a nutshell: ‘brain automation’. That is what the conference should have been called. There were only a couple of talks NOT about automation. After all, you know yourselves that automation is just one part of testing, and by no means the main one.”

When the next program was drawn up, such opinions were taken into account. The program turned out more balanced: a large share of the talks already dealt with “manual” testing. But right at the conference, in the discussion zones, we ran into haters who, first, vehemently want to automate everything ©, and second, consider the “manual” talks too light and boring. And some people wrote in their reviews that they would like more general talks (such as the story about testing capital objects: description, slides), and even talks on managing the testing process (there are clearly none right now).

On the one hand, this is good: if a lot of people are ready to fight over a topic, it means the topic really interests them and touches a nerve.

On the other hand, I am somewhat confused by the question itself.

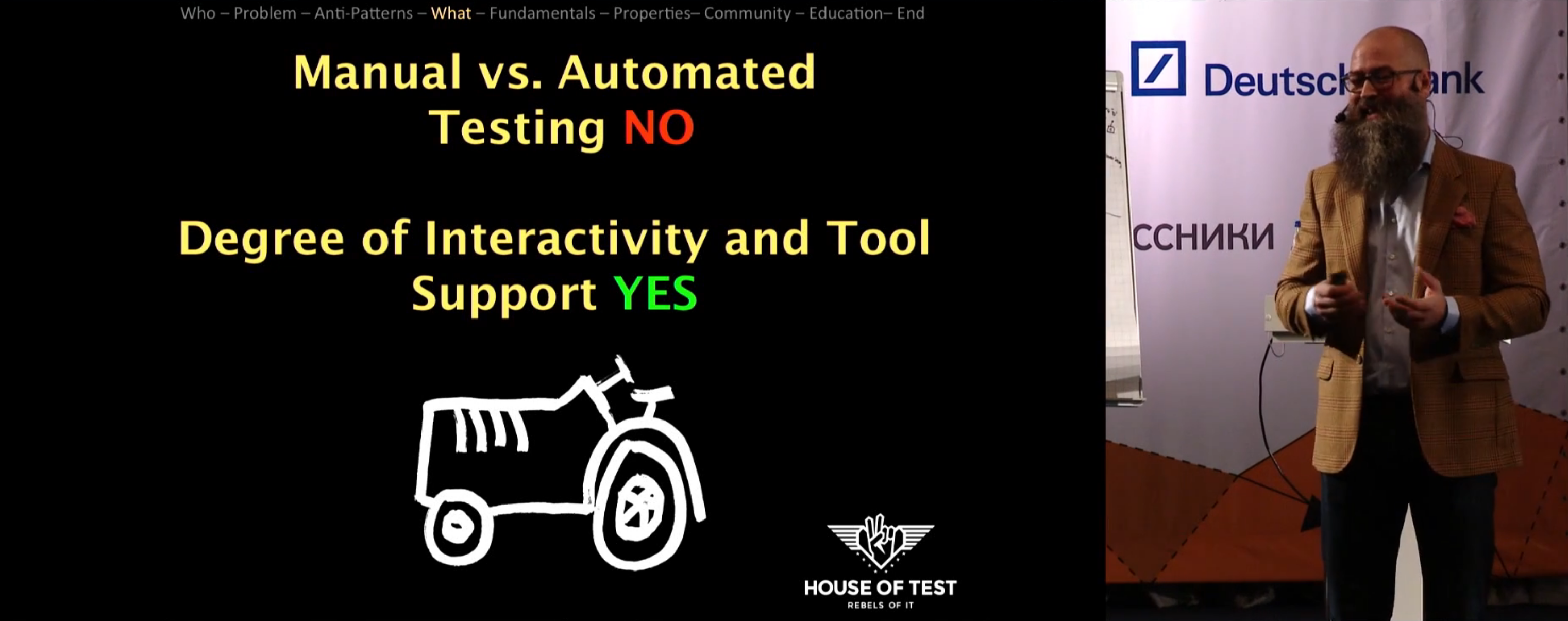

No Such Thing as Manual Testing

In the very first talk of the very first Heisenbug (description, slides, YouTube), the speaker put it well: “No Such Thing as Manual Testing!” I highly recommend watching it.

Historically, watching videos has never really caught on with Habr readers, so what follows is a short summary of the first part of the talk.

When we discuss testing and form our views on it, we often run into violations of the system-conceptual model, or find that there is no such model at all. There are whole areas of testing where attention is focused on particular aspects that are not at all the ones that matter. Tests built this way are useless, and the work tested with them is, to put it mildly, far from perfect.

Typical blunders can be described as anti-patterns (the talk describes them). For example, there is the thoroughly silly idea of using certifications as an entry filter for a tester vacancy. We all know that many strong testers hold no certifications at all (unless they invent the certifications themselves and hand them out to others). This is just one example.

In the same vein is the standardization that results from the commoditization of test methods. Does ISO 29119 ring a bell? (No need to google it: it is the international software testing standard.) Some systems benefit from standardization, while others do not. Power outlets, for example, are better off being identical. Software testing, on the contrary, benefits from diversity. The greater the variety, the more likely you are to find something interesting in the product under test.

Sometimes we focus, quite pointlessly, not on the work itself but on its auxiliary and intermediate artifacts. Writing test plans of every kind and sort eats 7 hours out of an 8-hour day. And all of that just to show the manager our progress to the second decimal place. All this is bad and wrong. A tester is not a writer of paperwork, but a person who solves genuinely interesting problems.

After that, the speaker moves on to the burning topic: automate nothing vs. automate everything. The first option is the Stone Age, and no one in their right mind would agree to it. The second is usually unachievable: either too expensive or simply impossible.

The discussion is illustrated with an MVP (minimum viable product) calculator whose buttons can perform only the addition “2 + 2”. It is a very funny example and worth watching (link to YouTube). The speaker collects the audience's opinions on which test cases are needed, and then demonstrates that even for such a simple example the audience came up with only a small part of them. The point is that we carry a pile of implicit assumptions about behavior, and there is a pile of things we cannot foresee. The problem is too hard to solve in the general case for an arbitrarily large system. Conclusion: a human is a machine for finding meaning in all kinds of situations (standard and non-standard alike), and using a human for this is the most effective solution.
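To make the point about implicit assumptions a little more concrete, here is a minimal sketch in Python. It is not taken from the talk: the `add` function, the list of checks and the "surprise" inputs are all hypothetical and chosen only for illustration. The anticipated checks all pass, yet inputs that nobody listed behave in ways the checks say nothing about.

```python
from decimal import Decimal


def add(a, b):
    """Hypothetical MVP calculator: the only feature is addition."""
    return a + b


# Checks an audience might propose up front (hypothetical examples).
anticipated_checks = [
    ((2, 2), 4),
    ((0, 0), 0),
    ((-1, 1), 0),
]


def run_checks(checks):
    """Return (args, expected, actual) for every failing check."""
    failures = []
    for args, expected in checks:
        actual = add(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures


# Inputs nobody listed; each one exposes an implicit assumption.
surprises = {
    # Binary floating point: 0.1 + 0.2 is not exactly 0.3.
    "binary floats": add(0.1, 0.2) == 0.3,
    # Strings are concatenated rather than summed.
    "string input": add("2", "2"),
    # Decimal arithmetic gives the "school" answer.
    "decimal": add(Decimal("0.1"), Decimal("0.2")) == Decimal("0.3"),
}

if __name__ == "__main__":
    print("failing anticipated checks:", run_checks(anticipated_checks))
    for name, outcome in surprises.items():
        print(name, "->", outcome)
```

Every anticipated check is green here, but deciding whether `"22"` or an inexact float sum is a bug still requires someone who knows what the product is supposed to mean to its users.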

Then the speaker asks us a question: what, in fact, is the essence of our work as testers? Most likely, it is delivering information about product quality to interested parties (such as stakeholders or development managers).

Therefore, it is necessary to clarify the nature of the activity we are talking about. The speaker proposes to distinguish between "verification" and "experimentation." Verification is done with respect to some standard or reference.

As for test automation, not all tests are pass/fail tests, even if they are formulated as checks. A failed test does not always mean we have a problem. Some tests will inevitably pass one moment and fail the next. If everything SUDDENLY turns green, that is usually not a reason to rejoice that “everything is fine” but rather a reason to start checking right away: what went wrong? Or, for example, if all the tests suddenly turn red, it is more likely because of a broken test environment than because the developers broke the entire codebase in one evening. You as a human (a machine for finding meaning) will understand this much better than any cleverly written heuristics. Still, in this area automation does very well.
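For illustration only, here is roughly what such a heuristic might look like in Python. This is a sketch under stated assumptions, not something from the talk: the `triage` function, its messages and the 0.9 threshold are all made up.

```python
def triage(results, env_failure_ratio=0.9):
    """Classify a test run given a list of booleans (True = passed).

    The threshold and the messages are illustrative assumptions,
    not recommendations.
    """
    if not results:
        return "no tests ran: check the pipeline"

    failed = results.count(False)
    ratio = failed / len(results)

    if ratio >= env_failure_ratio:
        # Nearly everything is red: a broken environment is more likely
        # than the whole codebase breaking in one evening.
        return "suspect the test environment"
    if failed == 0:
        # Suddenly all green is also suspicious.
        return "all green: confirm the tests ran against the right build"
    return "investigate individual failures"


if __name__ == "__main__":
    print(triage([False] * 10))
    print(triage([True] * 10))
    print(triage([True, False, True]))
```

A rule like this can only flag the run for attention. It cannot tell why the environment broke or whether an all-green run is trustworthy, and that judgment stays with a person.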

But there are things we do not even know that we do not know: unknown unknowns. This is the domain of experimentation. An ordinary programmer cannot automate the search for genuinely new information; it is extraordinarily difficult. Even thinking about it is hard (recall the calculator example), yet for a human it is entirely doable.

Seen this way, it turns out, for example, that TDD is not “testing” in the pure sense. It does not discover new information; it only checks what is already known. (Here the speaker refers to Ashby's law of requisite variety.) Rather, it is a special kind of living documentation.

As a result, the speaker arrives at the central idea of the talk: “No Such Thing as Manual Testing”. He asks the audience: “Are there any software developers among you?” Several people raise their hands. The next question: “Are you manual developers or automatic ones? You press keys on the keyboard and type all sorts of things into the IDE, so obviously you are manual?” (A funny discussion on this topic follows; you can watch it on YouTube.) Everybody laughs.

Applied to programmers, such a division sounds crazy. The speaker argues that applied to testers it is no less absurd. The main part of the work of both the programmer and the tester is using their brain for everything discussed above: using yourself as a machine for finding meaning.

If we say that part of the work is some kind of “manual” testing, this usually implies that whoever performs it is much less experienced and less thoughtful than you. Roughly speaking, we grab a random person off the street and make them grind through the plan from cover to cover. It is usually assumed that “manual testing” is done only because nobody has gotten around to “automating” it yet. This dichotomy is built into the very term “manual testing”.

In fact, as a tester you deliver value in many ways. Sometimes automation helps, sometimes work on the test plan, sometimes deep experiments. At the beginning of a project, a lot of good can be done simply by asking colleagues the right questions. If we decide to throw out the term “manual testing”, we can think about this instead: how much do we use tools in our work? We cannot live without tools (even the keyboard is a tool, and no one has yet learned to enter code by the power of thought), and different tasks require different degrees of interaction with them, but the most important element is you and your ability to find meaning.

Conclusions

There is a second half of the talk, but let's return to the original holy war. If you look at the previous Heisenbug program, the talks can easily be placed along an interactivity gradient from 0 to 10, starting with Tester Tools by Yulia Atlygina, which is closer to the left end, and ending with the Docker-taming talk by Sergey Egorov. From this point of view there are no particular contradictions between the camps of the “manual testers” and the “automators”, and the talks will be useful to everyone.

Of course, with some caveats: a tester needs a certain level of programming knowledge to follow the automation talks quickly, and a programmer needs a broad outlook across many related topics at once (not only a single favorite tool like Java or .NET). But nothing supernatural. On the program page, next to each talk, there are special icons (“smoothies”, “bearded” and “hardcore”) that show the required depth of immersion in the topic. There is no point in going to a “hardcore” talk on someone else's topic (you will not understand anything at all), but a “hardcore” talk on “your” topic may be useful. As always, there will be discussion zones where you can find the speaker you are interested in and grill them thoroughly: the speakers know their subject very well and can answer even the most bizarre questions.

In short, in my opinion there is nothing impossible about having “manual testers” and “automators” under one roof, and the division itself arose solely from a distorted set of beliefs about modern IT realities. Make Heisenbug, not war.

Next steps

Call for Papers!

The Heisenbug 2018 Piter conference will be held on May 17-18 of this year. It is a large technical conference for testing professionals. Everyone will gather here: testers, programmers (who write tests for their own code), specialists in automated, load and other kinds of testing, managers of testing teams, and so on.

So we need talks! You can give one too.

(And if you do, you'll get to the conference for free, of course.)

I will quickly and decisively dispel a few doubts.

Many people believe they have nothing to tell. Some of the best talks began with exactly this misconception. In fact, what seems routine to you will be a revelation for someone else. For example, you set up your load testing process in some clever way, and that's it, now it is routine for you. But for most people it is still the same challenge, and they would do anything for a chance to hear how it was solved. Right now, take a moment away from reading this post and think about what you could tell.

What if you have never thought of yourself as a speaker? You don't know how to present material from the stage, design slides and so on? No problem. We have people specifically for this who help with everything: they explain, demonstrate, and run training sessions. This is a proven approach: the result is not only a well-prepared talk but also an understanding of how to give talks in general. It is a chance to work with our Program Committee, with people like Nikita Makarov (Head of Automated Testing at Odnoklassniki), Andrei Satarin from Amazon, and Vladimir Sitnikov from Netcracker.

Yes, ours is a large, well-known venue with real competition. Your talk may not make it through the Program Committee's selection. But even then there are only upsides: a talk that has almost made it through our committee will be accepted almost anywhere.

A reminder: submissions close on February 14, i.e. in just a month. If you are thinking about giving a talk, fill out the registration form right now!

We are particularly interested in the following topics:

- Test automation;

- Stress testing;

- Performance testing, benchmarking;

- Integration testing of modular / distributed systems;

- Concurrency testing;

- Testing mobile applications;

- Manual testing;

- UX, security, A/B, static code analysis, code and product metrics, etc.;

- Instrumentation, test environments;

- Comparison of testing tools;

- Testing in GameDev.

If your topic is related to testing but does not exactly fit any of these descriptions, send it anyway and we'll figure it out.

Product placement and other promotional talks are not accepted, and the people who propose them are noted down (you have been warned).

For participants

What can be done next?

First, write in the comments what you think about all this. Do you agree with me? And with Ilari Henrik Aegerter and the “No Such Thing as Manual Testing” talk? Write a comment right now, otherwise you'll forget.

Secondly, the conference program is not yet final. In the comments you can tell us which talks you would like to attend. You can even ask for specific speakers! The only rule is that you cannot ask for a repeat of an old talk (old talks can be watched on our YouTube channel once they become available to everyone).

And most importantly: you can come to Heisenbug 2018 in St. Petersburg or join the online broadcast. Tickets can be purchased on the official conference website.

Source: https://habr.com/ru/post/346646/
