Richard Hamming: Chapter 4. Computer History - Software

“Please remember that the inventor often has a very limited idea of what he invented.”

Hi, Habr. Remember the awesome article "You and Your Work" (+219, 2265 bookmarks, 353k reads)?

So Hamming (yes, yes, of the self-checking and self-correcting Hamming codes) has a whole book based on his lectures. Let's translate it, because the man talks sense.

This book is not just about IT; it is a book about the thinking style of incredibly cool people. “It is not just a dose of positive thinking; it describes the conditions that increase the chances of doing great work.”


We have already translated 9 (out of 30) chapters.

Chapter 4. Software

(Thanks for the translation go to Stanislav Sukhanitsky, who responded to my call in the previous chapter.) If you want to help with the translation, write a private message or email magisterludi2016@yandex.ru

As I pointed out in the last chapter, in the early days of computing the control part was done entirely by hand. The slow desk calculators were at first controlled by hand: for example, multiplication was done by repeated addition, with a column shift after each digit of the multiplier. Division was done similarly, by repeated subtraction. In time, electric motors were applied, first for power and later for more automatic control of multiplication and division.
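The repeated-addition scheme described above can be sketched in a few lines of Python. This is only an illustration of the idea, not a model of any particular desk machine; the function names are made up for the example, and both functions assume non-negative integers.

```python
def multiply_by_repeated_addition(multiplicand: int, multiplier: int) -> int:
    """Multiply two non-negative integers by repeated addition with column shifts."""
    total = 0
    shift = 0  # current column (power of ten) of the multiplier digit being handled
    while multiplier > 0:
        digit = multiplier % 10                  # lowest remaining multiplier digit
        for _ in range(digit):                   # add the shifted multiplicand `digit` times
            total += multiplicand * 10 ** shift
        multiplier //= 10
        shift += 1                               # move one column to the left
    return total


def divide_by_repeated_subtraction(dividend: int, divisor: int) -> tuple[int, int]:
    """Return (quotient, remainder) using repeated subtraction, column by column."""
    if divisor <= 0 or dividend < 0:
        raise ValueError("expects dividend >= 0 and divisor > 0")
    shift = 0
    while divisor * 10 ** (shift + 1) <= dividend:   # line the divisor up with the dividend
        shift += 1
    quotient, remainder = 0, dividend
    while shift >= 0:
        step = divisor * 10 ** shift
        count = 0
        while remainder >= step:                 # subtract until it no longer fits
            remainder -= step
            count += 1
        quotient = quotient * 10 + count         # `count` is the next quotient digit
        shift -= 1
    return quotient, remainder


print(multiply_by_repeated_addition(123, 45))
print(divide_by_repeated_subtraction(100, 7))
```

The number of additions equals the sum of the multiplier's digits, which is why the column shift matters: without it, multiplying by 45 would take 45 additions instead of 9.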

Punched-card machines were controlled by plugboard wiring, which told the machine where to find the information, what to do with it, and where to put the answers on the cards (or on the printed sheet). Some of the control could also come from the cards themselves, typically from the X and Y punches (other digits could, at times, also control what happened). A plugboard was specially wired for each job, and in accounting offices the wired boards were usually saved and reused each week or month, as they were needed in the accounting cycle.

When we came to the relay machines, after Stibitz built his first Complex Number Computer, they were mainly controlled by punched paper tapes. Paper tapes are a curse for one-shot problems: they are messy, and gluing them to make corrections or loops is troublesome (not least because the glue tends to get into the machine's reading fingers!). Because internal storage was very small in the early days, programs could not be stored economically inside the machines (though I am inclined to believe that the designers considered it).

ENIAC was at first (1945-1946) controlled by wiring, as if it were a gigantic plugboard, but in time Nick Metropolis and Dick Clippinger converted it into a machine programmed from the ballistic tables: huge racks of dials on which the decimal digits of the program could be set with the knobs of decimal switches.

Internal programming became a reality when storage became reasonably available. Although the idea is commonly attributed to von Neumann, he was only a consultant to Mauchly and Eckert and their team. According to Harry Huskey, the group frequently discussed internal programming before von Neumann began consulting for them. The first widely available discussion of internal programming (after Lady Lovelace wrote and published a few programs for Babbage's proposed Analytical Engine) was von Neumann's reports for the Army. These were widely circulated but never published in any refereed venue.

The early codes were mostly one-address: each instruction contained an instruction part and the address where the number was to be found or to which it was to be sent. We also had two-address codes, typically for rotating-drum machines, so that the next instruction would be immediately available once the previous one was completed. The same applied to mercury delay lines and other storage devices that were serially accessible. Such coding was called minimum-latency coding, and you can imagine the trouble the programmer had in working out where to put the next instruction and the numbers (to avoid delays and conflicts as far as possible), let alone in locating programming errors (bugs). In time a program named SOAP (Symbolic Optimizing Assembly Program) appeared that did this optimization on the IBM 650 itself. There were also three- and four-address codes, but I will ignore them here.
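To make the placement problem concrete, here is a minimal sketch of latency-minimizing placement on a drum. It is a deliberately simplified model and not the algorithm SOAP actually used: the drum has `drum_size` word slots, reading an instruction takes one word-time, executing it takes a given number of word-times while the drum keeps turning, and each instruction is greedily placed in the first free slot that comes under the head once the previous instruction has finished. All names are hypothetical.

```python
def place_instructions(exec_times: list[int], drum_size: int, start: int = 0) -> list[int]:
    """Greedily choose a drum slot for each instruction to minimize waiting."""
    occupied: set[int] = set()
    placement: list[int] = []
    position = start  # slot under the read head when the next instruction is needed
    for exec_time in exec_times:
        for wait in range(drum_size):
            slot = (position + wait) % drum_size
            if slot not in occupied:
                break
        else:
            raise ValueError("drum is full")
        occupied.add(slot)
        placement.append(slot)
        # one word-time to read the instruction, then exec_time word-times to run it
        position = (slot + 1 + exec_time) % drum_size
    return placement


def total_wait(placement: list[int], exec_times: list[int], drum_size: int) -> int:
    """Word-times spent waiting for the drum to bring the next instruction around."""
    waiting = 0
    for i in range(len(placement) - 1):
        ready_at = (placement[i] + 1 + exec_times[i]) % drum_size
        waiting += (placement[i + 1] - ready_at) % drum_size
    return waiting


times = [3, 3, 3]
optimized = place_instructions(times, drum_size=10)
sequential = [0, 1, 2]
print(optimized, total_wait(optimized, times, 10))
print(sequential, total_wait(sequential, times, 10))
```

In this toy model, storing the three instructions in consecutive slots costs 14 word-times of waiting, while the spaced-out placement costs none. That gap is what the programmer had to work out by hand before a program did it.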

There is an interesting story about SOAP. A copy of the program, call it program A, was loaded into the machine as a program and also processed as data. The output was program B. Then B was loaded into the IBM 650, and A was again run through it as data to produce a new program B. The difference between the two running times needed to produce program B showed how much the optimization done by SOAP (on SOAP itself) had gained. It was an early example of self-compiling, as it were.

In the beginning we programmed in absolute binary: we wrote both the actual addresses and the instruction parts in binary! There were two trends to escape from binary: octal, where you simply group the binary digits in sets of three, and hexadecimal, where you take four digits at a time and have to use A, B, C, D, E, F to represent the digits beyond 9 (and, of course, you had to learn the multiplication and addition tables up to 15).
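The grouping described above is easy to show in code. The following sketch (the function name is chosen for this example) pads a binary string on the left and then reads it off three bits at a time for octal or four bits at a time for hexadecimal.

```python
def group_bits(bits: str, group_size: int) -> str:
    """Rewrite a binary string in base 2**group_size by grouping its digits."""
    digits = "0123456789ABCDEF"
    padding = (-len(bits)) % group_size          # zeros needed to fill the leftmost group
    padded = "0" * padding + bits
    return "".join(
        digits[int(padded[i:i + group_size], 2)]
        for i in range(0, len(padded), group_size)
    )


word = "01100101"
print(group_bits(word, 3))  # octal: 001 100 101
print(group_bits(word, 4))  # hexadecimal: 0110 0101
```

For the word `01100101` this gives `145` in octal and `65` in hexadecimal, both of which are the same number as the original eight binary digits.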

If, in fixing an error, you wanted to insert some omitted instructions, you took the immediately preceding instruction and replaced it with a transfer to some empty space. There you put the instruction you had just overwritten, added the instructions you wanted to insert, and then followed them with a transfer back to the main program. Thus the program soon became a sequence of jumps to strange places. When, as almost always happens, there were errors in the corrections, you used the same trick again with some other available space. As a result, the control path of the program through storage soon looked like a can of spaghetti. You might ask: why not simply insert the fix into the run of instructions? Because then you would have to go through the entire program and change every address that referred to any of the moved instructions! Anything but that!

We very soon got the idea of reusable software, as it is now called. Indeed, Babbage already had the idea. We wrote mathematical libraries to reuse blocks of code. But a library with absolute addresses meant that each time a library routine was used it had to occupy the same locations in storage. When the complete library became too large, we had to go to relocatable programs. The necessary programming tricks were in the von Neumann reports, which were never formally published.
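One way to picture a relocatable routine is as a list of instructions in which each operand is marked as either internal to the routine (and therefore shifted by the load address) or fixed. The sketch below is an assumption-laden illustration, not the scheme from the von Neumann reports; the `Instruction` type, the operation names, and the sample routine are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instruction:
    op: str
    operand: int
    relocatable: bool  # True if the operand is an offset inside the routine


def relocate(routine: list[Instruction], base: int) -> list[Instruction]:
    """Return a copy of the routine adjusted to start at absolute address `base`."""
    return [
        Instruction(i.op, i.operand + base, True) if i.relocatable else i
        for i in routine
    ]


# A made-up library routine written as if it started at address 0.
LIBRARY_ROUTINE = [
    Instruction("LOAD", 900, False),   # argument lives at a fixed, agreed address
    Instruction("SUB", 3, True),       # refers to the constant at offset 3 below
    Instruction("JUMP", 0, True),      # loops back to the start of the routine
    Instruction("DATA", 2, False),     # a constant value, not an address
]

for base in (100, 400):
    print(base, [(i.op, i.operand) for i in relocate(LIBRARY_ROUTINE, base)])
```

With this marking, the same routine can be loaded at address 100 in one program and at 400 in another, which is exactly what an absolute-address library could not do.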

The first published book devoted to programming was by Wilkes, Wheeler, and Gill, and it applied to the EDSAC at Cambridge, England (1951). I, among others, learned a lot from it, as you will hear in a few minutes.

Then someone got the idea that a short piece of program could be written that would read the symbolic names of the operations (such as ADD) and translate them, at input time, into the binary representation used inside the machine (say, 01100101). This was soon followed by the idea of using symbolic addresses, a real heresy for the old-time programmers. You do not see much of the old heroic absolute programming now (unless you fool around with a hand-held programmable calculator and try to make it do more than its designer and builder ever intended).
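A minimal sketch of such a translator follows. The instruction format is an assumption made for this example (a 3-bit operation code followed by a 5-bit address), and the opcode table is invented; it is chosen so that `ADD 5` happens to encode as the `01100101` pattern mentioned above. The first pass records the address of each label, and the second pass replaces operation names and symbolic addresses with binary.

```python
# Hypothetical opcode table: 3-bit operation codes for an imaginary 8-bit machine.
OPCODES = {"LOAD": 0b001, "STORE": 0b010, "ADD": 0b011, "JUMP": 0b100}


def assemble(source: str) -> list[str]:
    """Translate symbolic instructions into 8-bit binary words."""
    lines = [line.split("#")[0].strip() for line in source.splitlines()]
    lines = [line for line in lines if line]

    # Pass 1: record the address of every label.
    symbols: dict[str, int] = {}
    instructions: list[str] = []
    for line in lines:
        if ":" in line:
            label, line = line.split(":", 1)
            symbols[label.strip()] = len(instructions)
            line = line.strip()
        if line:
            instructions.append(line)

    # Pass 2: replace mnemonics and symbolic addresses with binary.
    words: list[str] = []
    for text in instructions:
        mnemonic, operand = text.split()
        address = symbols[operand] if operand in symbols else int(operand)
        if not 0 <= address < 32:
            raise ValueError(f"address out of range: {operand}")
        words.append(f"{OPCODES[mnemonic]:03b}{address:05b}")
    return words


PROGRAM = """
start: LOAD 20
       ADD 5
       STORE 21
       JUMP start   # symbolic address, resolved by the assembler
"""
print(assemble(PROGRAM))
```

Because `start` is resolved by the assembler, inserting an instruction before it changes nothing in the source except the inserted line, which is the convenience the old-time programmers were giving up by insisting on absolute addresses.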

I once spent a full year, with the help of a lady programmer from Bell Telephone Laboratories, on one big problem coded in absolute binary for the IBM 701, which used all of the 32K registers then available. After that experience I vowed that I would never again ask anyone to do such labor. Having heard about a symbolic system from IBM in Poughkeepsie, I asked her to send for it and use it on the next problem, which she did. As I expected, she reported that it was much easier. So we told everyone about the new method, meaning about 100 people, who ate at the IBM cafeteria near where the machine was. About half of them were IBM people and half were, like us, outsiders renting time. To my knowledge only one person, yes, only one out of all 100, showed any interest!

Finally, a more complete and more useful Symbolic Assembly Program (SAP) was devised, after more years than you are apt to believe, during which most programmers continued their heroic absolute binary programming. At the time SAP first appeared, I would guess that about 1% of the older programmers were interested in it: using SAP was “for sissies”, and a real programmer would not stoop to wasting machine capacity on doing the assembly. Yes! Programmers wanted no part of it, though when pressed they had to admit that their old methods used more machine time in locating and fixing errors than the SAP program ever used. One of the main complaints was that with a symbolic system you do not know where anything is in storage. In the early days we supplied a mapping of symbolic to actual storage, and, believe it or not, they later lovingly pored over such sheets rather than realize that they did not need that information if they stayed within the system. But no! When correcting errors they preferred to do it in absolute binary addresses.

FORTRAN, meaning FORmula TRANslation, was proposed by Backus and friends, and again it was opposed by almost all programmers. First, it was said that it could not be done. Second, if it could be done, it would be too wasteful of machine time and capacity. Third, even if it did work, no respectable programmer would use it: it was only for sissies!

Like the earlier symbolic programming, FORTRAN was very slow to be taken up by the professionals. This is typical of almost all professional groups. Doctors clearly do not follow the advice they give to others, and they also have a high proportion of drug addicts. Lawyers often do not leave decent wills when they die. Almost all professionals are slow to use their own expertise in their own work. The situation is nicely summarized by the old saying, "The shoemaker's children go without shoes." Consider how, in the future, when you are a great expert, you will avoid this typical error!

With FORTRAN available, I organized the work as follows: I told my programmer to do the next problem in FORTRAN, get the errors out of it, and let me test it to see that it was solving the right problem; only then could she, if she wished, rewrite the inner loop in machine language to speed things up and save machine time. As a result we were able, with about the same amount of effort on our part, to produce almost 10 times as much as the others. But to them, programming in FORTRAN was not for real programmers!

Physically, the management of the IBM 701 at IBM headquarters in New York, where we rented time, was terrible. It was a sheer waste of machine time (which then cost 300 dollars an hour, and that was a lot) as well as human time. As a result, I later refused to order a big machine until I had figured out how to get a monitor system, which someone else finally built for our first IBM 709 and later modified for the IBM 7094.

Again, monitors, often called “the system” these days, should, like all the earlier steps I have mentioned, be obvious to anyone who uses the machines day after day; but most users seem too busy to think or to observe how bad things are and how much the computer could do to make things significantly easier and cheaper. To see the obvious often takes an outsider, or else someone like me who is thoughtful and wonders what he is doing and why it is all necessary. Even when told, the old-timers will persist in the ways they learned, probably out of pride in their past and an unwillingness to admit that there are better ways than those they have used for so long.

One way of describing what happened in the history of software is that we were slowly going from absolute to virtual machines. First we got rid of the actual code instructions, then of the actual addresses, and then, in FORTRAN, of the need to learn a lot about the insides of these complicated machines and how they worked. We were buffering the user from the machine itself.

Fairly early at Bell Telephone Laboratories we built some devices that made the tape units virtual, that is, machine independent. When, and only when, you have a totally virtual machine will you be able to transfer software from one machine to another without almost endless trouble and errors.

FORTRAN was successful far beyond anyone's expectations because of a psychological fact: it was just what its name implied, a FORmula TRANslation of the things one had always done in school. It did not require learning a new set of ways of thinking.

Algol, around 1958-1960, was backed by many computer organizations worldwide, including the ACM. It was an attempt by the theoreticians to greatly improve on FORTRAN. But, being logicians, they produced a logical language, not a humane, psychological one, and of course, as you know, it failed in the long run. Among other things, it was stated in a Boolean logical form that is not comprehensible to mere mortals (and often not even to the logicians themselves!). Many other logically designed languages that were supposed to replace the pedestrian FORTRAN have come and gone, while FORTRAN (somewhat modified, to be sure) remains widely used, which clearly shows the power of psychologically designed languages over logically designed ones.

This was the beginning of great hopes for special languages, called POLs, meaning problem-oriented languages. There is some merit in the idea, but the enthusiasm faded because too many problems involved more than one special field, and the languages were usually incompatible. Furthermore, in the long run they cost people too much in the learning phase to master all the ones they might need.

Around 1962 the LISP language appeared. Various rumors circulated about how it actually came about; the probable truth is something like this: John McCarthy suggested the elements of the language for theoretical purposes; the suggestion was taken up and significantly elaborated by others; and when some student observed that he could write a compiler for the language in LISP itself, using the simple trick of self-compiling, everyone was astounded, including, apparently, McCarthy himself. But he urged the student to try, and almost overnight they moved from theory to a real, working LISP compiler!

Let me digress and discuss my experience with the IBM 650. It was a two-address drum machine that operated in fixed-point decimal. From my past research experience I knew that floating point was necessary (von Neumann to the contrary), and I needed index registers, which the machine as delivered did not have. IBM would someday supply floating-point subroutines, so they said, but that was not enough for me. I had reviewed the EDSAC book on programming for a journal, and there, in Appendix D, was a peculiar program written to fit a large program into a small storage. It was an interpreter. But if it was in Appendix D, did they see its importance? I doubt it! Furthermore, in the second edition it was still in Appendix D, apparently still not recognized by the authors for what it was.

This raises, I hope, the ugly question: when does something begin to be understood? , , ? . , , , , . , , , , , . , , (1937) , , , , , — .

. , - , - . : « , , , ». , , . , - , .

. , , : «, , !». , . . , , , , , , . , , , , (?) . , - ; , , . , , , .

IBM 650 . ( 1956 ) :

1. .

2. .

3. ( ).

4. .

, «» «» , !

, (top-down) , ( (bottom-up) ), , . , , , , .

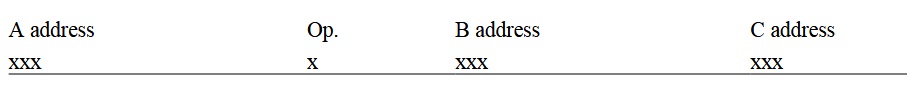

, — -A = . ( , ) :

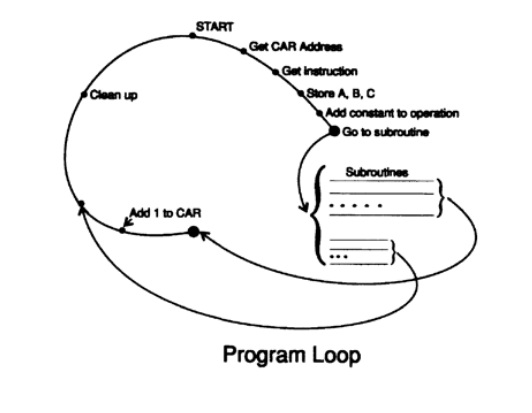

? Easy! ( 4.I): -, , CAR, 2000 IBM 650 . , . (1) CAR . (2) , , A, B C, IBM650. (3) . , . , , : , , . , , « B ». , , .(4) 1 CAR, , , . , ( 7 , ) CAR CAR.

— , , . . - , , , , . , , , . , .

, , , , , , .

1000 1999.

, , , 3 , 000 999 , . .

, , , , . , , , . , , - . , EDSAC, , .

. , , . , APL. APL — , , . « », , , . , .

APL , . ; 60% , — 40%. , , , , . , , , . , , , .

, , , . , . , , , . , (, “there” («») “their” («»)), (“record” («») , “tear” («»), as in tear in the eye, vs. tear in a dress ( , )). , — , , ; , . , , , , , , ( , ).

, , -. , , , , . , , , 2020 , ( ) .

, , , ADA , () , . , , — , , . ADA, , , ADA, , 90% , FORTRAN , , ADA !

. - 1950- ( ), IBM 701, : « , ». , , , .. — ( ), , — , . , ; ; ; ; , . . , , , . , — , , , — -. .

, - -, , .

( ), , .

, « » , , . , . , , , . , ( -), , . , , « », , , , , . .

« », , . , « ». : « , ?» ! , , , , - . . « », , , ( , ). , , . , , . , . , , — , , , , , ! , . ; , , , ! , , «», « » , .

There are many proposals for improving the productivity of individual programmers as well as of groups of programmers. I have already mentioned top-down and bottom-up design; there are others, such as chief programmer, lead programmer, proving that the program is correct in a mathematical sense, and the waterfall model of programming, to name but a few. While each has some merit, I have faith in only one, which is almost never mentioned. It might be called "think before you write the program." Before you start programming, think carefully about the whole thing, including what your acceptance tests will be and how later maintenance will be done. Getting it right the first time is much better than fixing the code up afterwards!

: , ! , , , , , , « » , .

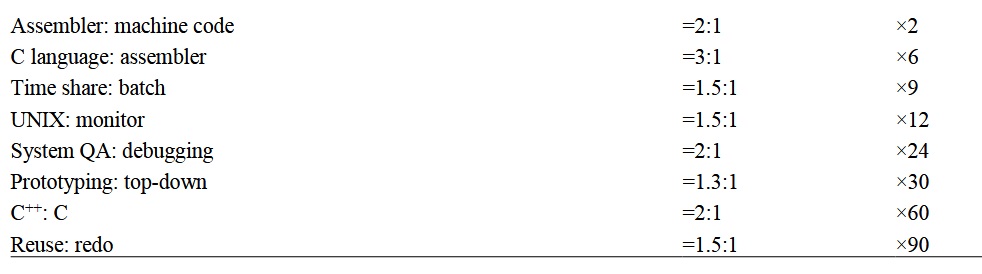

. 30 :

, , 90 30 ( 16%!). , , , . — ! , , , , .

, , , 10 . , , , — !

, , , , , , , , - . , ( )!

, . , , , , . , , . , . « » — , , . , , , , . , « » ( ) .

— . , , , - , . , , , , . - , , . - , .

, , , « ».

— , . - .

; , , . ? « », , ! , .

Does experience help? Do bureaucrats, after years of writing reports and instructions, get better at it? I have no real data, but I suspect that with time they get worse! The habitual use of “governmentese” over the years probably seeps into their writing style and makes it worse. I suspect the same is true of programmers! Neither years of experience nor the number of languages used is any reason to think that the programmer has become better. An examination of books on programming suggests that most of the authors are not good programmers!

, , — . — !

To be continued...


Book content and translated chapters


- Intro to Doing Science and Engineering: Learning to Learn (March 28, 1995) (in progress)

- Foundations of the Digital (Discrete) Revolution (March 30, 1995). Translation: Chapter 2. Basics of the digital (discrete) revolution

- History of Computers - Hardware (March 31, 1995) (in progress)

- History of Computers - Software (April 4, 1995) (ready)

- History of Computers - Applications (April 6, 1995) (in progress)

- Artificial Intelligence - Part I (April 7, 1995) (in progress)

- Artificial Intelligence - Part II (April 11, 1995) (in progress)

- Artificial Intelligence III (April 13, 1995) (in progress)

- N-Dimensional Space (April 14, 1995). Translation: Chapter 9. N-Dimensional Space

- Coding Theory - The Representation of Information, Part I (April 18, 1995) (in progress)

- Coding Theory - The Representation of Information, Part II (April 20, 1995)

- Error-Correcting Codes (April 21, 1995) (in progress)

- Information Theory (April 25, 1995) (in progress, Alexey Gorgurov)

- Digital Filters, Part I (April 27, 1995) (ready)

- Digital Filters, Part II (April 28, 1995)

- Digital Filters, Part III (May 2, 1995)

- Digital Filters, Part IV (May 4, 1995)

- Simulation, Part I (May 5, 1995) (in progress)

- Simulation, Part II (May 9, 1995) (ready)

- Simulation, Part III (May 11, 1995)

- Fiber Optics (May 12, 1995) (in progress)

- Computer Aided Instruction (May 16, 1995) (in progress)

- Mathematics (May 18, 1995). Translation: Chapter 23. Mathematics

- Quantum Mechanics (May 19, 1995). Translation: Chapter 24. Quantum Mechanics

- Creativity (May 23, 1995). Translation: Chapter 25. Creativity

- Experts (May 25, 1995). Translation: Chapter 26. Experts

- Unreliable Data (May 26, 1995) (in progress)

- Systems Engineering (May 30, 1995). Translation: Chapter 28. System Engineering

- You Get What You Measure (June 1, 1995) (in progress)

- How Do We Know What We Know (June 2, 1995)

- You and Your Research (June 6, 1995). Translation: You and Your Work


Source: https://habr.com/ru/post/346566/
