A competition track on extracting word senses from texts and resolving lexical ambiguity

Every year, Russia hosts its largest computational linguistics conference, “Dialogue”, where experts discuss methods for computer analysis of the Russian language, assess the state of the art in computational linguistics and set directions for its development. Every year, as part of “Dialogue”, a series of competitions on automatic processing of Russian is organized: Dialogue Evaluation. In this post, we describe how Dialogue Evaluation is organized, look in more detail at how one of its tracks, RUSSE, is run, and explain what awaits its participants this year. Let's go.

Over the past seven years, there have been 13 Dialogue Evaluation contests on various topics: from the competition of morphological analyzers to the campaign to assess the quality of machine translation systems. All of them resemble SemEval international text analysis system competitions, but with a focus on the features of word processing in Russian, a rich morphology or freer than in English language order. The tasks for Dialogue Evaluation in structure are similar to those for Kaggle in data analysis, SemEval in computational linguistics, TREC in information retrieval, and ILSVRC in image recognition.

')

The participants of the competition are given a task that needs to be solved within the stipulated time frame (usually within a few weeks). At SemEval and Dialogue Evaluation competitions are held in two stages. First, participants receive a task description and a training sample, which can be used to develop methods for solving the problem and assess the quality of the methods obtained. For example, in the 2015 track, there were pairs of semantically close words that participants could use to develop models of vector representations of words. Organizers discuss the list of external resources that can or cannot be used. At the second stage, participants receive a test sample. They should apply to it the models developed at the first stage. Unlike the training sample, the test does not contain any markup. The markup of the test sample at this stage is available only to the organizers, which guarantees the integrity of the competition. As a result, participants send their decisions on a test sample to the organizers, who evaluate the results and publish the rank of the participants.

As a rule, after the end of the competition test samples lay out in public access. They can be used in further research. If the competition is held within the framework of a scientific conference, participants can publish reports on participation in the conference proceedings.

RUSSE - competitions for evaluating computational lexical semantics methods for the Russian language

RUSSE (Russian Semantic Evaluation) - a series of events for the systematic evaluation of methods of computational lexical semantics of the Russian language. The first RUSSE competition took place in 2015 during the conference “Dialogue” and was devoted to the comparison of methods on how to determine the semantic similarity of words . To assess the quality of distributive semantics models, for the first time, data sets in Russian were created, similar to widely used datasets in English, such as WordSim353 . More than ten teams evaluated the quality of such models of vector representations of words for the Russian language, like word2vec and GloVe .

The second RUSSE competition will take place this year. It will focus on evaluating vector representations of word meanings ( word sense embeddings ) and other models for extracting meanings and resolving lexical ambiguity ( word sense induction & disambiguation ).

RUSSE 2018: extracting word meanings from texts and resolving lexical polysemy

Many words of a language have several meanings. However, simple models of vector representations of words, such as word2vec, do not take this into account and mix up different meanings of the word in one vector. This problem is designed to solve the problem of extracting the values of words from texts and automatically detect the meanings of an ambiguous word in the body of texts. As part of the competition, SemEval investigated methods for automatically extracting the meanings of words and resolving lexical ambiguity for Western European languages - English, French and German. In this case, a systematic assessment of such methods for the Slavic languages was not carried out. The 2018 competition will attract the attention of researchers to the problem of automatic resolution of lexical ambiguity and will reveal effective approaches to solving this problem using the Russian language as an example.

One of the main difficulties in processing Russian and other Slavic languages is the absence or limited availability of high-quality lexical resources, such as WordNet for English. We believe that the results of RUSSE will be useful for the automatic processing of not only Slavic languages, but also other languages with limited lexical-semantic resources.

Task Description

The participants of RUSSE 2018 are invited to solve the problem of clustering short texts. In particular, at the testing stage, participants receive a set of ambiguous words, for example, the word “lock”, and a set of text fragments (contexts) in which target ambiguous words are mentioned. For example, "Vladimir Monomakh's lock in Lyceche" or "movement of the bolt with the key in the lock." Participants must cluster the resulting contexts so that each cluster corresponds to a separate word value. The number of values and, accordingly, the number of clusters is not known in advance. In this example, it is necessary to group contexts into two clusters, corresponding to two meanings of the word “lock”: “a device preventing access to somewhere” and “structure”.

For the competition, the organizers have prepared three sets of data from different sources. For each such set, you need to fill in the column “predicted value identifier” and upload the file with answers using the CodaLab platform. CodaLab gives the participant the opportunity to immediately see their results calculated on the part of the test dataset.

The task posed at this competition is close to the task formulated for the English language at the SemEval-2007 and SemEval-2010 competitions. Note that in tasks of this type the participants are not provided with a reference list of word meanings - the so-called. inventory of values . Therefore, to mark contexts, a participant can use arbitrary identifiers, for example, lock # 1 or lock (device).

Main stages of the competition

From December 15 to January 15, participants can download the results of solving the problem on the CodaLab platform. In total, it is proposed to mark out three sets of data, designed in the form of separate tasks on CodaLab :

The competition offers three data sets for building models based on different shells and word value inventories described in the table below:

The first data set ( wiki-wiki ) uses as the word meanings the division proposed in Wikipedia; contexts are taken from Wikipedia articles. The bts-rnc data set uses the Big Dictionary of the Russian Language as an inventory of word meanings, edited by S. A. Kuznetsov ( BTS ); Contexts are from the Russian National Corps ( NCRF ). Finally, the active-dict uses the meanings of words from the Active Dictionary of the Russian Language, edited by Yu. D. Apresyan; Contexts are also taken from the Active Dictionary of the Russian language - these are examples and illustrations from the dictionary entries.

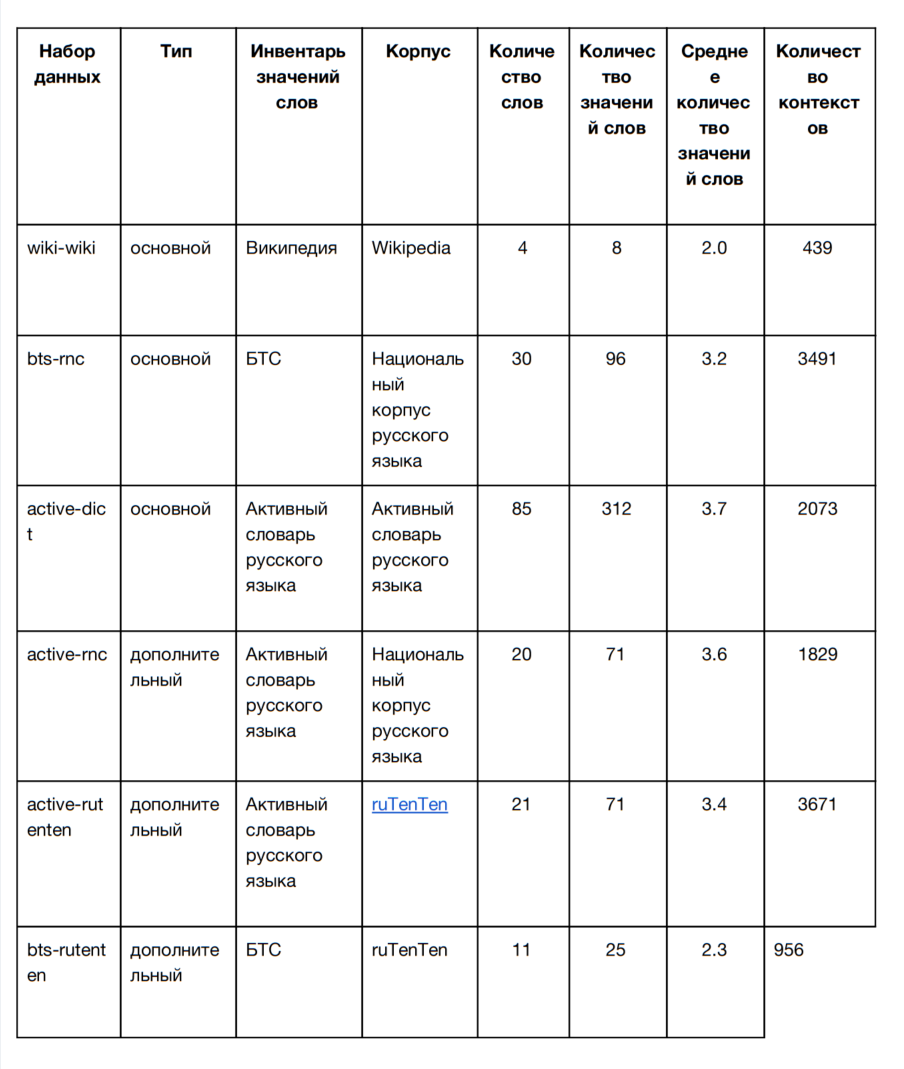

For training and system development, participants are provided with six data sets (three main and three additional), using different inventories of word meanings. All training data sets are listed in the table:

Test datasets (wiki-wiki, bts-rnc, active-dict) are designed in the same way as training ones. But they do not contain a field with the value of the target word - 'predicted value identifier'. This field is filled by participants. Their answers will be compared with the reference ones. Participating systems are compared based on a quality measure. Training and test datasets are tagged on the same principle, but the ambiguous target words in them are different.

Measure of quality

As in other similar competitions, for example, SemEval-2010 , in our competition the quality of the system is assessed by comparing its answers with the gold standard. The gold standard is the set of sentences in which people define the meaning of ambiguous target words. The target word in each sentence is manually assigned to one or another identifier from the specified inventory of word meanings. After each participant's system specifies the identifier of the predicted value of the target word in each sentence of the test sample, we compare the grouping of sentences by the word values from the participant's system with the gold standard. For comparison, we will use the adjusted Rand factor . Such a comparison can be considered a comparison of two clusterings .

Competition tracks

The competition includes two tracks:

Our approach to evaluating systems assumes that virtually any model of resolving lexical ambiguity can take part in the competition: approaches based on machine learning without a teacher (distributed vector representations, graph methods), and approaches based on lexical resources such as WordNet .

Reference systems

To make the task more understandable, we publish several ready-made solutions with which you can compare your results. For the track without the use of linguistic resources (knowledge-free), we recommend to pay attention to the system of resolving lexical ambiguity without a teacher, for example, AdaGram . For the track with the use of linguistic resources (knowledge-rich), we recommend using vector representations of word meanings built using existing lexical-semantic resources, for example, RuTez and RuWordNet . This can be done using methods such as AutoExtend .

Recommendations to participants

Training datasets have already been published. For a starting point, you can take our models published in the repository on GitHub (there is a detailed guide), and refine and improve them. Please follow the instructions, but do not be afraid to ask questions - the organizers will be happy to help!

Discussion and publication of results

Participants are encouraged to write an article about their system and submit it to

international conference on computational linguistics "Dialogue 2018" . The proceedings of this conference are indexed by Scopus. Within the special section of this conference the results of the competition will be discussed.

The organizers

The competition organizers will be happy to answer your questions in the group on Google and on Facebook . More information is provided on the competition page .

Sponsors and partners

Dialogue Evaluation - competitions for evaluating methods of Russian text analysis

Over the past seven years, there have been 13 Dialogue Evaluation competitions on various topics, ranging from a competition of morphological analyzers to a campaign evaluating machine translation systems. All of them resemble the international SemEval competitions for text analysis systems. However, they focus on the specifics of processing Russian, such as its rich morphology and its freer word order compared with English. In structure, Dialogue Evaluation tasks are similar to Kaggle competitions in data analysis, SemEval in computational linguistics, TREC in information retrieval and ILSVRC in image recognition.


Participants are given a task that must be solved within a set time frame, usually a few weeks. SemEval and Dialogue Evaluation competitions are held in two stages. In the first stage, participants receive a task description and a training sample. They use these to develop methods for solving the task and to assess the quality of those methods. For example, in the 2015 track, the training data consisted of pairs of semantically similar words, which participants could use to develop vector representation models of words. The organizers specify which external resources may or may not be used. In the second stage, participants receive a test sample and apply the models developed in the first stage to it. Unlike the training sample, the test sample contains no annotation. At this stage, the gold annotation is available only to the organizers, which ensures the integrity of the competition. Finally, participants submit their predictions on the test sample to the organizers, who evaluate the results and publish the participants' ranking.

As a rule, the test samples are made publicly available after the competition ends, so they can be used in further research. If the competition is held as part of a scientific conference, participants can publish papers describing their systems in the conference proceedings.

RUSSE - competitions for evaluating computational lexical semantics methods for the Russian language

RUSSE (Russian Semantic Evaluation) is a series of events for the systematic evaluation of computational lexical semantics methods for Russian. The first RUSSE competition took place in 2015 at the “Dialogue” conference. It was devoted to comparing methods for measuring the semantic similarity of words. To evaluate distributional semantics models, Russian datasets were created for the first time, similar to widely used English datasets such as WordSim353. More than ten teams evaluated vector representation models of words for Russian, such as word2vec and GloVe.
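Similarity datasets of the WordSim353 kind are commonly scored in two steps. First, the model computes a similarity for each word pair, often the cosine similarity between the two word vectors. Then those scores are correlated with human judgments using Spearman's rank correlation. Below is a minimal sketch under exactly those assumptions. The vectors and human scores are invented toy data, not RUSSE data, and the sketch is not necessarily the procedure RUSSE 2015 used.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the two rank vectors."""
    rx, ry = ranks(x), ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical toy embeddings and human similarity scores (0-10 scale).
vectors = {
    "кошка": [0.9, 0.1, 0.0],       # cat
    "собака": [0.8, 0.2, 0.1],      # dog
    "машина": [0.1, 0.9, 0.2],      # car
    "автомобиль": [0.1, 0.85, 0.2], # automobile
}
pairs = [("кошка", "собака", 7.5), ("машина", "автомобиль", 9.2), ("кошка", "машина", 1.0)]

model_scores = [cosine(vectors[a], vectors[b]) for a, b, _ in pairs]
human_scores = [h for _, _, h in pairs]
print(round(spearman(model_scores, human_scores), 3))
```

Here the model orders the three pairs exactly as the human scores do, so the correlation is 1.0. Rank correlation is used because only the ordering of pairs matters, not the scale of the similarity values.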

The second RUSSE competition will take place this year. It will focus on evaluating vector representations of word senses (word sense embeddings) and other models for extracting word senses and resolving lexical ambiguity (word sense induction & disambiguation).

RUSSE 2018: extracting word senses from texts and resolving lexical ambiguity

Many words have several meanings. However, simple vector representation models of words, such as word2vec, do not take this into account and mix the different senses of a word into a single vector. The task of this competition is to extract word senses from texts and to automatically detect the senses of an ambiguous word in a text corpus. Methods for automatically extracting word senses and resolving lexical ambiguity have been studied in SemEval competitions for Western European languages such as English, French and German. However, no systematic evaluation of such methods has been carried out for Slavic languages. The 2018 competition aims to draw researchers' attention to automatic resolution of lexical ambiguity and to identify effective approaches to this problem, using Russian as an example.

One of the main difficulties in processing Russian and other Slavic languages is the absence or limited availability of high-quality lexical resources, such as WordNet for English. We believe that the results of RUSSE will be useful for the automatic processing of not only Slavic languages, but also other languages with limited lexical-semantic resources.

Task Description

Participants of RUSSE 2018 are invited to solve a short-text clustering problem. At the testing stage, participants receive a set of ambiguous words, for example, the Russian word “замок” (zamok), which means both “castle” and “lock”. They also receive a set of text fragments (contexts) in which the target ambiguous words occur, for example, “Vladimir Monomakh's castle in Lyubech” or “movement of the bolt with the key in the lock”. Participants must cluster these contexts so that each cluster corresponds to a separate word sense. The number of senses, and hence the number of clusters, is not known in advance. In this example, the contexts should be grouped into two clusters corresponding to the two senses of “замок”: “a device that prevents access somewhere” (lock) and “a structure” (castle).
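To make the clustering formulation concrete, here is a deliberately simple sketch of word sense induction with an unknown number of clusters. It represents each context as a bag of words and computes Jaccard overlap between contexts. Any two contexts whose overlap exceeds a threshold are linked (single-linkage clustering via union-find). The ignore list, the threshold value and the example contexts are hypothetical. Real systems would use lemmatization and dense context representations rather than raw word overlap.

```python
import itertools

# Hypothetical list of words to ignore: a few stopwords plus the inflected
# forms of the target word. A real system would lemmatize instead.
IGNORE = {"в", "на", "над", "замок", "замке"}


def features(context):
    """Bag of context words, without stopwords and target-word forms."""
    return {w for w in context.lower().split() if w not in IGNORE}


def jaccard(a, b):
    """Share of words two contexts have in common (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def induce_senses(contexts, word, threshold=0.1):
    """Single-linkage clustering with a similarity threshold.

    Any two contexts more similar than `threshold` end up in the same cluster,
    so the number of clusters (senses) is not fixed in advance.
    """
    parent = list(range(len(contexts)))  # union-find forest

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    feats = [features(c) for c in contexts]
    for i, j in itertools.combinations(range(len(contexts)), 2):
        if jaccard(feats[i], feats[j]) > threshold:
            parent[find(i)] = find(j)

    # Turn each cluster into an arbitrary sense label such as "замок#1".
    cluster_ids, labels = {}, []
    for i in range(len(contexts)):
        root = find(i)
        cluster_ids.setdefault(root, len(cluster_ids) + 1)
        labels.append(f"{word}#{cluster_ids[root]}")
    return labels


contexts = [
    "замок князя стоит на холме над рекой",  # the prince's castle stands on a hill above the river
    "князь построил замок на холме",         # the prince built a castle on a hill
    "ключ повернулся в замке двери",         # the key turned in the door lock
    "вставил ключ в замок двери",            # (he) put the key into the door lock
]
print(induce_senses(contexts, "замок"))  # ['замок#1', 'замок#1', 'замок#2', 'замок#2']
```

The threshold, not a preset cluster count, determines how many senses come out. Raising it to 0.9 in this toy example leaves every context in its own cluster.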

For the competition, the organizers have prepared three datasets from different sources. For each dataset, participants fill in the “predicted sense identifier” column and upload the file with their answers to the CodaLab platform. CodaLab lets participants immediately see their scores computed on part of the test dataset.

The task of this competition is close to the tasks formulated for English at SemEval-2007 and SemEval-2010. Note that in tasks of this type, participants are not given a reference list of word senses (a so-called sense inventory). Therefore, participants can label contexts with arbitrary identifiers, for example, lock#1 or lock (device).
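Preparing a submission then amounts to grouping the rows of a dataset by target word, clustering each word's contexts, and writing the labels into the prediction column. The sketch below assumes a tab-separated file with `word`, `context` and `predict_sense_id` columns. These column names are an assumption to be checked against the guide in the task repository. `fill_predictions` and `one_sense_per_word` are hypothetical helper names, and the sample uses a reduced set of columns for brevity.

```python
import csv
import io
from collections import defaultdict


def fill_predictions(tsv_text, cluster_fn):
    """Fill the (assumed) predict_sense_id column of a tab-separated dataset.

    `cluster_fn(word, contexts)` must return one sense label per context;
    labels only need to be consistent within a word, e.g. "замок#1".
    """
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t")
    rows = list(reader)

    # Group rows by target word: senses are induced separately for each word.
    by_word = defaultdict(list)
    for row in rows:
        by_word[row["word"]].append(row)

    for word, word_rows in by_word.items():
        labels = cluster_fn(word, [row["context"] for row in word_rows])
        for row, label in zip(word_rows, labels):
            row["predict_sense_id"] = label

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, delimiter="\t", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)  # the original row order is preserved
    return out.getvalue()


def one_sense_per_word(word, contexts):
    """Trivial baseline: every context of a word goes into a single cluster."""
    return [f"{word}#1"] * len(contexts)


# Hypothetical sample with a reduced set of columns.
sample = (
    "context_id\tword\tpredict_sense_id\tcontext\n"
    "1\tзамок\t\tзамок князя стоит на холме\n"
    "2\tзамок\t\tключ повернулся в замке\n"
)
print(fill_predictions(sample, one_sense_per_word))
```

Any function with the same `(word, contexts) -> labels` signature can replace the one-cluster baseline. When reading and writing real files, open them with `encoding="utf-8"` and `newline=""`, as the `csv` module expects.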

Main stages of the competition

- November 1, 2017 - publication of the training data

- December 15, 2017 - release of the test data

- February 1, 2018 - deadline for submitting results for evaluation

- February 15, 2018 - announcement of the competition results

From December 15 to January 15, participants can upload their results to the CodaLab platform. In total, participants are asked to annotate three datasets, each set up as a separate task on CodaLab:

- based on Wikipedia,

- based on illustrations and examples from an explanatory dictionary,

- based on texts from the internet.

Datasets

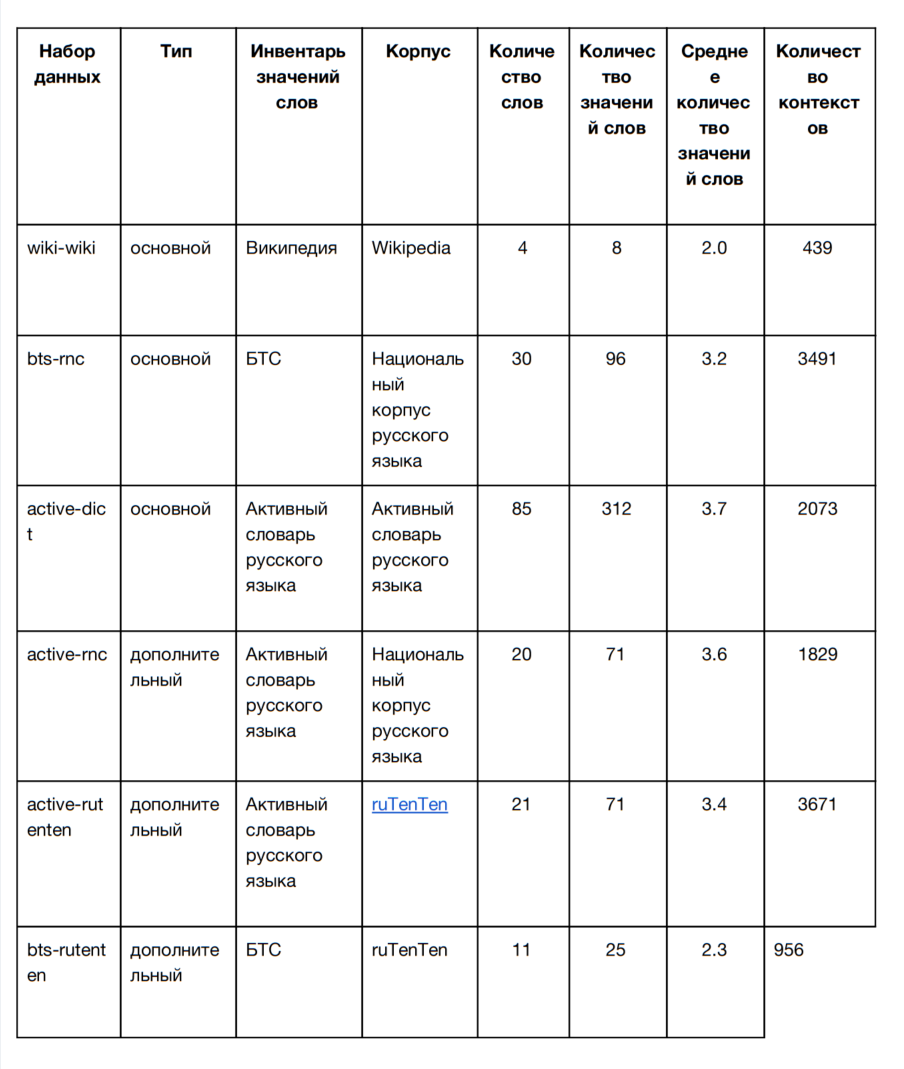

The competition offers three datasets for building models. They are based on different corpora and word sense inventories, as described in the table below:

The first dataset (wiki-wiki) uses the sense distinctions made in Wikipedia as its sense inventory, and its contexts are taken from Wikipedia articles. The bts-rnc dataset uses the Large Explanatory Dictionary of the Russian Language, edited by S. A. Kuznetsov (BTS), as its sense inventory, and its contexts come from the Russian National Corpus (RNC). Finally, active-dict uses the word senses of the Active Dictionary of the Russian Language, edited by Yu. D. Apresyan. Its contexts are also taken from the Active Dictionary: they are the examples and illustrations from its dictionary entries.

For training and system development, participants are provided with six datasets (three main and three additional) that use different sense inventories. All training datasets are listed in the table:

The test datasets (wiki-wiki, bts-rnc, active-dict) are structured in the same way as the training ones. However, they do not contain the sense of the target word (the “predicted sense identifier” field); participants fill in this field. Their answers are compared with the gold-standard ones, and participating systems are ranked by a quality measure. The training and test datasets are annotated according to the same principles but contain different ambiguous target words.

Measure of quality

As in other similar competitions, such as SemEval-2010, system quality is assessed by comparing the system's answers with a gold standard. The gold standard is a set of sentences in which human annotators have labeled the senses of ambiguous target words. The target word in each sentence is manually assigned an identifier from the given sense inventory. A participant's system assigns a predicted sense identifier to the target word in each sentence of the test sample. We then compare the grouping of sentences by sense produced by the system with the grouping in the gold standard, using the Adjusted Rand Index (ARI). Such a comparison can be viewed as a comparison of two clusterings.
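Because ARI compares groupings rather than label names, a system's arbitrary identifiers can be scored directly against gold sense identifiers. Below is a minimal sketch of the standard ARI formula, computed from pair counts in the contingency table of the two labelings. `weighted_ari` is a hypothetical aggregation helper: it assumes that ARI is computed per target word and averaged with weights equal to each word's number of contexts. It is not the official evaluation script.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(gold, pred):
    """Adjusted Rand Index between two labelings of the same contexts.

    Only the grouping matters, not the label names, so identifiers such as
    "замок#1" can be compared with gold sense identifiers.
    """
    total_pairs = comb(len(gold), 2)
    if total_pairs == 0:
        return 1.0
    same_both = sum(comb(c, 2) for c in Counter(zip(gold, pred)).values())
    same_gold = sum(comb(c, 2) for c in Counter(gold).values())
    same_pred = sum(comb(c, 2) for c in Counter(pred).values())
    expected = same_gold * same_pred / total_pairs
    max_index = (same_gold + same_pred) / 2
    if max_index == expected:
        # Only possible when both labelings are all-in-one-cluster or
        # all-singletons, i.e. the groupings are identical.
        return 1.0
    return (same_both - expected) / (max_index - expected)


def weighted_ari(gold_by_word, pred_by_word):
    """Hypothetical aggregation: per-word ARI weighted by number of contexts."""
    total = sum(len(labels) for labels in gold_by_word.values())
    return sum(
        len(gold_by_word[w]) * adjusted_rand_index(gold_by_word[w], pred_by_word[w])
        for w in gold_by_word
    ) / total


gold = ["castle", "castle", "lock", "lock"]
print(adjusted_rand_index(gold, ["замок#2", "замок#2", "замок#1", "замок#1"]))  # 1.0
print(adjusted_rand_index([1, 1, 2, 2], [1, 2, 1, 2]))  # about -0.5
```

A perfect grouping scores 1.0 whatever the labels are called. Random labelings score around 0, and groupings that systematically split the gold senses can score below 0.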

Competition tracks

The competition includes two tracks:

- In the track without linguistic resources (knowledge-free track), participants must cluster contexts by sense and assign an identifier to each sense using only a text corpus.

- In the knowledge-rich track, participants may use any additional resources, such as dictionaries, to identify the senses of the target words.

Our evaluation approach allows virtually any model of lexical ambiguity resolution to take part in the competition. This includes unsupervised machine learning approaches (distributional vector representations, graph-based methods) as well as approaches based on lexical resources such as WordNet.

Reference systems

To make the task easier to understand, we publish several ready-made solutions you can compare your results with. For the track without linguistic resources (knowledge-free), we recommend looking at unsupervised word sense disambiguation systems such as AdaGram. For the track with linguistic resources (knowledge-rich), we recommend vector representations of word senses built from existing lexical-semantic resources such as RuThes and RuWordNet. Such representations can be built with methods such as AutoExtend.
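Once sense vectors are available, one common way to label a context is to average the vectors of the context words. The context then gets the sense whose vector is most similar to that average by cosine similarity. The sense vectors might come from AdaGram, or be built from a lexical-semantic resource with a method like AutoExtend. The sketch below assumes that nearest-sense scheme. The 3-dimensional vectors and the sense labels are invented for illustration, and none of the function names belong to the AdaGram or AutoExtend APIs.

```python
import math


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def average(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def disambiguate(context_words, word_vectors, sense_vectors):
    """Return the sense id whose vector is closest to the context vector.

    Words without a vector are skipped; if none remain, return None.
    """
    known = [word_vectors[w] for w in context_words if w in word_vectors]
    if not known:
        return None
    context_vec = average(known)
    return max(sense_vectors, key=lambda s: cosine(context_vec, sense_vectors[s]))


# Hypothetical toy vectors: dimension 0 ~ "buildings", dimension 1 ~ "keys and doors".
word_vectors = {
    "князь": [0.9, 0.0, 0.1],  # prince
    "холм": [0.8, 0.1, 0.2],   # hill
    "ключ": [0.1, 0.9, 0.0],   # key
    "дверь": [0.0, 0.8, 0.1],  # door
}
sense_vectors = {
    "замок#castle": [1.0, 0.0, 0.1],
    "замок#lock": [0.0, 1.0, 0.1],
}
print(disambiguate(["ключ", "дверь"], word_vectors, sense_vectors))  # замок#lock
```

Averaging is the simplest way to build a context vector. Weighting words by their distance to the target, or by inverse frequency, are common refinements.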

Recommendations to participants

The training datasets have already been published. As a starting point, you can take our models from the GitHub repository (it includes a detailed guide) and refine and improve them. Please follow the instructions, and don't hesitate to ask questions: the organizers will be happy to help!

Discussion and publication of results

Participants are encouraged to write a paper about their system and submit it to the international conference on computational linguistics “Dialogue 2018”. The proceedings of this conference are indexed by Scopus. The results of the competition will be discussed in a special session of the conference.

The organizers

- Alexander Panchenko, University of Hamburg

- Konstantin Lopukhin, Scrapinghub Inc.

- Anastasia Lopukhina, Laboratory of Neurolinguistics, National Research University Higher School of Economics and Russian Academy of Sciences

- Dmitry Ustalov, University of Mannheim and IMM UB RAS

- Nikolai Arefyev, MSU and Samsung Research Center

- Natalia Lukashevich, MSU

- Alexey Leontyev, ABBYY

Contacts

The competition organizers will be happy to answer your questions in the Google group and on Facebook. More information is available on the competition page.

Sponsors and partners

Source: https://habr.com/ru/post/346550/
