Docker in production: an update

Translator's note: the post translated here was written on February 23, 2017, as a follow-up to the original post "Moby / Docker in production. A history of failure", whose translation (by olegchir) sparked lively discussion here on Habr. Please keep the date in mind as you read: the original text was written almost a year ago!

You can relate to the text in different ways. Over your morning coffee, try looking at yourself through the prism of the author's perception, and think about how he, with his experience and attitude to work, would have reacted on hearing this or that piece of advice about Docker.

The previous article, "Moby / Docker in production. A history of failure", turned out to be a hit. Today, after long discussions, hundreds of reviews, thousands of comments, meetings with various people and major players, and even more experiments and failures, it is time to publish an updated picture.

We will look at the lessons learned from all the latest conversations and articles, but, to begin with, a clarification, a reminder, and a bit of context.

Disclaimer: intended audience

The large number of comments showed that the world is divided into 10 types of people:

1) Amateurs

Usually supports test or hobby projects with no real users. May consider running the Ubuntu beta normal, and calls anything "stable" obsolete.

You can't blame him: the code works on his machine.

2) Professionals

Supports critical systems for a real business with real users; is certainly accountable for their actions; probably gets phone calls when shit hits the fan.

... because it may not work in a system where another 586 million users log in.

What type of audience are you?

The border between these worlds is very thin; nevertheless, they obviously have very different standards and expectations.

One of the reasons I love the field of finance is that its risk culture is well developed. Contrary to popular belief, this does not mean a greater appetite for risk, but the habit of weighing possible risks against potential benefits.

Think about your own standards for a minute. What do you expect to achieve with Docker? What will you lose if every system it runs on fails and the mounted storage volumes are corrupted? This is an important factor that can influence your choice.

I was pushed to publish the last article by a conversation with a guy from a certain financial company who asked what I thought about Docker, because they were considering using it. Among other things, his company, and this guy in particular, manages systems that handle trillions of dollars, including the pensions of millions of Americans.

Docker is absolutely not ready to handle my mom's pension; how can anyone even think otherwise??? Well, I suppose the experience gained with Docker was not well documented.

What do you need to be sure of before you start using Docker?

As you should know by now, Docker is very sensitive to the kernel, host, and file system it runs on. Choose the wrong combination and you will get kernel panics, file system corruption, Docker daemon hangs, and so on.
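
Since the outcome depends on the exact combination, it helps to record that combination before comparing notes with anyone else. Below is a minimal sketch, not a tool from the original article: the helper names are hypothetical, and it assumes the `docker` CLI is on the PATH (the storage driver is simply reported as unknown if it is not).

```python
import platform
import subprocess


def parse_kernel_release(release):
    """Return (major, minor) from a release string such as '3.16.0-4-amd64'."""
    numbers = []
    for part in release.split(".")[:2]:
        digits = ""
        for ch in part:
            if not ch.isdigit():
                break  # stop at suffixes such as '-rc1' or '+'
            digits += ch
        numbers.append(int(digits) if digits else 0)
    while len(numbers) < 2:
        numbers.append(0)
    return tuple(numbers)


def docker_storage_driver():
    """Ask the local Docker daemon for its storage driver; None if unavailable."""
    try:
        result = subprocess.run(
            ["docker", "info", "--format", "{{.Driver}}"],
            capture_output=True, text=True, timeout=10, check=True,
        )
    except (OSError, subprocess.SubprocessError):
        return None
    return result.stdout.strip() or None


def environment_fingerprint(release, driver):
    """Hypothetical helper: summarize the kernel / storage driver combination."""
    major, minor = parse_kernel_release(release)
    return {
        "kernel_release": release,
        "kernel_version": f"{major}.{minor}",
        "storage_driver": driver or "unknown",
    }


if __name__ == "__main__":
    print(environment_fingerprint(platform.release(), docker_storage_driver()))
```

Attaching this fingerprint to every crash report makes it easier to tell whether someone else's "works for me" applies to your environment at all.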

I had time to collect feedback on various environments and to test a couple more myself.

We will look at the results of the research: what was found to work reliably, what does not work, what fails intermittently, and what fails epically.

Spoiler alert: in the case of Docker, nothing is guaranteed to work.

Disclaimer: understand the risks and consequences

I tend to trust my own standards (as a professional who has to handle real money) and follow the findings (as I trust reliable sources working with real-world systems).

For example, if a combination of OS and file system is marked "does not work: catastrophic file system failure with loss of the entire volume", it is (from my point of view) not ready for production, but it is quite suitable for a student who needs to run something once in a Vagrant virtual machine.

You may or may not face these problems. In any case, they are mentioned because their existence in the wild is confirmed by people who have encountered them. If your environment is close enough to the one these people use, you are on the right path to becoming the next witness.

The worst thing that can happen with Docker, and usually does, is that everything is fine during testing and the problems emerge much later, after rollout, when you can no longer simply roll everything back.

CoreOS

CoreOS is an OS that can only run containers and is intended solely for running containers.

In the last article, I concluded that CoreOS may be the only system on which Docker can run successfully. This statement may or may not be true.

We abandoned the idea of using CoreOS.

First, the main advantage of Docker is the unification of development and production environments. Having a separate OS in production just to run containers completely defeats that advantage.

Second, Debian (we were on Debian) announced its next major release for the first quarter of 2017. Since understanding the current system and migrating everything to CoreOS takes a lot of effort, with no guarantee of success, it was much more sensible to wait for the next Debian release.

CentOS / RHEL

CentOS / RHEL 6

Docker on CentOS / RHEL 6 - "will not work: there are known problems with the file system, up to and including complete loss of data on the volume"

- Various known problems with the devicemapper driver.

- Critical issues with LVM volumes in conjunction with devicemapper, resulting in data corruption, container crashes, and Docker daemon hangs that require a hard reset.

- Docker packages are not maintained for this distribution. Many critical bug fixes released in the CentOS / RHEL 7 packages were never backported to the CentOS / RHEL 6 packages.

The only sane way to switch to Docker in a large company still running RHEL 6 => don't do it!

CentOS / RHEL 7

It originally shipped with a 3.x kernel, into which RedHat backported features from the 4.x kernel that are mandatory for running Docker.

Sometimes this causes problems, because Docker cannot correctly detect the custom kernel version and the features present in it. As a result, Docker fails to apply the right system settings and crashes mysteriously, time after time. Each time, only the Docker developers themselves can resolve it, by publishing fixes that detect specific kernels by certain characteristics, and neither the timing nor the consistency of those fixes is guaranteed.
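
To illustrate why version numbers mislead on a kernel with backports, here is a small sketch contrasting a version gate with a direct feature probe. It is an illustration only and makes no claim about how Docker itself performs detection: the `(4, 0)` threshold and both function names are hypothetical, and the probe merely parses text in the format of `/proc/filesystems`.

```python
def naive_version_gate(kernel_version, minimum=(4, 0)):
    """Hypothetical check: accept only kernels at or above `minimum`."""
    return kernel_version >= minimum


def filesystem_registered(name, proc_filesystems_text):
    """Return True if `name` is listed in text formatted like /proc/filesystems.

    Each line is either '<tab>fsname' or 'nodev<tab>fsname', so the file
    system name is always the last whitespace-separated field.
    Caveat: a file system built as a module is listed only once the module
    has been loaded.
    """
    for line in proc_filesystems_text.splitlines():
        fields = line.split()
        if fields and fields[-1] == name:
            return True
    return False


# Sample text in /proc/filesystems format (made up for this example).
SAMPLE = "nodev\tsysfs\nnodev\tproc\n\txfs\nnodev\toverlay\n"

if __name__ == "__main__":
    # A 3.10 kernel fails the version gate even though overlay is present.
    print(naive_version_gate((3, 10)))               # False
    print(filesystem_registered("overlay", SAMPLE))  # True
```

On a real host the text would come from reading `/proc/filesystems`. The point is only that "3.10" says little about what a vendor kernel actually contains.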

There are various problems with using LVM volumes, depending on the OS version.

Otherwise, you may or may not get lucky, and your results may differ from mine.

Starting with CentOS 7.0, RedHat recommended some settings, but I can no longer find that page on their website. In any case, later versions contain many critical fixes, so you MUST always upgrade to the latest version.

Starting with CentOS 7.2, RedHat recommends and supports only XFS, and they set special configuration flags. AUFS is not available, OverlayFS is officially considered unstable, and BTRFS is in beta (technology preview).

RedHat employees admit that it is very difficult for them to provide a suitable working environment for Docker, and this is a serious problem because they resell Docker as part of their OpenShift solution. Try building a product on an unstable core!

If you like to play with fire, then this OS seems to be for you!

Please note that this is exactly the case where you probably want RHEL rather than CentOS, since you get timely updates and useful support.

Debian

Debian 8 jessie (stable)

The main reason for the problems we encountered was that, as described in the last article, we used Debian (stable) in production.

Roughly speaking, Debian froze the kernel at a version that does not support anything Docker requires, and the few components that are present contain bugs.

Docker on Debian is a solid "will not work": there are a large number of problems in the AUFS driver (and not only there) that typically crash the host and potentially destroy data, and this is only the tip of the iceberg.

Using Docker on Debian 8 is 100% guaranteed suicide, and has been since Docker was created a few years ago. It kills me that nobody documented this until today.

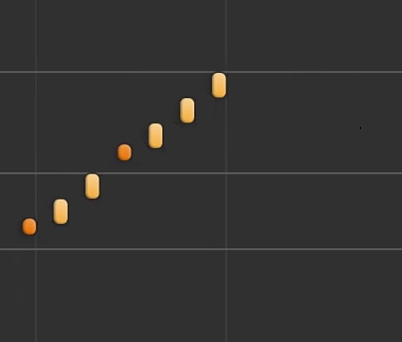

I wanted to show you a graph of AWS instances falling like dominoes, but I don’t have a suitable tool for monitoring and rendering, so instead I’ll give a piano chart that looks similar.

A typical Docker cascade failure on our test system.

A test slave fails ... the next one tries to pick up the load two minutes later ... and dies too ... This particular cascade took 6 attempts to get past the problem, which is somewhat more than usual but nothing extraordinary.

You should set up CloudWatch alarms to automatically restart dead hosts and send crash notifications.

By the way: you can also set up a CloudWatch alarm to automatically send a ready-made incident report to your regulator every time a problem lasts longer than 5 minutes.
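
The auto-restart alarm mentioned above can be sketched as follows. This is a minimal illustration, not the author's actual setup: the alarm name, period, and evaluation count are assumptions, the helper names are hypothetical, and the call itself needs the third-party `boto3` package plus AWS credentials. It uses the EC2 system status check metric together with the built-in EC2 recover action, which is only available for some instance configurations.

```python
def recovery_alarm_params(instance_id, region, sns_topic_arn=None):
    """Build arguments for CloudWatch put_metric_alarm (hypothetical helper).

    The alarm fires when the EC2 system status check fails for two
    consecutive one-minute periods, then triggers the EC2 recover action
    and, optionally, notifies an SNS topic.
    """
    actions = [f"arn:aws:automate:{region}:ec2:recover"]
    if sns_topic_arn:
        actions.append(sns_topic_arn)  # crash notification target
    return {
        "AlarmName": f"auto-recover-{instance_id}",  # assumed naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,             # assumption: 1-minute periods
        "EvaluationPeriods": 2,   # assumption: 2 failures in a row
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "AlarmActions": actions,
    }


def create_alarm(params, region):
    """Create the alarm; requires boto3 and valid AWS credentials."""
    import boto3  # third-party, imported lazily so the builder works without it

    boto3.client("cloudwatch", region_name=region).put_metric_alarm(**params)
```

Keeping the parameter builder separate from the API call makes the alarm definition easy to review and test without touching a real AWS account.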

Not to brag, but we got quite good at running Docker. Forget about Chaos Monkey, that is child's play; try running trading systems that process billions of dollars on Docker [1].

[1] Please do not do this. This is a terrible idea!

Debian 9 stretch

Debian stretch is scheduled to become stable in 2017 (note: the release was expected around the time I was writing and editing this article).

It will contain kernel 4.10, which turned out to be the last LTS published by that time.

At the time of release, Debian Stretch will become the most up-to-date stable operating system, and it is said to have all the great features needed to run Docker (of course, until the Docker requirements change).

It may solve many problems, and it may create a ton of new ones. We'll see how it goes.

Ubuntu

Ubuntu has always been more up to date than the usual server distributions.

Unfortunately, I don’t know any serious companies that run Ubuntu. This has become a source of big misunderstandings in the Docker amateur community, because developers and amateur bloggers test everything on the latest Ubuntu (not even LTS [2]), but have absolutely no idea about the operating systems used in the real world (RHEL, CentOS, Debian, or one of those exotic Unix / BSD / Solaris variants).

I cannot comment on the situation with LTS 16, since I do not use this release. It is the only distribution in which Overlay2 and ZFS are available, which gives a few more options to test, so maybe something working can be found?

The LTS 14 release is a definite "will not work": too old, lacks the necessary components.

[2] I received quite a few comments and unfriendly emails from people who advise “just” using the latest beta version of Ubuntu. As if migrating all production systems, changing the distribution, and switching to a beta platform that did not even exist at the time of the tests would be a reasonable solution.

Clarification: as I said, I am not going back to Docker, so I did not want to spend an hour searching for and collecting links, but it seems I will have to after all, since they are now reaching me in the most unusual forms.

I received a rather offensive letter from a guy who is clearly in the amateur league, because he writes that “any idiot can run Docker on Ubuntu” and then goes on to list the software packages and advanced system settings required to run Docker on Ubuntu, that is, things that supposedly "anyone can find in 5 seconds with Google".

His message is based on this bug report, which is in fact the first Google result for “Ubuntu docker not working” and “Ubuntu docker crash”: Ubuntu 16.04 install for 1.11.2 hangs.

This bug report, published in June 2016, points out that the installer on Ubuntu simply does not do its job, because it does not install some of the dependencies Docker needs to run. It is followed by lots of comments from various users on how to work around the problem, and a worthless #WONTFIX from the Docker developers.

The last answer comes from a Docker employee 5 months later, who writes that the Ubuntu installer will never be fixed, but that the next major version of Docker may use different components, so the problem may go away by itself.

A new version (v1.13) was recently released, 8 months after that answer, and it has not yet been confirmed whether the same error appears in it, but it is known for sure that it contains breaking changes in Docker itself that invalidate old settings.

This is quite typical of what to expect from Docker. Here is the checklist:

- Is everything so broken that Docker can't work at all? YES

- Is this broken for all users of, say, a large distribution? YES

- Is there a timely response from the developers confirming the problem? NO

- Does the response acknowledge the problem and indicate its severity? NO

- Are any fixes planned? NO

- Are there many ways around the problem that have different levels of danger and complexity? YES

- Will the problem ever be fixed? WHO KNOWS.

- Will the fix, if there ever is one, be backported? NEVER.

- Is the advice to "just upgrade to the latest version" a universal answer to all problem reports? Of course.

AWS Container Service

AWS has an AMI specifically designed to run Docker. It is based on Ubuntu.

As their internal sources confirm, AWS had serious trouble getting Docker to work even reasonably well.

As a result, they released an AMI that bundles a custom OS with a custom Docker package, custom bug fixes, and custom backports. Even after the release, they still put a lot of effort and ongoing testing into keeping it all working together.

If you are tied to Docker and are working on AWS, your only salvation may be to let AWS deal with the trouble for you.

Google Container Service

Google offers containers as a service. Google only exposes a Docker interface; the containers run on Google’s internal containerization technologies, which may not suffer from all the flaws of the Docker implementation.

Don't get me wrong! Containers are beautiful as a concept. The problem is not the theory but the practical implementation and the tools available to us (that is, Docker), which are experimental at best.

If you really want to play with Docker (or containers) and do not work on AWS, then Google’s option will be your only reliable choice, especially since it comes with Kubernetes for orchestration, which puts Google’s solution in a league of its own.

This option should still be considered experimental and regarded as playing with fire. It just so happens that Google's solution is the only one that can be trusted, and the only one that offers both containers and orchestration out of the box.

OpenShift

It is impossible to build a stable product on a broken core, but RedHat is trying.

From the feedback I have received, they are doing their best to mitigate Docker's problems, with mixed success. Your mileage may vary.

Considering that the latter two options are aimed at large companies that have something to lose, I really doubt the wisdom of such a choice (that is, choosing something built on top of Docker).

It is best to try "regular" clouds: AWS, Google, or Azure. Virtual machines plus the services offered by the providers give you roughly 90% of what Docker can do, plus 90% of what Docker cannot. This is the best long-term strategy.

Most likely, the reason you would want to run OpenShift is that you cannot use a public cloud. Well, that is quite a hard road! (Good luck with that. Please leave a comment about your experience.)

Summary

- CentOS / RHEL: Russian Roulette.

- Debian: Jump out of the plane naked.

- Ubuntu: Not sure. Amendment: LOL.

- CoreOS: Not worth the effort.

- AWS Containers: Your only salvation if you have to deal with both Docker and AWS at once.

- Google Containers: The only practical way to launch Docker, which is not completely insane.

- OpenShift: Not sure. It depends on how well support and engineers can work.

Business perspective

Docker does not have a business model and cannot be monetized. It is fair to say that they release on every platform (including Mac / Windows) and integrate all sorts of features (Swarm) in a desperate attempt to: 1) prevent any competitor from having any distinguishing feature; 2) make everyone use Docker and Docker tools exclusively; 3) lock customers fully into their ecosystem; 4) publish a ton of news, articles, and releases along the way, fueling the hype; and 5) justify their own market valuation.

It is extremely difficult to grow both horizontally and vertically at once across several products and markets (leaving aside whether this approach is a reasonable or sustainable business decision, which is a separate matter).

At the same time, competitors, namely Amazon, Microsoft, Google, Pivotal, and RedHat, compete in a variety of ways and earn more money on containers than Docker itself, while CoreOS works on an operating system (CoreOS) and a competing containerization technology (Rocket).

As a result, many large market players with serious firepower are gearing up for active, decisive competition with Docker. None of them has any interest in letting Docker lock anyone in. Individually and collectively, they have an interest in seeing Docker die and be replaced by something else.

Call it a container war. Let's see where it leads.

Google is currently in the lead: they are replacing Docker, and they are the only ones who can provide out-of-the-box orchestration (Kubernetes).

Conclusion

Did I say that Docker is an unstable, "toy" project?

Of course, someone will say that the problems described never existed, or are already in the past. They are not in the past; the problems are very relevant and very real. Docker suffers from critical bugs that make it unsuitable for use on all major distributions, bugs that have persisted for years, some of them still present today.

If you search Google for a specific “Docker + version + file system + OS” combination, you will find links to many problems from any point in the past, going all the way back to Docker's birth. It is a mystery how something can fail this much without anyone writing about it (in fact there were a few articles; they were simply buried under a mass of advertising and snap judgments). The last piece of software with this level of expectations and this level of failure was MongoDB.

I could not find anyone on the planet who uses Docker seriously, successfully, and without much hassle. The experience described in this article was paid for with the blood and sweat of employees and companies who diligently studied Docker while every second of downtime cost $1,000.

I hope we can learn from our own and others' mistakes, and not repeat them.

If you're wondering whether you should have adopted Docker years ago => The answer is "hell no, you dodged a bullet." You can tell your boss that (even today, Docker offers few benefits without orchestration around it, which is itself a rather experimental affair).

If you're wondering whether you should start using Docker today, even though your current ways of running your software work well and you have any expectations about quality => The reasonable answer is to wait until RHEL 8 and Debian 10 are released. Do not rush. Things must settle down, and packages should not evolve faster than the distributions they run on.

If you like to play with fire => look at Google Container Engine in Google Cloud. Definitely high risk, and the possible benefits are high too.

Would this article be more credible if I had linked numerous bug reports, screenshots of kernel panics, my own charts of system failures over a day, relevant forum posts, and private conversations on this topic? Probably.

Do I want to spend a few hundred more hours collecting this information again? Nope. I'd rather spend my evenings on Tinder than with Docker. Goodbye, Docker!

What's next?

Back to me. My action plan for managing containers and clouds had a big flaw that I overlooked: the average tenure at tech companies is still not measured in many years, so 2017 began with me being poached to a new place.

The bad news: no cloud and no Docker where I am going. No more news about breakthrough technology. You will have to figure things out yourselves.

The good news: no more “playing” with billions of other people’s dollars ... because I am moving up by at least 3 orders of magnitude! My "sandbox" will now involve the pensions of several million Americans, including some of the people who read this blog.

Rest assured: your pension is in good hands!


Source: https://habr.com/ru/post/346430/
