How to implement a Secure Development Lifecycle without going gray: Yandex's story from ZeroNights 2017

On November 16-17, the international information security conference ZeroNights 2017 was held in Moscow. This year Yandex, as usual, co-organized the event and gave several talks in the Defensive Track, a section dedicated to protecting services and infrastructure. In particular, we shared our experience of implementing a Secure Development Lifecycle (SDL) for a project as large and complex as Yandex.Browser.

In short, the SDL is a set of security controls applied at every stage of the product life cycle. The methodology has been around for quite some time. It was originally announced by Microsoft, and you can read about the canonical approach on their website, on Habr, and elsewhere on the Internet. The SDL reduces the likelihood of vulnerabilities, makes them as hard to exploit as possible, and speeds up their fixing. All of this makes the product more secure.

However, there is still a widespread opinion in the industry that for projects developed with Agile-based processes, implementing an SDL is a very difficult and almost unsolvable task, because numerous checks create a bottleneck and prevent developers from meeting deadlines. We will not argue with the first thesis, but we are ready to challenge the second one and share which controls we built into the browser development process and what difficulties we met along the way. If you missed our talk or want more details, read on.

Input data

To make things clearer, let us describe the input data for the problem we were solving. The SDL for the Browser was being introduced into the existing development process of a fairly large project. Yandex.Browser is built on one of the most advanced and secure platforms, Chromium. All versions of the browser, including the mobile one, share a single repository; the code base is approximately 10 gigabytes in size and includes components written in C++, JavaScript, Java and Objective-C. TeamCity with our own modifications is used as the build system. During product development, the team works on several types of tasks: product tasks (creating new functionality), technological tasks (improvements the user usually does not interact with directly, such as performance optimization or codec replacement) and infrastructure tasks (improving the browser build infrastructure).

It is also important that when the SDL implementation began, the product already had an established release cycle (a new release of the Browser comes out on average every three weeks), so the primary goal was not to break existing processes and not to make developers' lives much harder. That is why the first thing we did was decompose the existing development life cycle into stages and pick the ones best suited for security controls. The diagram below shows the result. The stages we consider optimal are highlighted in yellow: feature freeze is the point at which the list of new developments and features going into the release is settled, and code freeze is the point after which developers do not add new code to the release without a compelling reason.

Another feature of the Browser's development cycle is that, when working on product tasks, the team prepares two mandatory documents (in our case these are simply pages in the internal wiki):

- product description: it describes what problem the functionality should solve and how it should interact with the user, i.e. where exactly a button or input field should be and what size, which dialog the browser should show, etc.

- technical specification (TZ): it describes, with additional technical detail, exactly how the task is to be solved: what components should be implemented, how they should work "under the hood", etc.

The level of detail in these documents usually depends on the complexity of the work. From a security point of view, the technical specification is usually the more interesting of the two.

Secure development cycle

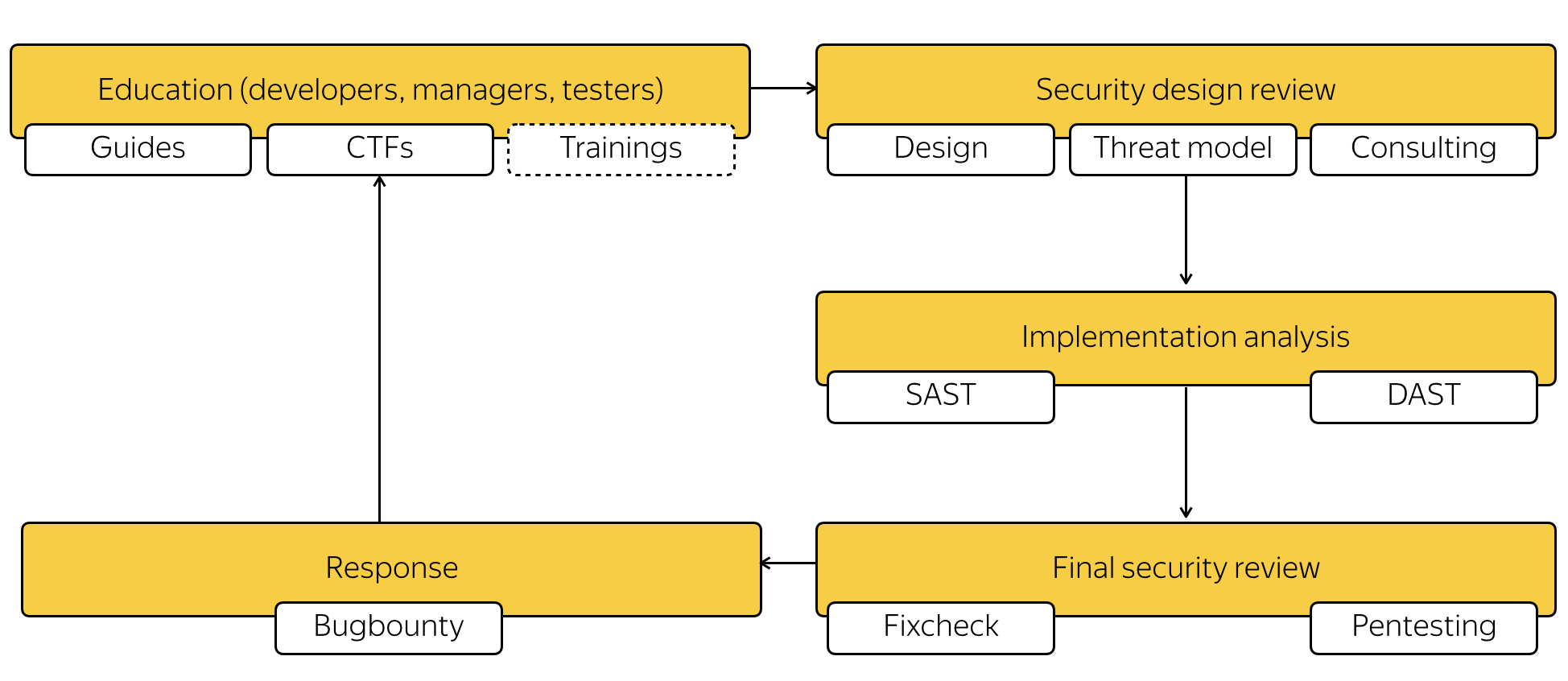

Then we began to design the process itself. Microsoft's canonical SDL seemed somewhat redundant for us, so in the end we settled on a cycle of 5 steps:

- education and training;

- security design review;

- implementation analysis;

- final security review (security audit);

- support (response / bug bounty).

Some of these controls are tied to the life cycle, in particular the design review, implementation analysis and final review. Others are not: training takes place independently of releases, and support is continuous. The design review and final security review are conducted for individual new product features, while implementation analysis applies to the release as a whole. The full scheme can be viewed below.

Covering the entire product with the SDL at once is practically unrealistic, so we started small and gradually expanded the coverage of checks. Our list of priorities now looks roughly like this:

- Features and changes directly related to security (for example, Wi-Fi protection or a new password manager).

- New mechanisms that are complex to implement (cloud document viewing, Turbo).

- Improvements and new mechanisms in the browser's web APIs.

- Anything that potentially affects the security or privacy of users.

Now let's take a closer look at the controls themselves. During the training phase, the Yandex product security team works with managers, developers, and testers. The goal is to explain the product's basic threat models to everyone involved in the process and to teach the team to deal with what makes those threats possible: vulnerabilities. This is one of the most important stages, because code is written by people and security depends directly on its quality. For developers, for example, we maintain a separate internal manual. It describes the basic principles of secure development, lists possible vulnerabilities for different types of applications, and shows typical examples of vulnerable code and their fixes. It also explains how to use various techniques and technologies that reduce the likelihood of a vulnerability being exploited (so-called mitigations). We talked in more detail about our documentation and the processes around it at OWASP Poland Day this year. There is a similar guide for testers: it lists a number of "markers" (properties of products and services) and explains how to connect our internal security analysis tools to a product. Note that this set of manuals is not specific to the Browser; it is shared across the entire company.

The design review is tied to the release cycle: it must be completed before a feature is assigned to a release and feature freeze occurs.

In this control, a member of the development team (the manager responsible for the feature) contacts the product security team in whatever way is convenient and hands over the development documents for analysis: the product description and the technical specification. If the security engineers see potential problems in the feature's architecture or behavior, they give their comments, and their requirements are added to the technical specification. In addition, if a feature is related to security or simply introduces new security threats, the security team develops a separate threat model for it, which is then used at later stages. In a number of cases these threats are also added to the main threat model.

Implementation analysis is the stage at which automated security controls run. Their task is to identify vulnerabilities, and they are applied not to individual features but to entire browser builds, so that they cover the product's general threat model. We have implemented 2 types of controls: static analysis (SAST) and dynamic analysis (DAST).

The static analysis process is divided into 2 subprocesses: analyzing the code with various static analyzers, and searching a vulnerability database for vulnerable third-party components. For the second task we built our own service. For the first, we use the well-known static analyzers Coverity and Clang Static Analyzer, as well as our own mechanisms for particular cases: for example, evaluating the safety of regular expressions, searching for secrets in repositories, or detecting unsafe internal API calls. In addition, we monitor the security situation of the Chromium platform, and although our version of Chromium usually lags only 3 weeks behind the release, we try to bring individual security patches into our code when critical vulnerabilities are found in Chromium.
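To make the second subprocess more concrete, here is a minimal sketch of checking bundled third-party components against a list of advisories. It is not a description of our internal service: the `Advisory` record, the component names, the version numbers and the advisory labels are all made up for illustration, and versions are assumed to be plain dotted numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    component: str   # third-party component name
    fixed_in: str    # first version that contains the fix
    identifier: str  # advisory label (made up for this example)


def parse_version(version: str) -> tuple:
    """Turn '1.2.10' into (1, 2, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_vulnerable(components: dict, advisories: list) -> list:
    """Return the advisory identifiers that apply to the bundled versions."""
    hits = []
    for advisory in advisories:
        bundled = components.get(advisory.component)
        if bundled is None:
            continue  # the component is not part of this build
        if parse_version(bundled) < parse_version(advisory.fixed_in):
            hits.append(advisory.identifier)
    return hits


# Hypothetical inventory and advisories, for illustration only.
bundled_components = {"libexample": "1.2.9", "libother": "3.0.0"}
known_advisories = [
    Advisory("libexample", "1.2.10", "EXAMPLE-0001"),
    Advisory("libother", "2.5.0", "EXAMPLE-0002"),
]
print(find_vulnerable(bundled_components, known_advisories))
```

Comparing parsed tuples rather than raw strings matters here: as strings, "1.2.9" would sort after "1.2.10" and the outdated component would be missed.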

Dynamic security analysis also applies to the assembly and includes additional steps:

- Running unit tests compiled with various sanitizers (ASan, MSan, UBSan, etc.); we call this stage sanity testing;

- Running fuzz tests (for this, our developers write dedicated fuzz tests based on libFuzzer).

Everything related to implementation analysis runs in TeamCity, both on the master branch and on RC / Beta builds, so that we can track the appearance of vulnerabilities. The results are monitored by both the developers themselves and the product security team. It is very important that all these processes finish before the next and final stage, which is tied to the development cycle.

This stage is the final security review, a control that is again applied to an individual feature. It confirms that the functionality was developed with the participation of the product security team, has gone through SAST and DAST, and that all major security issues have been resolved. If the feature is complex and clearly may affect user security, additional manual or automated testing (a pentest) is done at this stage. Typically, such a pentest assesses the likelihood of the threats identified in the feature's threat model.

Problems with implementing the controls and how we solved them

Now for the most interesting part: the problems we encountered. One of the most problematic stages for us was implementation analysis, in particular static code analysis. This stage is quite controversial when the results of DAST and SAST are compared (DAST finds reproducible problems, while SAST often finds only potential ones), but we decided to implement it anyway, because the main platform for our browser is Windows, and building an effective DAST for Windows is very hard. In addition, DAST usually requires developing fuzz tests and the infrastructure to run them, whereas SAST is relatively easy to run in a CI system. Regardless of the vendor, on our 10-gigabyte code base every analyzer had a signal-to-noise ratio of about 20/80 out of the box: only 20% of the analyzers' findings reflected real or at least potential security problems, and 80% were false alarms. We also ran into a code coverage problem: the browser is built with Ninja, and the build agents have our own set of compilers, which the static analyzer does not always intercept. We solved the coverage problem by adding extra configuration steps for the build agent in TeamCity, but the noise problem took much longer to fight.

We solved the excessive noise by tuning the rules of the static analyzers and showing the browser team only the most probable problems. We started with 2 dashboards of SAST results: one for developers and one for the security team. The product security team analyzed the SAST results, selected rules (or individual checker modules), and classified the findings of each rule as TP (True Positive) or FP (False Positive). From this, the precision metric P = TP / (TP + FP) was calculated for each rule / module. Rules whose precision exceeded the 0.7 threshold went to the developers' dashboard. Rules with poor precision were disabled, and additional information was sent to the developers of the SAST tools.
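The per-rule precision filter described above can be sketched as follows. This is an illustration under assumptions, not our actual tooling: the rule names and triage counts are invented, and treating "exceeded the threshold" as a strict comparison is our reading of the text.

```python
from collections import Counter

PRECISION_THRESHOLD = 0.7  # the threshold named in the text


def precision_by_rule(triaged):
    """triaged: iterable of (rule_name, verdict) pairs, verdict is 'TP' or 'FP'."""
    tp, fp = Counter(), Counter()
    for rule, verdict in triaged:
        if verdict == "TP":
            tp[rule] += 1
        elif verdict == "FP":
            fp[rule] += 1
        else:
            raise ValueError(f"unknown verdict: {verdict!r}")
    # Precision P = TP / (TP + FP), computed separately for every rule.
    return {rule: tp[rule] / (tp[rule] + fp[rule]) for rule in set(tp) | set(fp)}


def split_rules(precisions, threshold=PRECISION_THRESHOLD):
    """Return (rules shown to developers, rules kept off their dashboard)."""
    shown = sorted(rule for rule, p in precisions.items() if p > threshold)
    hidden = sorted(rule for rule, p in precisions.items() if p <= threshold)
    return shown, hidden


# Invented triage results, for illustration only.
triage = (
    [("uninitialized_variable", "TP")] * 9
    + [("uninitialized_variable", "FP")] * 1
    + [("use_after_free", "TP")] * 2
    + [("use_after_free", "FP")] * 3
)
precisions = precision_by_rule(triage)
shown, hidden = split_rules(precisions)
print(shown, hidden)
```

Keeping the triage verdicts per finding, rather than only the aggregated score, makes it possible to recompute precision after a rule is tuned.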

Below is a diagram that illustrates this process.

This let us identify the most effective types of SAST rules. For our toolset these are:

- uninitialized variables;

- integer overflow;

- null pointer dereference;

- dead code.

The most problematic turned out to be the rule types that interested us most:

- use after free;

- out of bounds.

Unfortunately, their precision on our code base is only about 30-50%. This is often because static analyzers get confused when analyzing the semantics of weak / shared pointers (which the Chromium code uses heavily); there were also problems with expanding the CHECK / DCHECK macros, which are likewise typical of Chromium.

Acting iteratively, we gradually improved both the overall SAST detection accuracy and the code coverage.

DAST had its own difficulties. The infrastructure for running the browser's unit tests already existed, so the first step was to run the unit tests built with various sanitizers. This immediately started finding problems in the product. The second step was writing fuzz tests for libFuzzer, and it required a significant investment from the development team. Fuzzing parameters deserve special attention: we actively use libFuzzer dictionaries and configure max_len for the mutated data individually, based on the size of the original data. Besides writing and running the fuzz tests themselves, you also need to monitor how well they perform. At production scale it is inconvenient to have libFuzzer mutate input data and search for crashes at the same time (it runs only until the first crash), so we split fuzzing into 2 steps:

- Generate new input data with the fuzzer, based on the existing inputs;

- Run all the collected inputs on a sanitizer build to search for crashes.
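As a rough sketch of how these two steps could be driven from a script, the helpers below only build the command lines. The libFuzzer flags `-dict`, `-max_len` and `-max_total_time` are real; everything else is an assumption made for the example: the binary and file names, the rule for deriving `max_len` from seed sizes, and the choice to replay one input per process so that one crash does not hide the others.

```python
def pick_max_len(seed_sizes, factor=2, floor=64):
    """Derive -max_len from the seed sizes (factor and floor are assumptions)."""
    return max(floor, factor * max(seed_sizes, default=0))


def generation_cmd(fuzzer, corpus_dir, dictionary, max_len, time_budget_s):
    """Step 1: let libFuzzer mutate the corpus and add new inputs to it."""
    return [
        str(fuzzer),
        f"-dict={dictionary}",
        f"-max_len={max_len}",
        f"-max_total_time={time_budget_s}",
        str(corpus_dir),
    ]


def replay_cmds(sanitizer_fuzzer, inputs):
    """Step 2: one run of the sanitizer build per input, with no mutation.

    Passing a file instead of a directory makes libFuzzer execute that input
    once; a separate process per input is an assumption of this sketch.
    """
    return [[str(sanitizer_fuzzer), str(path)] for path in inputs]


# Hypothetical names, for illustration only.
max_len = pick_max_len([100, 300])
step1 = generation_cmd("./url_fuzzer", "corpus/url", "url.dict", max_len, 600)
step2 = replay_cmds("./url_fuzzer_asan", ["corpus/url/a", "corpus/url/b"])
print(step1)
print(step2)
```

The resulting lists can be passed to `subprocess.run`; a non-zero exit code in step 2 would then point at the specific input that triggered the sanitizer.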

The second important question in fuzzing is when to stop. We use 2 basic conditions: a time limit and a coverage-based stop. Browser coverage is measured using llvm-cov.
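A minimal sketch of combining the two stop conditions is shown below. We assume here that "stop on coverage" means that coverage has stopped growing over the last few rounds; the time budget, the number of rounds and the sample coverage numbers are hypothetical.

```python
def should_stop(elapsed_s, coverage_history, time_budget_s=3600, plateau_rounds=3):
    """Decide whether a fuzzing job should stop.

    coverage_history holds one coverage measurement per finished round
    (for example, a covered-line count taken from an llvm-cov report).
    """
    # Condition 1: the time budget is exhausted.
    if elapsed_s >= time_budget_s:
        return True
    # Condition 2: coverage has not grown over the last plateau_rounds rounds.
    if len(coverage_history) <= plateau_rounds:
        return False  # not enough data to call it a plateau yet
    recent = coverage_history[-(plateau_rounds + 1):]
    return recent[-1] <= recent[0]


print(should_stop(4000, [10]))
print(should_stop(10, [100, 120, 120, 120, 120]))
print(should_stop(10, [100, 110, 120, 130]))
```

Checking the time limit first keeps the job bounded even when coverage keeps creeping up by small amounts.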

But implementation analysis is not the only problem area. Another potential bottleneck is the final review, which we call a security audit. At Yandex this SDL stage has existed for a long time, and not only for Yandex.Browser; it is automated as much as possible, and you can read more about it here. For the Browser, we made this process non-blocking for functionality that is not explicitly related to security. For most features it is essentially a checklist confirming that the feature has passed all of our controls. But if the feature is related to security, or vulnerabilities were found at earlier stages, the product security team checks that the vulnerabilities have been fixed. In some cases a quick pentest is also done against the threat model created specifically for the feature.

Conclusions and results

In this article we have described more than abstract security measures. Over the past year, these measures helped us find more than 50 security issues (not counting those reported to us through the Hunt for Errors or in cooperation with the CVE community). Our experience shows that launching an SDL for a large product is realistic, but along the way you run into a set of problems that are best solved gradually. On the process side, we recommend that anyone who wants to repeat our experience pay attention to several important things:

- when implementing an SDL, focus first on security-critical functionality;

- work with your developers, managers, and testers: people create your product, and both the quality of the final product and the workload of the product security team within the SDL processes depend on their skills and knowledge;

- keep your threat model up to date, and pay attention both to the basic problems described in popular manuals and to non-standard ones.

On the automated tooling side, we would also like to share some tips:

- out-of-the-box static code analyzers do not work as well as one would like; implement SAST if you understand that implementing DAST will be even harder;

- to get SAST precision to at least 70%, you need to tune the rules / fix the code-checking plugins themselves;

- SAST also copes poorly with the complex semantics of modern C++ standards; for example, weak / shared pointers became a headache for us;

- if you want to implement DAST, start by running unit tests under sanitizers; once the number of problems found decreases, you can move on to fuzzing;

- show your developers only the results of verified analyzers / rules, and act gradually; this will simplify the SDL rollout.

Building the SDL for Yandex.Browser took about a year. Some security controls are already fully debugged (for example, static code analysis), while others are still at the pilot and experiment stage (primarily fuzzing). We plan to add more controls, improve their quality and keep sharing our experience. Stay tuned!


Source: https://habr.com/ru/post/346426/
