Unlimited speech recognition, or how I convert voice messages to text in a bot

Hi, Habr. I usually write programs for non-speaking people, but this time I went to the other extreme and made a product for people who do speak. I want to describe the development of a VK bot that converts the voice messages sent to it into text. At first I used Yandex SpeechKit, but then I hit the daily limit on recognized units and switched to wit.ai. This article covers that switch, as well as a framework for building VK bots on Node.js and Google Dialogflow.

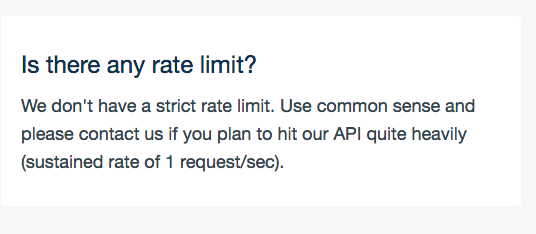

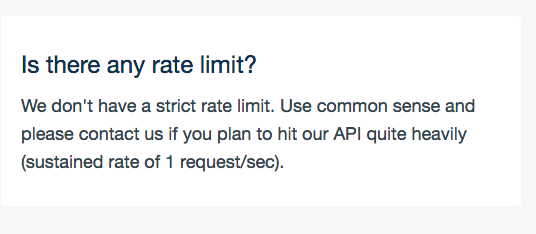

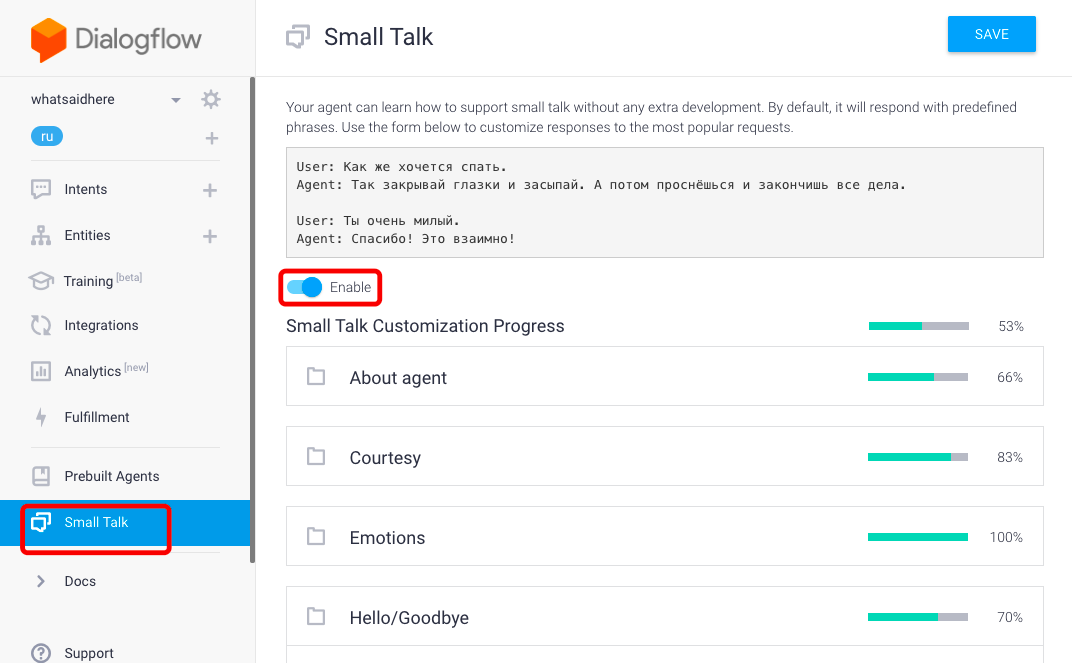

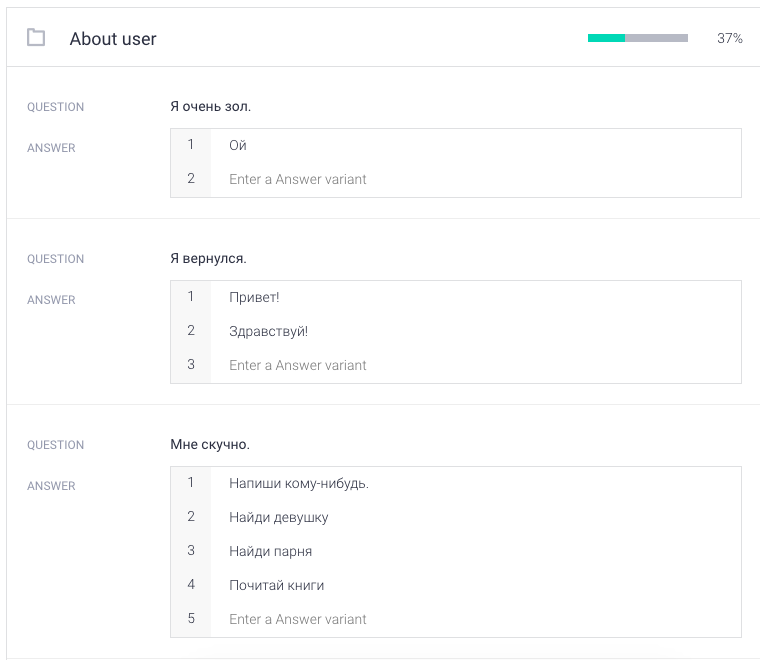

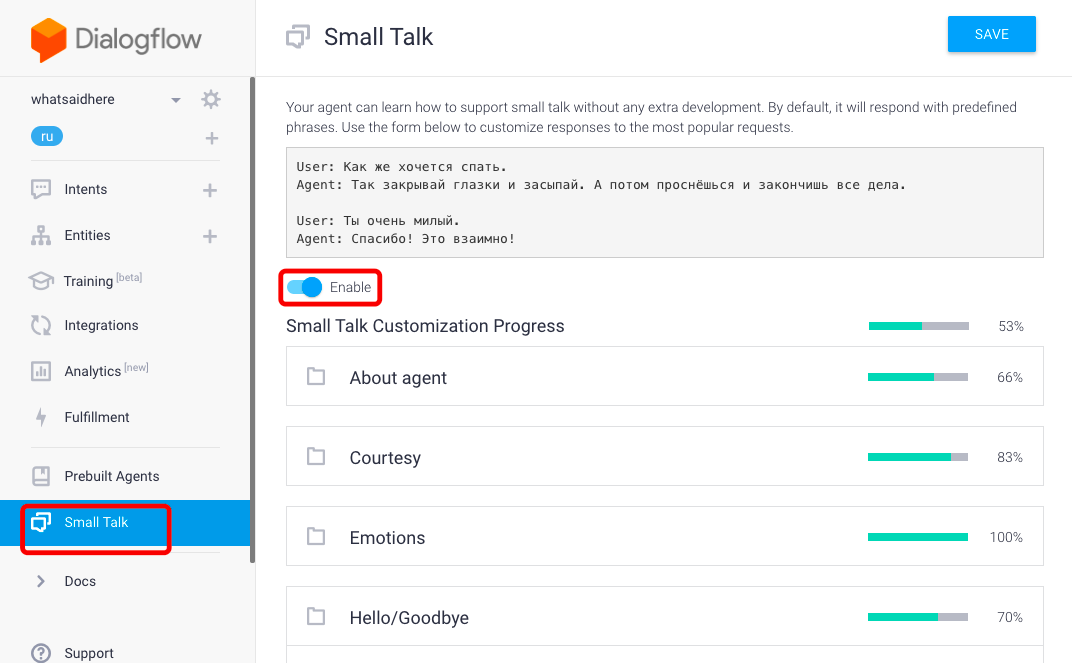

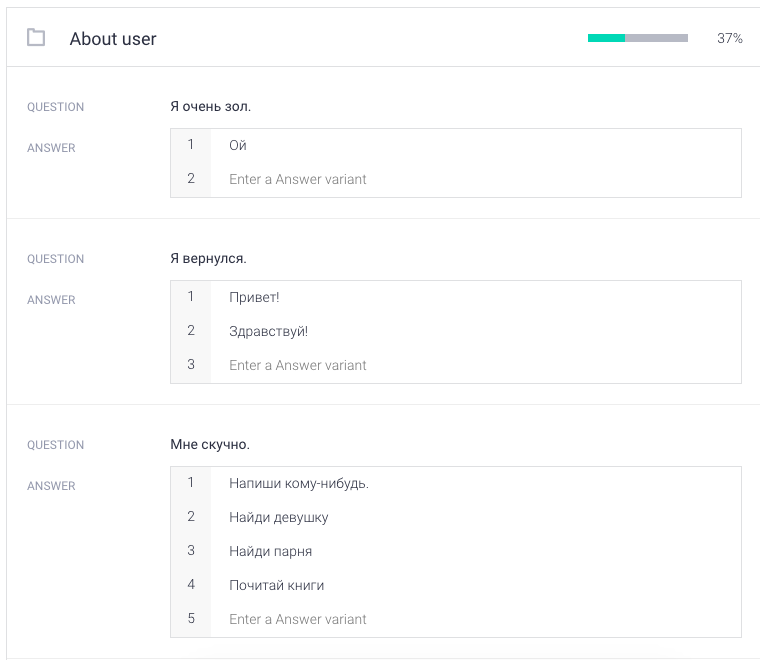

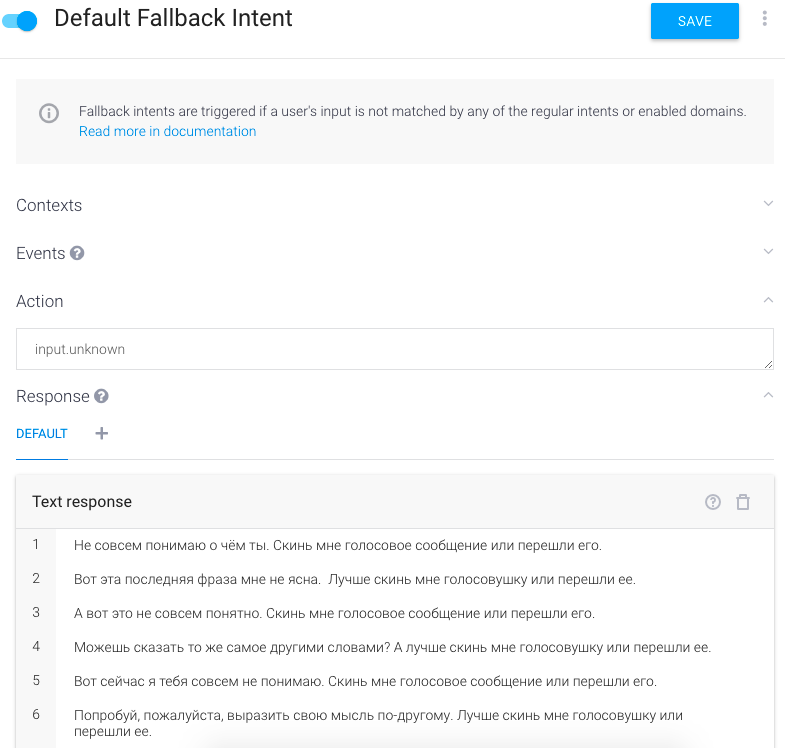

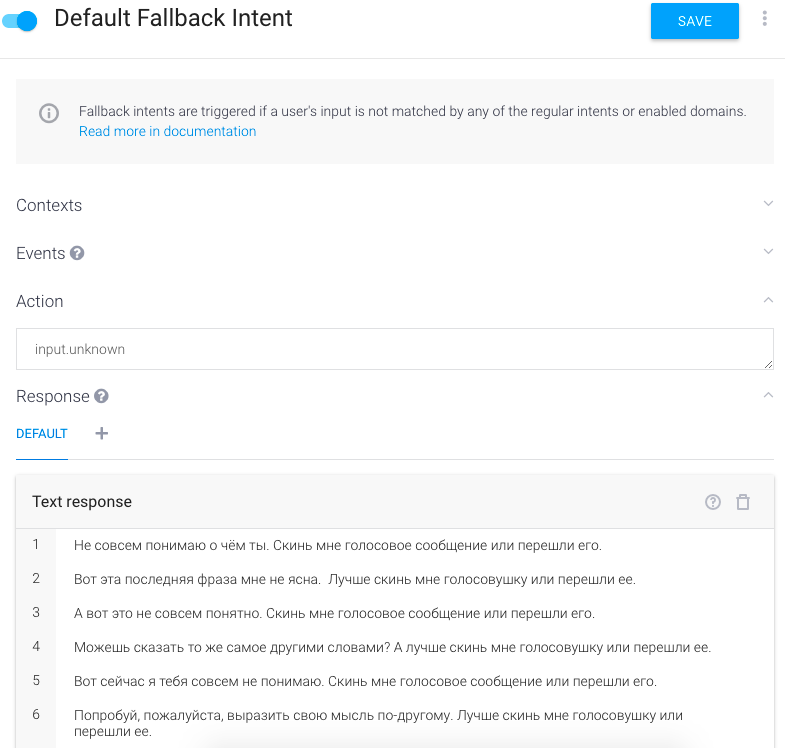

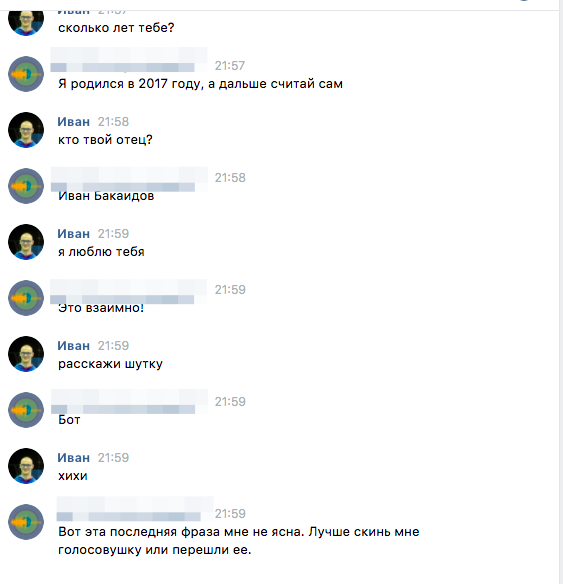

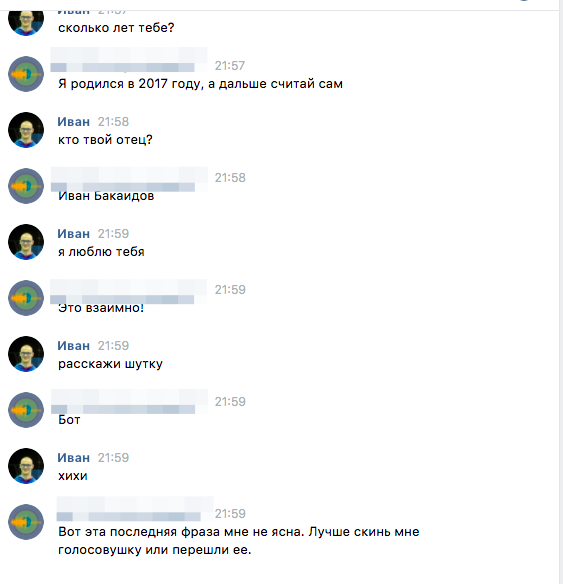

0. Task

Build a VK bot on Node.js that receives voice messages, sent directly or forwarded, passes them to a speech recognition API, and replies to the user with the recognized text. In response to text messages, the bot must keep up a dialogue using a Google service.

1. Stack

To work with the VK API, I took the node-vk-bot-api library, which I found through a link in the official VKontakte documentation, although the same author has a cooler library with webhook support, botact.

To work with Google Dialogflow, I took the apiai package.

Since I originally planned to use Yandex's API and found no ready-made library for it, I wrote the module for the voice API myself, using the request-promise module.

2. Separation of the project

The code comments were localized only for this article; normally I am a // true programmer

2.0 asr.js

In this file we describe the function that takes a buffer containing the audio file and returns the recognized text:

```javascript
const request = require('request-promise')

const uri = 'https://api.wit.ai/speech'
const apikey = '<...>' // wit.ai access token

module.exports = async (body) => {
  // POST the audio buffer to wit.ai
  const response = await request.post({
    uri,
    headers: {
      'Accept': 'audio/x-mpeg-3',
      'Authorization': 'Bearer ' + apikey,
      'Content-Type': 'audio/mpeg3',
      'Transfer-Encoding': 'chunked'
    },
    body
  })
  // return the recognized text
  return JSON.parse(response)._text
}
```

In general, wit.ai is a Facebook platform for natural language processing. It is meant for building AI bots that take a text or voice phrase in natural language as input and try to respond using a neural network. I use only its voice recognition, which is, so to speak, just a side feature of the platform.

The platform has no limits except a rate limit of one request per second, and even that is enforced by the platform itself: an extra request is simply put in a queue.
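The last line of `asr.js` assumes that every reply is valid JSON with a `_text` field. A minimal sketch of a more defensive version of that step is shown below; the helper name `extractText` and the sample payloads are assumptions made for illustration, not part of the original bot or of the wit.ai API.

```javascript
// Hypothetical helper: pull the recognized phrase out of a raw reply body.
// Returns null instead of throwing when the body is not JSON or has no text.
function extractText (raw) {
  let parsed
  try {
    parsed = JSON.parse(raw)
  } catch (e) {
    return null
  }
  if (parsed === null || typeof parsed !== 'object') return null
  const text = parsed._text
  return typeof text === 'string' && text.trim() !== '' ? text : null
}

// Sample payloads are made up for illustration.
console.log(extractText('{"_text":"hello world"}')) // hello world
console.log(extractText('{"_text":""}'))            // null
console.log(extractText('not json'))                // null
```

With a helper like this, the caller only has to check for `null`, which is what the main file already does with the recognized phrase.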

2.1 assistent.js

In this file we describe how we work with the Dialogflow platform, which replies to text messages. Why not wit.ai? There are two reasons. First, this was a project for fun and learning, so the goal was to try as many technologies as possible. Second, SpeechKit was used at first, and only after it was blocked by the daily limit did I make the emergency decision to switch to wit.

The code is trivial:

```javascript
const apiai = require('apiai')

// session id template: any string of 36 characters works as an id
const uniqid = 'e4278f61-9437-4dff-a24b-aaaaaaaaaaaa'
const app = apiai('<...>')

module.exports = (q, uid) => {
  // wrap the event-based client in a promise
  return new Promise((resolve, reject) => {
    uid = uid + '' // don't touch, magic
    // per-user session id: the tail of the template is replaced with the user id
    const cuniqid = uniqid.slice(0, uniqid.length - uid.length) + uid
    // build the text query for this user's session
    const request = app.textRequest(q, { sessionId: cuniqid })
    // success!
    request.on('response', (response) => { resolve(response) })
    // failure!
    request.on('error', (error) => { reject(error) })
    // send the request
    request.end()
  })
}
```
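The "magic" with the session id is easier to see in isolation. The sketch below pulls it into a pure function; the name `sessionIdFor` and the length check are assumptions added for illustration, while the template string is the one from the article.

```javascript
// Template id taken from the article: a 36-character string.
const BASE_ID = 'e4278f61-9437-4dff-a24b-aaaaaaaaaaaa'

// Hypothetical helper: overwrite the tail of the template with the user id,
// so each user gets a stable session id of the same length.
function sessionIdFor (uid) {
  const suffix = String(uid)
  if (suffix.length > BASE_ID.length) {
    throw new RangeError('user id is longer than the session id template')
  }
  return BASE_ID.slice(0, BASE_ID.length - suffix.length) + suffix
}

console.log(sessionIdFor(12345))        // e4278f61-9437-4dff-a24b-aaaaaaa12345
console.log(sessionIdFor(12345).length) // 36
```

Because the id depends only on the user id, the same user always lands in the same dialogue session, and different users never share one.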

In the Dialogflow console, we enable and configure the ready-made Small Talk module from the default agents.

The module will ask you to customize it by adding themed phrases, which takes a lot of time.

You must also enable the Default Fallback Intent, which replies when a request is not understood.

The result is a conversation like this:

2.2 index.js

We put it all together in the main file.

```javascript
const vk = require('api.vk.com')
const request = require('request-promise')
const Bot = require('node-vk-bot-api')
const asr = require('./asr')
const assistent = require('./assistent')
const token = require('./token')

// promise wrapper around the callback-based api client
const api = (method, options) => {
  return new Promise((resolve, reject) => {
    vk(method, options, (err, result) => {
      if (err) return reject(err)
      resolve(result)
    })
  })
}

// create the bot
const bot = new Bot({ token })

// handle every incoming message
bot.on(async (object) => {
  // the outer catch reports any failure to the user
  try {
    // show the "typing" status and mark the message as read
    api('messages.setActivity', { access_token: token, type: 'typing', user_id: object.user_id })
    api('messages.markAsRead', { access_token: token, message_ids: object.message_id })
    // uri of the audio file, if there is one
    let uri = null
    if (object.attachments.length !== 0) {
      // the message has attachments: fetch it in full to see them
      const [msg] = (await api('messages.getById', { access_token: token, message_ids: object.message_id, v: 5.67 })).items
      // take the uri from a voice message (document) or an audio track
      try {
        if (msg.attachments[0].type === 'doc') uri = msg.attachments[0].doc.preview.audio_msg.link_mp3
        else if (msg.attachments[0].type === 'audio') uri = msg.attachments[0].audio.url
      } catch (e) {
        uri = null
      }
    } else if (object.forward != null) {
      // the voice message was forwarded
      try {
        uri = object.forward.attachments[0].doc.preview.audio_msg.link_mp3
      } catch (e) {
        uri = null
      }
    }
    // the audio exists but has no link
    if (uri === '') {
      throw ('The audio file is unavailable.')
    }
    if (uri == null) {
      // no audio: if there is text, reply through dialogflow
      if (object.body !== '') {
        const { speech } = (await assistent(object.body, object.user_id)).result.fulfillment
        object.reply(speech)
        return
      } else {
        throw ('Nothing to recognize. Send a voice message or some text.')
      }
    }
    // download the audio as a buffer
    const audio = await request.get({ uri, encoding: null })
    let phrase
    try {
      phrase = await asr(audio)
    } catch (e) {
      console.error('asr error', e)
      throw ('Speech recognition failed.')
    }
    // reply with the recognized text
    if (phrase != null) {
      object.reply(phrase, (err, mesid) => { })
    } else {
      throw ('Could not recognize the speech.')
    }
  } catch (error) {
    // string errors are shown to the user as is, anything else gets a generic reply
    console.error(error)
    object.reply(typeof error === 'string' ? error : 'Something went wrong. Sorry, please try again!')
  }
})

// start listening via long polling
bot.listen()
```

It is not the cleanest code, and it has plenty of rough edges and silly spots, but I still hope that I have told you about technologies you will find useful and inspired you to build something with them.
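One of those rough edges is the attachment handling, which relies on `try`/`catch` to skip messages without a voice attachment. A possible cleanup, sketched under the assumption that messages have the same shape as in `index.js`, is a small pure function; the name `pickAudioUri` and the sample objects are hypothetical.

```javascript
// Hypothetical helper: return the audio link of the first attachment, or null.
// The field names mirror the ones used in index.js.
function pickAudioUri (message) {
  const attachments = (message && message.attachments) || []
  const first = attachments[0]
  if (!first) return null

  if (first.type === 'doc') {
    // voice messages arrive as documents with an audio_msg preview
    const preview = first.doc && first.doc.preview
    const audioMsg = preview && preview.audio_msg
    return (audioMsg && audioMsg.link_mp3) || null
  }
  if (first.type === 'audio') {
    return (first.audio && first.audio.url) || null
  }
  return null
}

// Made-up message objects for illustration.
const voice = { attachments: [{ type: 'doc', doc: { type: 5, preview: { audio_msg: { link_mp3: 'https://example.com/voice.mp3' } } } }] }
const photo = { attachments: [{ type: 'photo', photo: {} }] }

console.log(pickAudioUri(voice)) // https://example.com/voice.mp3
console.log(pickAudioUri(photo)) // null
console.log(pickAudioUri({}))    // null
```

The same function would work for both direct and forwarded messages, since both expose an `attachments` array in the code above.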

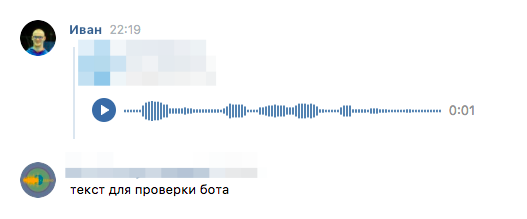

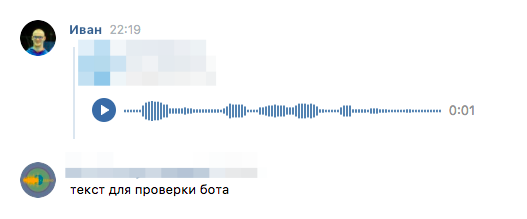

Result:

Since I do not speak and could not test the bot myself, I used another bot that converts text to speech.

Source: https://habr.com/ru/post/346334/