Troubleshooting the Xbox 360 processor architecture

This is a translation of a recent article by Bruce Dawson, a developer who now works at Google on Chrome for Windows.

The recent discovery of the Meltdown and Spectre vulnerabilities reminded me of the time I found a similar vulnerability in the Xbox 360 CPU. It was caused by a newly added instruction whose very existence was dangerous.

In 2005 I was working on the Xbox 360 CPU. I lived and breathed that chip. I still have a 30-cm wafer of these processors and a 1.5-meter poster of the CPU's layout on my wall. I had spent so much time figuring out how its pipelines worked that, when I was asked to investigate some mysterious crashes, I could intuitively guess that a flaw in the processor's design might be causing them.

Before getting to the problem itself, though, a bit of theory.

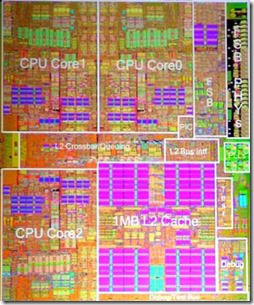

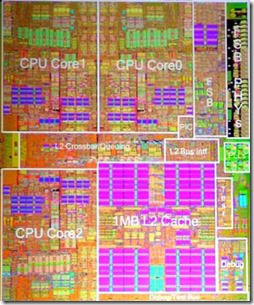

The Xbox 360 CPU is a three-core PowerPC chip made by IBM. Each of the three cores sits in its own quadrant, and the fourth quadrant holds a 1-MB L2 cache, as shown in the images above. Each core has a 32-KB instruction cache and a 32-KB data cache.

Trivia: core 0 was physically closest to the L2 cache and therefore had noticeably lower latency when accessing it.

The Xbox 360 CPU had high latencies for everything, and memory latency was particularly bad. On top of that, the 1-MB L2 cache (all that would fit on the chip) was too small for a three-core CPU. Conserving space in the L2 cache to minimize cache misses was therefore important.

Processor caches improve performance through spatial locality and temporal locality. Spatial locality means that if you have used one byte of data, you are likely to use other nearby bytes soon. Temporal locality means that if you have used some memory, you are likely to use it again in the near future.
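
To make spatial locality concrete, here is a small C sketch that counts how many distinct 128-byte cache lines an access pattern touches. It assumes a cold cache in which every newly touched line costs one fetch. The function name `lines_touched` is hypothetical; only the 128-byte line size comes from this article.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { CACHE_LINE = 128 };   /* Xbox 360 cache line size */

/* Count the distinct cache lines touched by `count` accesses that start at
   address `start` and advance by `stride` bytes each time. Addresses only
   increase, so a new line begins whenever the line index changes. */
static size_t lines_touched(uintptr_t start, size_t count, size_t stride)
{
    assert(stride > 0);
    size_t lines = 0;
    uintptr_t last_line = UINTPTR_MAX;   /* no real line index can equal this */
    for (size_t i = 0; i < count; i++) {
        uintptr_t line = (start + i * stride) / CACHE_LINE;
        if (line != last_line) {
            lines++;
            last_line = line;
        }
    }
    return lines;
}
```

For example, `lines_touched(0, 1024, 1)` returns 8, because 1024 sequential bytes fit in eight lines, while `lines_touched(0, 1024, 128)` returns 1024: every access lands on a new line and gains nothing from spatial locality.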

Sometimes, though, temporal locality simply does not happen. If you process a large data array once per frame, you can trivially prove that it will have been evicted from the L2 cache by the time you need it again. You still want that data in the L1 cache so that you benefit from spatial locality, but if it also stays in the L2 cache it crowds out other data, which can slow down the other two cores.

Normally this is unavoidable. The memory-coherence mechanism of our PowerPC CPU required that all data in the L1 caches also be present in the L2 cache. The MESI protocol used for memory coherence requires that when one core writes to a cache line, every other core holding a copy of that line must discard it, and the L2 cache is responsible for tracking which L1 caches hold which addresses.
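
The write-invalidate rule can be sketched as a toy model of a single cache line shared by three cores, with the inclusive L2 keeping a bitmask of which L1 caches hold a copy. This is a simplified illustration of MESI under those assumptions (no data, bus timing, or write-backs are modelled), not a description of IBM's actual implementation. All names are hypothetical.

```c
#include <assert.h>

enum { NUM_CORES = 3 };

typedef enum { INVALID, SHARED, EXCLUSIVE, MODIFIED } mesi_state;

/* One cache line as seen by each core's L1, plus the inclusive L2's record
   of which L1 caches currently hold a copy (bit i = core i). */
typedef struct {
    mesi_state l1[NUM_CORES];
    unsigned   l2_sharers;
} line_model;

/* Core `core` reads the line. */
static void core_read(line_model *m, int core)
{
    assert(core >= 0 && core < NUM_CORES);
    if (m->l1[core] != INVALID)
        return;                               /* L1 hit: nothing changes */

    int other_holders = 0;
    for (int c = 0; c < NUM_CORES; c++) {
        if (c == core || !(m->l2_sharers & (1u << c)))
            continue;
        /* Any other holder (M, E or S) ends up Shared; a Modified owner
           would also write its data back, which this model omits. */
        m->l1[c] = SHARED;
        other_holders++;
    }
    m->l1[core] = other_holders ? SHARED : EXCLUSIVE;
    m->l2_sharers |= 1u << core;
}

/* Core `core` writes the line: every other copy the L2 knows about is
   invalidated, and the writer becomes the sole (Modified) owner. */
static void core_write(line_model *m, int core)
{
    assert(core >= 0 && core < NUM_CORES);
    for (int c = 0; c < NUM_CORES; c++) {
        if (c != core && (m->l2_sharers & (1u << c)))
            m->l1[c] = INVALID;
    }
    m->l1[core] = MODIFIED;
    m->l2_sharers = 1u << core;
}
```

The key point is that `core_write` can only invalidate copies that appear in `l2_sharers`. Coherence depends entirely on the L2 knowing about every L1 copy.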

However, the CPU was designed for a video game console and performance was the top priority, so a new instruction, xdcbt, was added. The standard PowerPC instruction dcbt was an ordinary prefetch instruction. xdcbt was an extended prefetch instruction that fetched data from memory directly into the L1 data cache, bypassing the L2 cache. This meant that memory coherence was no longer guaranteed. But hey, we're game developers: we know what we're doing, it will be fine!
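
The consequence of bypassing the L2 can be shown with a minimal model of one cache line holding a single int. It assumes, as described above, that an xdcbt fill puts the line into L1 without the L2 recording it. `prefetch_tracked` stands in for dcbt and `prefetch_untracked` for xdcbt. Both names, and the one-int line, are hypothetical simplifications.

```c
#include <assert.h>
#include <stdbool.h>

enum { NUM_CORES = 3 };

/* One cache line holding one int. memory_value stands in for the coherent
   copy (L2/RAM); each core may also hold a private L1 copy. */
typedef struct {
    int      memory_value;
    bool     l1_valid[NUM_CORES];
    int      l1_value[NUM_CORES];
    unsigned l2_sharers;            /* which L1 copies the L2 knows about */
} value_line;

/* dcbt-style fill: the line enters L1 and the L2 records the sharer. */
static void prefetch_tracked(value_line *m, int core)
{
    assert(core >= 0 && core < NUM_CORES);
    m->l1_valid[core] = true;
    m->l1_value[core] = m->memory_value;
    m->l2_sharers |= 1u << core;
}

/* xdcbt-style fill: the line goes straight into L1 and the L2 never
   learns that this core holds a copy. */
static void prefetch_untracked(value_line *m, int core)
{
    assert(core >= 0 && core < NUM_CORES);
    m->l1_valid[core] = true;
    m->l1_value[core] = m->memory_value;
}

/* A store invalidates only the copies the L2 knows about. */
static void store(value_line *m, int core, int value)
{
    assert(core >= 0 && core < NUM_CORES);
    for (int c = 0; c < NUM_CORES; c++)
        if (c != core && (m->l2_sharers & (1u << c)))
            m->l1_valid[c] = false;
    m->memory_value = value;
    m->l1_valid[core] = true;
    m->l1_value[core] = value;
    m->l2_sharers = 1u << core;
}

/* A load hits in L1 if the copy is valid, otherwise refetches coherently. */
static int load(value_line *m, int core)
{
    assert(core >= 0 && core < NUM_CORES);
    if (!m->l1_valid[core])
        prefetch_tracked(m, core);
    return m->l1_value[core];
}
```

With a tracked fill, a store from core 1 invalidates core 0's copy, so the next load sees the new value. With an untracked fill, core 0 keeps returning the old value while `memory_value` (what a crash dump would show) already holds the new one.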

Oops...

I wrote a widely used Xbox 360 memory-copy function that could optionally use xdcbt. Prefetching was crucial for performance, and the function normally used dcbt, but if you passed the PREFETCH_EX flag it prefetched with xdcbt instead. Alas, as practice showed, this was not a well-considered decision.

A game developer who used this function kept reporting strange crashes: the symptoms pointed to heap corruption, yet the heap structures in the memory dumps looked fine. After studying the crash dumps, I finally realized what mistake I had made.

Memory prefetched with xdcbt was “toxic”. If another core wrote to it before it was evicted from the L1 cache, the cores ended up with different views of memory, and there was no guarantee that those views would ever converge. Xbox 360 cache lines were 128 bytes, and my copy function prefetched all the way to the end of the source buffer. As a result, xdcbt was applied to cache lines whose last bytes belonged to adjacent data structures. These were usually heap metadata; at least, that is where we saw the crashes. The incoherent core saw stale data (despite careful use of locks) and crashed, but the crash dump recorded the actual contents of RAM, so we could not see what had really happened.
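
The overrun is simple arithmetic. Here is a sketch that assumes the naive prefetch loop touches every 128-byte line overlapping the source buffer. The function name is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { CACHE_LINE = 128 };   /* Xbox 360 cache line size */

/* If a prefetch loop touches every cache line that overlaps the source buffer
   [src, src + len), the last line usually extends past the buffer. Return how
   many bytes after the buffer's end are pulled in along with it: bytes that
   belong to whatever object happens to come next in memory. */
static size_t bytes_prefetched_past_end(uintptr_t src, size_t len)
{
    assert(len > 0);
    uintptr_t end = src + len;   /* one past the last byte of the buffer */
    uintptr_t line_end = (end + CACHE_LINE - 1) / CACHE_LINE * CACHE_LINE;
    return (size_t)(line_end - end);
}
```

For example, a 200-byte buffer starting at 0x1000 ends at 0x10C8. Its last line runs to 0x1100, so `bytes_prefetched_past_end(0x1000, 200)` is 56 bytes of neighbouring data.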

In short, the only safe way to use xdcbt was to prefetch extremely carefully, so that not a single byte past the end of the buffer was touched. I fixed my memory-copy function so that it no longer prefetched that far, but before my fix arrived the game developer had simply stopped passing the PREFETCH_EX flag, and the problem went away on its own.
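
The narrower fix described here amounts to restricting the L2-bypassing prefetch to lines that lie entirely inside the buffer. Below is a minimal sketch of that range computation, under the assumption that partial lines at either end get an ordinary dcbt or no prefetch at all. The function name is hypothetical. (As the rest of the story shows, even this was not enough.)

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

enum { CACHE_LINE = 128 };   /* Xbox 360 cache line size */

/* Compute the range [*first, *last_end) of cache lines that lie entirely
   inside the buffer [src, src + len). Only these lines are candidates for an
   L2-bypassing prefetch; partial lines at either end would drag neighbouring
   data into L1. Returns the number of such lines (possibly zero). */
static size_t full_lines_in_buffer(uintptr_t src, size_t len,
                                   uintptr_t *first, uintptr_t *last_end)
{
    assert(first != NULL && last_end != NULL);
    uintptr_t end = src + len;
    uintptr_t lo = (src + CACHE_LINE - 1) / CACHE_LINE * CACHE_LINE; /* round start up */
    uintptr_t hi = end / CACHE_LINE * CACHE_LINE;                    /* round end down */
    if (hi <= lo) {                    /* buffer contains no complete line */
        *first = *last_end = lo;
        return 0;
    }
    *first = lo;
    *last_end = hi;
    return (size_t)((hi - lo) / CACHE_LINE);
}
```

A 256-byte buffer at 0x1010 overlaps three lines, but only the line at 0x1080 lies wholly inside it, so the function reports one safe line.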

Real bug

So, problem solved? The game developer had played with fire and flown too close to the sun, and the console launch had nearly missed Christmas. But we had caught the problem in time and fixed it, and we were ready to ship the console and the games and go home without a care.

And then the game started crashing again.

The symptoms were identical, except that the game no longer used the xdcbt instruction. I could step through the code and confirm that this was true. We clearly had a serious problem.

I had to resort to the oldest debugging technique there is: I cleared my mind, let the CPU pipelines fill my subconscious, and suddenly realized what the problem might be. I quickly emailed IBM, and they confirmed my suspicion about a subtle detail of the processor's internals that I had never considered before. The culprit was the same one behind Meltdown and Spectre.

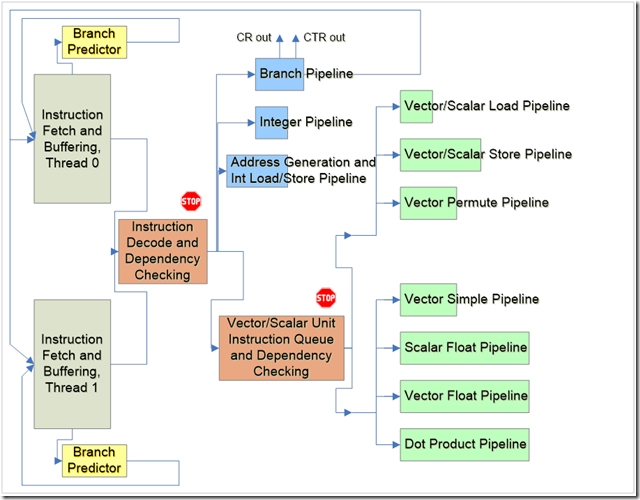

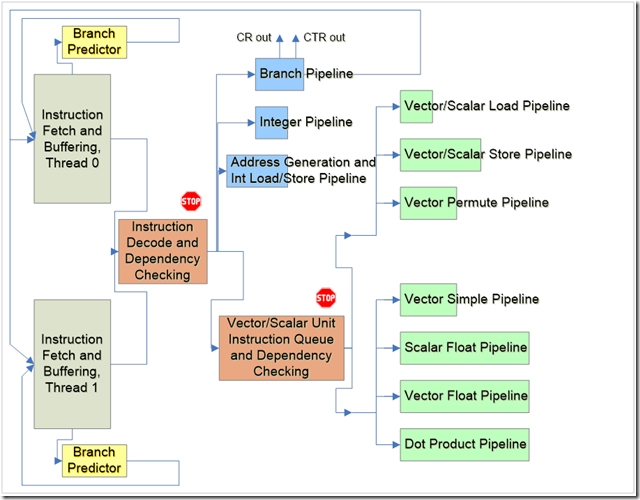

The Xbox 360 CPU executes instructions in order. It is in fact a fairly simple processor that relies on its high clock speed (though not as high as hoped) for performance. It does, however, include a branch predictor, which is a necessity given its very long pipelines. The diagram above shows all of the CPU's pipelines (if you want more details, don't miss this link).

In the diagram you can see the branch predictor, and you can see that the pipelines are very long (wide, in the diagram). They are long enough for mispredicted instructions to get well underway, even though instructions execute in order.

So, the branch predictor makes a prediction, and the predicted instructions are fetched, decoded, and executed, but not retired until it is known whether the prediction was correct. Sound familiar? What I realized, something I had never thought about before, was what happened when a prefetch was executed speculatively. Because latencies were so long, it was important to get the prefetch transaction onto the bus as early as possible, and once a prefetch had started there was no way to cancel it. So a speculatively executed xdcbt was identical to a real xdcbt! (A speculatively executed load instruction was just a prefetch.)

That was the problem. The branch predictor would sometimes cause xdcbt instructions to be executed speculatively, and that was just as bad as executing them for real. One of my colleagues suggested a neat way to test this theory: replace every xdcbt in the game with a breakpoint. This achieved two things:

- The breakpoints were never hit, which proved that the game was not executing the xdcbt instructions;

- The crashes went away.

I expected this result, but it was still very impressive. Even years later, when I read about Meltdown, it was still cool to see instructions that were never executed causing crashes.

My realization about the branch predictor made it clear that this instruction was too dangerous to have in any code segment of any game, because controlling when an instruction might be executed speculatively was too difficult. In theory, the branch predictor could predict any address, so there was no safe place to put an xdcbt instruction. The risk could be reduced but not eliminated, and the effort was not worth it. Although discussions of the Xbox 360 architecture still mention this instruction, I doubt that any game using it ever shipped.

Once, during a job interview, I told this story in response to the classic question “describe the most difficult bug you have had to deal with.” The interviewer's reaction was: “Yes, we ran into something similar on the DEC Alpha processors.”

As the saying goes, everything new is just the well-forgotten old.

Source: https://habr.com/ru/post/346250/