Docker-compose: the perfect working environment

Hello!

Recently I have been thinking more and more about how to make my workflow efficient, and I would like to share what I found.

In this post we will talk about docker-compose, which in my opinion is a cure-all for organizing and optimizing a developer's workflow.

I will explain everything in very simple terms. So if you have never heard of Docker (which would be strange), or have never worked with it and want to understand it, read on.

Foreword

In this article I deliberately simplify some points, skip the details and only touch on many issues superficially.

I do this consciously: I believe there is no need to look under the hood as long as everything works.

If you think otherwise, that is your right, but please don't impose your point of view.

Thanks!

Docker

A technology that makes it easy (in every sense) to set up the working environment you need.

You can learn more from the links at the end of the article, but I suggest not following them right now, so as not to overload your brain.

Docker-compose

A tool that lets you describe the whole set of containers a project needs in one file (config). Loosely speaking, it is like a package manager (similar to composer or npm), except that it deals with containers.

More details can also be found in the links at the end of the article.

Docker hub

A registry of images (similar to packagist or the npm registry).

Important note: read the image description carefully. It answers 70-80% of the obvious questions, so don't waste time googling.

Installation

I won't rewrite the Docker documentation here, so I'll just refer you to the official installation instructions.

Installation is the same as for any ordinary software, so no problems should arise.

If problems do arise, you can stop reading here: you have probably stumbled upon this article, and upon development as a whole, by accident...

If you installed Docker on Windows using Docker Toolbox, you need to work through the special Docker Quickstart Terminal console. After installation, a shortcut for it should appear on the desktop.

Project structure

First, let's define the project structure:

- project 1
- project 2
- project N
  - src
  - container 1
  - container 2
  - container N
  - docker-compose.yml

Every project must have a docker-compose.yml file and a src directory.

In addition, each container gets its own directory (named after the container). That directory holds everything the container needs to run (configs, data, ...).
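This convention is easy to automate. Below is a minimal Python sketch that creates the layout for one project. Python is only an illustration here: the setup itself needs nothing but Docker. `scaffold_project` and the container names in the example are hypothetical.

```python
import tempfile
from pathlib import Path


def scaffold_project(root, containers):
    """Create src/, one directory per container and an empty docker-compose.yml.

    Existing directories and files are left untouched, so running it twice is safe.
    Returns the sorted names of the entries in the project root.
    """
    root = Path(root)
    # parents=True also creates the project root itself if it is missing.
    (root / "src").mkdir(parents=True, exist_ok=True)
    for name in containers:
        # Each container directory is named after the container (service).
        (root / name).mkdir(exist_ok=True)
    (root / "docker-compose.yml").touch(exist_ok=True)
    return sorted(entry.name for entry in root.iterdir())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical project with an nginx and a php container.
        print(scaffold_project(Path(tmp) / "project", ["nginx", "php"]))
```

Because every step uses `exist_ok=True`, the function can be re-run when a new container is added to an existing project.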

CMD / Terminal

To work with docker and compose, we will use only a few commands:

- docker ps: list running containers (more);

- docker-compose up --build: build and start the project. The --build flag forces compose to rebuild the images before starting the containers (more info).

Descriptions of the other commands can be found on the official website.

Let's get straight to the point.

apache

https://hub.docker.com/_/httpd/

Let's start with the most popular server: Apache.

Create a project directory:

- project
  - src
  - docker-compose.yml

The config will look like this:

```yaml
version: '3'
services:
  apache:
    image: httpd:2.4
    ports:
      - 80:80
    volumes:
      - ./src:/usr/local/apache2/htdocs
```

What's going on here:

- `image: httpd:2.4`: specifies which image and which version we need (the available versions and variants are listed on the image's Docker Hub page);

- `ports: 80:80`: forwards a port between Docker and our machine. All requests to port 80 of our machine are passed to port 80 of the container;

- `volumes: ./src:/usr/local/apache2/htdocs`: mounts a directory of our machine as apache's working directory. All files in src are available to apache as if they were in htdocs. This also works the other way round: everything created in that directory inside the container shows up on the local machine, because it is the same directory, not a copy.
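The two mapping rules can be illustrated with a tiny Python sketch. It only handles the simple two-part `HOST:CONTAINER` form used in this article: no IP addresses in port mappings, and no Windows drive letters in paths. An optional `:ro`-style suffix on a volume is ignored. The helper names are made up for illustration; this shows the mapping rule, not how Docker implements it.

```python
from pathlib import PurePosixPath


def parse_port_mapping(spec):
    """Split a short-syntax port mapping "HOST:CONTAINER" into (host, container)."""
    host, container = spec.split(":")
    return int(host), int(container)


def container_path(host_path, volume_spec):
    """Return where a file under the host side of a bind mount appears in the container.

    volume_spec uses the "HOST_DIR:CONTAINER_DIR" form from docker-compose.yml;
    an optional third part such as ":ro" is ignored. Returns None if host_path
    is not inside HOST_DIR.
    """
    host_dir, container_dir = volume_spec.split(":")[:2]
    try:
        # PurePosixPath drops a leading "./", so "./src" and "src" compare equal.
        rest = PurePosixPath(host_path).relative_to(PurePosixPath(host_dir))
    except ValueError:
        return None  # the file is outside the mounted directory
    return str(PurePosixPath(container_dir) / rest)


if __name__ == "__main__":
    print(parse_port_mapping("80:80"))
    print(container_path("src/index.html", "./src:/usr/local/apache2/htdocs"))
```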

Create the file src/index.html in the project directory with the following contents:

```html
Hi, I'm Apache
```

Start the project:

```bash
docker-compose up --build
```

Open the machine's address (e.g. http://localhost) in a browser and you will see the server's greeting.

To stop the project's containers, just press Ctrl+C in the console. This stops them but does not delete them; docker-compose down stops and removes them.

If Docker runs inside VirtualBox, use the virtual machine's IP address instead of localhost. If you work through Docker Quickstart Terminal, that address is displayed when the console starts.

Work in the background

If you want to start the project and keep working in the console, run it in the background (detached mode):

```bash
docker-compose up --build -d
```

After launch, the console is free for further work.

In this case, to get rid of the container you need to stop and remove it. For that you first need to know its ID:

```bash
docker ps
```

In my case, the output was:

```
CONTAINER ID        IMAGE        ...
988e27da7bdf        httpd:2.4    ...
```

Now stop and remove the container:

```bash
docker stop 988e27da7bdf
docker rm 988e27da7bdf
```

Or take the blunt route and force-remove it in one step:

```bash
docker rm -f 988e27da7bdf
```

nginx

https://hub.docker.com/_/nginx/

The nginx config follows the same pattern as the apache one: image, ports, working directory. The file looks like this:

```yaml
version: '3'
services:
  nginx:
    image: nginx:1.13
    ports:
      - 80:80
    volumes:
      - ./src:/usr/share/nginx/html
```

Create the file src/index.html in the project directory with the following contents:

```html
Hi, I'm Nginx
```

Open the browser and you will see the greeting from the second server.

php + apache

For the PHP + Apache combination there is already a ready-made image, so we will get to linking containers later. For now, here is just the config:

```yaml
version: '3'
services:
  web:
    image: php:7.2-apache
    ports:
      - 80:80
    volumes:
      - ./src:/var/www/html
```

Create the file src/index.php in the project directory with the following contents:

```php
<?php
phpinfo();
```

Check in the browser that it works.

php + nginx

https://hub.docker.com/_/nginx/

https://hub.docker.com/_/php/

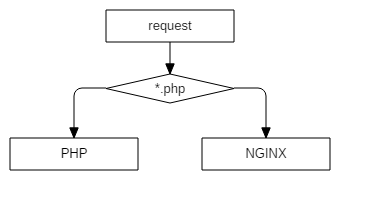

In this combination, php will be in fpm format. Schematically, it looks like this:

Accordingly, we will need to rewrite the server config.

To do this, in addition to the working directory, we also need to mount the server's configuration file:

```yaml
version: '3'
services:
  nginx:
    image: nginx:1.13
    ports:
      - 80:80
    volumes:
      - ./src:/usr/share/nginx/html
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf
    depends_on:
      - php
  php:
    image: php:7.2-fpm
    volumes:
      - ./src:/usr/share/nginx/html
```

What changed:

- `volumes: ./nginx/nginx.conf:/etc/nginx/nginx.conf`: mounts the nginx config file;

- `depends_on: php`: declares that nginx depends on php, so compose starts the php container before nginx. Note that it only waits for the container to be started, not for PHP-FPM inside it to be ready to accept connections.
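Conceptually, the start order that compose derives from depends_on is a topological sort of the dependency graph. Here is a minimal Python sketch of that idea. It assumes Python 3.9+ for the standard `graphlib` module, and `start_order` is a hypothetical helper, not part of docker-compose.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def start_order(depends_on):
    """Return one valid start order for a {service: [dependencies]} mapping.

    Every service appears after the services it depends on; a circular
    dependency raises graphlib.CycleError.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    # depends_on from the php + nginx config above.
    print(start_order({"nginx": ["php"], "php": []}))
```

Like depends_on itself, this only says what starts first, not when a service is ready to accept connections.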

If we omit depends_on, we may get an error like this:

```
nginx_1  | 2018/01/05 08:56:42 [emerg] 1#1: host not found in upstream "php" in /etc/nginx/nginx.conf:23
nginx_1  | nginx: [emerg] host not found in upstream "php" in /etc/nginx/nginx.conf:23
```

Create the file nginx/nginx.conf in the project directory. The config itself looks like this:

```nginx
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    server {
        # Must match the path where ./src is mounted in both containers
        root /usr/share/nginx/html;
        listen 80;
        server_name localhost;

        location / {
            # index.php is listed so that a request for / is passed to PHP
            index index.php index.html index.htm;
        }

        location ~ \.php$ {
            # "php" is the service name from docker-compose.yml
            fastcgi_pass php:9000;
            fastcgi_index index.php;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param PATH_INFO $fastcgi_path_info;
        }
    }
}
```

The config is standard. The important part is that the root directive matches the path where src is mounted in docker-compose.yml, in the nginx container and in the php container alike.
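Why must the paths match? nginx does not send the PHP file to php-fpm, only its path. SCRIPT_FILENAME is the plain concatenation $document_root$fastcgi_script_name, and php-fpm then opens that path inside its own container. The Python sketch below models that check; `script_filename` and `php_can_find` are hypothetical helpers.

```python
from pathlib import PurePosixPath


def script_filename(document_root, fastcgi_script_name):
    """Mimic nginx's $document_root$fastcgi_script_name: a plain string concatenation."""
    return document_root + fastcgi_script_name


def php_can_find(document_root, script_name, php_mount_points):
    """Check whether the path nginx sends as SCRIPT_FILENAME lies inside one of the
    directories mounted into the php container (container-side paths)."""
    target = PurePosixPath(script_filename(document_root, script_name))
    return any(PurePosixPath(mount) in target.parents for mount in php_mount_points)


if __name__ == "__main__":
    root = "/usr/share/nginx/html"  # the root directive in nginx.conf
    print(script_filename(root, "/index.php"))
    # Same mount point as in docker-compose.yml above, then a mismatched one.
    print(php_can_find(root, "/index.php", ["/usr/share/nginx/html"]))
    print(php_can_find(root, "/index.php", ["/var/www/html"]))
```

Suppose the php service mounted src somewhere else, e.g. /var/www/html. php-fpm would then look for a file that does not exist in its container, and the request would typically fail with a "File not found." response.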

In this config, note the fastcgi_pass directive, namely its value php:9000.

When a server is set up locally, you would usually write localhost:9000 (127.0.0.1:9000) here. BUT since php runs in a separate container, we have to address that container instead. php is the service name from docker-compose.yml, and Docker "substitutes" the container's IP address for it. There is no real magic involved: compose puts the project's containers on a common network with a built-in DNS server that resolves service names. The older links mechanism did the same by adding entries to the container's hosts file.

To make this possible, a directive for it could be added to docker-compose, although it is not actually necessary (more).

After all these steps, the project directory looks like this:

- project
  - src
    - index.php
  - nginx
    - nginx.conf
  - docker-compose.yml

We start, check, rejoice!
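The "check" step can also be done from a script instead of the browser. Below is a Python sketch using only the standard library. It assumes the stack is reachable at http://localhost; use the VM's IP address if Docker runs in VirtualBox. It relies on the fact that a phpinfo() page contains the text "PHP Version". The function name is hypothetical.

```python
from urllib.request import urlopen


def phpinfo_is_served(base_url="http://localhost", opener=urlopen, timeout=5):
    """Return True if base_url/index.php answers with a phpinfo() page.

    `opener` defaults to urllib's urlopen; it is a parameter only so the
    function can be exercised without a running stack.
    """
    with opener(base_url.rstrip("/") + "/index.php", timeout=timeout) as response:
        body = response.read().decode("utf-8", errors="replace")
    # The phpinfo() page contains a "PHP Version" heading.
    return "PHP Version" in body
```

With the project running, call `phpinfo_is_served()`. An HTTP error status (for example a 404 if index.php is missing) raises `urllib.error.HTTPError` rather than returning False.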

php + apache + nginx

https://hub.docker.com/_/nginx/

https://hub.docker.com/_/php/

https://hub.docker.com/_/httpd/

Probably the most popular stack for web projects. Schematically, it looks like this:

A couple of comments:

- php runs as php-fpm because it is faster and more modern;

- apache is used because .htaccess files are still popular.

To configure everything, we also need to mount the apache config, so docker-compose.yml looks like this:

```yaml
version: '3'
services:
  apache:
    image: httpd:2.4
    volumes:
      - ./src:/var/www/html
      - ./httpd/httpd.conf:/usr/local/apache2/conf/httpd.conf
    depends_on:
      - php
  nginx:
    image: nginx:1.13
    ports:
      - 80:80
    volumes:
      - ./src:/var/www/html
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf
    depends_on:
      - apache
  php:
    image: php:7.2-fpm
    volumes:
      - ./src:/var/www/html
```

I couldn't find a decent config on the Internet (to be fair, I didn't search very hard). Since I had Docker at hand, I decided to pull one out of the standard container.

It took just 3 commands:

```bash
docker run -d httpd:2.4
docker ps
docker cp [ID]:/usr/local/apache2/conf/httpd.conf ./httpd.conf
```

After running these commands, an httpd.conf file appears in the current directory; we will use it as a basis.

In essence, this is a simple copy from a running container.
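The same steps can be scripted. Below is a Python sketch that assumes the docker CLI is on PATH. It differs from the manual version in two ways. First, it takes the ID from the output of `docker run -d`, which prints the new container's ID, instead of running `docker ps`. Second, it removes the temporary container at the end. `extract_default_config` is a hypothetical name. The `run` parameter can be swapped out so the command sequence can be tested without Docker.

```python
import subprocess

IMAGE = "httpd:2.4"
CONF_PATH = "/usr/local/apache2/conf/httpd.conf"  # path inside the httpd image


def extract_default_config(dest="./httpd.conf", run=subprocess.run):
    """Copy the stock httpd.conf out of a temporary httpd container.

    `run` defaults to subprocess.run; it can be replaced by a fake in tests.
    Returns the ID of the temporary container.
    """
    started = run(["docker", "run", "-d", IMAGE],
                  capture_output=True, text=True, check=True)
    # `docker run -d` prints the new container's ID, so `docker ps` is not needed.
    container_id = started.stdout.strip()
    try:
        run(["docker", "cp", f"{container_id}:{CONF_PATH}", dest], check=True)
    finally:
        # Remove the temporary container even if the copy fails.
        run(["docker", "rm", "-f", container_id], check=True)
    return container_id
```

Run `extract_default_config()` from the directory where httpd.conf should appear.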

Create the file httpd/httpd.conf in the project directory. After editing, it looks like this:

```apache
ServerRoot "/usr/local/apache2"
Listen 80

LoadModule authn_file_module modules/mod_authn_file.so
LoadModule authn_core_module modules/mod_authn_core.so
LoadModule authz_host_module modules/mod_authz_host.so
LoadModule authz_groupfile_module modules/mod_authz_groupfile.so
LoadModule authz_user_module modules/mod_authz_user.so
LoadModule authz_core_module modules/mod_authz_core.so
LoadModule access_compat_module modules/mod_access_compat.so
LoadModule auth_basic_module modules/mod_auth_basic.so
LoadModule reqtimeout_module modules/mod_reqtimeout.so
LoadModule filter_module modules/mod_filter.so
LoadModule mime_module modules/mod_mime.so
LoadModule log_config_module modules/mod_log_config.so
LoadModule env_module modules/mod_env.so
LoadModule headers_module modules/mod_headers.so
LoadModule setenvif_module modules/mod_setenvif.so
LoadModule version_module modules/mod_version.so
LoadModule unixd_module modules/mod_unixd.so
LoadModule status_module modules/mod_status.so
LoadModule autoindex_module modules/mod_autoindex.so
LoadModule dir_module modules/mod_dir.so
LoadModule alias_module modules/mod_alias.so
# additional
LoadModule proxy_module modules/mod_proxy.so
LoadModule proxy_fcgi_module modules/mod_proxy_fcgi.so

ServerAdmin you@example.com

<Directory />
    AllowOverride none
    Require all denied
</Directory>

DocumentRoot "/var/www/html"
<Directory "/var/www/html">
    Options Indexes FollowSymLinks Includes ExecCGI
    AllowOverride All
    Require all granted
</Directory>

<IfModule unixd_module>
    User daemon
    Group daemon
</IfModule>

<IfModule dir_module>
    DirectoryIndex index.php index.pl index.cgi index.asp index.shtml index.html index.htm \
                   default.php default.pl default.cgi default.asp default.shtml default.html default.htm \
                   home.php home.pl home.cgi home.asp home.shtml home.html home.htm
</IfModule>

<Files ".ht*">
    Require all denied
</Files>

<IfModule log_config_module>
    LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined
    LogFormat "%h %l %u %t \"%r\" %>s %b" common
    <IfModule logio_module>
        LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %I %O" combinedio
    </IfModule>
</IfModule>

<IfModule alias_module>
    ScriptAlias /cgi-bin/ "/usr/local/apache2/cgi-bin/"
</IfModule>

<Directory "/usr/local/apache2/cgi-bin">
    AllowOverride All
    Options None
    Require all granted
</Directory>

<IfModule headers_module>
    RequestHeader unset Proxy early
</IfModule>

<IfModule mime_module>
    TypesConfig conf/mime.types
    AddType application/x-compress .Z
    AddType application/x-gzip .gz .tgz
    AddType text/html .shtml
    AddHandler cgi-script .cgi .pl .asp
    AddOutputFilter INCLUDES .shtml
</IfModule>

#
# FPM
#
<IfModule proxy_module>
    <FilesMatch "\.php$">
        SetHandler "proxy:fcgi://php:9000"
    </FilesMatch>
</IfModule>
```

Create the file nginx/nginx.conf in the project directory with the following contents:

```nginx
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    server {
        listen 80;
        server_name localhost;

        # Static files are served by nginx directly
        location ~ \.(jpg|jpeg|gif|png|ico|css|zip|tgz|gz|rar|bz2|doc|xls|exe|pdf|ppt|txt|tar|mid|midi|wav|bmp|rtf|js)$ {
            root /var/www/html;
        }

        location ~ /\.ht {
            deny all;
        }

        # Everything else is passed on to apache
        location / {
            proxy_pass http://apache;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $remote_addr;
            proxy_connect_timeout 120;
            proxy_send_timeout 120;
            proxy_read_timeout 180;
        }
    }
}
```

In proxy_pass http://apache we again specify the container's name rather than an IP address (recall how Docker resolves container names).

To test the setup, we need to check that both PHP and Apache work.

Let's create the following project structure:

- nginx
  - nginx.conf
- httpd
  - httpd.conf
- src
  - protected
    - .htaccess
    - index.html
  - index.php
- docker-compose.yml

Contents of .htaccess:

```apache
Deny from all
```

Contents of index.php:

```php
<?php
phpinfo();
```

Contents of index.html:

```html
Apache not working :-(
```

If everything is set up correctly, you should see the following:

- /index.php: the PHP information page opens;

- /protected/index.html: an Apache 403 error page opens;

- /protected/.htaccess: an nginx 403 error page opens (the two error pages look different).
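Why these three results? Below is a simplified Python model of how a request is routed through the nginx config above. nginx checks its regex locations in the order they appear and uses the first match. Everything else falls through to `location /`, which proxies to apache. The `htaccess_denied` parameter is a hypothetical stand-in for the `Deny from all` in src/protected/.htaccess. Real nginx and Apache request processing has more rules than this model.

```python
import re

# The two regex locations from nginx/nginx.conf, in the order they appear there.
STATIC_FILES = re.compile(
    r"\.(jpg|jpeg|gif|png|ico|css|zip|tgz|gz|rar|bz2|doc|xls|exe|pdf|ppt"
    r"|txt|tar|mid|midi|wav|bmp|rtf|js)$"
)
HT_FILES = re.compile(r"/\.ht")


def route(uri, htaccess_denied=("/protected/",)):
    """Simplified model of which server answers a request in this stack.

    htaccess_denied lists directories whose .htaccess says "Deny from all"
    (a hypothetical stand-in for src/protected/.htaccess).
    """
    # nginx: regex locations are tried in order; the first match wins.
    if STATIC_FILES.search(uri):
        return "nginx: static file"
    if HT_FILES.search(uri):
        return "nginx: 403"
    # No regex matched, so `location /` proxies the request to apache.
    if any(uri.startswith(d) for d in htaccess_denied):
        return "apache: 403"  # access checks run before the PHP handler
    if uri.endswith(".php"):
        return "apache -> php-fpm"  # SetHandler "proxy:fcgi://php:9000"
    return "apache: file"


if __name__ == "__main__":
    for uri in ["/index.php", "/protected/index.html",
                "/protected/.htaccess", "/protected/notes.txt"]:
        print(uri, "->", route(uri))
```

Note what the model says about /protected/notes.txt. Files with the listed static extensions are served by nginx directly, so Apache's .htaccess rules never apply to them.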

mariadb + phpmyadmin

https://hub.docker.com/_/mariadb/

https://hub.docker.com/r/phpmyadmin/phpmyadmin/

Let's talk about the database.

The config for it is as follows:

```yaml
version: '3'
services:
  mariadb:
    image: mariadb:10.3
    restart: always
    volumes:
      - ./mariadb:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: qwerty
  phpmyadmin:
    image: phpmyadmin/phpmyadmin
    links:
      - mariadb:db
    ports:
      - 8765:80
    environment:
      MYSQL_ROOT_PASSWORD: qwerty
    depends_on:
      - mariadb
```

For mariadb and phpmyadmin, we specified an environment directive that holds container-specific variables (see the description of each image on Docker Hub for details).

In this case, this is the password for the root user.

For phpmyadmin, we manually set the links directive:

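```yaml
links:
  - mariadb:db
```

This makes the mariadb container reachable from phpmyadmin under the alias db. db is the host phpmyadmin connects to by default, so this is how it knows which database server to use.

If the mariadb service were named db, this directive would not be needed.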

For mariadb, we link the data directory:

```yaml
volumes:
  - ./mariadb:/var/lib/mysql
```

This keeps the data in our project directory rather than inside the container.

If you are not working on Linux, you may run into problems when storing the database files in a directory on the local machine.

Unfortunately, I have not found a way around this yet.

If you have a solution, please share it.

However, by default the database data is preserved even after the container is destroyed: you can recreate the container as many times as you like, and the data stays stored somewhere in the depths of the local machine.

php + apache + nginx + mariadb + phpmyadmin

https://hub.docker.com/_/nginx/

https://hub.docker.com/_/php/

https://hub.docker.com/_/httpd/

https://hub.docker.com/_/mariadb/

https://hub.docker.com/r/phpmyadmin/phpmyadmin/

Now let's combine our configs and get a decent web environment:

```yaml
version: '3'
services:
  apache:
    image: httpd:2.4
    volumes:
      - ./src:/var/www/html
      - ./httpd/httpd.conf:/usr/local/apache2/conf/httpd.conf
    depends_on:
      - php
  nginx:
    image: nginx:1.13
    ports:
      - 80:80
    volumes:
      - ./src:/var/www/html
      - ./nginx/nginx.conf:/etc/nginx/nginx.conf
    depends_on:
      - apache
  php:
    build: ./php
    volumes:
      - ./src:/var/www/html
      - ./php/php.ini:/usr/local/etc/php/php.ini
    depends_on:
      - mariadb
  mariadb:
    image: mariadb:10.3
    volumes:
      - ./mariadb:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: qwerty
  phpmyadmin:
    image: phpmyadmin/phpmyadmin
    links:
      - mariadb:db
    ports:
      - 8765:80
    environment:
      MYSQL_ROOT_PASSWORD: qwerty
    depends_on:
      - mariadb
```

For php, we added a build directive (more info) pointing to the php directory, which contains a Dockerfile with the following contents:

```dockerfile
# The fpm variant is required: apache passes .php files to php:9000 over FastCGI
FROM php:7.2-fpm
RUN apt-get update
RUN docker-php-ext-install pdo pdo_mysql mysqli
```

In this file we update the package lists and install PHP extensions (more about docker-php-ext-install).

We also mounted the php config so that we can configure PHP the way we want.

You can take the contents of php.ini, for example, from here.

We start, check, rejoice!

If everything is done correctly, index.php will work without errors, and the database files will appear in the project/mariadb directory.

Docker in production

Unfortunately, I can't say anything on this subject, but the official documentation can.

If you have used Docker in production projects, please share your experience in the comments. Is it worth it? What difficulties and pitfalls have you run into? Any other useful advice for the young and inexperienced is welcome too.

Conclusion

That's all that I wanted to share.

As you can see, it is not necessary to know how docker works in order to work successfully with it.

Of course, for fine-tuning or complex tasks you do need to dig into the depths of Docker, but in the typical case this is not necessary.

If you have something to add, noticed a mistake, or disagree with something, please leave a comment and we'll discuss it ;-)

Useful links (a.k.a. references)

Official website documentation

Overview of Docker Compose (official site)

The Complete Docker Practical Guide: From Scratch to AWS Cluster

Understanding Docker

Full automation of the “development” environment using docker-compose

PS

To be honest, I do not understand where so much negativity comes from.

Judging by the comments, the main complaints are about wording and terminology, even though I stated in the foreword that I intentionally simplify many points.

The purpose of this article is to show how easy it is to work with docker-compose. Instead of setting up countless environments and tools for each of your projects, you can use docker-compose and be happy.

The article is not about production (one paragraph is enough for that), nor about deployment, nor about migrating between dev and prod environments.

No, the article is not about that.

PPS

Many thanks to krocos, Caravus, Vershnik and Fesor for their helpful comments.


Source: https://habr.com/ru/post/346086/
