Why programmers need restrictions

We grew up in a culture whose mottos are “No Borders” and “Push the Limits”, but in fact we need boundaries. They make us better, provided they are the right boundaries.

Censorship makes for better music

When external constraints limit what can be said in a song, book, or film, authors have to use metaphors to convey what they mean.

Take, for example, Cole Porter's classic 1928 song Let's Do It (Let's Fall in Love). We all understand what “It” means, and it is definitely not “let's fall in love”. I suspect the author had to add the part in parentheses to get past the censors.

Fast forward to 2011 and look at Three 6 Mafia's Slob on My Knob. Except for the metaphorical first verse, everything else is disgustingly explicit.


If we set aside the artistic execution (or lack thereof) for a moment, we can say that Cole Porter's song hints, while Three 6 Mafia's buries us in unbearable detail that leaves nothing to the imagination.

The problem is that if you do not share the views on sex described in Three 6 Mafia's lyrics, you will find the song vulgar at best and wholly unappealing. Cole Porter's song, on the other hand, lets listeners call on their own imagination.

In other words, restrictions can make a subject more appealing.

The shark kept breaking

Steven Spielberg originally planned to tell the story of "Jaws" through scenes featuring the shark. But it kept breaking down. Most of the time the crew could not show the shark, the star of the film.

The film, which became a blockbuster, would not exist in its current form if mechanical difficulties had not restricted what Spielberg could do.

Why is this film so much better than one that shows the shark? Because each viewer fills in the gaps with their own imagination. They recall their own phobias and project them onto the screen, so the fear is personal to each viewer.

Animators have known this principle for a long time. Play the sound of a fall off screen, and then show its aftermath. This has two advantages. First, you do not need to animate the fall, and second, the fall happens in the viewer's mind.

Almost everyone believes they saw Bambi's mother get shot. But not only do we never see her being shot, we never even see her AFTER the shot. People will swear that they saw both scenes, but they were NEVER shown.

So constraints make things better. Much better.

Choice everywhere

Imagine that you are an artist and I ask you to paint me a picture. My only request is: “Paint me something beautiful. Something I will like.”

You go to your studio and sit there, looking at a blank canvas. You stare at it endlessly and just cannot start painting. Why?

Because there are too many options. You can paint literally anything. I have not given you any restrictions. This phenomenon is called the paradox of choice.

However, if I asked you to paint a landscape that I would like, I would eliminate at least half of the endless options. Even though an infinite number of options remain, any thought of a portrait is quickly dismissed.

If I went further and said that I like seascapes with waves breaking on the shore during a golden sunset, there would still be an infinite number of possible pictures, but these restrictions would actually help you decide what to paint.

Before you knew it, you might have started painting a seascape.

So, restrictions make creativity easier.

Hardware is simpler than software

In hardware, a transistor or capacitor is never shared by several components of a computer. The resistors in the keyboard circuit cannot be used by the graphics card.

The graphics card has its own resistors, which it alone controls. Hardware engineers do not do this because they want to sell more resistors. They do it because they have no choice.

The laws of the universe dictate that such sharing cannot be done without causing chaos. The universe sets the rules for hardware developers, that is, it limits what is possible.

These restrictions make working with hardware easier than working with software.

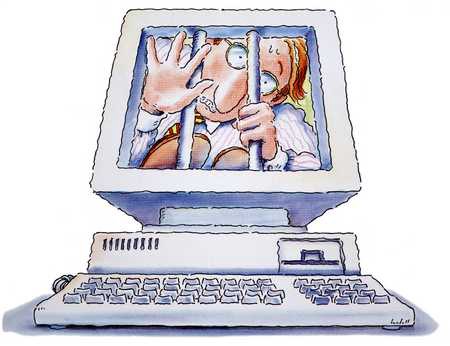

Nothing is impossible in software

Now let us turn to software, where almost anything is possible. Nothing prevents a software developer from using a variable in any part of the program. Such variables are called global.

In assembly language, we can simply jump to any point in the code and start executing it, at any time. You can even write into the data in a way that causes the program to execute unintended code. This technique is used by hackers who exploit buffer overflow vulnerabilities.

Typically, the operating system limits what a program can do outside its own boundaries. But no restrictions are placed on what it can do with the code and data it owns.

It is this absence of restrictions that makes writing and maintaining software so difficult.

How to set restrictions in software development

We know that software development needs limits, and we know from experience that limits in other creative professions can work to our benefit.

We also know that we cannot let society randomly censor our code or rely on mechanical failures to limit our paradigms. Nor can we expect our users to be sophisticated enough to impose appropriate restrictions on software design.

We must limit ourselves. But we must make sure these restrictions work in our favor. So which boundaries should we choose, and how should we make such decisions at all?

To answer this question, we can rely on our experience and years of practice. But the most useful tool is our past mistakes.

The pain of our past actions, such as touching a hot stove, tells us which limits to place on ourselves to avoid such torment in the future.

Let my people go

A long time ago, people wrote programs whose code jumped from one place to another. This was called spaghetti code, because following such code was like following a single strand of spaghetti in a bowl.

The industry realized that this practice was counterproductive and at first banned the use of the GOTO statement in languages that allowed it.

Over time, new programming languages dropped GOTO support entirely. They came to be called structured programming languages. Today, most popular high-level languages either have no GOTO or strongly discourage its use.

When this happened, some complained that the new languages were too strict and that code was easier to write with GOTO.

But the more progressive minds won, and we should be grateful to them for rejecting such a destructive tool.

They realized that code is read far more often than it is written or changed. The restriction may be less convenient for the traditionalists, but in the long run life with it is much better.

Computers can still execute GOTO. In fact, they need it. We simply decided, as an industry, to restrict programmers from using it directly. All programming languages ultimately compile down to code that uses GOTO. But language designers created constructs with more disciplined branching, for example the break statement, which exits a for loop.
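To make the point about break concrete, here is a minimal sketch in Python (my choice of language for illustration; `first_negative` is a made-up helper, not something from a library). The jump still exists, but it can only land in one place: right after the loop.

```python
def first_negative(values):
    """Return the first negative number in values, or None if there is none."""
    found = None
    for value in values:
        if value < 0:
            found = value
            break  # a restricted jump: control can only continue after the loop
    return found


result = first_negative([4, 7, -2, 9, -5])
nothing = first_negative([1, 2, 3])
```

Under the hood the interpreter still performs a jump here. The language just does not let us aim it anywhere we like.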

The software industry has greatly benefited from the limitations set by language developers.

Putting on the shackles

So what is today's GOTO, and what do language designers have in store for us unsuspecting programmers?

To answer this question, we need to consider the problems that we face daily.

- Complexity

- Reuse

- Global mutable state

- Dynamic typing

- Testing

- The end of Moore's Law

How can we restrict programmers in ways that help solve these problems?

Complexity

Complexity grows over time. What starts as a simple system evolves into a complex one. What starts as a complex system evolves into chaos.

So how do we limit programmers to help them reduce complexity?

First, we can force programmers to write code that is fully broken down into small pieces. Although that is difficult, if not impossible, to enforce, we can create languages that encourage and reward such behavior.

Many functional programming languages, especially the purest ones, do both.

Writing a function as a single computation forces you to break the code into very small pieces. It also makes you think through your mental model of the problem.

We can also restrict what programmers can do inside functions, for example by requiring all functions to be pure. Pure functions are functions with no side effects; for example, they cannot access data outside themselves.

Pure functions work only with the data passed to them, compute their results, and return them. Every time you call a pure function with the same input, it will ALWAYS produce the same output.

This makes pure functions much easier to reason about, because everything they do happens entirely within the function itself. They are also easier to unit test, because they are self-contained units. If a pure function is expensive to compute, its results can be cached. The same input always yields the same output, which is the perfect scenario for a cache.

By limiting programmers to pure functions, we significantly limit complexity, because functions can have only local effects. It also helps developers break their programs apart naturally.
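The caching argument can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `fib` function is an arbitrary example, and Python does not enforce purity, so it is up to us to keep the function free of side effects. The standard library's `functools.lru_cache` does the caching.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n: int) -> int:
    """Pure: the result depends only on n, so caching it is always safe."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


first = fib(30)
second = fib(30)          # answered from the cache, nothing is recomputed
info = fib.cache_info()   # hit and miss counters kept by lru_cache
```

Because the output is fully determined by the input, the cache can never return a stale answer. With a function that reads or writes outside state, the same trick would silently produce wrong results.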

Reuse

The software industry has been struggling with this problem almost from the very beginning of programming. First we had libraries, then structured programming, and then object-oriented inheritance.

All of these approaches have had limited appeal and success. But there is one method that always works and has been used by almost every programmer: copy and paste.

If you copy and paste your code, you are doing something wrong.

We cannot forbid programmers from copying and pasting, because they still write programs as text, but we can give them something better.

Functional programming has standard practices that are much better than copy and paste, namely higher-order functions, currying, and composition.

Higher-order functions let programmers pass functions as parameters, not just data. In languages that do not support this, the only option is to copy and paste the function and then edit its logic. With higher-order functions, the logic itself can be passed in as a function parameter.
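As a rough illustration in Python (the names `keep_if`, `is_even`, and `is_large` are invented for this sketch), here is one filtering function that would otherwise have to be copied and edited for each new condition:

```python
from typing import Callable, Iterable, List


def keep_if(predicate: Callable[[int], bool], items: Iterable[int]) -> List[int]:
    """Higher-order function: the filtering logic arrives as a parameter."""
    return [item for item in items if predicate(item)]


def is_even(n: int) -> bool:
    return n % 2 == 0


def is_large(n: int) -> bool:
    return n > 10


numbers = [3, 8, 12, 15, 20]
evens = keep_if(is_even, numbers)   # [8, 12, 20]
large = keep_if(is_large, numbers)  # [12, 15, 20]
```

The loop is written once. Only the part that varies, the predicate, is supplied by the caller.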

Currying lets you apply a function to one parameter at a time. This allows programmers to write generalized versions of functions and bake in some of the parameters to create more specialized versions.
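Python does not curry functions automatically the way Haskell or Elm do, so the following is only an approximation: a hand-written curried function (`scale` is a made-up example), followed by `functools.partial`, which is partial application rather than true currying but serves the same "bake in a parameter" purpose.

```python
from functools import partial


def scale(factor):
    """Curried by hand: takes the factor, returns a function awaiting the value."""
    def apply(value):
        return factor * value
    return apply


double = scale(2)   # specialized version with factor baked in
triple = scale(3)


def scale_uncurried(factor, value):
    return factor * value


# The standard library can bake in leading arguments of an ordinary function.
double_again = partial(scale_uncurried, 2)

results = (double(21), triple(5), double_again(21))  # (42, 15, 42)
```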

Composition lets programmers assemble functions like Lego bricks, reusing functionality that they or others have built by chaining it into a pipeline in which data flows from one function to the next. Unix pipes are a simplified form of this.
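Here is a small sketch of such a pipeline in Python. The `pipeline` helper is my own illustration (Python has no built-in composition operator); the three steps it chains are ordinary string methods.

```python
from functools import reduce


def pipeline(*steps):
    """Compose functions left to right: the output of one is the input of the next."""
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


# Three existing building blocks, assembled without modifying any of them.
normalize = pipeline(str.strip, str.lower, str.split)

words = normalize("  Hello Functional World ")  # ['hello', 'functional', 'world']
```

It reads much like a shell command with pipes: strip, then lowercase, then split.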

So although we cannot get rid of copy and paste, we can make it unnecessary through language support and through code reviews that keep it out of our code bases.

Global mutable state

This is probably the greatest problem in programming, although many do not see it as a problem.

Have you ever wondered why software bugs are so often fixed by rebooting the computer or restarting the offending application? The reason is state. The program has corrupted its state.

Somewhere in the program, state is changed in an invalid way. Such bugs are usually among the hardest to fix. Why? Because they are very hard to reproduce.

If you cannot reproduce a bug reliably, you cannot be sure you have fixed it. You can test your fix and see nothing go wrong. But is that because the problem is fixed, or because it just has not occurred yet?
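A deliberately tiny Python sketch shows why such bugs are hard to pin down. The names (`discount`, `run_sale`, `price_with_discount`) are invented for the example. The function gives different answers for the same input, depending on what some other part of the program did earlier.

```python
discount = 0  # global mutable state, visible to the whole module


def price_with_discount(price):
    # Not pure: the result depends on whatever `discount` holds right now.
    return price - discount


def run_sale():
    global discount
    discount = 10  # a change made far away from the code it affects


first = price_with_discount(100)   # 100
run_sale()
second = price_with_discount(100)  # 90: same input, different output
```

In a real program the call to `run_sale` might sit in another module and run only under rare conditions, which is exactly what makes the bug hard to reproduce.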

Proper state management is the most important principle that needs to be implemented to ensure program reliability.

Functional programming solves this problem by setting restrictions for programmers at the language level. Programmers cannot create mutable variables.

At first it seems the language designers have gone too far and it is time to reach for the pitchforks. But once you actually work with such systems, you see that you can manage state while keeping all data structures immutable, that is, once a variable gets its value, it can never change.

This does not mean that state cannot change. It simply means that to change it, you must pass the current state to a function that produces the new state. Before you hackers out there start sharpening your pitchforks again, I can assure you that there are mechanisms that optimize such operations behind the scenes using structural sharing.
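The "pass the current state in, get a new state out" idea can be approximated in Python with a frozen dataclass. This is a sketch under my own assumptions: `Account` and `deposit` are invented names, Python only enforces immutability for the frozen class rather than for the whole language, and `dataclasses.replace` makes a plain copy rather than using structural sharing.

```python
from dataclasses import FrozenInstanceError, dataclass, replace


@dataclass(frozen=True)
class Account:
    owner: str
    balance: int


def deposit(account: Account, amount: int) -> Account:
    """Return a NEW state; the state passed in is left untouched."""
    return replace(account, balance=account.balance + amount)


before = Account(owner="ada", balance=100)
after = deposit(before, 50)

try:
    before.balance = 0  # direct mutation is rejected for frozen dataclasses
    mutation_blocked = False
except FrozenInstanceError:
    mutation_blocked = True
```

Any code still holding `before` sees exactly what it saw earlier, so no distant part of the program can corrupt it.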

Note that the mutation still happens under the hood. It is the same story as the elimination of GOTO: the compiler and the runtime still use GOTO, it is just not available to programmers.

Where side effects must occur, functional programming has ways of fencing off the potentially dangerous parts of a program. In good implementations, these parts of the code are clearly marked as dangerous and kept separate from the pure code.

And when 98% of the code has no side effects, state-corrupting bugs can hide only in the remaining 2%. This gives the programmer a good chance of finding such errors, because the dangerous parts are corralled.

That is, by limiting programmers to only (or mostly) pure functions, we create safer and more reliable programs.

Dynamic typing

There is another long-running argument, over static typing versus dynamic typing. With static typing, the type of a variable is checked at compile time. Once you specify the type, the compiler helps you determine whether you are using it correctly.

The objection to static typing is that it places an unnecessary burden on the programmer and clutters the code with verbose type information. This type information is syntactically “noisy” because it sits right alongside the function definitions.

With dynamic typing, the type of a variable is never specified and is not checked at compile time. In fact, most dynamically typed languages are not compiled at all.

The objection to dynamic typing is that, although the code is much cleaner, the programmer cannot catch every case where a variable is misused. Such cases cannot be detected until the program runs. This means that, despite everyone's best efforts, type errors make it into production.

So which is better? Since we are discussing restricting programmers, you probably expect me to advocate static typing despite its drawbacks. Generally yes, but why not take the best of both worlds?

It turns out that not all systems with static typing are created equal. Many functional programming languages support type inference, in which the compiler can determine the types of the functions you create based on how you use them.

This means we can have static typing without writing out too many types. Best practice says that type annotations should be written explicitly rather than left to the compiler, but in languages such as Haskell and Elm the annotation syntax is unobtrusive and actually quite helpful.

Non-functional, i.e. imperative, languages with static typing force the programmer to spell out types while giving almost nothing in return.

By comparison, the Haskell and Elm type systems actually help programmers write better code and tell them at compile time when the program will not work correctly.

So, by limiting programmers to good static typing, we let the compiler catch errors, infer types, and assist with coding, rather than burden the developer with verbose, intrusive type information.

Testing

Writing test code is the bane of the modern programmer's life. Very often, developers spend more time writing test code than writing the code under test.

Tests for functions that interact with databases or web servers are difficult (if not impossible) to automate. There are usually two options.

- Do not write tests

- Simulate the database or server

Option 1 is certainly not the best, but many people choose it, because simulating complex systems can take more time than writing the module under test.

But if we restrict the code to pure functions, they cannot interact with the database directly, because that would cause side effects or mutations. We still need to access the database, but now our layer of dangerous code is a very thin interface layer, while most of the module remains pure.
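A sketch of that split in Python, using the standard `sqlite3` module with an in-memory database. The table, the `load_prices` function, and the `apply_discount` function are all invented for this illustration.

```python
import sqlite3


def apply_discount(rows, percent):
    """Pure core: plain data in, plain data out. Prices are integer cents."""
    return [(name, price * (100 - percent) // 100) for name, price in rows]


def load_prices(conn):
    """Thin impure layer: the only function that touches the database."""
    query = "SELECT name, price FROM products ORDER BY name"
    return conn.execute(query).fetchall()


# Set up a throwaway in-memory database for the example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)", [("book", 2000), ("pen", 150)]
)

discounted = apply_discount(load_prices(conn), 10)
conn.close()
```

The business logic in `apply_discount` can be tested with ordinary lists and no database at all. Only the few lines in `load_prices` need a real or simulated connection.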

Testing pure functions is much easier. But we still have to write the test code that is the bane of our lives. Or do we?

It turns out there are tools for automatically testing functional programs. All the programmer has to provide are the properties that the functions must obey, for example that one function is the inverse of another. Haskell's automated tester is called QuickCheck.
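To give a feel for the idea, here is a hand-rolled imitation in Python. It is not QuickCheck and does not use its API; `check_property`, `random_text`, `encode`, and `decode` are all names I made up. The sketch generates random inputs and checks the inverse-function property mentioned above.

```python
import random


def encode(text):
    return [ord(ch) for ch in text]


def decode(codes):
    return "".join(chr(code) for code in codes)


def random_text(rng):
    """Generate a random printable ASCII string of length 0 to 20."""
    length = rng.randint(0, 20)
    return "".join(chr(rng.randint(32, 126)) for _ in range(length))


def check_property(prop, generate, runs=200, seed=0):
    """Return the first generated input that breaks prop, or None if all pass."""
    rng = random.Random(seed)
    for _ in range(runs):
        sample = generate(rng)
        if not prop(sample):
            return sample
    return None


# Property: decode is the inverse of encode.
counterexample = check_property(lambda s: decode(encode(s)) == s, random_text)
```

We state the property once and let the machine invent the test cases. This only works well because the functions are pure: a random input cannot leave anything behind that would affect the next run.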

So, by restricting most functions to be pure, we make testing much easier, and in some cases trivial.

The end of Moore's Law

Moore's law, which is not really a law but an empirical observation, says that the computing power of computers doubles roughly every two years.

This law held for over 50 years. But, unfortunately, we are reaching the limits of current technology. And developing technology for building computers that are not based on silicon may take decades.

Until then, the best way to double a computer's speed is to double the number of cores, i.e. the computing "engines" of the CPU. The problem is not that hardware manufacturers cannot give us more cores. The problem is batteries and software.

Doubling the processing power means doubling the power the processor consumes. That would drain batteries even faster than today. Battery technology lags far behind users' insatiable appetites.

So before adding more cores and draining batteries, we should perhaps make better use of the cores we already have. This is where software comes into play. In today's imperative programming languages, it is very difficult to make programs run in parallel.

Today, implementing parallelism is a heavy burden on the programmer. The program must be sliced and diced into parallel parts. This is no easy task. And in languages such as JavaScript, programmers have no control over this in practice, because the code is single-threaded and cannot run in parallel.

But with pure functions, it does not matter in which order they run. All that matters is that their inputs are available. This means that the compiler or the runtime can decide when to run which functions.
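Python will not parallelize anything for us automatically, so the sketch below hands the scheduling to a thread pool explicitly. It illustrates only the order-independence claim: because `collatz_steps` (an arbitrary pure function I picked for the example) touches no shared state, running the calls concurrently gives exactly the same results as running them one by one. In standard CPython, threads do not speed up CPU-bound work like this, so treat it as a demonstration of safety, not of performance.

```python
from concurrent.futures import ThreadPoolExecutor


def collatz_steps(n: int) -> int:
    """Pure: counts the Collatz steps needed to reach 1 from n."""
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


inputs = list(range(1, 21))

sequential = [collatz_steps(n) for n in inputs]

with ThreadPoolExecutor(max_workers=4) as pool:
    # The pool decides when each call runs; map returns results in input order.
    concurrent = list(pool.map(collatz_steps, inputs))

same_results = sequential == concurrent
```

No locks or synchronization were needed, because there is nothing shared to protect.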

By limiting themselves to pure functions, programmers are freed from worrying about concurrency.

Functional programs will be able to make optimal use of multi-core machines without adding complexity for the developer.

Do more with less

As we can see, the right restrictions can significantly improve our art, our designs, and life itself.

Hardware developers have benefited greatly from the natural limitations of their tools, which have simplified their work and enabled amazing progress over the past decades.

It seems to me that it is time for us software developers to limit ourselves in order to achieve more.

Source: https://habr.com/ru/post/345886/
