Introducing AVAudioEngine for working with sound on iOS

Search the Internet for articles on this topic and you will be surprised how few there are. Perhaps the descriptions in the class headers are enough for most people, or perhaps everyone prefers to learn the class by experiment. Either way, for those meeting this class for the first time or looking for illustrative examples, we offer this simple practical guide from our partners, Music Topia.

In terms of working with sound, Apple is ahead of its competitors, and this is no accident. The company offers good tools for playing, recording and processing tracks. Thanks to this, on the AppStore you can see a huge number of applications that somehow work with audio content. These include players with good sound ( Vox ), audio editors with tools for editing and applying effects ( Sound Editor ), voice changing applications ( Voicy Helium ), and various musical instrument simulators that give a fairly accurate simulation of the corresponding sound ( Virtuoso Piano ) , and even simulators DJ-installations ( X Djing ).

')

To work with audio, Apple provides the AVFoundation framework, which combines several tools. For example, AVAudioPlayer is used to play a single track, and AVAudioRecorder is used to record sound from a microphone. And if you only need the functions provided by these classes, then just use them. If you need to impose any effect, play several tracks at the same time, mix them, do processing or editing audio, or record sound from the output of a certain audio node, AVAudioEngine will help you with this. Most of all this class attracts the possibility of imposing effects on the track. Just on the basis of these effects is built a lot of applications with equalizers and the ability to change the voice. In addition, Apple allows developers to create their own effects, sound generators and instruments.

Let's start with the main AVAudioEngine engine. This engine is a group of audio connections that generate and process audio signals and represent audio inputs and outputs. It can also be described as a scheme of audio nodes that are arranged in a certain way to achieve the desired result. That is, we can say that AVAudioEngine is the motherboard, on which the chips are placed (in our case, audio nodes).

By default, the engine is connected to an audio device and automatically renders in real time. It can also be configured to work in manual mode, that is, without connecting to a device, to perform rendering in response to a request from a client, usually in real time or even faster.

Working with this class, it is necessary to perform initializations and methods in a certain order so that all operations are carried out correctly. Here is a sequence of actions for the points:

1. Audio session setup

Without setting up an audio session, the application may not work correctly or there will be no sound at all. The specific list of configuration actions depends on what functions the engine performs in the application: recording, playing, or both. The following example sets up the audio session only for playback:

To set up for recording, you must set the category AVAudioSessionCategoryRecord. All categories can be found here .

2. Creating the engine

For this engine to work, you need to import the AVFoundation framework and initialize the class.

Initialization is as follows:

3. Creating nodes

AVAudioEngine works with various child classes of the AVAudioNode class that are attached to it. Nodes have bus entry and exit, which can be considered as points of connection. For example, effects usually have one input bus and one output bus. The buses have formats presented in the form of AudioStreamBasicDescription , which include such parameters as the sampling frequency, the number of channels and others. When connections are created between nodes, in most cases these formats should match each other, but there are exceptions.

Consider each type of audio nodes separately.

The most common type of audio node is AVAudioPlayerNode. This variety, as the name implies, is used to play audio. The node plays the sound either from the specified AVAudioBuffer view buffer, or from segments of the audio file opened by AVAudioFile. Buffers and segments can be scheduled to play at a certain moment or be played immediately after the previous segment.

Example of initialization and playback:

Next, consider the AVAudioUnit class, which is also a child of AVAudioNode. AVAudioUnitEffect (a child class of AVAudioUnit) acts as a parent class for effect classes. The most commonly used effects are: AVAudioUnitEQ (equalizer), AVAudioUnitDelay (delay), AVAudioUnitReverb (echo effect) and AVAudioUnitTimePitch (acceleration or deceleration effect, pitch). Each of them has its own set of settings for changing and processing sound.

Initialization and adjustment of the effect on the example of the delay effect:

The next node, AVAudioMixerNode, is used for mixing. Unlike other nodes that have exactly one input node, the mixer can have several. Here you can combine player nodes with effect nodes and an equalizer, after which the node output is connected to AVAudioOutputNode to output the final sound.

An example of initializing mixer nodes and output:

4. Attaching nodes to the engine

However, a node ad alone is not enough to make it work. It must be attached to the engine and connected to other nodes via input and output buses. The engine supports dynamic connection, disconnection and deletion of nodes during startup with a few limitations:

For attaching nodes to the engine, the “attachNode:” method is used, and for detaching from the engine - “detachNode:”.

5. Connecting the nodes together

All attached nodes must be connected to the general scheme for audio output. Connections depend on what you want to output. You need effects - connect the effect nodes to the player node, you need to combine two tracks - connect two player nodes to the mixer. Or maybe you need two separate tracks: one with a clear sound, and the second with a processed one — this can also be realized. The order of the connection is not so important, but the sequence of connections in the end must be connected to the main mixer, which, in turn, is connected to the audio output node. The only exception is the input nodes, which are usually used for recording from a microphone.

I will give examples on schemes and in the form of a code:

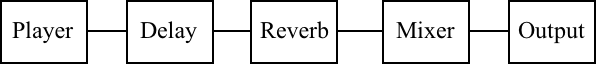

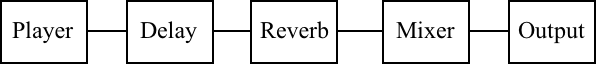

1) Player with effects

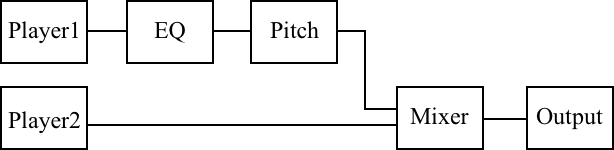

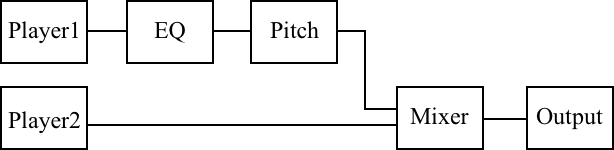

2) Two players, after one of which sound processing

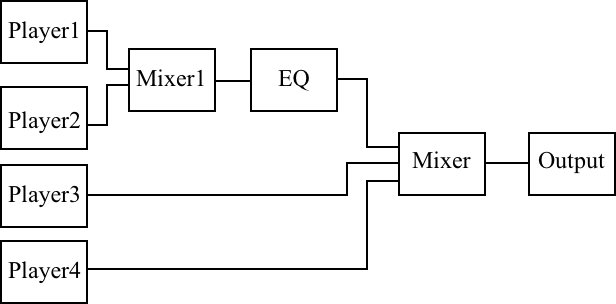

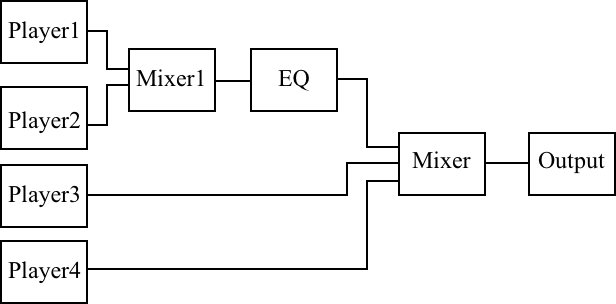

3) Four players, two of which are processed by the equalizer

Now that we have reviewed all the necessary steps for working with the engine, we will write a small example to see all these actions together and better present the order in which they should be performed. Here is an example of creating an equalizer player with low, middle and high frequencies on the AVAudioEngine engine:

Well, an example of the playback method:

When developing with AVAudioEngine you should not forget about some things, without which the application will not work correctly or crash.

1. Audio session and launch test before playing

Before playing, you should always set up an audio session: for one reason or another, it may drop or change the category during the use of the application. Also, before playing, you should check whether the engine is running, as it may be stopped (for example, as a result of switching to another application with the same engine). Otherwise there is a risk that the application will crash.

2. Switching the headset

Another important point is the connection / disconnection of headphones or a bluetooth headset. At the same time, the engine also stops, and often the played track stops playing. Therefore, it is worth catching this moment by adding an observer to receive the notification “AVAudioSessionRouteChangeNotification”. In the selector, you will need to set the audio session and restart the engine.

In this article, we familiarized ourselves with AVAudioEngine, examined by points how to work with it, and gave some simple examples. This is a good tool in the arsenal of Apple, with which you can make a lot of interesting applications of varying degrees of complexity, to some extent working with sound.

Apple is well ahead of its competitors when it comes to working with sound, and this is no accident. The company offers good tools for playing, recording and processing tracks. As a result, the App Store carries a huge number of applications that work with audio in one way or another: players with good sound (Vox), audio editors with tools for editing and applying effects (Sound Editor), voice changers (Voicy Helium), musical instrument simulators that reproduce the real sound fairly accurately (Virtuoso Piano), and even DJ deck simulators (X Djing).

For working with audio, Apple provides the AVFoundation framework, which brings several tools together. AVAudioPlayer plays a single track, and AVAudioRecorder records sound from the microphone. If those classes cover everything you need, just use them. If you need to apply an effect, play several tracks at once, mix them, process or edit audio, or record sound from the output of a particular audio node, AVAudioEngine is the tool for the job. Its biggest attraction is the ability to apply effects to a track; many equalizer and voice-changing applications are built on exactly these effects. In addition, Apple lets developers create their own effects, sound generators and instruments.

Key elements and their interrelationship

Let's start with AVAudioEngine itself. The engine is a group of connected audio nodes that generate and process audio signals and represent audio inputs and outputs. It can also be described as a graph of audio nodes arranged in a particular way to achieve the desired result. In other words, AVAudioEngine is the motherboard, and the audio nodes are the chips placed on it.

By default, the engine is connected to an audio device and renders automatically in real time. It can also be switched to manual rendering mode, in which it is not connected to a device and renders in response to requests from the client, normally at or faster than the real-time rate.
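To make manual rendering mode more concrete, here is a minimal sketch of offline rendering. It is not from the original article: the helper names `FramesToRender` and `RenderFileOffline` and the block size of 4096 frames are assumptions chosen for illustration, and the manual rendering API it relies on requires iOS 11 or later.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: how many frames to request in the next render call.
static AVAudioFrameCount FramesToRender(AVAudioFrameCount capacity,
                                        AVAudioFramePosition position,
                                        AVAudioFramePosition length) {
    AVAudioFramePosition remaining = length - position;
    return (remaining < (AVAudioFramePosition)capacity) ? (AVAudioFrameCount)remaining : capacity;
}

// Hypothetical helper: renders `sourceFile` through an engine without an audio device.
// Returns the number of frames rendered, or -1 if setup failed.
static AVAudioFramePosition RenderFileOffline(AVAudioFile *sourceFile, NSError **outError) {
    AVAudioEngine *engine = [[AVAudioEngine alloc] init];
    AVAudioPlayerNode *player = [[AVAudioPlayerNode alloc] init];
    [engine attachNode:player];
    [engine connect:player to:engine.mainMixerNode format:sourceFile.processingFormat];

    // Manual rendering must be enabled while the engine is stopped.
    if (![engine enableManualRenderingMode:AVAudioEngineManualRenderingModeOffline
                                    format:sourceFile.processingFormat
                         maximumFrameCount:4096
                                     error:outError]) {
        return -1;
    }
    if (![engine startAndReturnError:outError]) {
        return -1;
    }
    [player scheduleFile:sourceFile atTime:nil completionHandler:nil];
    [player play];

    AVAudioPCMBuffer *buffer =
        [[AVAudioPCMBuffer alloc] initWithPCMFormat:engine.manualRenderingFormat
                                      frameCapacity:engine.manualRenderingMaximumFrameCount];

    // The client pulls audio block by block; nothing is sent to a device.
    while (engine.manualRenderingSampleTime < sourceFile.length) {
        AVAudioFrameCount frames = FramesToRender(buffer.frameCapacity,
                                                  engine.manualRenderingSampleTime,
                                                  sourceFile.length);
        AVAudioEngineManualRenderingStatus status =
            [engine renderOffline:frames toBuffer:buffer error:outError];
        if (status != AVAudioEngineManualRenderingStatusSuccess) {
            break; // an error occurred, or the engine could not render right now
        }
        // `buffer` now holds buffer.frameLength rendered frames (for example, to write to a file).
    }

    AVAudioFramePosition rendered = engine.manualRenderingSampleTime;
    [player stop];
    [engine stop];
    return rendered;
}
```

Because nothing waits for the hardware clock, a loop like this can process a file as fast as the CPU allows, which is what "faster than real time" refers to.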

When working with this class, initialization and method calls have to happen in a particular order for everything to work correctly. The steps are:

- Set up the AVAudioSession audio session.

- Create the engine.

- Create the AVAudioNode nodes.

- Attach the nodes to the engine (attach).

- Connect the nodes to each other (connect).

- Start the engine.

1. Audio session setup

Without a configured audio session, the application may behave incorrectly or produce no sound at all. The exact configuration depends on what the engine does in the application: recording, playback, or both. The following example sets up the audio session for playback only:

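```objc
// Use the playback category so that audio is treated as the app's main purpose.
[[AVAudioSession sharedInstance] setCategory:AVAudioSessionCategoryPlayback error:nil];
// Route output to the built-in speaker (Apple documents this override for the play-and-record category).
[[AVAudioSession sharedInstance] overrideOutputAudioPort:AVAudioSessionPortOverrideSpeaker error:nil];
// Activate the session.
[[AVAudioSession sharedInstance] setActive:YES error:nil];
```

To set the session up for recording, use the category AVAudioSessionCategoryRecord instead. All available categories are listed in Apple's AVAudioSession documentation.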
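As a rough sketch of how the category could be chosen for each of the three cases (playback, recording, or both), with errors checked instead of ignored. The helper names `SessionCategoryFor` and `ConfigureAudioSession` are assumptions made for this example, not part of the original article or of AVFoundation.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: maps what the app needs to a session category.
static NSString *SessionCategoryFor(BOOL needsPlayback, BOOL needsRecording) {
    if (needsPlayback && needsRecording) {
        return AVAudioSessionCategoryPlayAndRecord;
    }
    if (needsRecording) {
        return AVAudioSessionCategoryRecord;
    }
    return AVAudioSessionCategoryPlayback;
}

// Hypothetical helper: sets the category and activates the session.
// Returns NO and fills outError if either step fails.
static BOOL ConfigureAudioSession(BOOL needsPlayback, BOOL needsRecording, NSError **outError) {
    AVAudioSession *session = [AVAudioSession sharedInstance];
    NSString *category = SessionCategoryFor(needsPlayback, needsRecording);
    if (![session setCategory:category error:outError]) {
        return NO;
    }
    return [session setActive:YES error:outError];
}
```

Passing a real `NSError **` rather than `nil` makes it possible to see why the session could not be configured, which helps when there is no sound.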

2. Creating the engine

To use the engine, import the AVFoundation framework and initialize the class.

```objc
#import <AVFoundation/AVFoundation.h>
```

Initialization is as follows:

```objc
AVAudioEngine *engine = [[AVAudioEngine alloc] init];
```

3. Creating nodes

AVAudioEngine works with subclasses of AVAudioNode that are attached to it. Nodes have input and output buses, which can be thought of as connection points. Effects, for example, usually have one input bus and one output bus. Each bus has a format, expressed as an AudioStreamBasicDescription, which includes parameters such as the sample rate and the number of channels. When nodes are connected, these formats should match in most cases, although there are exceptions.
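To see what a bus format contains, a sketch like the following can help. The helper names `DescribeOutputFormat` and `MakeStereoFormat` are hypothetical and exist only for this illustration.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: summarizes the format on one of a node's output buses.
static NSString *DescribeOutputFormat(AVAudioNode *node, AVAudioNodeBus bus) {
    AVAudioFormat *format = [node outputFormatForBus:bus];
    // AVAudioFormat wraps an AudioStreamBasicDescription; the raw struct is still reachable.
    const AudioStreamBasicDescription *asbd = format.streamDescription;
    return [NSString stringWithFormat:@"%.0f Hz, %u channel(s), %u bits per channel",
            format.sampleRate,
            (unsigned)format.channelCount,
            (unsigned)asbd->mBitsPerChannel];
}

// Hypothetical helper: builds an explicit stereo format in the standard
// (deinterleaved 32-bit float) layout, suitable for the format: argument of connect:to:format:.
static AVAudioFormat *MakeStereoFormat(double sampleRate) {
    return [[AVAudioFormat alloc] initStandardFormatWithSampleRate:sampleRate channels:2];
}
```

When `nil` is passed as the format in a connect call, the engine uses the format already set on the source node's output bus, which is why many simple graphs never construct a format explicitly.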

Let's look at each type of audio node in turn.

The most common type of audio node is AVAudioPlayerNode. As the name implies, it is used to play audio. The node plays sound either from buffers (AVAudioPCMBuffer, a subclass of AVAudioBuffer) or from segments of an audio file opened with AVAudioFile. Buffers and segments can be scheduled to play at a specific time or immediately after the previous segment.
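Buffer-based and timed scheduling can be sketched as follows. The helpers `PlayerTimeAfterSeconds` and `LoopFile` are hypothetical names introduced for this example, and the sketch assumes the player node is already attached to a running engine and connected.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: a sample-based time `seconds` into the player's timeline,
// usable as the atTime: argument of the schedule methods.
static AVAudioTime *PlayerTimeAfterSeconds(double seconds, double sampleRate) {
    AVAudioFramePosition sampleTime = (AVAudioFramePosition)(seconds * sampleRate);
    return [AVAudioTime timeWithSampleTime:sampleTime atRate:sampleRate];
}

// Hypothetical helper: reads a whole file into memory and loops it on `player`.
static BOOL LoopFile(AVAudioPlayerNode *player, NSURL *url, NSError **outError) {
    AVAudioFile *file = [[AVAudioFile alloc] initForReading:url error:outError];
    if (file == nil) {
        return NO;
    }
    AVAudioPCMBuffer *buffer =
        [[AVAudioPCMBuffer alloc] initWithPCMFormat:file.processingFormat
                                      frameCapacity:(AVAudioFrameCount)file.length];
    if (buffer == nil || ![file readIntoBuffer:buffer error:outError]) {
        return NO;
    }
    // atTime:nil means "as soon as possible"; the loop option repeats the buffer until the node is stopped.
    [player scheduleBuffer:buffer
                    atTime:nil
                   options:AVAudioPlayerNodeBufferLoops
         completionHandler:nil];
    [player play];
    return YES;
}
```

Loading an entire file into a buffer suits short sounds; for long tracks, scheduling the file itself avoids holding all the samples in memory.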

Example of initialization and playback:

```objc
AVAudioPlayerNode *playerNode = [[AVAudioPlayerNode alloc] init];
…
// Open the bundled file and schedule it on the player node.
NSURL *url = [[NSBundle mainBundle] URLForResource:@"sample" withExtension:@"wav"];
AVAudioFile *audioFile = [[AVAudioFile alloc] initForReading:url error:nil];
// atTime:nil plays the file as soon as possible.
[playerNode scheduleFile:audioFile atTime:nil completionHandler:nil];
[playerNode play];
```

Next, consider AVAudioUnit, which is also a subclass of AVAudioNode. AVAudioUnitEffect (a subclass of AVAudioUnit) is the parent class for effect classes. The most commonly used effects are AVAudioUnitEQ (equalizer), AVAudioUnitDelay (delay, or echo), AVAudioUnitReverb (reverberation) and AVAudioUnitTimePitch (playback speed and pitch changes). Each has its own set of parameters for altering and processing sound.
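As a rough sketch of how two of the effects listed above might be configured. The helper names and the specific values (a large-hall preset at 40% mix, a pitch shift of 1000 cents) are arbitrary choices for illustration, not recommendations from the original article.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: a reverb using one of the built-in factory presets.
static AVAudioUnitReverb *MakeHallReverb(void) {
    AVAudioUnitReverb *reverb = [[AVAudioUnitReverb alloc] init];
    [reverb loadFactoryPreset:AVAudioUnitReverbPresetLargeHall];
    reverb.wetDryMix = 40.0f; // percent: 0 is the dry signal only, 100 is the effect only
    return reverb;
}

// Hypothetical helper: raises the pitch without changing the playback speed.
static AVAudioUnitTimePitch *MakeHighVoice(void) {
    AVAudioUnitTimePitch *timePitch = [[AVAudioUnitTimePitch alloc] init];
    timePitch.pitch = 1000.0f; // in cents (100 cents = 1 semitone), valid range -2400 to 2400
    timePitch.rate = 1.0f;     // playback rate, 1.0 leaves the speed unchanged
    return timePitch;
}
```

Like any other node, these objects have no audible effect until they are attached to the engine and connected into the graph.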

Initializing and configuring an effect, using the delay effect as an example:

```objc
AVAudioUnitDelay *delay = [[AVAudioUnitDelay alloc] init];
delay.delayTime = 1.0f; // delay time in seconds, from 0 to 2
```

The next node, AVAudioMixerNode, is used for mixing. Unlike other nodes, which have exactly one input bus, a mixer can have several. Here you can combine player nodes with effect and equalizer nodes, after which the mixer's output is connected to AVAudioOutputNode to produce the final sound.

An example of initializing a mixer node and obtaining the output node:

```objc
AVAudioMixerNode *mixerNode = [[AVAudioMixerNode alloc] init];
AVAudioOutputNode *outputNode = engine.outputNode;
```

4. Attaching nodes to the engine

Declaring a node is not enough to make it work, however. It must be attached to the engine and connected to other nodes through input and output buses. The engine supports dynamically connecting, disconnecting and removing nodes while it is running, with a few limitations:

- all dynamic reconnections must happen upstream of a mixer node;

- while removing an effect usually results in the neighboring nodes being connected automatically, removing a mixer or a node whose input and output channel counts differ is likely to break the graph.
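A dynamic reconnection within those limits could look roughly like this sketch, which swaps one effect for another between a source node and a mixer. `ReplaceEffect` is a hypothetical helper written for this example; it assumes `oldEffect` currently sits between `source` and `mixer`, and it makes the new connections explicitly rather than relying on automatic reconnection.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: replaces the effect between `source` and `mixer`.
static void ReplaceEffect(AVAudioEngine *engine,
                          AVAudioNode *source,
                          AVAudioNode *oldEffect,
                          AVAudioNode *newEffect,
                          AVAudioMixerNode *mixer) {
    // Remember the format so the new connections match the old ones.
    AVAudioFormat *format = [source outputFormatForBus:0];

    [engine disconnectNodeOutput:source]; // break source -> oldEffect
    [engine detachNode:oldEffect];        // detaching also removes the node's remaining connections

    [engine attachNode:newEffect];
    [engine connect:source to:newEffect format:format];
    // With a mixer as the destination, connect:to:format: picks the next available input bus.
    [engine connect:newEffect to:mixer format:format];
}
```

A call might look like `ReplaceEffect(engine, playerNode, delay, reverb, engine.mainMixerNode);`. All changes stay upstream of the mixer, in line with the first limitation above.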

To attach a node to the engine, use the attachNode: method; to detach it, use detachNode:.

```objc
[engine attachNode:playerNode];
[engine detachNode:delay];
```

5. Connecting the nodes together

All attached nodes must be wired into a single graph that leads to the audio output. The connections depend on what you want to hear. If you need effects, connect effect nodes after the player node; if you need to combine two tracks, connect two player nodes to a mixer. You can also keep two separate tracks, one clean and one processed. The order in which the connections are made is not important, but every chain must eventually reach the main mixer, which in turn is connected to the audio output node. The only exception is input nodes, which are usually used for recording from the microphone.
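For the input-node case, a minimal sketch of capturing microphone audio with a tap is shown here. The helper names and the buffer size of 4096 frames are assumptions for this example. It also assumes the audio session uses a category that allows recording and that the user has granted microphone access.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical constant: requested size of each captured buffer, in frames.
static const AVAudioFrameCount kTapBufferSize = 4096;

// Hypothetical helper: installs a tap on the microphone input and starts the engine.
static BOOL StartMicrophoneTap(AVAudioEngine *engine, NSError **outError) {
    AVAudioInputNode *input = engine.inputNode;
    AVAudioFormat *format = [input outputFormatForBus:0];

    [input installTapOnBus:0
                bufferSize:kTapBufferSize
                    format:format
                     block:^(AVAudioPCMBuffer *buffer, AVAudioTime *when) {
        // Called repeatedly with captured audio, possibly off the main thread.
        NSLog(@"Captured %u frames", (unsigned)buffer.frameLength);
    }];

    [engine prepare];
    return [engine startAndReturnError:outError];
}

// Hypothetical helper: removes the tap and stops the engine.
static void StopMicrophoneTap(AVAudioEngine *engine) {
    [engine.inputNode removeTapOnBus:0];
    [engine stop];
}
```

The same `installTapOnBus:bufferSize:format:block:` call works on other nodes as well, which is how sound can be recorded from the output of a particular node such as a mixer.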

I will give examples on schemes and in the form of a code:

1) Player with effects

[engine connect:playerNode to:delay format:nil]; [engine connect:delay to:reverb format:nil]; [engine connect:reverb to:engine.mainMixerNode format:nil]; [engine connect:engine.mainMixerNode to:outputNode format:nil]; [engine prepare]; 2) Two players, after one of which sound processing

[engine connect:player1 to:eq format:nil]; [engine connect:eq to:pitch format:nil]; [engine connect:pitch to:mixerNode fromBus:0 toBus:0 format:nil]; [engine connect:player2 to:mixerNode fromBus:0 toBus:1 format:nil]; [engine connect:mixerNode to:outputNode format:nil]; [engine prepare]; 3) Four players, two of which are processed by the equalizer

[engine connect:player1 to:mixer1 fromBus:0 toBus:0 format:nil]; [engine connect:player2 to:mixer1 fromBus:0 toBus:1 format:nil]; [engine connect:mixer1 to:eq format:nil]; [engine connect:eq to:engine.mainMixerNode fromBus:0 toBus:0 format:nil]; [engine connect:player3 to: engine.mainMixerNode fromBus:0 toBus:1 format:nil]; [engine connect:player4 to: engine.mainMixerNode fromBus:0 toBus:2 format:nil]; [engine connect:engine.mainMixerNode to:engine.outputNode format:nil]; [engine prepare]; Now that we have reviewed all the necessary steps for working with the engine, we will write a small example to see all these actions together and better present the order in which they should be performed. Here is an example of creating an equalizer player with low, middle and high frequencies on the AVAudioEngine engine:

```objc
- (instancetype)init {
    self = [super init];
    if (self) {
        // 1. Configure and activate the audio session for playback.
        AVAudioSession *session = [AVAudioSession sharedInstance];
        [session setCategory:AVAudioSessionCategoryPlayback error:nil];
        [session overrideOutputAudioPort:AVAudioSessionPortOverrideSpeaker error:nil];
        [session setActive:YES error:nil];

        // 2. Create the engine (engine and playerNode are instance variables).
        engine = [[AVAudioEngine alloc] init];

        // 3. Create the nodes.
        playerNode = [[AVAudioPlayerNode alloc] init];
        AVAudioMixerNode *mixerNode = engine.mainMixerNode;
        AVAudioOutputNode *outputNode = engine.outputNode;

        // Three parametric bands: low, middle and high frequencies.
        AVAudioUnitEQ *eq = [[AVAudioUnitEQ alloc] initWithNumberOfBands:3];
        eq.bands[0].filterType = AVAudioUnitEQFilterTypeParametric;
        eq.bands[0].frequency = 100.f;
        eq.bands[1].filterType = AVAudioUnitEQFilterTypeParametric;
        eq.bands[1].frequency = 1000.f;
        eq.bands[2].filterType = AVAudioUnitEQFilterTypeParametric;
        eq.bands[2].frequency = 10000.f;
        for (AVAudioUnitEQFilterParameters *band in eq.bands) {
            band.bypass = NO; // EQ bands are bypassed by default
        }

        // 4. Attach the nodes to the engine.
        [engine attachNode:playerNode];
        [engine attachNode:eq];

        // 5. Connect the nodes: player -> eq -> main mixer -> output.
        [engine connect:playerNode to:eq format:nil];
        [engine connect:eq to:mixerNode format:nil];
        [engine connect:mixerNode to:outputNode format:nil];

        // 6. Start the engine.
        [engine prepare];
        if (!engine.isRunning) {
            [engine startAndReturnError:nil];
        }
    }
    return self;
}
```

And an example of the playback method:

```objc
- (void)playFromURL:(NSURL *)url {
    AVAudioFile *audioFile = [[AVAudioFile alloc] initForReading:url error:nil];
    // atTime:nil plays the file as soon as possible.
    [playerNode scheduleFile:audioFile atTime:nil completionHandler:nil];
    [playerNode play];
}
```

Possible problems

When developing with AVAudioEngine, there are a few things to keep in mind; without them the application will misbehave or crash.

1. Checking the audio session and the engine before playback

Always set up the audio session before playing: for one reason or another it can be deactivated or have its category changed while the application is in use. Also check before playing whether the engine is running, since it may have been stopped (for example, after switching to another application that uses the engine too). Otherwise the application risks crashing.

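```objc
// Re-apply the session configuration before every playback.
[[AVAudioSession sharedInstance] setCategory:AVAudioSessionCategoryPlayback error:nil];
[[AVAudioSession sharedInstance] overrideOutputAudioPort:AVAudioSessionPortOverrideSpeaker error:nil];
[[AVAudioSession sharedInstance] setActive:YES error:nil];

// Restart the engine if something has stopped it.
if (!engine.isRunning) {
    NSError *error = nil;
    if (![engine startAndReturnError:&error]) {
        NSLog(@"Engine failed to start: %@", error);
    }
}
```

2. Switching the headset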

Another important point is the connection or disconnection of headphones or a Bluetooth headset. When that happens, the engine stops, and the track that was playing often stops with it. It is therefore worth catching this event by adding an observer for the AVAudioSessionRouteChangeNotification notification. In the selector, set up the audio session again and restart the engine.
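A handler for that notification might look like the following sketch. The class `RouteAwarePlayer`, its `engine` property and the helper `ShouldRestartForReason` are hypothetical, since the article does not show its own class in full; only the selector name is taken from the registration call in this section.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: only device plug and unplug events trigger a restart.
static BOOL ShouldRestartForReason(AVAudioSessionRouteChangeReason reason) {
    return reason == AVAudioSessionRouteChangeReasonNewDeviceAvailable ||
           reason == AVAudioSessionRouteChangeReasonOldDeviceUnavailable;
}

// Hypothetical owner of the engine.
@interface RouteAwarePlayer : NSObject
@property (nonatomic, strong) AVAudioEngine *engine;
@end

@implementation RouteAwarePlayer

- (void)audioRouteChangeListenerCallback:(NSNotification *)notification {
    NSNumber *value = notification.userInfo[AVAudioSessionRouteChangeReasonKey];
    AVAudioSessionRouteChangeReason reason =
        (AVAudioSessionRouteChangeReason)value.unsignedIntegerValue;
    if (!ShouldRestartForReason(reason)) {
        return;
    }
    // The notification may arrive on a background thread, so hop to the main queue.
    dispatch_async(dispatch_get_main_queue(), ^{
        AVAudioSession *session = [AVAudioSession sharedInstance];
        NSError *error = nil;
        if (![session setCategory:AVAudioSessionCategoryPlayback error:&error] ||
            ![session setActive:YES error:&error]) {
            NSLog(@"Audio session setup failed: %@", error);
            return;
        }
        if (!self.engine.isRunning && ![self.engine startAndReturnError:&error]) {
            NSLog(@"Engine restart failed: %@", error);
        }
    });
}

- (void)dealloc {
    // Stop receiving notifications when the owner goes away.
    [[NSNotificationCenter defaultCenter] removeObserver:self];
}

@end
```

Restarting the engine may not resume a player node that was stopped by the route change; whether to schedule and play the track again is left to the application.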

```objc
[[NSNotificationCenter defaultCenter] addObserver:self
                                         selector:@selector(audioRouteChangeListenerCallback:)
                                             name:AVAudioSessionRouteChangeNotification
                                           object:nil];
```

Conclusion

In this article we got acquainted with AVAudioEngine, went through how to work with it step by step, and looked at a few simple examples. It is a good tool in Apple's arsenal, and it can be used to build many interesting sound-related applications of varying complexity.

Source: https://habr.com/ru/post/345768/