Memory segmentation (Computer memory scheme)

I present to you, the translation of the article of one of the PHP developers, including version 7 and above, a certified engineer of ZendFramework. Currently working in SensioLabs and most of them are engaged in low-level things, including programming in C under Unix. Original article here.

I plan in the future to write technical articles on PHP related to a deep understanding of memory. I need my readers to have such knowledge that will help them understand some of the concepts of the further explanation. In order to answer this question, we will have to rewind time back in the 1960s. I'm going to explain to you how a computer works, or rather, how memory access occurs in a modern computer, and then you will understand what is causing this strange error message - Segmentation Fault .

What you will read here is a summary of the basics of computer architecture design. I will not go too far if it is not needed, and I will use well-known wording, so whoever works with a computer every day can understand such important concepts about how a PC works. There are many books about computer architecture. If you want to go further in this topic, I suggest you get some of them and start reading. In addition, open the source code of the OS kernel and examine it, be it the Linux kernel, or any other.

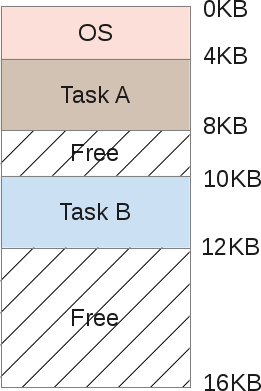

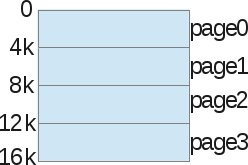

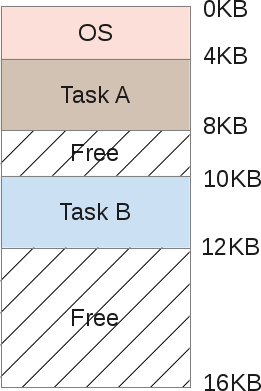

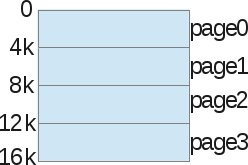

Back at the time when the computers were huge machines, heavier than a ton, inside you could find one processor with something like 16Kb of RAM. We will not go further =) During this period, the computer cost about $ 150,000 and could perform exactly one task at a time. If at that time we drew a scheme of its memory, the architecture would look like this:

')

As you can see, the memory size is 16KB, and consists of two parts:

The role of the operating system was to control the interruptions of the central process by hardware. Thus, the operating system needed in memory for itself to copy data from the device and work with them (PIO mode). The main memory was also needed to provide data to the screen, since the video adapters had from zero to several kilobytes of memory. And our solo program used the OS memory to achieve the objectives.

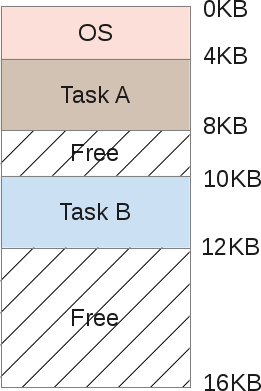

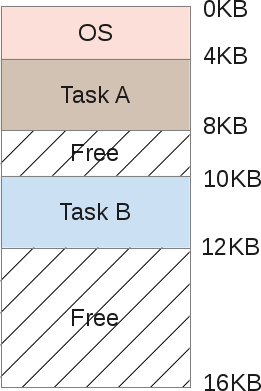

But one of the main problems of such a model is that a computer (worth $ 150,000) could perform only one task at a time, and this task was carried out for an awfully long time: whole days to process several kilobytes of data. At such a huge price, it is clearly not possible to buy several machines in order to perform several procedures at the same time. So people tried to allocate machine resources. It was the time of birth multitasking. Keep in mind that it was still very early to talk about multiprocessor PCs. How can we force one machine with one processor, and actually solve several different problems? The answer to this question is planning. While one process is busy waiting for I / O (waiting for an interrupt), the processor can start another process. We will not talk about planning at all levels (too broad a topic), only about memory. If a machine can perform several tasks, one process after another, this means that the memory will be distributed approximately like this:

Both tasks A and B are stored in RAM, as copying them back and forth to disk is a very resource-intensive process. The data must remain in memory on an ongoing basis, as their respective tasks still work. The scheduler gives some processor time, then task A, then B, and so on ... each time giving access to its memory area. But wait, there is a problem. When one programmer writes task code B, he must know the addresses of the memory boundaries. For example, task B will be located with 10Kb of memory up to 12Kb. Therefore, each address in the program must be strictly recorded precisely in these limits. If the machine then performs 3 more tasks, the address space will be divided into more areas, and the memory boundaries of task B will move. The programmer would have to rewrite his program in order to use less memory and rewrite each memory pointer address.

Another problem is also obvious here: what if task B accesses memory of task A? This can easily happen because when you manipulate pointers, a small error in the calculation results in a completely different address. This can spoil task A data (overwrite it). There is also a security issue, that if Task A works with very sensitive data? There is no way to prevent task B from reading some area of task A. Lastly, what if task B overwrites the OS memory by mistake? For example, OS memory from 0Kb to 4Kb, in case task B overwrites this section, the operating system will most likely crash.

Thus, you need the help of the operating system and hardware to be able to run multiple tasks in memory. One way to help is to create what is called an address space. The address space is an abstraction of memory that the operating system will give to the process, and this concept is absolutely fundamental, since at the present time every part of the computer that you encounter in your life is designed in this way. Now there is no other model (in civil society, the army can keep a secret).

The address space contains all the tasks (processes) that must be run:

The address space is divided as follows:

Since the stack and the heap are expandable zones, they are located at opposite places in the same address space: the stack will grow up, and the heap down. Both can grow freely, each in the direction of the other. The OS should simply check that the zones do not overlap, using the virtual space correctly.

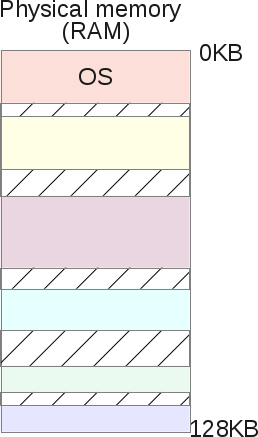

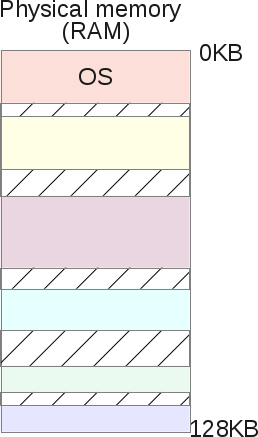

If task A received the address space, the one we saw, as well as task B. So how can we place them in memory? It seems strange, but the address space of task A starts from 0KB to 16Kb, as well as task B. The trick is to create a virtual environment. In fact, the picture of the placement of A and B in memory:

When task A tries to access memory in its address space, for example, the 11K index, somewhere in its own stack, the operating system executes the hack and actually loads the 1500 memory index, because the 11K index belongs to task B. In fact, the entire address space of each program in memory is simply virtual memory. Each program running on a computer accesses virtual addresses; with the help of some hardware chips, the OS will deceive the process when it tries to access any area of memory.

The OS virtualizes memory and ensures that any task cannot access memory that it does not own. Memory virtualization allows you to isolate the process. Task A can no longer access the memory of task B and cannot access the OS memory. And all this is completely transparent to tasks at the user level, thanks to tons of complex OS kernel code. Thus, the operating system serves each process memory request. It works very efficiently - even if too many different programs are running. To achieve this goal, the process receives assistance from the hardware, mainly from the processor and some electronic components around it, such as a memory management unit (MMU). The MMU appeared in the early 70s, along with IBM, as separate chips. Now they are embedded directly in our CPU chips and are required for any modern OS to run. In fact, the operating system does not perform tons of operations, but relies on certain hardware behaviors that facilitate memory access.

Here is a small C program showing some memory addresses:

On my LP64 x86_64 machine, it shows:

We can see that the stack address is much higher than the heap address, and the code is located below the stack. But each of these 3 addresses are fakes: in physical memory, an integer 3 is not exactly located at 0x7ffe60a1465c. Remember that each user program manipulates virtual memory addresses, while kernel-level programs, such as the OS kernel itself ( or hardware driver code) can manipulate physical RAM addresses.

Address broadcasting is the formulation behind which a certain magic technique is hidden. The hardware (MMU) translates each virtual address of a user-level program to the correct physical address. Thus, the operating system remembers for each task the correspondence between its virtual and physical addresses. And this is a daunting task. The operating system manages the entire memory of tasks at the user level for each memory access requirement, thereby providing a complete illusion of the process. Thus, the operating system converts all physical memory into a useful, powerful, and simple abstraction.

Let's take a closer look at a simple script:

When the process starts, the operating system reserves a fixed area of physical memory, say, 16KB. It then stores the starting address of this space in a special variable called base. Then it sets another special variable, called the boundary (or limit) of the width of the space - 16KB. Next, the operating system saves these two values in a process table called PCB (Process Control Block).

And this is how the virtual address space process looks like:

And here is his physical image:

The OS decided to keep it in physical memory in the address range from 4K to 20K. Thus, the base address is set to 4K, and the limit is set to 4 + 16 = 20K. When this process is scheduled (given some processor time), the operating system reads back the limit values from the PCB and copies them into specific processor registers. When the CPU during operation tries to load, for example, a virtual 2K address (something on its heap), the CPU will add this base address obtained from the operating system. Thus, the memory access process will result in a physical location of 2K + 4K = 6K.

Physical Address = Virtual Address + Limit

If the received physical address (6K) is out of bounds (-4K | 20K-), it means that the process tried to gain access to the wrong memory that it does not own. The processor will generate an exception, and since the OS has an exception handler for this event, the OS is activated by the processor and will know that a memory exception has just occurred on the CPU. Then the OS will, by default, transmit a signal to the corrupted “SIGSEGV” process. A segmentation error, which by default (this can be changed) will complete the task with the message “A malfunction has occurred with invalid memory access.”

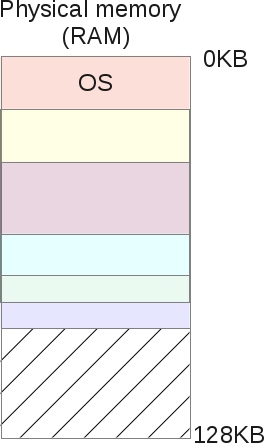

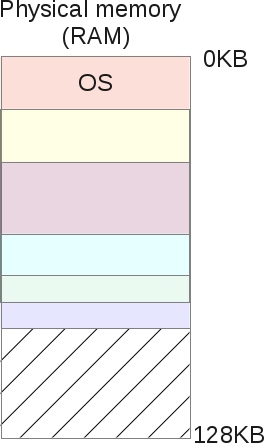

Even better, if task A is not scheduled, it means that it is retrieved from the CPU, as the scheduler asked to start another task (say task B). When performing task B, the OS can freely move the entire physical address space of task A. The operating system often gets processor time when executing user tasks. When the last system call is completed, control of the CPU returns to the OS, and before making a system call, the OS can do whatever it wants, managing memory, moving the entire process space to different physical memory card slots.

This is relatively simple: the operating system moves the 16K area to another 16K of free space, and simply updates the basic and associated task variables A. When the task is resumed and transferred to the CPU, the address translation process will still work, but this will not result same physical address as before.

Task A did not notice anything, from her point of view, her address space still starts from 0K to 16K. The operating system and the MMU hardware take full control of each memory access for task A, giving it complete illusion. Task A programmer manipulates his own resolved virtual addresses, from 0 to 16, and the MMU will take care of positioning in physical memory.

The image of the memory after moving will look like this:

At present, the programmer no longer has to wonder where his program is in RAM, if another task is running next to his own, and what addresses to manipulate. This is done by the OS itself along with a very productive and smart hardware - “Memory Control Unit” (MMU).

Pay attention to the appearance of the word "segmentation" - we are close to explaining why segmentation errors occur. In the previous chapter, we explained about translation and memory movement, but the model we used has its drawbacks:

We assumed that each virtual address space is fixed at a width of 16Kb. Obviously, this is not the case in reality. The operating system must maintain a list of physical memory free slots (width 16 Kb) to be able to find a place for any new process with a request to start or move the running process. How to do this effectively, so as not to slow down the entire system? As you can see, each process occupies a memory space of 16 Kb, even if the process does not use its entire address space, which is very likely. This model obviously spends a lot of memory, the process consumes 1KB of memory, and its memory section in physical memory is 16Kb. Such waste is called internal fragmentation: memory is reserved, but never used.

To solve some of these problems, we are going to dive into a more complex organization of memory in the OS - segmentation. Segmentation is easy to understand: we are expanding the idea of the “base and boundaries” of three logical memory segments: heap, code, and stack; of each process — instead of simply viewing the memory image as one unique object.

With this concept, the memory between the stack and the heap is no longer wasted. Like this:

Now it is easy to see that the free space in virtual memory for tasks A is no longer allocated in physical memory, memory usage becomes much more efficient. The only difference is that currently for any task, the OS must memorize not one pair of bases / borders, but three: one pair for each type of segment. The IMU takes care of the translation, just as before, and now supports 3 basic values, and 3 boundaries.

For example, here, task heap A has a base of 126K and 2K boundaries. The task then asks for access to the virtual address 3KB, on the heap; physical address 3Kb - 2Kb (start of the heap) = 1Kb + 126K (offset) = 127K. 127K is in front of 128K - this is the correct memory address that can be executed.

Segmentation of physical memory not only solves the problem of free virtual memory, freeing up more physical memory, but it also allows you to separate physical segments through various processes of virtual address spaces. If you run the same task twice, task A, for example, the code segment is exactly the same: both tasks perform the same processor commands. Both tasks will use their own stack and stack, access their own data sets. It is inefficient to duplicate a code segment in memory. The OS can now split it and save even more memory. For example:

In the picture above, A and B have their own code area in their respective virtual memory space, but under the hood. The operating system divides this area in the same physical memory segments. Both tasks A and B absolutely do not see this, for both of them - they own their memory. To achieve this, the OS must implement one more feature: the segment protection bit. The OS will create for each physical segment, register boundaries / limits for the MMU unit to work correctly, but it will also register the enable flag.

Since the code is not mutable, the code segments are all created with the RX enable flag. A process can load this area of memory for execution, but any process that attempts to write to this area of memory will be terminated by the OS. The remaining two segments: heap and stack are RW, processes can read and write from their own stack / heap, but they cannot execute code from it (this prevents program security flaws, where a bad user may want to damage the heap or stack to enter code This is impossible, since the heap and stack of segments are often not executable. Please note that this was not always the case, as it requires additional hardware support for efficient operation, This is called the "NX bit" under the Intel processor). Permissions memory segments are changeable at runtime: a task may require mprotect () from the OS. These memory segments are clearly visible under Linux, use the / proc / {pid} / maps or / usr / bin / pmap utilities

The following is an example for PHP:

Here we see in detail all the memory mappings. Addresses are virtual and display their memory permissions. As we can see, each shared object (.so) is mapped into the address space as several mappings (probably code and data), and code areas are executable, and under the hood they are divided into physical memory between each process that displays such a common object in its own address space ...

The biggest advantage of shared objects under Linux (and Unix) is memory savings. It is also possible to create a common zone leading to a common physical memory segment using the mmap () system call. The letter 's', appearing next to this area, means “shared”.

We have seen that segmentation solves the problem of unused virtual memory. When a process does not use a certain amount of memory, this process is not mapped to physical memory due to the segments that correspond to the selected memory. However, this is not entirely true. What if the process requires 16Kb heaps? The OS will most likely create a 16KB physical memory segment. But if the user then releases 2K of such memory? Then, the OS should reduce the 16Kb segment to 14kb. What if the programmer now asks for 30Kb heaps? The old segment of 14Kb should now grow to 30 KB, but can it do it? Other segments can now surround our 14kb segment, hindering its growth. Then the OS will have to look for 30 KB of free space, and then move the segment.

The big problem with segments is that they lead to very fragmented physical memory, because they continue to grow as user tasks request and free memory. The operating system must maintain and manage a list of free pieces of memory.

Sometimes, the OS gains some available space by summing all free segments, but since it does not touch, it cannot use this space and must deny the memory requirement of the process, even if there is space in the physical memory. This is a very bad scenario. The operating system may try to compress the memory by merging all the free sites into one large piece that could be used in the future to satisfy the memory request.

But this compression algorithm is complicated for the CPU, and at this time no user process can get the CPU: the operating system is fully loaded by reorganizing its physical memory, thus the system becomes unusable. Memory segmentation solves many problems of memory management and multitasking, but it also reveals real flaws. Thus, there is a need to expand the possibilities of segmentation and correct these shortcomings. From this came another concept - “pagination of memory”.

The pagination of memory pages shares some concepts of memory segmentation and tries to solve its problems. We saw that the main problem with memory segmentation is that the segments will grow and shrink very often, as the user process requests and frees the memory. Sometimes the operating system encounters a problem. It cannot find a large area of free space to satisfy the memory request of a user process, because physical memory has become heavily fragmented over time: it contains many segments of different sizes throughout physical memory, which leads to highly fragmented physical memory.

A breakdown solves this problem with a simple concept: what if for each physical distribution the kernel allocates a fixed size? Pages are fixed size physical memory segments. If the operating system uses fixed-size distributions, it is much easier to manage space, which ultimately negates memory fragmentation.

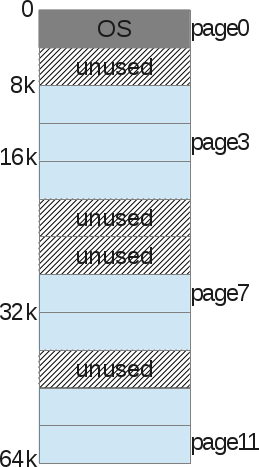

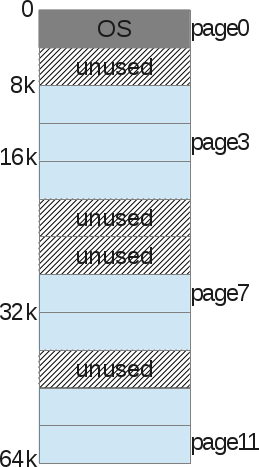

Let's show the example again, assuming a small 16KB virtual address space to facilitate the presentation:

With pagination, we are not talking about a heap, stack, or code segment. We divide the entire virtual memory process into fixed-size zones: we call them pages. In the example above, we have divided the address space in the form of 4 pages, 4KB each.

Then we do the same with physical memory.

And the operating system simply stores what is called the “Processor Pages Table” - the connection between one virtual process memory page and the basic physical page (which we call the “frame page”).

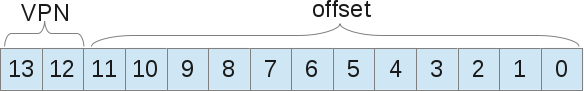

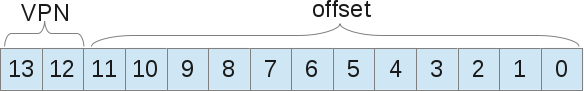

With this method of memory management, there are no more problems in the free management of space. The page frame is displayed (used) or not; it is easy for the kernel to find a sufficient number of pages to satisfy the user process memory request. It simply stores a list of free frame pages, and also looks at it with each request for memory. A page is the smallest unit of memory an OS can manage. The page (at our level) is indivisible. Together with the pages, each process is accompanied by a table of pages that stores address translations. Translations no longer use border values, as they did with segmentation, but use “virtual page number (VPN)” and “offset” on this page.

Let's show an example of address translation with page numbering. The virtual address space is 16Kb in size, i.e. we need 14 bits to represent the address (2 ^ 14 = 16Kb). The page size is 4 Kb, so we need 4kb (16/4) to choose our page:

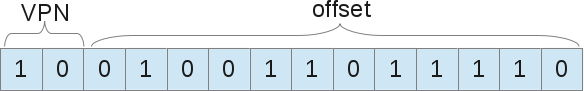

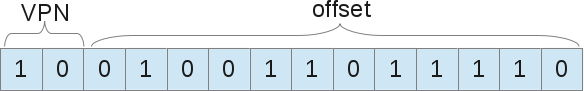

Now, when the process wants to load, for example, the address 9438 (out of 16384 possibilities), this gives 10.0100.1101.1110 in binary, which leads to the following:

That is, the 1246th byte of virtual page 2 (“0100.1101.1110” th byte in the “10th” page). Now, the operating system should search this process table page to find out on which page the 2nd card. According to what we suggested, page 2 is 8K bytes in physical memory. Thus, the virtual address 9438 leads to the physical address 9442 (8k + 1246 offset). We found our data! As we have said, there is only one page table for each process, since each process will have its own translation of the address, as well as with segments. But wait, where are these page tables actually stored? Guess ...: in physical memory, yes, but where is she still to be? If the page tables themselves are stored in memory, therefore, for each memory access, the memory must have access to retrieve the VPN. Thus, with pages, one memory access is actually equal to two memory accesses: one to retrieve the page table entry, and one to access the “real” data. Knowing that memory access is a slow process, we get that this is not the best idea.

Using paging as a kernel mechanism to support virtual memory can result in high performance overhead. Shredding the address space into small fixed-size units (pages) requires a large amount of map information. Because card information is usually stored in physical memory. Logical paging search requires additional memory search for each virtual address generated by the program. And here again come the hardware to speed up and help the OS. In pagination, as in segmentation, hardware helps the OS kernel to translate addresses in an efficient, acceptable way. TLB MMU, VPN . TLB , . MMU , VPN , TLB, VPN. , . , , , TLB, TLB.

, , , , , . . , TLB , . . . Linux , « », , 2Mb 4Kb. , . , TLB TLB. , TLB: , , , , TLB.

TLB , ASID . , - PID. ASID, TLB . , , , , TLB, . , . X86 4 , , . , , , ..… , SIGSEGV, « », . , , . , , , , , . , «swaping» (, , , ).

, « ». . , , MMU . , , , , : SIGSEGV, . : « ”. () „ “. Linux — , , X86 / 64 , , SIGSEGV. X86 / 64 , Linux. , , . , , CPU: Motorola 68HC11 C, VHDL . , ( ). Web; , …

Segmentation Error: (Computer memory layout)

A few words about what this blog entry is about.

I plan in the future to write technical articles on PHP related to a deep understanding of memory. I need my readers to have such knowledge that will help them understand some of the concepts of the further explanation. In order to answer this question, we will have to rewind time back in the 1960s. I'm going to explain to you how a computer works, or rather, how memory access occurs in a modern computer, and then you will understand what is causing this strange error message - Segmentation Fault .

What you will read here is a summary of the basics of computer architecture design. I will not go too far if it is not needed, and I will use well-known wording, so whoever works with a computer every day can understand such important concepts about how a PC works. There are many books about computer architecture. If you want to go further in this topic, I suggest you get some of them and start reading. In addition, open the source code of the OS kernel and examine it, be it the Linux kernel, or any other.

Some computer science history

Back at the time when the computers were huge machines, heavier than a ton, inside you could find one processor with something like 16Kb of RAM. We will not go further =) During this period, the computer cost about $ 150,000 and could perform exactly one task at a time. If at that time we drew a scheme of its memory, the architecture would look like this:

')

As you can see, the memory size is 16KB, and consists of two parts:

- Operating system memory area (4kb)

- Memory area of running processes (12Kb)

The role of the operating system was to control the interruptions of the central process by hardware. Thus, the operating system needed in memory for itself to copy data from the device and work with them (PIO mode). The main memory was also needed to provide data to the screen, since the video adapters had from zero to several kilobytes of memory. And our solo program used the OS memory to achieve the objectives.

<Sharing computer

But one of the main problems of such a model is that a computer (worth $ 150,000) could perform only one task at a time, and this task was carried out for an awfully long time: whole days to process several kilobytes of data. At such a huge price, it is clearly not possible to buy several machines in order to perform several procedures at the same time. So people tried to allocate machine resources. It was the time of birth multitasking. Keep in mind that it was still very early to talk about multiprocessor PCs. How can we force one machine with one processor, and actually solve several different problems? The answer to this question is planning. While one process is busy waiting for I / O (waiting for an interrupt), the processor can start another process. We will not talk about planning at all levels (too broad a topic), only about memory. If a machine can perform several tasks, one process after another, this means that the memory will be distributed approximately like this:

Both tasks A and B are stored in RAM, as copying them back and forth to disk is a very resource-intensive process. The data must remain in memory on an ongoing basis, as their respective tasks still work. The scheduler gives some processor time, then task A, then B, and so on ... each time giving access to its memory area. But wait, there is a problem. When one programmer writes task code B, he must know the addresses of the memory boundaries. For example, task B will be located with 10Kb of memory up to 12Kb. Therefore, each address in the program must be strictly recorded precisely in these limits. If the machine then performs 3 more tasks, the address space will be divided into more areas, and the memory boundaries of task B will move. The programmer would have to rewrite his program in order to use less memory and rewrite each memory pointer address.

Another problem is also obvious here: what if task B accesses memory of task A? This can easily happen because when you manipulate pointers, a small error in the calculation results in a completely different address. This can spoil task A data (overwrite it). There is also a security issue, that if Task A works with very sensitive data? There is no way to prevent task B from reading some area of task A. Lastly, what if task B overwrites the OS memory by mistake? For example, OS memory from 0Kb to 4Kb, in case task B overwrites this section, the operating system will most likely crash.

Address space

Thus, you need the help of the operating system and hardware to be able to run multiple tasks in memory. One way to help is to create what is called an address space. The address space is an abstraction of memory that the operating system will give to the process, and this concept is absolutely fundamental, since at the present time every part of the computer that you encounter in your life is designed in this way. Now there is no other model (in civil society, the army can keep a secret).

Each system is currently organized with a memory layout like “code - stack - heap”, and this is frustrating.

The address space contains all the tasks (processes) that must be run:

- Code: these are machine instructions that the processor must process

- Data: machine instruction data that is processed along with it.

The address space is divided as follows:

- A stack is a region of memory where the program stores information about the functions being called, their arguments, and each local variable in the function.

- A heap is a memory area where a programmer is free to do anything.

- The code is the memory area where instructions of the compiled program will be stored. These instructions are generated by the compiler, but can be written by hand. Please note that the code segment is usually divided into three parts (text, data and BSS), but we will not dive so deep.

- The code is always a fixed size created by the compiler and will weigh (in our example) 1Kb.

- The stack, however, is a mutable zone, since the program is running. When functions are called, the stack expands when returning from a function: the stack shrinks.

- A heap is also a mutable zone, when a programmer allocates memory from a heap (malloc ()), it expands, and when a programmer releases memory back (free ()), the heap narrows.

Since the stack and the heap are expandable zones, they are located at opposite places in the same address space: the stack will grow up, and the heap down. Both can grow freely, each in the direction of the other. The OS should simply check that the zones do not overlap, using the virtual space correctly.

Memory virtualization

If task A received the address space, the one we saw, as well as task B. So how can we place them in memory? It seems strange, but the address space of task A starts from 0KB to 16Kb, as well as task B. The trick is to create a virtual environment. In fact, the picture of the placement of A and B in memory:

When task A tries to access memory in its address space, for example, the 11K index, somewhere in its own stack, the operating system executes the hack and actually loads the 1500 memory index, because the 11K index belongs to task B. In fact, the entire address space of each program in memory is simply virtual memory. Each program running on a computer accesses virtual addresses; with the help of some hardware chips, the OS will deceive the process when it tries to access any area of memory.

The OS virtualizes memory and ensures that any task cannot access memory that it does not own. Memory virtualization allows you to isolate the process. Task A can no longer access the memory of task B and cannot access the OS memory. And all this is completely transparent to tasks at the user level, thanks to tons of complex OS kernel code. Thus, the operating system serves each process memory request. It works very efficiently - even if too many different programs are running. To achieve this goal, the process receives assistance from the hardware, mainly from the processor and some electronic components around it, such as a memory management unit (MMU). The MMU appeared in the early 70s, along with IBM, as separate chips. Now they are embedded directly in our CPU chips and are required for any modern OS to run. In fact, the operating system does not perform tons of operations, but relies on certain hardware behaviors that facilitate memory access.

Here is a small C program showing some memory addresses:

#include <stdio.h> #include <stdlib.h> int main (int argc, ** argv) { int v = 3; printf("Code is at %p \n", (void *)main); printf("Stack is at %p \n", (void *)&v); printf("Heap is at %p \n", malloc(8)); return 0; } On my LP64 x86_64 machine, it shows:

Code is at 0x40054c Stack is at 0x7ffe60a1465c Heap is at 0x1ecf010 We can see that the stack address is much higher than the heap address, and the code is located below the stack. But each of these 3 addresses are fakes: in physical memory, an integer 3 is not exactly located at 0x7ffe60a1465c. Remember that each user program manipulates virtual memory addresses, while kernel-level programs, such as the OS kernel itself ( or hardware driver code) can manipulate physical RAM addresses.

Address Broadcast

Address broadcasting is the formulation behind which a certain magic technique is hidden. The hardware (MMU) translates each virtual address of a user-level program to the correct physical address. Thus, the operating system remembers for each task the correspondence between its virtual and physical addresses. And this is a daunting task. The operating system manages the entire memory of tasks at the user level for each memory access requirement, thereby providing a complete illusion of the process. Thus, the operating system converts all physical memory into a useful, powerful, and simple abstraction.

Let's take a closer look at a simple script:

When the process starts, the operating system reserves a fixed area of physical memory, say, 16KB. It then stores the starting address of this space in a special variable called base. Then it sets another special variable, called the boundary (or limit) of the width of the space - 16KB. Next, the operating system saves these two values in a process table called PCB (Process Control Block).

And this is how the virtual address space process looks like:

And here is his physical image:

The OS decided to keep it in physical memory in the address range from 4K to 20K. Thus, the base address is set to 4K, and the limit is set to 4 + 16 = 20K. When this process is scheduled (given some processor time), the operating system reads back the limit values from the PCB and copies them into specific processor registers. When the CPU during operation tries to load, for example, a virtual 2K address (something on its heap), the CPU will add this base address obtained from the operating system. Thus, the memory access process will result in a physical location of 2K + 4K = 6K.

Physical Address = Virtual Address + Limit

If the received physical address (6K) is out of bounds (-4K | 20K-), it means that the process tried to gain access to the wrong memory that it does not own. The processor will generate an exception, and since the OS has an exception handler for this event, the OS is activated by the processor and will know that a memory exception has just occurred on the CPU. Then the OS will, by default, transmit a signal to the corrupted “SIGSEGV” process. A segmentation error, which by default (this can be changed) will complete the task with the message “A malfunction has occurred with invalid memory access.”

Moving Memory

Even better, if task A is not scheduled, it means that it is retrieved from the CPU, as the scheduler asked to start another task (say task B). When performing task B, the OS can freely move the entire physical address space of task A. The operating system often gets processor time when executing user tasks. When the last system call is completed, control of the CPU returns to the OS, and before making a system call, the OS can do whatever it wants, managing memory, moving the entire process space to different physical memory card slots.

This is relatively simple: the operating system moves the 16K area to another 16K of free space, and simply updates the basic and associated task variables A. When the task is resumed and transferred to the CPU, the address translation process will still work, but this will not result same physical address as before.

Task A did not notice anything, from her point of view, her address space still starts from 0K to 16K. The operating system and the MMU hardware take full control of each memory access for task A, giving it complete illusion. Task A programmer manipulates his own resolved virtual addresses, from 0 to 16, and the MMU will take care of positioning in physical memory.

The image of the memory after moving will look like this:

At present, the programmer no longer has to wonder where his program is in RAM, if another task is running next to his own, and what addresses to manipulate. This is done by the OS itself along with a very productive and smart hardware - “Memory Control Unit” (MMU).

Memory segmentation

Pay attention to the appearance of the word "segmentation" - we are close to explaining why segmentation errors occur. In the previous chapter, we explained about translation and memory movement, but the model we used has its drawbacks:

We assumed that each virtual address space is fixed at a width of 16Kb. Obviously, this is not the case in reality. The operating system must maintain a list of physical memory free slots (width 16 Kb) to be able to find a place for any new process with a request to start or move the running process. How to do this effectively, so as not to slow down the entire system? As you can see, each process occupies a memory space of 16 Kb, even if the process does not use its entire address space, which is very likely. This model obviously spends a lot of memory, the process consumes 1KB of memory, and its memory section in physical memory is 16Kb. Such waste is called internal fragmentation: memory is reserved, but never used.

To solve some of these problems, we are going to dive into a more complex organization of memory in the OS - segmentation. Segmentation is easy to understand: we are expanding the idea of the “base and boundaries” of three logical memory segments: heap, code, and stack; of each process — instead of simply viewing the memory image as one unique object.

With this concept, the memory between the stack and the heap is no longer wasted. Like this:

Now it is easy to see that the free space in virtual memory for tasks A is no longer allocated in physical memory, memory usage becomes much more efficient. The only difference is that currently for any task, the OS must memorize not one pair of bases / borders, but three: one pair for each type of segment. The IMU takes care of the translation, just as before, and now supports 3 basic values, and 3 boundaries.

For example, here, task heap A has a base of 126K and 2K boundaries. The task then asks for access to the virtual address 3KB, on the heap; physical address 3Kb - 2Kb (start of the heap) = 1Kb + 126K (offset) = 127K. 127K is in front of 128K - this is the correct memory address that can be executed.

Segment sharing

Segmentation of physical memory not only solves the problem of free virtual memory, freeing up more physical memory, but it also allows you to separate physical segments through various processes of virtual address spaces. If you run the same task twice, task A, for example, the code segment is exactly the same: both tasks perform the same processor commands. Both tasks will use their own stack and stack, access their own data sets. It is inefficient to duplicate a code segment in memory. The OS can now split it and save even more memory. For example:

In the picture above, A and B have their own code area in their respective virtual memory space, but under the hood. The operating system divides this area in the same physical memory segments. Both tasks A and B absolutely do not see this, for both of them - they own their memory. To achieve this, the OS must implement one more feature: the segment protection bit. The OS will create for each physical segment, register boundaries / limits for the MMU unit to work correctly, but it will also register the enable flag.

Since the code is not mutable, the code segments are all created with the RX enable flag. A process can load this area of memory for execution, but any process that attempts to write to this area of memory will be terminated by the OS. The remaining two segments: heap and stack are RW, processes can read and write from their own stack / heap, but they cannot execute code from it (this prevents program security flaws, where a bad user may want to damage the heap or stack to enter code This is impossible, since the heap and stack of segments are often not executable. Please note that this was not always the case, as it requires additional hardware support for efficient operation, This is called the "NX bit" under the Intel processor). Permissions memory segments are changeable at runtime: a task may require mprotect () from the OS. These memory segments are clearly visible under Linux, use the / proc / {pid} / maps or / usr / bin / pmap utilities

The following is an example for PHP:

$ pmap -x 31329 0000000000400000 10300 2004 0 rx-- php 000000000100e000 832 460 76 rw--- php 00000000010de000 148 72 72 rw--- [ anon ] 000000000197a000 2784 2696 2696 rw--- [ anon ] 00007ff772bc4000 12 12 0 rx-- libuuid.so.0.0.0 00007ff772bc7000 1020 0 0 ----- libuuid.so.0.0.0 00007ff772cc6000 4 4 4 rw--- libuuid.so.0.0.0 ... ... Here we see in detail all the memory mappings. Addresses are virtual and display their memory permissions. As we can see, each shared object (.so) is mapped into the address space as several mappings (probably code and data), and code areas are executable, and under the hood they are divided into physical memory between each process that displays such a common object in its own address space ...

The biggest advantage of shared objects under Linux (and Unix) is memory savings. It is also possible to create a common zone leading to a common physical memory segment using the mmap () system call. The letter 's', appearing next to this area, means “shared”.

Limits of Segmentation

We have seen that segmentation solves the problem of unused virtual memory. When a process does not use a certain amount of memory, this process is not mapped to physical memory due to the segments that correspond to the selected memory. However, this is not entirely true. What if the process requires 16Kb heaps? The OS will most likely create a 16KB physical memory segment. But if the user then releases 2K of such memory? Then, the OS should reduce the 16Kb segment to 14kb. What if the programmer now asks for 30Kb heaps? The old segment of 14Kb should now grow to 30 KB, but can it do it? Other segments can now surround our 14kb segment, hindering its growth. Then the OS will have to look for 30 KB of free space, and then move the segment.

The big problem with segments is that they lead to very fragmented physical memory, because they continue to grow as user tasks request and free memory. The operating system must maintain and manage a list of free pieces of memory.

Sometimes, the OS gains some available space by summing all free segments, but since it does not touch, it cannot use this space and must deny the memory requirement of the process, even if there is space in the physical memory. This is a very bad scenario. The operating system may try to compress the memory by merging all the free sites into one large piece that could be used in the future to satisfy the memory request.

But this compression algorithm is complicated for the CPU, and at this time no user process can get the CPU: the operating system is fully loaded by reorganizing its physical memory, thus the system becomes unusable. Memory segmentation solves many problems of memory management and multitasking, but it also reveals real flaws. Thus, there is a need to expand the possibilities of segmentation and correct these shortcomings. From this came another concept - “pagination of memory”.

Pagination memory

The pagination of memory pages shares some concepts of memory segmentation and tries to solve its problems. We saw that the main problem with memory segmentation is that the segments will grow and shrink very often, as the user process requests and frees the memory. Sometimes the operating system encounters a problem. It cannot find a large area of free space to satisfy the memory request of a user process, because physical memory has become heavily fragmented over time: it contains many segments of different sizes throughout physical memory, which leads to highly fragmented physical memory.

A breakdown solves this problem with a simple concept: what if for each physical distribution the kernel allocates a fixed size? Pages are fixed size physical memory segments. If the operating system uses fixed-size distributions, it is much easier to manage space, which ultimately negates memory fragmentation.

Let's show the example again, assuming a small 16KB virtual address space to facilitate the presentation:

With pagination, we are not talking about a heap, stack, or code segment. We divide the entire virtual memory process into fixed-size zones: we call them pages. In the example above, we have divided the address space in the form of 4 pages, 4KB each.

Then we do the same with physical memory.

And the operating system simply stores what is called the “Processor Pages Table” - the connection between one virtual process memory page and the basic physical page (which we call the “frame page”).

With this method of memory management, there are no more problems in the free management of space. The page frame is displayed (used) or not; it is easy for the kernel to find a sufficient number of pages to satisfy the user process memory request. It simply stores a list of free frame pages, and also looks at it with each request for memory. A page is the smallest unit of memory an OS can manage. The page (at our level) is indivisible. Together with the pages, each process is accompanied by a table of pages that stores address translations. Translations no longer use border values, as they did with segmentation, but use “virtual page number (VPN)” and “offset” on this page.

Let's show an example of address translation with page numbering. The virtual address space is 16Kb in size, i.e. we need 14 bits to represent the address (2 ^ 14 = 16Kb). The page size is 4 Kb, so we need 4kb (16/4) to choose our page:

Now, when the process wants to load, for example, the address 9438 (out of 16384 possibilities), this gives 10.0100.1101.1110 in binary, which leads to the following:

That is, the 1246th byte of virtual page 2 (“0100.1101.1110” th byte in the “10th” page). Now, the operating system should search this process table page to find out on which page the 2nd card. According to what we suggested, page 2 is 8K bytes in physical memory. Thus, the virtual address 9438 leads to the physical address 9442 (8k + 1246 offset). We found our data! As we have said, there is only one page table for each process, since each process will have its own translation of the address, as well as with segments. But wait, where are these page tables actually stored? Guess ...: in physical memory, yes, but where is she still to be? If the page tables themselves are stored in memory, therefore, for each memory access, the memory must have access to retrieve the VPN. Thus, with pages, one memory access is actually equal to two memory accesses: one to retrieve the page table entry, and one to access the “real” data. Knowing that memory access is a slow process, we get that this is not the best idea.

Translational associative buffer: TLB

Using paging as a kernel mechanism to support virtual memory can result in high performance overhead. Shredding the address space into small fixed-size units (pages) requires a large amount of map information. Because card information is usually stored in physical memory. Logical paging search requires additional memory search for each virtual address generated by the program. And here again come the hardware to speed up and help the OS. In pagination, as in segmentation, hardware helps the OS kernel to translate addresses in an efficient, acceptable way. TLB MMU, VPN . TLB , . MMU , VPN , TLB, VPN. , . , , , TLB, TLB.

, , , , , . . , TLB , . . . Linux , « », , 2Mb 4Kb. , . , TLB TLB. , TLB: , , , , TLB.

TLB , ASID . , - PID. ASID, TLB . , , , , TLB, . , . X86 4 , , . , , , ..… , SIGSEGV, « », . , , . , , , , , . , «swaping» (, , , ).

Conclusion

, « ». . , , MMU . , , , , : SIGSEGV, . : « ”. () „ “. Linux — , , X86 / 64 , , SIGSEGV. X86 / 64 , Linux. , , . , , CPU: Motorola 68HC11 C, VHDL . , ( ). Web; , …

Source: https://habr.com/ru/post/345766/

All Articles