The video preparation system for streaming on the ivi platform

Preparing video for streaming to a large number of device types takes several steps, from preparing metadata to packaging into different containers (MP4, DASH, HLS) at different bitrates. ivi.ru built a flexible, priority-based system that accounts for how quickly the business needs video prepared and that works with five DRM systems. The architecture is built on juggling Docker containers and covers both hardware and software video encoders. Evgeny Rossinsky, CTO of ivi, explained the whole process and all the details of working with video. Below the cut is a transcript of his talk at Backend Conf 2017.

About the speaker

Evgeny Rossinsky has been CTO of ivi since 2012. Before that he led Netstream, a company developing high-load projects, whose work included online broadcasting and video services (smotri.com, ivi). In 2012 Netstream and its entire team were absorbed by ivi.

Since 2006 he has taught his own course, "Technologies of Team Software Development", at Bauman Moscow State Technical University (MSTU).

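Today I'll tell you how we gnawed the cactus and cried like mice while building a system for encoding on-demand video and then publishing it.

First I'll briefly introduce our service so that you have the context, and then we'll move on to the animal-themed humor and the details.

A little about ivi

- ivi shows legal video.

- We have many different client applications: we play on the web, iOS, coffee makers, TVs. We recently launched an Xbox app.

- It is a high-load project with an audience of many millions: 33 million!

- A few years ago we built our own CDN: 30 cities in Russia and 3 data centers in Moscow, connected by a 150 Gbps ring.

- We enjoy a load of about 70 thousand requests per second.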

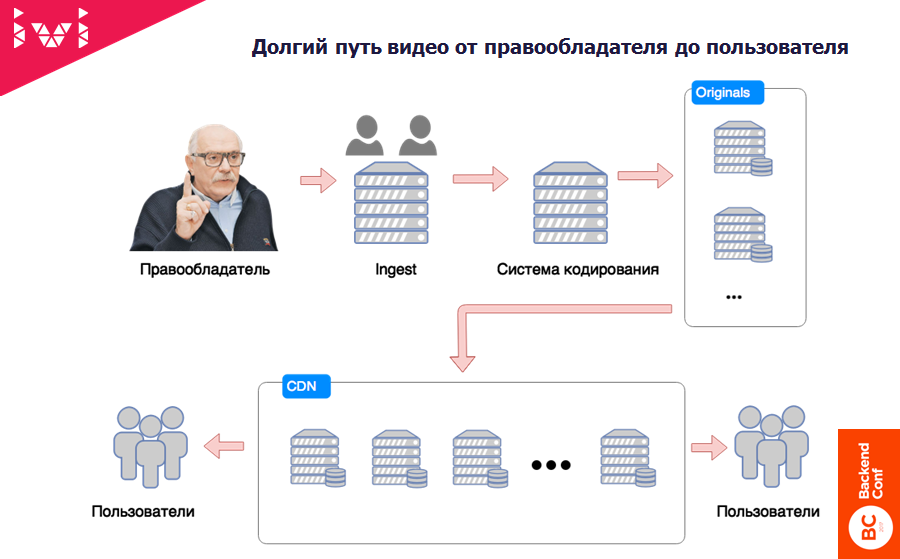

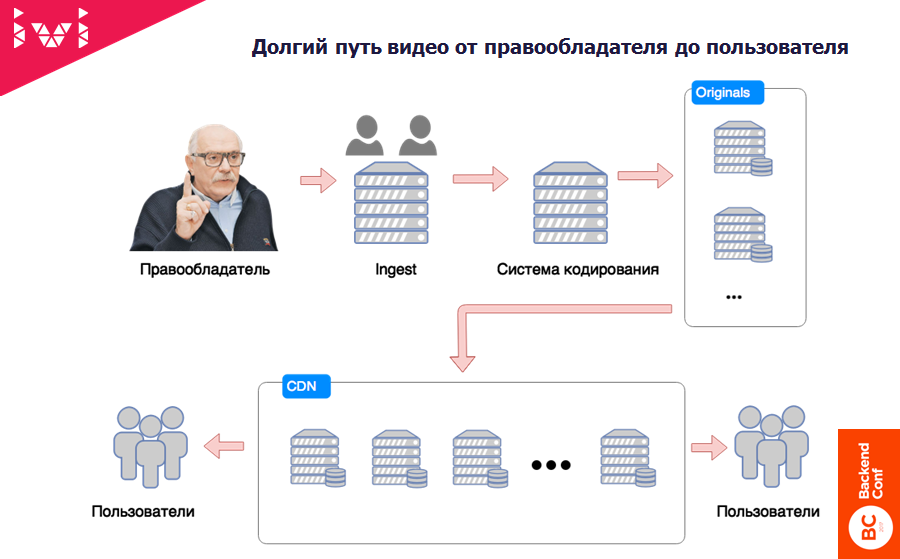

Now let's get to what I actually want to talk about: how video travels from the rights holder to the end user.

1. The rights holder either sends us a link or, in the 10% of cases where the process is automated, we retrieve links to the original files from their FTP using a special protocol.

2. A dedicated department, Ingest (the term comes from television), prepares the video for handoff to the encoding system.

What does this work involve? They check whether what the rights holder sent is suitable for viewing at all: look at the bitrate and the codecs, perhaps trim the beginning or the end, level the audio, do color correction, and other interesting things.

We reorganized the Ingest department two years ago, when we started all this, and identified two types of specialists:

- People who really understand video encoding, for whom the words GOP and keyframe are not empty sounds.

- Operators of the queuing system called the encoding system. They have a sheep, they throw it into the queuing system, and out the other end come two sticks of sausage. Sniff the sausage: smells fine? Pour it onto prod.

Our goal was that, as the company grows, the number of professionals who understand video should not grow much. What had to grow was the number of people who "throw in a sheep and get two sticks of sausage."

3. From the magical Ingest department the video goes into the encoding system. This is our domain, and it is what I'll be talking about.

4. After encoding, the video goes to the servers.

5. Then comes the standard scheme: the video is distributed across the CDN (by magic or on its own) and delivered to the end user.

This is a simple, classic content distribution scheme, from the origin servers to the CDN.

But what interests us is the encoding system itself, which I'll describe next, and then you'll ask nasty questions.

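What were our problems?

Or: why, two years ago, we concluded that we needed to change something and make the world better rather than worse.

- We had kilometers of instructions on how to set up a production environment for encoding.

We love ffmpeg, MP4Box, and more. A developer says: "Oh, a new version is out, great! I'll quickly build it on my machine! Wow, the bitrate dropped by 5%! Fire! We have to roll it out!"

And here the fun begins, the classic DevOps problem: the developer has one infrastructure and the production servers have another. Cue pain, bedlam, poop-throwing, and hatred between the development and operations departments.

You can talk all you like about needing DevOps and so on. In reality, people are people, and they hate each other. So first you have to provide a technology platform that removes the confrontation.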

- The second big problem: we really had to lower the entry requirements for encoding system operators.

Really, just try to find people on the market who are well versed in video encoding, and find many of them, since about 10 thousand units of content arrive.

For example, a year ago we took on Indian TV series as a trial and had to publish 10,000 titles quickly. They all came in different bitrates, and some rights holders had themselves downloaded them from torrents: complete bedlam. And all of it had to be processed somehow.

When your system requires a very smart, knowledgeable person, you can't process 10 thousand titles at decent quality. So we took a number of steps.

- The next problem: preparing video for streaming takes a large number of stages, which means you can make a mistake at each one.

The queuing system has to be brought back to life, and the task either restarted as is, or corrected and restarted, or declared: "OK, we cannot encode this at all."

- The next story is R&D.

These are generally fun guys who wake up in the middle of the night and start getting creative.

An article appears on TechCrunch along the lines of "Israeli startup releases a new codec that cuts video bitrate by 10%." Of course we have to try it! And suddenly it turns out: "OK, we're now in contact with the Israeli startup, we're building a proof of concept out of cr*p and sticks, and now all this needs to run in production!"

And here we are back at the first problem, with one more consumer in the food chain, which is very unpleasant.

- Next is the zoo of containers, codecs, and DRM.

In fact the codec zoo is not very big, but the container and DRM zoo is truly huge. Since we are a legal service, we cannot simply serve plain DASH or HLS, because we are not allowed to. The rights holder says, for example: "Guys, you may show Star Wars only on this platform and only under this DRM."

DRM stands for digital rights management: something that supposedly protects audio and video content from being downloaded and stolen. There are actually several such systems, and we have implemented almost all the popular ones. It is true pain and suffering.

Why? Because, strictly speaking, you are not supposed to know how DRM works; it is a protection system, after all. You have no right to reverse engineer it, but unless you do, the thing doesn't work. So how to test and debug DRM is a whole separate story.

As a result, we have 52 different configurations for encoding and packaging video streams.

For example, there is an old LG TV with a very peculiar idea of HLS. If you give it honest, good, standards-compliant HLS, it cannot play it, even though its documentation says it can. In practice it can play nothing but MP4, with the corresponding downsides for the user: start time, buffering, and so on.

So, to play on as many devices as possible, we had to learn to generate these formats either in advance or on the fly. Money decides here: where it pays off for us and where it doesn't. I'll come back to this a little later.

- The next pain: we needed to keep a history of encoding parameters, so that later we could tell what can and cannot be done with these video files.

For example, you need to find all videos with a keyframe every two seconds. I can tell you why that may be needed.

For example, you need to test how much a change in chunk length affects the load on the CDN and how that relates to caching algorithms. This information is needed. It is not vital, you can exist without it, but it is very useful if you want to make your service somewhat more cost-effective to run.
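A minimal sketch of what such a parameter history could look like, assuming the parameters of every encode are stored in a relational table. The table name `encode_history`, its columns, and the sample rows are hypothetical; the talk does not describe the real storage.

```python
import sqlite3


def open_history(rows):
    """Create an in-memory history table and fill it with sample encode records."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE encode_history ("
        " video_id INTEGER, profile TEXT,"
        " keyframe_interval_s REAL, bitrate_kbps INTEGER)"
    )
    db.executemany("INSERT INTO encode_history VALUES (?, ?, ?, ?)", rows)
    return db


def videos_with_keyframe_interval(db, seconds):
    """Return ids of videos that have at least one encode with this keyframe interval."""
    cur = db.execute(
        "SELECT DISTINCT video_id FROM encode_history"
        " WHERE keyframe_interval_s = ? ORDER BY video_id",
        (seconds,),
    )
    return [row[0] for row in cur]


# Hypothetical sample data: (video_id, profile, keyframe interval, bitrate).
db = open_history([
    (1, "hls-720p", 2.0, 2500),
    (1, "mp4-480p", 4.0, 1200),
    (2, "hls-720p", 4.0, 2500),
    (3, "dash-1080p", 2.0, 4500),
])
print(videos_with_keyframe_interval(db, 2.0))
```

With records like these, picking a test set for a chunk-length experiment becomes a single query rather than a re-inspection of the files.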

For context: our cost is six to seven times lower than the commercial offers of any CDN. Their prices are steep. But it is a business, and it has to build in a normal margin. So the encoding system is not an empty phrase for us.

- As with DevOps, there are big problems with testing such systems.

The first person to look at any feature before we roll it out to prod is a QA engineer. He first has to read the kilometers of instructions and then bring it all up in his own cluster.

Encoding features are generally the hardest to test because they take a lot of time. The tester is human too: he started an encode and cheerfully went off to have tea. At that point he lost context. He comes back, everything has crashed, and he has to figure out why. There are many such iterations.

Two years ago it took us two weeks on average to test one new feature. It was very painful.

Meanwhile, on the web or the backend we ship 15-20 releases a day. That is normal.

With an encoding system, a lot of time goes to waiting for the video to be encoded. Processing a test file still takes a certain amount of time, even if it is entirely black with no scene changes.

- The standard problems of a queuing system serving a large number of consumers.

Several departments use the encoding system. There is the content department, which takes content from rights holders, and there are also the fun guys called Advertising.

Since we are proud and independent, and don't want to be hooked on other people's pills and needles, we launched our own ad server. Advertisers need to be able to roll out ad creatives to our users quickly.

Who is an encoding operator

Working at that intensity, we simply could not hire enough people who understand encoding.

What did the business want? Cheap professionals who can do everything and are delighted by monotonous routine work, who simply love it!

What did the technical department expect from an encoding specialist? One skill: "Please, just make it look like the picture!"

What did we arrive at?

When a video arrives in our system, the original itself is checked first, since even great guys who understand how to encode video properly can make mistakes.

According to statistics from the previous version of the system, it often happened that the original came in at, say, 1 Mbit/s, and the encoding operator asked the system: "Please make me Full HD out of this."

That is possible; nobody has banned upscaling. The system goes and does it. The result: one big square chases another big square while a third big square watches. The video quality control engineers look at it and say: "Guys, this is cr*p!"

After the n-th such complaint from the video quality control experts, we added a bitrate check.

In our practice there were two funny cases where a rights holder sent a video 2 x 480 in size, and these two strips accidentally got published to prod. It was after those two strips that we got a human quality control department, and we still check the proportions at the first stage, so that the video fits at least 9:16 or 3:4. In the extreme case 1:20, but not 1:480.

We introduced the FPS (frames per second) check about six months ago, when we began experimenting with generating HLS on the fly.

The FPS check is needed so that every chunk always starts with a keyframe. If the chunk is two or four seconds long and the FPS is fractional, the keyframe will inevitably drift away from the start of the chunk.

Of course, you can tweak things, make chunks of unequal length, and so on. But with adaptive streaming you have to synchronize it all somehow. Simpler rules make for simpler operation and a simpler understanding of how everything works.
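The arithmetic behind this can be shown in a few lines. A chunk boundary can fall on a keyframe only if it falls on a frame boundary at all, that is, only if FPS multiplied by the chunk length is a whole number of frames. The sketch below is an illustration of that reasoning, not the check ivi actually runs; the function names are made up for the example.

```python
from fractions import Fraction


def frames_per_chunk(fps, chunk_seconds):
    """Exact number of frames in one chunk, kept as a fraction to avoid rounding."""
    return Fraction(fps) * chunk_seconds


def chunk_starts_on_frame(fps, chunk_seconds):
    """True if every chunk boundary coincides with a frame boundary."""
    return frames_per_chunk(fps, chunk_seconds).denominator == 1


pal = Fraction(25)            # integer frame rate
ntsc = Fraction(24000, 1001)  # the common "23.976" fractional frame rate

for name, fps in (("25 fps", pal), ("24000/1001 fps", ntsc)):
    for seconds in (2, 4):
        frames = frames_per_chunk(fps, seconds)
        print(name, seconds, "s chunk:", frames, "frames,",
              "aligned" if chunk_starts_on_frame(fps, seconds) else "drifts")
```

At 25 fps a two-second chunk is exactly 50 frames, so a keyframe every 50 frames always opens a chunk. At 24000/1001 fps the same chunk is 48000/1001 frames, which is not a whole number, so fixed-length chunks and a fixed keyframe interval cannot stay in step.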

7-10% of our content has a fractional FPS, and that video cannot be streamed in arbitrary containers. We now have a system that can slice MP4 into HLS or DASH on the fly and also encode it all.

Video often arrives with no sound at all, or with the audio track labeled as the wrong language, or something else.

Plus a standard set of checks, including file sizes.
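Taken together, the checks on the original can be sketched as one function that returns a list of problems. This is a hedged illustration: the `SourceInfo` fields, the problem labels, and the rule "source bitrate must not be below the requested bitrate" are assumptions, and the 1:20 limit is taken from the remark above about extreme proportions.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class SourceInfo:
    """Metadata of an original file (hypothetical structure)."""
    width: int
    height: int
    bitrate_kbps: int
    fps: Fraction
    audio_tracks: int


MAX_ASPECT = 20  # assumption: nothing more extreme than 1:20 is accepted


def check_source(info, target_bitrate_kbps):
    """Return the list of failed checks; an empty list means the original is usable."""
    problems = []
    # Upscaling a low-bitrate original only produces large squares.
    if info.bitrate_kbps < target_bitrate_kbps:
        problems.append("bitrate")
    # Reject "strips" such as a 2 x 480 video.
    long_side = max(info.width, info.height)
    short_side = min(info.width, info.height)
    if short_side == 0 or long_side > MAX_ASPECT * short_side:
        problems.append("aspect")
    # Fractional FPS breaks keyframe alignment at chunk boundaries.
    if info.fps.denominator != 1:
        problems.append("fps")
    # Video without any audio track is suspicious.
    if info.audio_tracks == 0:
        problems.append("audio")
    return problems


good = SourceInfo(1920, 1080, 8000, Fraction(25), 2)
strips = SourceInfo(2, 480, 1000, Fraction(24000, 1001), 0)
print(check_source(good, 4000))
print(check_source(strips, 4000))
```

Returning all failed checks at once, rather than stopping at the first, lets an operator see the whole picture for a bad original in one pass.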

Once we decide the original is fit for further processing, it is encoded into the required bitrates, file to file. The resulting file is an MP4.

Next comes packaging and, possibly, encryption, if for some reason we want to pre-package the video stream into a particular container and then serve it from the origin servers as static files. Static files are always cheaper to serve in terms of load, but storing an extra copy of each bitrate costs more.

The files are then uploaded to the servers, where specially trained people check the quality of what has arrived.

Quality control consists of two parts:

In effect, we simply check the MD5 of the stream to confirm that everything was copied correctly. About once a year I see in the logs that a bad copy was reported, so this is not a made-up case.
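A copy check of this kind takes only the standard library. The sketch below assumes both files are reachable as local paths, which is a simplification; in a real pipeline the second digest would be computed on the destination server.

```python
import hashlib
import os
import shutil
import tempfile


def md5_of_file(path, block_size=1 << 20):
    """Compute the MD5 hex digest of a file, reading it in blocks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def copy_and_verify(src, dst):
    """Copy src to dst and report whether the copy is byte-identical by MD5."""
    shutil.copyfile(src, dst)
    return md5_of_file(src) == md5_of_file(dst)


# Demo on a temporary file standing in for an encoded video.
with tempfile.TemporaryDirectory() as workdir:
    source = os.path.join(workdir, "encoded.mp4")
    target = os.path.join(workdir, "origin_copy.mp4")
    with open(source, "wb") as f:
        f.write(b"not really video" * 1000)
    print("copy ok:", copy_and_verify(source, target))
```

Reading in blocks keeps memory use flat even for multi-gigabyte files.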

After that, since we are after all a service for extracting money from people, we mark the places where ads should be shown, if the video is going to run on the ad-supported model.

And, for the user's benefit, we mark where the credits start, for the next-episode notification and other things.

Finally, a specially trained person throws the switch and the content goes to the end user: the backend servers begin handing out links to this video stream.

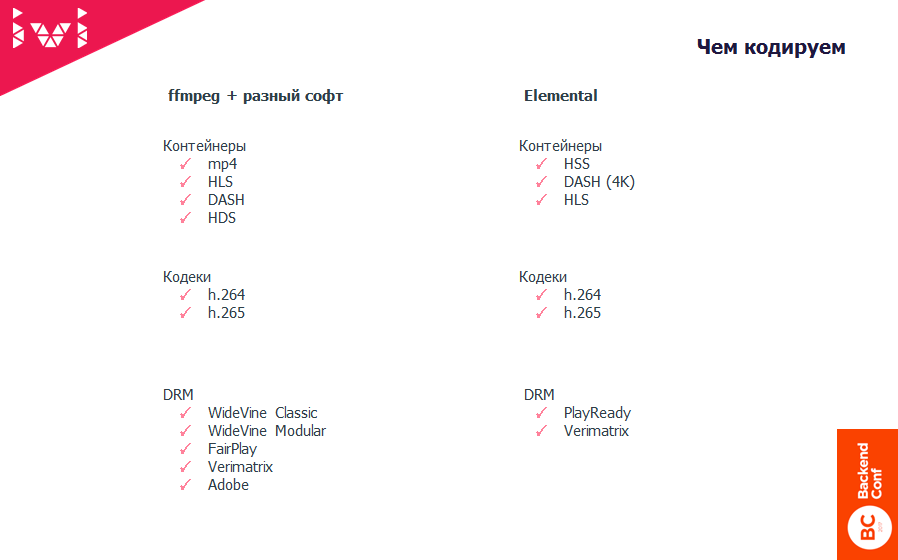

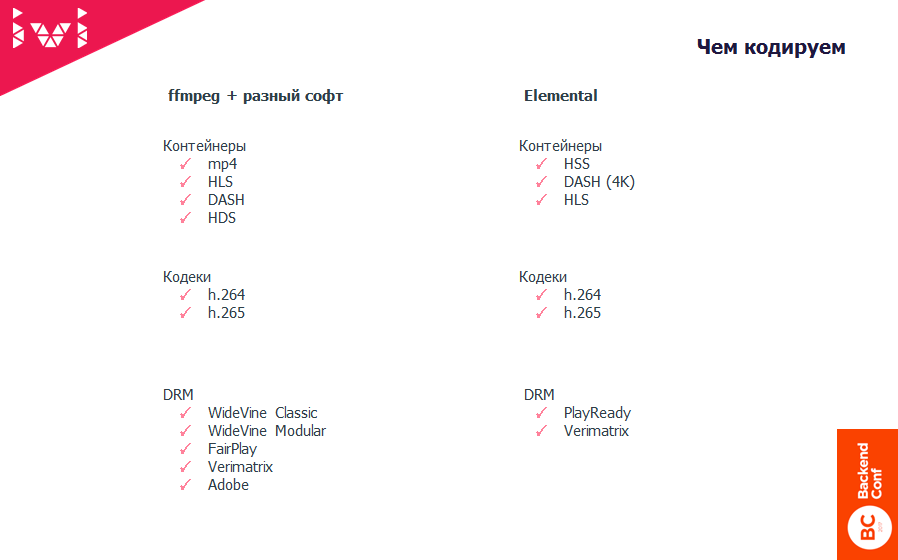

Our largest cluster is ffmpeg plus assorted software. There are two types of encoders: hardware and software.

We take ffmpeg plus MP4, HLS, and other packagers, and that covers 95% of the encoding tasks we perform. However, to save time and run experiments, a year and a half ago we bought a couple of servers from Elemental.

This is not an advertisement; they are really nice guys. These servers cost more than all our other hardware put together, but they have one advantage. If you find it hard to get something working with ffmpeg or open-source software, you can always contact support and say: "Guys, my Smooth Streaming doesn't work, make it work!"

They send you a solution, and then, if you want to scale it, you simply copy what they sent and either port it to the software solution or say: "OK, let it run on Elemental."

In production we now use Elemental for just one thing: content with PlayReady DRM, which we serve over HSS. HSS is Smooth Streaming, a Microsoft creation that not many devices support these days. But Philips TVs, for example, as well as old Samsung and LG sets, are very fond of it.

All other platforms catch up somewhat faster. Smart TVs in general have a problem with firmware updates. Manufacturers very often abandon old TV sets, in both hardware and everything else. Support there is a disaster.

But there are people who want to watch movies on old models, and our job is to somehow cross a grass snake with a hedgehog.

As a result, roughly 95% of the work is software encoding and 5% is encoding and packaging on Elemental. A very large share of R&D is also done on Elemental. The speed there is somewhat higher, because the boxes are stuffed with GPUs.

For example, we first worked out 4K and HDR on the hardware solution.

Remembering the first problem, with developers nagging operations and operations nagging developers, we did a fairly simple thing: we took a Docker container and stuffed everything related to encoding into it, removing all the difficulties of setting up infrastructure, building ffmpeg, managing versions, and so on.

What we did:

Next I'll say a little about which orchestration systems we tried and why, for this particular story, we settled on trivial, primitive solutions.

Suppose we want to bring up a new server and make it part of the cloud that does video encoding.

It is bulletproof.

Each container requests tasks of several types in sequence:

After trying to perform one task of each type and successfully saving the results of its work, the container dies.
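The container life cycle described above can be sketched as a single pass over the task types. The task type names, the in-memory queues, and the handler functions are hypothetical stand-ins; the talk does not list the real task types or say how the queue is stored.

```python
from collections import deque

# Hypothetical task types, polled in this order.
TASK_TYPES = ["check_original", "encode", "package"]


def run_container_once(queues, handlers):
    """Try one task of each type in order, then return so the container can exit."""
    done = []
    for task_type in TASK_TYPES:
        queue = queues.get(task_type)
        if not queue:
            continue  # nothing of this type is waiting
        task = queue.popleft()
        done.append((task_type, handlers[task_type](task)))
    return done


queues = {
    "check_original": deque(["a.mov"]),
    "encode": deque(),
    "package": deque(["b.mp4", "c.mp4"]),
}
# Stand-in handlers: real ones would call ffmpeg, a packager, and so on.
handlers = {name: (lambda task: task.upper()) for name in TASK_TYPES}

print(run_container_once(queues, handlers))
```

Because each container handles at most one task per type and then exits, a fresh container with a clean environment picks up the next task, which keeps state from leaking between encodes.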

Inside the container:

We wrapped the launch of all these magical things in Django, because it was easier at the time: you could write shell scripts or wrap everything in Django commands.

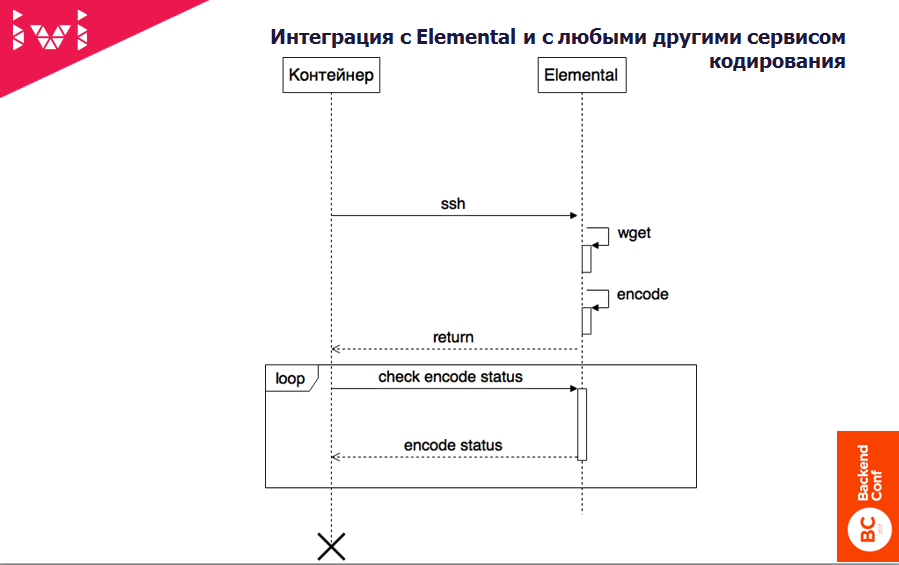

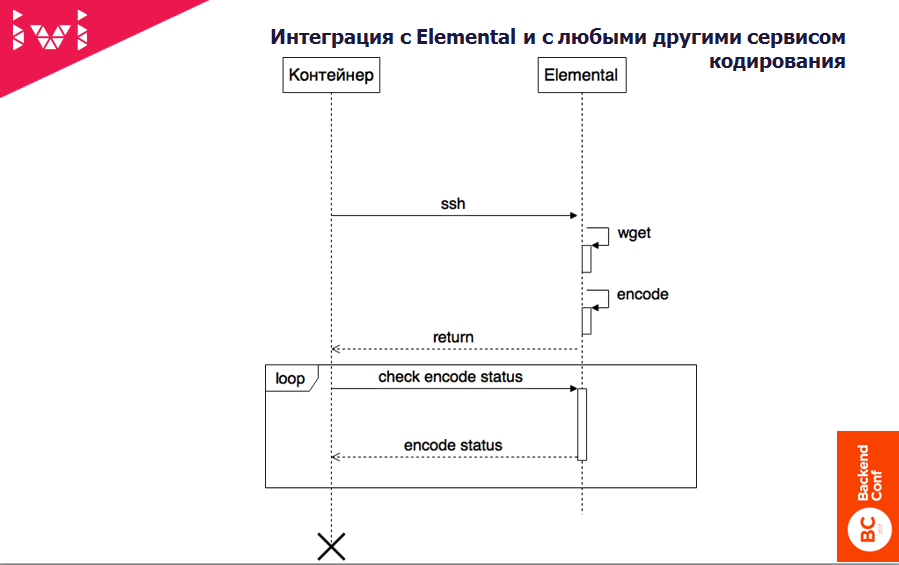

Then the question arose: how do we connect external services to all this?

That is fairly simple too. The container has one more, separate task type: send the task somewhere else. It simply goes to Elemental over SSH and checks whether there are resources there to:

If there are, fine: it uploads the file, submits the encoding task, and goes off to wait asynchronously.

Then, in a loop, it periodically asks Elemental: "Is anything ready?" If it is, it downloads the encoding results back into the shared folder, again with wget.
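The submit-then-poll pattern can be sketched independently of any particular encoder. All four callables and the `FakeEncoder` class below are hypothetical: the real integration talks to Elemental over SSH and fetches results with wget, and none of that API is shown in the talk.

```python
import time


def offload(task, has_capacity, submit, is_ready, fetch,
            poll_seconds=30.0, sleep=time.sleep):
    """Send a task to an external encoder and poll until the result can be fetched.

    Returns None if the external service has no free capacity right now.
    """
    if not has_capacity():
        return None
    job_id = submit(task)
    while not is_ready(job_id):
        sleep(poll_seconds)  # wait before asking "is anything ready?" again
    return fetch(job_id)


class FakeEncoder:
    """Stand-in for an external service; becomes ready after a few polls."""

    def __init__(self, polls_until_ready):
        self.polls_left = polls_until_ready

    def has_capacity(self):
        return True

    def submit(self, task):
        return "job-" + task

    def is_ready(self, job_id):
        self.polls_left -= 1
        return self.polls_left < 0

    def fetch(self, job_id):
        return job_id + ".mp4"


encoder = FakeEncoder(polls_until_ready=2)
waits = []  # record the sleeps instead of really sleeping in the demo
result = offload("film", encoder.has_capacity, encoder.submit,
                 encoder.is_ready, encoder.fetch, sleep=waits.append)
print(result, "after", len(waits), "polls that were not ready")
```

Passing the service operations in as functions keeps the loop the same for every external encoder, so a new service only needs its own four operations.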

In this way we connected several different RPC services, some very simple and some less so, and integrated them into the encoding system.

What next? Then it all rolled out into operation, and the first funny feedback started coming in.

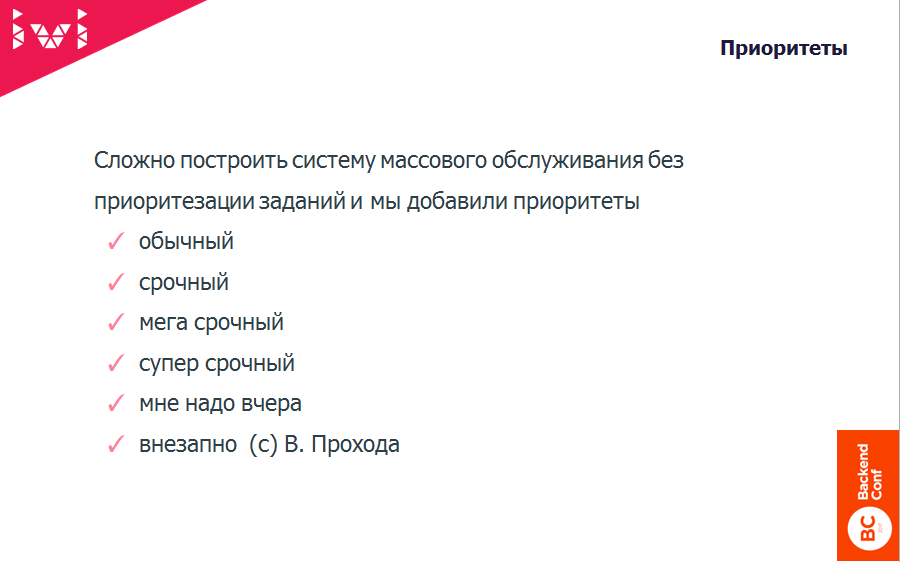

That is how this interesting system of priorities appeared. It is all taken from real requests that various departments sent us. The highest priority is "Suddenly".

The author of this classification, Volodya Prohod, methodically wrote it all down, and now we really do have this prioritization system. And the users understand it perfectly! They know that "I need it yesterday" is much more important than "Super urgent", and that "Super urgent" is for some reason more important than "Mega urgent".

Like users, like requests.

The priorities are more or less clear, but they were not enough. Why? Because everyone, of course, started slapping "Suddenly" on everything, because my task is the most important one! And when the business also hangs a KPI on you like "You must re-encode 20 videos per hour!", you think: "Yes, of course, mine too! Suddenly! Come on! Pot, boil!"

Everything became "Suddenly"!

What did this lead to? The really important tasks simply sat waiting for resources. A video that is not needed in production for another 4 months could quite calmly have been submitted with normal priority; it would have been encoded overnight anyway. But while people are sitting in the office, they also have to keep the skewers turning and shout that something is burning.

That is why we have routes.

What are they? In effect, we fenced off parts of our computing capacity that only a particular department can use.

There are some really urgent tasks:

This year we have quite often published series episodes before they air (catch-forward). People besiege our support: "When is the next episode of Hotel Eleon? I can't wait!" And here time really matters! You'll laugh, but it's true. Not to mention the popularity of the series Matchmakers 6.

So hot premieres, especially catch-forward or catch-up (right after the TV broadcast), have to be published quickly.

With advertisers it is even worse, because you can calculate how much half an hour or an hour of delay costs at a given number of users. That money is the company's lost profit.

As soon as money enters a dispute between departments, people stop being human and take up the war axe: blood, tears, and snot.

So we made reservations defining where you can and cannot go, and called them routes.

In fact, there are not that many truly urgent tasks. If several super-hot premieres and 20 commercials arrive in a day, a single machine handles them. They basically don't conflict with each other. And everything else can be handled calmly with priorities.
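How priorities and routes combine can be sketched with one priority heap per route. The priority names follow the order given in the talk, with a "normal" level added as an assumption; the route names, the `RoutedQueue` class, and its methods are hypothetical.

```python
import heapq
import itertools

# Lower number means more urgent. "normal" is an assumed lowest level.
PRIORITY = {
    "suddenly": 0,
    "needed yesterday": 1,
    "super urgent": 2,
    "mega urgent": 3,
    "normal": 4,
}


class RoutedQueue:
    """Task queue in which every worker may only serve its allowed routes."""

    def __init__(self):
        self._heaps = {}
        self._counter = itertools.count()  # keeps FIFO order within a priority

    def put(self, route, priority, task):
        heap = self._heaps.setdefault(route, [])
        heapq.heappush(heap, (PRIORITY[priority], next(self._counter), task))

    def get(self, allowed_routes):
        """Pop the most urgent task among the routes this worker may serve."""
        candidates = [(self._heaps[r][0], r)
                      for r in allowed_routes if self._heaps.get(r)]
        if not candidates:
            return None
        _, route = min(candidates)
        return heapq.heappop(self._heaps[route])[2]


queue = RoutedQueue()
queue.put("content", "suddenly", "catalog film")
queue.put("ads", "normal", "commercial")
queue.put("premieres", "super urgent", "new episode")

# A machine reserved for advertising ignores the "suddenly" flood elsewhere.
print(queue.get(["ads"]))
# A general-purpose machine still honors priorities across its own routes.
print(queue.get(["content", "premieres"]))
```

The reservation is what protects urgent work: however many tasks in the general pool are marked "Suddenly", they never reach the machine fenced off for ads or premieres.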

By giving the content department a separate pen for premieres and trailers, and advertisers a separate one of their own, we got them to more or less agree and communicate. That's how it seems to me; they may think otherwise.

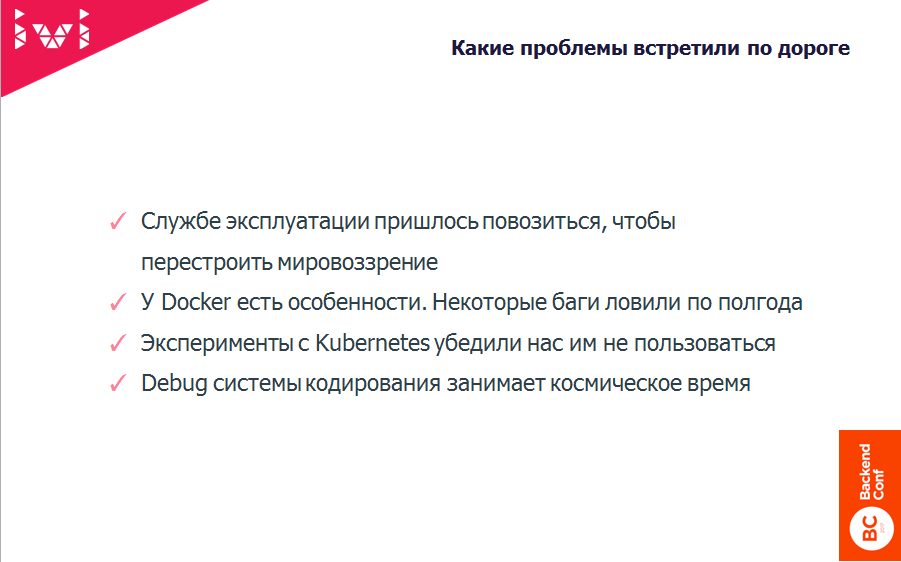

When someone comes to the operations team with something new, it is always met with hostility, because their motto is "Don't touch the equipment and it won't let you down!"

Anything new means you have to change. Change always comes through pain; humanity has not yet invented another way.

So we wanted the virtualization and Docker story to reach a bit wider than just the encoding system. We went two ways:

That was two years ago. For half a year we fiercely beat our heads against the wall, trying to solve problems that were going to be fixed "...any moment now, in three months, we're about to release and everything will be fine!" I'm talking about the Kubernetes engineers.

While preparing this talk, I looked at what has changed there in two years. Indeed, about 70% of our problems have been closed. We could probably use it all now, but we have already burned half a year, and the next attempt, I think, will be in six months to a year.

We have dedicated DevOps engineers who help people cross a grass snake with a hedgehog. Although in principle, using a Docker container is pretty straightforward.

But Kubernetes wore us down. The person who was introducing it became depressed and quit six months later, because you keep working, everything seems right according to the documentation, your ideas are right and the direction is right, but the pot won't boil!

A month after we decided not to go with Kubernetes, one of our developers wrote a very simple orchestration system, just to solve the local problem of bringing up a test cluster for testers.

Kubernetes solves more global problems: how to do all this in production, not just in a test cluster. Yes, I dreamed of rushing into production with it, but the idea for the first step was to build a test infrastructure.

It turned out that a test infrastructure is much easier to build without a complex orchestration system, although I am by no means criticizing the Kubernetes approach. It is the right one; it was just very raw when we started.

Docker containers build quite quickly, in a few seconds.

When you have computing capacity, you bring up everything you need there with Puppet, and it all works quickly.

The number of requests from the Ingest department to the development department has dropped. It matters to me that developers do development, not support. Now, at most twice a month, one of the programmers looks into what happened with a particular task and what went wrong. And as a rule they don't dig into the code; they use a special admin panel.

As technical director, I live somewhat more calmly, because people are not shuffling papers from one box to another but doing the good, the bright, the eternal... or not.

We tried connecting external services, using Elemental and, for example, Apple's HLS packager as examples.

This is needed precisely to optimize CDN performance, so that adaptive streaming works better. Before this system existed, re-encoding the entire catalog could take from six months to a year. We really have a lot of video!

Now, I think, with the capacity we have, we could manage it in a month or two. The only question is: why?

You can also easily rent a cloud; the main thing is that the cloud be somewhere near you. Amazon once came to us, and the conversation went like this:

- Look, a classic task, let's bring it up with us...

- Do you have anything in Russia?

- Well, nothing close to you.

- Look, we have two PB of originals. Roughly speaking, we need to ship them over there and then fetch the results back. How much will we pay for the traffic?

- Well, yes... When we launch in Russia, we'll come back.

Incidentally, if you ship data somewhere far away, distance weighs heavily on the choice of a partner where you might, for example, bring up external computing capacity.

Indeed, two weeks to test and quality-check any new feature in the encoding system, as I described earlier, is hell. It kills at the root any desire to get features out of this system.

Now it takes a day or two, or three or four if things get really nasty. And it is clear how to do it. The tester does not deal with infrastructure problems. He doesn't go around pestering the DevOps engineer: "Damn, this thing doesn't work for me again, why?" Now (at least I hope so) testers are no longer afraid to take on quality control tasks for the encoding system.

The answers to questions after a talk often contain the most interesting bits and details, so we collected them here.

We have also opened access to all videos of the talks from Backend Conf 2017 and HighLoad++ Junior.

We invite experts and professionals to speak at the RIT++ conference festival in May. If you have interesting development experience and are ready to share it, submit an application to our program committee.

About speaker

Evgeny Rossinsky - since 2012, CTO ivi has been working to this day. He led the company on the development of high-loaded projects Netstream, the fruits of which were projects related to online broadcasting and video (smotri.com, ivi). Since 2012, Netstream, along with the entire team, has been absorbed by ivi.

Since 2006 he has been teaching at MSTU. Bauman author's course "Technologies for team development software"

')

Today I’ll tell you how we gnawed a cactus and cried with mice on how to make a coding system and then upload it to OnDemand video.

First, I will briefly introduce you to our service, so that you understand the context, and then we turn to animal-assorted humor and various details.

Little about IVI

- "Ivi" shows legal video.

- We have a lot of different client applications: we play on the Web, iOS, coffee makers, TVs. Recently launched the XBOX app.

- This is a highload project with a multimillion audience - 33 million!

- A few years ago, they built their CDN: 30 cities in Russia and 3 DCs in Moscow with a ring of 150 Gbps.

- We have fun with such a load as 70 thousand requests per second.

Now let's go directly to what I would like to talk about - how the video from the copyright holder reaches the end user.

1. The right holder sends us either a link or (in 10% of cases, this system is automated) using a special protocol, we retrieve links to the original files from their FTP.

2. The special department Ingest (the term is taken from television) is engaged in preparing the video for transmission to the Coding System.

What is this job? It is necessary to check what the copyright holder sent, if it is suitable for viewing at all, including: see the bitrate, in which codecs, perhaps, somewhere cut off the beginning or end, display the sound level, make color correction and other miscellaneous interesting things.

The Ingest department was reorganized two years ago, when we started all this and identified two types of specialists:

- people who really understand video coding - and for them the words GOP and keyframe are not empty characters.

- queuing system operators called Coding System. They have a sheep, they throw it into the queuing system, on the other hand they get two sticks of sausage. Sniffing sausage - normal? - lei on prod.

Our task was to ensure that with the growth of the company the number of professionals who understand the video does not grow much. It was necessary to increase the number of those who "throws a sheep and gets two sticks of sausage."

3. From the magical department Ingest video falls into the coding system . This is our patrimony, which I will tell you about.

4. After encoding the video goes to the server.

5. Next, the standard scheme - the video is decomposed (using magic or independently) on the CDN and sent to the end user.

This is a simple classic content distribution scheme, starting from the origin servers and ending with the CDN.

But we will be interested in the coding system itself, about which I will tell you further, and you will ask vile questions.

What were our problems?

Or why two years ago we came to the conclusion that we need to change something and make the world a better place and not worse.

- We had just many kilometers of instructions on how to create a production environment for encoding.

We love ffmpeg, mp4box and more. Developer: “Oh, a new version has come out, a class! Now I quickly collect it in my place! Damn, the bitrate fell by 5%! Generally fire! It is necessary to arrest! ".

And here the fun begins - the classic DevOps problem: the developer has one infrastructure, the production servers have another. Begins pain, sodomy, throwing poop, hatred between the departments of development and operation.

You can talk a lot - yes, you need DevOps, etc. But in reality, no - people always have people, they hate each other. Therefore, we must first give a technological platform that will remove the opposition.

- The second big problem is that we really had to greatly reduce the requirements for the level of entry of coding system operators.

In fact, try it - look in the market for people who are well versed in video encoding, and so that there are many more, since about 10 thousand units of content arrive.

For example, a year ago we took the Indian series on trial - we had to dump 10,000 TIPs quickly. All of them are in different bitrates, some copyright holders themselves downloaded from the torrent - complete sodomy. And all this had to be somehow processed.

When you have a system that requires a very intelligent and understanding person, you can’t handle 10 thousand TIPs so that the quality is normal. Therefore, we have taken a number of steps.

- The next problem - in order to prepare the video for streaming, you need to go through a large number of stages, which means that at each stage you can screw it up - make a mistake.

The queuing system needs to be brought to life and either restart this very task, either correct and restart it, or say: “OK, we cannot encode it in principle”.

- The next story is R & D.

These are generally funny guys who wake up around the night and start to be creative.

Some article like “Israeli startup released a new codec that allows you to reduce the vidos bitrate by 10%” came to TechCrunch. Of course, we must try! And suddenly it turns out: “So, OK, we are in contact now with an Israeli startup, we make a concept out of g * vna and sticks, and now we need to start all this in production!”.

And here we return to the first problem, when another consumer appears in the food chain, and this is very unpleasant.

- Next is the zoo of containers, codecs and DRM.

In fact, the codecs are not very large zoo, but with containers and DRM is really huge. Since we are a legal service, we cannot just take and give away a great Dash or HLS, because we are simply not allowed to do this. The rightholder says, for example: "Guys, the movie" Star Wars "you can give only on such a platform and only in such a DRM."

DRM is Digital rights management - a thing that seems to protect against downloading and the ability to steal audio and video content. These systems are actually several. We are almost all popular implemented. This is true pain and suffering.

Why? Because in a good way, you should not know how DRM works - after all, it is a protection system. You do not have the right to engage in reverse engineering, but if you do not, this nonsense does not work. Therefore, how to test and debug DRM work is generally a separate song.

As a result, we have 52 different configurations of encoding and packaging video streams.

For example, there is an old LG TV, which has a very specific picture of HLS. If you give him an honest, good, cool HLS, which passes by all standards, he will not be able to play him, although he says in the dock that he can. In general, he has nothing but MP4 to play - with the corresponding minuses for the user: start time, buffering, etc.

Therefore, in order to play on the largest possible number of devices, we had to learn either to generate these formats in advance, or to do it on the fly. Here the money is decided - where it is profitable for us and where it is not. I will tell about it a little later.

- The next pain: we needed to keep the history of encoding parameters, so that later we can tell what can and cannot be done with these video files.

For example, you need to find all videos with a keyframe every two seconds. I can explain why that may be necessary.

For example, you need to run a test of how much a change in chunk length affects the load on the CDN and how this relates to caching algorithms. For that you need this information. It is not vital, you can exist without it, but it is very useful if you want to run your service somewhat more cost-effectively.

So that you understand: our cost is six to seven times lower than the commercial offers of any CDN. Their prices are sky-high. But that is business, and it has to build in a normal margin. So for us the coding system is not an empty phrase.

- As with DevOps, there are big problems with testing such systems.

The first person who looks at any feature before we roll it out to production is a QA engineer. He first has to read a mile-long manual and then bring the system up in his own cluster.

In general, encoding features are the hardest to test because they take a lot of time. The tester is human too: he started something, it is encoding, and he cheerfully went off to drink tea. At that point his context is lost. He comes back - everything has crashed - and he has to work out why. There are many such iterations.

Two years ago, on average, it took us two weeks to test one new feature. It was very painful.

Meanwhile, on the web or the backend we ship 15-20 releases a day. That is normal.

With the coding system, a lot of time goes into waiting for the video to be encoded. Processing even a test file that is completely black, with no scene changes, still takes a certain amount of time.

- The standard problems of a queueing system that serves a large number of consumers.

Several departments use the coding system. One is the content department, which takes video from the rightholders, and then there are the fun guys called "Advertising".

Since we are proud and independent, and do not want to be hooked on someone else's pills and needles, we launched our own ad rotator. Advertisers need to be able to roll out an ad creative to our users quickly.

Who is a coding operator?

At that pace, we simply could not hire enough people who understand encoding.

What did the business want? The business wanted cheap professionals who can do everything and are delighted by monotonous routine work - who simply love it!

What did the technical department expect from a coding specialist? One skill: "Well, please, make it like in the picture!".

What were we aiming for?

- We wanted to create a system with a low entry threshold for operators.

- We wanted to be able to use cheap, low-skilled employees who can be brought in and let go on demand.

- We did not want to grow the number of expensive professionals, because using that many highly qualified people is not justified.

Video preparation stages

Stage # 1

When a video arrives in our system, the original itself is checked first, since even great guys who know how to encode video properly can make mistakes.

- Bitrate

According to statistics from the previous version of the system, it was very common for the original to arrive with a bitrate of, say, 1 Mbit/s, and for the coding operator to ask the system: "Please make me Full HD out of this".

This is possible - nobody has cancelled upscaling. The system goes and does it. The output: one big square chases another big square while a third big square watches. The video quality control engineers look at this and say: "Guys, this is g * out!".

After the n-th complaint from the video quality control experts, we added a bitrate check.

- Proportions.

In our practice there were two ridiculous cases when a rightholder sent a video 2 x 480 in size, and these two strips accidentally went out to production. It was after these two strips that we got a human quality control department, and we still check the proportions at the first stage so that the video fits at least into 9:16 or at least 3:4. In the extreme case 1:20 - but not 1:480.

- FPS.

We introduced the FPS check (frames per second, the number of frames per second in a video stream) about six months ago, when we began experimenting with on-the-fly HLS generation.

FPS control is needed so that every chunk starts with a reference frame (keyframe). If a chunk is two or four seconds long and the FPS is fractional, then sooner or later the reference frame drifts away from the start of the chunk.

Of course, you can work around this, make chunks of unequal length, and so on. But when you do adaptive streaming, the streams have to be synchronized somehow. Simpler rules make for simpler operation and a simpler understanding of how it all works.

7-10% of our content lives with fractional FPS, and such video cannot be streamed in arbitrary containers. We now have a system that can slice MP4 into HLS or DASH on the fly, and encode it all as well.

Fractional FPS is bad. Why? Because of what happens when adaptive streaming switches to another stream. The user's channel has degraded, and the player must quickly switch to a chunk from another stream. It switches, and there is no reference frame at the start of that chunk. Then for some time the picture may break up or the screen may go black. It all depends on how the codec is implemented on the client side.

- Sound tracks.

Often the video arrives with no sound at all, or the audio track is tagged with the wrong language, or something else.

- Other

A standard set of checks, including file size.
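The checks listed above can be sketched as one function over the metadata of the original. This is only an illustration: it assumes the metadata is a dict shaped like the JSON that `ffprobe -print_format json -show_format -show_streams` prints, and the function name, the bitrate rule and the 1:20 limit are hypothetical values picked to match the examples in the text, not the real thresholds.

```python
from fractions import Fraction


def check_source(probe, target_height, chunk_seconds=2):
    """Return a list of problems found in an original file.

    `probe` mimics ffprobe JSON output; every threshold here is an
    illustrative assumption, not a production rule.
    """
    problems = []
    streams = probe.get("streams", [])
    video = next((s for s in streams if s.get("codec_type") == "video"), None)
    audio = [s for s in streams if s.get("codec_type") == "audio"]
    if video is None:
        return ["no video stream"]

    # Bitrate: do not build a high profile from a starved original.
    # Hypothetical rule: at least 1 kbit/s per line of target height.
    bit_rate = int(probe.get("format", {}).get("bit_rate", 0))
    if bit_rate < target_height * 1000:
        problems.append(f"bitrate {bit_rate} is too low for {target_height}p")

    # Proportions: catch degenerate frames such as 2 x 480.
    width, height = int(video["width"]), int(video["height"])
    if max(width, height) / min(width, height) > 20:
        problems.append(f"suspicious proportions {width}x{height}")

    # FPS: a chunk can start on a reference frame every time only if it
    # holds a whole number of frames.
    fps = Fraction(video["r_frame_rate"])
    if (fps * chunk_seconds).denominator != 1:
        problems.append(f"fractional FPS {video['r_frame_rate']}")

    # Sound tracks: at least one, each tagged with a language.
    if not audio:
        problems.append("no audio stream")
    for stream in audio:
        if "language" not in stream.get("tags", {}):
            problems.append(f"audio stream {stream.get('index')} has no language tag")

    return problems
```

With a frame rate of `24000/1001` and two-second chunks the product is 48000/1001 frames per chunk, which is not a whole number, so the file is flagged; with `25/1` a chunk holds exactly 50 frames and passes.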

Stage # 2

Once we have decided that the original is suitable for further processing, it is transcoded into the desired bitrate, file to file. The resulting file is MP4.
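As a rough sketch of this stage, the helper below only builds an ffmpeg command line for one MP4 rendition with a fixed keyframe interval, so that chunks cut later can start on a reference frame. The function name, the libx264/AAC choice, the audio bitrate and the two-second interval are assumptions for illustration; the talk does not give the actual encoding profile.

```python
def ffmpeg_mp4_args(src, dst, video_kbps, fps, keyframe_seconds=2):
    """Build an ffmpeg command for one MP4 rendition (illustrative profile)."""
    gop = fps * keyframe_seconds  # frames between two keyframes
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-c:v", "libx264",
        "-b:v", f"{video_kbps}k",
        "-g", str(gop),           # maximum keyframe interval
        "-keyint_min", str(gop),  # minimum keyframe interval
        "-sc_threshold", "0",     # no extra keyframes on scene changes
        "-c:a", "aac",
        "-b:a", "128k",
        "-movflags", "+faststart",
        dst,
    ]
```

The resulting list can be passed to `subprocess.run(args, check=True)` on a machine where ffmpeg is installed.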

Stage # 3

Next comes packaging and, possibly, encryption - if for some reason we want to pre-package the video stream into one container or another and then serve it as static files from the origin servers. Static files are always cheaper to serve under load, but storing an extra copy of each bitrate costs more.

Stages # 4-5

Then the files are sent to the origin servers, where specially trained people check the quality of what has arrived.

Quality control consists of two parts:

- A trivial check: did the content file even make it to the origin server intact?

In effect, we simply check the MD5 of the stream to make sure everything was copied correctly. About once a year I see in the logs that a copy went bad - so this is not a made-up case.

- Next, a specially trained person watches it in the built-in player (first in the admin panel) to see how it all looks and whether what we encoded meets the current quality requirements.
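The first, automatic part of this control is easy to illustrate. A minimal sketch, assuming both the local file and its copy on the origin server are reachable as paths; the function names are made up for the example:

```python
import hashlib


def md5_of_file(path, block_size=1024 * 1024):
    """Hash a file in blocks so that large videos do not fill memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def copied_intact(source_path, copy_path):
    """True if the copy has the same MD5 as the source file."""
    return md5_of_file(source_path) == md5_of_file(copy_path)
```

MD5 is used here only to detect accidental corruption during copying, which is the case described above; it is not a security measure.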

Stage # 6

After that, since we are after all a service for extracting money from people, we mark the places where ads should be shown, if this video will run under the ad-supported model.

And, which is good for the user, we mark the credits, with a notification about the next episode, and other things.

Stage # 7

Finally, a specially trained person flips the switch and the content goes to the end user: the backend servers start handing out links to this particular video stream.

What do we encode with?

The largest cluster we have runs ffmpeg plus various other software. Our tools fall into two types: hardware and software.

We take ffmpeg plus MP4, HLS and other packaging tools, and this covers 95% of all the encoding tasks we perform. However, to save time and run experiments, a year and a half ago we bought a pair of servers from Elemental.

This is not an advertisement, they are really nice guys. These servers cost more than all our other hardware put together, but they have one advantage. If you find it hard to get something working with ffmpeg or open source software, you can always contact support and say: "Guys, my Smooth Streaming does not work, make it work!".

They send you a solution, and then, if you want to scale it, you simply copy what they sent and either put it into the software solution or say: "OK, let it run on Elemental".

In production we now use Elemental for only one thing. We make content with PlayReady DRM that we serve over HSS. HSS is simply Smooth Streaming - a Microsoft contraption that not many devices support any more. But Philips TVs, for example, as well as old Samsung and LG models, love it.

All other platforms are catching up a bit faster. On Smart TVs there is a general problem with firmware updates. Manufacturers very often abandon old TV sets, both in hardware and in everything else. Support there is a disaster.

But there are people who want to watch movies on old models, and our task is to somehow cross a grass snake with a hedgehog.

As a result, about 95% of the work goes to software encoding and 5% to encoding and packaging on Elemental. A very large part of R&D is also done on Elemental. The speed there is somewhat higher, because it is stuffed with GPUs.

For example, we first tried out 4K and HDR on the hardware solution.

How it all works inside and how we got there

Remembering the first problem, where developers tormented operations and operations tormented developers, we did a fairly simple thing: we took a Docker container and packed into it everything related to encoding, removing all the difficulties with setting up the infrastructure, building ffmpeg, versions, and so on.

We built:

- API;

- an admin panel for developers, which they use to sort out complex cases;

- admin panel for coding operators;

- container management;

- very primitive orchestration.

Later I will tell you a little about which orchestration systems we tried and why we settled on trivial and primitive solutions for this particular case.

How it works (in simple terms)

Suppose we want to launch a new server and make it part of the cloud that handles video encoding.

- We roll out the entire configuration via Puppet;

- We install a cron job that checks once a minute whether there is room to launch another container;

- The container then comes up, checks whether there are encoding tasks for it, and carries them out. The server has a shared folder where the container puts its results; having completed the task successfully, it dies, handing over the logs with the encoding history on the way. Everything is very simple.

- Then cron wakes up a new container, which checks whether there is anything to do. If there is, it does it.

It is bulletproof.
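The once-a-minute check described above fits in a few lines. The sketch below is a guess at its shape, not the actual script: the limit of four containers per server, the image name `encoder-worker` and the `/shared` mount point are all hypothetical.

```python
import subprocess


def cron_tick(count_running, start_container, max_containers=4):
    """One run of the cron job: start one more container if there is room."""
    if count_running() < max_containers:
        start_container()
        return True
    return False


def start_encoder_container():
    # `encoder-worker` is a made-up image name; /shared stands for the
    # shared folder where containers leave their results.
    subprocess.run(
        ["docker", "run", "--rm", "-d", "-v", "/shared:/shared", "encoder-worker"],
        check=True,
    )
```

Passing the counting and starting functions in as arguments keeps the decision logic testable without Docker; on a real server `count_running` could, for example, count the lines printed by `docker ps -q`.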

What's inside the container?

Each container sequentially requests several types of tasks:

- First it asks: "Friends, is there anything to do with the video itself? Does it need to be transcoded to some other bitrate?" And it makes an MP4.

- Having encoded the MP4, it says: "Friends, I made an MP4, everything is fine. Maybe something needs to be packaged into HLS?" This is not necessarily the same MP4 - it can be any other MP4 with a different bitrate.

- Then the same story follows with DASH and then with HDS.

Having tried to perform one task of each type and having successfully stored the results of its work, the container dies.
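The life cycle of a container can be sketched as a single pass over the task types. Here `fetch_task` and `handlers` are hypothetical stand-ins for the real API client and for the wrappers around ffmpeg and the packagers:

```python
TASK_TYPES = ["mp4", "hls", "dash", "hds"]


def run_container_once(fetch_task, handlers):
    """Try one task of each type, in order, and then return.

    fetch_task(task_type) returns a task or None when the queue for that
    type is empty; handlers maps a task type to a callable that performs
    the task. Returning from this function models the container exiting.
    """
    done = []
    for task_type in TASK_TYPES:
        task = fetch_task(task_type)
        if task is None:
            continue
        handlers[task_type](task)
        done.append((task_type, task))
    return done
```

Because every container does a bounded amount of work and then exits, a crashed or stuck encode affects only one short-lived container, and the next cron run simply starts a fresh one.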

Inside the container:

- Ubuntu

- ffmpeg

- ffprobe

- MP4Box

Launching these magic tools is wrapped in Django, because that was easier at the time - we could either write shell scripts or wrap it all in Django commands.

Integration with external services

Then the question arose - how do we connect external services to all this?

That is also fairly simple. In fact, the container has one more, separate task type - send the task somewhere else. It simply logs in to Elemental over SSH and checks whether there are resources there to:

- download the file;

- run the task.

If there are - fine, it pushes the file over, submits the encoding task, and goes off to wait asynchronously.

Then, in a loop, it periodically asks Elemental: "Is anything ready?". If it is, it downloads the encoding results with wget back into the shared folder.

In this way we connected several different, very simple (or not so simple) RPC services and integrated them into the coding system.
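The submit-and-poll pattern described here might look roughly like this. The `client` object is a hypothetical adapter: its methods `has_capacity`, `upload`, `submit`, `is_ready` and `download` are invented for the sketch and hide how the device is actually reached (SSH, wget and so on); the polling interval and limit are arbitrary.

```python
import time


def run_on_external_encoder(client, src, poll_seconds=30, max_polls=120,
                            sleep=time.sleep):
    """Send one task to an external encoder and wait for the result."""
    if not client.has_capacity():
        return None  # leave the task in the queue for a later attempt
    remote_path = client.upload(src)
    job_id = client.submit(remote_path)
    for _ in range(max_polls):
        if client.is_ready(job_id):
            # The adapter is expected to put the result into the shared folder.
            return client.download(job_id)
        sleep(poll_seconds)
    raise TimeoutError(f"job {job_id} did not finish in time")
```

Any other external service can be plugged in the same way by writing another adapter with the same five methods.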

Priorities

What's next? Then it all went into operation and the first funny feedback started coming in.

That is how this interesting system of priorities appeared. It is all taken from real requests that various departments sent us. The highest priority is "Sudden".

Its author, Volodya Prohod, methodically wrote it all down, and now we really do have such a prioritization system. And people understand it perfectly! They know that "I need it yesterday" is much more important than "Super urgent", and "Super urgent" is for some reason more important than "Mega urgent".

As the users are, so are the requests.

The priorities are more or less clear, but they were not enough. Why? Because everyone, of course, started hammering "Sudden" - because my task is the most important! And if on top of that the business tries to hang a KPI on you like "You have to encode 20 videos per hour!", you think: "Yes, of course, I need it too! Sudden! Come on! Pot, boil!".

Absolutely everything became "Sudden"!

What did this lead to? Really important tasks were simply waiting for resources. In fact, a video that is needed in production only four months from now could quite calmly be submitted with normal priority. It would still be encoded overnight. But while people are sitting in the office, they still need to stir things up and say that their task is on fire.

That is why we have routes.

Routes

What are they? In effect, we fenced off part of our computing capacity so that only a certain department can use it.

There are some genuinely urgent tasks:

- trailers,

- advertising,

- hot premieres.

This year we quite often publish series episodes before they air (catch-forward). People flood our support: "When is the next episode of Hotel Eleon!? I can't wait!" Time really matters there! You will laugh, but it is true. Not to mention the popularity of the series "Matchmakers-6".

Therefore hot premieres, especially catch-forward or catch-up (right after the TV broadcast), need to be published quickly.

With advertisers it is even worse, because you can calculate how much half an hour or an hour costs at a given number of users. That money is the company's lost profit.

As soon as money comes up in a dispute between departments, people stop being human and take up the war axe - blood, tears and snot.

So we made reservations that define where you can and cannot go, and called them routes.

In fact, there are not that many truly urgent tasks. If several super-hot premieres and 20 commercials come in per day, one machine handles them. In principle, they do not conflict with each other. And everything else can be handled calmly with priorities.
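How priorities and routes can combine when a worker picks its next task is shown in the sketch below. The task fields and the rule that general-purpose workers may take tasks from any route are assumptions of this example, not a description of the real scheduler.

```python
def pick_task(tasks, worker_route=None):
    """Choose the next task for a worker.

    tasks: list of dicts with "id", "priority" (higher is more urgent),
    "route" (a department name or None) and "created" (a sortable
    timestamp). A worker reserved for a route takes only that route's
    tasks; a worker without a route takes any task.
    """
    if worker_route is None:
        candidates = tasks
    else:
        candidates = [t for t in tasks if t["route"] == worker_route]
    if not candidates:
        return None
    # Highest priority first; among equal priorities the oldest task wins.
    return min(candidates, key=lambda t: (-t["priority"], t["created"]))
```

With this split, a machine reserved for the "ads" route never waits behind a catalog re-encode, however many tasks in the common queue are marked with the top priority.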

By giving the content department its own pen for premieres and trailers, and the advertisers a separate one, we got them to more or less agree and communicate. At least it seems so to me; they may think differently.

When someone comes to the operations team with something new, the team always meets it with hostility, because its motto is "Don't touch the equipment and it won't let you down!"

As soon as something new arrives, it means you have to change. Change always comes through pain - humanity has not invented another way.

Therefore, we wanted virtualization and Docker to spread a little wider than just the coding system. We went in two directions:

- We tried to introduce Kubernetes so that the test clusters for our QA engineers would run on it.

- We needed orchestration.

That was two years ago. For half a year we fiercely beat our heads against the wall, trying to solve problems that were "... just about to be fixed, in three months we release and everything will be fine!". I am talking about the Kubernetes engineers.

While preparing this talk, I looked at what has changed there in two years. Yes, indeed, about 70% of our problems have been closed. We could probably use it now, but we have already burned half a year, and the next attempt, I think, will be in six months to a year.

At the same time, we really liked plain Docker containers - the ease with which you can package the configuration you need, with absolutely no conflict between the operations department and the development department.

We have dedicated DevOps engineers who help people cross a grass snake with a hedgehog. Although in principle, using a Docker container is pretty straightforward.

But Kubernetes wore us out. The person who was introducing it got depressed and quit six months later, because you keep working and working, everything seems right according to the documentation, your thinking is right and the direction is right, but the pot does not boil!

A month after we decided not to do Kubernetes, one of our developers wrote a very simple orchestration system, just to solve the local problem of bringing up a test cluster for testers.

Kubernetes solves more global problems - how to run all this in production, not just in a test cluster. Yes, I dreamed of going to production with it, but the first step was meant to be the test infrastructure.

It turned out that the test infrastructure is much easier to build without a complex orchestration system, although I am by no means criticizing the Kubernetes approach. It is the right one; it was just still very raw at the time we started.

What have we managed to achieve?

- First, it is really easy to operate.

Docker containers are built quite quickly - in a few seconds.

- We got very good scalability.

If there is computing capacity, you bring up everything you need there with Puppet, and everything works quickly.

- Detailed logging of all stages of video processing, which is very important for us.

The number of requests from the Ingest department to the development department has dropped. It is important to me that developers do development, not support. Now, probably at most twice a month, one of the programmers looks into what happened to a particular task and what went wrong. And as a rule they do not dig into the code but use a special admin panel.

As a technical director, I now live somewhat more calmly, because people are not shuffling papers from one box to another but doing something good, bright, eternal ... or not.

- The ability to connect external services.

We tried connecting external services using Elemental and, for example, Apple's HLS packager as examples.

- In 2016 we re-encoded the entire catalog several times (4, 5, 6 - I do not remember exactly).

This is needed precisely to optimize CDN performance and to make adaptive streaming work better. Before this system was built, re-encoding the entire catalog could take from six months to a year. We really have a lot of videos!

Now, I think, with the capacity we have, we could manage in a month or two. The only question is: why?

At the same time, you can easily rent a cloud; the main thing is that the cloud is somewhere near you. Amazon once came to us, and the conversation went like this:

- Look, a classic task - let's bring it up on our side ...

- Do you have anything in Russia?

- Nothing close to you, no.

- Look, we have two PB of originals. Roughly speaking, we need to push them to you and then pull the results back. How much will we pay for the traffic?

- Well, yes ... When we launch in Russia, we'll come back.

In terms of time, if you ship data somewhere far away, the distance has a very significant impact on the choice of a partner from whom you can, for example, rent external computing capacity.

- QA is now able to test at all.

Indeed, two weeks for testing and checking any new feature in the coding system, which I described earlier, is hell. It kills at the root any desire to get new features out of this system.

And now it takes a day or two, or three to four if things are really bad. Plus it is clear how to do it. The tester does not deal with infrastructure problems. He does not go around pestering the DevOps engineer, saying: "Damn, the same thing doesn't work for me, why?". Now (at least I hope so) testers are no longer afraid to take on quality control tasks for the coding system.

Answers to questions after the report often contain the most interesting pieces and interesting details, so we collected them here.

— , , 2 Pb – , – ?

— HTTP.

— , – ? ?

— . . , . , .

— GPU. GPU?

— , . 95% . Elemental – GPU. , .

, GPU . – , - , . , – , , .

, , . Nvidia, , , . , … , GPU , – . , .

— Elemental – , . ?

- Yes. .

— ? HLS, DASH?

— , Elemental, – HSS.

— , ?

— . , , , , , – , – , .

— . , DRM…

— .

— . , , , , - . - , ?

— .

— , Kubernetes …

— Kubernetes, , .

— , Mesos + Marathon, ?

— . . , . Mesos Marathon – , , .

, , - – . , . , – – , . , , Kubernetes.

.

— , ?

— , , — , , *, .

— . Pb? - ?

— , , . , .

, -, . – CDN, . , , . .

— , . , ? ?

— Kibana.

— , . – ?

— 52 . , 52 – , DRM, . . . – , . HLS – Verimatrix, PlayReady, . DASH.

, , WDW , , . – , CDN-, DRM.

, , 18.

— , . , ?

— . , , , . , , - .

, . – . , – Kubernetes, – ? . , . , .

— – -, , . ?

— , , , . , . , , - .

, . -, , . . . , : «, - , , ». DevOps-.

. – , . , . , .

— . , 4, .. QUIK , , , , ?

— , .

, , , CDN. . , . -, , , … CDN . , .

, , , , , .

We also opened access to all videos of performances with Backend Conf 2017 and HighLoad ++ Junior .

We invite experts and professionals to become speakers at the May RIT ++ conference festival. If you have an interesting development experience and you are ready to share it, leave a request for our program committee.

Source: https://habr.com/ru/post/345738/
