Want a 6.5% deposit? Calculating the rate of return and total return on shares using the MOEX API and a dividend parser

A few words about what follows.

The laziest portfolio investor usually does the following: goes to a financial advisor, together they draw up an investor profile and, based on that profile, assemble a portfolio of assets whose risk/return characteristics the investor is willing to accept.

If the investor's horizon is very long and the portfolio is built correctly, the investor can buy a security at almost any time and at any price: over a 10-year horizon, dividend payments will smooth out the difference (provided, of course, that we pick securities with a stable cash flow).

Consider a situation in which you need to find securities (from here on I mean one specific type, stocks; with bonds everything is clear, since they pay a coupon) whose dividend cash flow fits your financial plan. The simplest example is finding a stock whose dividend yield exceeds inflation, i.e. 4% (according to Rosstat).

Let's look at the second factor of the risk/return pair: the return itself.


The return on a stock is an indicator that shows how much profit you can get from the moment you buy the security. It can be not only positive but also negative, when the stock has brought the investor a loss. In general, the return on a stock is calculated with the following simple formula:

$$r = \frac{D + (P_1 - P_0)}{P_0} \times 100\%$$

where $P_0$ is the purchase price, $P_1$ is the current price and $D$ is the dividends received over the holding period.
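As a PHP function, the total return r = (D + (P1 - P0)) / P0 × 100% is a one-liner. This is a minimal sketch; the function name and the example numbers are made up for illustration.

```php
<?php
// Total return, %: r = (D + (P1 - P0)) / P0 * 100
// $dividends = D, $buyPrice = P0, $currentPrice = P1
function totalReturn(float $dividends, float $buyPrice, float $currentPrice): float
{
    if ($buyPrice <= 0) {
        throw new InvalidArgumentException('Purchase price must be positive');
    }
    return ($dividends + ($currentPrice - $buyPrice)) / $buyPrice * 100;
}

// Made-up example: bought at 100, price fell to 95, received 12 in dividends
printf("%.2f%%\n", totalReturn(12.0, 100.0, 95.0));
```

Here the 5-point price drop is more than covered by dividends, so the example prints a 7.00% total return.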

Aggregators

Let me mention right away a couple of resources that already contain the data we need: yields of securities and the factors worth paying attention to when buying them. I occasionally look at them myself: Dohod.ru and the Investments section of SmartLab.

You can safely use them, but I set myself the goal of automating the process and being able to work with dividend payment data over an arbitrary period.

Writing a parser

After trying several options, I settled on phpQuery. Before that I had tried to build the parser with Simple HTML DOM, but processing took a very long time, so I had to switch. The data is parsed from investfunds.ru.

Parser code

```php
public function getDivsFromCode($code)
{
    // Download the issuer's dividend page from investfunds.ru and parse it with phpQuery
    include_once './phpQuery.php';

    $result = '';
    if ($curl = curl_init()) {
        curl_setopt($curl, CURLOPT_URL, 'http://stocks.investfunds.ru/stocks/' . $code . '/dividend/');
        curl_setopt($curl, CURLOPT_RETURNTRANSFER, 1); // return the page as a string
        curl_setopt($curl, CURLOPT_HEADER, 0);         // leave response headers out of the body
        $result = (string) curl_exec($curl);            // false on failure becomes ''
        curl_close($curl);
    }

    $html = phpQuery::newDocumentHTML($result);
    phpQuery::selectDocument($html);

    $divs = [];
    $a = [1, 3, 5]; // table columns: 1 = year, 3 = date, 5 = dividend per share

    // The <h1> holds the issuer name; an empty heading means no issuer under this code
    $emitent_string = htmlentities(pq('h1')->text(), ENT_COMPAT, 'UTF-8');
    if ($emitent_string === "") {
        $divs['emitent'] = ' '; // marker checked by the import loop
        return $divs;
    }
    $re = [' , ', ' , ', ' ', ' ']; // fragments of the heading to strip, leaving the issuer name
    $divs['emitent'] = str_replace($re, '', $emitent_string);
    $divs['code'] = $code;

    // Count the data rows of the dividend table (first-column cells with a style attribute)
    $cells = pq('[cellpadding="0"] tr td:nth-child(1)');
    $l = substr_count((string) $cells, 'td style');
    if ($l === 0) { // the issuer has no dividend history
        $divs['data'] = 'No divs';
        return $divs;
    }

    // Walk the rows with CSS selectors; data rows start at row 3
    for ($i = 3; $i < $l + 3; $i++) {
        foreach ($a as $j) {
            $text = pq("[cellpadding='0'] tr:nth-child($i) td:nth-child($j)")->text();
            switch ($j) {
                case 1:
                    $divs['data'][$i - 2]['year'] = str_replace(' ', '', htmlentities($text, ENT_COMPAT, 'UTF-8'));
                    break;
                case 3:
                    $divs['data'][$i - 2]['date'] = str_replace(' ', '', htmlentities($text, ENT_COMPAT, 'UTF-8'));
                    break;
                case 5:
                    // keep only digits and the decimal separator, then cast to float
                    $amount = preg_replace('/[^0-9.,]/', '', $text);
                    $divs['data'][$i - 2]['price'] = (float) str_replace(',', '.', $amount);
                    break;
            }
        }
    }
    return $divs;
}
```

The function returns an array with the data for a single issuer. I then import the results into companies.json.
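For comparison, the same table could be read with PHP's built-in DOM extension (DOMDocument and DOMXPath) instead of phpQuery. This is a swapped-in technique, not the one the article uses, and a sketch under assumptions: it expects the layout the parser above relies on, a table with cellpadding="0" whose rows hold the year in column 1, the date in column 3 and the amount in column 5. The function name parseDividendTable is hypothetical.

```php
<?php
// Sketch: extract dividend rows with DOMDocument/DOMXPath instead of phpQuery.
// Assumed layout: table[cellpadding="0"], year in column 1, date in column 3,
// amount per share in column 5; rows without a numeric year (headers) are skipped.
function parseDividendTable(string $html): array
{
    $doc = new DOMDocument();
    libxml_use_internal_errors(true);                  // scraped HTML is rarely valid
    $doc->loadHTML('<?xml encoding="UTF-8">' . $html); // treat the input as UTF-8
    libxml_clear_errors();
    $xpath = new DOMXPath($doc);

    $rows = [];
    foreach ($xpath->query('//table[@cellpadding="0"]//tr') as $tr) {
        $cells = $xpath->query('./td', $tr);
        if ($cells->length < 5) {
            continue; // not a data row
        }
        $year = preg_replace('/\D/', '', $cells->item(0)->textContent);
        if ($year === '') {
            continue; // header row
        }
        $amount = preg_replace('/[^0-9.,]/', '', $cells->item(4)->textContent);
        // keys start at 1, like the array example produced by the parser
        $rows[count($rows) + 1] = [
            'year'  => $year,
            'date'  => preg_replace('/[^0-9.]/', '', $cells->item(2)->textContent),
            'price' => (float) str_replace(',', '.', $amount),
        ];
    }
    return $rows;
}
```

The DOM extension ships with most PHP builds, so this avoids an extra dependency; whether it is faster than phpQuery on these pages is not something the article measures.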

import

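```php
$emitent = new emitent();
$json_div = [];
$lastCode = 1000; // hypothetical upper bound on the issuer codes to scan
for ($i = 1; $i <= $lastCode; $i++) {
    $divs = $emitent->getDivsFromCode($i);
    if ($divs['emitent'] != ' ') { // ' ' marks a code with no issuer page
        array_push($json_div, $divs);
    }
}
file_put_contents('companies_part1.json', json_encode($json_div, JSON_UNESCAPED_UNICODE));
```

Array example: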

```json
[{
    "emitent": ", (RU0009071187, AVAZ)",
    "code": 3,
    "data": {
        "1": {"year": "2007", "date": "16.05.2008", "price": 0.29},
        "2": {"year": "2006", "date": "06.04.2007", "price": 0.1003},
        "3": {"year": "2005", "date": "07.04.2006", "price": 0.057}
    }
}]
```

Drawbacks of the parser: at first I thought I would need to parse about 1,000 pages, because after page 800 I only came across additional share issues. But then, failing to find one of the issuers, I kept parsing, and it turned out that relevant pages can turn up even beyond 5,000. The fix is to rewrite the parser with multicurl for speed. I haven't got around to it yet (I've started reading up on it), but that is what I should have done properly. Maybe one of the readers can help. On the other hand, the dividend database only needs to be updated once every six months, which is enough if you work with annual yields.
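The multicurl rewrite mentioned above could look roughly like this. It is a minimal sketch, not the author's code: it downloads a batch of pages in parallel with PHP's curl_multi_* functions. The helper names and the 30-second timeout are assumptions; the URL pattern is the one getDivsFromCode uses.

```php
<?php
// Hypothetical helper: the dividend page URL used by getDivsFromCode()
function buildDividendUrl(int $code): string
{
    return 'http://stocks.investfunds.ru/stocks/' . $code . '/dividend/';
}

// Download several pages at once with curl_multi; returns [key => HTML] ('' on failure)
function fetchAllParallel(array $urls, int $timeout = 30): array
{
    if ($urls === []) {
        return [];
    }
    $mh = curl_multi_init();
    $handles = [];
    foreach ($urls as $key => $url) {
        $ch = curl_init($url);
        curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
        curl_setopt($ch, CURLOPT_TIMEOUT, $timeout);
        curl_multi_add_handle($mh, $ch);
        $handles[$key] = $ch;
    }

    // Drive all transfers until none is still running, waiting on socket activity
    $running = 0;
    do {
        $status = curl_multi_exec($mh, $running);
        if ($running > 0) {
            curl_multi_select($mh, 1.0);
        }
    } while ($running > 0 && $status === CURLM_OK);

    $pages = [];
    foreach ($handles as $key => $ch) {
        $pages[$key] = (string) curl_multi_getcontent($ch);
        curl_multi_remove_handle($mh, $ch);
        curl_close($ch);
    }
    curl_multi_close($mh);
    return $pages;
}
```

One possible use: split the codes into batches with array_chunk(range(1, 1000), 50) (the batch size is arbitrary), key each batch's URLs by code with array_combine($batch, array_map('buildDividendUrl', $batch)), and pass the returned HTML to the phpQuery part of getDivsFromCode(), which would first have to be split out so it accepts HTML instead of downloading the page itself.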

Calculating the rate of return and the total return of a stock since the purchase date

The formula was given above. To automate the calculation, I wrote a script that takes manually entered input data and computes the required indicators.
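One piece of that script, the getSumDivs() method used in the calculation, is not shown in the article. A hypothetical stand-in might look like this, assuming it adds up the price field of the parsed issuer array for every year from the given one onward (or for that year only when the third argument is set). The name sumDividends and this interpretation of the arguments are assumptions.

```php
<?php
// Hypothetical stand-in for getSumDivs(): adds up 'price' over the parsed issuer array,
// for every 'year' >= $year, or only for 'year' == $year when $onlyThisYear is true
function sumDividends(array $emitent, int $year, bool $onlyThisYear = false): float
{
    if (!is_array($emitent['data'] ?? null)) {
        return 0.0; // the parser stores the string 'No divs' when there is no history
    }
    $sum = 0.0;
    foreach ($emitent['data'] as $row) {
        $rowYear = (int) $row['year'];
        if ($onlyThisYear ? $rowYear === $year : $rowYear >= $year) {
            $sum += (float) $row['price'];
        }
    }
    return $sum;
}
```

With the AVAZ array example from the parser section, sumDividends($avaz, 2006) adds the 2007 and 2006 entries: 0.29 + 0.1003 = 0.3903.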

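```php
$name = 'GAZP';        // ticker
$year_buy = 2015;      // year of purchase
$buy_price = 130;      // purchase price, rubles
$year_last_div = 2016; // year of the last dividend payment
$v_need = 4;           // required dividend yield, %
```

For convenience, I wrote a small function that finds an issuer's data in the JSON by ticker.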

Ticker Search

```php
public function getDivsFromName($name)
{
    $file = file_get_contents('companies.json');
    $array = json_decode($file, true);
    foreach ($array as $emitent) {
        // the issuer string must contain the ticker, but not the ticker followed by '-'
        if (stristr($emitent['emitent'], $name) && !stristr($emitent['emitent'], $name . '-')) {
            return $emitent;
        }
    }
    return null; // ticker not found
}
```

Now we can calculate the indicators we are interested in. To get the current price (the $today_price variable) I use the function I described in my previous post, which relies on the Moscow Exchange API. All formulas are taken from this article.
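The getPrice() function from the previous post is not reproduced here. As a sketch of the same idea under stated assumptions: the Moscow Exchange ISS API returns JSON in which a "marketdata" block consists of a "columns" list and "data" rows. The TQBR board, the query parameters and both helper names below are assumptions to check against the ISS documentation.

```php
<?php
// Assumed endpoint: MOEX ISS market data for a share on the TQBR board
function moexPriceUrl(string $ticker): string
{
    return 'https://iss.moex.com/iss/engines/stock/markets/shares/boards/TQBR/securities/'
        . rawurlencode($ticker)
        . '.json?iss.meta=off&iss.only=marketdata&marketdata.columns=SECID,LAST';
}

// Pull one column (LAST by default) from the first row of the "marketdata" block
function parseLastPrice(string $json, string $column = 'LAST'): ?float
{
    $payload = json_decode($json, true);
    $block = is_array($payload) ? ($payload['marketdata'] ?? null) : null;
    if (!is_array($block) || empty($block['data']) || !is_array($block['columns'] ?? null)) {
        return null;
    }
    $idx = array_search($column, $block['columns'], true);
    $value = $idx === false ? null : ($block['data'][0][$idx] ?? null);
    return $value === null ? null : (float) $value; // null: no such column or no trades yet
}
```

Usage, assuming allow_url_fopen is enabled: $today_price = parseLastPrice((string) file_get_contents(moexPriceUrl('GAZP'))). Looking the column up by name rather than by position keeps the parser working if the API changes the column order.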

```php
$emitent = new emitent();
$div = $emitent->getDivsFromName($name);
$sum = $emitent->getSumDivs($year_buy, $div);              // D: dividends received since the purchase year
$last_div = $emitent->getSumDivs($year_last_div, $div, 1); // dividends for the last dividend year
/* r = (D + (P1 - P0)) / P0 * 100%
 * P0 - purchase price
 * P1 - current price
 * D  - dividends received
 */
$today_price = $emitent->getPrice($name, '.json');             // current price via the MOEX API
$r = ($sum + ($today_price - $buy_price)) / $buy_price * 100;  // total return, %
$v_today = $last_div / $today_price * 100;                     // current dividend yield, %
$P = ($last_div / $v_need) * 100;                              // price at which the last dividend yields $v_need %
```

Now a brief analysis of the results. As an example, I take two large companies included in the MSCI Russia Index:

Gazprom

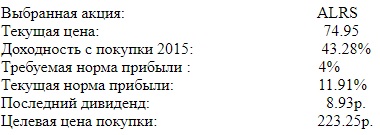

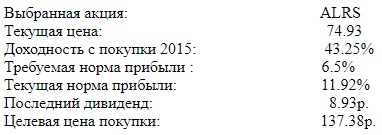

and Alrosa (suppose we bought it at 60 rubles in 2015).

As you can see, although Gazprom's share price is practically the same as the purchase price, we still have a 12% return thanks to dividends. Alrosa does even better. We can also redo the calculation for deposit rates, taking the top 50 banks listed on banki.ru: banks currently offer us 6.5%.

Accordingly, by varying the required rate of return, you can tell whether the current stock price meets your needs and financial goals.
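Varying the required rate can be sketched with the two remaining formulas from the script, $v_today and $P. The function names and input numbers are made up; 4% is the inflation figure and 6.5% the deposit rate mentioned above.

```php
<?php
// Current dividend yield, %: the script's $v_today = $last_div / $today_price * 100
function dividendYield(float $lastDividend, float $price): float
{
    return $lastDividend / $price * 100;
}

// Highest price at which the last dividend still yields $requiredYield %: the script's $P
function maxPriceForYield(float $lastDividend, float $requiredYield): float
{
    return $lastDividend / $requiredYield * 100;
}

// Made-up inputs: last annual dividend 8 per share, current price 150
$lastDividend = 8.0;
$price = 150.0;
printf("current yield: %.2f%%\n", dividendYield($lastDividend, $price));
foreach (['inflation' => 4.0, 'deposit' => 6.5] as $label => $required) {
    $limit = maxPriceForYield($lastDividend, $required);
    $verdict = $price <= $limit ? 'fits' : 'too expensive';
    printf("%s %.1f%%: max price %.2f -> %s\n", $label, $required, $limit, $verdict);
}
```

With these numbers the script reports a 5.33% current yield: above the 4% inflation bar (maximum price 200.00) but below the 6.5% deposit rate (maximum price 123.08).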

Note that, as in the previous article, this only determines one of the factors that influence the decision.

Many investors do these calculations in Excel, which offers plenty of formulas for profit, cash flow and so on. My goal, however, was to automate both the collection of dividend data and its processing.

This time the article has less theory and more code: the theory is very extensive, and tying this task to the general theory of portfolio construction would take a whole series of articles. Besides, Habr's format is not meant for purely investment articles, and there are plenty of those elsewhere on the web.

Previous article

Thanks for attention!

Source: https://habr.com/ru/post/345696/
