Load testing "non-HTTP" protocols. Part 1: JMeter

In a monolith, method calls happen inside one application; in a microservice architecture, all interaction goes over the network. Accordingly, information exchange between applications becomes slower, regardless of the exchange protocol used.

In this article, we explain how to write code for load testing "non-HTTP" protocols, using Apache Thrift as the example and JMeter and Gatling (part 2) as the tools. We will test a microservice that has to handle 50K RPS. From a single load machine, we will try to achieve the performance stated in this tweet:

We take Thrift as the basis, but the choice is not essential: with minor adjustments you can test gRPC, Avro and other protocols. The approaches in the article are general, and you will only need to replace the client.

Formally, the protocols described here are RPC frameworks and/or data serialization systems, but even their developers use the word "protocol".

Why JMeter and Gatling

One of the reasons is that both are open source. You can look at the code and try to understand what is implemented in the tool and how. And you do not have to pay.

On GitHub you can find tools tailored to specific protocols. For Thrift, for example, there are Iago from the Twitter developers and Bender from the Pinterest team. But working with such frameworks sharply raises the entry threshold and the bus-factor risk. In contrast, Gatling and, even more so, JMeter are widely used and have large communities where you can always ask for advice or find a description of your problem.

And for us, as people who like to write code in any unclear situation, both tools fit well.

The next question is: why not pick just one of the two? At first glance they are on par, and we want to understand in detail which one works better and what problems each has. It is also interesting to compare the two approaches: Threads vs Actors.

JMeter

Although JMeter is a GUI-oriented load tool, you can write code for it. This approach improves readability, lets you keep tests in a sane form in the VCS and do code review without eye strain. Moreover, for writing a client for an arbitrary protocol, code is not just sufficient but necessary. Finally, with code it is easy to ask developers for help without explaining a specific tool to them, which again reduces the bus-factor risk.

Let's see what JMeter offers for loading custom protocols:

- A ready-made non-HTTP plugin. A good option in general, but none is implemented for our protocol, and such plugins are hard to modify.

- Writing code inside JMeter. A so-so idea: it is difficult to work with dependencies, and performance problems are possible. According to the article, BeanShell Sampler is very slow, and you have to write BeanShell script there, so we discard it immediately. JSR223 Sampler shows tolerable performance at first glance, so we keep it for further testing.

- Writing code in the IDE, which is always nicer and more convenient. JMeter offers two samplers for this: Java and JUnit.

Sampler performance comparison

To understand which sampler to use, we run a comparison. For the test, we write a simple script that builds strings in a loop, and set VALUE to a small number (10) and a larger one (100).

```java
import java.security.SecureRandom;

for (int i = 0; i < VALUE; i++) {
    new StringBuilder().append(new SecureRandom().nextInt());
}
```

All tests were performed on JMeter 3.3:

JSR223 turns out to be slower, so we rule it out. Java and JUnit show similar performance, so to understand which one is more convenient we have to look into both.

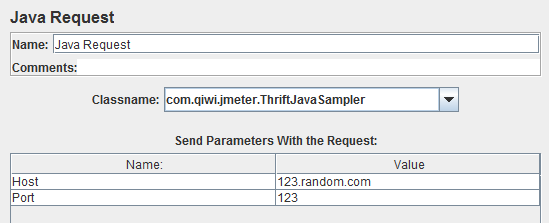
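To get a rough feel for the cost of the workload itself, outside of any sampler, the loop from the comparison can be timed in plain Java. This is only a sketch: the class name `StringLoopBench`, the repetition count and the use of `System.nanoTime` are assumptions made for illustration, and this is not the harness behind the measurements in this article.

```java
import java.security.SecureRandom;

/** Hypothetical stand-alone timing of the benchmark loop; not the article's harness. */
final class StringLoopBench {

    /** Runs the loop from the comparison script and returns how many characters were built. */
    static long workload(int value) {
        long chars = 0;
        for (int i = 0; i < value; i++) {
            // Same body as the sampler script: a new SecureRandom and StringBuilder per iteration.
            chars += new StringBuilder().append(new SecureRandom().nextInt()).length();
        }
        return chars;
    }

    /** Average wall-clock nanoseconds per workload call over the given number of repetitions. */
    static double averageNanos(int value, int repetitions) {
        long start = System.nanoTime();
        for (int r = 0; r < repetitions; r++) {
            workload(value);
        }
        return (System.nanoTime() - start) / (double) repetitions;
    }

    public static void main(String[] args) {
        averageNanos(10, 1_000); // warm-up, so the JIT has a chance to compile the loop
        for (int value : new int[] {10, 100}) {
            System.out.printf("VALUE=%d: %.0f ns per call%n", value, averageNanos(value, 1_000));
        }
    }
}
```

Numbers from such a loop only show the baseline cost of the script body; the overhead of each sampler still has to be measured inside JMeter itself.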

Java Request Sampler

Java Sampler has only two settings: which test class to run and which parameters to pass to it. You can define a set of default parameters in the code, and they will appear in the JMeter GUI. Note, however, that if you add a new parameter directly in the GUI and save the test plan, the newly added parameters will be reset.

A Java Sampler test extends the standard AbstractJavaSamplerClient from the JMeter library (added as a dependency with any build tool, for example Gradle). The test class may consist of the test itself, pre- and post-conditions, and default parameters.

```java
import org.apache.jmeter.protocol.java.sampler.AbstractJavaSamplerClient;
import org.apache.jmeter.protocol.java.sampler.JavaSamplerContext;
import org.apache.jmeter.samplers.SampleResult;

public class ThriftJavaSampler extends AbstractJavaSamplerClient {

    @Override
    public SampleResult runTest(JavaSamplerContext javaSamplerContext) {
        SampleResult sampleResult = new SampleResult();
        sampleResult.sampleStart();              // start of the measured interval
        boolean result = Utility.getScenario();  // the scenario under load
        sampleResult.sampleEnd();                // end of the measured interval
        sampleResult.setSuccessful(result);
        return sampleResult;
    }
}
```

The test method receives the JMeter context, from which parameters are read, and returns a SampleResult, which in turn has a set of methods for customizing the test run and evaluating the results. For our purposes three of them matter: sampleStart() and sampleEnd(), which mark the start and end time of the request, and setSuccessful(), which records the result.

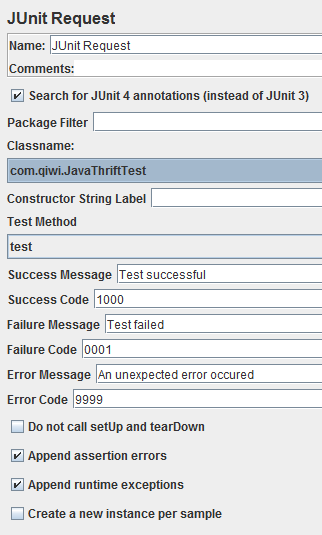

JUnit Request Sampler

JUnit Sampler also lets you choose the test to run, and here you can write several test methods in one class. Default parameters are passed to the code through the User Defined Variables element. The remaining settings are clear from their descriptions: do not call the pre- and post-conditions, append assertion errors and runtime exceptions to the output. You should not enable creating a new test instance for each request, since this significantly reduces performance.

A JUnit Request Sampler test looks like a usual JUnit test, but it works a little differently. JMeter never calls @BeforeClass and @AfterClass, so you need a separate test to set up a global precondition. It is also worth noting that the time spent in @Before and @After is not counted in the measured test time.

```java
@BeforeClass
public static void setUpClass() { assert false; }   // never called by JMeter

@Before
public void setUp() {}

@Test
public void test() {}

@After
public void cleanUp() {}

@AfterClass
public static void cleanUpTest() { assert false; }  // never called by JMeter
```

The JMeter developers themselves say that GUI mode should be used only for debugging. Even so, you must be careful with statics and singletons there. For example, after the test is restarted, nothing declared inside a singleton is initialized again. At the same time, without a singleton all objects are reinitialized before each test, which hurts performance. Static variables keep their values after a test run and do not change, even if they are redefined from the GUI.
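The behaviour of statics that survive between runs in the same JVM can be reproduced without JMeter. The sketch below is an illustration under assumptions: `ClientHolder`, its `reset()` hook and the string that stands in for a client are hypothetical names, not part of the article's code or of JMeter.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical lazily initialised singleton, standing in for a shared test client. */
final class ClientHolder {
    private static final AtomicInteger CREATED = new AtomicInteger();
    private static volatile String instance; // a String stands in for the real client

    private ClientHolder() {}

    /** Creates the shared instance on first use and reuses it afterwards. */
    static String get(String host) {
        String local = instance;
        if (local == null) {
            synchronized (ClientHolder.class) {
                local = instance;
                if (local == null) {
                    local = "client-" + CREATED.incrementAndGet() + "@" + host;
                    instance = local;
                }
            }
        }
        return local;
    }

    /** Explicit hook that drops the state, e.g. called from a dedicated setup test. */
    static void reset() {
        synchronized (ClientHolder.class) {
            instance = null;
        }
    }

    public static void main(String[] args) {
        System.out.println(get("host-a")); // first run creates the client
        System.out.println(get("host-b")); // "restart" with a new host: the old client is reused
        reset();
        System.out.println(get("host-b")); // only after an explicit reset is the new host picked up
    }
}
```

An explicit reset hook like this is one possible way to make reruns in GUI mode predictable; in non-GUI runs each launch starts a fresh JVM, so the problem does not arise.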

Having compared both samplers, we settled on the JUnit Request Sampler for its simplicity and, at the same time, ease of modification.

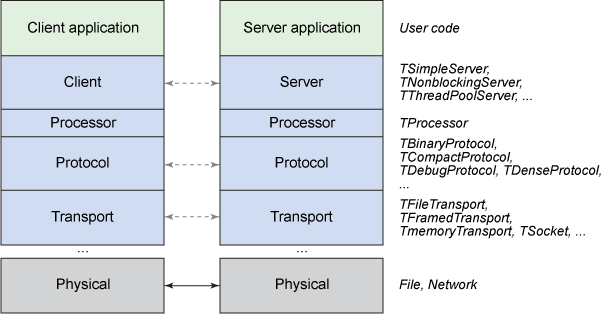

Writing a Thrift client

When writing a client, you have to take into account the whole variety of Thrift settings. The main rule: the client and the server must use the same transport and protocol and the same artifact versions.

To avoid wasting time on creating a client in every test, and to avoid exhausting the ports of the load-generating machine, we write a client pool right away. You specify the number of clients for the pool, and it keeps the required number of connections open; all you need to do is take a client from the pool before use and return it afterwards.

```java
asyncClientPool = new ThriftClientPool<>(
        () -> new PaymentsCreate.AsyncClient(
                (TProtocolFactory) tTransport ->
                        new TMultiplexedProtocol(new TBinaryProtocol(tTransport), SERVICE),
                new TAsyncClientManager(),
                new TNonblockingSocket(host, port, TIMEOUT)),
        PoolConfig.get());
```

This is how we create the pool and the client. The main point is that in this example the pool knows nothing about the client implementation and is configured at creation. It is the most basic pool built on GenericObjectPool; on top of it our developers made a self-regulating pool with logging and a single protocol/transport.
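The borrow-and-return idea behind such a pool can be shown with the standard library alone. The sketch below is a simplified stand-in, not ThriftClientPool or GenericObjectPool: `SimpleClientPool`, `withClient` and `idleCount` are hypothetical names, and a `StringBuilder` plays the role of a real Thrift client.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Function;
import java.util.function.Supplier;

/** Hypothetical fixed-size pool; a simplified stand-in for a real client pool. */
final class SimpleClientPool<T> {
    private final BlockingQueue<T> idle;

    /** Eagerly creates {@code size} clients with the supplied factory. */
    SimpleClientPool(int size, Supplier<T> factory) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(factory.get());
        }
    }

    /** Borrows a client, runs the call, and always returns the client to the pool. */
    <R> R withClient(long timeoutMillis, Function<T, R> call) {
        T client;
        try {
            client = idle.poll(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for a client", e);
        }
        if (client == null) {
            throw new IllegalStateException("no free client within " + timeoutMillis + " ms");
        }
        try {
            return call.apply(client);
        } finally {
            idle.add(client); // cannot overflow: only borrowed clients are returned
        }
    }

    /** Number of clients currently waiting in the pool. */
    int idleCount() {
        return idle.size();
    }

    public static void main(String[] args) {
        // A StringBuilder stands in for a real Thrift client here.
        SimpleClientPool<StringBuilder> pool = new SimpleClientPool<>(2, StringBuilder::new);
        int length = pool.withClient(100, c -> c.append("ping").length());
        System.out.println(length + " chars sent, idle clients: " + pool.idleCount());
    }
}
```

Wrapping the borrow and return in one method keeps a failed call from leaking a client, which matters under load because a leaked client permanently shrinks the pool.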

We wrote the code, packaged it into jar files and placed them next to JMeter:

```
$JMETER_DIR/lib/junit
$JMETER_DIR/lib/ext
$JMETER_DIR/lib
```

Do not forget about third-party libraries and make sure each one is present in a single version. Without them the test may not even appear in the list of available tests, and with duplicates you may get a version conflict when the load starts.
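A quick way to spot duplicated libraries is to group the jar file names by artifact and look for more than one version. The sketch below assumes the common `name-version.jar` naming; the class `DuplicateJarCheck` and the sample file names are hypothetical, and jars that do not follow this naming are simply ignored.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical helper that reports artifacts present in more than one version. */
final class DuplicateJarCheck {
    // Assumes "name-version.jar", where the version starts with a digit.
    private static final Pattern JAR = Pattern.compile("(.+?)-(\\d[\\w.\\-]*)\\.jar");

    /** Maps each artifact name that occurs at least twice to the versions found. */
    static Map<String, List<String>> duplicates(List<String> jarFileNames) {
        Map<String, List<String>> versionsByArtifact = new TreeMap<>();
        for (String fileName : jarFileNames) {
            Matcher m = JAR.matcher(fileName);
            if (m.matches()) {
                versionsByArtifact.computeIfAbsent(m.group(1), k -> new ArrayList<>()).add(m.group(2));
            }
        }
        versionsByArtifact.values().removeIf(versions -> versions.size() < 2);
        return versionsByArtifact;
    }

    public static void main(String[] args) throws IOException {
        // Directory to scan, e.g. the JMeter lib directory; defaults to the current one.
        Path libDir = Paths.get(args.length > 0 ? args[0] : ".");
        try (Stream<Path> files = Files.list(libDir)) {
            List<String> names = files.map(p -> p.getFileName().toString())
                    .collect(Collectors.toList());
            duplicates(names).forEach((artifact, versions) ->
                    System.out.println(artifact + " is present in versions " + versions));
        }
    }
}
```

Running it against each of the three directories before a test gives an early warning instead of a conflict at load start.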

JMeter without jmx

An article about writing code for JMeter would be incomplete without discussing how to get rid of cumbersome test plans made of hundreds of lines of XML in jmx files.

We write an application that uses the JMeter libraries and go through the main points in order.

Before running the application, you must specify where the JMeter settings live. A local JMeter installation is optional in this case:

```java
final String JMETER_HOME = Utility.getJMeterHome();
JMeterUtils.loadJMeterProperties(JMETER_HOME + "jmeter.properties");
JMeterUtils.initLogging();
JMeterUtils.setLocale(Locale.ENGLISH);
JMeterUtils.setJMeterHome(JMETER_HOME);
```

Next, we name the test plan and pick the sampler for our protocol:

```java
TestPlan testPlan = new TestPlan("Thrift test");
TestElement sampler = Utility.getSampler();
```

LoopController and ThreadGroup are responsible for the load generator: how and how much we will load. Everything is standard here:

```java
LoopController controller = new LoopController();
controller.setLoops(10);
controller.setContinueForever(false);

ThreadGroup threadGroup = new ThreadGroup();
threadGroup.setNumThreads(10);
threadGroup.setRampUp(0);
threadGroup.setDuration(30);
threadGroup.setSamplerController(controller);
```

You can see the results in condensed form during the load (thanks to the summariser), and then save them for further processing (the resultCollector is responsible for this):

```java
Summariser summariser = new Summariser();
SampleSaveConfiguration saveConfiguration = new SampleSaveConfiguration(true);

ResultCollector resultCollector = new ResultCollector(summariser);
resultCollector.setFilename(JMETER_HOME + "report.jtl");
resultCollector.setSuccessOnlyLogging(true);
resultCollector.setSaveConfig(saveConfiguration);
```

All elements are combined into a special JMeter tree. It mirrors what we do in GUI mode:

```java
HashTree config = new HashTree();
config.add(testPlan)
      .add(threadGroup)
      .add(sampler)
      .add(resultCollector);
```

Configure and run the load:

```java
StandardJMeterEngine jMeterEngine = new StandardJMeterEngine();
jMeterEngine.configure(config);
jMeterEngine.runTest();
```

That's it, you can forget about XML. Hooray!

Maximum load

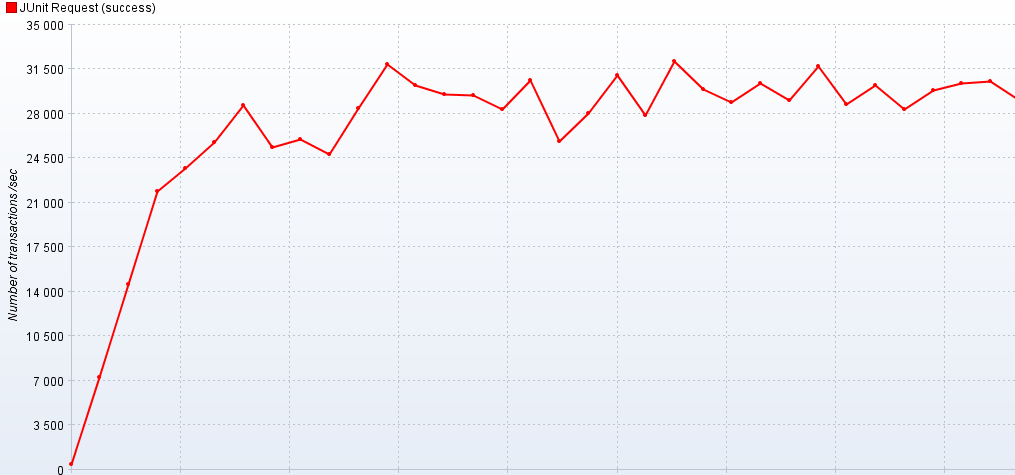

Let's see how much our generator can produce from one load machine. Naturally, all system settings for load testing are in place: we have already tuned the network settings and raised the limits on open file descriptors. After the run, we get a not very smooth load graph, which shows that we can produce about 30K RPS.

Naturally, the question arises: is this the limit of the client or of the server? We added one more JMeter instance alongside in the cluster and made sure that the service can deliver the target 50K RPS.
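When sizing a generator, a back-of-the-envelope estimate from Little's law (concurrency = throughput × mean response time) helps to check whether a given thread count can reach the target at all. The sketch below assumes a closed-loop generator in which each thread waits for the response before sending the next request; the class name `LoadSizing` and the 2 ms latency are made-up illustration values, not measurements from this test.

```java
/** Hypothetical sizing helper based on Little's law; the inputs are illustrative only. */
final class LoadSizing {

    /** Threads needed to sustain the target rate at the given mean response time. */
    static int threadsFor(double targetRps, double meanLatencyMillis) {
        return (int) Math.ceil(targetRps * meanLatencyMillis / 1000.0);
    }

    /** Upper bound on the rate that the given number of blocking threads can produce. */
    static double maxRps(int threads, double meanLatencyMillis) {
        return threads * 1000.0 / meanLatencyMillis;
    }

    public static void main(String[] args) {
        // Assumed 2 ms mean response time; replace with a measured value.
        System.out.println("threads for 50K RPS: " + threadsFor(50_000, 2.0));
        System.out.println("max RPS with 10 threads: " + maxRps(10, 2.0));
    }
}
```

If the estimate says the thread count is sufficient but the measured rate is still lower, the bottleneck is more likely in the generator machine or the service than in the test plan settings.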

PS

In the next part, we will look at "non-HTTP" load with Gatling and tell you how we managed to increase its performance tenfold.


Source: https://habr.com/ru/post/345556/
