Frontend performance optimization

A slow site is a pain not only for the user but also for the developer. How do you fix the situation, when should you rely on caching, when can you trust the processor, and how does all of this help optimize a complex frontend application? JS expert and HTML Academy teacher Igor Alekseenko (@iamo0) explains it in practice. Below is a transcript of his talk at Frontend Conf 2017.

About the speaker

Igor Alekseenko is a developer with extensive experience who teaches basic and advanced JS courses at HTML Academy. He has worked at Lebedev Studio, Islet, and JetBrains.

Today I want to talk about a problem that developers face. I will share my own pain, and I hope you share it too.

I hate it when interfaces are slow. And I hate it not only as a user of sites but also as their developer.

Why? Because we, as developers, are responsible for the emotions people experience on our sites, for their experience. If a person comes to a site and has a bad experience, that is our fault. Not the designer's, not some complex technology's: ours.

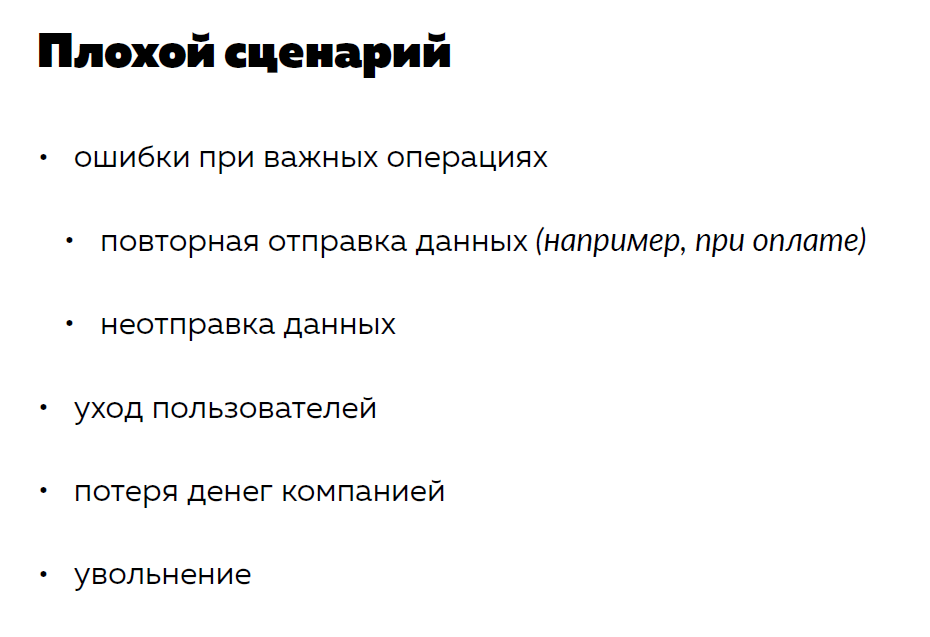

It is one thing when the interface merely lags. So what, you might think: the user comes to our online game and cannot aim and kill an opponent, or sees some jerky animation.

But in fact everything is more serious, because there are more and more sites and they solve increasingly complex tasks. If you are developing a banking application and a data transfer is delayed by a second and the user loses money, or you run an online store and the user cannot buy the product they need, you will lose that user. And all of this simply because your site is slow.

A lost user means lost money, lost revenue from the company's point of view, and possibly your dismissal.

So a slow site is a serious problem, and it needs to be solved.

But to solve a problem, you need to know the enemy by sight. Let's see what slowdowns on sites actually are, why sites slow down, and where it comes from.

Slowdowns occur when interaction with the site stops being smooth. What does that mean? When users browse a site, they do not see a static image. A site is not just a picture; it is a process of the user interacting with the interface we offer.

The user can watch the animations I mentioned. They can simply scroll the site, and that is dynamic interaction too. They click buttons, enter text, drag elements. All of this works dynamically.

Why does it work dynamically? Why can sites stay alive for a long time?

Because every browser engine has a structure called the Event Loop built in.

The Event Loop is a simple programming trick: we start an infinite loop that runs at a certain frequency, so that the stack does not get clogged and performance stays reasonable.

At each step, this infinite loop checks external conditions and triggers certain actions. For example, it notices that the user has scrolled the mouse wheel and the page needs to be shifted a little.

All user interactions are synchronized with the Event Loop built into the browser engine: scrolling, other interactions, and the code we run over time. In other words, everything depends on this event loop.

How to get into Event Loop frames?

We have a loop that spins at a certain frequency. But we, as frontend developers, cannot control this frequency and do not know it. All we can do is use the ready-made frames intended for us. That is, we do not control the frequency, but we can fit into it. For this there is the requestAnimationFrame function.

If we pass code to requestAnimationFrame as a callback, it runs at the start of the next update frame. Frame rates differ. On a Mac, for example, the browser tries to fit frames into 60 FPS, but the rate is not always exactly 60 FPS. I will show this in the examples later on.
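To make the idea of "fitting into the browser's frames" concrete, here is a minimal sketch that measures the actual frame rate with requestAnimationFrame. The helper name `measureFps` and its `schedule` parameter are assumptions made for illustration; they are not code from the talk. `schedule` exists only so that the sketch can also be exercised outside a browser.

```javascript
// Measures the average frame rate over `frameCount` frame intervals.
// `schedule` defaults to the browser's requestAnimationFrame; it is a
// parameter (an assumption of this sketch) so a fake scheduler can be passed.
function measureFps(frameCount, onDone, schedule = globalThis.requestAnimationFrame) {
  let firstTimestamp = null;
  let framesSeen = 0;

  function onFrame(timestamp) {
    if (firstTimestamp === null) {
      firstTimestamp = timestamp; // start of the measurement
    }
    if (framesSeen === frameCount) {
      // `frameCount` intervals have passed since the first frame.
      const elapsedMs = timestamp - firstTimestamp;
      onDone((frameCount * 1000) / elapsedMs);
      return;
    }
    framesSeen += 1;
    schedule(onFrame); // ask for the next frame; we never pick the frequency
  }

  schedule(onFrame);
}
```

In a browser this could be called as `measureFps(120, (fps) => console.log(fps.toFixed(1)))`. The reported number depends on the display and on how busy the page is, which is exactly why it cannot be assumed to be 60.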

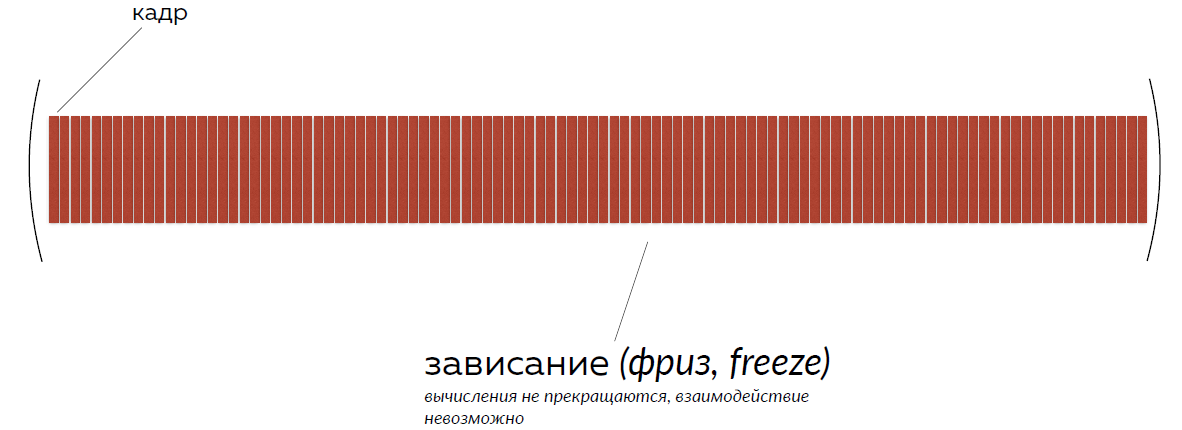

We have already established that at the core of JavaScript there is an infinite loop that updates over time. Now we can guess where slowdowns come from.

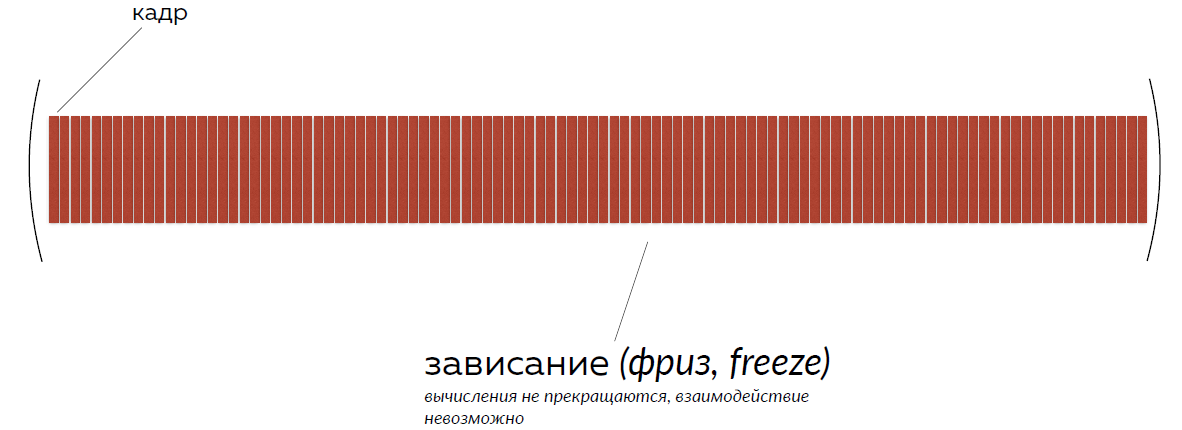

There are frames that are updated over time. We run some calculations on the site. Any calculations: everything we write in JavaScript is computation. If these calculations take longer than one visible update frame, the user sees lag.

That is, there is a series of consecutive frames, and then some long calculation that takes more than one frame. The user sees a slight delay, and the animation stutters.

Or the calculations may grow so large that the page freezes and nothing can be done with it.

OK, we understand what a slowdown is and why a site can be slow. Now let's solve the problem.

We are programmers, so we have tools that run our code. The programmer's main task is to balance the load correctly between the processor and memory.

We all know what a processor is. It is the device in a computer responsible for immediate calculations. Any command we write is turned into instructions for the processor, and the processor executes them.

But if a sequence of commands produces some valuable result (say, we computed a complex value) and we do not want to repeat that sequence, we can write the result to memory and use another processor instruction instead: a read from memory.

That is exactly what I want to talk about.

The first optimization that seems logical is to use memory in order to avoid calculations.

In principle, this strategy sounds advantageous. Moreover, it is a good strategy, and it is already in use.

For example, JavaScript has a built-in Math object designed for mathematics and calculations. This object contains not only methods but also some popular precomputed values, so that they do not have to be recalculated. The number π, for instance, is stored to a fixed precision.

Second, there is a good example from the old days. I love the programmers of the 80s because they wrote efficient solutions. The hardware was weak, and they had to come up with clever tricks.

3D shooters always use trigonometry: to compute distances, sines, cosines, and the like. From the computer's point of view, these are rather expensive operations.

Back then, programmers baked tables of sines and cosines directly into the program code at compile time. That is, they used precomputed values of the trigonometric functions. Instead of computing them while rendering the scene, they read them as constants.
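The lookup-table trick described above can be sketched in a few lines. This is only an illustration of trading memory for computation: the one-degree resolution and the names `SINE_TABLE` and `fastSin` are assumptions, not something taken from the talk or from any particular game engine.

```javascript
// Precompute sin() once for every whole degree and keep the results in memory.
const STEPS = 360;
const SINE_TABLE = new Float64Array(STEPS);
for (let deg = 0; deg < STEPS; deg++) {
  SINE_TABLE[deg] = Math.sin((deg * Math.PI) / 180);
}

// Reads the precomputed value instead of calling Math.sin again.
// The angle is rounded to a whole degree and wrapped into 0..359,
// so precision is limited to the table's resolution.
function fastSin(degrees) {
  const index = ((Math.round(degrees) % STEPS) + STEPS) % STEPS;
  return SINE_TABLE[index];
}
```

The price is 360 stored numbers and one-degree precision; the gain is that rendering code only performs a memory read.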

This way you can optimize everything in the world. You can precompute an animation, how it will look, and anything else.

In principle, it sounds very cool.

Look: here are the frames, and instead of starting calculations that span several frames, we run calculations that only read: read the ready value, substitute it, use it. And the interface turns out very fast.

In theory it sounds great. But let's think about how exactly frontend developers work with memory.

When we open a browser tab, we are allocated a certain amount of memory. By the way, we do not know how much. Here we are limited too.

What's more, we cannot control this memory.

There is another catch: something is already stored in this memory.

For example, for every line break between tags the browser creates an object in memory, a text node, and it stays there.

So there is already a lot in our memory, and on top of that we want to write something of our own. This is rather dangerous, because memory can start to slow things down.

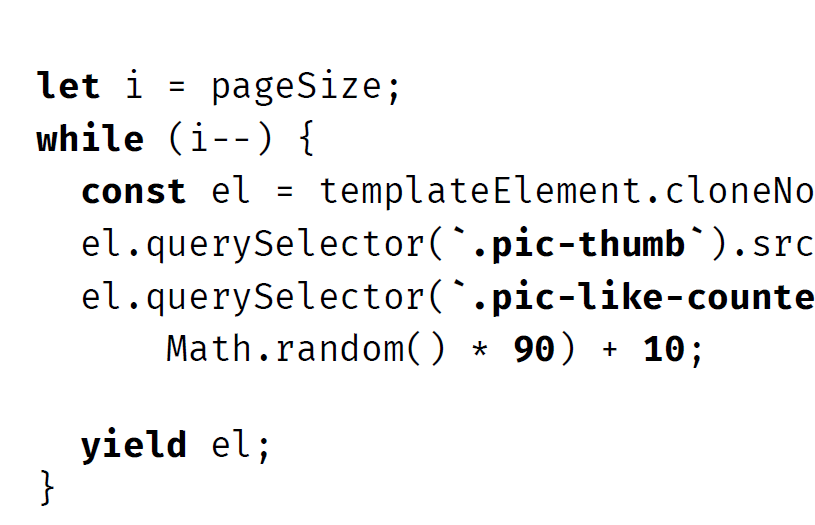

There are two main reasons, and, oddly enough, they contradict each other. The first is called “garbage collection”, and the second is “lack of garbage collection”.

Let us examine each of them.

Before I explain what garbage collection is, I will tell you how we are going to look at performance, memory, and everything else.

All of these things can be measured. Every browser has developer tools. I will use Chrome as an example, but other browsers have them too. We will look at the “Profiling” or “Performance” tab.

You can open it at any time and take a so-called performance snapshot of the browser. Click the “Record” button. Performance is recorded, and after a while you can look at what was happening on the page and how it affected memory and the processor.

What does this tab consist of?

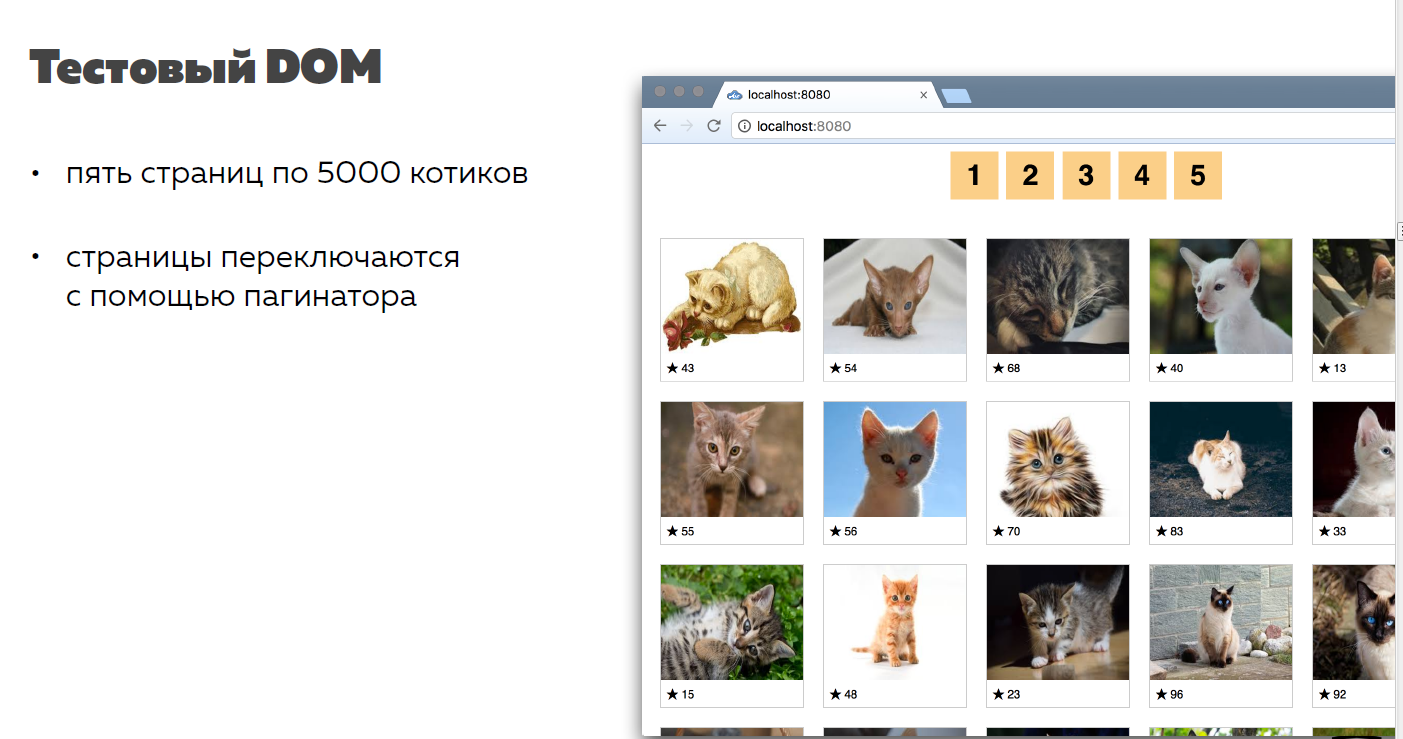

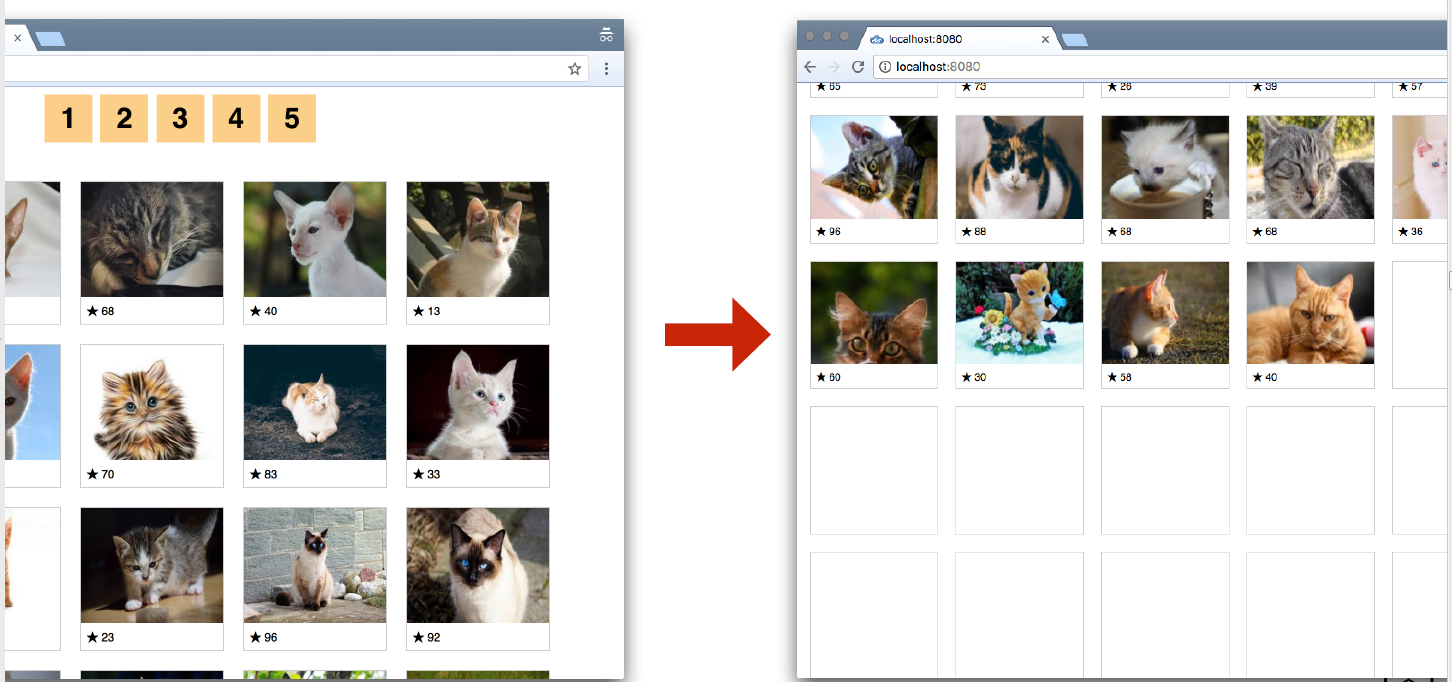

So, we will measure performance on an Instagram with cats. Everyone loves cats, everyone loves Instagram, so that is what I went with.

There is no such thing as too many cats, so we will look at big pages. We will have five pages of 5,000 cats each, and we will learn to switch between them.

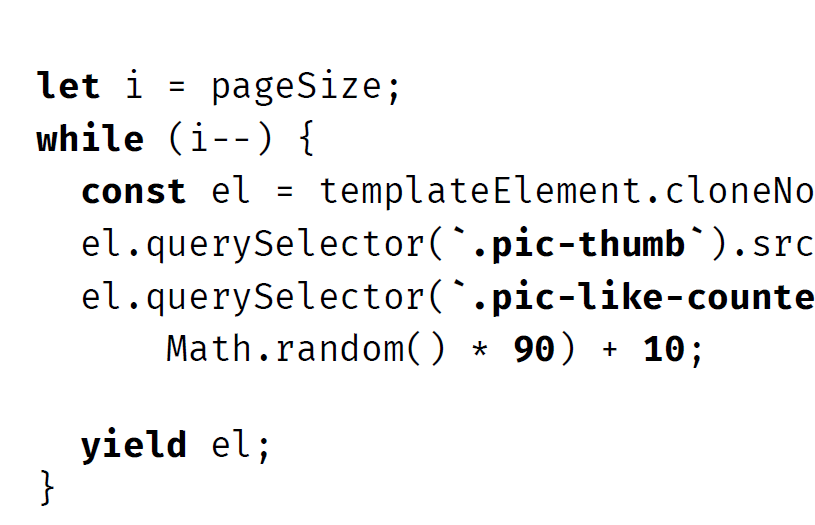

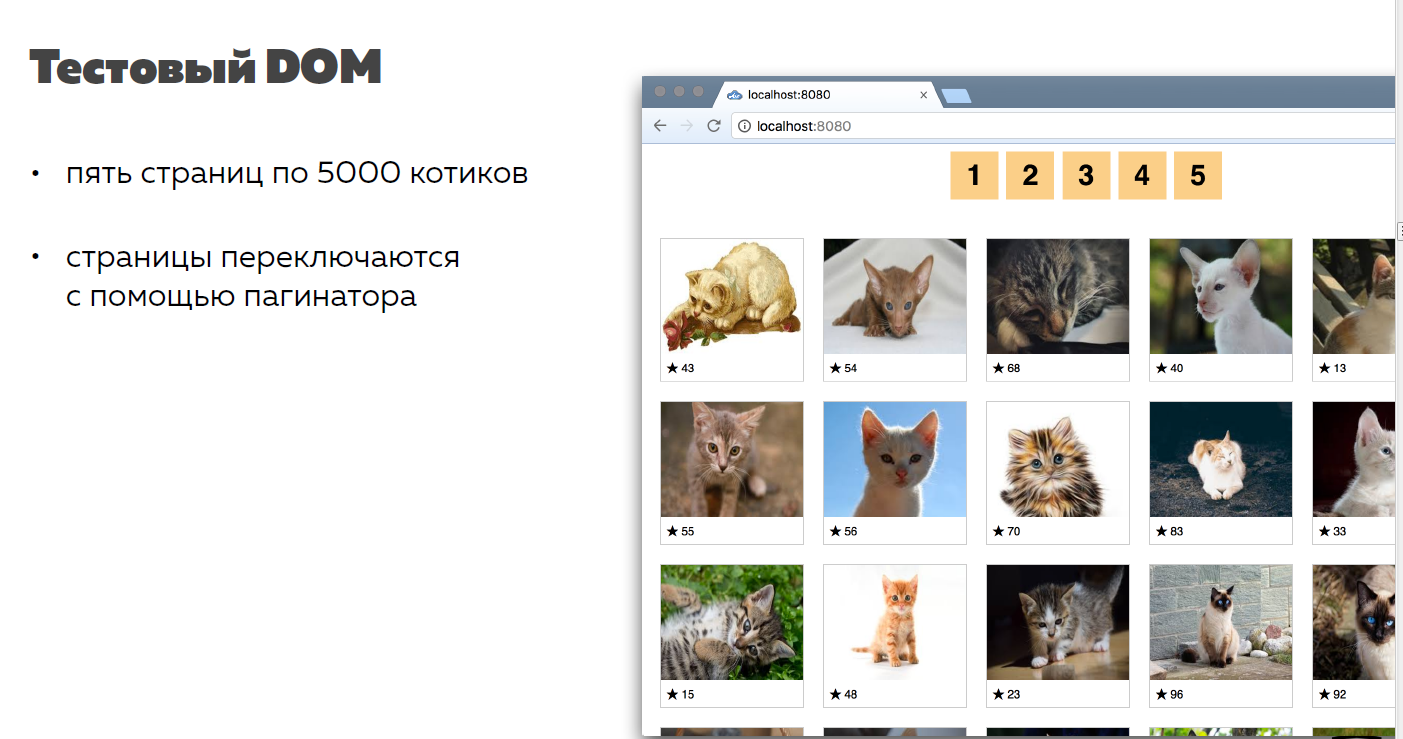

Below is the code I use to generate the cats. They are all unique.

That is, I create a DOM element from a standard template and fill it with unique data. Even where the pictures repeat, I go through the template so that nothing is cached and the memory test stays clean.

When I have created all the elements, I add them to a single fragment; this is also an optimization you all know. To clear a page, I will simply, bang, wipe the container.

It works faster.
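The approach described above (clone a template, fill it with unique data, collect everything in one fragment, wipe the container) might look roughly like this. It is a sketch under assumptions: the function name `renderPage`, the class names `.cat-name` and `.cat-photo`, the shape of the `cats` records, and the `?id=` query parameter used to keep picture URLs unique are all hypothetical, since the original code is not part of the text.

```javascript
// Renders one page of cat cards into `container`, replacing the previous page.
// `doc` is the document object (pass `document` in a browser); `template` is
// an element that serves as the card template.
function renderPage(doc, container, template, cats) {
  // Collect all cards in a fragment so the live DOM is touched only once.
  const fragment = doc.createDocumentFragment();

  for (const cat of cats) {
    const card = template.cloneNode(true); // deep copy of the template
    card.querySelector('.cat-name').textContent = cat.name;
    // Assumption: a unique query string keeps repeated pictures out of the cache.
    card.querySelector('.cat-photo').src = `${cat.url}?id=${cat.id}`;
    fragment.appendChild(card);
  }

  container.innerHTML = '';        // wipe the previous page in one go
  container.appendChild(fragment); // insert the new page in one operation
}
```

In a browser the call could be `renderPage(document, listElement, cardTemplate, pageOfCats)`, where the last three arguments are whatever the page actually uses.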

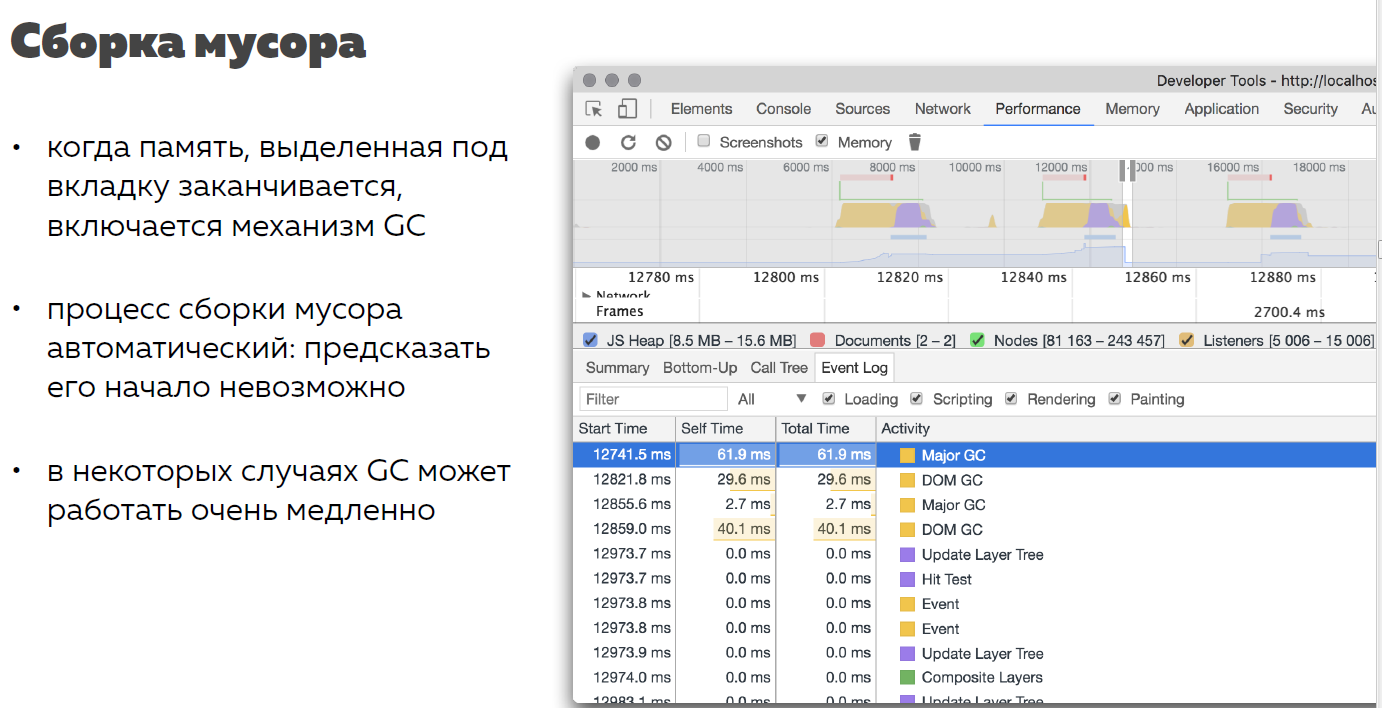

So, garbage collection.

Garbage collection is a process designed to optimize memory management. We do not control it. The browser starts it on its own when it realizes that the memory allocated to the tab is running out and old unused objects need to be deleted.

Old unused objects are objects that are no longer referenced. That is, objects that are not stored in variables, in other objects, in arrays: nowhere at all.

It would seem that this is a great process and we need garbage collection, because memory really is limited and must be freed.

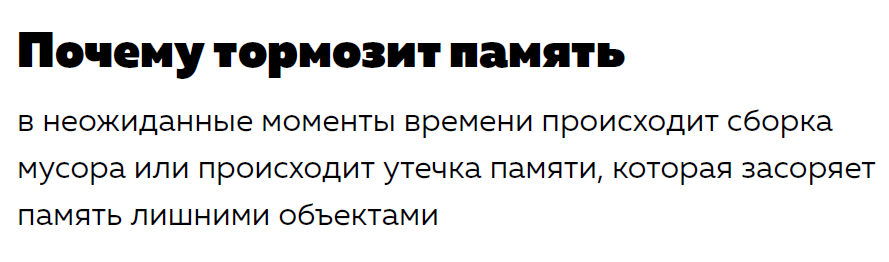

Why can this be a problem? Because we do not know how long garbage collection will take, and we do not know when it will happen.

Let's look at an example.

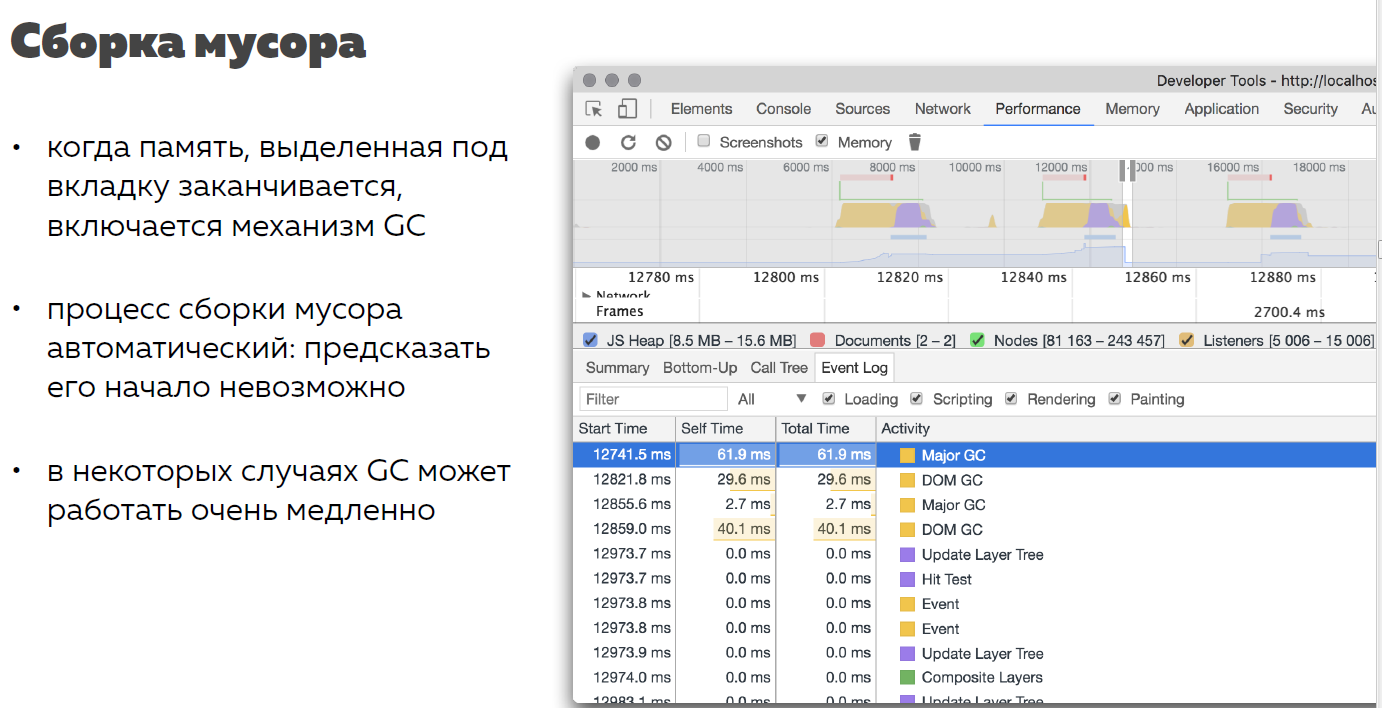

Here I recorded a timeline profile of switching between our cat pages. The top graph shows spikes in processor load, that is, CPU utilization.

The lower graph shows memory usage: first memory climbs, and then there is a step down. That step is precisely the garbage collection process.

I had enough memory for the first two pages, and I drew 10,000 cats. Then memory ran out, and to render another 5,000 cats the browser deleted the old ones, because they were no longer used.

Basically, that is cool. The browser really did take care of me and deleted what I do not use. So what is the problem?

Let's zoom in on this drop and see how long the garbage collection process took.

If you add up the four garbage collection entries, you can see that garbage collection took 134 ms, which is about 8 frames at 60 FPS.

That is, if you wanted to move a block by 600 px over a certain time, you would lose about 100 px of that movement simply because the browser decided to clean up memory. You control neither the start of this process nor its duration. This is bad.

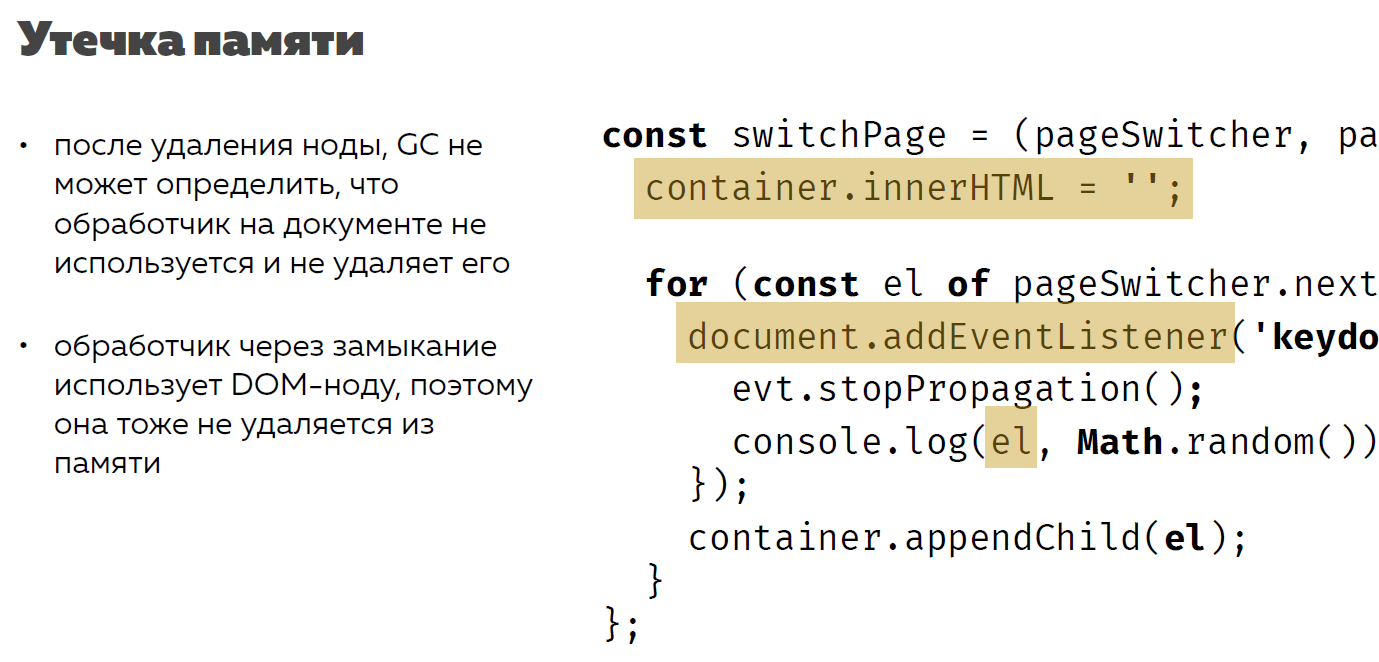

The second problem is the exact opposite of the first. It is called a memory leak.

The browser seems inconsistent: it is bad when it spends a long time cleaning memory, and it is bad when memory goes uncleaned for a long time. Why?

A memory leak is a situation where this garbage collection does not happen. Or rather, it may happen, but it does not clean up what we need it to. Sometimes we leave something behind in such a way that the browser engine looks at it and decides it cannot be cleaned.

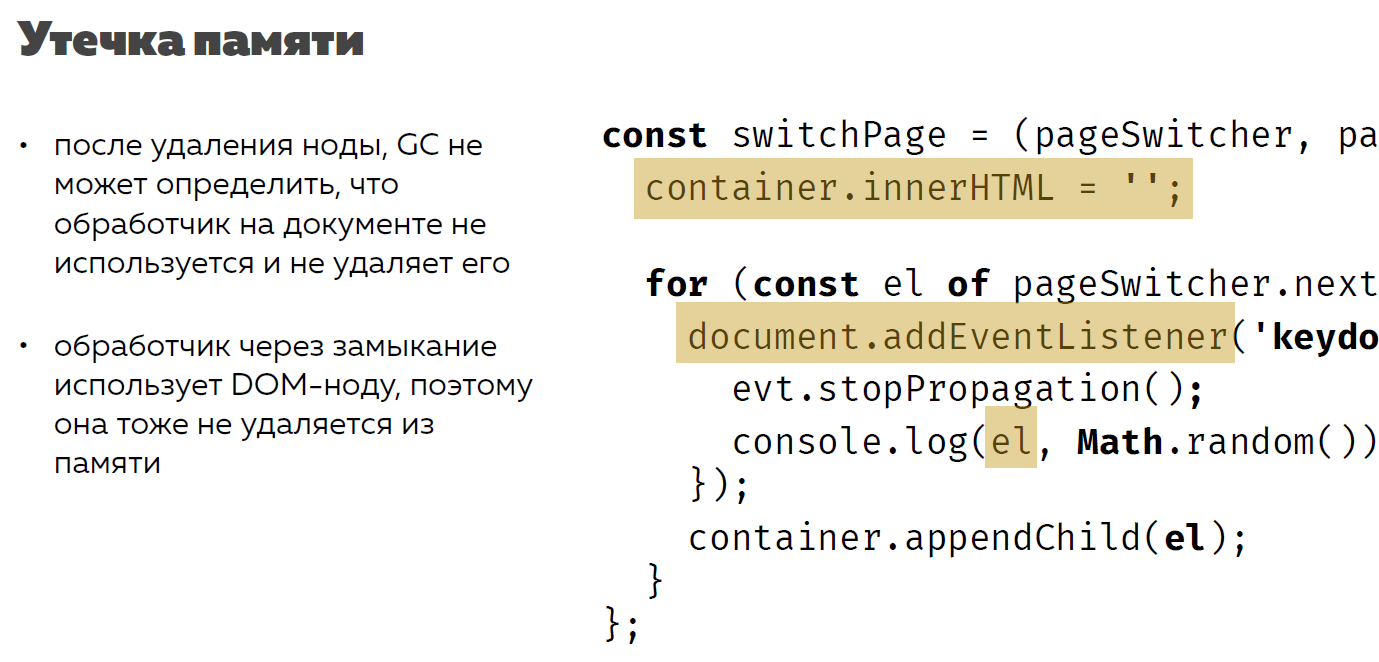

Let's look at a code example.

It is the same page switching, but every time I insert new elements into the page, I add a handler for each photo.

Suppose I need to react to the spacebar. Just in case, I attach the handler to the document so that the keydown event does not get lost. What happens in this case?

When I clear the container by resetting its HTML, I remove the DOM nodes from the DOM tree. But the handlers on the document remain, because the document stays on the page. Nothing happens to it. When garbage collection runs, the collector will not remove these handlers.

And what is written inside these handlers?

Inside these handlers a node is used. It turns out that the nodes will not be deleted from memory, because they are still referenced. This is called a memory leak. Logically, neither the nodes nor the handlers are needed anymore. But the collector does not know this, because from its point of view they are in use.
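The leak pattern above, together with one common way of avoiding it, can be sketched as follows. The fix shown here (keeping track of the document-level handlers and removing them when the page is cleared) is not stated in the talk; it is a widely used remedy added for illustration. The names `createPageHandlers` and `selected` are hypothetical.

```javascript
// Keeps track of handlers attached to `target` (the document in a browser)
// so that they can be removed together with the page they belong to.
function createPageHandlers(target) {
  const handlers = [];

  return {
    // Attaches a keydown handler whose closure references `node`.
    // While the handler stays registered, `node` cannot be garbage collected,
    // even after it has been removed from the DOM tree.
    add(node) {
      const onKeydown = (event) => {
        if (event.key === ' ') {
          node.selected = true;
        }
      };
      target.addEventListener('keydown', onKeydown);
      handlers.push(onKeydown);
    },

    // Call this when the page is cleared: without it the handlers (and the
    // nodes they reference) pile up with every page switch.
    clear() {
      for (const handler of handlers) {
        target.removeEventListener('keydown', handler);
      }
      handlers.length = 0;
    },

    get size() {
      return handlers.length;
    },
  };
}
```

Usage in a browser would be along the lines of `const pageHandlers = createPageHandlers(document)`, calling `pageHandlers.add(card)` for every card and `pageHandlers.clear()` right before wiping the container.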

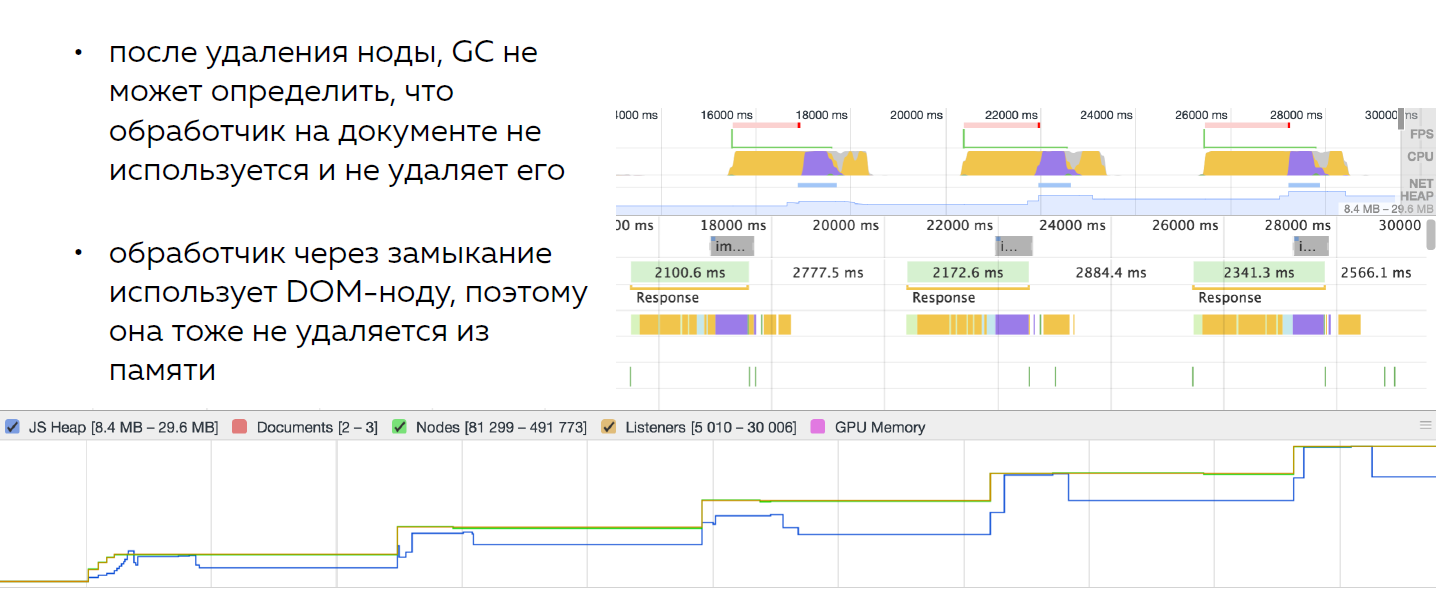

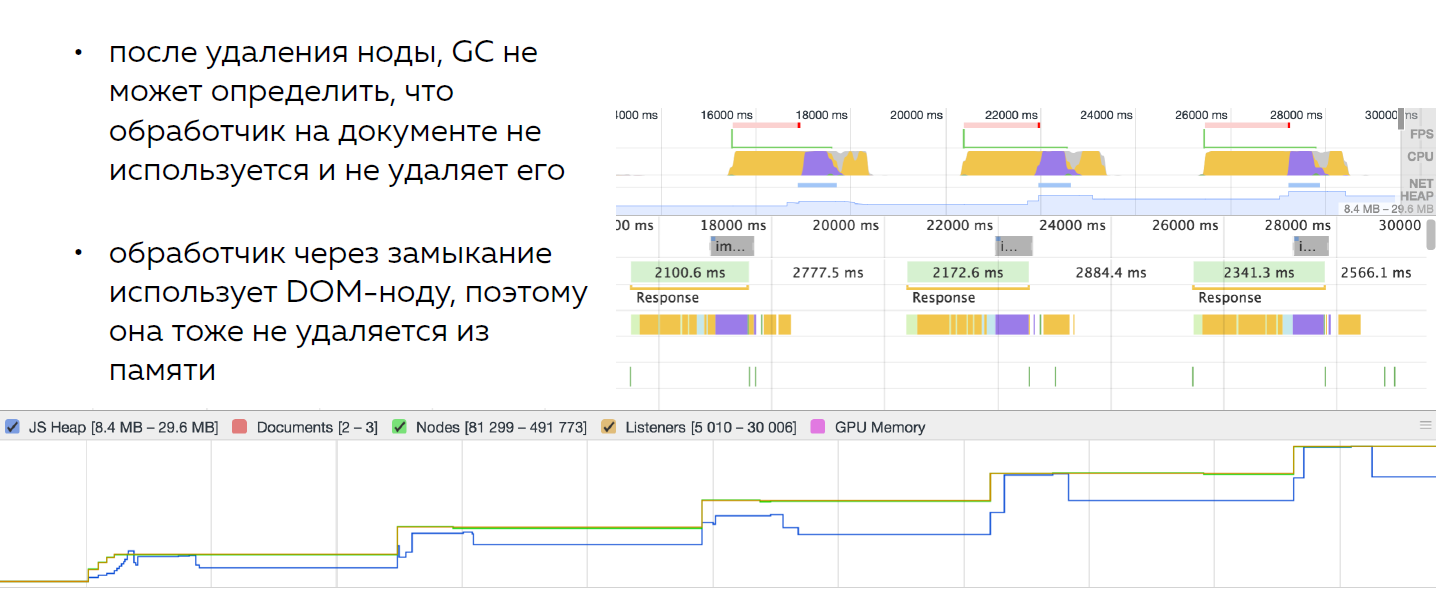

What happens in this case? Let's look at the graph.

Instead of waves that rise and fall, the graph has turned into a staircase that only goes up. With each page switch more and more memory is used, and it is never freed, because the handlers and nodes remain in memory.

Memory is not released, and the computer starts to slow down as well.

Why? Because heavy memory use is very bad. The processor gets bogged down performing operations on a large DOM.

The upshot is that when we try to optimize something with memory, we take a big risk.

Brendan Eich himself, the creator of JavaScript, said in a recent interview (about WebAssembly): “JavaScript is a good language. It is fast, and in terms of performance it can sometimes compete with C, but the problems start when garbage collection occurs, because we do not know when the collector will come and how long it will work.”

It turns out that memory optimizations may be good, but they are not reliable: sometimes they cost performance and things go badly.

So let's look at the other side of the picture I showed: how to optimize application speed from the point of view of the processor, the main computing device.

There are three main ways to relieve the processor: reduce the amount of computation, throttle it, or hand the computation over to something else.

Let's look at our picture with cats.

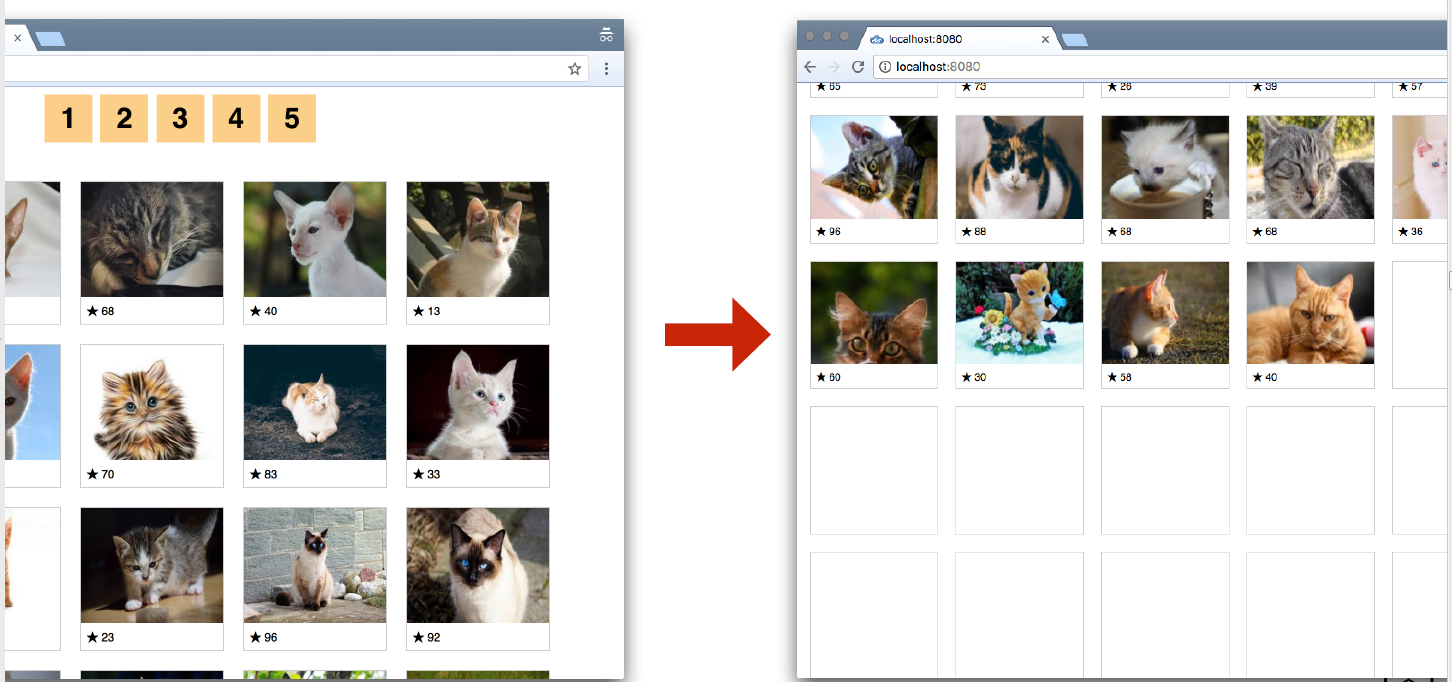

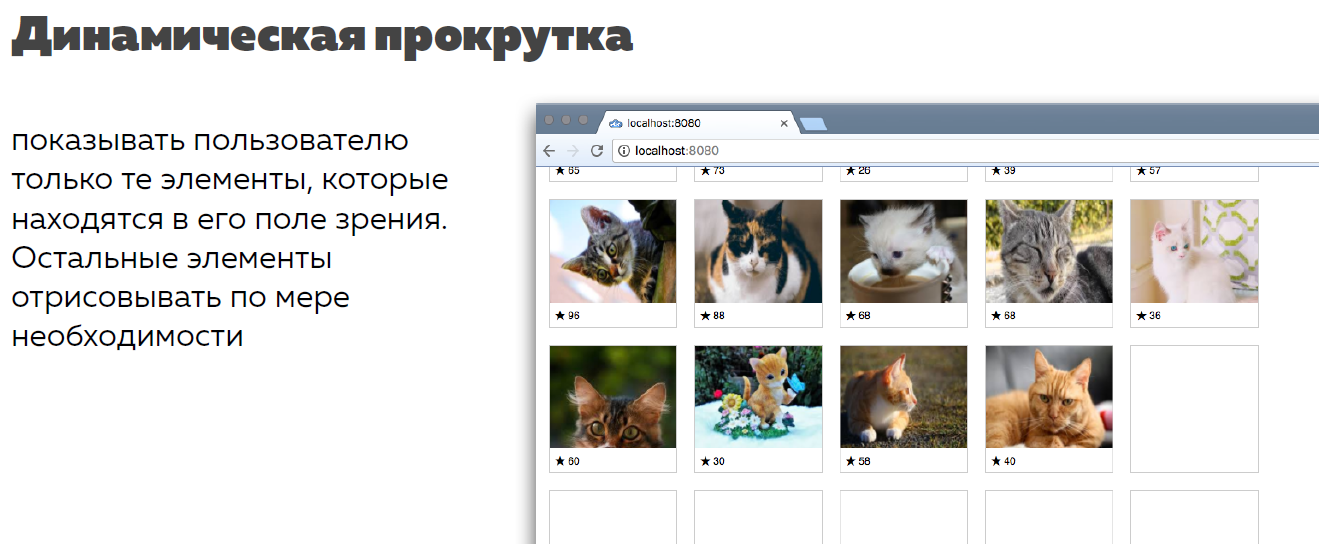

I said that I had five pages of 5,000 cats. That, by the way, is a realistic volume. Scroll through these cats for half a minute and you will have a DOM of 5,000 elements.

But if you think about it, this is not needed for the first load. Right now we see four rows and five columns of cats. That is 20 cats. So a user opening the page for the first time sees 20 cats, not all 5,000 pictures.

And the browser renders all 5,000. We draw a lot of excess. Why draw 5,000 cats if we show 20?

Fine, we can even leave some reserve: the user may scroll the site and needs to be shown something there as well. But if you draw 100 cats, that is already 5 screens.

So the first thing you can do is reduce the size of the DOM. This is the easiest way. You shrink the DOM, and everything works faster.

Let me prove it with the profiler.

I draw the first page with 5,000 elements and use the profiler to record the loading process. By the way, there is a “Reload” button: if you click it, the profiler records the page load. It reloads the page and stops recording once the page is fully rendered and everything is ready. Rendering 5,000 cats takes four seconds.

Here I also recorded screenshots. The user sees the first cats at the end of those four seconds. A little earlier, somewhere at the end of the third second, the image wrappers appear.

If you reduce the page to 100 elements (five screens), loading takes only 0.5 seconds. Moreover, the user immediately sees the finished result, without the wrappers.

So the first step is to reduce the amount of computation.

The second point is throttling.

What is throttling?

Imagine that your calculations run at a certain rate and do not fit into the frame rate we have chosen. We have already talked about this.

Throttling is a way of thinking where we take a step back and ask: do we really need the refresh rate that we have?

Suppose it is 60 FPS, and operations are performed at that rate. But the calculations take more than 16.7 ms. Here you need to ask whether these calculations really have to fit into 16.7 ms. If not, we can lower the rate to the one we need.

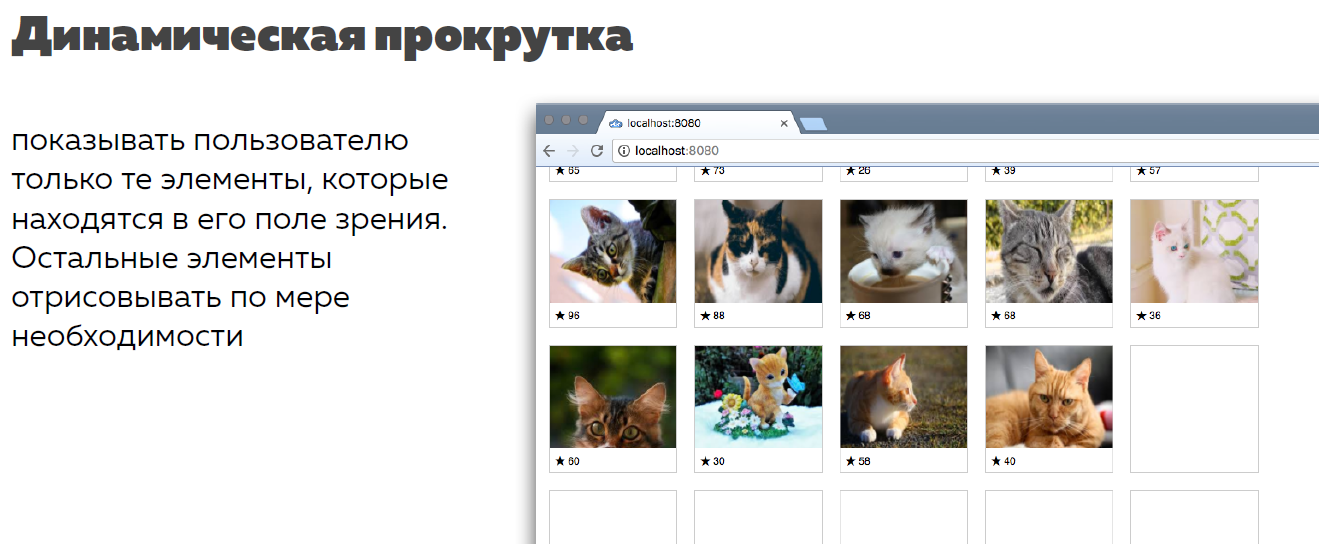

Let's take an example. We have just optimized the cats and now show 100 cats instead of 5,000. Let's change the way the user interacts with them. We will not show big pages at once; we will show cats as they are needed.

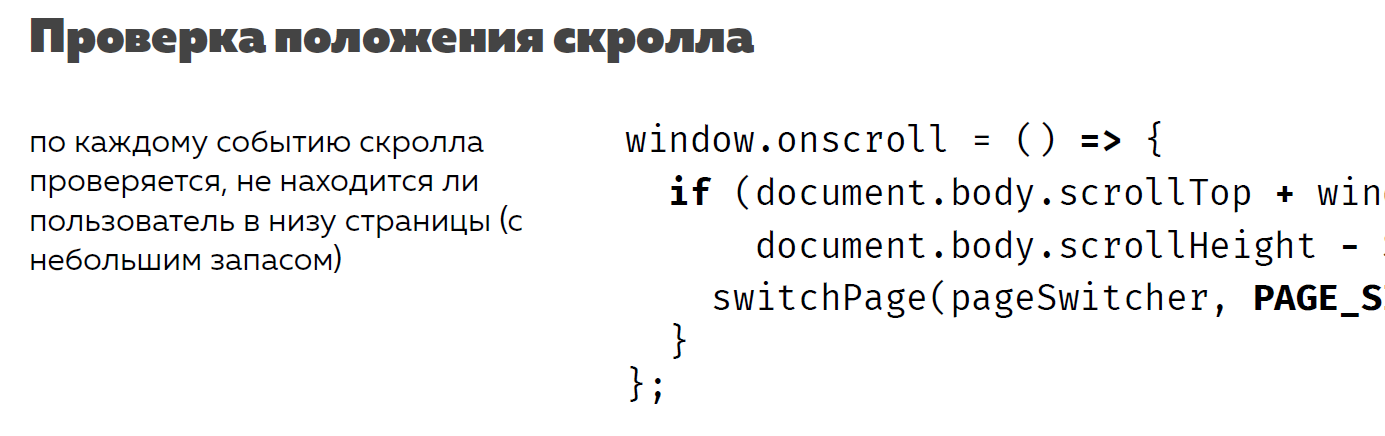

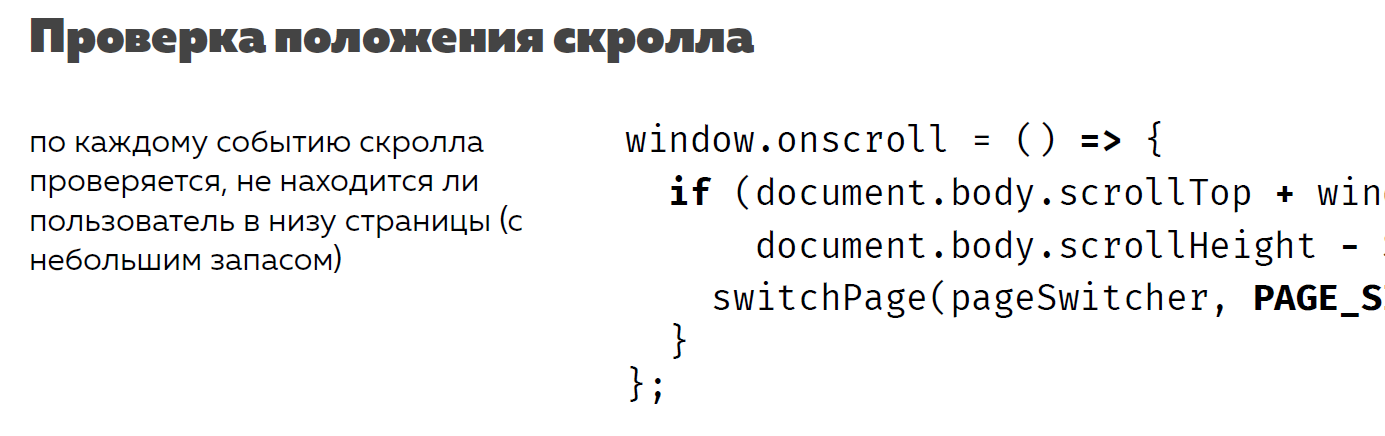

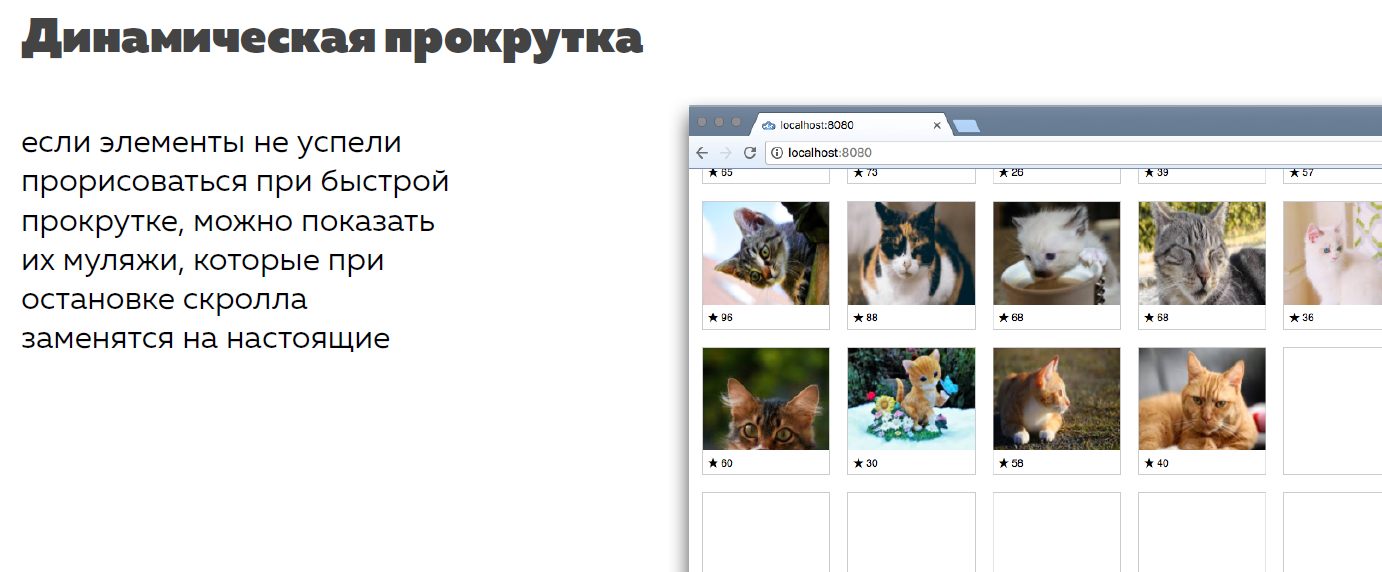

For this you need dynamic scrolling. We scroll, reach the bottom, and show the next page. The code here does just that.

But I added some statistics to this code. I recorded the delta in pixels, that is, how often the scroll event fires, and a counter of total scroll events. When I scrolled the page from top to bottom, at a height of 1,000 px, the scroll event fired every 4 px. From top to bottom there were 500 checks.
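A scroll check with this kind of bookkeeping could look roughly like the sketch below. The original code is not part of the text, so everything here is an assumption: the name `createScrollWatcher`, the shape of `stats`, and the choice to pass scroll position and sizes in as plain numbers.

```javascript
// Watches scroll positions, collects statistics, and asks for the next page
// once the bottom of the page is reached.
function createScrollWatcher(loadNextPage, threshold = 0) {
  const stats = { events: 0, lastY: 0, deltas: [] };

  function onScroll(scrollY, viewportHeight, pageHeight) {
    // Statistics: how many scroll events fired and how far apart they were.
    stats.events += 1;
    stats.deltas.push(Math.abs(scrollY - stats.lastY));
    stats.lastY = scrollY;

    // The actual check: has the viewport reached the bottom of the page?
    if (scrollY + viewportHeight >= pageHeight - threshold) {
      loadNextPage();
    }
  }

  return { onScroll, stats };
}
```

In a browser it would be wired up with something like `window.addEventListener('scroll', () => watcher.onScroll(window.scrollY, window.innerHeight, document.documentElement.scrollHeight))`. Note that the check runs on every single scroll event.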

Why so many? Because scroll fires at that very 60 FPS. And yesterday Apple showed an iPad with a 120 Hz screen, which means browsers will now try to deliver 120 FPS rather than 60. So you will have to think about this even more. Remember Donkey from Shrek, who kept asking “Are we there yet? Are we there yet?” My check behaves the same way. It runs too often and too insistently.

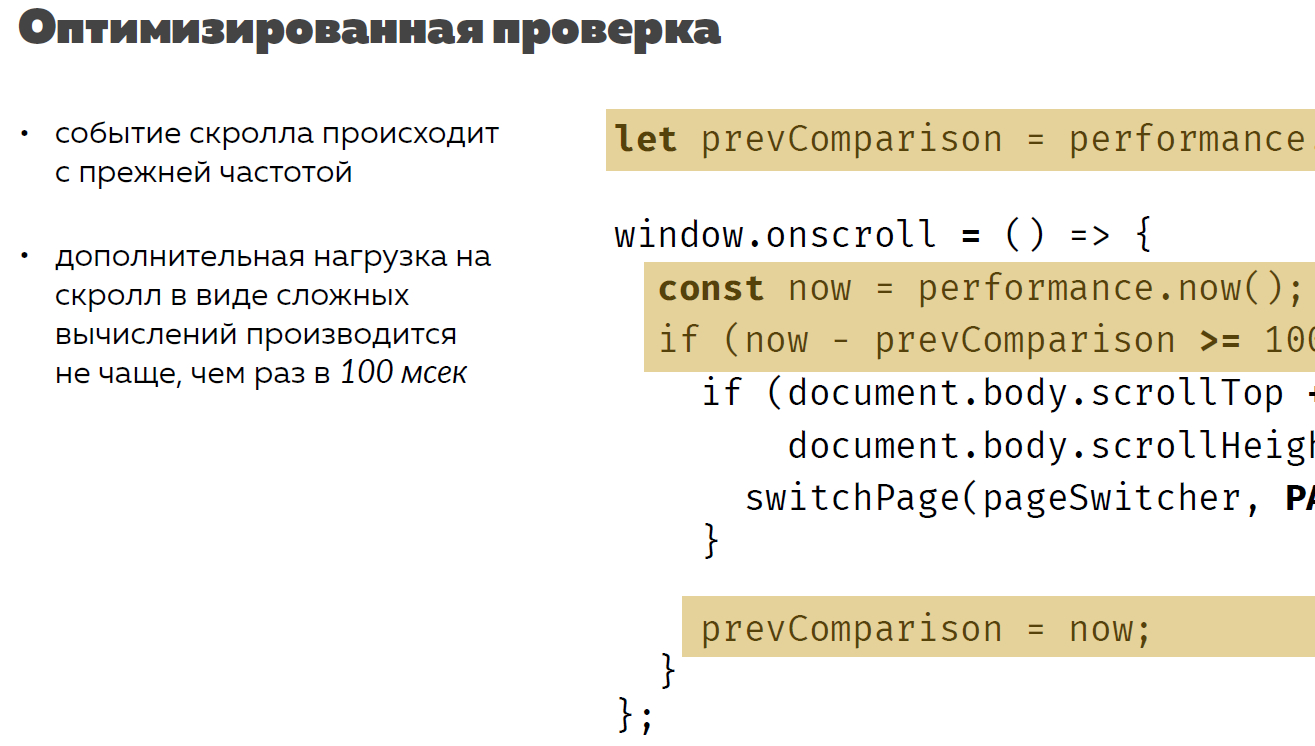

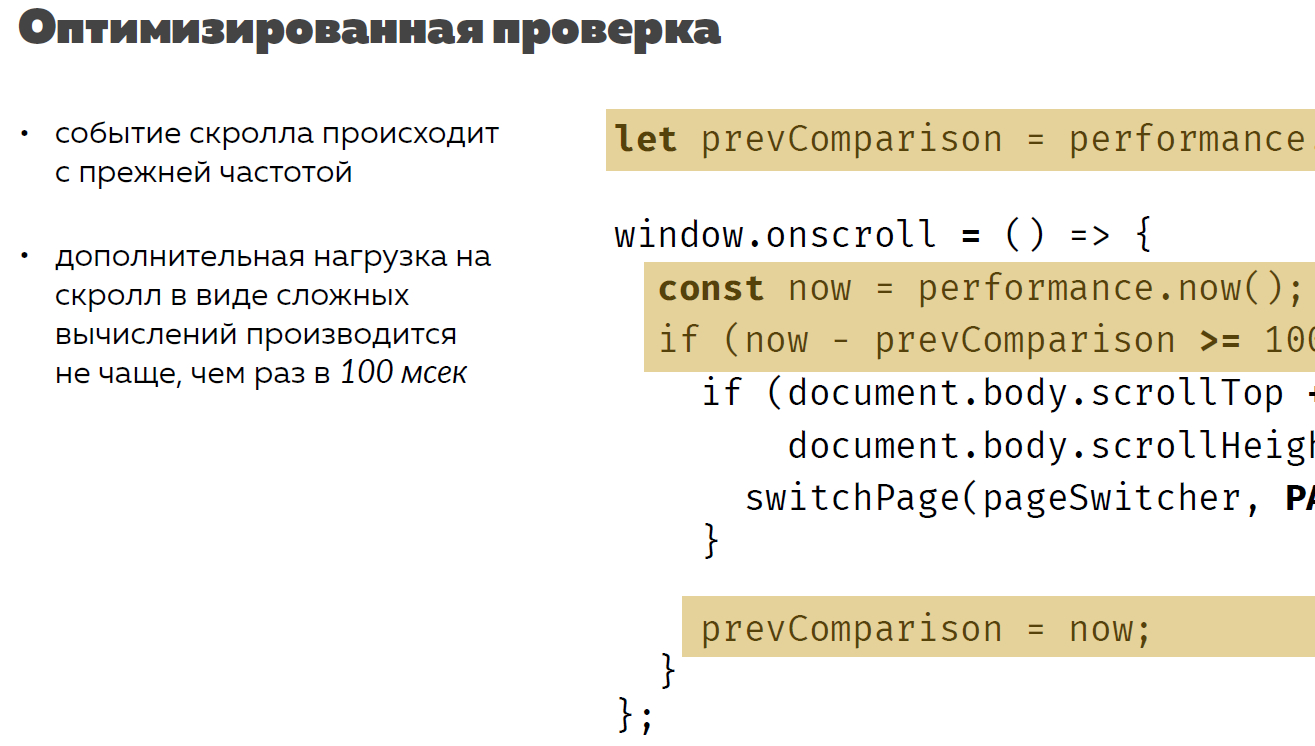

Throttling means reducing the number of frames we act on. I do not need to check every 4 px whether the page has been scrolled to the bottom. I can do it, say, once every 100 ms.

Here I added a small check based on dates.

I look at how much time has passed since the last check and only then run the next one. What is the point? The scroll event keeps firing, but I do not use every scroll frame, only some of them: those that meet my condition.

With 100 ms, the check ran every 20-30 px, and only 100 checks occurred from top to bottom. In principle, this is fine: asking that often whether we are at the bottom is enough.
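The date-based check described above is essentially a small throttle helper. Below is a minimal sketch; the name `throttle` and the injectable `now` clock are assumptions made so the example is self-contained and testable, not the speaker's actual code.

```javascript
// Returns a wrapper around `fn` that runs it at most once per `intervalMs`.
// Calls that arrive too early are simply skipped.
// `now` defaults to Date.now; it is a parameter only for testing.
function throttle(fn, intervalMs, now = Date.now) {
  let lastRun = -Infinity;

  return function throttled(...args) {
    const current = now();
    if (current - lastRun >= intervalMs) {
      lastRun = current;
      fn.apply(this, args);
    }
    // Otherwise the event still happened, but we deliberately ignore it.
  };
}
```

A scroll listener wrapped as `throttle(checkBottom, 100)`, where `checkBottom` stands for whatever bottom-of-page check the page uses, keeps receiving every scroll event but performs the check only about ten times per second.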

This is the second way to reduce calculations: check the frame rate. Maybe a specific task does not need 60 FPS and the rate can be lowered.

The third way is to hand the calculations off.

How can calculations be taken off the processor? There are several options: draw with the video card, compute on the server, or compute in a parallel thread.

Consider each of these methods.

To begin with, I will tell you why browser games are made on canvas rather than SVG.

Browser games are a thing with a huge number of disposable elements. You create them and throw them away, create them and throw them away. In such cases, when there is complex interaction and a large number of small short-lived elements, it makes sense to use canvas.

Let's compare the ideologies of SVG and canvas.

In SVG, drawing something requires a DOM element. SVG is DOM. You describe the image as markup, but, as we have already figured out, all markup ends up in JS as a DOM tree: as objects, each with a class list and all the other properties.

When you draw on canvas, you simply operate on pixels. You have methods that describe the interaction with canvas. The result is pixels on the screen and nothing more.

Therefore, on canvas we have to invent our own data structures, since there is no DOM tree invented for us by someone else. But in return they may suit the task somewhat better than the structures SVG offers.

Since SVG has a standard structure, it has an API. That is, you can do all sorts of things with SVG, for example update elements one at a time or animate them.

On canvas none of this is available. You have to write everything by hand, as in assembly language. But in return you can get a performance boost. After all, SVG is DOM, and it is computed on the processor, while pixel drawing is done on the video card.

Let's look at an example: we will rotate the Earth a little.

The resolution here is ridiculous by modern standards, 800x600, nothing at all. The Earth you see is vector graphics. All the countries are described by one complex path. I did not draw each country as a separate object. They are all packed into a single outline of a certain shape.

I will update the frames via requestAnimationFrame. That is, the browser itself decides what my FPS is for drawing this thing. It figures out on its own how fast it can draw one frame.

As the animation, I will rotate the Earth through 360°, from London to London. It was easier to write it this way: I need to put 0 into the array, because that is London's coordinate.
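The animation loop described above can be sketched as follows. Only the loop is shown; how the outline is projected and drawn is left to a `draw` callback, because that code is not part of the text. The name `rotateOnce`, the one-degree step per frame, and the injectable `schedule` parameter are assumptions of this sketch.

```javascript
// Rotates from 0 to 359 degrees, one degree per frame, then reports completion.
// `draw(angle)` is expected to render the globe for the given rotation angle.
// `schedule` defaults to requestAnimationFrame, so the browser decides
// how quickly the frames actually follow each other.
function rotateOnce(draw, onDone, schedule = globalThis.requestAnimationFrame) {
  let angle = 0; // 0 is the starting longitude (London in the talk's example)

  function frame() {
    draw(angle);
    angle += 1;
    if (angle < 360) {
      schedule(frame); // next frame whenever the browser is ready
    } else {
      onDone();
    }
  }

  schedule(frame);
}
```

Timing the call, for example with `performance.now()` before `rotateOnce(...)` and inside `onDone`, gives the duration of the 360 frames and therefore the average frame rate.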

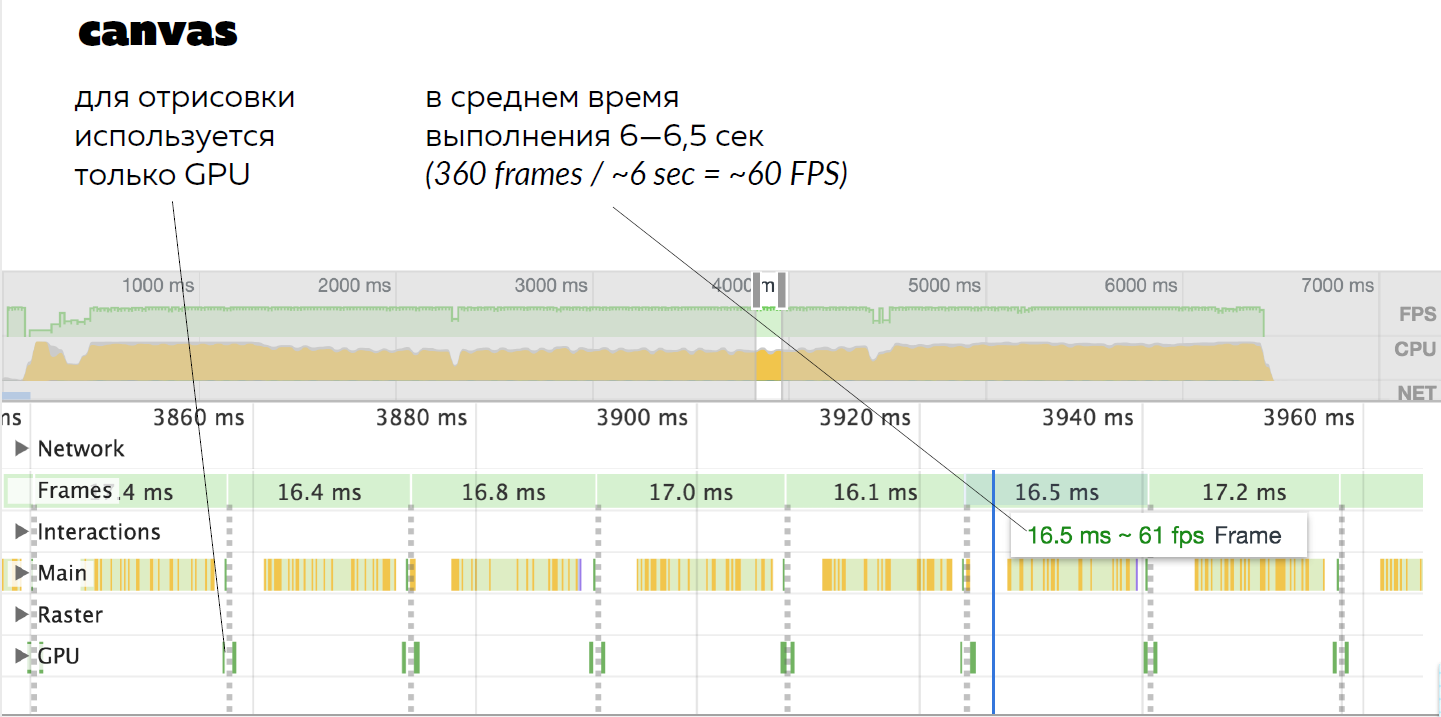

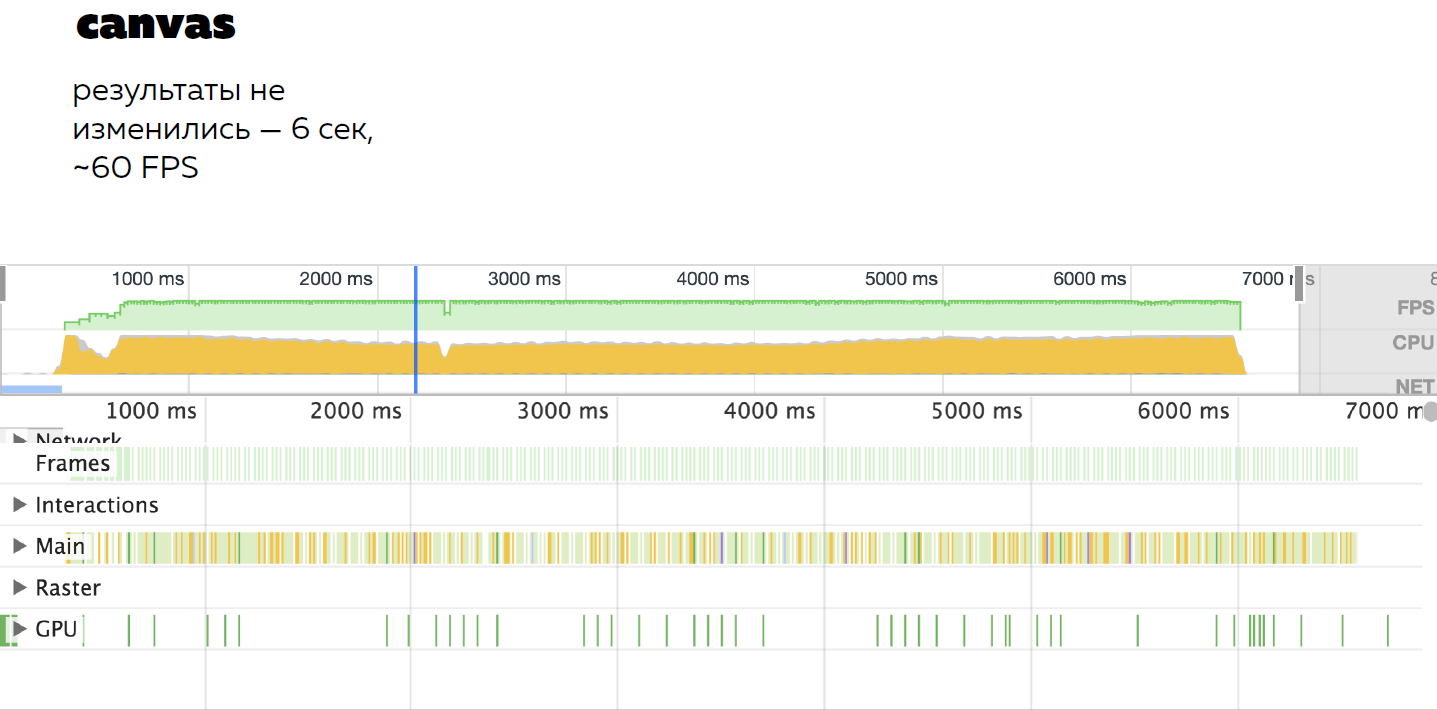

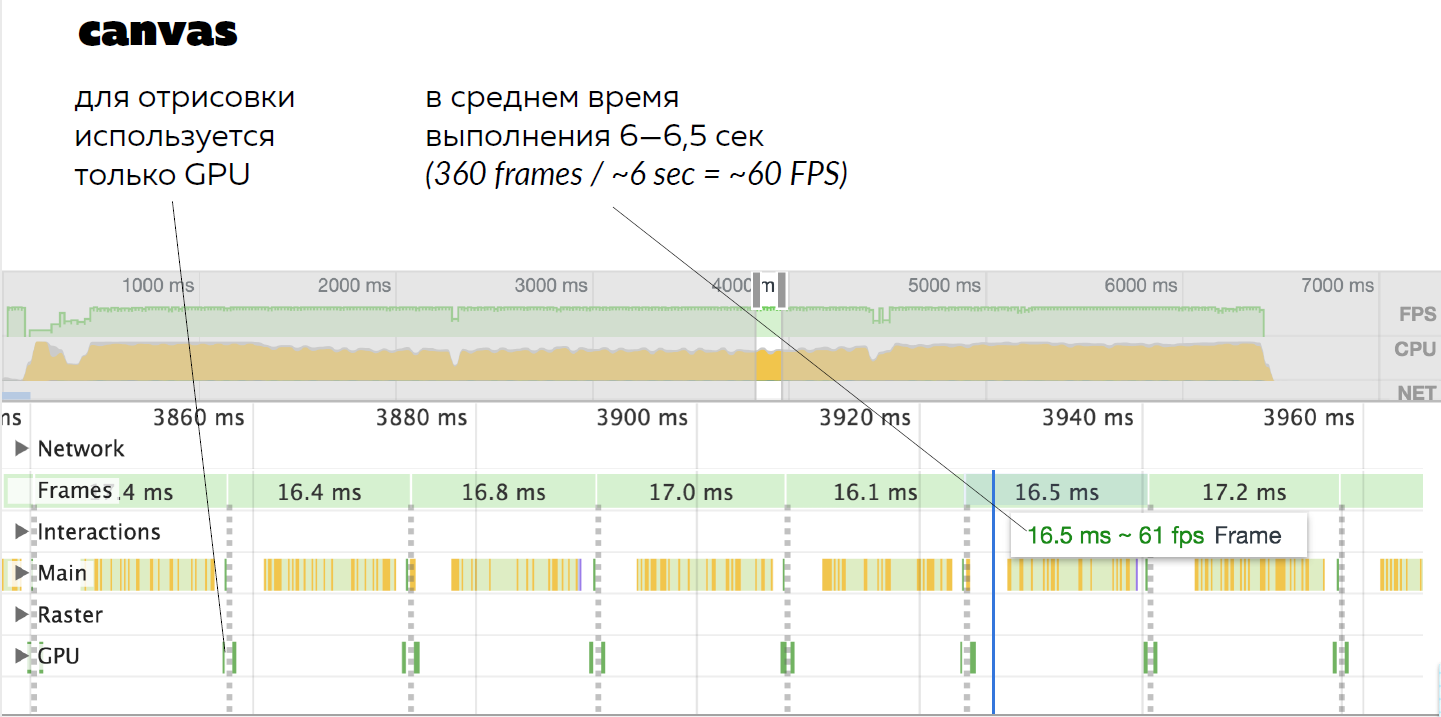

Here I recorded a profile of the canvas version. First, look at the bottom chart. Only the GPU is used here. That is, only the video card is used for the animation. Nothing above it is busy.

Except for the Main track. That is the processor itself changing the number in the array from 0 to 360 and recalculating the outline for each angle: it takes the known coordinates of the countries and applies the formula that projects them onto the circle.

After that only the video card is used.

In the end I ran several tests, and on average the animation lasts six seconds. Simple arithmetic, 360 frames (one per degree) in 6 seconds, gives 60 FPS. All is well.

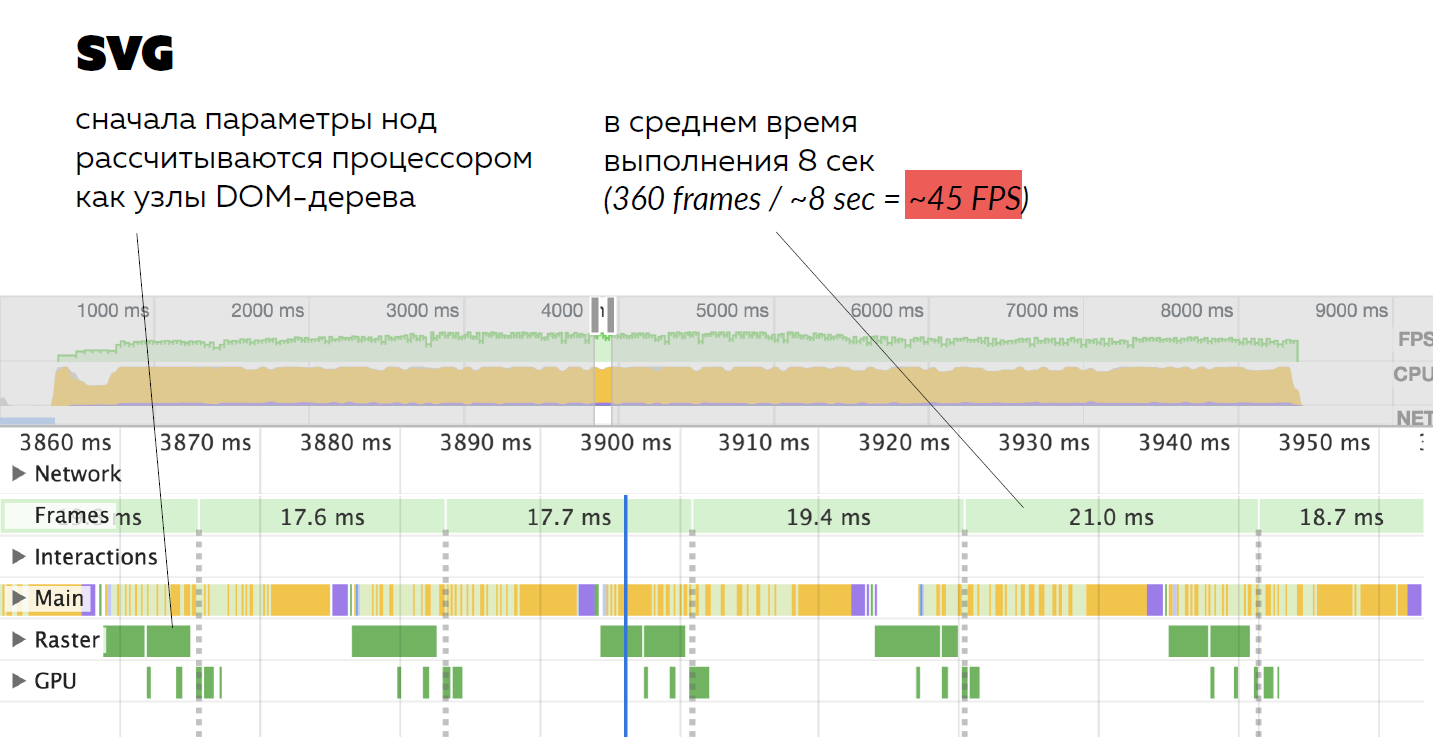

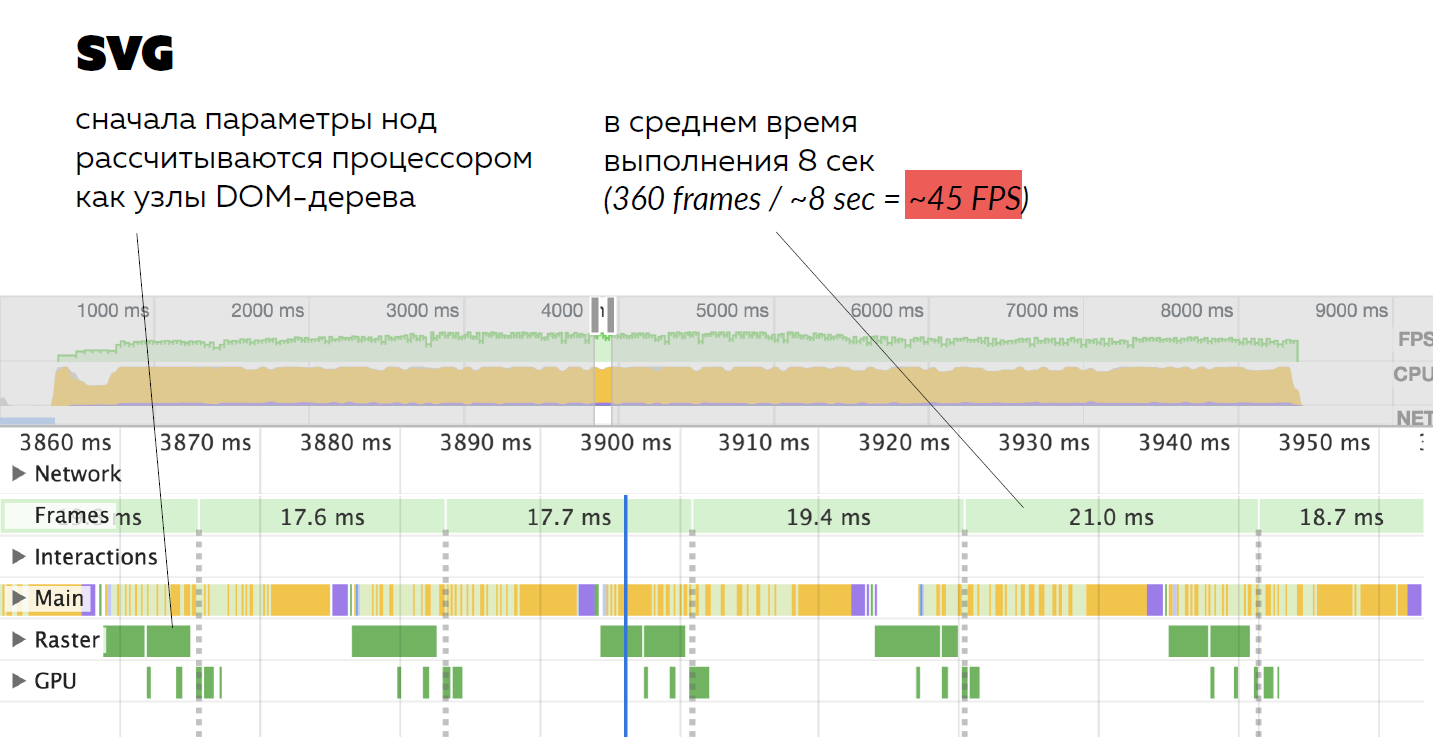

SVG did a little worse. Why? If you look at the last two rows, you will see a filled Raster track. This means that SVG created a DOM object for each frame, computed all of its parameters entirely on the processor without the GPU, and only then rendered it as pixels using the video card.

That is, first a DOM object that is long and hard to compute, and then pixels on the screen. That is a serious waste of performance. The animation took about eight seconds, which is about 45 FPS.

I circled 45 FPS in red because it is, as they say, beyond the pale. You may say: “What are you talking about? Even movies run at 24 FPS.”

Not true. Even films shot at 24 FPS at the dawn of cinema were shown at 48 FPS. The idea comes from Thomas Edison: “Yes, a person will perceive motion at 24 FPS, but he will see flicker from the image updating.”

That is, the viewer sees a moving picture but notices the flicker. To prevent this, you need at least 48 FPS.

To achieve that, in old films each frame was shown twice.

So even at the dawn of cinema films were shown at 48 FPS. But SVG failed the performance test, and that is bad.

It turns out that in certain cases canvas is better than SVG. For example, if you have many disposable elements that need to be thrown away, it is better to use canvas.

Another test.

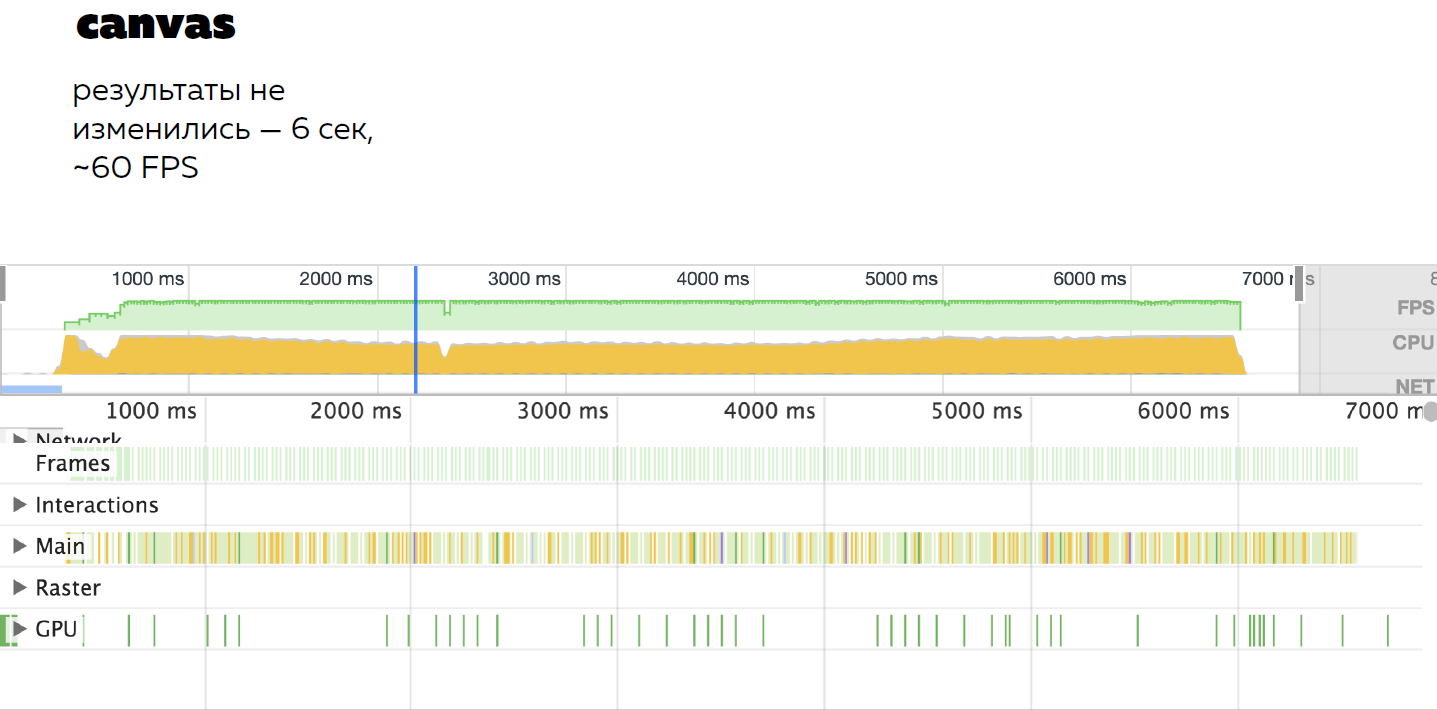

While I was preparing and running all the code samples, I accidentally left out one line: clearing the previous frame. I got this strange picture. I decided not just to fix the bug but to see where my mistake would lead, and measured the performance of this thing.

When I measured the Earth's rotation on canvas without clearing the canvas, I got 60 FPS. I rechecked five times in case I had the wrong picture. 60 FPS: canvas does not care.

How do you think SVG did?

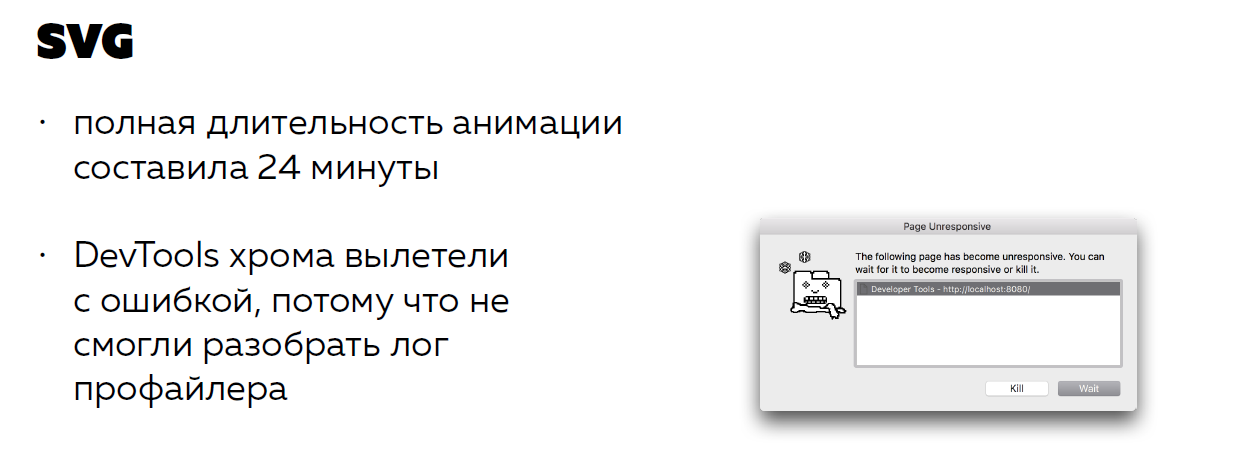

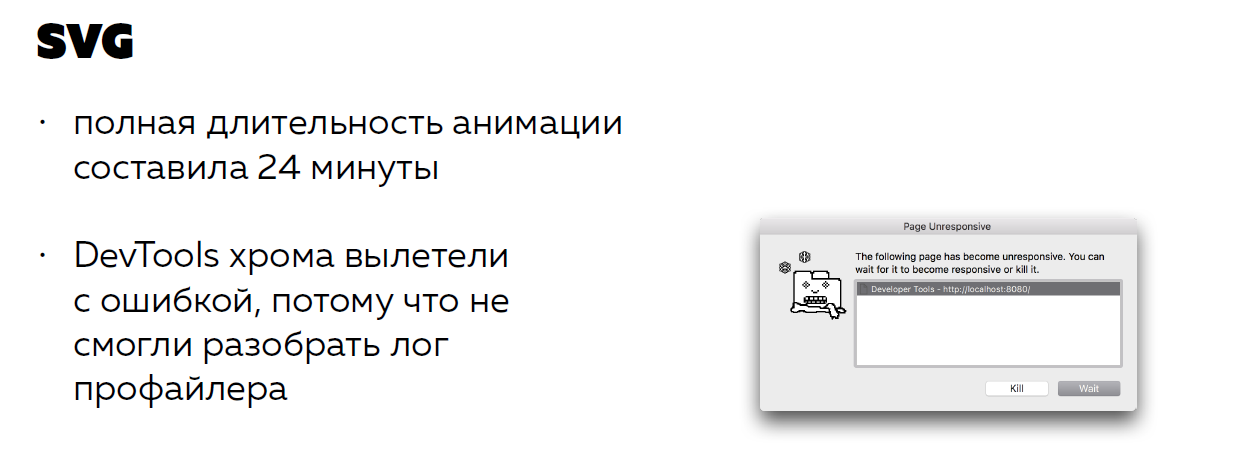

The full animation took 24 minutes! Nothing was cleaned up, and a classic leak occurred. Memory grew and grew. I wanted to show the profile, but for the first time I saw a crash screen in the profiler itself. That is very strange in general.

To explain what happened, I will show the profile of the regular SVG version.

Memory usage is at the bottom: every two seconds memory fills up, is cleaned, and fills up again. Now stack these steps one on top of another and imagine what was there by the end of the 24 minutes, during which I managed to have tea and go somewhere.

In general, if you have a lot of disposable elements, complex graphics, and non-trivial interaction, it is better to use canvas. Hardly anyone would describe the dust particles flying off a tree hit by a fireball as DOM nodes, each with its own class list.

No, canvas is better.

Another way to offload calculations is to move them to the server. We have just said that canvas gives good, fast graphics. But once I had a task to draw a heat map over a city: how densely are restaurants located there?

I thought: graphics? Graphics. SVG does not fit, because the update step is 1 px, that is, every pixel means something. So it has to be canvas.

I solved the task on canvas. A data structure arrived, I walked through it and put a dot on the map with a color of a certain saturation.

What happened? In fact, I did not like the solution much because:

Then I thought: well, I am a smart developer, I solved an awfully hard task and drew a heat map, but it is worth thinking about the user and not doing this work on their machine, but simply:

That is, instead of loading the user's processor for the sake of my own vanity, I went to the backend and asked it to generate pictures, that is, to load the processor of the backend machine, which is designed for this. The user's processor was loaded only with reading pictures from the cache.

As you know, pictures have another advantage: they do not need to be recalculated every time. They are stored and drawn, and all is well.

The third way is to move calculations to a parallel thread. For the frontend this is a fairly new approach; it used to be something that people writing in other languages talked about.

Let's look at a task. There is an editor, for example Ace. Since it is a fully client-side editor, the client side is responsible for basics such as blinking the cursor, scrolling, moving the cursor, and so on.

But besides this, you need to highlight the code or say: “Dude, you put the wrong operator in the wrong place,” and flag the error.

To say that the user put the wrong operator in the wrong place, you need to build an AST, walk it, analyze it, and so on.

What did the Ace developers do? They said: “In the main thread we keep only the essentials. Let the cursor blink and move, and the screen scroll. This will happen without delay. The user will be satisfied. And we will hand all the remaining extras over to a Worker.”

A Worker is a tool that lets you run a JavaScript file in a separate browser thread and keep costly work, such as building a tree, from eating into the main thread's performance.

There is one limitation: you cannot work with the DOM inside a Worker. That is, you work with the DOM in your own thread and hand complex calculations off to the parallel one. This will improve the performance of your frontend.
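The split between the main thread and a Worker can be sketched like this. It is not how Ace is implemented: a simple bracket check stands in for the real syntax analysis, and the names `findUnbalancedBracket` and `analyzeInWorker`, as well as the message shape `{ source }`, are assumptions for illustration.

```javascript
// Work meant to run inside the Worker: a stand-in for real syntax analysis.
// Returns the index of the first mismatched closing bracket, or of the
// innermost bracket left unclosed, or -1 if the brackets are balanced.
function findUnbalancedBracket(source) {
  const openerFor = { ')': '(', ']': '[', '}': '{' };
  const stack = [];
  for (let i = 0; i < source.length; i++) {
    const ch = source[i];
    if (ch === '(' || ch === '[' || ch === '{') {
      stack.push({ ch, i });
    } else if (openerFor[ch]) {
      const open = stack.pop();
      if (!open || open.ch !== openerFor[ch]) return i;
    }
  }
  return stack.length ? stack[stack.length - 1].i : -1;
}

// Main-thread side: sends the text to the worker and receives the result.
// No DOM work happens in the worker; only plain data crosses the boundary.
function analyzeInWorker(worker, source, onResult) {
  worker.onmessage = (event) => onResult(event.data);
  worker.postMessage({ source });
}
```

In a browser the worker file (a hypothetical `lint-worker.js`) would contain the analysis function plus `self.onmessage = (event) => self.postMessage(findUnbalancedBracket(event.data.source))`, and the page would call `analyzeInWorker(new Worker('lint-worker.js'), text, showError)`, where `showError` is whatever updates the editor's DOM.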

To summarize: how can calculations be handed off in practice?

I have been talking for a long time about how to optimize and reduce computation. But sometimes that cannot be done.

For example, no real server on the Internet will answer me faster than 100 ms. I cannot do anything about it. Even if I have a beautiful interface thought out to the smallest detail and all calculations are optimized, there will still be delays, and users will see lag.

What can be done about delays? There are two ways: give the user honest feedback, or deceive the user a little.

Now I will tell you how this is done.

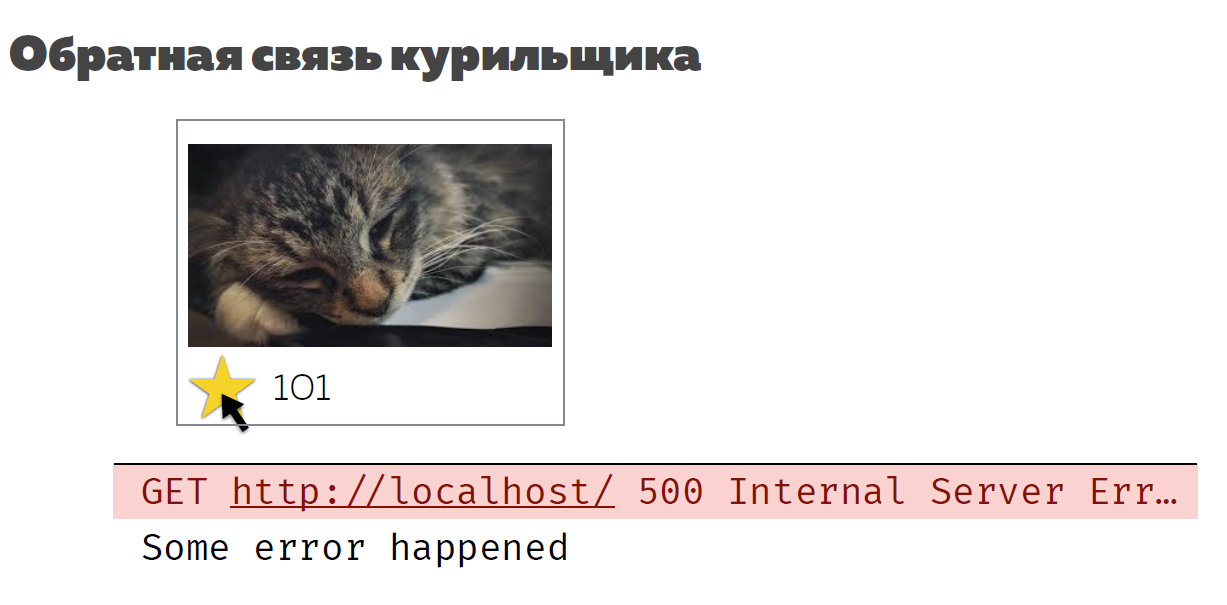

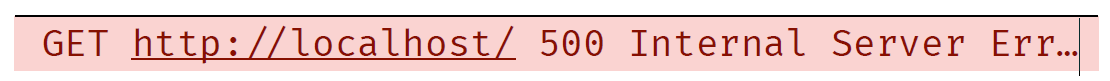

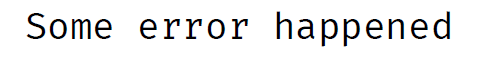

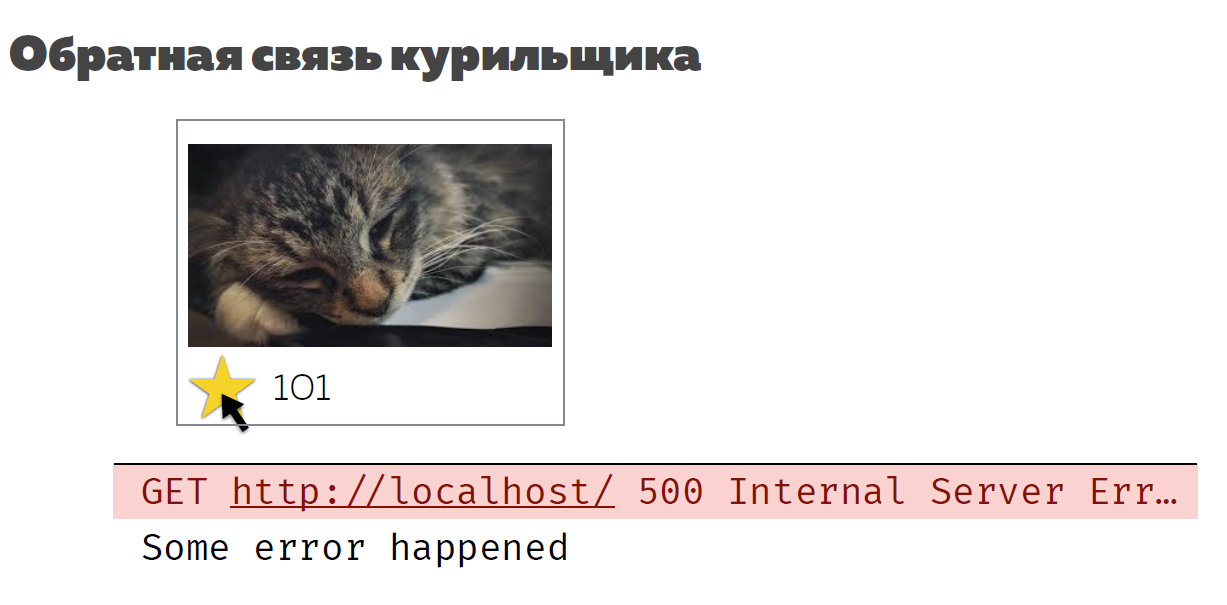

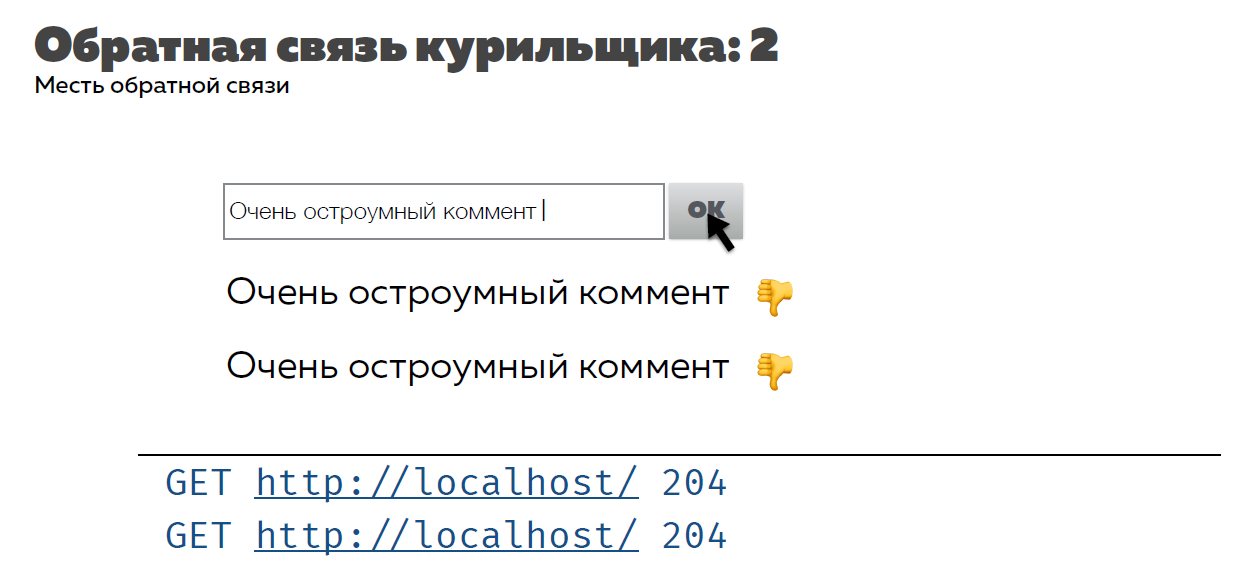

Consider proper feedback. Let's go back to the Instagram with cats and add some interaction.

There is a cat, and the user wants to like it. They move the mouse over, click the star, and I show: yes, you liked it, well done! There is one more like! But this is not true. A like is not a like until it is a like on the server.

And servers sometimes do this:

What do all developers do in this case?

Nobody gives any transparent feedback.

What should be done? I am not saying you should forget about Optimistic UI; I am pointing out a direction. If the user clicked something and the result is not instant, you need to show that, for example by highlighting the star. At least show: “Wait. Yes, we understood that you clicked, but not everything is ready yet.”

When something goes wrong, that needs a separate status too. That is, we need to be transparent and show the user what is happening. As I said, we are responsible for the user's impression of the site. And the user is not a fool for not understanding what gets printed to the console.

When I was preparing this talk, I called my mother and told her what I would be talking about. She told me a joke:

- Why did you bring me 10 pizzas? I ordered one.

- Yes, you ordered one, but you pressed the button 10 times.

It seems like a silly joke, but I run into this myself. Sometimes I write a comment, press the button, and the button does not even depress; nothing happens. I think: is it buggy or what? I need to click again.

But in fact everything worked, the request went to the server (both requests did), and both comments were rendered. And users do not like seeing two identical comments.

For some reason I am considered the guilty one, although in fact the programmer is to blame. It would have been enough to show feedback: yes, please do not press this button anymore, we understood that you pressed it.

When the response arrives, you can even clear the input, and the user intuitively understands that another message can be entered.
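The feedback described above boils down to a few explicit states: waiting, done, and failed, plus ignoring repeated clicks while a request is in flight. Here is a minimal sketch for the like button; the state names, the function `createLikeButton`, and the callback-style `sendLike(callback)` are assumptions for illustration rather than anything prescribed by the talk.

```javascript
// A like button with transparent states.
// `sendLike(done)` performs the request and calls `done(error)` when finished.
// `render(state)` updates the UI: e.g. highlight the star while 'pending',
// fill it when 'liked', show an error mark when 'failed'.
function createLikeButton(sendLike, render) {
  let state = 'idle'; // 'idle' | 'pending' | 'liked' | 'failed'

  function setState(next) {
    state = next;
    render(state);
  }

  return {
    click() {
      // Repeated clicks while waiting (or after success) send nothing:
      // one click means one request, not ten pizzas.
      if (state === 'pending' || state === 'liked') return;
      setState('pending'); // "we understood that you clicked, please wait"
      sendLike((error) => setState(error ? 'failed' : 'liked'));
    },
    get state() {
      return state;
    },
  };
}
```

After a failure the button returns to a clickable state, so the user can retry, and at every moment the interface says what is actually happening.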

At the development stage this costs next to nothing, but the interface gets better and the delay becomes more transparent. The user will not be offended. They know that a trip to the server is needed. As Louis C.K. said: “Wait! It is flying into space!”, and meanwhile you complain that a site does not appear within a second.

The second way is to deceive the user slightly.

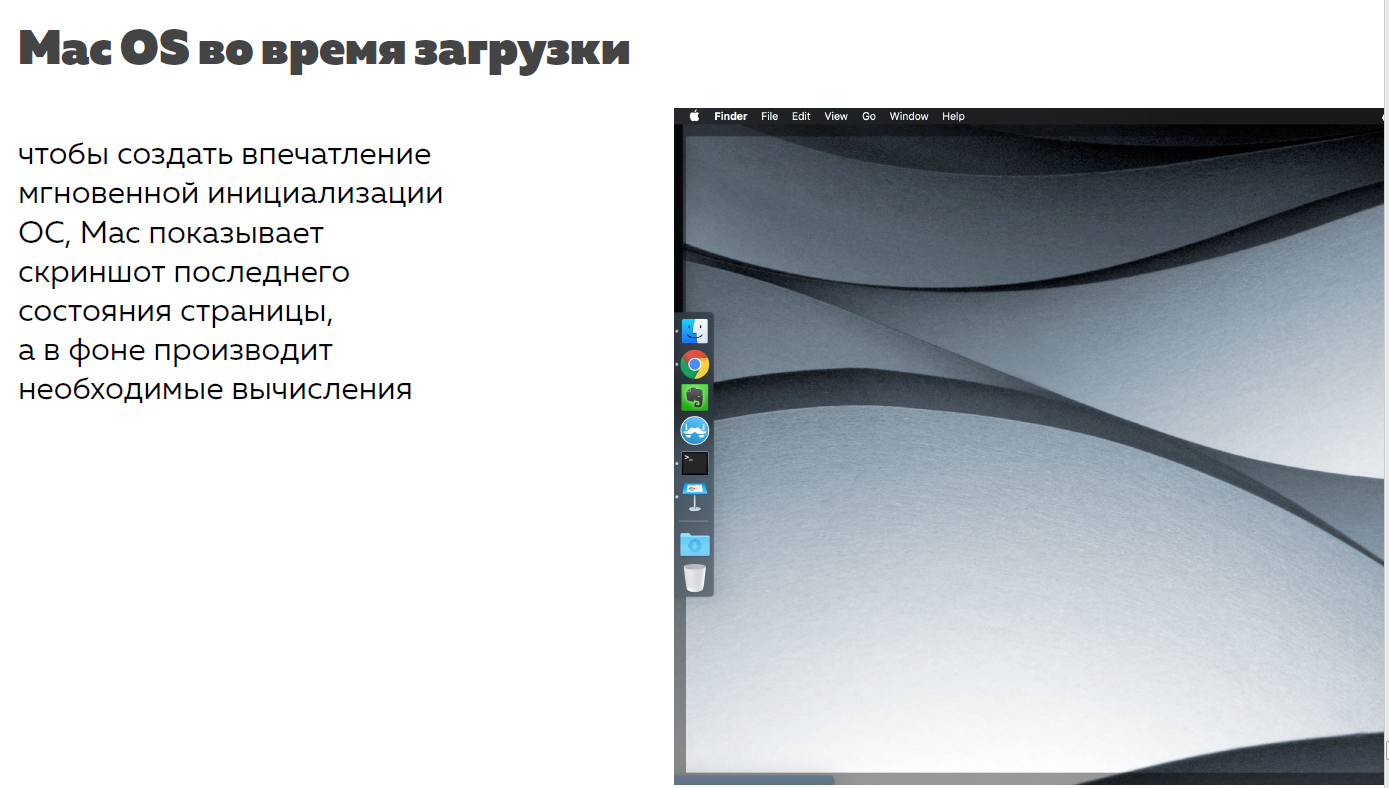

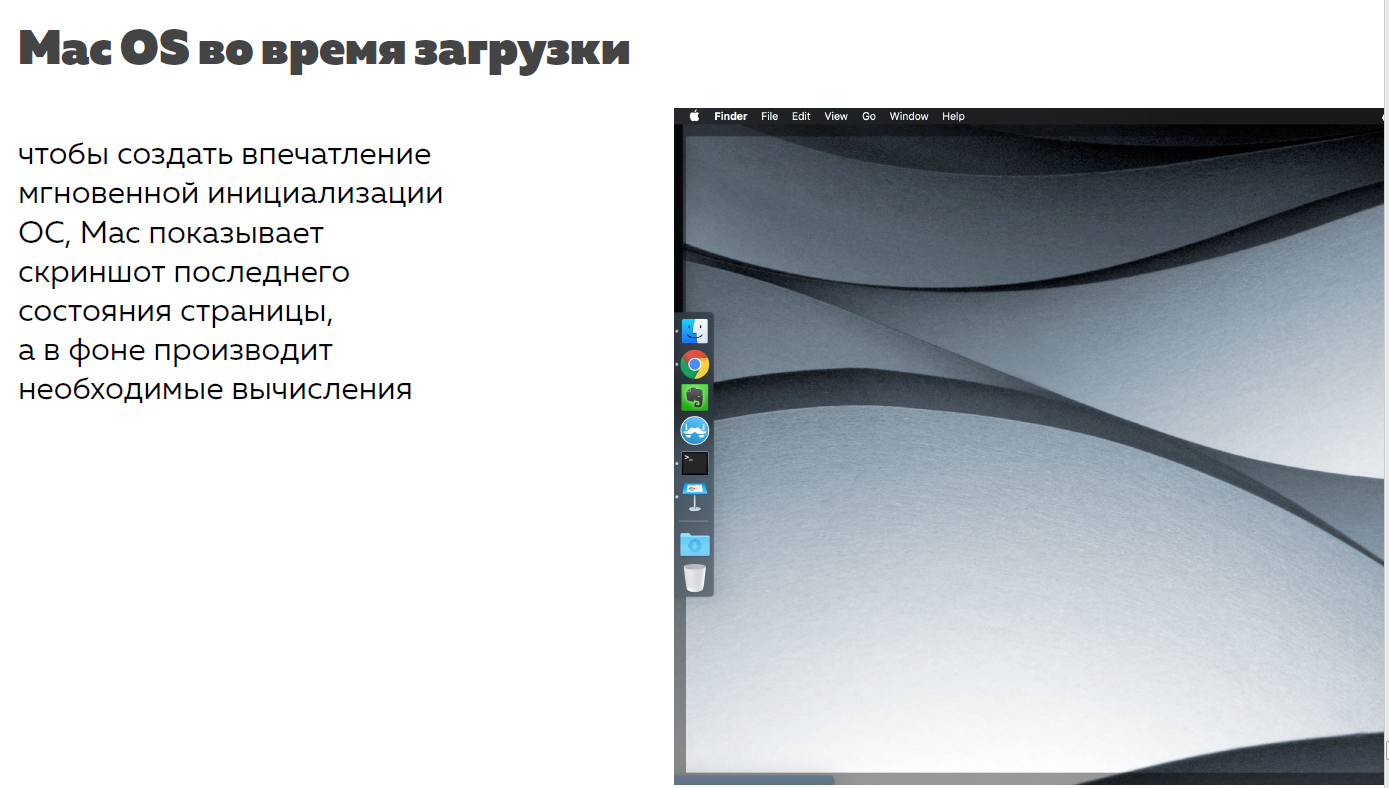

For example, when the guys were developing the Macintosh back in 1982 or 1987, I do not remember exactly, they had very weak hardware, because Apple had to hit a certain price to make the computer affordable. So they had to come up with interesting solutions.

Jef Raskin, who designed the first version of the Mac OS interface, came up with the following. Clearly, a computer cannot turn on instantly and show the working screen right away. So they captured the last state of the screen before shutdown, wrote the picture to track zero of the disk, and showed it when the computer was turned on.

Why did this work? Because a person needs seconds to switch context. When we talk about FPS, professional pilots can see 270 FPS. But when people switch context between tasks, they need a few seconds to realize what is going on.

When the Mac OS guys showed the picture, people looked and thought: “Yes, something happened here, something changed.” By the time you understand what has happened, the real screen is already shown.

This solution turned out so well that it still works on the Mac. For example, when you turn on a MacBook, you see the Wi-Fi indicator already at full strength. And two seconds later it starts searching for a network.

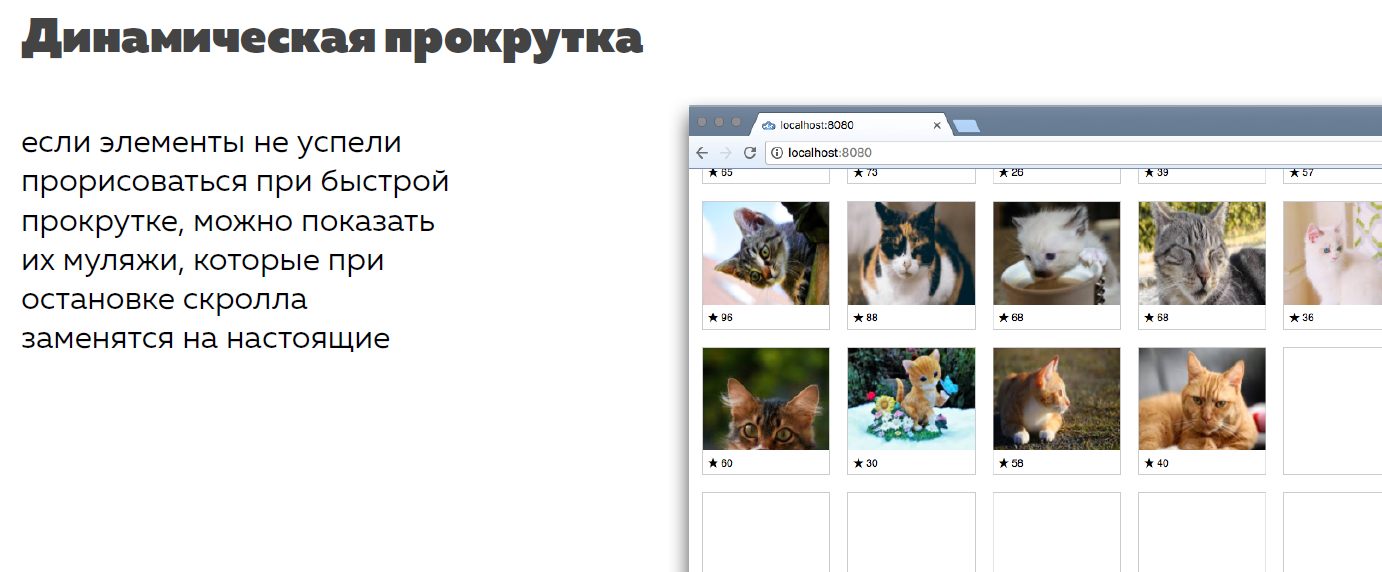

A related technique, which follows from the first, is a stripped-down screenshot. It is called Skeleton Screens, and Google Images came up with it.

They had the same problem we discussed a little earlier: what if we do not render all the pictures, but the user keeps scrolling?

They thought: we cannot show all the pictures at once, but we can promise the user that a picture will be there. So they drew a gray block the size of the picture. When the user stops, the real picture is shown. When the user scrolls and sees gray blocks, they accept it intuitively: well, yes, the picture has not loaded yet, and that is normal.

You can use this too, and it works well.
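The gray-block idea can be sketched in a few lines, assuming the image dimensions are known before the images themselves load. The function name `skeletonMarkup` and the `skeleton` class are hypothetical and are not taken from the talk.

```javascript
// Builds placeholder markup for images whose sizes are known in advance.
// `images` is an assumed shape: [{ width, height }, ...] in pixels.
function skeletonMarkup(images) {
  return images
    .map(({ width, height }) =>
      `<div class="skeleton" style="width:${width}px;height:${height}px;background:#ddd"></div>`)
    .join('');
}

// In a browser this string could be assigned to a container's innerHTML,
// and each gray block replaced with a real <img> once scrolling stops.
```

The placeholders reserve the final layout, so swapping in the real pictures later does not move the page under the user.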

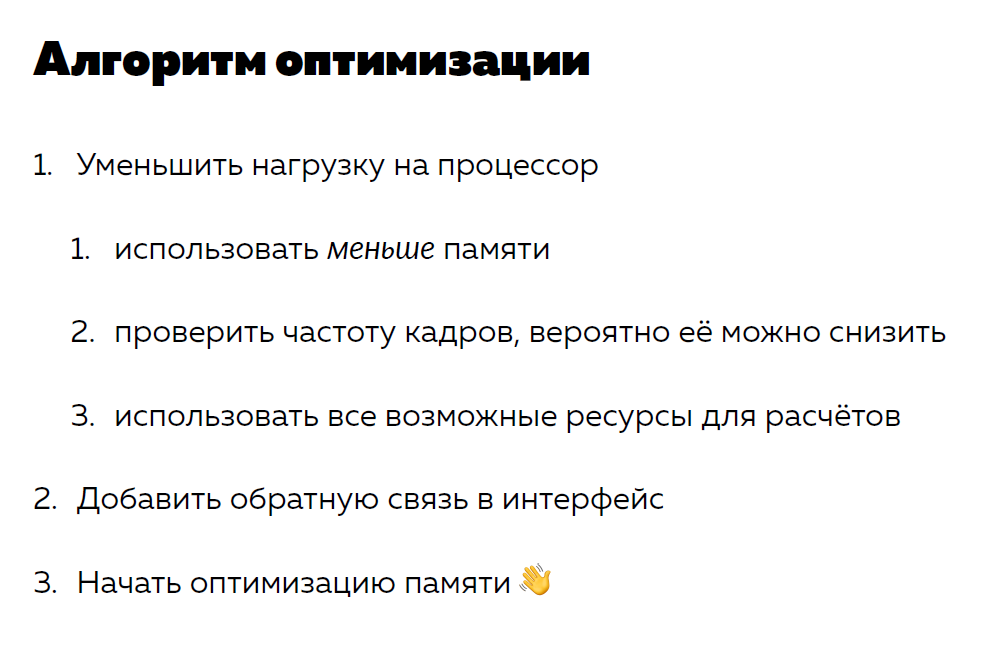

To summarize: what should you do if the site is slow?

If you need more details on this topic, we have prepared a transcript of the speaker's answers to questions from the audience.

We are also pleased to announce that we have opened access to all video recordings of the talks from Frontend Conf 2017. There are almost three dozen of them.

At the same time, we invite professionals to speak at our May RIT++ conference festival. If you have interesting development experience and are ready to share it, submit an application to our program committee.

Sites feel slow when user interaction stops being smooth. What does that mean? When users browse a site, they do not just see a static image. A site is not a picture; it is a process of the user interacting with the interface we offer them.

The user can watch the animations I just mentioned. They can simply scroll the site, and that is also dynamic interaction. They press buttons, enter text, drag elements. All of this works dynamically.

Why does it work dynamically? Why can sites stay alive for a long time?

This is because a structure called the Event Loop is built into every browser engine.

The Event Loop is a simple programming trick: we start an endless loop that ticks at a certain frequency, so that the call stack does not get clogged and we keep some level of performance.

On every step this loop checks external conditions and starts certain actions. For example, it notices that the user has scrolled the mouse wheel and that the page needs to be shifted a little.

All user interactions are synchronized with the Event Loop built into the browser engine: scrolling, other input, and the code we run over time. In other words, everything depends on this event loop.

How to get into Event Loop frames?

We have a loop that spins at a certain frequency. But we, as frontend developers, cannot control this frequency and do not know it. We can only use the ready-made frames provided for us. That is, we do not control the frequency, but we can fit into it. This is what requestAnimationFrame is for.

If we pass code to requestAnimationFrame as a callback, it runs at the start of the next frame update. Frame rates differ. On a Mac, for example, the browser tries to fit into 60 Fps, but the rate is not always 60 Fps. I will show this in the examples later.
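A minimal sketch of fitting into the browser's frames with requestAnimationFrame. The helper name `animate` and its `schedule` parameter are assumptions made for illustration; the `setTimeout` fallback exists only so that the sketch can also run outside a browser.

```javascript
// Calls `draw(elapsedMs)` once per frame until `durationMs` has passed.
// `schedule` defaults to the browser's requestAnimationFrame.
function animate(draw, durationMs, schedule) {
  const nextFrame = schedule ||
    (typeof requestAnimationFrame === 'function'
      ? (cb) => requestAnimationFrame(cb)
      : (cb) => setTimeout(() => cb(Date.now()), 16));
  let start = null;

  function frame(now) {
    if (start === null) start = now;           // timestamp of the first frame
    const elapsed = Math.min(now - start, durationMs);
    draw(elapsed);                             // do the work for this frame
    if (elapsed < durationMs) nextFrame(frame); // ask for the next frame
  }

  nextFrame(frame);
}

// Browser usage (assumes a `box` element exists on the page):
// animate((t) => { box.style.transform = `translateX(${t * 0.6}px)`; }, 1000);
```

The position is derived from elapsed time rather than from a frame counter, so the animation covers the same distance whether the browser delivers 60 frames per second or fewer.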

We have already established that at the core of JavaScript is an infinite loop that is updated over time. Now we can guess where slowdowns come from.

There are frames that are updated over time, and we run calculations on the site. Everything we write in JavaScript is, in the end, computation. If a calculation takes longer than one frame, the user sees lag.

That is, there is a series of consecutive frames, and then a long calculation that takes more than a frame. The user sees a slight delay, and the animation stutters.

Or the calculations may become too large, and then the page will freeze and nothing can be done with it.

OK, we understand what a slowdown is and why a site can be slow. Now let's solve the problem.

We are programmers, so we have tools that run our code. The programmer's main job is to balance the load properly between the processor and memory.

We all know what a processor is. This is a device in a computer that is responsible for instantaneous calculations. That is, any command that we write is converted into an instruction for the processor, and it will execute this command.

But if a sequence of commands produces some expensive result (say, we computed a complex value) and we do not want to repeat that sequence, we can write the result to memory and use another processor instruction instead: a read from memory.

That is exactly what I want to talk about.

The first optimization, which seems logical, is to use memory in order to avoid calculations.

In principle, this strategy sounds advantageous. Moreover, it is a good strategy and it is already in use.

For example, JavaScript has a built-in Math object for mathematical calculations. It contains not only methods but also some precomputed popular values, so that they do not have to be recalculated. The number π, for example, is stored to a fixed precision.

Second, there is a good example from the old days. I love the programmers of the 80s because they wrote efficient solutions. The hardware was weak, and they had to come up with clever tricks.

In 3D shooters, trigonometry is used all the time: to compute distances, sines, cosines and the like. For a computer these are fairly expensive operations.

Back then, programmers embedded tables of sines and cosines directly into the program code at compile time. That is, they used precomputed values of trigonometric functions: instead of calculating them while rendering the scene, they read them as constants.
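The lookup-table trick can be sketched as follows. This is an illustration under stated assumptions rather than code from the talk: the names `SIN_TABLE`, `fastSin` and `fastCos` are made up, and the table has one entry per whole degree, so precision is traded for speed.

```javascript
// Precompute sin() for every whole degree once, at startup.
const SIN_TABLE = new Float64Array(360);
for (let deg = 0; deg < 360; deg++) {
  SIN_TABLE[deg] = Math.sin((deg * Math.PI) / 180);
}

// A lookup instead of a calculation; angles are rounded to whole degrees
// and wrapped into the 0..359 range (negative angles included).
function fastSin(deg) {
  const index = ((Math.round(deg) % 360) + 360) % 360;
  return SIN_TABLE[index];
}

// cos(x) = sin(x + 90 degrees), so one table serves both functions.
function fastCos(deg) {
  return fastSin(deg + 90);
}
```

The cost is 360 numbers that stay in memory for the lifetime of the page, which is exactly the processor-versus-memory trade-off discussed here.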

You can optimize almost anything this way. For example, you can precalculate how an animation will look.

In principle, it sounds very cool.

Look: here are the frames. Instead of running calculations that take several frames, we run code that only reads: read the ready value, substitute it, use it. And the interface turns out to be very fast.

Theoretically it sounds very cool. But let's think about how exactly frontenders work with memory.

When we open a browser tab, we are allocated a certain amount of memory. By the way, we do not know how much. We are limited here as well.

But what's more, we cannot control this memory.

There is another feature - something is already stored in this memory:

- This memory stores the language runtime: all constructors, all functions. The language itself is stored in memory;

- You also use some data: you load something via Ajax or generate some structure;

- You have a DOM tree, and it also ends up in memory, because JavaScript cannot read HTML, so the browser converts the markup into a set of objects, a tree. Everything in the markup gets into the tab's memory, with each tag as a separate object. Text gets there too.

That is, for every line break between tags the browser creates an object in memory, a text node, and it stays there.

We see that memory is already full of things, and yet we want to write something of our own there. This is rather dangerous, because working with memory can start to slow things down.

There are two main reasons - and, oddly enough, they contradict each other. The first is called “Garbage Collection”, and the second is “Lack of Garbage Collection.”

Let us examine each of them.

Before I explain what garbage collection is, I will tell you how we are going to measure performance, memory and everything else.

The fact is that all these things can be measured. Every browser has developer tools. I will show Chrome as an example, but other browsers have them too. We will look at the “Profiling” or “Performance” tab.

You can open it at any time and take a so-called performance snapshot. Click the “Record” button: performance is recorded, and after a while you can see what was happening on the page and how it affected memory and the processor.

What does this tab consist of?

- First, there is a timeline at the top that shows seconds, that is, the lifetime of the tab;

- Fps: the frame rate at each moment;

- Processor load: a combined chart that shows the different kinds of calculations;

- Screenshots. They are optional, by the way. I advise you to disable them, because recording a performance profile with screenshots enabled is guaranteed to cost you Fps. So if you need to measure Fps, turn screenshots off just in case;

- Memory. This is the lowest chart;

- Detailed statistics for the same information we see above. You can zoom into the profile at any moment and see, frame by frame, what was happening. You can even zoom into a single frame and see which operations ran in it, with links to the code.

So, we will measure performance on an Instagram with cats. Everyone loves cats, everyone loves Instagram, so that is what I chose.

There is no such thing as too many cats, so we will look at big pages. We will have five pages with 5,000 cats each, and we will learn how to switch between them.

In the code I generate the cats so that every one of them is unique.

That is, I create a DOM element from a standard template and fill it with unique data. Even where pictures repeat, I go through the template, so that nothing is cached and the memory test is clean.

Once all the elements are created, I add them to a single fragment, an optimization you all know. To clear a page, I simply empty the container in one go.
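A minimal sketch of the fragment approach described above. It is not the code from the talk: the function name `renderCats` and the `{ url, name }` data shape are assumptions, and elements are created directly instead of being cloned from a template to keep the example short.

```javascript
// `doc` is the page's document; it is a parameter only so the sketch
// can be exercised outside a browser with a stand-in object.
function renderCats(doc, container, cats) {
  const fragment = doc.createDocumentFragment();

  for (const cat of cats) {
    const figure = doc.createElement('figure');
    const img = doc.createElement('img');
    img.src = cat.url;   // unique data for every element
    img.alt = cat.name;
    figure.appendChild(img);
    fragment.appendChild(figure);
  }

  container.innerHTML = '';         // drop the previous page in one step
  container.appendChild(fragment);  // one insertion for the whole page
  return cats.length;
}

// Browser usage (assumes an element with id "cats" exists):
// renderCats(document, document.getElementById('cats'), cats);
```

Building the page in a fragment means the live DOM is touched twice per page switch, once to clear and once to insert, rather than once per cat.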

It works faster.

So, garbage collection.

Garbage collection is a process designed to optimize memory management. We do not control it. The browser starts it on its own when it sees that the memory allocated to the tab is running out and old unused objects need to be deleted.

Old unused objects are objects that are no longer referenced. That is, these are objects that are not written into variables, into objects, into arrays - in general, nowhere.

It would seem, yes, this is a cool process, we need garbage collection, because the memory is really limited and needs to be freed.

Why can this be a problem? Because we don’t know how long garbage collection will take, and we don’t know when it will happen.

Let's look at an example.

Here I recorded a timeline profile of switching between our pages with cats. The top chart shows spikes in processor load (CPU utilization).

In the lower chart, which shows memory usage, you can see that memory first climbs and then drops in a step. That drop is the garbage collection.

I had enough memory for the first two pages, so I drew 10,000 cats. Then memory ran out and, to render another 5,000 cats, the old ones were deleted, because they were no longer used.

Basically, it's cool. Indeed, the browser took care of me and deleted what I do not use. What is the problem?

Let's zoom in on this drop and see how long the garbage collection took.

If you add up the four garbage collection entries, you can see that garbage collection took 134 ms, which is about 8 frames at 60 Fps.

That is, if you wanted to move a block 600 px in one second, you would lose roughly 80 px of that movement simply because the browser decided to clean up memory. You control neither when this process starts nor how long it lasts. This is bad.
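The frame arithmetic can be checked with a small helper. The function names are made up for this sketch, and it assumes a steady frame rate, which a real browser does not guarantee.

```javascript
// Duration of one frame in milliseconds at a given frame rate.
function frameBudgetMs(fps) {
  return 1000 / fps;
}

// Number of whole frames swallowed by a pause of `pauseMs`.
function framesLost(pauseMs, fps) {
  return Math.floor(pauseMs / frameBudgetMs(fps));
}

// Distance an animation fails to cover during the pause,
// for a movement of `pxPerSecond` pixels per second.
function pixelsLost(pauseMs, pxPerSecond) {
  return (pauseMs / 1000) * pxPerSecond;
}
```

Under these assumptions a 134 ms pause at 60 Fps swallows `framesLost(134, 60)`, that is 8 whole frames, which is the same order of magnitude as the figure quoted in the talk.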

The second problem is the exact opposite of the first. It is called a memory leak.

A memory leak is a situation where unused objects stay in memory after garbage collection, because the garbage collector thinks they may still be used.

The browser seems inconsistent: it is bad when it spends a long time cleaning memory, and bad when memory goes uncleaned for a long time. Why?

With a memory leak, garbage collection effectively does not happen. It may well run, but it does not clean up what we need it to. Sometimes we store something in such a way that the browser engine, looking at it, concludes that it cannot be removed.

Let's look at a code example.

There is the same page switching, but every time I insert new elements into the page, I add a handler for each photo.

Suppose I need to react to the spacebar. Just in case, I attached the keydown handler to the document, so that it would not get lost anywhere. What happens in this case?

When I clear the container by removing its HTML, the DOM nodes are removed from the DOM tree. But the handlers on the document remain, because the document stays on the page; nothing happens to it. When garbage collection runs, the collector will not remove these handlers.

Let's see what is written inside these handlers.

Inside these handlers a node is used. So the nodes will not be removed from memory, because they are still referenced. This is called a memory leak. Logically, neither the nodes nor the handlers are needed any more. But the collector does not know that, because from its point of view they are in use.
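The leak pattern and one possible fix can be sketched like this. The function name `attachSpaceHandlers` and its parameters are hypothetical; `target` stands for the `document` from the description above.

```javascript
// Registers one keydown handler per node on `target`.
// Each handler closes over its node, so while the handler stays
// registered the node cannot be garbage collected.
function attachSpaceHandlers(target, nodes, onSpace) {
  const handlers = nodes.map((node) => {
    const handler = (evt) => {
      if (evt.code === 'Space') onSpace(node);
    };
    target.addEventListener('keydown', handler);
    return handler;
  });

  // The fix: return a cleanup function and call it before
  // clearing the container, so nothing references the old nodes.
  return function detach() {
    handlers.forEach((h) => target.removeEventListener('keydown', h));
    handlers.length = 0;
  };
}

// Browser usage (`photos` is an assumed array of DOM nodes):
// const detach = attachSpaceHandlers(document, photos, (n) => n.classList.toggle('liked'));
// ...later, on page switch:
// detach(); container.innerHTML = '';
```

Skipping the `detach()` call reproduces the situation from the talk: the document keeps the handlers, the handlers keep the nodes, and memory grows with every page switch.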

What happens in this case? Let's look at the chart.

Instead of waves that rise and fall, the chart has turned into a staircase that only goes up. With each page switch more and more memory is used, and it is never freed, because the handlers and nodes stay in memory.

Memory is not released, and the computer starts to slow down as well.

Why? Because heavy memory use is very bad: the processor gets bogged down performing operations on a large DOM.

The result is that when we try to optimize something with memory, we take a big risk.

Brendan Eich himself, the creator of JavaScript, said in a recent interview about WebAssembly: “JavaScript is a good language. It is fast, and in terms of performance it can sometimes keep up with C, but the problems start when garbage collection kicks in, because we do not know when the collector will come or how long it will run.”

Memory is unreliable

Memory-related slowdowns can occur both when values are written to memory and when it is cleaned automatically. Predicting when they will occur is very hard.

It turns out that memory optimizations may be good, but they are not reliable, because at times they cost performance and things go badly.

So let's look at the other side of the picture I showed: how to optimize application speed from the point of view of the processor, the main computing device.

There are three main ways to relieve the processor:

- Reduce the amount of computation;

- Throttle: I will explain later what that is;

- Do not use the processor. This sounds rather strange, but it is a good way to relieve the processor.

Let's look at our picture with cats.

I said that I had five pages of 5,000 cats each. This is, by the way, a realistic volume: scroll through these cats for half a minute and you will have a DOM of 5,000 elements.

But if you think about it, this is not needed for the first load. We currently see four rows and five columns of cats. That is 20 cats. So a user opening the page for the first time sees 20 cats, not all 5,000 pictures.

Yet the browser renders 5,000. We draw a lot that is not needed. Why draw 5,000 cats if we show 20?

Fine, we can keep a reserve: the user may scroll the site, and they need to be shown something too. But even if you draw 100 cats, that is already 5 screens.

Therefore, the first thing you can do is reduce the volume of the DOM. This is the easiest way. You reduce the DOM, and everything works faster.
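The numbers above (five columns, four rows, five screens of reserve) can be turned into a small helper that limits how much is rendered up front. The function names are assumptions made for this sketch.

```javascript
// How many items to render initially: one screen is columns x rows,
// and `screens` is how many screens we keep in reserve for scrolling.
function initialBatchSize(columns, rows, screens) {
  return columns * rows * screens;
}

// Takes only the first batch from the full data set; the rest
// stays as plain data until the user actually scrolls to it.
function firstBatch(items, columns, rows, screens) {
  return items.slice(0, initialBatchSize(columns, rows, screens));
}
```

With 5 columns, 4 rows and 5 screens this gives the 100 elements mentioned in the talk, so the remaining 4,900 cats never reach the DOM on first load.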

Let me prove it to you with the profiler.

I draw the first page with 5,000 elements and use the profiler to record the loading process. By the way, there is a “Reload” button: if you click it, the profiler reloads the page, records how it loads, and stops recording once the page is completely rendered and everything is ready. Here, 5,000 cats were rendered in four seconds.

Here I did record screenshots. The user sees the first cats at the end of those four seconds. Slightly earlier, around the end of the third second, the image wrappers appear.

If you reduce the page to 100 elements (five screens), loading takes only 0.5 seconds. Moreover, the user sees the finished result right away, without the wrappers.

Therefore, the first step is to reduce the amount of computation.

The second point is throttling.

What is throttling?

Imagine that you have some calculations and they do not fit into the frame rate we chose. We have already talked about this.

Throttling is an approach where we take a step back and ask: do we really need the update rate we have?

Suppose it is 60 Fps, and operations run at that rate. But the calculations take more than about 16.7 ms. Here you need to ask: do these calculations really have to fit into 16.7 ms? If not, we can lower the rate to the one we actually need.

Let's take an example. We have just optimized the cats and now show 100 of them rather than 5,000. Let's change how the user interacts with these cats: we will not show large pages at once, we will show cats as they are needed.

For this we need infinite scrolling: we scroll, reach the bottom, and show the next page. The code does roughly this.

But I added some statistics to this code. I recorded the delta in pixels, that is, how often the scroll event fires, and a counter of the total number of scroll events. When I scrolled the page from top to bottom, with a page height of 1,000 px, the scroll event fired every 4 px. From top to bottom there were 500 checks.

Why so many? Because scrolling happens at that very 60 Fps. And yesterday an iPad with a 120 Hz screen was shown, which means browsers will now aim for 120 Fps rather than 60, and we will have to think about this even more. Remember Donkey from Shrek, who kept asking “Are we there yet? Are we there yet?” My check behaves the same way: it fires too often and too insistently.

Throttling means thinning out the number of frames. I do not need to check every 4 px of scrolling whether the bottom of the page has been reached. I can do this, say, once every 100 ms.

Here I added a small check based on dates.

I look at how much time has passed since the last check, and only then run the next one. What is the point? The scroll event still fires, but I do not use every scroll frame, only those that satisfy my condition.

When I used 100 ms, the check ran every 20-30 px, and only 100 checks happened from top to bottom. In principle, this is fine: asking once every 100 ms whether we have reached the bottom.
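A minimal sketch of the date-based check described above. The names `throttle` and `isNearBottom`, the 200 px threshold and the injectable `now` clock are assumptions for illustration, not the code from the talk.

```javascript
// Wraps `fn` so that it runs at most once per `intervalMs`.
// `now` is injectable only to make the sketch easy to verify.
function throttle(fn, intervalMs, now = Date.now) {
  let last = -Infinity;
  return function throttled(...args) {
    const t = now();
    if (t - last < intervalMs) return;  // too soon: skip this event
    last = t;
    fn.apply(this, args);
  };
}

// True when the viewport is within `thresholdPx` of the end of the content.
function isNearBottom(scrollTop, viewportHeight, contentHeight, thresholdPx = 200) {
  return scrollTop + viewportHeight >= contentHeight - thresholdPx;
}

// Browser usage (`loadNextPage` is a hypothetical function):
// window.addEventListener('scroll', throttle(() => {
//   const el = document.documentElement;
//   if (isNearBottom(el.scrollTop, el.clientHeight, el.scrollHeight)) loadNextPage();
// }, 100));
```

The scroll event keeps firing at the browser's own rate; the wrapper only decides which of those events are worth the cost of the check.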

This is the second way to cut down on calculations: check the frame rate. Maybe a specific task does not need 60 Fps and you can lower it.

The third way is to hand the calculations off.

How can you take calculations off the processor? There are several options:

- You can hand some calculations to the video card;

- Some calculations can be handed to the server;

- You can hand the calculation to another thread. This will not unload the user's processor, but it will unload the thread that runs the tab.

Consider each of these methods.

To begin with, I will explain why browser games are built on canvas rather than SVG.

Browser games have a great many disposable elements: you create them and throw them away, over and over. When there is complex interaction and a large number of small, short-lived elements, it makes sense to use canvas.

Let's compare the ideology of SVG and canvas.

With SVG, whenever you draw something you need a DOM element. SVG is DOM. You describe the image as markup, but, as we have already seen, all markup ends up in JS as a DOM tree: as objects, each with a class list and all the other properties.

When you draw on canvas, you simply operate on pixels. You have methods that describe the interaction with canvas. The result is pixels on the screen and nothing more.

Therefore, on canvas we have to invent our own data structures, since there is no DOM tree that someone has invented for us. But these structures may fit the task slightly better than the ones SVG offers.

Since SVG has a standard structure, it has an API. You can interact with SVG however you like, for example update elements one by one or animate them.

On canvas none of this is available. You have to write everything by hand, as in assembly language. But in return you can get a performance boost: SVG is DOM and is computed on the processor, while pixel drawing happens on the video card.
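To illustrate "our own data structures plus pixels", here is a small sketch of short-lived particles drawn on a 2D canvas context. The particle shape `{ x, y, vx, vy, life }` and both function names are invented for this example.

```javascript
// Our own data structure replaces DOM nodes: plain objects in an array.
// Moves each particle, ages it, and drops the ones whose life has run out.
function stepParticles(particles, dtMs) {
  return particles
    .map((p) => ({
      ...p,
      x: p.x + p.vx * dtMs,
      y: p.y + p.vy * dtMs,
      life: p.life - dtMs,
    }))
    .filter((p) => p.life > 0);
}

// `ctx` is a CanvasRenderingContext2D, e.g. canvas.getContext('2d').
function drawParticles(ctx, width, height, particles) {
  ctx.clearRect(0, 0, width, height);  // erase the previous frame
  ctx.fillStyle = '#f60';
  for (const p of particles) {
    ctx.fillRect(p.x, p.y, 2, 2);      // just pixels, no object per element
  }
}
```

A dead particle simply disappears from the array; there is no node to detach and no class list to carry around, which is the point made above about disposable elements.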

Let's look at an example: we will rotate the Earth a little.

The resolution here is laughable by modern standards: 800x600, nothing at all. The Earth you see is vector graphics. All countries are described by one complex path. I did not draw each country as a separate object; they are all packed into a single line of a certain shape.

I will update the frames via requestAnimationFrame. That is, the browser itself decides what Fps I get for drawing this thing; it works out how quickly it can draw one frame.

As the animation, I will rotate the Earth through 360°, from London back to London. It was simply easier to write it this way: I pass 0 in the array, because that is London's coordinate.

Here I recorded a profile of the canvas version. First, look at the bottom chart: only the GPU is used here, that is, only the video card does the animation. There is nothing above it.

This is where the Main track is involved. It is the processor itself changing the value in the array from 0 to 360 and recalculating the contour for each angle: it runs the known coordinates of the countries through the formula that projects them onto a circle.

After that, only the video card is used.

In the end I ran several tests, and on average the animation lasted six seconds. Simple arithmetic, 360° at one degree per frame in 6 seconds, gives 60 Fps. All is well.

SVG did somewhat worse. Why? If you look at the last two rows, you will see a busy Raster track. This means that for each frame SVG created a DOM object, computed all of its parameters entirely on the processor, without the GPU, and only then used the video card to render it as pixels.

That is, first a DOM object, which is slow and expensive to compute, and only then pixels on the screen. This is a serious waste of performance. The animation took about eight seconds, which is about 45 Fps.

I put a red frame around 45 Fps because this is, as they say, shameful. You may say: “What are you talking about? There are even films that run at 24 Fps.”

Not true. Even films shot at 24 Fps at the dawn of cinema were shown at 48 Fps. The idea goes back to Thomas Edison: “Yes, a person will perceive motion at 24 Fps, but they will see flicker from the image being refreshed.”

That is, he will see a moving picture, while noticing flicker. To prevent this from happening, you need at least 48 Fps.

To do this, in old films each frame was shown twice.

That is, even at the dawn of cinema, films were shown at 48 Fps. But SVG failed the performance test, and this is bad.

So in certain cases canvas is better than SVG. For example, if you have many disposable elements that need to be thrown away, it is better to use canvas.

Another test.

While I was preparing and running all the code samples, I accidentally left out one line: clearing the previous frame. I got a strange picture. I decided not to just fix the bug but to see where the mistake would lead, and measured the performance of this thing.

When I measured the Earth's rotation on canvas without clearing the canvas, I got 60 Fps. I double-checked five times that I was looking at the right picture. 60 Fps: canvas simply does not care.

How do you think SVG did?

The full animation took 24 minutes! Nothing was cleaned up and a classic leak occurred: memory grew and grew. I wanted to show the profile, but for the first time I saw the profiler itself crash. That is very strange in general.

To explain what happened, I'll show the profiler on a regular SVG.

Memory usage is at the bottom: every two seconds memory fills up, gets cleaned and fills up again. Now stack these steps on top of one another and imagine what it looked like after 24 minutes, by which time I had managed to drink tea and go for a walk.

In general, if you have many disposable elements, complex graphics and non-trivial interaction, it is better to use canvas. Hardly anyone will describe every speck of dust flying off a tree hit by a fireball as a DOM node with its own class list.

No, better canvas.

Another way to offload calculations is to move them to the server. We have just said that canvas has good, fast graphics. But I once had a task to draw a heatmap over a city: how densely are restaurants located there?

I thought: graphics? Graphics. SVG is not suitable, because the update step is 1 px, that is, every pixel means something to me. So it has to be canvas.

I solved the task on canvas. A data structure arrived, I walked through it and put a dot of a certain color saturation on the map for each entry.

What happened? In fact, I didn’t like the solution much because:

- I had to write a lot of workarounds. Canvas is almost a graphical assembler: its API is fairly low-level, and I had to work with each pixel by hand.

- I had to run a lot of calculations while the user kept seeing the same thing. Whenever the user shifted the map slightly, I recalculated everything from scratch.

Then I thought: “Fine, I am a smart developer and I solved an awfully hard problem by drawing a heat map. But it is worth thinking about the user and, instead of doing all that work on the client, simply:

- go to the backend,

- which has Python

- and a nice library for working with graphics,

- which gives me static pictures,

- which I do not have to produce myself

- and which are also cached.”

That is, instead of loading the user's processor with my ambitions, I went to the backend and asked it to generate pictures, loading the processor of a backend machine that is meant for this. The user's processor only had to read pictures from the cache.

As you know, pictures have another advantage: they do not need to be recalculated every time. They are stored and drawn, and all is well.

The third way is to hand the work to a parallel thread. This is a fairly new approach for the frontend; until recently it was mostly people writing in other languages who talked about it.

Let's look at the task. There is an editor, for example, Ace. Since this is a fully client-side editor, the client side is responsible for such basic things as blinking the cursor, scrolling, moving the cursor, and so on.

But besides this, you need to highlight the code or tell the user: “Dude, you wrote the wrong operator in the wrong place”, and mark the error.

To tell the user that they wrote the wrong operator in the wrong place, you need to build an AST, walk it, analyze it and so on.

What did the Ace developers do? They said: “In the main thread we keep only the essentials. Let the cursor blink and move, and the screen scroll. This will happen without delay, and the user will be happy. Everything else we hand to a Worker.”

A Worker is a tool that lets you run a JavaScript file in a separate browser thread and keep expensive work, such as building a tree, off the main thread.

There is one limitation: a Worker cannot work with the DOM. So you work with the DOM in your own thread and hand complex calculations to a parallel one. This improves the performance of your frontend.
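The split between the main thread and a Worker can be sketched as follows. This is not how Ace is implemented: the bracket check is a deliberately simple stand-in for real syntax analysis, and the file name `lint-worker.js` and the function names are hypothetical.

```javascript
// Heavy work that would live in the worker file (a hypothetical lint-worker.js):
//   self.onmessage = (e) => self.postMessage(findUnbalancedBracket(e.data));
// Returns the index of the first bracket problem, or -1 if brackets balance.
function findUnbalancedBracket(source) {
  const closers = { ')': '(', ']': '[', '}': '{' };
  const stack = [];
  for (let i = 0; i < source.length; i++) {
    const ch = source[i];
    if (ch === '(' || ch === '[' || ch === '{') {
      stack.push({ ch, i });
    } else if (closers[ch]) {
      const top = stack.pop();
      if (!top || top.ch !== closers[ch]) return i;  // wrong or extra closer
    }
  }
  return stack.length ? stack[stack.length - 1].i : -1;  // unclosed opener
}

// Main-thread side: send the text, get the result back via a message.
// `worker` is anything with postMessage/onmessage, e.g. new Worker('lint-worker.js').
function requestLint(worker, source, onResult) {
  worker.onmessage = (e) => onResult(e.data);
  worker.postMessage(source);
}
```

The main thread only posts a string and receives a number, so the cursor and scrolling stay responsive while the analysis runs elsewhere; any DOM update based on the result still happens on the main thread.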

To summarize: how can you hand calculations off in practice?

- If you are building a visualization in D3 and it is complex (there is such a thing as gravity: you build a tree, and the more nodes a node has, the more strongly it attracts other nodes, and all of this is animated), it is better to do it on canvas rather than SVG.

- Many server requests are not always bad. This can be good if the user sees the interface faster.

- A non-interactive overlay on Google Maps should be a picture. That is simply the rule.

- If you can count something in a parallel thread, do it.

I have spent a long time explaining how to optimize and reduce calculations. But sometimes that cannot be done.

For example, no real server on the Internet will respond to me faster than 100 ms. I cannot do anything about it. Even if I have a beautiful interface thought out to the smallest detail, with all calculations optimized, there will still be delays and users will notice lag.

What to do with delays? There are two ways:

- Use transparent feedback, that is, show the user that "yes, I know that there is a delay, and this is normal."

- Deceive the user a little.

Now I will tell you how this is done.

Consider the correct feedback. Let's go back to Instagram with cats and add some interaction.

There is a cat, and the user wants to like it. They move the mouse over, click the star, and I show: yes, you liked it, well done, there is one more like! But this is not true. A like is not a like until it is a like on the server.

And servers sometimes do this:

What do all developers do in this case?

No one makes any transparent feedback.

What should you do? I am not saying you should forget about Optimistic UI; I am pointing out a direction. If the user clicked something and the result is not instant, show that: for example, highlight the star. At the very least say: “Wait. Yes, we understood that you clicked, but not everything is ready yet.”

When something goes wrong, that needs a separate state too. We need to be transparent and show the user what is happening. As I said, we are responsible for the user's impression of the site. And the user is not a fool for failing to see what was printed in the console.

When I was preparing for this report, I called my mother and told her what I would talk about. She told me a joke:

- Why did you bring me 10 pizzas? I ordered 1.

- Yes, you ordered 1, but the button was pressed 10 times.

It seems like a silly joke, but I run into this myself. Sometimes I write a comment and press the button, and the button does not even depress; nothing happens. I think: is it broken, or what? I need to click again.

But in fact everything worked: the request went to the server (both requests did), and both comments were rendered. And users do not like seeing two identical comments.

For some reason I am considered the guilty one, although in fact the programmer is to blame. It would have been enough to show some feedback: yes, please do not press this button again, we understood that you pressed it.

When the response arrives, you can even clear the input, and the user intuitively understands that they can enter another message.
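One way this could look in code, as a sketch under stated assumptions: repeated clicks are ignored while a request is in flight, the form is marked as disabled so the user sees the click registered, and the input is cleared once the server answers. `guardSubmit`, `send`, and the plain `form` object (with `text` and `disabled` fields) are hypothetical stand-ins for a real form and API call.

```javascript
// Hypothetical helper (an illustration, not the speaker's code).
// `send` is any function returning a promise for the server request.
function guardSubmit(send) {
  let inFlight = false;
  return async function submit(form) {
    if (inFlight) return false; // ignore repeated clicks
    inFlight = true;
    form.disabled = true; // visible sign that the click was received
    try {
      await send(form.text);
      form.text = ''; // clear the input: ready for the next message
      return true;
    } finally {
      inFlight = false;
      form.disabled = false;
    }
  };
}
```

If `send` rejects, the text is left in place so the user can retry, and the error propagates to the caller, which can show its own status.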

At the development stage this costs almost nothing, but the interface gets better and the delay becomes more transparent. The user will not be offended. They know that you need to go to the server. As Louis C.K. said, “Wait! It's going to space!” when you complain that my site does not load within a second.

The second way: you can deceive the user a little.

For example, when the guys were developing the Macintosh back in 1982 or 1987, I don't remember exactly, they had very weak hardware, because Apple had to hit a certain price to make the computer affordable. So they had to come up with interesting solutions.

Jef Raskin, who designed the first version of the Mac OS interface, came up with the following. Clearly the computer cannot turn on instantly and show the working screen right away. So they took a snapshot of the last state of the screen before shutdown, wrote the picture to track zero of the disk, and showed it when the computer turned on.

Why did this work? Because a person needs seconds to switch context. That is, when we talk about FPS, professional pilots can see 270 FPS. But when people switch context between tasks, they need a few seconds to realize what is happening.

When the Mac OS guys showed the picture, people looked and thought: “Yes, something happened here, something changed.” By the time you understand what has happened, the real screen is shown to you.

This solution turned out to be so good that it still works on the Mac. For example, when you turn on a MacBook, you see that the Wi-Fi indicator already shows full signal. And two seconds later it starts searching for a network.

The second technique, which follows from the first, is a stripped-down screenshot. It is called skeleton screens. Google Images came up with it.

They had the same problem we talked about a little earlier: what if we do not render all the pictures, but the user scrolls?

They thought: we can't show all the pictures at once, but we can promise the user that there will be a picture. So they drew a gray block the size of the picture. When the user stops, the real picture is shown. When the user scrolls and sees gray blocks, they intuitively believe it: well, yes, the picture has not loaded yet, and this is normal.

You can use this too, and it works well.
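As a rough illustration of the gray-block idea (a sketch, not how Google Images is implemented): the placeholder reserves exactly the space the picture will occupy, so swapping in the real image later does not shift the layout. `tileMarkup`, the `skeleton` class name, and the `item` fields are hypothetical.

```javascript
// Hypothetical helper: returns markup for one gallery tile.
// Assumes `item` has trusted `src`, `width`, and `height` values
// (no HTML escaping is done in this sketch).
function tileMarkup(item, isLoaded) {
  const size = `width:${item.width}px;height:${item.height}px`;
  return isLoaded
    ? `<img src="${item.src}" style="${size}" alt="">`
    : `<div class="skeleton" style="${size};background:#ddd"></div>`;
}
```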

To summarize. What should you do if the site is slow?

- Start with the processor, that is, improve your computations:

- Reduce memory use, do not clog it, and keep operations small.

- Check whether you are doing these calculations too often.

- Offload the processor: hand complex algorithms to the backend, even though you are brilliant and could write them yourself.

- Add proper feedback to the interface so the user understands that yes, calculations are in progress, and that this is normal.

- And after that, I wish you good luck with memory optimization.

If you need more details on this topic, we have prepared a transcript of the speaker's answers to questions from the audience.

All under the spoiler

- Workers seem to serialize and deserialize data when it is sent to them. We had a case like that with complex calculations. We computed how many points on a chart a person has and how many came from the server, and merged it all. When we moved everything into a Worker, it got slower. And we cannot move all this logic to the server yet, because it starts to lag too.

As a result, there is a small lag when the page loads. We have dressed it up somehow, but it is still there. How can you deal with such situations? Any suggestions?

- The first thing that comes to mind is exactly that approach: show a spinner to say that something is being computed and that this is normal. Second, the problem is stated in general terms, so I cannot give a concrete solution, but I would look at what data comes from the server. Maybe there is too much of it.

- A thousand points with some data.

- Maybe it makes sense to thin out the data and show less of it, if that is possible.

- Perhaps thin out the older history. That's an option, by the way, thanks.

- Yes, download less data first, then load more as needed, and it will work a little faster.
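Thinning out the data can be as simple as keeping every n-th point. The sketch below is one naive way to do it, assuming evenly important points; the function name `thin` and the `maxPoints` parameter are hypothetical, and real charts may need a smarter method that preserves peaks.

```javascript
// Hypothetical helper: keep at most `maxPoints` points (maxPoints >= 1)
// by taking every `step`-th element, always including the first one.
function thin(points, maxPoints) {
  if (points.length <= maxPoints) return points.slice();
  const step = Math.ceil(points.length / maxPoints);
  const out = [];
  for (let i = 0; i < points.length; i += step) {
    out.push(points[i]);
  }
  return out;
}
```

The full data set can then be requested and rendered later, once the first, coarser chart is already on screen.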

- There was an example about scrolling and about subtracting a timeout, I think. Would it be a worse solution to use window.setTimeout and clearTimeout? That is, we would run the check only once.

- It is a popular misconception that throttle is window.setTimeout. I do not remember who came up with this, one of the big names, Zakas I think. In fact, that is debounce, a slightly different operation. Throttle is when calls happen frequently and we let through only a fraction of them, at equal intervals. That is, with throttle something is guaranteed to run every 100 ms. The setTimeout example will not run once every 100 ms: the calls accumulate, and only once 100 ms have passed will it execute.

It is a good thing too. It is called debounce. It helps, for example, when the user is typing: you do not need throttle to show something on every keystroke; it is better to debounce and act once they have finished typing.
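The difference can be made concrete with two small helpers. This is a minimal sketch rather than a production implementation: `debounce` is the `setTimeout` plus `clearTimeout` pattern from the question, and `throttle` is a simple leading-edge variant. The injectable `now` parameter is an assumption added here to make the timing easy to test.

```javascript
// Debounce: run `fn` once, `wait` ms after the LAST call in a burst.
function debounce(fn, wait) {
  let timer = null;
  return function (...args) {
    clearTimeout(timer); // every new call cancels the pending one
    timer = setTimeout(() => fn.apply(this, args), wait);
  };
}

// Throttle: run `fn` at most once per `interval` ms, so under a steady
// stream of calls it fires roughly every `interval` ms.
function throttle(fn, interval, now = Date.now) {
  let last = -Infinity;
  return function (...args) {
    const t = now();
    if (t - last >= interval) {
      last = t;
      fn.apply(this, args);
    }
  };
}
```

For a scroll handler, `throttle(onScroll, 100)` keeps the interface updating while the user scrolls; for a search field, `debounce(onInput, 300)` waits until typing pauses.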

- I would like to clarify something about optimizing for the processor. Nothing was said about promoting elements to separate layers. But that also saves time on animations by moving the work to the graphics processor, which just redraws the same image in different places at different points in time.

- You mean will-change? Yes, I agree. It is just that I was talking about code, and will-change is a declarative API; it belongs more to the markup side.

- Still, it is an optimization too. The second question is about the processor. What about those poor users whose browsers do not support service workers? If our application relies on pushing heavyweight calculations there, we are significantly limited in browser support.

- I also really like task prioritization. React Fiber, for example, uses it.

They collect all the interface update tasks they need and perform them in order of priority: first the ones that matter for rendering, then the less important ones. And if we do not have a service worker but still need to distribute the work somehow, we can gather a pool of tasks and run them the same way, by priority.

React Fiber does this under the hood, and it's cool.
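A pool of prioritized tasks can be sketched in a few lines. This is only an illustration of the general idea, not how React Fiber is implemented: tasks are sorted by priority and run in short slices so the main thread is not blocked for long. `createScheduler`, `budgetMs`, and the injectable `now` and `defer` parameters are all hypothetical names.

```javascript
// Hypothetical scheduler: a lower `priority` number means more urgent.
// Runs queued tasks for at most `budgetMs` per slice, then yields.
function createScheduler(
  budgetMs = 8,
  now = Date.now,
  defer = (cb) => setTimeout(cb, 0)
) {
  const queue = [];
  let scheduled = false;

  function schedule() {
    if (!scheduled) {
      scheduled = true;
      defer(flush);
    }
  }

  function flush() {
    scheduled = false;
    const start = now();
    queue.sort((a, b) => a.priority - b.priority); // most urgent first
    while (queue.length > 0 && now() - start < budgetMs) {
      queue.shift().run();
    }
    if (queue.length > 0) schedule(); // continue in the next slice
  }

  return {
    add(priority, run) {
      queue.push({ priority, run });
      schedule();
    },
  };
}
```

In a browser, `defer` could be swapped for `requestAnimationFrame` so that each slice runs right before a frame is painted.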

- As I understand it, this is something like approximate rendering: coarser at first, then more detailed?

- That is a very crude simplification, but something like that.

- I have a question about DOM and SVG animation. Have you tried using lstgs sub, for example? Did you run any tests, and how much faster was it? It is a project aimed specifically at improving DOM animation performance.

- No, everything I showed was hardcore, bare metal. All the animation is written by hand.

- It would be interesting to see such tests.

- In terms of tools? That is a good idea, I will think about it. I have already said that I have a website, and maybe I will try it there in time.

- They simply claim that they can animate a lot of nodes at once without any lag, and they have tests. Interesting.

- I agree with you, but, you know, under the hood it is still the same thing I have just described. You need to understand which direction to go in. If you understand that these libraries use it, that is good, and it will help. Agreed?

We are also pleased to announce that we have opened access to all video recordings of talks from Frontend Conf 2017. There are almost three dozen of them.

At the same time, we invite professionals to become speakers at our May conference festival, RIT++. If you have interesting development experience and are ready to share it, submit a proposal to our program committee.

Source: https://habr.com/ru/post/345498/
