Key-value databases for storing metadata in storage systems. Testing dedicated databases

In this article we continue the discussion of how metadata can be stored in a storage system using key-value databases.

This time the focus is on dedicated databases: Aerospike and RocksDB. A description of why metadata matters in a storage system, as well as the results of testing embedded databases, can be found here.

Test parameters of the key-value database

Let us briefly recall the main parameters of the testing (details are in the previous article).


The main workload is Mix50/50. We additionally evaluated RR (random read), Mix70/30 and Mix30/70.

Testing was carried out in 3 stages:

1. Database filling: we fill the database in a single thread up to the required number of keys.

1.1 Reset caches! Otherwise the tests will not be fair: the database usually writes its data on top of the file system, so the operating system cache comes into play. It is important to drop it before each test.

2. Tests with 32 threads: we run the workloads.

2.1 Random Read

• Reset caches!

2.2 Mix70/30

• Reset caches!

2.3 Mix50/50

• Reset caches!

2.4 Mix30/70

• Reset caches!

3. Tests with 256 threads.

3.1 Same as for 32 threads.
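The stage order above, with a cache reset between runs, can be sketched roughly as follows. This is only an illustration and not the harness used in the tests: the function names `drop_caches` and `build_plan` are hypothetical. The one real interface it relies on is the Linux file `/proc/sys/vm/drop_caches`, where writing `3` asks the kernel to drop the page cache together with dentries and inodes (root is required).

```python
import os
from typing import List, Sequence

# Linux-only kernel interface; writing to it requires root privileges.
DROP_CACHES = "/proc/sys/vm/drop_caches"

WORKLOADS = ["RR", "Mix70/30", "Mix50/50", "Mix30/70"]


def drop_caches(path: str = DROP_CACHES) -> None:
    """Flush dirty pages to disk, then ask the kernel to drop clean caches.

    The path is a parameter only so the function can be tried on a
    scratch file without root.
    """
    os.sync()  # dirty pages cannot be dropped, so write them out first
    with open(path, "w") as f:
        f.write("3\n")  # 3 = page cache + dentries and inodes


def build_plan(thread_counts: Sequence[int] = (32, 256)) -> List[str]:
    """Return the ordered list of steps: fill, then every workload per thread count.

    A "drop caches" step follows each run, so every test starts cold.
    """
    plan = ["fill (1 thread)", "drop caches"]
    for threads in thread_counts:
        for workload in WORKLOADS:
            plan.append(f"{workload} ({threads} threads)")
            plan.append("drop caches")
    return plan


if __name__ == "__main__":
    for step in build_plan():
        print(step)
```

Keeping the plan as plain data makes it easy to check that no workload ever runs on a warm cache before anything is executed.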

Measurable indicators

- Throughput (IOPS / RPS, whichever notation you prefer).

- Latency (ms):

• Min.

• Max.

• Root mean square: more informative than the arithmetic mean, because it also takes the standard deviation into account.

• Percentile 99.99.
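To make the latency indicators concrete, here is a minimal sketch that computes them from a list of samples. The function name `latency_summary` and the nearest-rank percentile rule are our assumptions, not something taken from the benchmarks used in the article. The root mean square reflects the spread because RMS² = mean² + variance, so for the same mean a jittery run scores worse than a steady one.

```python
import math
from typing import Dict, Sequence


def latency_summary(samples_ms: Sequence[float], pct: float = 99.99) -> Dict[str, float]:
    """Min, max, arithmetic mean, root mean square and a percentile of latencies."""
    if not samples_ms:
        raise ValueError("at least one sample is required")
    ordered = sorted(samples_ms)
    n = len(ordered)
    mean = sum(ordered) / n
    # RMS: square root of the mean of squares; equals sqrt(mean**2 + variance).
    rms = math.sqrt(sum(x * x for x in ordered) / n)
    # Nearest-rank percentile: the smallest sample such that at least
    # pct percent of all samples are less than or equal to it.
    rank = max(1, math.ceil(pct / 100.0 * n))
    return {
        "min": ordered[0],
        "max": ordered[-1],
        "mean": mean,
        "rms": rms,
        "percentile": ordered[rank - 1],
    }


if __name__ == "__main__":
    steady = latency_summary([2.0, 2.0, 2.0, 2.0])
    spiky = latency_summary([1.0, 1.0, 1.0, 5.0])
    # Both runs have the same arithmetic mean, but the RMS differs.
    print(steady["mean"], steady["rms"])
    print(spiky["mean"], spiky["rms"])
```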

Test environment

Configuration:

| CPU: | 2x Intel Xeon E5-2620 v4 2.10GHz |

| RAM: | 16GB |

| Disk: | [2x] NVMe HGST SN100 1.5TB |

| OS: | CentOS Linux 7.2 kernel 3.11 |

| FS: | EXT4 |

The amount of available RAM was limited in software rather than physically: part of it was artificially filled by a Python script, and the remainder was left free for the database and caches.
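The original script is not shown, so the following is only a guess at how such a memory filler might look. The name `occupy_ram` and the 4 KiB page size are assumptions. Each page is touched explicitly because a freshly allocated zeroed buffer may not be backed by physical memory until it is written to.

```python
import time

PAGE_SIZE = 4096  # assumed page size in bytes


def occupy_ram(n_bytes: int) -> bytearray:
    """Allocate n_bytes and write one byte per page so the memory is really used."""
    buf = bytearray(n_bytes)
    for offset in range(0, n_bytes, PAGE_SIZE):
        buf[offset] = 1  # touching the page forces the kernel to back it
    return buf


if __name__ == "__main__":
    # Hypothetical usage: hold 1 GiB for as long as the benchmark runs.
    held = occupy_ram(1 << 30)
    print(f"holding {len(held)} bytes, press Ctrl+C to release")
    try:
        while True:
            time.sleep(60)
    except KeyboardInterrupt:
        pass
```

Note that this sketch does not pin the memory: if swap is enabled, the kernel may page the buffer out and hand the RAM back to the database and the caches.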

Dedicated DB. Aerospike

How is Aerospike different from the engines we tested before?

- The index is kept in RAM

- It uses raw disks (= no FS)

- It is built on a hash, not a tree.

Aerospike stores 64 B in its index for every key (while the key itself is only 8 B), and the index must always fit entirely in RAM.

This means that with our number of keys the index will not fit into the memory we allocate. The number of keys has to be reduced, and our data allows it!

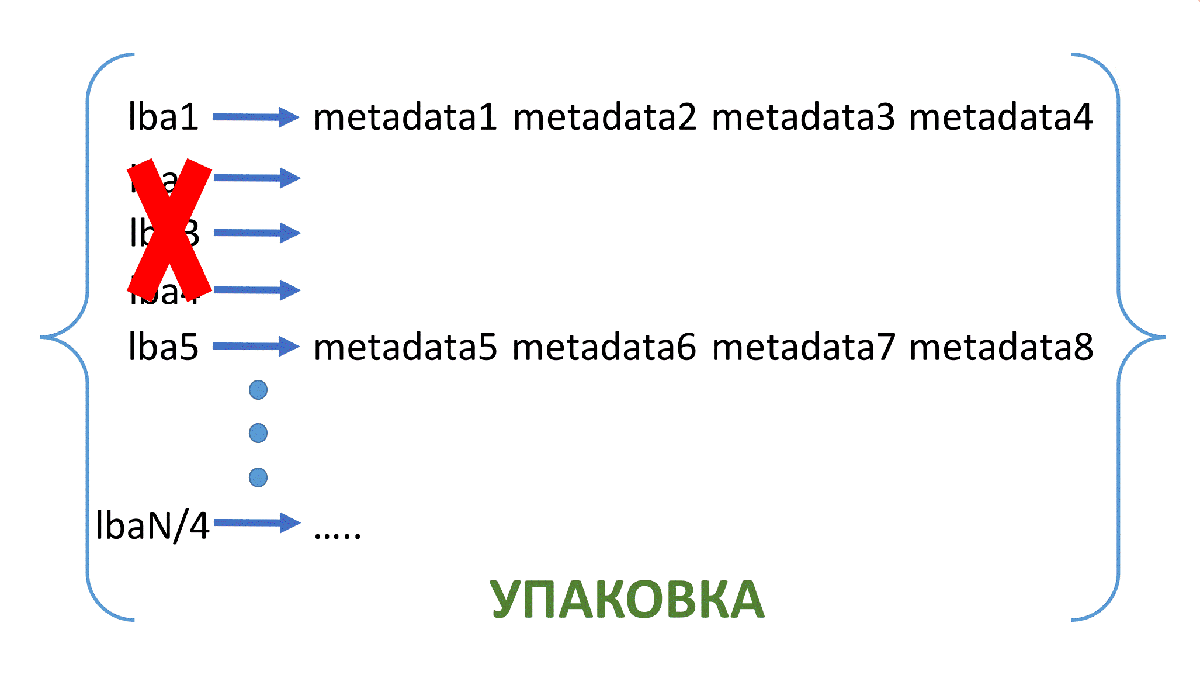

Fig. 1. Packing 1

Fig. 2. Packing 2

So, using this packing, we reduced the number of keys by a factor of 4. In the same way we can reduce their number by a factor of k; the value size then becomes 16 * k B.
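Packing by a factor of k can be sketched as follows. This assumes that consecutive LBAs are grouped under one key and that each metadata instance takes 16 B (as the value size of 16 * k B implies). The exact layout in the figures is not recoverable from the text, so the helper names and the plain dict standing in for the database are purely illustrative.

```python
from typing import Dict, Tuple

VALUE_SIZE = 16  # bytes per metadata instance, implied by the 16 * k B value size


def locate(lba: int, k: int) -> Tuple[int, int]:
    """Map an LBA to (packed key, byte offset inside the packed value)."""
    return lba // k, (lba % k) * VALUE_SIZE


def write_metadata(store: Dict[int, bytes], lba: int, k: int, meta: bytes) -> None:
    """Update one metadata instance; the whole packed value is rewritten."""
    if len(meta) != VALUE_SIZE:
        raise ValueError(f"metadata must be exactly {VALUE_SIZE} bytes")
    key, offset = locate(lba, k)
    packed = bytearray(store.get(key, bytes(VALUE_SIZE * k)))
    packed[offset:offset + VALUE_SIZE] = meta
    store[key] = bytes(packed)


def read_metadata(store: Dict[int, bytes], lba: int, k: int) -> bytes:
    """Fetch the packed value and cut out the instance for this LBA."""
    key, offset = locate(lba, k)
    return store[key][offset:offset + VALUE_SIZE]


if __name__ == "__main__":
    db: Dict[int, bytes] = {}  # stand-in for the key-value database
    write_metadata(db, lba=5, k=4, meta=b"A" * 16)
    print(locate(5, 4), read_metadata(db, 5, 4))
```

The price of this scheme is visible in `write_metadata`: changing a single instance becomes a read-modify-write of the whole 16 * k B value.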

Testing. 17 billion keys

For the Aerospike index of 17 billion keys (17 billion lba -> metadata mappings) to fit into RAM, the mappings have to be packed 64 to a key.

As a result we get 265,625,000 keys (each key corresponds to a value of 1024 B containing 64 instances of metadata).
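The arithmetic behind these numbers is easy to check. The sketch below uses only figures from the text (64 B of index per key, 16 B per metadata instance, 17 billion mappings); the function name `packed_layout` is ours.

```python
from typing import Dict

INDEX_ENTRY_BYTES = 64           # Aerospike index footprint per key (from the text)
METADATA_BYTES = 16              # size of one metadata instance (from the text)
TOTAL_MAPPINGS = 17_000_000_000  # lba -> metadata mappings


def packed_layout(total: int, k: int) -> Dict[str, int]:
    """Key count, value size and index size when k mappings share one key."""
    keys = -(-total // k)  # ceiling division
    return {
        "keys": keys,
        "value_bytes": k * METADATA_BYTES,
        "index_bytes": keys * INDEX_ENTRY_BYTES,
    }


if __name__ == "__main__":
    for k in (1, 4, 64):
        layout = packed_layout(TOTAL_MAPPINGS, k)
        print(k, layout["keys"], layout["value_bytes"], layout["index_bytes"] / 1e9, "GB")
```

Without packing the index alone would need about 1,088 GB of RAM; packing 64 to a key brings it down to 17 GB.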

We test using YCSB. It does not report the root-mean-square latency, so that metric is absent from the graphs.

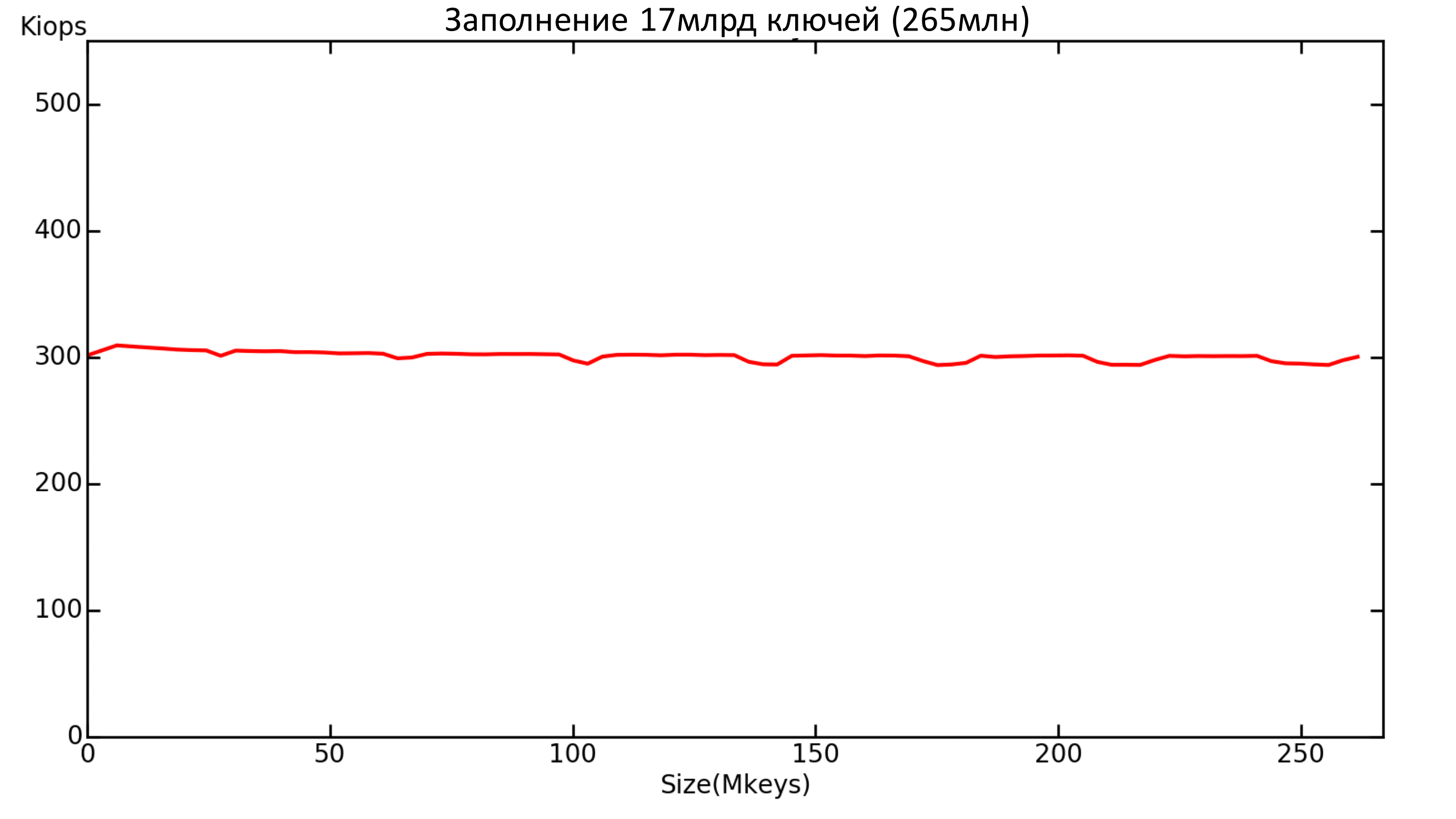

Filling

Aerospike showed a good result on filling and behaved very stably.

However, the filling was done in 16 threads rather than in one, as it was with the embedded engines. In a single thread Aerospike delivered about 20k IOPS. Most likely the benchmark is to blame (that is, it simply cannot load the database enough from one thread). Or Aerospike prefers many threads and is not ready to deliver high throughput in one.

Fig. 3. Database fill throughput

The maximum latency also stayed at roughly the same level throughout the filling.

Fig. 4. Database fill latency

Tests

It is important to note that Aerospike and RocksDB cannot be compared directly on these graphs, because the tests were run under different conditions and with different benchmarks: Aerospike was used with packing, and RocksDB without.

It is also worth noting that 1 IO in Aerospike retrieves 64 values (instances of metadata).

The RocksDB results are given here for reference.

Fig. 5. Comparison of Aerospike and RocksDB. 100% Read

Fig. 6. Comparison of Aerospike and RocksDB. Mix 70% / 30%

Fig. 7. Comparison of Aerospike and RocksDB. Mix 50% / 50%

Fig. 8. Comparison of Aerospike and RocksDB. Mix 30% / 70%

As a result, writes with a small number of threads turned out slower than with RocksDB (keep in mind that in the case of Aerospike 64 values are written at once).

With a large number of threads, however, Aerospike still delivers higher figures.

Fig. 9. Aerospike. Latency, 100% Read

Here we finally managed to get an acceptable level of latency, but only in the read test.

Fig. 10. Aerospike. Latency, Mix 50% / 50%

Now we can update the list of findings:

- Write + few threads => WiredTiger

- Write + many threads => RocksDB

- Read + DATA > RAM => RocksDB

- Read + DATA < RAM => MDBX

- Many threads + DATA > RAM + packing => Aerospike

The final conclusion on the choice of a database for metadata: in this form, none of the candidates reaches the figures we need. Aerospike comes closest.

Below we discuss what can be done about this and what storing the data with direct addressing gives us.

Direct Addressing. 137 billion keys

Consider a storage system of 512 TB. The metadata of such a system is placed on a single NVMe drive and corresponds to 137 billion keys in a key-value database.
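The figure of 137 billion is consistent with one mapping per 4 KiB block if the 512 TB is read in binary units. Both of these are our assumptions, since the text states neither the block size nor the unit convention. With direct addressing no index is needed at all: the position of a record follows from the LBA by plain arithmetic, as in the hypothetical `direct_offset` below (the 16 B record size is likewise an assumed parameter).

```python
def mapping_count(capacity_bytes: int, block_bytes: int = 4096) -> int:
    """Number of lba -> metadata mappings for a volume of the given capacity."""
    return capacity_bytes // block_bytes


def direct_offset(lba: int, record_bytes: int = 16) -> int:
    """Byte offset of the metadata record for an LBA on the metadata device."""
    return lba * record_bytes


if __name__ == "__main__":
    capacity = 512 * 2**40  # 512 TB, read as binary terabytes (assumption)
    print(mapping_count(capacity))
    print(direct_offset(3))
```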

Consider the simplest implementation of direct addressing. To do this, create a SPDK NVMf target on one node, take a local NVMe and this NVMf target on another node and combine them into logical RAID1.

This approach will allow you to record metadata and protect them with a replica in case of failure.

When testing performance in multiple threads, each thread will write to its area and these areas will not overlap.
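One simple way to give every thread its own area is to cut the device into equal aligned slices. The sketch below is an illustration under that assumption; the name `thread_regions` and the 4 KiB alignment are ours, and the article does not say how the areas were actually laid out.

```python
from typing import List, Tuple


def thread_regions(device_bytes: int, threads: int, align: int = 4096) -> List[Tuple[int, int]]:
    """Split a device into per-thread (offset, length) areas that never overlap.

    Each area is rounded down to a multiple of `align`, so a small tail of
    the device may remain unused.
    """
    if threads <= 0:
        raise ValueError("threads must be positive")
    per_thread = (device_bytes // threads) // align * align
    return [(i * per_thread, per_thread) for i in range(threads)]


if __name__ == "__main__":
    # Hypothetical 1.5 TB device split between 8 writer threads.
    for offset, length in thread_regions(1_500_000_000_000, 8):
        print(offset, length)
```

Because the areas are disjoint, the threads need no locking between them, which keeps the measurement focused on the device and the replication path.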

Testing was performed using the FIO benchmark in 8 threads with a queue depth of 32. The table below shows the test results.

Table 1. Testing Direct Addressing

| | rand read 4k (IOPS) | rand write 4k (IOPS) | rand r/w 50/50 4k (IOPS) | rand r/w 70/30 4k (IOPS) | rand r/w 30/70 4k (IOPS) |
|---|---|---|---|---|---|
| spdk | 760 - 770 K | 350 - 360 K | 400 - 410 K | 520 - 540 K | 410 - 450 K |
| lat (ms) avg / max | 0.3 / 5 | 0.4 / 23 | 0.3 / 19 | 0.5 / 21 | 1.2 / 28 |

Now let's test Aerospike in a similar configuration, where 137 billion keys are also placed on one NVMe and replicated to another NVMe.

We test with Aerospike's native benchmark. We run two benchmark instances, each on its own node, with 256 threads to squeeze out the maximum performance.

We get the following results:

Table 2. Aerospike testing with replication

| R / W | IOPS | > 1 ms | > 2 ms | > 4 ms | 99.99% lat <= (ms) |
|---|---|---|---|---|---|
| 100/0 | 635,000 | 7% | 1% | 0% | 50 |
| 70/30 | 425,000 | 8% | 3% | 1% | 50 |
| 50/50 | 342,000 | 8% | 4% | 1% | 50 |
| 30/70 | 271,000 | 8% | 4% | 1% | 40 |
| 0/100 | 200,000 | 0% | 0% | 0% | 36 |

Below are the results of testing without replication, one benchmark in 256 threads.

Table 3. Aerospike testing without replication

| R / W | IOPS | <= 1 ms | > 1 ms | > 2 ms | 99.99% lat <= (ms) |
|---|---|---|---|---|---|
| 100/0 | 413,000 | 99% | 1% | 0% | 5 |
| 70/30 | 376,000 | 95% | 5% | 2% | 7 |
| 50/50 | 360,000 | 92% | 8% | 3% | 8 |
| 30/70 | 326,000 | 93% | 7% | 3% | 8 |
| 0/100 | 260,000 | 94% | 6% | 2% | 5 |

Note that Aerospike with replication performs comparably in throughput, and on read-heavy workloads even better. Latency, however, is higher than in the test without replication.

For comparison, here are the results for RocksDB without replication (RocksDB has no built-in replication), obtained with its own benchmark in 256 threads on 137 billion keys with packing.

Table 4. Testing RocksDB without replication

| R / W | IOPS | <= 1 ms | > 1 ms | > 2 ms | 99.99% lat <= (ms) |
|---|---|---|---|---|---|
| 100/0 | 444,000 | 93% | 7% | 2% | 300 |
| 70/30 | 188,000 | 86% | 14% | 4% | 2000 |
| 50/50 | 107,000 | 75% | 25% | 3% | 1800 |
| 30/70 | 73,000 | 85% | 15% | 0% | 1200 |
| 0/100 | 97,000 | 74% | 26% | 17% | 2500 |

Findings

- RocksDB with packing does not pass the latency tests

- Aerospike with replication almost meets the criteria

- The simplest implementation of direct addressing meets all the criteria for performance and latency.

P.S. Limitations

Note the important parameters that remained outside the scope of this study:

- File system (FS) and its settings

- Virtual memory settings

- DB settings.

1. FS and its settings

- There is an opinion that, for example, F2FS or XFS is better suited to RocksDB

- The choice of file system also depends on which drives are used in the system.

- File system page (block) size

2. Virtual memory settings

- You can read about them here.

3. DB settings

- MDBX. The developer of this engine recommended trying a different page size in the engine: 2 kilobytes, or the cluster size of the NVMe drive. In general, this parameter matters in other databases too (even in Aerospike).

- WiredTiger. Besides the cache_size parameter, which we changed, there are many other parameters. Read about them here.

- RocksDB.

• You can try Direct IO, i.e. working with the disk while bypassing the page cache. But then you will most likely need to increase the block cache built into RocksDB. More about this here.

• There is information on how to configure RocksDB for NVMe. It uses XFS, by the way.

- Aerospike. It also has many settings, but several hours of tuning them brought no tangible results.

Source: https://habr.com/ru/post/345482/
