Machine learning and chocolates

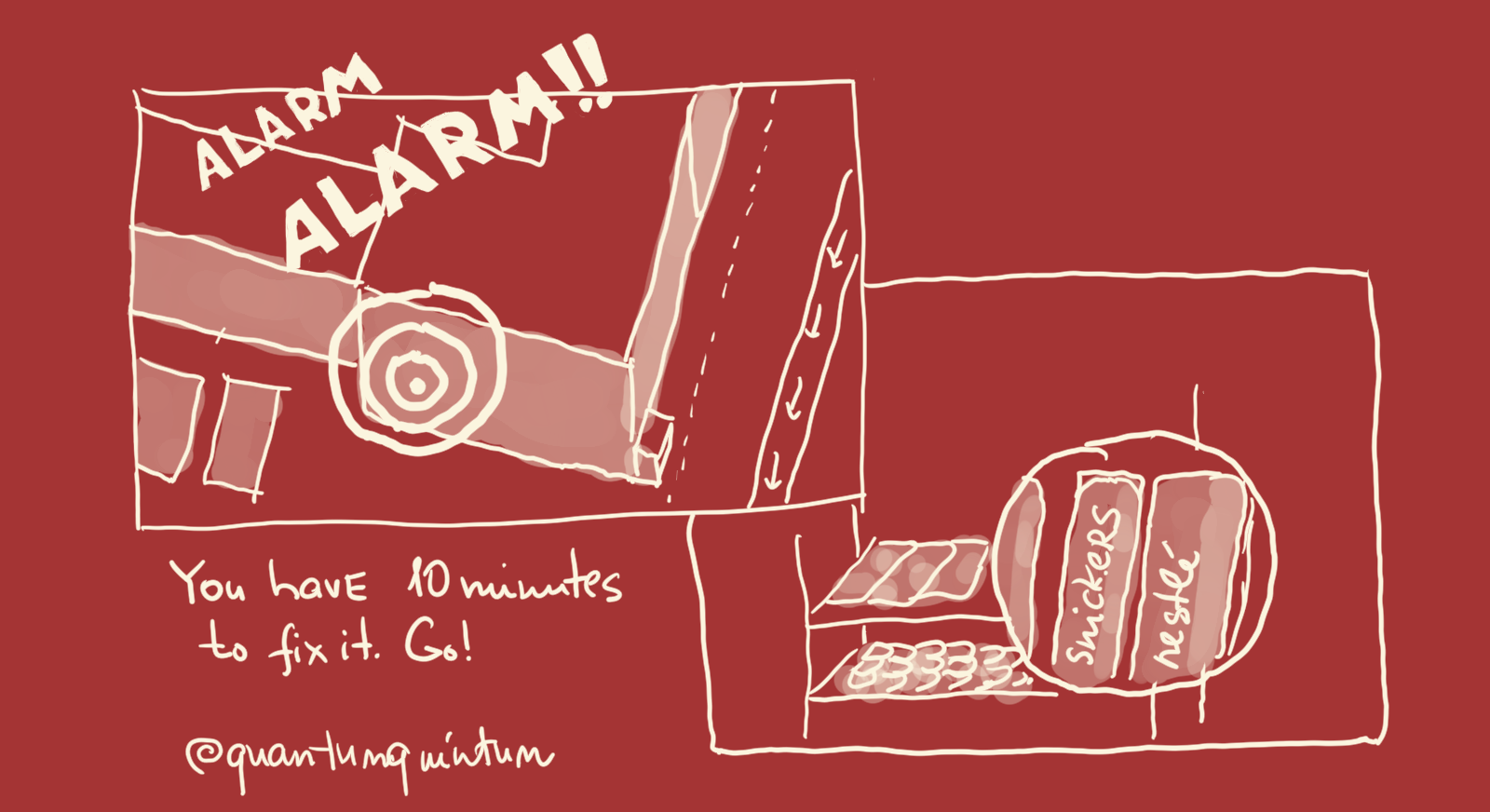

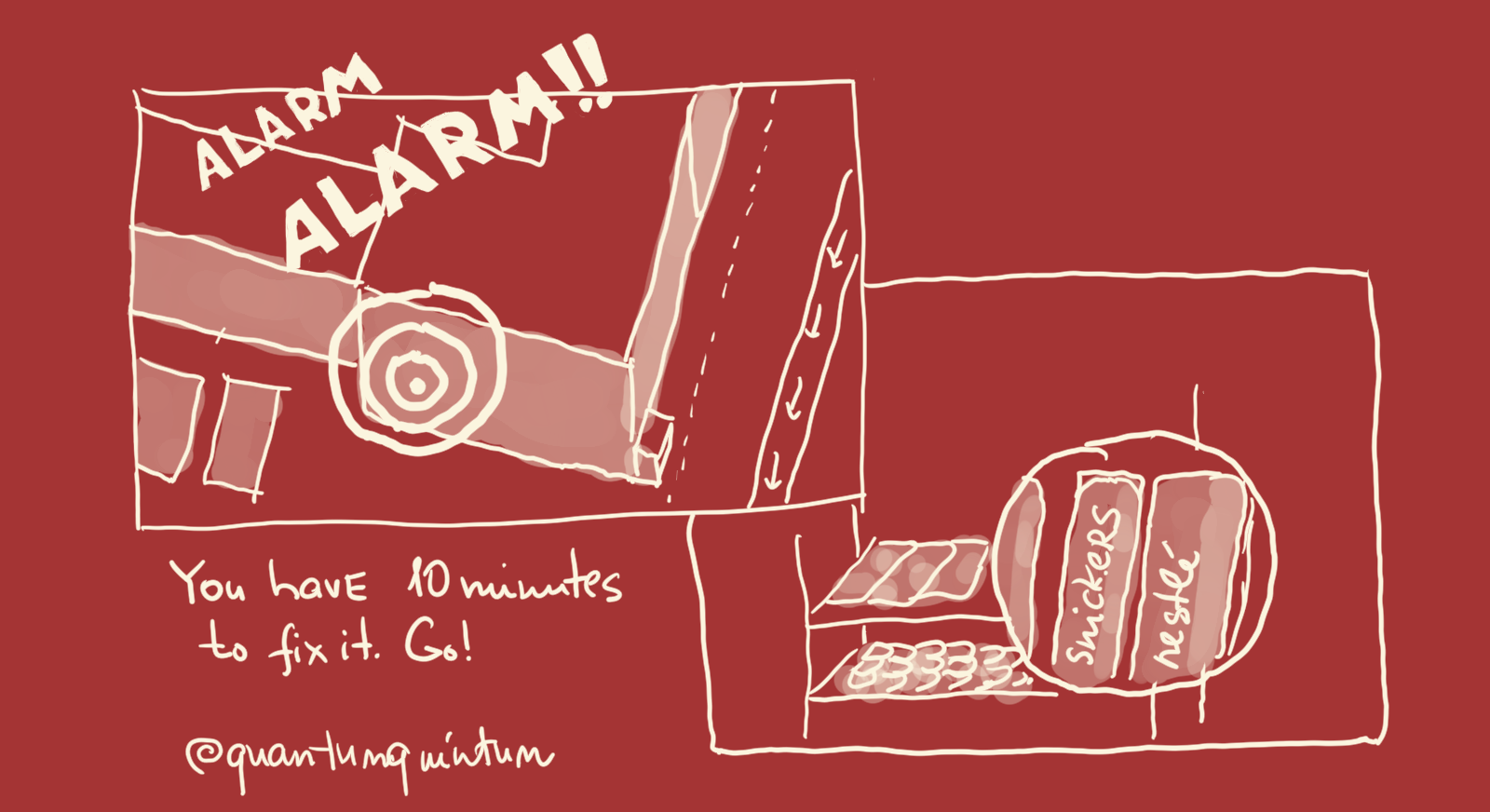

They say merchandisers have an unspoken rule: never place Nesquik and Snickers next to each other. Whether or not that is a myth, technologies for checking how chocolates are stored and displayed in store display cases do exist. In this article, we dive into them and describe a machine learning model designed specifically for this purpose.


Series of Digital Transformation articles

Technology articles:


1. Start.

2. Blockchain in the bank.

3. Teaching a machine to understand human genes.

4. Machine learning and chocolates.

5. Loading...

A series of interviews with Dmitry Zavalishin on DZ Online:

1. Alexander Lozhechkin from Microsoft: Will we need developers in the future?

2. Alexey Kostarev from “Robot Vera”: How to replace HR with a robot?

3. Fedor Ovchinnikov from Dodo Pizza: How to replace a restaurant manager with a robot?

4. Andrei Golub from ELSE Corp Srl: How to stop spending a lot of time on shopping trips?

Situation

The company we worked with has a huge distribution network through supermarket chains in more than fourteen countries. Each distributor must arrange the chocolates on store display shelves according to standard policies. These policies specify which shelf a particular type of candy should be placed on and also set the rules for stocking.

Verifying compliance with these policies is invariably very expensive. SMART Business wanted a system that would let an inspector or store manager look at an image and immediately see how well the goods are arranged on the shelf, as in the image below.

Compliant display (left), non-compliant display (right)

Study

While scoping the problem, we examined several image classification techniques, including the Microsoft Custom Vision Service, transfer learning with CNTK and ResNet, and object detection with CNTK Fast R-CNN. Although object detection with Fast R-CNN ultimately gave the best result, our research also showed that the approaches differ in complexity and each has its own pros and cons.

Custom Vision Service

Training and using a REST-based service is much easier than training, deploying, and updating a custom computer vision model. That is why the first service we tried was the Microsoft Custom Vision Service, a tool for building custom image classifiers and continuously improving them. To train the model, we used a sample of 882 images showing individual shelves of chocolate candy displays (505 with compliant displays and 377 with non-compliant ones).

Training produced a reasonably good baseline model in the Custom Vision Service, with the following performance metrics:

To supplement these figures and obtain a reliable baseline, we also tested the model on a held-out set of 500 images.
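A held-out set is only a fair baseline if it keeps roughly the same class balance as the full data. The sketch below shows one way to carve out such a set with a stratified split. It is an illustration under our own assumptions (the function name, the toy data, and the rounding rule are hypothetical), not the procedure used in the project.

```python
import random
from collections import defaultdict

def stratified_holdout(items, labels, holdout_size, seed=0):
    """Hypothetical helper: split (item, label) pairs into train and held-out
    sets so each class keeps roughly its share of the full data set."""
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)
    rng = random.Random(seed)  # fixed seed makes the split reproducible
    total = len(items)
    train, holdout = [], []
    for label, members in sorted(by_class.items()):
        rng.shuffle(members)
        # Number of this class's items to hold out, proportional to its share
        k = round(holdout_size * len(members) / total)
        holdout += [(m, label) for m in members[:k]]
        train += [(m, label) for m in members[k:]]
    return train, holdout

# Toy usage: 600 compliant and 400 non-compliant image IDs
ids = list(range(1000))
tags = ["compliant"] * 600 + ["non-compliant"] * 400
train, holdout = stratified_holdout(ids, tags, holdout_size=100)
print(len(train), len(holdout))
```

Because of rounding, the held-out set can differ from `holdout_size` by one item per class.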

For more on benchmarking a Custom Vision Service model and using it in production, see our earlier code example, Food Classification Using the Custom Vision Service. Standard classification metrics are explained in detail in the Metrics section of a guide to evaluating machine learning algorithms in Python.

| Class | Precision | Recall | F1 score | Support |
|---|---|---|---|---|
| Does not meet requirements | 0.71 | 0.74 | 0.72 | 170 |
| Meets requirements | 0.87 | 0.85 | 0.86 | 353 |
| Weighted avg / total | 0.82 | 0.81 | 0.82 | 523 |

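Confusion matrix (rows: actual class, columns: predicted class)

| Actual \ Predicted | Does not meet requirements | Meets requirements |
|---|---|---|
| Does not meet requirements | 125 | 45 |
| Meets requirements | 52 | 301 |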
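The metrics table can be derived directly from the confusion matrix. The sketch below assumes rows are actual classes and columns are predicted classes (the row sums, 170 and 353, match the Support column) and that the averages are support-weighted. The function name is our own; this is an illustration, not code from the project.

```python
def metrics_from_confusion(cm, labels):
    """cm[i][j] = number of images of actual class i predicted as class j."""
    n = len(labels)
    total = sum(sum(row) for row in cm)
    report = {}
    for i, label in enumerate(labels):
        tp = cm[i][i]
        support = sum(cm[i])                         # row sum: actual class i
        predicted = sum(cm[r][i] for r in range(n))  # column sum: predicted as class i
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = (precision, recall, f1, support)
    # Support-weighted averages, as in the "Weighted avg / total" row
    avg = tuple(sum(report[l][k] * report[l][3] for l in labels) / total for k in range(3))
    report["Weighted avg / total"] = avg + (total,)
    return report

cm = [[125, 45],
      [52, 301]]
labels = ["Does not meet requirements", "Meets requirements"]
for name, (p, r, f1, s) in metrics_from_confusion(cm, labels).items():
    print(f"{name:28} {p:.2f} {r:.2f} {f1:.2f} {s}")
```

Applied to the ResNet confusion matrix further below, the same function also reproduces that model's table, which is a quick way to check that both tables were read in the same orientation.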

Although the Custom Vision Service performed very well in this scenario and proved to be a powerful image classification tool, it also revealed several limitations that hinder its effective use in production.

The Custom Vision Service documentation describes these limitations best:

The methods used by the Custom Vision Service are robust to differences, which lets you start prototyping with very little data. In theory, very few images are needed to create a classifier: 30 images per class is enough to start a prototype. However, this also means that the Custom Vision Service is usually not well suited to scenarios that require detecting very subtle differences.

The Custom Vision Service performed very well once we restricted the task to a single policy and to individual shelves of chocolate displays. However, the service's limit of 1,000 training images kept us from fine-tuning the model to handle some borderline cases within that policy.

For example, the Custom Vision Service was good at detecting gross policy violations, which made up the majority of our data set, such as the following cases:

However, the service consistently failed to recognize subtler but systematic violations where the difference came down to a single candy, as on the first shelf in this image:

To overcome these limitations, we considered training several models and combining their results with a majority-vote classifier. Although this would certainly have improved the results and might have made the approach usable in other scenarios, it would also have increased API costs and execution time. Moreover, the approach still could not scale beyond one or two policies, because the Custom Vision Service limits each account to no more than nineteen models.
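The majority-vote idea can be sketched in a few lines. Here each "model" is a hypothetical callable standing in for one call to a separately trained classifier; in the setup described above, every such call would be a separate API request, which is where the extra cost and latency come from. This is an illustration, not the ensemble the team evaluated.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted by the most models.

    Ties go to the label encountered first, because Counter.most_common
    orders equal counts by first appearance."""
    return Counter(predictions).most_common(1)[0][0]

def ensemble_predict(models, image):
    # One prediction (one API call, in a hosted setup) per model per image
    return majority_vote([model(image) for model in models])

# Hypothetical stand-ins for three separately trained classifiers
models = [
    lambda img: "compliant",
    lambda img: "non-compliant",
    lambda img: "compliant",
]
print(ensemble_predict(models, image=None))  # compliant
```

With N models, classifying M images costs N × M predictions, so the accuracy gain has to be weighed against that multiplier.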

Transfer Learning with CNTK and ResNet

To get around the data set limitations of the Custom Vision Service, we built an image recognition model with CNTK and ResNet transfer learning, following the instructions in this tutorial. ResNet is a deep convolutional neural network (CNN) architecture developed by Microsoft for the 2015 ImageNet competition.

In this case, our training data set contained two sets of 795 images representing compliant and non-compliant displays.

Fig. 3. ResNet CNN applied to an image from ImageNet. The input is an RGB image of a cat; the output is a probability vector whose maximum value corresponds to the label “tabby cat”.

Because we had neither enough data (training from scratch takes tens of thousands of samples) nor enough computing power to train a large CNN from scratch, we took a pretrained ResNet and retrained only its output layer on our training data set.
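The core idea of retraining only the output layer is that the pretrained network acts as a fixed feature extractor and only a new final layer is fitted. The dependency-free sketch below illustrates that idea with a logistic-regression head trained by gradient descent. `frozen_features` is a hypothetical placeholder for ResNet truncated before its output layer, and the 2-D toy features are invented; this is not the CNTK tutorial code.

```python
import math

def frozen_features(image):
    """Hypothetical stand-in for a pretrained ResNet cut before its output layer.
    Its weights are never updated; here it just passes a feature vector through."""
    return list(image)

def train_output_layer(images, labels, epochs=200, lr=0.1):
    """Fit only a new logistic-regression output layer on frozen features."""
    feats = [frozen_features(x) for x in images]  # computed once: the backbone never changes
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # sigmoid probability of class 1
            g = p - y                       # gradient of the log loss w.r.t. z
            w = [wi - lr * g * fi for wi, fi in zip(w, f)]
            b -= lr * g
    return w, b

def predict(w, b, image):
    z = sum(wi * fi for wi, fi in zip(w, frozen_features(image))) + b
    return 1 if z > 0 else 0

# Toy "features": class 1 (compliant) is high on the first axis
images = [[2.0, 0.1], [1.5, 0.3], [0.2, 1.8], [0.1, 2.2]]
labels = [1, 1, 0, 0]
w, b = train_output_layer(images, labels)
print([predict(w, b, x) for x in images])  # [1, 1, 0, 0]
```

The weakness described in this section follows from the design: if the frozen features do not separate the classes (shelves that differ by a single candy, say), no amount of retraining the output layer can fix it.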

Results

We ran the ResNet transfer learning model three times, for 20, 200, and 2,000 epochs. The best result on the test data set came from the 2,000-epoch run.

| Class | Precision | Recall | F1 score | Support |
|---|---|---|---|---|
| Does not meet requirements | 0.38 | 0.96 | 0.54 | 171 |
| Meets requirements | 0.93 | 0.23 | 0.37 | 353 |
| Weighted avg / total | 0.75 | 0.47 | 0.43 | 524 |

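Confusion matrix (rows: actual class, columns: predicted class)

| Actual \ Predicted | Does not meet requirements | Meets requirements |
|---|---|---|
| Does not meet requirements | 165 | 6 |
| Meets requirements | 272 | 81 |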

As the results show, transfer learning performed significantly worse than the Custom Vision Service.

Transfer learning with ResNet is nevertheless a powerful tool for training image recognition systems on limited data sets. However, when the model is applied to new images that differ too much from the original 1,000 ImageNet classes, it cannot build new representative features from the abstract features “learned” on the ImageNet training set.

Findings

We saw promising results classifying individual policies with image recognition techniques such as the Custom Vision Service. However, given the large number of brands and the many ways they can be arranged on the shelves, it was impossible to determine policy compliance from images alone with a standard image recognition pipeline and the data available.

Given the complexity of the problems that surfaced during testing, and SMART Business's wish to build a model for each new policy as quickly and easily as possible with standard image recognition methods, we decided to take a more creative approach.

Solution

Object Detection and Fast R-CNN

To make policy classification more efficient without reducing classification accuracy, we decided to use object detection with Fast R-CNN and AlexNet to detect images of shelves whose displays meet the requirements. If every shelf in an image is detected as compliant, the entire display is considered compliant. This way we could not only classify images but also reuse previously detected shelves to compose new custom policies. We chose Fast R-CNN over alternatives such as Faster R-CNN because its implementation and evaluation pipeline had already proven itself in CNTK (see the Object Detection Using CNTK section).
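The shelf-level heuristic can be sketched as a small rule applied on top of the detector's output. The `Detection` structure, the `"compliant_shelf"` label, the 0.5 confidence threshold, and the "policy as required label counts" representation are all hypothetical choices for illustration; the source does not specify how the team encoded its policies.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Detection:
    label: str    # detector class, e.g. "compliant_shelf" (hypothetical label)
    score: float  # detector confidence in [0, 1]
    box: tuple    # (x1, y1, x2, y2) in pixels

def satisfies_policy(detections, required, min_score=0.5):
    """Return True if the confident detections cover every requirement.

    `required` maps a shelf-layout label to how many such shelves the policy
    demands, so a new policy can be composed from existing shelf detectors
    instead of retraining a whole-image classifier."""
    found = Counter(d.label for d in detections if d.score >= min_score)
    return all(found[label] >= count for label, count in required.items())

# Hypothetical policy: all three shelves must be detected as compliant
three_compliant_shelves = {"compliant_shelf": 3}

detections = [
    Detection("compliant_shelf", 0.93, (10, 20, 600, 180)),
    Detection("compliant_shelf", 0.88, (12, 200, 602, 360)),
    Detection("compliant_shelf", 0.41, (11, 380, 598, 540)),  # below threshold
]
print(satisfies_policy(detections, three_compliant_shelves))  # False
```

Keeping the rule separate from the detector is what makes the approach modular: changing a policy means changing `required`, not relabeling and retraining on whole images.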

First, we used a new image annotation tool, the Visual Object Tagging Tool (VoTT), to tag compliant shelf layouts in a larger data set (2,600 images). For instructions on tagging an image directory with VoTT, see Tagging an Image Directory.

Note that the layout on all three shelves meets the requirements, so the image shows a compliant display.

By tuning the region-of-interest (ROI) filtering ratios and the number and minimum size of the ROIs, we obtained a high-quality result on the existing data set.
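To make the tuning knobs concrete, here is a minimal sketch of ROI filtering: candidate region proposals are dropped if they are too small relative to the image or too elongated, and the remainder is capped at a maximum count. The parameter names and default values are hypothetical and only mirror the kinds of settings mentioned above; they are not CNTK's actual configuration names.

```python
def filter_rois(rois, img_w, img_h, min_dim_rel=0.05, max_aspect_ratio=4.0, max_rois=200):
    """Drop region proposals that are too small or too elongated, then cap the count.

    rois: candidate boxes as (x1, y1, x2, y2) pixel tuples, ideally pre-sorted
    by proposal score so that the cap keeps the best ones."""
    kept = []
    for x1, y1, x2, y2 in rois:
        w, h = x2 - x1, y2 - y1
        if w <= 0 or h <= 0:
            continue  # degenerate box
        if min(w / img_w, h / img_h) < min_dim_rel:
            continue  # below the minimum size relative to the image
        if max(w / h, h / w) > max_aspect_ratio:
            continue  # aspect ratio outside the allowed range
        kept.append((x1, y1, x2, y2))
        if len(kept) == max_rois:
            break  # enough ROIs for the detector
    return kept

proposals = [(0, 0, 500, 300), (0, 0, 10, 10), (0, 0, 900, 100), (100, 100, 300, 300)]
print(filter_rois(proposals, img_w=1000, img_h=1000))
# [(0, 0, 500, 300), (100, 100, 300, 300)]
```

Raising the minimum size discards tiny proposals, which speeds up detection but also explains why small regions are hard for this family of detectors.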

Results

At first glance, this model's results look much worse than those of the Custom Vision Service solution. However, its modular structure and its ability to generalize to individual, recurring problems encouraged SMART Business to keep exploring more advanced object detection techniques.

Use cases

The table below summarizes the advantages and disadvantages of the complex image classification methods we studied, in order of increasing complexity.

| Technique | Advantages | Disadvantages | Use cases |
|---|---|---|---|
| Custom Vision Service | • Can get started even with small data sets. • No GPU is required. • Already tested images can be re-tagged to improve the model. • The service can be deployed to production with a single click. | • Struggles to detect very subtle differences. • The model cannot be run locally. • The training set is limited to 1,000 images. | • Cloud services such as the Custom Vision Service are well suited to object classification problems with a limited training set. This is the simplest of the available methods. • In our study, the service showed the best results on the existing data set, but it could not scale across several policies or detect subtle recurring violations. |
| CNN / transfer learning | • Reuses the existing layers of a pretrained model, so the model does not have to be trained from scratch. • Simple training: just point the training script at the sorted image directories. • No limit on training set size, and the model can run offline. | • Struggles to classify data whose abstract features differ from those learned on the ImageNet data set. • Training requires a GPU. • Deploying to production is much harder than using a computer vision service. | • Transfer learning from pretrained CNNs (for example, ResNet or Inception) works best on medium-sized data sets whose properties resemble ImageNet categories. Note that with a large data set (at least several tens of thousands of samples), retraining all layers of the model is recommended. • Of all the methods we studied, transfer learning gave the worst result for our complex classification scenario. |
| Object detection with VoTT | • Better at detecting subtle differences between image classes. • Detection regions are modular and can be reused when the criteria for complex classification change. • No limit on training set size, and the model can run offline. | • Every image needs bounding-box annotation (although VoTT greatly simplifies this). • Training requires a GPU. • Deploying to production is much harder than using a computer vision service. • Algorithms such as Fast R-CNN are poor at detecting small regions. | • Combining object detection with heuristic image classification suits scenarios with medium-sized data sets where subtle differences distinguish the image classes. • Of all the techniques we considered, this was the hardest to implement, but it gave the most accurate result on the existing test data set. This is the method SMART Business chose. |

The deep learning ecosystem is evolving rapidly, with fundamentally new algorithms developed and improved every day. After seeing state-of-the-art scores on standard benchmarks, you may be tempted to reach straight for the latest DNN algorithm to solve a classification problem. However, it is just as important, perhaps more so, to evaluate such new technologies in the context of their application. Too often, the novelty of a machine learning algorithm overshadows the importance of a well-designed, balanced methodology.

The techniques we studied with SMART Business span a wide range of classification methods of varying complexity and illustrate the trade-offs to consider when building image classification systems.

Our study shows how important it is to weigh the trade-offs (implementation complexity, scalability, and room for optimization) against data set size, within-class variability, similarity between classes, and performance requirements.

P.S. We thank Kostya Kichinsky (Quantum Quintum) for the illustration for this article.

Source: https://habr.com/ru/post/345452/
