"Instant" frontend. Lecture in Yandex

There are several ways to speed up the delivery of frontend assets to a user's device. Developer Artyom Belov from the Samara office of the Norwegian company Cxense tried the most promising ones: HTTP/2, Server Push, Service Worker, as well as optimization at build time and on the client side. So what should you do to cut application response time to a minimum?

- Hello. Thank you for coming. I want to tell a story about how we managed to make the frontend "instant".

To start, let me introduce myself, to earn at least some trust on such a risky topic. I am a frontend developer at the Norwegian company Cxense. Hardly anyone can pronounce the name correctly. Luckily, seven years after the company was founded, they released a video on how to pronounce Cxense: "C-Sense".

We deliver to people what they want in two areas: advertising and articles. Here the backend manages to deliver data quickly. No wonder: the backenders in the Norwegian office were Linus Torvalds' classmates. But next to them we somehow... The problem arose like this: we cannot deliver the frontend quickly.

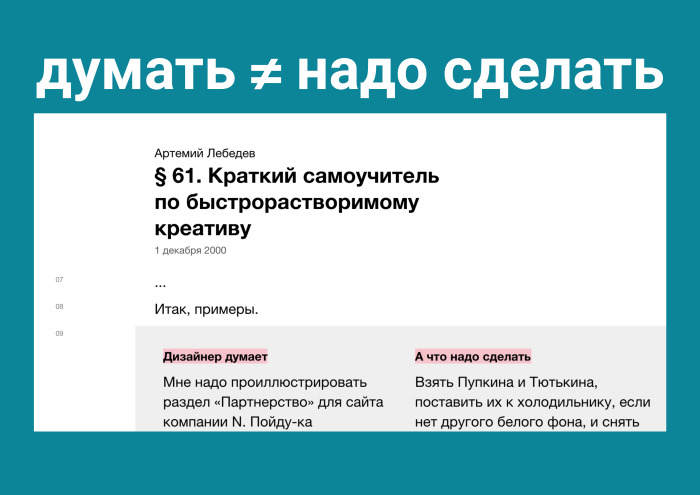

When I heard the debates about how the frontend should be “delivered”, how to load it and how to speed it up, they reminded me of an article by a respected person, section 61: “A quick tutorial on instant creativity” for designers.

It gave examples of how a designer usually thinks and how it should really be done instead. In the frontend, too, you sometimes need to look further. You may have caught yourself noticing that people sometimes think in entirely the wrong direction and everything comes down to micro-optimization.

Great, we are serious guys too, so we set out on the path of optimization with the following list:

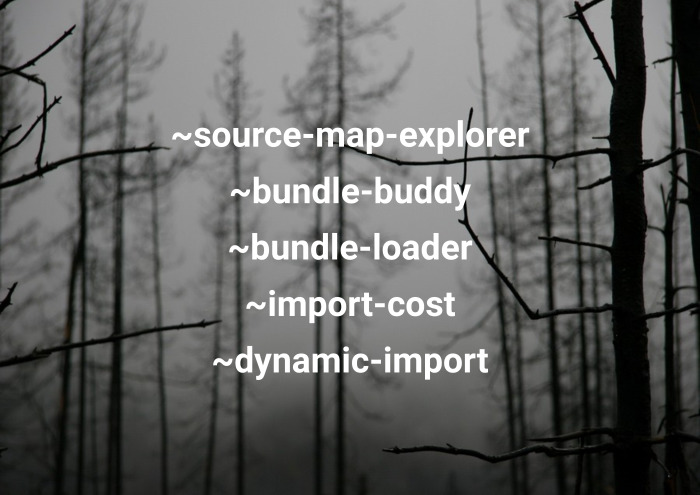

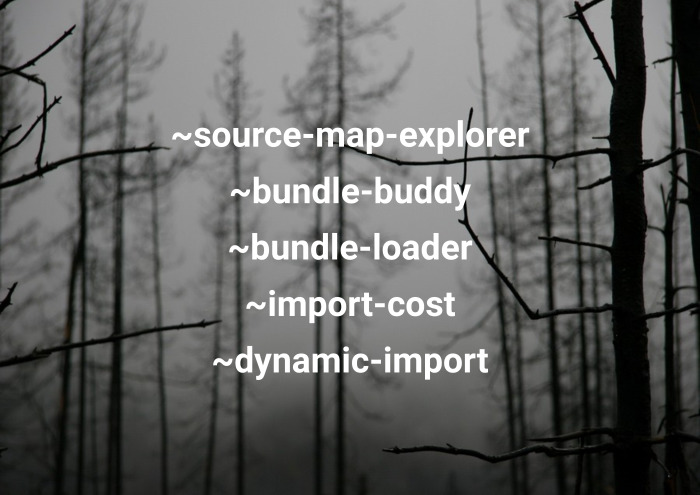

We installed source-map-explorer. We are such hipsters that we use bundle-buddy, released a month ago. We analyzed the bundle and removed duplicates. We realized that the application should not be one bundle: it needs to be split into chunks that are downloaded only when the user goes to the page that needs them. We installed the import-cost extension to see, right in VS Code, how much each dependency we pull in weighs. We set up dynamic imports and found one use for them in the code. But my heart is still heavy, and it is autumn too... And all you want is to go home.

And then come to the office and say the stock phrase: “Guys, help me rewrite the frontend!”.

But no luck. The phrase “let's rewrite it in React” was said two years ago, and there is nothing left to rewrite. This is where my simple story begins.

What will I tell you?

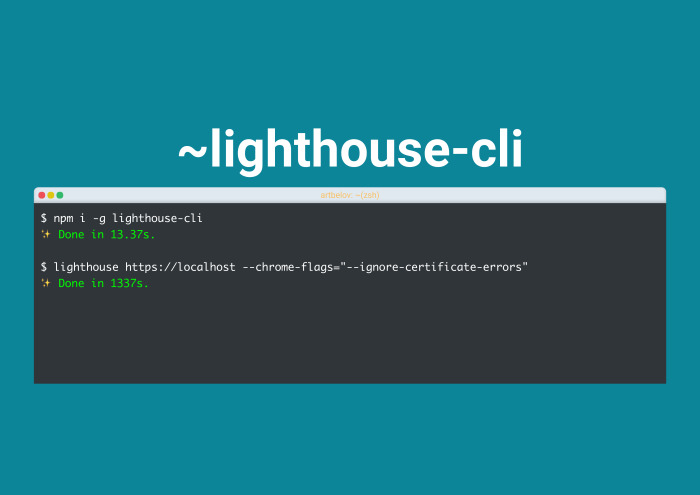

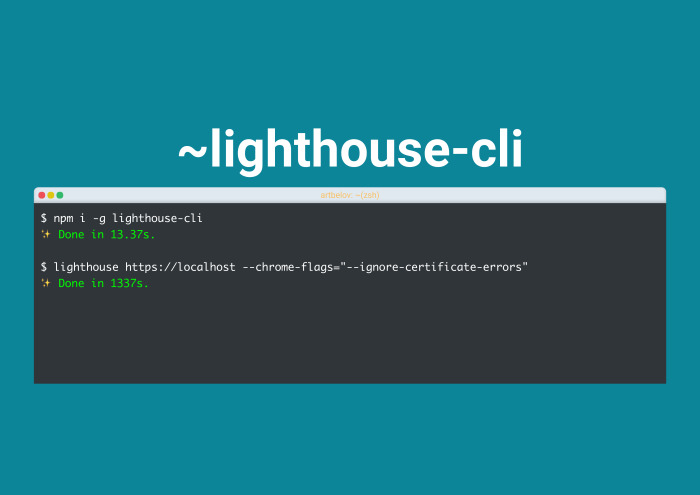

Part of any successful presentation is charts, but there won't be any here. I will use numbers. There is a serious, solid application that has been maturing for two years, and its initial speed after the optimizations mentioned above is taken as 100%. All the results I will talk about were measured with the following CLI tool from a project manager.

It is pwmetrics. It was made by Paul Irish, perhaps the most respected project manager on this planet. It is easy to embed in CI, and I am not afraid of vim: I just reboot the computer...

pwmetrics is very fast and in a matter of seconds gives me four main metrics: time to interactive, when our clicks finally stop being “swallowed”; Google's Speed Index; the first visible paint; and the full paint of the application.

Why not Lighthouse? Because I am as old-school as you are: I used it back when it was slow and not yet built into the Audits tab. Let's go!

It all started when, thanks to aggressive hints from Google, we switched to HTTPS. Of course, tools like certbot made this much nicer: now an SSL certificate costs us $0 instead of $7. But it is still sad, and the theory that HTTPS traffic cannot be inspected is little consolation, because it all slows things down. And indeed, HTTPS slows you down by a very simple formula.

Consider the example of Google. A handshake with Google takes 50 ms, after which another 20 ms is added to each request. I will not show tests from our application, but you can check what numbers you get. You will be upset. In general, it all started with this transition to HTTPS. We need to win time back, because HTTPS slowed us down even more. But the pain ends there, because HTTPS unlocks superpowers. Really: nothing of what I am about to describe works without HTTPS. Thanks, Google.
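The “very simple formula” can be written down as a rough sketch. It uses the two Google figures from the talk and assumes, purely for illustration, that requests go one after another over a single connection; the helper name `httpsOverheadMs` is hypothetical.

```typescript
// Rough model of the extra latency HTTPS adds, using the talk's Google figures.
const HANDSHAKE_MS = 50;   // one-time handshake cost (figure from the talk)
const PER_REQUEST_MS = 20; // extra cost added to each request (figure from the talk)

// Hypothetical helper: total added latency for `requests` sequential requests.
function httpsOverheadMs(requests: number): number {
  if (requests <= 0) return 0; // no requests means no handshake either
  return HANDSHAKE_MS + PER_REQUEST_MS * requests;
}

console.log(httpsOverheadMs(1));  // 70
console.log(httpsOverheadMs(22)); // 490
```

Real browsers issue requests in parallel, so treat the result as an upper bound for intuition, not a measurement.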

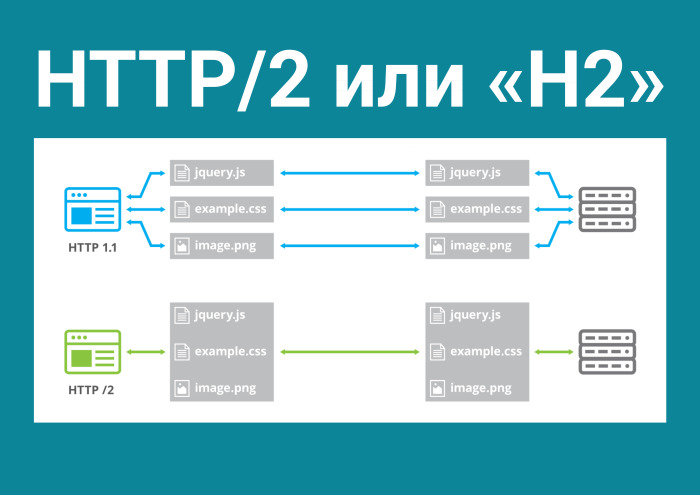

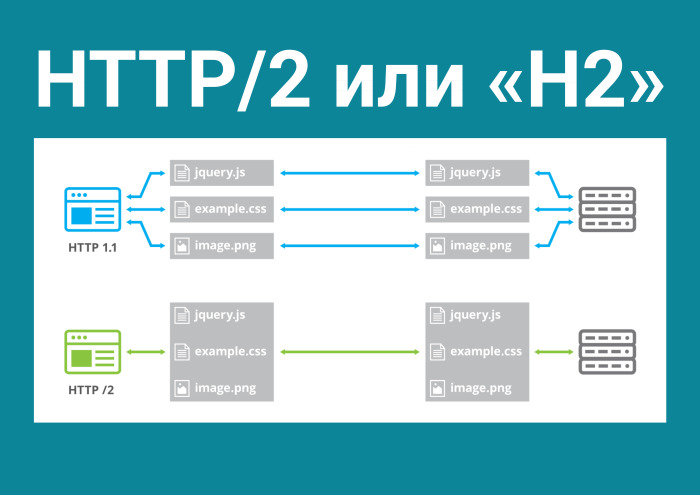

This is HTTP/2, a new binary protocol that solves the most pressing problems of the modern web, where there are a lot of images and requests. In HTTP/1 we have six unidirectional connections: a file is either going out or coming back. HTTP/2 opens just one connection and creates an unlimited number of bidirectional streams in it. And what does this give us?

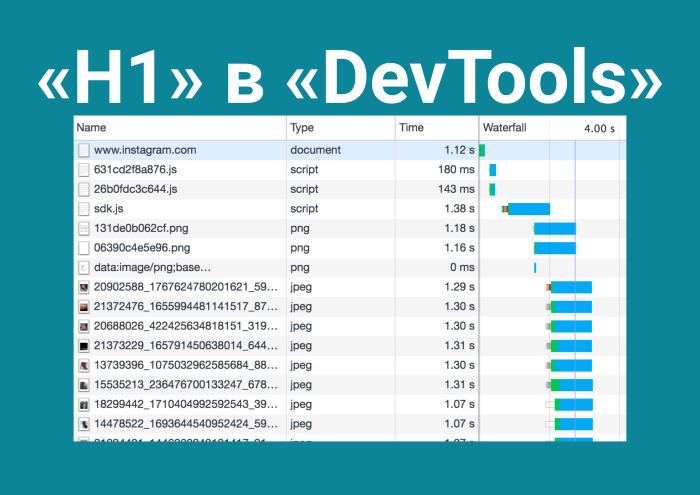

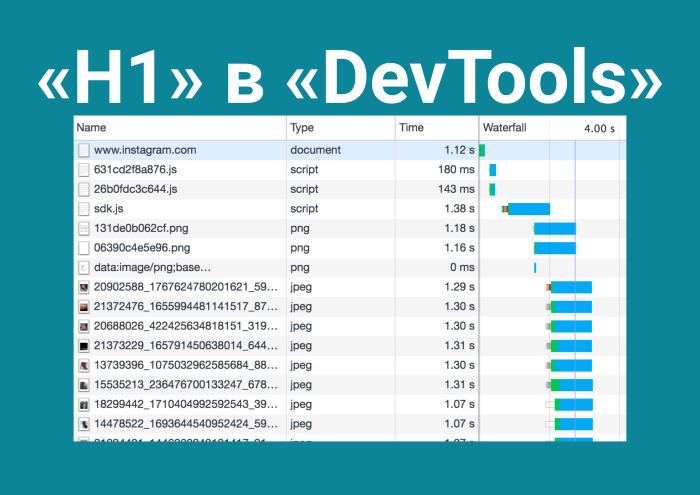

Let me remind you that with HTTP/1 the DevTools waterfall looks like a staircase because of these connection limits, while HTTP/2 loads the application in one go.
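A toy model shows where the staircase comes from. It only counts sequential “waves” of requests and ignores bandwidth, priorities and packet loss; the 22 files and the 600 ms round trip are figures borrowed from elsewhere in the talk, and the function names are made up for the sketch.

```typescript
// Toy model: how many sequential "waves" of requests a page load needs.
const HTTP1_MAX_CONNECTIONS = 6; // connection limit mentioned in the talk

// HTTP/1: each connection carries one file at a time, so files go in batches of six.
function http1Waves(files: number): number {
  return Math.ceil(files / HTTP1_MAX_CONNECTIONS);
}

// HTTP/2: all files are multiplexed as streams over a single connection.
function http2Waves(files: number): number {
  return files > 0 ? 1 : 0;
}

// Estimated time spent waiting on the network, ignoring bandwidth.
function estimateMs(waves: number, roundTripMs: number): number {
  return waves * roundTripMs;
}

const files = 22;
console.log(estimateMs(http1Waves(files), 600)); // 2400
console.log(estimateMs(http2Waves(files), 600)); // 600
```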

And then you watch other people's success stories, you see those phone screenshots with full-strength LTE and Wi-Fi, and you think it is finally time to set up the nginx module so that HTTP/2 is supported for static files. But then you remember what country you are in, and you decide to make the conditions more realistic.

Running brew install simplehttp2server was not that hard. The latency to our most distant client is 600 ms. And how does HTTP/2 do there? Really powerful: twice as good as HTTP/1.

But I'm not banned from Google ...

There are legendary talks. For example, the CTO of some CDN comes out and says: “What they won't tell you about HTTP/2”. You look at the statistics, where green is where HTTP/2 won and red is where HTTP/1 won. “Connection speed”, “latency”: those I understand, but why did HTTP/1 win so many tests in the right-hand column? PLR? You google it and you understand: this is what was being hidden from us, because DevTools has no packet loss rate in the Network tab. It is the share of packets that get lost.

I set packet loss to 2% with average latency, and, well, it somehow didn't work out: HTTP/2 is still faster. You run another test... and the numbers are different. It all comes down to a clean experiment, and I'm afraid few people can run one. You have to get on a minibus with a phone, ride, switch between base stations, realize that your packet loss is dynamic, and take measurements. Did this stop me, given that I am a frontend developer and our target audience does not work on mobile devices? No.

What did I do? There is webpack-merge. Naturally, I made builds for both HTTP/1 and HTTP/2 in one project. That could have been the end of it, were it not for geniuses like Ilya Grigorik, who says: “The patterns you use for HTTP/1 are antipatterns for HTTP/2”.

You can flip that around and find that the same approach also works for HTTP/2. With HTTP/2 you split your whole application into a bunch of files and load them in one go: they come in parallel, so why drag one bundle around? And vice versa. There are many tactics. Perhaps someone loads CSS, JS and the rest asynchronously, but async and defer do not always save you. And we, since in some cases we are distributed as a SaaS solution, use the following scheme: one thing for some customers, another for others. All in all, without breaking anything, I got a gain of 21%. I just added a module for nginx.

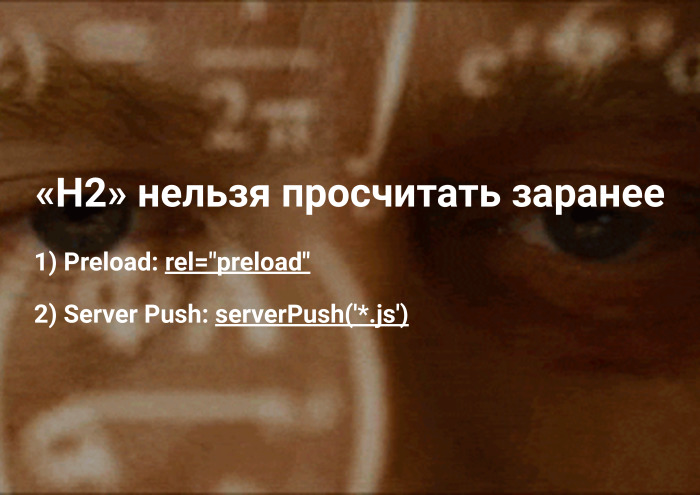

By the way, I lied. With HTTP/2 you cannot calculate everything in advance. After all, you have all successfully forgotten about preload, which made a splash two years ago. And there is also such a thing as Server Push.

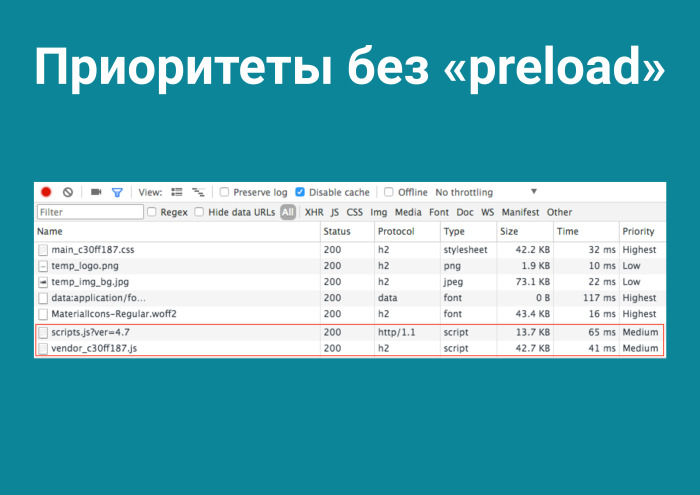

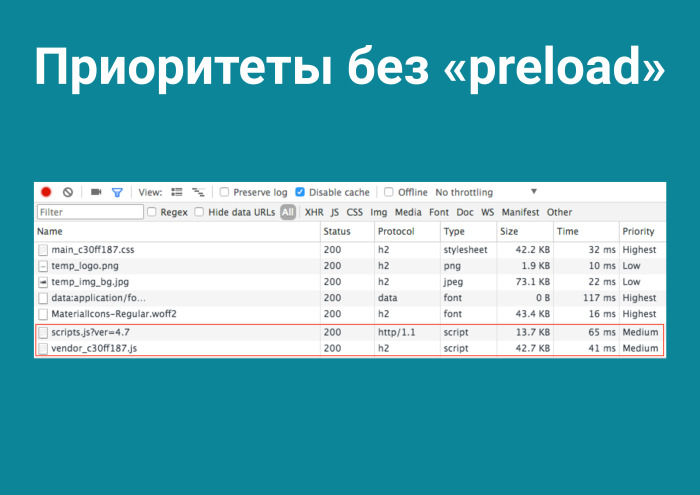

I decided to check them out. First, priorities without preload. Because we place our bundles just before the closing `</body>` tag, they are loaded last, whereas with `<link rel="preload">` you can tell the browser yourself: “My dear, load this first, I am going to need it”. And it speeds up the application load by 0%. But here I lied, because time to interactive went down. The necessary files arrived and were parsed, and the user got the first paint of the application much sooner. And what about HTTP Server Push?
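As an illustration of the preload hint, here is a minimal sketch that generates the tags for a page's `<head>`. The asset paths are hypothetical, and the helper assumes trusted paths that need no HTML escaping.

```typescript
type PreloadAs = "script" | "style" | "font";

interface Asset {
  href: string;
  as: PreloadAs;
}

// Builds a <link rel="preload"> tag for one asset.
function preloadTag(asset: Asset): string {
  // Fonts are fetched in anonymous CORS mode, so preloading them needs `crossorigin`.
  const cors = asset.as === "font" ? " crossorigin" : "";
  return `<link rel="preload" href="${asset.href}" as="${asset.as}"${cors}>`;
}

// Hypothetical critical assets of the application.
const criticalAssets: Asset[] = [
  { href: "/static/main.js", as: "script" },
  { href: "/static/roboto.woff2", as: "font" },
];

console.log(criticalAssets.map(preloadTag).join("\n"));
```

The bundles themselves can stay before `</body>`; the hint only changes when the browser starts fetching them.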

Literally, it is when the server pushes you whatever you want. That is, you open a connection to a server that has Server Push set up; it looks at which address you came to and simply pushes the files to you. The logic of which files to push lives, of course, on the server. It looks like this.

You open a connection to the server, and without any request you receive image.jpg in 4 ms. You all know the company Akamai, because it is the one hosting the photos on Instagram, after all. In its projects Akamai uses Server Push for “hero images”, for the first screen. But I have no raster images in my project, and I think neither do you. I use SVG. So I think: Server Push will probably be interesting to me because there is PRPL.
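The server-side logic of “which files to push for which address” can be sketched as a small manifest lookup. This is an assumption about one possible setup, not the configuration used in the talk: the routes and file names are hypothetical, and the output is a `Link` header with `rel=preload`, which some servers (for example nginx with `http2_push_preload on;`) can turn into pushes.

```typescript
// Hypothetical push manifest: which files the server sends for which route.
const pushManifest: Record<string, string[]> = {
  "/": ["/static/core.js", "/static/core.css"],
  "/reports": ["/static/core.js", "/static/reports.js"],
};

// Guesses the `as` attribute from the file extension.
function assetType(path: string): string {
  if (path.endsWith(".js")) return "script";
  if (path.endsWith(".css")) return "style";
  if (path.endsWith(".jpg") || path.endsWith(".svg")) return "image";
  return "fetch";
}

// Builds a Link header value; returns undefined for routes with nothing to push.
function linkHeaderFor(route: string): string | undefined {
  const files = pushManifest[route];
  if (!files || files.length === 0) return undefined;
  return files.map((f) => `<${f}>; rel=preload; as=${assetType(f)}`).join(", ");
}

console.log(linkHeaderFor("/reports"));
```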

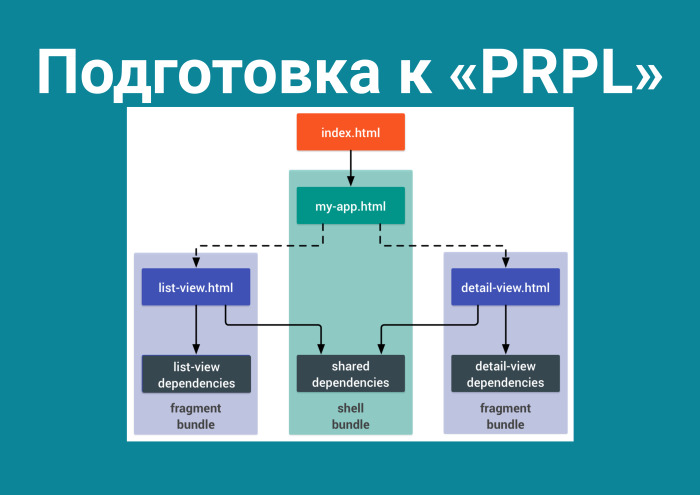

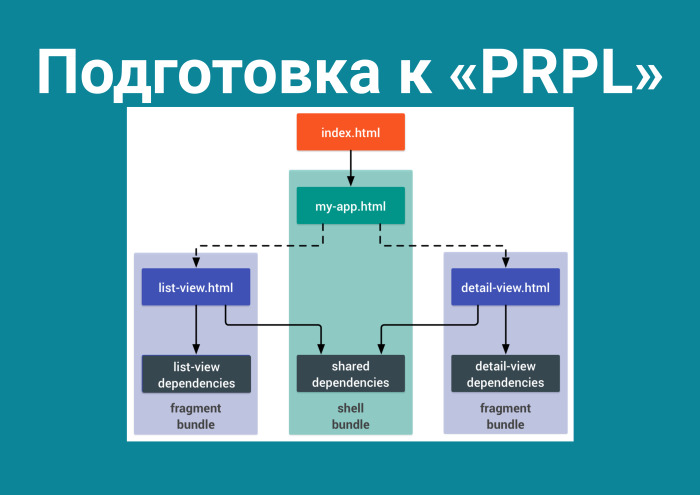

I am watching the Google Dev Summit: PRPL. On the first load the server pushes the files, and for subsequent loads they are stored in the Service Worker. I read up on this pattern, and the preparation is as follows.

If you want to use it, you need to split your bundle into modules: go to one route and get one bundle, go to another route and get another. I set that up.
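The “one route, one bundle” preparation can be sketched as a route table of lazy loaders. The routes and the `render` shape are hypothetical; in a real build each loader would be a dynamic `import()` that the bundler turns into a separate chunk, while here resolved promises stand in for them.

```typescript
interface RouteModule {
  render: () => string;
}

// A loader returns a promise for the route's code, like `() => import("./reports")`.
type Loader = () => Promise<RouteModule>;

// Hypothetical route table; resolved promises stand in for dynamic imports.
const routes: Record<string, Loader> = {
  "/": () => Promise.resolve({ render: () => "home" }),
  "/reports": () => Promise.resolve({ render: () => "reports" }),
};

const loaded = new Map<string, Promise<RouteModule>>();

// Loads a route's bundle on the first visit and reuses it afterwards.
function loadRoute(path: string): Promise<RouteModule> {
  const loader = routes[path];
  if (!loader) return Promise.reject(new Error(`Unknown route: ${path}`));
  let pending = loaded.get(path);
  if (!pending) {
    pending = loader();
    loaded.set(path, pending);
  }
  return pending;
}

loadRoute("/reports").then((page) => console.log(page.render())); // reports
```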

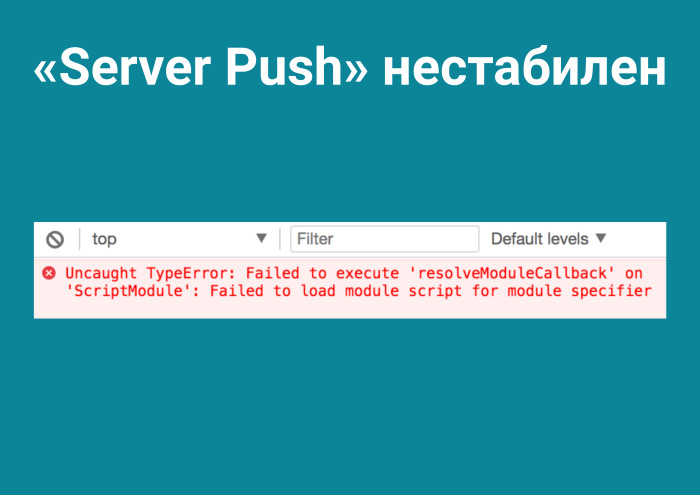

By the way, I really love non-working Node.js examples in articles. You can see at once that the technology is battle-tested. Having set this up, I get a gain of 61%. Not bad, considering that our clients, as the stats show, turn out to be on the latest Chrome. Great! But no such luck. For some reason Server Push is unstable. And it gives such a detailed trace that it is immediately clear where the problem is.

On the whole, this PRPL story is baffling. I expected the problems to be with Service Worker, but here nothing works already at the first stage.

I don't feel like shortening hashes in CSS modules, or cutting glyphs out of fonts, or, say, those dynamic imports... Oh yes, for those we found one use.

And, saddened, you move on to the blogs of successful companies. Here is Dropbox. I look at the headline: "We have reduced our storage by 60% and saved billions of dollars."

It turns out that compression algorithms have been around for a long time. It happened somewhere in June: apparently a meteorite flew over Samara, and the idea of testing the new compression algorithms, Zopfli and Brotli, came both to me and to a guy from a neighboring company. I am more than sure you have read his article; this is Alexander Subbotin. The article took off on Medium, and now he is famous and I am not.

Open-source projects love to show off: “3 kB gzipped”. Well, you could just as well write the Zopfli figure, which is up to 10% smaller, because Zopfli is backward compatible with gzip. What is it? Again, Zopfli is a new compression algorithm that is 2–8% more efficient than gzip. And it is supported in 100% of browsers, exactly like gzip.

And there is Brotli, which is fairly new but already supported in 80% of browsers.

How does it work? On your server there are extensions (for Brotli you install one, for Zopfli it is there by default) that take the files and encode them on the server with a given algorithm. That is, in the case of gzip, it takes main.js and creates main.js.gz.

In the case of Zopfli, it creates exactly the same file, only compressed, in my case, 2% more efficiently.
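To get a feel for what such precompression does, here is a minimal sketch using Node's built-in `zlib` module. It covers gzip and Brotli only: Zopfli is not part of Node and needs a separate tool, though its output is read by any gzip decoder. The repeated string is a stand-in for the contents of main.js.

```typescript
import {
  brotliCompressSync,
  brotliDecompressSync,
  constants,
  gunzipSync,
  gzipSync,
} from "zlib";

// Stand-in for the contents of main.js; a real build would read the file from disk.
const source = Buffer.from("export const answer = 42;\n".repeat(200));

// gzip at the maximum level: what would be written to main.js.gz.
const gz = gzipSync(source, { level: constants.Z_BEST_COMPRESSION });

// Brotli at the maximum quality: what would be written to main.js.br.
const br = brotliCompressSync(source, {
  params: { [constants.BROTLI_PARAM_QUALITY]: constants.BROTLI_MAX_QUALITY },
});

// Both formats are lossless: decompressing gives back the original bytes.
const roundTripOk = gunzipSync(gz).equals(source) && brotliDecompressSync(br).equals(source);

console.log(`original ${source.length} B, gzip ${gz.length} B, brotli ${br.length} B`);
console.log(`round trip ok: ${roundTripOk}`);
```

The sizes depend heavily on the input, so measure on your own bundles before drawing conclusions.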

In the case of Brotli, you install a module for nginx, and if the browser supports Brotli, it gives a truly magical boost. You cannot help rejoicing when there is less frontend to load. And thanks to people like the German font specialist Bram Stein, there is the webfonttools brew formula. It is interesting because it provides two tools. One recompresses WOFF fonts with Zopfli, and in our case it saves... We use Material UI, so we went for Roboto, the whole typeface: it saved 3 KB. The other is for WOFF2: Brotli, apparently, compresses harder there and saves 1 KB. Still nice. And it is just a brew install webfonttools. That is all it costs you.

In our case, the gain is as follows: Zopfli, plus 7%; Brotli, plus 23%.

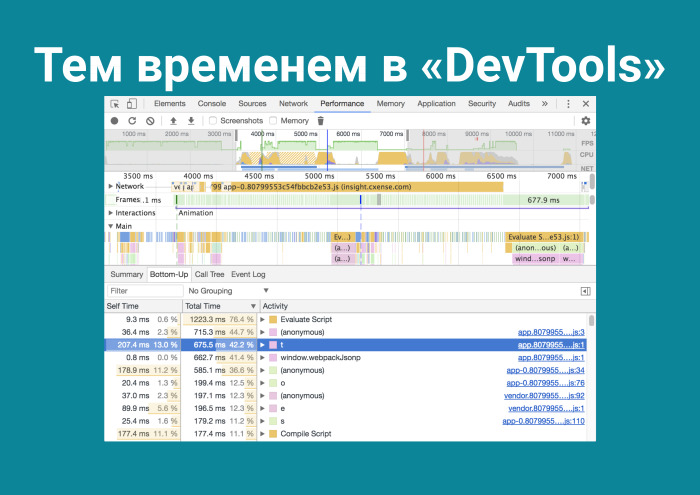

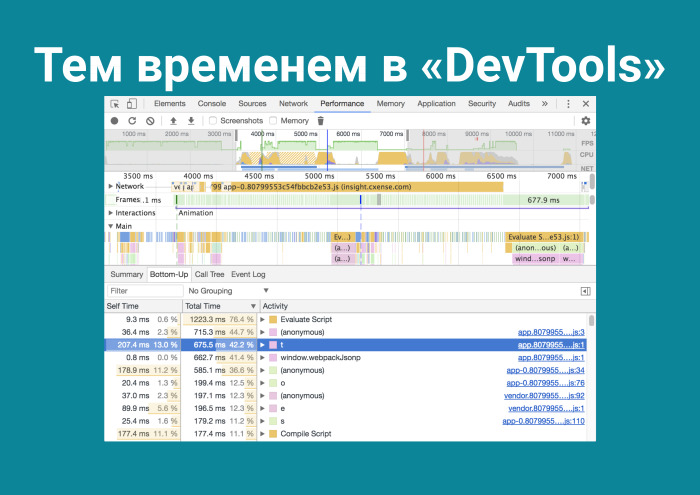

I forgot about the main thing. I judged people who do micro-optimization in JS, but I forgot to look at what was happening in DevTools.

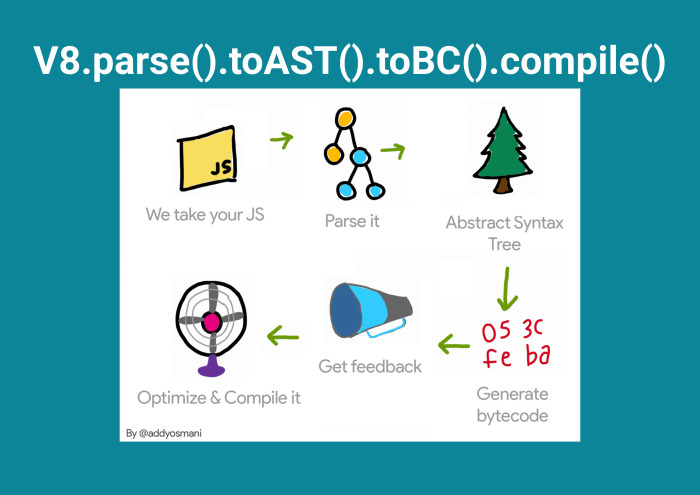

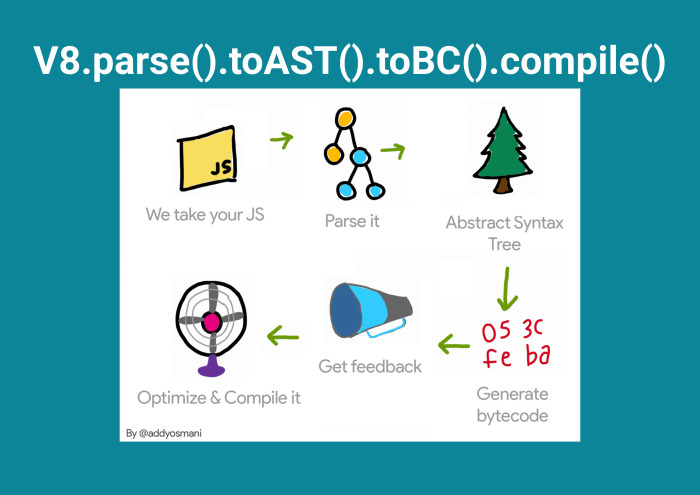

I go there and see that Evaluate Script comes first. In third place is some function t(), but I did not write that; I will check git blame. And then Compile Script. They take a lot of time. How do you reduce it? Why, after I have built the frontend, must the client still parse this code? Because that is how V8 works.

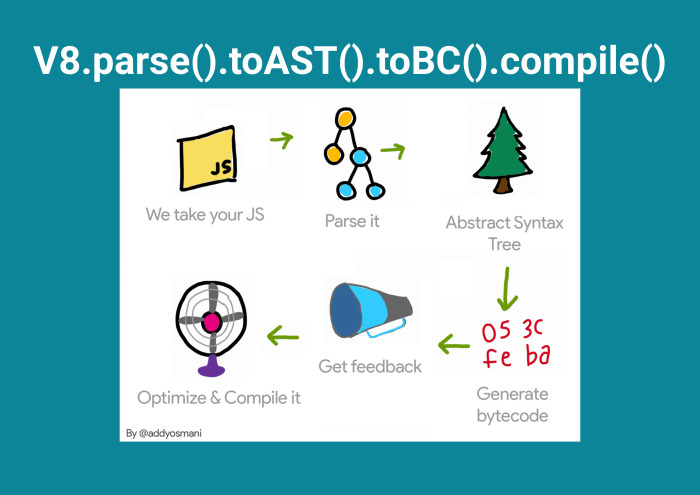

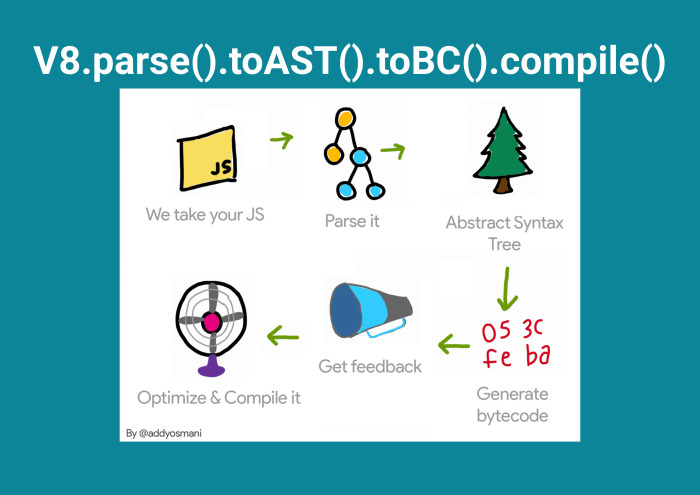

Thanks to people like Addy Osmani, we know that JS arrives as text, is then parsed and turned into an Abstract Syntax Tree, after which bytecode is generated and optimized.

And here I recall that everything new is well-forgotten old.

Take optimize-js: it takes the code and wraps immediately invoked functions in parentheses. I measure with console.time(), and it gives a gain of 0.01 ms. But I guess I just picked the wrong function to measure. I plug in the webpack plugin, and the gain is already 0.1%. In general, the readme uses... never mind. Chrome 62 came out, and the release notes said that V8 already handles this well. So this trick with optimize-js will be less and less effective every day. But you only have to open Twitter to find out that there is such a thing as prepack.
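The transformation itself is tiny. The sketch below shows the kind of rewrite optimize-js performs, by hand and on a made-up function; the real tool works on bundled output, and whether the hint helps depends on the engine version, as noted above.

```typescript
// Before: a function expression that is called immediately but not parenthesized.
// An engine that parses lazily may skim the body first and parse it again on the call.
const before = function (): number {
  return 6 * 7;
}();

// After: the same function wrapped in parentheses, a hint that it runs right away
// and is worth parsing eagerly, once.
const after = (function (): number {
  return 6 * 7;
})();

console.log(before === after); // true
```

The behavior of the program does not change; only the parsing strategy the engine is nudged towards does.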

Because it is from Facebook. On the whole it looks cool. Take the Fibonacci case... Yes, not bad: initializing a variable obviously takes less time.

You see that computing the Fibonacci number takes 0.5 ms, while a variable in memory takes 0.02 ms. You plug in prepack, and everything crashes; you turn off source maps, and everything crashes; you turn off Math.random(), and everything... OK. You dig into the documentation and turn off everything you can, because Math.random gets evaluated and the result is not pretty. Then you realize that your bundle has grown, all because of a single for loop with a hundred iterations that generates the string names of our modules. You turn that off too. And it gives a gain of 1%.

I should have become a backender...
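The Fibonacci case can be illustrated without the tool itself. The sketch below contrasts code that does the work on every page load with roughly what a build-time evaluator would leave behind; the second half is written by hand here and is not actual prepack output.

```typescript
// What ships without build-time evaluation: the loop runs on every page load.
function fibonacci(n: number): number {
  let [a, b] = [0, 1];
  for (let i = 0; i < n; i++) {
    [a, b] = [b, a + b];
  }
  return a;
}
const computedAtRuntime = fibonacci(30);

// Roughly what is left after build-time evaluation: just the constant.
const precomputed = 832040;

console.log(computedAtRuntime === precomputed); // true
```

Anything that depends on the runtime environment, such as Math.random(), cannot be folded into a constant this way, which is one reason it gets in the way.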

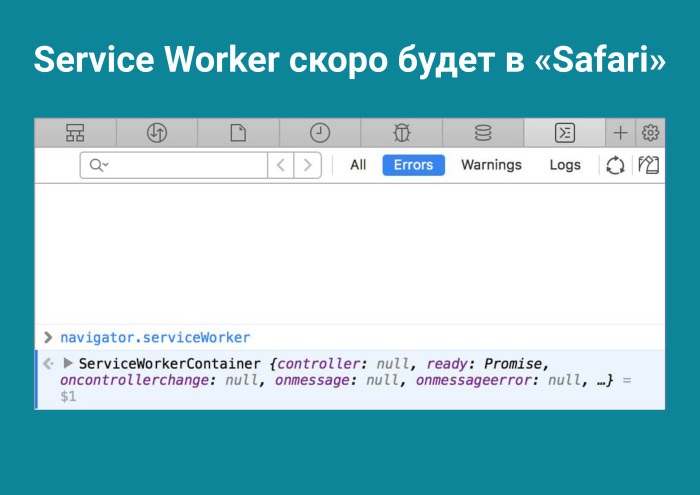

But in fact, this is the future. It is just arriving, apparently, not today. With optimize-js the trend is clear: its benefit is shrinking; with prepack it is growing. And I think the guys will succeed. The ones already in luck are those who build PWAs, Progressive Web Apps, which are sold in the Windows Store and for which an APK is generated automatically on Android. They also get offline mode and a native-app feel, but that does not interest me much. And then there is high performance. So what is its secret? The Service Worker. That Safari will never support Service Worker is a joke. Support is coming.

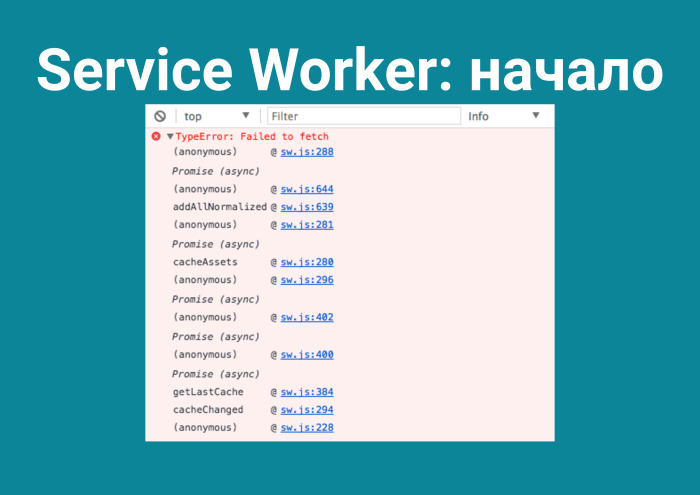

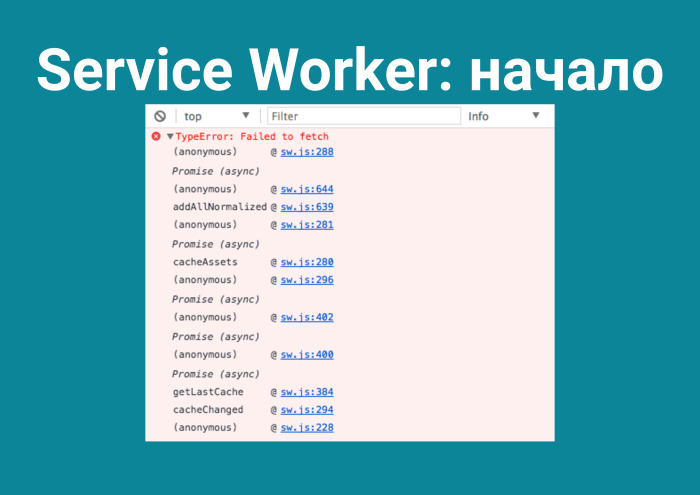

I started digging into this topic and trying it out. After all, why load the frontend twice by requesting it again? A lyrical digression: in our application you can navigate to entirely different routes, and that is a very common case. And it is all very sad: everything is downloaded a hundred times. So I think: caches on the client. Besides, everything is already split up. And here begins my favorite segment in programming: “Because you will not succeed!”

Well, as Public Enemy sang: "Harder than you think, it's a beautiful thing." But why did this happen at all?

You register it with just one line of code.

Because, without understanding what it is or how it works, you rush straight into battle. While it is loading, I'll tell you what it looks like.

I registered sw.js, did something, and everything broke, because I basically did not understand what it was. The Wikipedia definition: Service Worker is a proxy built into your browser, currently supported in 81% of browsers. All it can do is watch what the user sends, intercept requests and, if a request is one it cares about, check whether sw.js has logic for it, and then run that logic.
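For reference, the registration step can be sketched as follows. The helper takes a navigator-like object so that the example also runs outside a browser; the `ServiceWorkerHost` interface and the function name are introduced only for this sketch, while `navigator.serviceWorker.register` is the real browser API it wraps.

```typescript
// Minimal shape of the browser API used here, so the sketch also type-checks outside a browser.
interface ServiceWorkerHost {
  serviceWorker?: { register(scriptUrl: string): Promise<unknown> };
}

// Registers sw.js when Service Worker is supported; resolves to true on success.
async function registerServiceWorker(
  nav: ServiceWorkerHost,
  scriptUrl: string = "/sw.js",
): Promise<boolean> {
  // Unsupported browser: the app still works, just without the proxy.
  if (!nav.serviceWorker) return false;
  try {
    await nav.serviceWorker.register(scriptUrl);
    return true;
  } catch {
    // Registration failed, for example because the page is not served over HTTPS.
    return false;
  }
}

// In a page this would be called as: registerServiceWorker(navigator);
registerServiceWorker({}).then((ok) => console.log(ok)); // false
```

Registration really is about one line; everything that can go wrong lives in what sw.js does afterwards.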

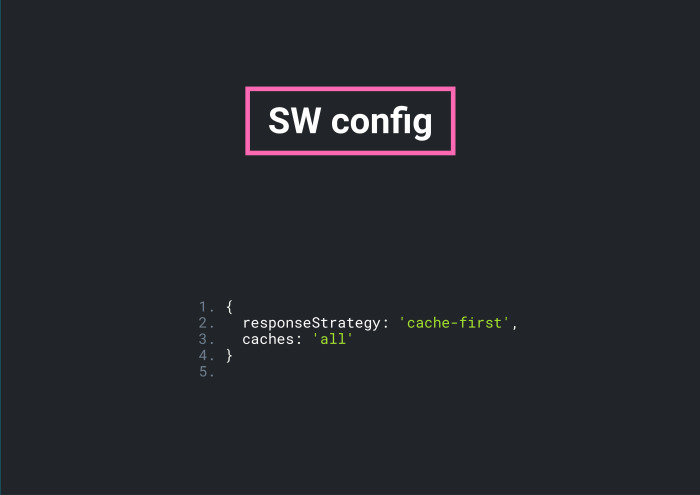

To write this logic, you need to answer two questions.

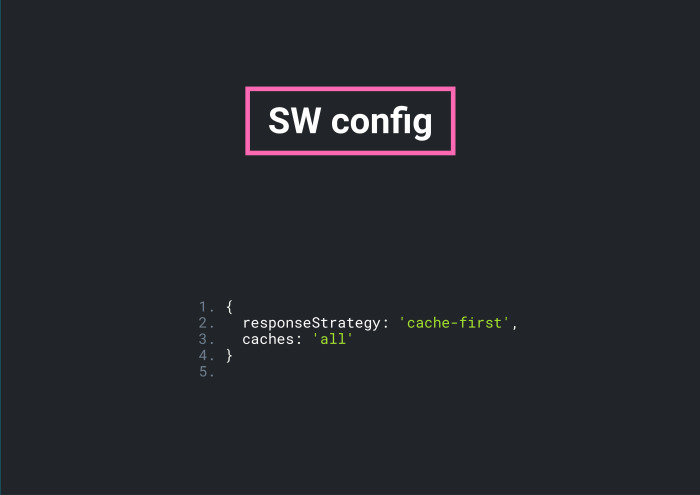

First of all: which caching strategy do you choose? There is cache-first: download, save to the cache, and then serve from there until an update arrives. Or network-first: always hit the server, and take from the cache only if the server did not respond.
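The two strategies can be sketched in a few lines. This is a simplified model, not a real sw.js: a `Map` stands in for the browser's Cache API and a plain function stands in for `fetch`, and all names are chosen for the example.

```typescript
type Fetcher = (url: string) => Promise<string>;

// Cache-first: serve from the cache when possible, hit the network only on a miss.
async function cacheFirst(url: string, cache: Map<string, string>, fetcher: Fetcher): Promise<string> {
  const cached = cache.get(url);
  if (cached !== undefined) return cached;
  const fresh = await fetcher(url);
  cache.set(url, fresh);
  return fresh;
}

// Network-first: always try the server, fall back to the cache only if it fails.
async function networkFirst(url: string, cache: Map<string, string>, fetcher: Fetcher): Promise<string> {
  try {
    const fresh = await fetcher(url);
    cache.set(url, fresh);
    return fresh;
  } catch (error) {
    const cached = cache.get(url);
    if (cached !== undefined) return cached;
    throw error; // nothing cached either, so the failure is passed on
  }
}

// Demo with a fake network that always answers with the same body.
const demoCache = new Map<string, string>();
const fakeNetwork: Fetcher = async (url) => `body of ${url}`;
cacheFirst("/static/core.js", demoCache, fakeNetwork).then((body) => console.log(body));
```

In this model cache-first never refreshes an entry on its own; in practice an update usually arrives as a new file name or a new Service Worker version.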

What to cache? Let's cache everything.

We live in a golden age. As Stefan Judis said: “Just celebrate”. We can take anything, sw-precache or offline-plugin, and we cannot go wrong: they work great. And all these sw.js files are the same 300 lines. In cross-section it looks like this: a map of your files and their hashes; functions for working with caching strategies; functions for working with files and the cache, in case you skipped the lectures and do not know what “normalization” is.

Plus some event listeners and state management. All of that is needed by the PWA folks, whereas I just want the frontend not to be loaded a second time.

So far Service Worker is not involved. The statistics are as follows: 22 requests, 2 MB, finish in 4 seconds. I plug in the Service Worker with the “cache all” setting and get a gain of minus 200%. Something went wrong.

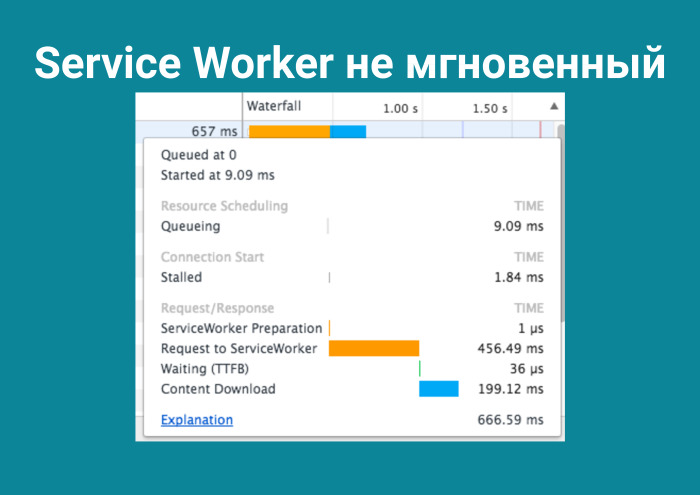

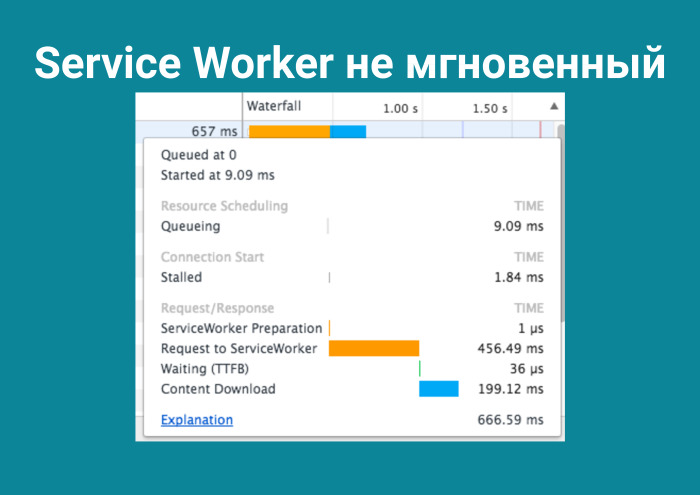

Here is a screenshot: everything is in grids, 65 requests, 16 MB, finish in 7.6 seconds. But I did not lie, the application is great. So why? Service Worker loaded the user to the limit. It is a proxy, and storing something takes it time. Even a single request to it can take half a second to be handled!

To reach a thing that lives in the browser, you need half a second. Not bad. You click on the explanation in DevTools, and it is an instruction on how to read flame charts. Inexplicable but true: when I went into DevTools, I could not find the compile time. Why do you think that is? An attentive listener will remember that V8 works roughly like this.

It so happened that I found out by accident: Service Worker keeps the cache in a prepared form. I see that in the Bottom-Up tab my Evaluate Script has dropped from 1400 ms to 1000 ms and the compile time has halved. That is why I did not “see” it: it had moved down the list.

Why? I took this question to the Internet and, after spending a day there, found nothing. What helped me was an issue on GitHub addressed to the respected Dan Abramov. It went something like this: “Why did you add Service Worker to create-react-app?”, followed by a bunch of comments. And Addy Osmani, in his last paragraph, very modestly gives me the answer: “You know, guys, Service Worker actually keeps the cache in a prepared, already parsed form, so you do not need to compile a second time.” I realize that this is my chance and that nobody around knows about it. But why put that in a press release? Really, it is better to bury it on GitHub, a mere 34 comments from the end.

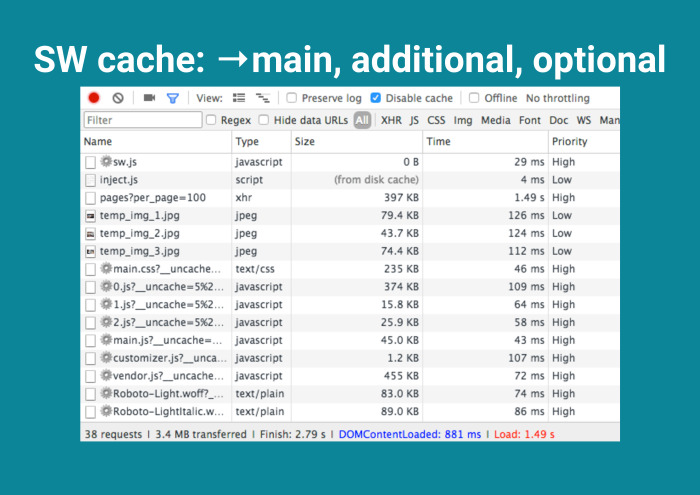

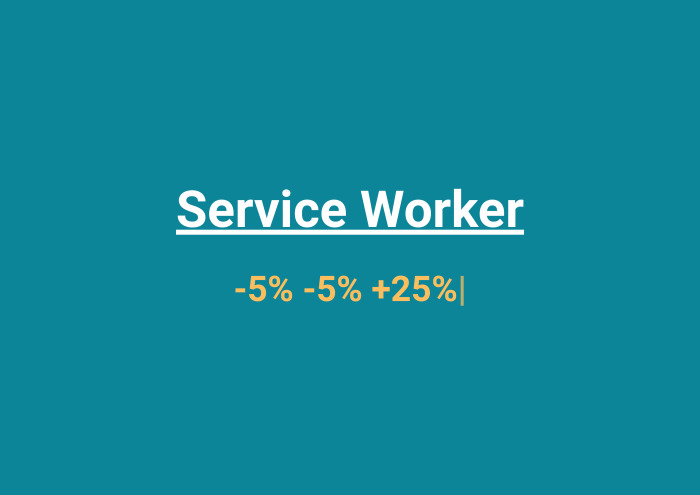

And further, as Pavel Durov said, I separate the essential from the inessential. Instead of caching everything, I do the following: I single out the main part, the core, without which the application will not start. As additional, I single out the fonts: they are heavy, so it is better to keep them on the client. And as optional: Service Worker, just save everything that travels over the network. That will do.
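The three tiers can be expressed as a small classifier that a Service Worker could consult for each request. The file lists are hypothetical; in a real build they would come from the bundler's manifest.

```typescript
type Tier = "core" | "additional" | "optional";

// Hypothetical core files: without them the application will not start.
const CORE_FILES = new Set(["/", "/static/core.js", "/static/core.css"]);

// Fonts are heavy, so they form the "additional" tier.
const FONT_EXTENSIONS = [".woff", ".woff2"];

// Decides how the Service Worker should treat a request path.
function tierFor(path: string): Tier {
  if (CORE_FILES.has(path)) return "core";
  if (FONT_EXTENSIONS.some((ext) => path.endsWith(ext))) return "additional";
  return "optional"; // everything else that travels over the network
}

console.log(tierFor("/static/core.js"));       // core
console.log(tierFor("/static/roboto.woff2"));  // additional
console.log(tierFor("/api/articles?page=2"));  // optional
```

Splitting the cache this way is what keeps the first load from being buried under “cache all”.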

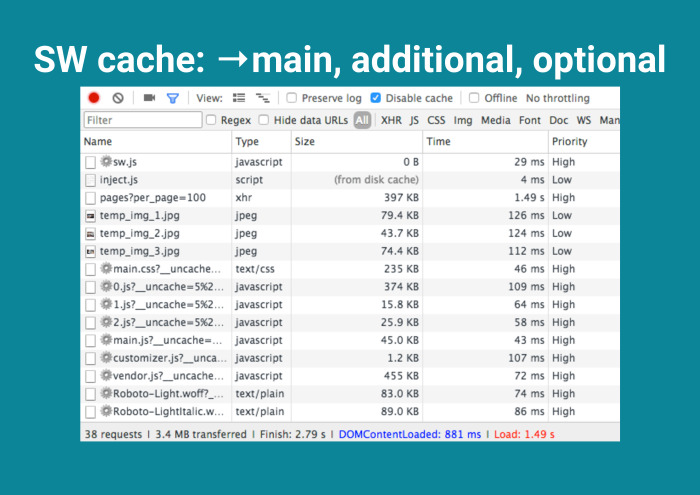

How does this happen? The user's first load.

Service Worker does not load the user much, though FPS sags and traffic grows. Great: the grids appeared next to the vital files. Then, on the next load, comes the additional cache.

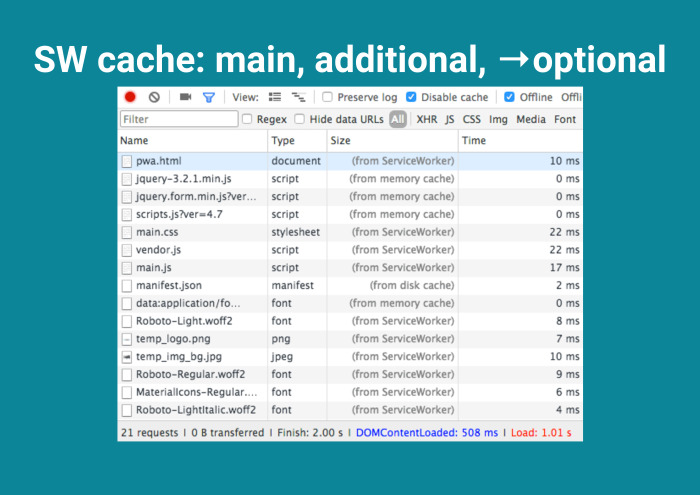

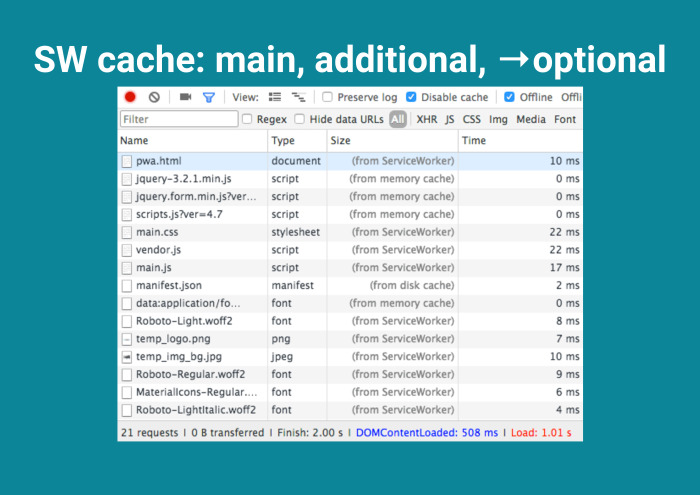

And then the optional one.

And here you reach the final station, because you have not loaded a single byte. By and large, Service Worker takes and takes, and then gives it all back with interest.

Thanks for your attention.

It turns out that compression algorithms have been around for a long time. It happened somewhere in June - apparently, a meteorite flew somewhere over Samara and the idea to test new compression algorithms, Zopfli and Brotli, came to me and a guy from a neighboring company. More than sure, you read his article, this is Alexander Subbotin. The article was sold on Medium, and he is known, but I am not.

- Hello. Thank you for coming. I want to tell a story about how we managed to make the frontend "instant".

For a start, I will introduce myself to at least somehow raise confidence in myself in such a dangerous topic. I am a fender from the Norwegian company Cxense. No one really can pronounce the name correctly. It is good that seven years after the foundation they released a video on how to pronounce Cxense correctly. "C-Sense".

')

We deliver to people what they want in the following sections: advertising and articles. Here the backend gets to deliver data quickly. Not surprisingly, backenders from the Norwegian Linus Torvalds office were a classmate. But we are somehow against them ... The problem originated like this: we cannot deliver the frontend quickly.

When I heard disputes about how the front-end should be “delivered”, how to load and speed it up, this reminded me of a respected person’s article, 61 paragraph - “A quick tutorial on instant creativity” for designers.

There were described examples about how the designer thinks, but in general it should be done differently. And in the frontend sometimes you need to go beyond. You yourself can catch yourself thinking that sometimes people think in a completely different direction and everything depends on micro-optimization.

Great, we are serious guys too and we started the path of optimization according to the following list:

We installed source-map-explorer. We are so hipster that we use bundle-buddy, released a month ago. Analyzed the bundle and removed duplicates. They realized that the application should be one bundle, and it needs to be broken down into chunks, and only downloaded when going to the necessary page. Have you installed the import-cost extension to know? in what size we load the dependencies in VS Code. Established dynamic imports, they found one use in the code. But somehow sad in my heart, and here it is also autumn ... And all you want is to go home.

And then come to the office and say the default phrase: “Guys, help me rewrite the frontend!”.

But here is bad luck. The phrase “let's rewrite to React” was voiced two years ago and there is nothing more to rewrite. From here begins my simple story.

What will I tell you?

Part of any successful presentation is graphics, but they won't be here. I will use numbers. There is a serious, good, two-year exposure of the application, and its initial speed after the previously mentioned optimizations is 100%. All the results that I will talk about were checked by the following CLI tool from the project manager.

Pwmetrics. He was made by Paul Irish - perhaps the most respected project manager on this planet. Considering that it is easily embedded in CI, and I’m not afraid of vim, I’m just rebooting the computer ...

Pwmetrics is very fast and in a matter of seconds gives me four main metrics: the time before the interactive, when finally our clicks are not “swallowed”, Google's speed index, visible prokrasku and full prokrasku applications.

Why not a lighthouse? Because I'm as old as you used it, even when it was long and not built into the audits tab. Go!

It all started with the fact that thanks to aggressive hints from Google, we switched to HTTPS. Of course, it was still very cool filed by such things as certbot. Now we buy an SSL certificate not for $ 7, but for 0. But this is sad, despite the fact that the theory does not reassure that traffic over HTTPS is not checked. After all, it all slows down. And, indeed, HTTPS makes it run on a very simple formula.

Consider the example of Google. Handshake with Google takes 50 ms, after which another 20 ms is added to each request. I will not put tests from our application, but you can see what numbers you have. You will be upset. In general, it all started with this transition to HTTPS. We need to beat time, because he slowed us even more. But the pain ends, because HTTPS opens up superpowers. And really - everything that I will tell, without HTTPS does not work. Thanks google.

This is HTTP / 2, a new binary protocol that solves the most urgent problems in the modern web, where there are a lot of pictures and requests. In HTTP 1 we have six unidirectional connections: either the file goes there, or back. HTTP / 2 opens only one connection and creates an unlimited number of bidirectional streams there. And what does this give us?

I hasten to remind you that HTTP 1 in DevTools waterfall looks stepwise due to these restrictions on connections, while HTTP / 2 loads the application in one iteration.

And then you watch successful stories of people, you see these screenshots of phones, where full LTE and Wi-Fi, and you think that it is time to finally configure the module to nginx, so that HTTP / 2 is supported for static. But you remember what country you are in. And you decide to make the conditions more real.

The brew install command simplehttp2server was not so difficult to do. To our most distant client, the delay is 600 ms. And how does he work there? Really powerful. Twice better than HTTP 1.

But I'm not banned from Google ...

There are legendary presentations. For example, the CTO of some CDN comes out and says, “That they will not tell you about HTTP / 2”. And you look at these statistics, in which green is where HTTP / 2 won, and red is where HTTP won 1. “Internet speed”, “delay” - I understand this, but why did so many tests in the right column win HTTP one? Plr? You googling and you understand: this is what hides from us like that. Because in Devtools in the Network tab there is no packet loss rate. This is the number of packets that will be lost.

I set packet loss 2%, average latency, and yes, somehow it didn't work out. HTTP / 2 is still faster. You do another test ... and here are the other numbers. The whole thing rests on a pure experiment. I'm afraid there are few who can hold it. It is necessary to sit in the minibus with the phone, go, switch between stations, understand that your packet loss is dynamic, and take measurements. Has this stopped me, given that I am in the front end and our target audience does not work on mobile devices? Not.

What I've done? There is a webpack-merge. Of course, I made a build for both HTTP 1 and HTTP / 2 in one project. Everything could have been finished if there were no such geniuses as Ilya Grigorik, who says: “Those patterns that you use for HTTP 1 are antipatterns for HTTP / 2”.

You can do reverse and understand that this also works for HTTP / 2. In HTTP / 2, you hit all your application on a bunch of files and load them into one iteration. Because they run in parallel, because why drag one bundle? And vice versa. There are many tactics. It is possible that someone has asynchronous loading of CSS, JS and others, but async defer does not always save. And we, in some cases extending as a SaaS solution, use the following scheme: for some customers one thing, for others the other. And in general, without breaking anything, I received an increase of 21%. Just added a module for nginx.

By the way, I lied. HTTP / 2 cannot be calculated in advance. After all, you all successfully forgot about the preload, which was fired two years ago. And there is such a thing as Server Push.

I decided to check them out. Priorities without preload, of course. Due to the fact that we place our bundles before the closing <body> tag, they are loaded last, while thanks to <link rel = “preload”> you can tell the browser yourself: “Dear, load it first, I will need it”. And it pumps up the application load by 0%. But here I lied. Because time has decreased to interactive. The necessary files came, parsed, and the user received the first prokrasku applications much faster. And what about HTTP Server Push?

Literally, this is when the server pushes everything for you whatever you want. That is, you open a connection to the server, and Server Push is installed there and it looks at what address you have come to, and then simply pulls you the files. The logic of what kind of files to push, laid, of course, on the server. It looks like this.

You open a connection to the server, and without any request you will receive image.jpg in 4 ms. You are all familiar with the company Akamai, because all the same host photos in Instagram. Akamai in their projects use Server Push for “hero images”, for the first screens. But I do not have a raster in the project - I think, like you. I use SVG. I think: probably, Server Push will be interesting to me due to the fact that there is a PRPL.

Watching the Google Dev Summit. PRPL. The first download occurs, the server pulls files, and subsequent downloads are stored in the Service Worker. I read about this pattern, and the preparation is as follows.

If you want to use it, you need to break your bundle modularly. Go to one route - one bundle, to another route - another. I set it up.

By the way, I really like non-working examples on Node.js in articles. We immediately see that the technology is run-in. By adjusting this, I get a 61% increase. Not bad, considering that our clients, it turns out, are cut by slice on the last Chrome. Great! But it was not there. For some reason, Server Push is unstable. It gives such a detailed trace route that it is immediately clear where the problem is.

In general, this whole story about PRPL is incomprehensible. I thought, with Serivice Worker a problem, and here already at the first stage nothing works.

I do not want to either reduce the hash in the CSS modules, or cut out the glyphs, or here are the dynamic imports ... And, to them, we found one use.

And with sadness you move to the blogs of successful companies. This is Dropbox. I look, the headline is: "We have reduced our storage by 60%, saved billions of dollars."

It turns out that compression algorithms have been around for a long time. It happened somewhere in June - apparently, a meteorite flew somewhere over Samara and the idea to test new compression algorithms, Zopfli and Brotli, came to me and a guy from a neighboring company. More than sure, you read his article , this is Alexander Subbotin. The article was sold on Medium, and he is known, but I am not.

In the open source they love to show off - 3 kB gzipped. In general, you can write: Zopfli squeezes 10% less. Because Zopfli is backward compatible for gzip. What is it? Again, the new Zopfli compression algorithm is 2–8% more efficient than gzip. And it is supported in 100% of browsers, exactly like gzip.

And Brotli, which is not very old, but is supported in 80% of browsers.

How it works? On your server, in particular for Brotli - for Zopfli already by default - there are permissions that take the files and encode them on the server using a certain algorithm. That is, in the case of gzip, it takes main.js and creates main.js.gz.

In the case of Zopfli, he creates everything the same, only compressed - in my case - 2% more efficient.

In the case of Brotli, you install the module for nginx, and if the browser supports Brotli, it gives a truly enchanting boost. It is impossible not to rejoice when there is less frontend to load. And thanks to people like the German font specialist Bram Stein, there is a webfonttools brew formula. It is interesting because it provides two useful tools. One recompresses WOFF fonts with Zopfli and in this case saves ... We use Material UI, so we end up with Roboto, the whole typeface. It saved 3 KB. And WOFF: 2 KB. Brotli, apparently, compresses harder and saves 1 KB. Still nice. It is just a brew install webfonttools. That is all it costs you.

In our case, the gains are as follows: Zopfli, plus 7%; Brotli, plus 23%.
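As a rough illustration of the precompression step, here is a minimal sketch that compresses one payload with gzip and with Brotli using Node's built-in zlib module and prints the sizes. The payload is a made-up stand-in for main.js, and the quality settings are assumptions, not the configuration used in the talk. Zopfli is not part of Node's standard library, so it is left out here.

```javascript
// Sketch: compare gzip and Brotli output sizes for one build artifact.
const zlib = require("zlib");

// Hypothetical stand-in for the contents of main.js; a real build would read the file from disk.
const source = Buffer.from("export const answer = () => 42;\n".repeat(500));

// gzip at the maximum level, comparable to what ends up in main.js.gz.
const gzipped = zlib.gzipSync(source, {
  level: zlib.constants.Z_BEST_COMPRESSION,
});

// Brotli at maximum quality; the size hint tells the encoder the input length.
const brotlied = zlib.brotliCompressSync(source, {
  params: {
    [zlib.constants.BROTLI_PARAM_QUALITY]: zlib.constants.BROTLI_MAX_QUALITY,
    [zlib.constants.BROTLI_PARAM_SIZE_HINT]: source.length,
  },
});

console.log("original:", source.length, "bytes");
console.log("gzip:    ", gzipped.length, "bytes");
console.log("brotli:  ", brotlied.length, "bytes");
```

The exact savings depend heavily on the input, so the numbers printed for this toy payload say nothing about a real bundle.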

But I forgot the main thing. I was judging people who do micro-optimizations in JS, yet I forgot to look at what was happening in DevTools.

I open it and see that Evaluate Script comes first. In third place is some function t(), but I did not write it; I will check git blame. And then Compile Script. They take a lot of time. How do I reduce it? Why, after I have delivered the frontend, must the client still parse this code? Because that is how V8 works.

Thanks to people like Addy Osmani, we understand that JS arrives as text, is then parsed and converted into an abstract syntax tree, after which bytecode is generated and optimized.

And here I remember that everything new is the well-forgotten old.

Optimize-js: it simply takes immediately invoked functions and wraps them in parentheses. I check with console.time(), and it gives a gain of 0.01 ms. But I guess I just got the function code wrong. I plug in the webpack plugin, and the gain is already 0.1%. In general, the readme uses ... never mind. Chrome 62 came out, and the release notes said that V8 is already good at this. So this optimize-js trick will become less and less effective every day. But you only have to go on Twitter to learn that there is such a thing as prepack.

Because it is from Facebook. Overall, it looks cool. Take the Fibonacci case... Yes, not bad, but initializing a variable obviously takes less time.

You see that computing the Fibonacci number takes 0.5 ms and the variable in memory takes 0.02 ms. You plug in prepack and everything crashes; you turn off source maps and everything crashes; you turn off Math.random() and everything still ... OK. You dig into the documentation and turn off everything you can, because Math.random gets evaluated and none of it works out very nicely. Then you realize that your bundle has grown, because there is a for loop over a hundred items that generates the strings for our module names. You turn that off. And it gives a gain of 1%.
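To make the Fibonacci comparison concrete, here is a minimal sketch of the idea behind build-time evaluation: the first form computes the value every time the bundle initializes, the second is the kind of constant a build-time evaluator could emit instead. This is a hand-written illustration under that assumption, not actual prepack output, and the names are hypothetical.

```javascript
// What the source might look like: the value is computed while the bundle initializes.
function fibonacci(n) {
  return n <= 1 ? n : fibonacci(n - 1) + fibonacci(n - 2);
}
const computedAtRuntime = fibonacci(20);

// Roughly what a build-time evaluator could emit instead: only the result,
// so the client just initializes a variable.
const precomputed = 6765;

console.log(computedAtRuntime === precomputed); // true
```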

I should have gone into backend ...

But in fact, this is the future. It just is not arriving today, apparently. With optimize-js it is clear that the benefit is shrinking; with prepack it is growing. And I think the guys will succeed. The ones already succeeding are the people building PWAs, Progressive Web Apps, which are sold in the Windows Store and for which an APK is automatically generated on Android. They also get offline mode and a native-app feel, but that does not particularly interest me. And then there is high performance. So what is the secret? The Service Worker. The claim that Safari will not support Service Worker is a joke. Support is coming.

I started digging into this topic and trying it out. After all, why load the frontend twice, requesting it again? A lyrical digression: in our application you can enter through completely different routes, and this is a very common case. And it is all very sad: everything gets downloaded a hundred times. So I think: cache it on the client. On top of that, everything is already split into chunks. And here begins my favorite segment in programming: "You will not succeed!"

Well, as Public Enemy sang: "Harder than you think it’s a beautiful thing." But why did this happen at all?

You register it with just one line of code.
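For reference, the "one line" is a call to navigator.serviceWorker.register. The sketch below wraps it in a feature check; the wrapper function, its injected navigator parameter and the "/sw.js" path are assumptions made so the example can also run outside a browser.

```javascript
// Registers a Service Worker if the environment supports it.
// `nav` is injected (normally window.navigator) so the sketch also runs outside a browser.
function registerServiceWorker(nav, scriptUrl) {
  if (!nav || !("serviceWorker" in nav)) {
    return null; // unsupported environment: the app keeps working without a worker
  }
  // This is the "one line": navigator.serviceWorker.register("/sw.js")
  return nav.serviceWorker.register(scriptUrl);
}

// In a page this amounts to registerServiceWorker(navigator, "/sw.js").
if (typeof navigator !== "undefined") {
  registerServiceWorker(navigator, "/sw.js");
}
```

Registration returns a promise, and by itself it does nothing useful: all the behavior lives in the worker script.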

Because you rush into battle without understanding what it is or how it works. While this is loading, I will tell you what it looks like.

I registered sw.js, did something, and everything crashed, because I basically did not understand what it is. The Wikipedia definition: a Service Worker is a proxy built into your browser, currently supported in 81% of browsers. All it can do is see what the user sends, intercept requests and, if a request is one it cares about, check whether sw.js contains logic for it, and then execute that logic.

To define this logic, you need to answer two questions.

First: which caching strategy do you choose? There is cache-first: download, save to the cache, and then serve from there until an update arrives. Or network-first: always hit the server and take from the cache only if the server did not respond.

Second: what to cache? Let's cache everything.
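The two strategies named above can be sketched as a pair of small functions. The cache and the fetch function are passed in as parameters, which is an assumption made to keep the example self-contained; the function names are hypothetical as well.

```javascript
// Cache-first: serve from the cache; go to the network only on a miss, then store a copy.
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) return cached;
  const response = await fetchFn(request);
  await cache.put(request, response.clone());
  return response;
}

// Network-first: always ask the server; fall back to the cache only if the request fails.
async function networkFirst(request, cache, fetchFn) {
  try {
    const response = await fetchFn(request);
    await cache.put(request, response.clone());
    return response;
  } catch (error) {
    const cached = await cache.match(request);
    if (cached) return cached;
    throw error; // nothing cached either: surface the original failure
  }
}
```

In a real sw.js the cache would come from caches.open, fetchFn would be the global fetch, and one of these functions would be passed to event.respondWith inside a fetch event listener.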

We live in a golden age. As Stefan Judis said: “Just celebrate”. You can take anything, sw-precache or offline-plugin, and you cannot go wrong. They work great. And all these sw.js files are the same 300 lines. In cross-section it looks like this: a map of your files and hashes; functions for working with caching strategies; functions for working with files and the cache (for those who skipped their lectures and do not know what “normalization” is).

Plus some event listeners and state management. All of that is needed by the PWA guys, whereas I just want the frontend not to be loaded a second time.

Without the Service Worker, the statistics are as follows: 22 requests, 2 MB, finish in 4 seconds. I plug in the Service Worker with a “Cache All” rule and get a gain of minus 200%. Something went wrong.

Here is a screenshot, all in grids: 65 requests, 16 MB, finish in 7.6 seconds. But I did not lie, the application is great. So why? The Service Worker loaded the user's machine to capacity. It is a proxy, and storing something in it takes time. Even a single request to it can take half a second!

Half a second to reach something that lives inside the browser. Not bad. You click the explanation link in DevTools, and it leads to instructions on how to read flame charts. Inexplicable but true: when I went into DevTools, I could not find the compile time. Why do you think that is? An attentive listener will remember that V8 works roughly like this.

As it happened, I found out by accident: the Service Worker keeps its cache in an already prepared form. I see in the Bottom-Up tab that Evaluate Script has dropped from 1400 ms to 1000 ms and the compile time has halved. That is why I did not “see” it: it had moved down the list.

Why? With this question I went to the Internet and, after spending a day there, found nothing. What helped me was a GitHub issue addressed to the respected Dan Abramov. It went something like this: “Why did you add the Service Worker to create-react-app?” And a bunch of comments. And Addy Osmani, in his last paragraph, very modestly gives me the answer: “You know, guys, the Service Worker actually keeps its cache in a prepared, already parsed form, so you do not need to compile a second time.” I understand that this is my chance and that nobody around me knows about this. But why would anyone put that in a press release? Of course, it is better to dig through GitHub; it is only 34 comments from the end.

Next, as Pavel Durov said, I separate the essential from the non-essential. Instead of caching everything, I do the following. I single out the main section: the core, without which the application will not start. I single out the additional section: fonts. They are heavy, so it is better to keep them on the client. And optionally: Service Worker, save everything else that goes over the network. That would be nice.
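The three tiers described here (core, additional, optional) could be expressed as a small classification function that a Service Worker consults before deciding when to cache a file. The file list, the font extensions and the function name below are all hypothetical, chosen only to illustrate the split.

```javascript
// Hypothetical tiers mirroring the split above: core first, fonts second, everything else last.
const CORE_FILES = new Set(["/", "/index.html", "/main.js", "/main.css"]);
const FONT_PATTERN = /\.(woff2?|ttf|otf)$/i;

function cacheTier(pathname) {
  if (CORE_FILES.has(pathname)) return "core"; // the app will not start without these
  if (FONT_PATTERN.test(pathname)) return "additional"; // heavy, worth keeping on the client
  return "optional"; // anything else that goes over the network
}

console.log(cacheTier("/main.js")); // core
console.log(cacheTier("/fonts/roboto.woff2")); // additional
console.log(cacheTier("/api/articles")); // optional
```

Keeping the classification separate from the caching code makes it cheap to move a file from one tier to another as the application changes.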

How does it go? The user's first load.

The Service Worker does not load the machine much, though FPS sags and traffic grows. Great: the grids have appeared next to the vital files. Then, on the next load, the additional cache.

And then optional.

And here you reach the final station, because you have not loaded a single byte. By and large, the Service Worker takes and takes, and then gives it all back with interest.

Thank you for your attention.

Source: https://habr.com/ru/post/345430/
