POWA-like PostgreSQL Monitoring with Prometheus

Prehistory

We used the excellent POWA utility for a long time to collect and easily view data on how PostgreSQL works (overall server performance, slowest queries, most frequent queries). However, this solution was far from ideal and we managed to find a better option, moreover, fully integrated with our main monitoring system.

POWA minimally satisfied most of our needs:

- Easy to install and use (install the extension for Postgres, install the web muzzle, use it);

- It has a web interface (the developers do not have access to the production server, but we want to watch how the database behaves);

- Collects the minimum required for us metrics.

However, there were unpleasant cons:

- It is inconvenient to watch data from multiple servers;

- The interface is not customizable;

- It is not possible to fasten (without pain) centralized authorization;

- There is no way to add your metrics;

- No integration with our monitoring system (we use Prometheus to collect metrics and Grafana to visualize them).

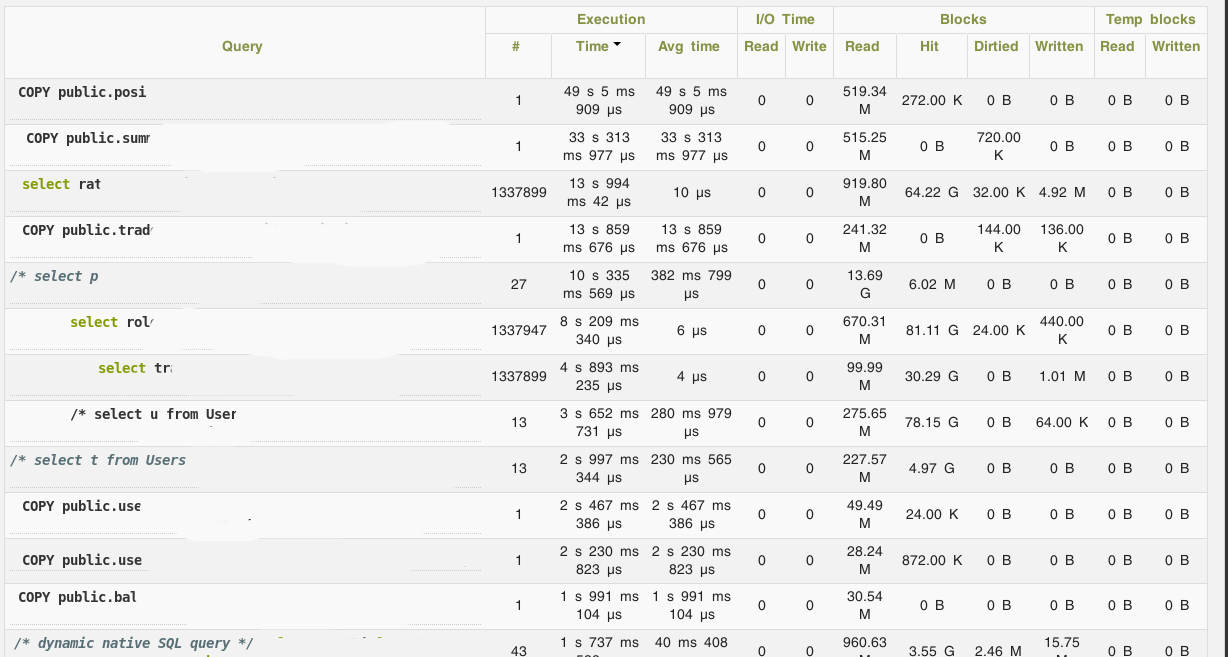

A couple of POWA screenshots for an example:

In this regard, it was decided to try to collect metrics from PostgreSQL using postgres_exporter for Prometheus.

Installation

TL; DR: here is the role for Ansible, was written under CentOS 7, but after minimal edits (replacing firewalld with iptables) should work on any system with systemd - https://github.com/UnitedTraders/ansible-postgresql-exporter

Solution Architecture

I will not talk in detail about Prometheus itself, there is enough material about it, but I will go through the general architecture and the exporter.

Prometheus uses the pull metrics collection model: he has a list of exporters and he polls them over HTTP, collecting a list of metrics from them and putting them into his store.

An exporter is an agent that collects metrics directly from the entity (the server as a whole, or a specific application) that needs to be monitored. Prometheus has rich possibilities for instrumentation, therefore, exporters are available for most popular applications, and it’s easy to write your own if necessary.

postgres_exporter works as follows: it connects to PostgreSQL, performs queries to service tables, and exposes the results in a special format using an internal HTTP server to collect them by Prometheus. An important point: in addition to a large set of default queries, you can define your own and collect any data that can be obtained using SQL, including some business metrics.

Thus, setting postgres_exporter'a boils down to three actions:

- Install postgres_exporter on the server that we want to monitor;

- Write requests (if needed) to monitor your settings;

- Show Prometheus server where to get metrics.

Exporter Installation

The exporter is written in Go, so everything is trivial:

- Download the binary from https://github.com/wrouesnel/postgres_exporter/releases

- We define the environment variable in the env file

DATA_SOURCE_NAME="postgresql://postgres@localhost:5432/?sslmode=disable" - Create a systemd unit, such as this:

[Unit] Description=Prometheus exporter for Postgresql (https://github.com/wrouesnel/postgres_exporter) [Service] WorkingDirectory=/opt/postgres_exporter EnvironmentFile=/opt/postgres_exporter/env ExecStart=/opt/postgres_exporter/postgres_exporter_v0.4.1_linux-amd64/postgres_exporter --extend.query-path=/opt/postgres_exporter/metrics.yaml --web.listen-address=:9187 User=pg_exporter [Install] WantedBy=multi-user.target - Create a file with custom queries for your metrics (see below);

- We start the service.

Customize your metrics

By default, postgres_exporter cannot collect data on requests. But PostgreSQL has a very useful extension, pg_stat_statements , which is exactly what it does. Installing pg_stat_statements comes down to three simple steps:

- Install contrib modules for PostgreSQL;

- Add the post_gresql.conf parameter to

shared_preload_libraries = 'pg_stat_statements'; - Create an extension in the postgrese itself:

CREATE EXTENSION pg_stat_statements.

Since it was important for us to collect the query execution time first, then the file with metrics turned out like this:

pg_database: query: " SELECT pg_database.datname, pg_database_size(pg_database.datname) as size FROM pg_database" metrics: - datname: usage: "LABEL" description: "Name of the database" - size: usage: "GAUGE" description: "Disk space used by the database" pg_stat_statements: query: "SELECT queryid, datname, left(query, 100) as short_query, sum(calls) as calls, sum(total_time) as total_time, min(min_time) as min_time, max(max_time) as max_time, sum(mean_time*calls)/sum(calls) as mean_time FROM pg_stat_statements JOIN pg_database ON pg_stat_statements.dbid = pg_database.oid group by queryid, short_query, datname" metrics: - queryid: usage: "LABEL" description: "Query ID" - datname: usage: "LABEL" description: "Database name" - short_query: usage: "LABEL" description: "Query limited to 100 symbols" - calls: usage: "COUNTER" description: "Number of times executed" - total_time: usage: "COUNTER" description: "Total time spent in the statement, in milliseconds" - min_time: usage: "GAUGE" description: "Minimum time spent in the statement, in milliseconds" - max_time: usage: "GAUGE" description: "Maximum time spent in the statement, in milliseconds" - mean_time: usage: "GAUGE" description: "Mean time spent in the statement, in milliseconds" There is one pitfall here: records from pg_stat_statements need to be aggregated, because In this table there can be several records with the same combination of query id, database id and query. The presence of such records leads to a fall in postgres_exporter, since it forms the names of the metrics based on this data, and they must be unique.

For simplicity, I did not add read / write metrics (shared_blks_written, etc., they are added by analogy). Also, I repeat, similar queries can be made to any table, and not just to pg_stat_statements.

Prometheus Request Examples

With the above config, the exporter will generate a significant number of metrics - 5 pieces for each type of request. We will aggregate them on the side of Prometheus.

Examples of requests (immediately with graphan variables for templating):

sum(rate(pg_stat_statements_mean_time{datname!~"template.*", datname!~"postgres", instance=~"$server"}[1m])) by (instance)- the average time of the request for the last minute across the serversum(rate(pg_stat_statements_mean_time{datname!~"template.*", datname!~"postgres", instance=~"$server", datname=~"$database"}[1m])) by (datname)- average time request for the last minute on the selected databasesum(increase(pg_stat_statements_calls{datname!~"template.*", datname!~"postgres", instance=~"$server", datname=~"$database"}[1m])) by (datname)- number of requests per minute to basetopk(20, increase(pg_stat_statements_calls{instance=~"$server", datname=~"$database"}[10m]))- top-20 of the most frequent database queries in the last 10 minutestopk(20, pg_stat_statements_mean_time{instance=~"$server", datname=~"$database"})- top-20 longest queries to the database

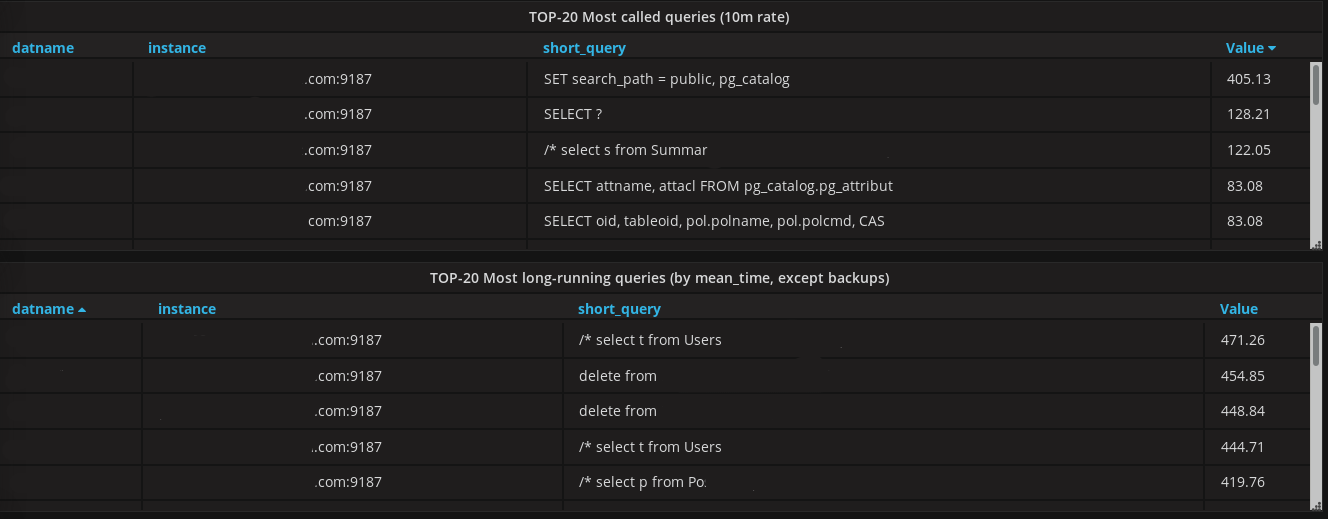

In graphan, it looks like this ( link to json):

')

Source: https://habr.com/ru/post/345370/

All Articles