Rendering clouds on mobile devices

Three years ago, our artist asked me:

- Listen, is it possible to add beautiful clouds to our mobile game?

- No, absolutely impossible. We rotate the camera all the time, so billboards will look very fake even if we add normal maps to them, and the other approaches...

* the artist falls into a lethargic sleep *

For me, there is no greater pleasure than finding out that I was wrong.

Plenty of articles have been written about photorealistic cloud rendering, but if you want to draw clouds on a smartphone, you have to invent a bunch of hacks, simplifications and assumptions.

Below is a detailed description of how to render clouds on mobile, with plenty of HTML5 GIFs.

Collecting the data

We will need:

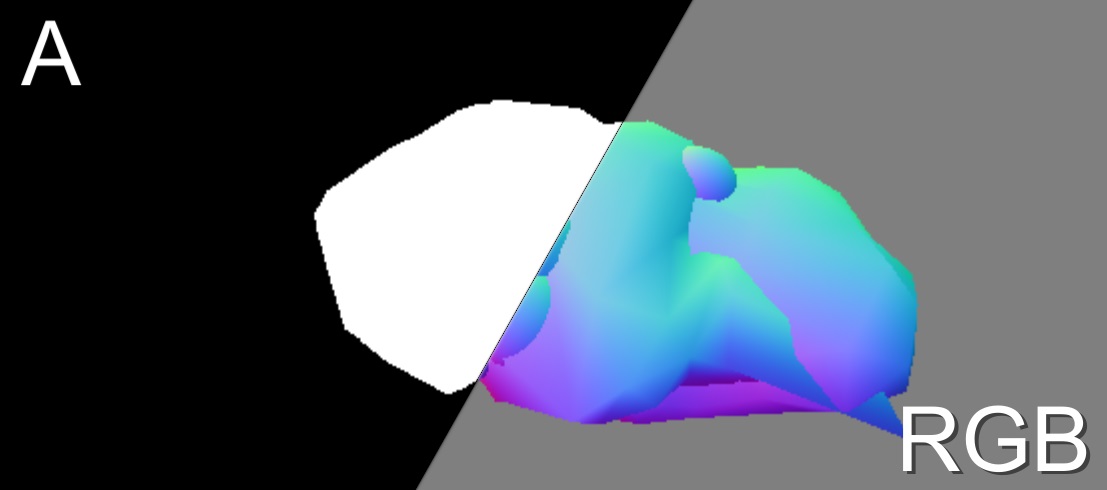

- World depth:

- Cloud depth:

- Cloud normals:

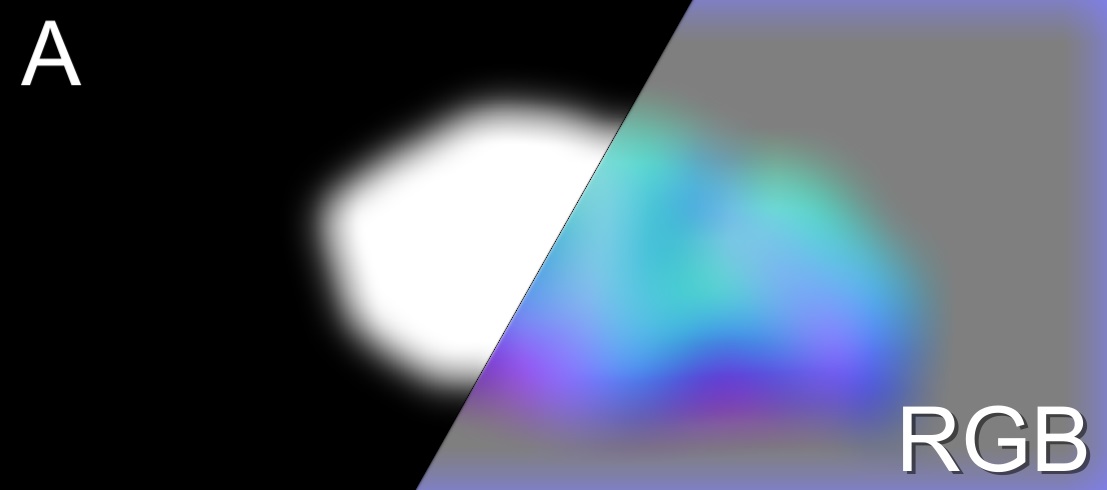

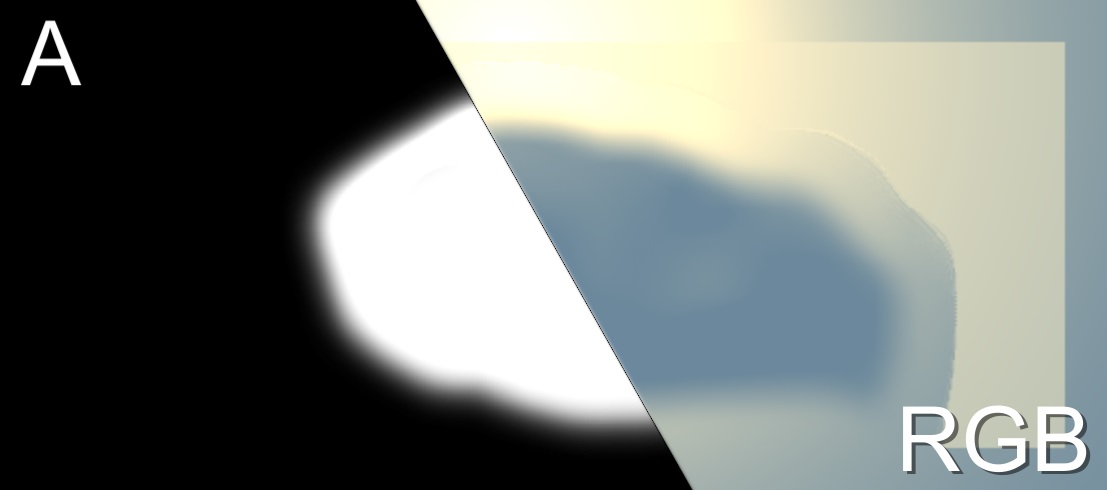

A little about the format

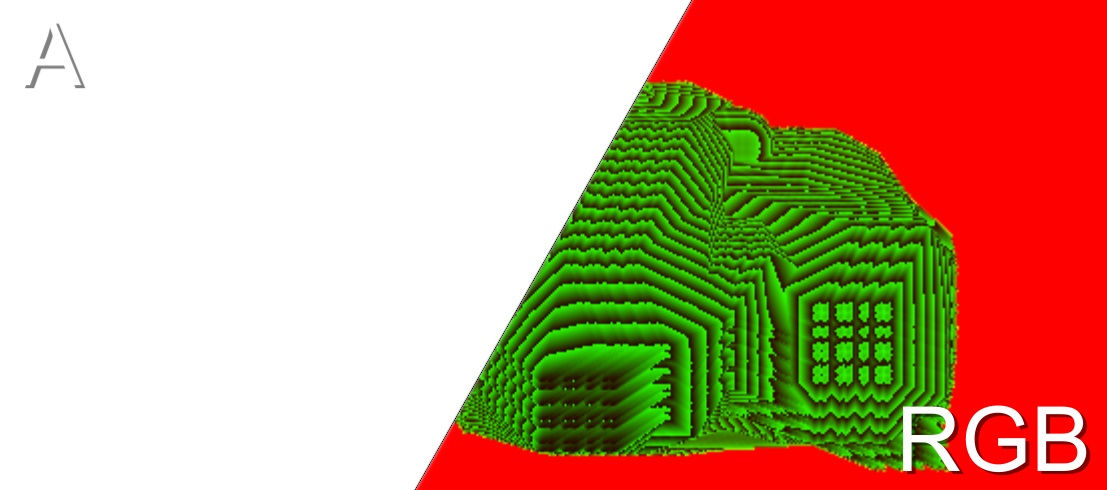

The left half of the image shows the alpha channel: the darker a pixel, the more transparent it is.

The right half of the image is RGB channels.

The only texture format guaranteed to be supported on mobile is ARGB32, so I'll use it.

The depth is encoded in the R and G texture channels as $z = r + \frac{g}{255}$.

The normal is stored as a 3D vector in camera space and encoded as $rgb = \frac{normal + 1}{2}$, because RGB channels cannot hold negative values.
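To make the packing concrete, here is a minimal Python sketch of how values could be written into and read back from 8-bit channels. The article gives only the decoding rules. The encoders (`encode_depth`, `encode_normal`) and the `quantize8` helper are hypothetical, chosen so that $z = r + g/255$ and $rgb = (normal + 1)/2$ round-trip. Depth is assumed to be normalized to $[0, 1)$.

```python
import math

def quantize8(x: float) -> float:
    """Clamp to [0, 1] and snap to one of 256 levels, like an 8-bit channel."""
    return round(min(max(x, 0.0), 1.0) * 255) / 255

def encode_depth(z: float) -> tuple[float, float]:
    """Hypothetical encoder: coarse depth in R, scaled residual in G."""
    r = math.floor(z * 255) / 255        # exactly representable in 8 bits
    g = quantize8((z - r) * 255)         # leftover fraction, stretched to [0, 1)
    return r, g

def decode_depth(r: float, g: float) -> float:
    """Decoding rule from the article: z = r + g / 255."""
    return r + g / 255

def encode_normal(n: tuple[float, float, float]) -> tuple[float, ...]:
    """rgb = (normal + 1) / 2: maps each component from [-1, 1] to [0, 1]."""
    return tuple(quantize8((c + 1) / 2) for c in n)

def decode_normal(rgb: tuple[float, ...]) -> tuple[float, ...]:
    """Inverse mapping back to [-1, 1]."""
    return tuple(c * 2 - 1 for c in rgb)

z = 0.73219
r, g = encode_depth(z)
print(abs(decode_depth(r, g) - z) < 1e-4)  # True
```

Splitting depth across R and G gives roughly 16 bits of precision instead of the 8 bits a single channel offers.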

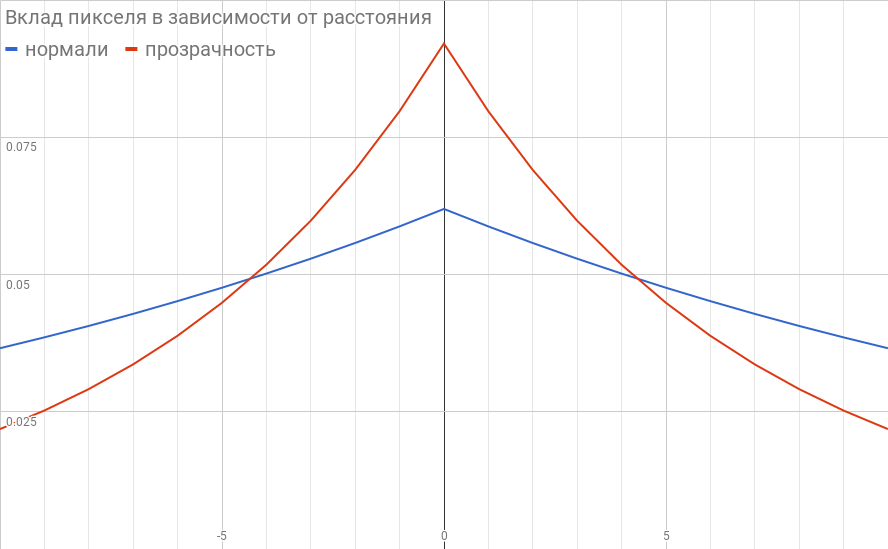

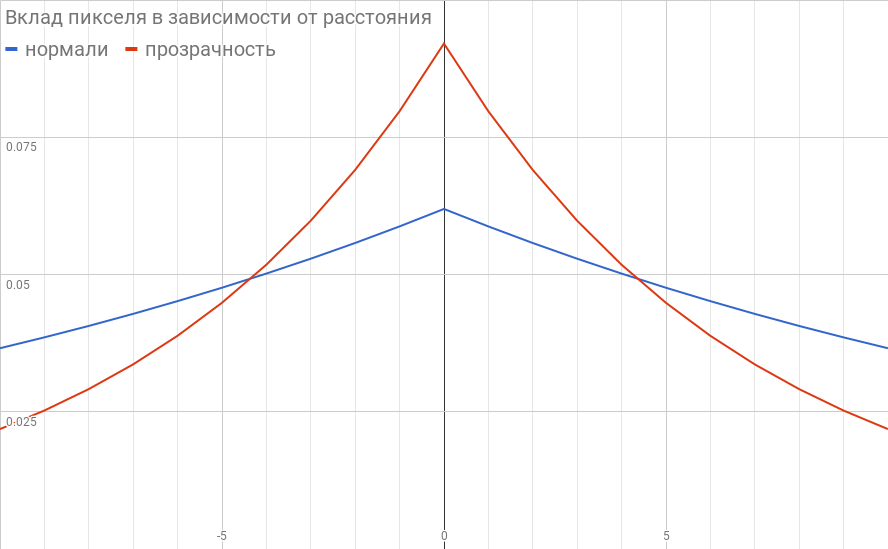

Blur

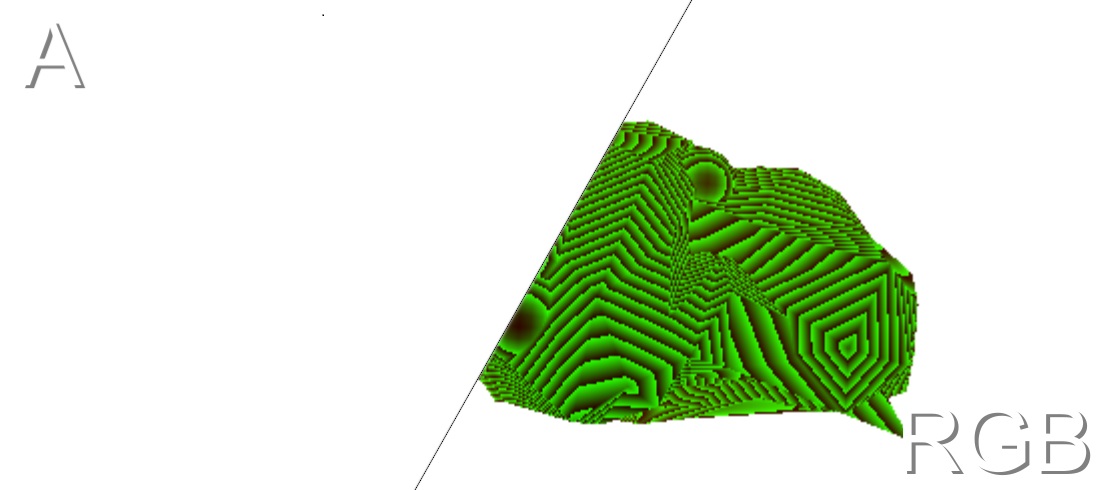

Blur normals in 2 passes:

- Horizontally:

- Vertically:

Note: normals are blurred more strongly than transparency. This makes the clouds soft without letting them lose their outlines.
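The two passes above form a separable blur: one 1D pass along rows, then one along columns. Below is a minimal Python sketch on a grid of floats. It assumes a plain box kernel and clamp-to-edge addressing, because the article names neither the kernel nor its radius. A blurred normal would also need renormalizing. The larger radius in the second call only illustrates blurring normals more strongly than transparency.

```python
def blur_1d(values: list[float], radius: int) -> list[float]:
    """Box-average each sample with its neighbours within `radius`."""
    n = len(values)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(-radius, radius + 1):
            j = min(max(i + k, 0), n - 1)  # clamp-to-edge addressing
            total += values[j]
        out.append(total / (2 * radius + 1))
    return out

def blur_2pass(grid: list[list[float]], radius: int) -> list[list[float]]:
    """Pass 1 blurs each row (horizontal), pass 2 blurs each column (vertical)."""
    horizontal = [blur_1d(row, radius) for row in grid]
    columns = [blur_1d(list(col), radius) for col in zip(*horizontal)]
    return [list(row) for row in zip(*columns)]

# A vertical stripe of opaque pixels, blurred lightly (like alpha)
# and more strongly (like the normals).
stripe = [[0.0, 0.0, 1.0, 0.0, 0.0] for _ in range(5)]
soft_alpha = blur_2pass(stripe, radius=1)
soft_normals = blur_2pass(stripe, radius=2)
```

With radius r, the two passes take 2(2r + 1) samples per pixel. A direct 2D box kernel of the same size would take (2r + 1)² samples.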

Similarly, blur the cloud depth map:

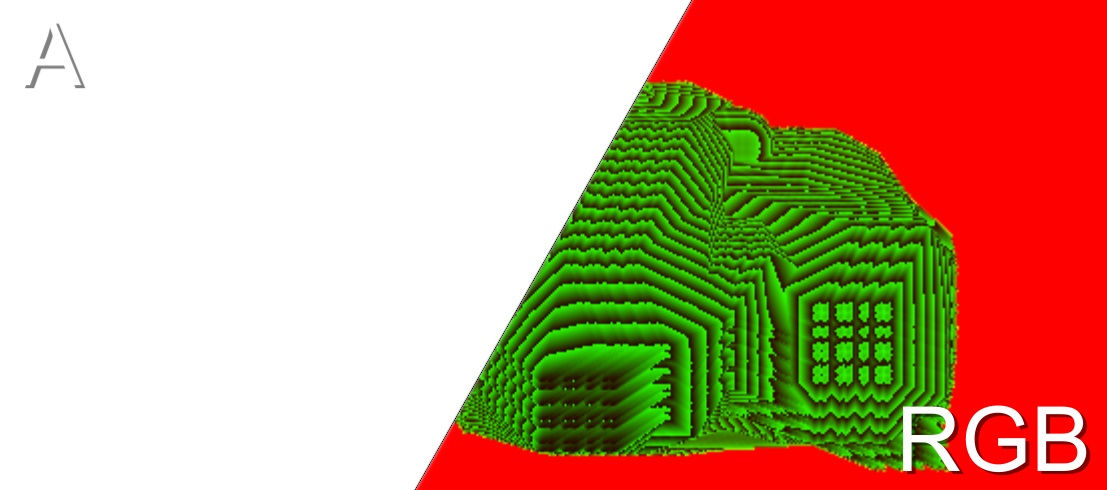

Projecting noise

It would be nice to add noise to our data.

There are 3 options:

- 3D texture: requires a lot of memory and is slow on mobile.

- Noise generated in the shader: Perlin noise needs many calls to the random number generator, so it is slow. There is also no artistic control, since you cannot switch to another type of noise without rewriting the code.

- Triplanar projection of a 2D texture: we generate a noise texture and project it along the X, Y and Z axes. It is efficient, lets you substitute any texture, and takes up little memory.

Temporarily disable the blur to better understand how the noise projection works.

Triplanar projection

If $p$ is the position of a point in 3D space and $n$ is the surface normal, the projection is calculated as follows:

$$color = noise(p.yz) \cdot n_x^2 + noise(p.zx) \cdot n_y^2 + noise(p.xy) \cdot n_z^2$$

Since $n$ has unit length, the squares of its coordinates sum to 1, so the blend preserves the brightness of the noise.
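A minimal Python sketch of the projection formula follows. `noise2d` is a hypothetical stand-in for a fetch from a tiling 2D noise texture, and it returns a scalar rather than an RGB sample to keep things short. The blend follows the formula term by term, weighting each axis by the square of the matching normal component.

```python
import math

def noise2d(u: float, v: float) -> float:
    """Hypothetical stand-in for sampling a tiling noise texture, range [0, 1]."""
    return 0.5 + 0.25 * math.sin(6.0 * u) + 0.25 * math.cos(4.0 * v)

def triplanar(p, n, sample=noise2d) -> float:
    """color = noise(p.yz)*nx^2 + noise(p.zx)*ny^2 + noise(p.xy)*nz^2"""
    px, py, pz = p
    nx, ny, nz = n  # assumed to be a unit vector
    return (sample(py, pz) * nx * nx     # projection along X
            + sample(pz, px) * ny * ny   # projection along Y
            + sample(px, py) * nz * nz)  # projection along Z

# A surface tilted between the X and Z axes mixes two projections 36% / 64%.
value = triplanar((1.0, 2.0, 3.0), (0.6, 0.0, 0.8))
```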

Projecting noise along the X axis:

We project noise along the X and Y axes:

We project noise along the X, Y, Z axes:

Now use this noise to modify the cloud depth map with the formula:

$$depth \mathrel{+}= noise.r \cdot \sin(t\pi) + noise.g \cdot \sin\left(t\pi + \frac{\pi}{3}\right) + noise.b \cdot \sin\left(t\pi + \frac{2\pi}{3}\right)$$
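The depth update can be written as a small function. The article does not define $t$. This sketch assumes it is time, and that `noise` holds the three channels of the triplanar noise sample at the current pixel.

```python
import math

def depth_offset(noise: tuple[float, float, float], t: float) -> float:
    """noise.r*sin(t*pi) + noise.g*sin(t*pi + pi/3) + noise.b*sin(t*pi + 2*pi/3)"""
    r, g, b = noise
    phase = t * math.pi
    return (r * math.sin(phase)
            + g * math.sin(phase + math.pi / 3)
            + b * math.sin(phase + 2 * math.pi / 3))

def animate_depth(depth: float, noise: tuple[float, float, float], t: float) -> float:
    """depth += depth_offset(noise, t)"""
    return depth + depth_offset(noise, t)
```

Each channel is driven by the same sine with a phase shift of $\pi/3$, so the three noise layers peak at different moments. The whole offset repeats every 2 units of $t$.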

And project the noise again:

Turn the blur back on:

Lighting

Lighting consists of 2 components:

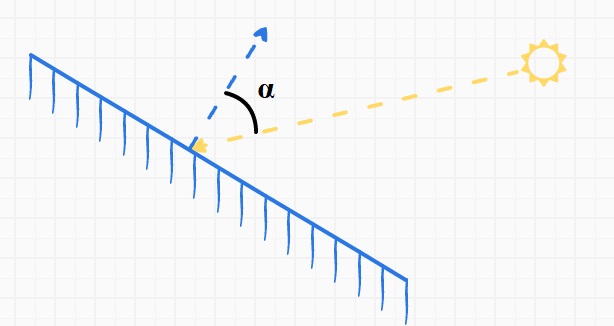

- Pseudo diffuse lighting

$$t = \operatorname{saturate}\left(\frac{\cos(a) + lightEnd}{1 + lightEnd}\right)$$

$$color = \operatorname{lerp}(shadowColor, lightColor, t) = lightColor \cdot t + shadowColor \cdot (1 - t)$$

Dependence of the illumination on the angle $a$ for different values of $lightEnd$:

This formula has a physical meaning: it is what naive light propagation through a spherical cloud would look like, with linear attenuation of brightness and no scattering.

Result:

- Translucency

If no object from the solid world is in this pixel, add translucency:

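$$t = \operatorname{saturate}(radius - distance)^2 \cdot (1 - color.a)^2$$

$$color = \operatorname{lerp}(color, lightColor, t)$$

where $radius$ is the radius around the sun within which light shines through the cloud, and $distance$ is the distance from the sun to the current pixel.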
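Both lighting components can be sketched in Python as follows. Several details are assumptions rather than statements from the article:

- `cos_a` is the cosine of the angle between the normal and the direction to the light.
- Colors are RGB tuples in $[0, 1]$.
- `radius` and `distance` share the same screen-space units.
- `hits_world` is a hypothetical flag telling whether a solid object covers the pixel.

`lerp` returns its first argument at $t = 0$ and its second at $t = 1$, as in HLSL. The diffuse term therefore matches the expanded form $lightColor \cdot t + shadowColor \cdot (1 - t)$.

```python
Color = tuple[float, float, float]

def saturate(x: float) -> float:
    """Clamp to [0, 1], like the HLSL intrinsic."""
    return min(max(x, 0.0), 1.0)

def lerp(a: Color, b: Color, t: float) -> Color:
    """Returns a at t = 0 and b at t = 1 (HLSL argument order)."""
    return tuple(x * (1 - t) + y * t for x, y in zip(a, b))

def pseudo_diffuse(cos_a: float, light_end: float,
                   light_color: Color, shadow_color: Color) -> Color:
    """Pseudo-diffuse term: lightColor*t + shadowColor*(1 - t); needs light_end > -1."""
    t = saturate((cos_a + light_end) / (1 + light_end))
    return lerp(shadow_color, light_color, t)

def add_translucency(color: Color, alpha: float, radius: float, distance: float,
                     light_color: Color, hits_world: bool) -> Color:
    """Translucency near the sun, skipped where a solid object covers the pixel."""
    if hits_world:
        return color
    t = saturate(radius - distance) ** 2 * (1 - alpha) ** 2
    return lerp(color, light_color, t)

lit = pseudo_diffuse(0.3, 0.5, (1.0, 0.95, 0.9), (0.35, 0.4, 0.5))
final = add_translucency(lit, 0.6, 1.0, 0.4, (1.0, 0.95, 0.9), hits_world=False)
```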

Applying noise

So far we have applied noise only to the depth map.

Let's also use it when applying the lighting.

Add the noise vector to the normals:

Offset the position we read from by noise.xy:
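The two noise steps can be expressed in Python as below. The normal is offset by the noise vector and then renormalized. Renormalizing is an assumption on our part, since the article only says to add the vector. The lookup position is shifted by `noise.xy`. The `strength` and `uv_scale` factors are hypothetical tuning knobs, not values from the article. `noise` is assumed to be remapped to $[-1, 1]$ so it can push in both directions.

```python
import math

Vec3 = tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    """Scale to unit length; assumes v is not the zero vector."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def noisy_normal(normal: Vec3, noise: Vec3, strength: float = 0.3) -> Vec3:
    """Add the (scaled) noise vector to the normal, then renormalize."""
    return normalize(tuple(n + strength * e for n, e in zip(normal, noise)))

def noisy_uv(uv: tuple[float, float], noise: Vec3,
             uv_scale: float = 0.01) -> tuple[float, float]:
    """Shift the position we read from by noise.xy (scaled)."""
    return (uv[0] + uv_scale * noise[0], uv[1] + uv_scale * noise[1])
```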

Overlaying the clouds on the rest of the world

We composite the clouds over the rest of the world with alpha blending. Where world objects come close to the cloud surface we add transparency, and where objects are in front of the clouds we hide the clouds completely.

$$color.a \mathrel{*}= 1 - \operatorname{saturate}\left(\frac{cloudDepth + fallback - worldDepth}{fallback}\right)$$

where $fallback$ is the depth inside the cloud at which an object disappears from view.
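The blend factor is easy to check numerically. The sketch below assumes linear depths that grow with distance from the camera and a positive `fallback`. The function name `cloud_alpha` is ours.

```python
def saturate(x: float) -> float:
    """Clamp to [0, 1], like the HLSL intrinsic of the same name."""
    return min(max(x, 0.0), 1.0)

def cloud_alpha(alpha: float, cloud_depth: float, world_depth: float,
                fallback: float) -> float:
    """color.a *= 1 - saturate((cloudDepth + fallback - worldDepth) / fallback)"""
    fade = saturate((cloud_depth + fallback - world_depth) / fallback)
    return alpha * (1.0 - fade)

# Cloud surface at depth 10; objects vanish completely 2 units inside it.
print(cloud_alpha(0.8, 10.0, 5.0, 2.0))    # object in front: 0.0
print(cloud_alpha(0.8, 10.0, 20.0, 2.0))   # object far behind: 0.8
print(cloud_alpha(0.8, 10.0, 11.0, 2.0))   # halfway inside the fade: 0.4
```

The formula behaves in three regimes:

- An object in front of the cloud surface makes the cloud fully transparent.
- An object more than `fallback` behind the surface leaves the cloud's alpha unchanged.
- In between, the fade is linear.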

Performance

If you run all these transformations at Full HD resolution, even a top-end smartphone will cry, curl up into a ball and start showing a slideshow at 15-20 fps. Phones handle 3D graphics only thanks to early-z, which keeps the work down to three or four simple shaders per pixel, and we do a lot of computation in every pixel.

What to do? It's time to cut operations. The shapes of the clouds are vague, and the moving noise will cover up our dirty work: we will cut the resolution!

- The world depth map is drawn at full or half resolution (smartphone pixels are tiny anyway).

- All other operations run at 1/4 resolution. That cuts the pixel count 16-fold and so cuts the work for these passes 16-fold.

- The blending is done in the full-size screen buffer using the original world depth map. This keeps object outlines sharp and preserves the illusion that the clouds were rendered at full resolution.
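Here is a quick back-of-the-envelope check of the 16x figure, assuming a 1920x1080 screen (the article does not list its exact buffer sizes):

```python
def pixel_count(width: int, height: int, scale: float = 1.0) -> int:
    """Pixels in a buffer whose sides are scaled by `scale`."""
    return int(width * scale) * int(height * scale)

full = pixel_count(1920, 1080)           # 2,073,600 pixels
half = pixel_count(1920, 1080, 0.5)      # 960 x 540 = 518,400 pixels
quarter = pixel_count(1920, 1080, 0.25)  # 480 x 270 = 129,600 pixels
print(full // half, full // quarter)     # 4 16
```

The ratio applies only to the passes that actually run at reduced resolution. The full-size blend pass costs the same as before.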

Final performance:

7 ms on a Nexus 5X at medium graphics settings (a 2015 budget phone).

0.5 ms on a laptop with a GTX 940M, which makes these clouds well suited to VR, where high frame rates are important.

Final result:

I'm not sure whether I'm allowed to post a link to the Asset Store, but whoever looks for it will find it :)

UPD: Added a section on performance

Source: https://habr.com/ru/post/345186/
