Tests alone are not enough, good architecture is needed.

We all understand what automatic tests are. We are developing software, and we want it to solve some user problems. Having written the test, we are convinced that a specific problem is solved by a specific piece of code. Then the requirements change, we change the tests and change the code in accordance with the new requirements. But it does not always save. In addition to high test coverage, our code should be designed in such a way as to protect the developer from errors even while writing it.

In the article, I tried to describe one of the problems that a good architecture can solve: related code sections can disperse among themselves, this can lead to bugs, and tests here will not save. A good design can help.

We are developing marketing CRM-system for the aggregation of data buyers online stores. In this platform, you can use the API to load orders, and then analyze what consumers are buying, what can be recommended, and the like. You also need to be able to see in the interface the change history for each order.

To begin with, we will present our domain model.

')

We have buyers, orders and order items. The buyer may have many orders, the order may have many lines. Each line contains the base price per item, price per line, quantity, product ID, link to the order in which the line is contained, and the line status (for example, “completed” or “canceled”). The order contains the order ID of the customer, the date and time of the order, the cost of the order, taking into account all discounts, shipping costs, a link to the store purchase, as well as a link to the consumer.

We also have the requirement to view the order change history and the ban on deleting order data that was once loaded to us. As a result, the following class structure is obtained:

Order (Order) - an aggregate, which may include many historical order states (OrderHistoryItem), each of which contains lines (OrderHistoryLine). Thus, when changing an order, we will save its status before and after the change. In this case, the current state of the order can always be obtained by ordering the historical state by time and taking the first one.

Now we will describe the format of the API request that our customers could use to transfer orders:

Imagine that we implemented this API and wrote tests on it. Our clients began to use it, and we realized the problem.

The fact is that our API implies a fairly simple integration from the client (for example, an online store). It is assumed that the order data transfer service should be called by the client for each order change on its side. This reduces the complexity of integration, since there is no logic other than translation on the client side.

However, it happens that our system is indifferent to some changes in orders that occur in the client system, since they are of no interest to marketing and analytics. Such changes to the order on our side simply will not be anything different.

In addition, there may be situations when, due to some network errors, the client uses our service several times for the same order change, and we save them all. To defeat network plan errors, when the same transaction is transmitted several times, you can use the idempotency key, but it will not help to solve the problem of insignificant changes. We really don't want to keep several order changes in business if they are no different.

No sooner said than done. Solution to the forehead: let's write a code that, when a new order changes, will among the already existing changes of the same order look for exactly the same, and if it exists, it simply will not save another one. The code will look something like this (if you don’t think about hash optimizations):

In this code, we go through all the historical records of the order and look for the same using the IsEquivalent method. At the same time, IsEquivalent should compare each field of the order, and then look for the corresponding order lines and compare each field of these lines. A naive implementation might look like this:

OrderHistoryItem.IsEquivalent is a fairly simple function, it is fairly easy to test. Having written tests on it, we will make sure that the business requirement is being fulfilled that prohibits creating several identical states of the same order.

However, there is a problem here, which is that in enterprise-systems, like the one we have just talked about, there is quite a lot of code. Our company has 5 teams of 5 developers, we all develop the same product, and even at such scales it is quite difficult to organize the work so that each person in each team understands the subject area of each product feature well. In principle, this is not very necessary, since teams are divided into features, but sometimes features intersect, and then difficulties arise. The problem I'm talking about is the complexity of a large system, where there are many components that need to be interconnected.

Imagine that you want to add another field to your order: type of payment.

Fix the OrderHistoryItem class:

In addition, we must add the ability to transfer the type of payment to our service.

Have we not forgotten anything? Oh, yes, we need to fix IsEquivalent. And this is very sad, since the feature “not to create duplicates of the same order changes” is quite invisible, it is difficult to remember about it. Will the product owner write about how we should consider the type of payment when comparing orders? Will the architect of this feature remember this? Will the programmer think about this, who will implement the type of payment and add it to the service? After all, if no one remembers this, then no test will fall , and in a situation where an order change comes to us, first with one type of payment, and then with another, the second change will not be saved.

One of the most important criteria for the quality of the selected application architecture is the ease of application modification for changing business requirements. Also, one of the pillars of developing non-trivial software is reducing complexity. The problem, which I described above, is connected with high complexity and possibility of changes. In short, the architecture should be one in which it would be impossible to forget about the need to somehow compare each of the properties of the order change history.

We wrote an imperative code to check the properties of the order, which is easy to test, but difficult to analyze. Let's try to take advantage of the declarative approach. Now the IsEquivalent method inside calls IsTotalPriceEquivalent, IsDeliveryCostEquivalent, etc. methods. We introduce the interface of the comparison strategy of the order property IOrderHistoryItemPropertyEqualityStrategy:

Let's do an implementation instead of each comparison method in IsEquivalent:

and make the comparison more declarative:

A little refactorim:

Now, when adding a new property, you need to create a new property comparison strategy and add it to the array. So far, nothing has changed, we are still not protected from the error. But due to the fact that the code has become declarative, we can check something. It would be great to be able to figure out for which property which strategy is responsible. Then we could get all the properties at the order and check that they are all covered with strategies. And it's pretty easy to modify. Add the PropertyName property to the strategy interface and implement it in each of the strategies:

Now we will write a check:

Now it’s enough to write a test for this check, and you can be sure that we forget something. Separately, I note that the implementation shown is not complete. First, the properties that do not need to be compared are not taken into account. For them, you can use the following implementation of the strategy:

Secondly, it is not shown how to make such a strategy for complex properties, such as lines. Since this is done quite similarly, you can think it out yourself.

In addition, adherence to defensive programming can suggest that it is worth checking that there is not more than one strategy registered for each property, and also that there are no registered strategies for unknown properties. But these are details.

Another example of a similar problem was the “consumer removal” functionality. The problem with deletion was that quite a lot of things are connected with the consumer in our system, and when deleting you need to think about all the entities with which consumers are connected, perhaps indirectly through other entities, and this functionality also had to be polymorphic, because end customers may have more entities than in the main product.

As you can see, the problem is the same: if you add a new entity or even just some kind of link and forget about the fact that there is a deletion, you can get a bug in the production of an FK violation when trying to delete.

The solution is similar: a relatively complex reflexive code is quite possible to bypass the entire data model, including in the final implementations for a particular client, at the start of the application. In this model, find which entities are related to consumers in some way. After this, look for whether deletion strategies are registered for all of these entities, and if something is not present, simply fall.

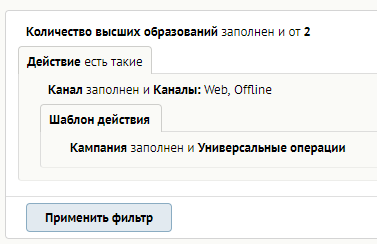

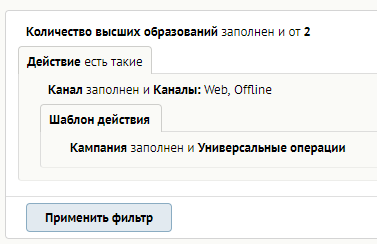

Another example of the same problem: register filtering conditions. We have a fairly flexible filter constructor in the UI:

These filters are used both for filtering virtually all entities on the pages of the lists, as well as for setting up all sorts of automatically triggered mechanics - triggers.

The trigger works like this: every 15 minutes, it selects all consumers on which it should work, using the complex filtering condition collected in the designer, and processes them in turn, sending them a letter, awarding points or giving out some kind of prize or discount.

Over time, it became clear that this approach needs to be optimized, because there are many triggers, there are many consumers, and every 15 minutes it’s hard to choose them all. Optimization, which suggested itself - let's not check consumers who have not changed, we will only look at changed consumers each time. This would be a completely valid approach if it were not for one thing: some filtering conditions become true for the consumer not because something happened to him, but because the time just came. Such conditions are all sorts of temporary conditions, such as “there are less than 5 days left before the birthday”.

We identified all the filtering conditions, the truth of which may change from time to time, and decided that they should tell us the time during which it makes sense to recount them. Roughly speaking, if we count in days, then it makes sense to recount every 12 hours, if in hours - then every hour.

The problem is that the module, in which our filtering conditions are defined, is one of the most basic, and we would not like it to think about what the time for recalculation should be. Therefore, the interface for obtaining the recalculation time for the filtering condition is separated from the filtering conditions themselves, and we again find ourselves in a situation where, when creating a new filtering condition, we can easily forget that it requires specifying the recalculation time.

The solution is the same as before. We have an application initialization stage when filtering conditions are added. After that there is a stage when recalculation time providers are added for filtering conditions in another module. After that, it is critical to verify that for all filtering conditions such providers are registered. In no case can not rely on the default behavior: if the provider receiving the time is not registered, assume that the filter does not require recalculation. This feature is very tempting, because refactoring in this situation looks much simpler: you need to register providers only for those filtering conditions that were selected. If you choose this approach, you can save time now, but then someone will write a new filtering condition for time, forget about writing a time provider for recalculation for it, and a trigger with this filtering condition will not actually work.

That's all. I tried to explain in the article my own vision of the problem of “unwritten code”. Unfortunately, we cannot verify the absence of any code in this way, we need to repel something, look for some inconsistencies in the configuration of a large system, and fall, fall, fall, as long as they exist.

Try to make the architecture impenetrable, so that if used improperly, it always curses, so you can protect yourself from unpleasant mistakes in battle. Try not to compromise with reliability: if you need to refactor a lot of code, in order to reduce the risks later, then you should still spend time.

In the article, I tried to describe one of the problems that a good architecture can solve: related code sections can disperse among themselves, this can lead to bugs, and tests here will not save. A good design can help.

Life example

We are developing marketing CRM-system for the aggregation of data buyers online stores. In this platform, you can use the API to load orders, and then analyze what consumers are buying, what can be recommended, and the like. You also need to be able to see in the interface the change history for each order.

To begin with, we will present our domain model.

')

We have buyers, orders and order items. The buyer may have many orders, the order may have many lines. Each line contains the base price per item, price per line, quantity, product ID, link to the order in which the line is contained, and the line status (for example, “completed” or “canceled”). The order contains the order ID of the customer, the date and time of the order, the cost of the order, taking into account all discounts, shipping costs, a link to the store purchase, as well as a link to the consumer.

We also have the requirement to view the order change history and the ban on deleting order data that was once loaded to us. As a result, the following class structure is obtained:

Customer

class Customer { public int Id { get; set; } public string Name { get; set; } public string Email { get; set; } } Order

class Order { public int Id {get;set;} public string ExternalId {get;set;} public ICollection<OrderHistoryItem> History {get;} } Historical order status

class OrderHistoryItem { public ICollection<OrderHistoryLine> OrderLines {get;} public Order Order { get; set; } public DateTime OrderChangeDateTime { get; set; } public decimal TotalAmountAfterDiscounts { get; set; } public Customer Customer { get; set; } public decimal DeliveryCost { get; set; } public string ShopExternalId { get; set; } } Order Item

class OrderHistoryLine { public decimal BasePricePerItem { get; set; } public OrderHistoryItem OrderHistoryItem { get; set; } public decimal PriceOfLine { get; set; } public decimal Quantity { get; set; } public string Status { get; set; } public string ProductExternalId { get; set; } } Order (Order) - an aggregate, which may include many historical order states (OrderHistoryItem), each of which contains lines (OrderHistoryLine). Thus, when changing an order, we will save its status before and after the change. In this case, the current state of the order can always be obtained by ordering the historical state by time and taking the first one.

Now we will describe the format of the API request that our customers could use to transfer orders:

API format

{ id: “123”, customer: { id: 1 }, dateTimeUtc: “21.08.2017 11:11:11”, totalAmountAfterDiscounts: 2000, shop: “shop-moscow”, deliveryCost: 0, items: [{ basePricePerItem: 100, productId: “456”, status: “Paid”, quantity: 20, priceOfLineAfterDiscounts: 2000 }] } Imagine that we implemented this API and wrote tests on it. Our clients began to use it, and we realized the problem.

The fact is that our API implies a fairly simple integration from the client (for example, an online store). It is assumed that the order data transfer service should be called by the client for each order change on its side. This reduces the complexity of integration, since there is no logic other than translation on the client side.

However, it happens that our system is indifferent to some changes in orders that occur in the client system, since they are of no interest to marketing and analytics. Such changes to the order on our side simply will not be anything different.

In addition, there may be situations when, due to some network errors, the client uses our service several times for the same order change, and we save them all. To defeat network plan errors, when the same transaction is transmitted several times, you can use the idempotency key, but it will not help to solve the problem of insignificant changes. We really don't want to keep several order changes in business if they are no different.

No sooner said than done. Solution to the forehead: let's write a code that, when a new order changes, will among the already existing changes of the same order look for exactly the same, and if it exists, it simply will not save another one. The code will look something like this (if you don’t think about hash optimizations):

public void SaveOrder(OrderHistoryItem historyItem) { if (historyItem.Order.IsNew) { SaveOrderCore(historyItem.Order); SaveOrderHistoryCore(historyItem); return; } var doesSameHistoryItemExist = historyItem.Order.History .Where(h => h != historyItem) .Where(h => h.IsEquivalent(historyItem)) .Any(); if (doesSameHistoryItemExist) return; SaveOrderHistoryCore(historyItem); } In this code, we go through all the historical records of the order and look for the same using the IsEquivalent method. At the same time, IsEquivalent should compare each field of the order, and then look for the corresponding order lines and compare each field of these lines. A naive implementation might look like this:

public bool IsEquivalent(OrderHistoryItem otherHistoryItem) { return IsTotalPriceEquivalent(otherHistoryItem) && IsDeliveryCostEquivalent(otherHistoryItem) && IsShopEquivalent(otherHistoryItem) && AreLinesEquivalent(otherHistoryItem); } Tests do not save

OrderHistoryItem.IsEquivalent is a fairly simple function, it is fairly easy to test. Having written tests on it, we will make sure that the business requirement is being fulfilled that prohibits creating several identical states of the same order.

However, there is a problem here, which is that in enterprise-systems, like the one we have just talked about, there is quite a lot of code. Our company has 5 teams of 5 developers, we all develop the same product, and even at such scales it is quite difficult to organize the work so that each person in each team understands the subject area of each product feature well. In principle, this is not very necessary, since teams are divided into features, but sometimes features intersect, and then difficulties arise. The problem I'm talking about is the complexity of a large system, where there are many components that need to be interconnected.

Imagine that you want to add another field to your order: type of payment.

Fix the OrderHistoryItem class:

class OrderHistoryItem with added PaymentType property

class OrderHistoryItem { public Order Order { get; set; } public DateTime OrderChangeDateTime { get; set; } public decimal TotalAmountAfterDiscounts { get; set; } public Customer Customer { get; set; } public decimal DeliveryCost { get; set; } public string ShopExternalId { get; set; } public string PaymentType {get;set;} } In addition, we must add the ability to transfer the type of payment to our service.

Have we not forgotten anything? Oh, yes, we need to fix IsEquivalent. And this is very sad, since the feature “not to create duplicates of the same order changes” is quite invisible, it is difficult to remember about it. Will the product owner write about how we should consider the type of payment when comparing orders? Will the architect of this feature remember this? Will the programmer think about this, who will implement the type of payment and add it to the service? After all, if no one remembers this, then no test will fall , and in a situation where an order change comes to us, first with one type of payment, and then with another, the second change will not be saved.

One of the most important criteria for the quality of the selected application architecture is the ease of application modification for changing business requirements. Also, one of the pillars of developing non-trivial software is reducing complexity. The problem, which I described above, is connected with high complexity and possibility of changes. In short, the architecture should be one in which it would be impossible to forget about the need to somehow compare each of the properties of the order change history.

Declarative strategies as a way out

We wrote an imperative code to check the properties of the order, which is easy to test, but difficult to analyze. Let's try to take advantage of the declarative approach. Now the IsEquivalent method inside calls IsTotalPriceEquivalent, IsDeliveryCostEquivalent, etc. methods. We introduce the interface of the comparison strategy of the order property IOrderHistoryItemPropertyEqualityStrategy:

interface IOrderHistoryItemPropertyEqualityStrategy { bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem); } Let's do an implementation instead of each comparison method in IsEquivalent:

class TotalPriceOrderHistoryItemPropertyEqualityStrategy : IOrderHistoryItemPropertyEqualityStrategy { public bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem){ return firstOrderHistoryItem.TotalAmountAfterDiscounts == secondOrderHistoryItem.TotalAmountAfterDiscounts; } } class DeliveryCostOrderHistoryItemPropertyEqualityStrategy : IOrderHistoryItemPropertyEqualityStrategy { public bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem) { return firstOrderHistoryItem.DeliveryCost == secondOrderHistoryItem.DeliveryCost; } } and make the comparison more declarative:

public bool IsEquivalent(OrderHistoryItem otherHistoryItem) { return new TotalPriceOrderHistoryItemPropertyEqualityStrategy().ArePropertiesEquivalent(this, otherHistoryItem) && new DeliveryCostOrderHistoryItemPropertyEqualityStrategy().ArePropertiesEquivalent(this, otherHistoryItem) && new ShopOrderHistoryItemPropertyEqualityStrategy().ArePropertiesEquivalent(this, otherHistoryItem) && new LinesOrderHistoryItemPropertyEqualityStrategy().ArePropertiesEquivalent(this, otherHistoryItem); } A little refactorim:

private static IOrderHistoryItemPropertyEqualityStrategy[] equalityStrategies = new[] { new TotalPriceOrderHistoryItemPropertyEqualityStrategy(), new DeliveryCostOrderHistoryItemPropertyEqualityStrategy(), new ShopOrderHistoryItemPropertyEqualityStrategy(), new LinesOrderHistoryItemPropertyEqualityStrategy() } public bool IsEquivalent(OrderHistoryItem otherHistoryItem) { return equalityStrategies.All(strategy => strategy.ArePropertiesEquivalent(this, otherHistoryItem)) } Now, when adding a new property, you need to create a new property comparison strategy and add it to the array. So far, nothing has changed, we are still not protected from the error. But due to the fact that the code has become declarative, we can check something. It would be great to be able to figure out for which property which strategy is responsible. Then we could get all the properties at the order and check that they are all covered with strategies. And it's pretty easy to modify. Add the PropertyName property to the strategy interface and implement it in each of the strategies:

public interface IOrderHistoryItemPropertyEqualityStrategy { string PropertyName {get;} bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem); } public class TotalPriceOrderHistoryItemPropertyEqualityStrategy : IOrderHistoryItemPropertyEqualityStrategy { public string PropertyName => nameof(OrderHistoryItem.TotalAmountAfterDiscounts); public bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem){ return firstOrderHistoryItem.TotalAmountAfterDiscounts == secondOrderHistoryItem.TotalAmountAfterDiscounts; } } Now we will write a check:

public static void CheckOrderHistoryEqualityStrategies() { var historyItemPropertyNames = typeof(OrderHistoryItem) .GetProperties() .Select(p => p.Name); var equalityStrategiesPropertyNames = equalityStrategies .Select(es => es.PropertyName); var propertiesWithoutCorrespondingStrategy = historyItemPropertyNames .Except(equalityStrategiesPropertyNames) .ToArray(); if (!propertiesWithoutCorrespondingStrategy.Any()) return; var missingPropertiesString = string.Join(", ", propertiesWithoutCorrespondingStrategy); throw new InvalidOperationException($" {missingPropertiesString} "); } Now it’s enough to write a test for this check, and you can be sure that we forget something. Separately, I note that the implementation shown is not complete. First, the properties that do not need to be compared are not taken into account. For them, you can use the following implementation of the strategy:

public class NoCheckOrderHistoryItemPropertyEqualityStrategy : IOrderHistoryItemPropertyEqualityStrategy { private string propertyName; public NoCheckOrderHistoryItemPropertyEqualityStrategy(string propertyName) { this.propertyName = propertyName; } public string PropertyName => propertyName; public bool ArePropertiesEquivalent(OrderHistoryItem firstOrderHistoryItem, OrderHistoryItem secondOrderHistoryItem) { return true; } } Secondly, it is not shown how to make such a strategy for complex properties, such as lines. Since this is done quite similarly, you can think it out yourself.

In addition, adherence to defensive programming can suggest that it is worth checking that there is not more than one strategy registered for each property, and also that there are no registered strategies for unknown properties. But these are details.

Other examples

Consumer removal

Another example of a similar problem was the “consumer removal” functionality. The problem with deletion was that quite a lot of things are connected with the consumer in our system, and when deleting you need to think about all the entities with which consumers are connected, perhaps indirectly through other entities, and this functionality also had to be polymorphic, because end customers may have more entities than in the main product.

As you can see, the problem is the same: if you add a new entity or even just some kind of link and forget about the fact that there is a deletion, you can get a bug in the production of an FK violation when trying to delete.

The solution is similar: a relatively complex reflexive code is quite possible to bypass the entire data model, including in the final implementations for a particular client, at the start of the application. In this model, find which entities are related to consumers in some way. After this, look for whether deletion strategies are registered for all of these entities, and if something is not present, simply fall.

Providers of recalculation time filtering conditions

Another example of the same problem: register filtering conditions. We have a fairly flexible filter constructor in the UI:

These filters are used both for filtering virtually all entities on the pages of the lists, as well as for setting up all sorts of automatically triggered mechanics - triggers.

The trigger works like this: every 15 minutes, it selects all consumers on which it should work, using the complex filtering condition collected in the designer, and processes them in turn, sending them a letter, awarding points or giving out some kind of prize or discount.

Over time, it became clear that this approach needs to be optimized, because there are many triggers, there are many consumers, and every 15 minutes it’s hard to choose them all. Optimization, which suggested itself - let's not check consumers who have not changed, we will only look at changed consumers each time. This would be a completely valid approach if it were not for one thing: some filtering conditions become true for the consumer not because something happened to him, but because the time just came. Such conditions are all sorts of temporary conditions, such as “there are less than 5 days left before the birthday”.

We identified all the filtering conditions, the truth of which may change from time to time, and decided that they should tell us the time during which it makes sense to recount them. Roughly speaking, if we count in days, then it makes sense to recount every 12 hours, if in hours - then every hour.

The problem is that the module, in which our filtering conditions are defined, is one of the most basic, and we would not like it to think about what the time for recalculation should be. Therefore, the interface for obtaining the recalculation time for the filtering condition is separated from the filtering conditions themselves, and we again find ourselves in a situation where, when creating a new filtering condition, we can easily forget that it requires specifying the recalculation time.

The solution is the same as before. We have an application initialization stage when filtering conditions are added. After that there is a stage when recalculation time providers are added for filtering conditions in another module. After that, it is critical to verify that for all filtering conditions such providers are registered. In no case can not rely on the default behavior: if the provider receiving the time is not registered, assume that the filter does not require recalculation. This feature is very tempting, because refactoring in this situation looks much simpler: you need to register providers only for those filtering conditions that were selected. If you choose this approach, you can save time now, but then someone will write a new filtering condition for time, forget about writing a time provider for recalculation for it, and a trigger with this filtering condition will not actually work.

Finally

That's all. I tried to explain in the article my own vision of the problem of “unwritten code”. Unfortunately, we cannot verify the absence of any code in this way, we need to repel something, look for some inconsistencies in the configuration of a large system, and fall, fall, fall, as long as they exist.

Try to make the architecture impenetrable, so that if used improperly, it always curses, so you can protect yourself from unpleasant mistakes in battle. Try not to compromise with reliability: if you need to refactor a lot of code, in order to reduce the risks later, then you should still spend time.

Source: https://habr.com/ru/post/344862/

All Articles