Dirty tricks and RAM

Are memory limitations a thing of the past?

It turns out they are not. Some commercial engines still handle memory poorly, and many platforms impose strict RAM limits. On top of that, there are more and more restrictions on the size of discs and cartridges.

We have collected stories from the game industry, from different years and platforms, about borderline-illegal ways of squeezing levels, textures and entire games into the required space. These methods may not be pretty, but they let the developers ship the game, and nobody noticed a thing.

About the loading screen

Our game, a first-person shooter, had trouble unloading levels properly on the Xbox: some of the memory could not be freed, so after finishing one level and moving on to the next, the game always crashed. The Xbox had very limited memory and, unlike a PC, no slower backing storage to fall back on when a program ran out of memory. The game simply died on the spot.


Our team noticed this problem very late, because we were able to start the game directly at any level. This level editor feature let programmers and designers jump straight to the level they were working on, skipping the main menu and the earlier missions. It is vital during development, and I have never seen a game without it (although it is often cut before release).

So, for convenience, everyone launched the game from the editor and jumped to the level they needed. The exception was the main menu developers, but they only ever ran the menu and never loaded a level. As a result, most of the team did not realize for a long time that levels could not be unloaded from memory properly.

When QA found the bug, fixing every leak at such a late stage of development turned out to be quite hard. The first leaks were easy, but it gradually became harder and harder to track down and free every small piece of memory before the next level started. We put in a lot of work and got to the point where 4-5 levels could be played in a row, but sooner or later the console still crashed. Finishing the campaign in one sitting was impossible.

The development team could not fix everything in time. Perhaps they just gave up too early, I don't know for sure. Instead, they took advantage of a handy feature of the Xbox console API. Back then (and on the Xbox 360 too) a game could ask the console to reboot, and could tell the Xbox what to do after the restart.

That is, we could ask the console to reboot and launch the same game again with a given parameter. So the jump-to-level feature moved from the editor into the game itself. After a level was completed, the game rebooted the console and launched itself again with a command-line parameter: the name of the level to load. The reboot showed up as a black screen, so a fade-out was quickly implemented to make the transition to black. The console rebooted, jumped to the next level just as the editor would have done, and then gradually faded the picture back in. Voila, the perfect (?) way to clear all memory between levels.
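As a rough desktop analogy (not the Xbox API, which the story does not show), the same idea can be sketched by having a process replace itself with a fresh copy and pass the next level on the command line. The `--level` flag, the `main_menu` default and all function names here are hypothetical.

```python
import os
import sys


def relaunch_argv(next_level, script=None):
    """Build the command line for a fresh copy of this program."""
    script = script or sys.argv[0]
    return [sys.executable, script, "--level", next_level]


def parse_start_level(argv, default="main_menu"):
    """Return the level named after --level, or the default entry point."""
    if "--level" in argv:
        i = argv.index("--level")
        if i + 1 < len(argv):
            return argv[i + 1]
    return default


def finish_level(next_level):
    """Replace this process with a fresh one that starts at next_level.

    A real game would fade to black first. os.execv does not return on
    success: the old process image, leaks included, is discarded.
    """
    argv = relaunch_argv(next_level)
    os.execv(argv[0], argv)
```

The fresh process calls `parse_start_level(sys.argv)` at startup and loads that level directly, which is the same path a developer shortcut would take.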

- Nicolas Mercier

RAM and Crash

I was one of two programmers (along with Andy Gavin) writing Crash Bandicoot for the PlayStation 1.

RAM was the main problem even then. The PS1 had only 2 MB of RAM, and we had to resort to insane tricks to fit the game into it. We had levels containing more than 10 MB of data, which had to be loaded into and unloaded from memory dynamically without the slightest hitch, that is, without loading delays that drop the frame rate below 30 Hz.

The game worked mainly because Andy wrote a terrific paging system that loaded and unloaded 64 KB pages of data as Crash moved through the level. It was a tour de force that spanned the whole spectrum, from high-level memory management to DMA coding at the opcode level. Andy even controlled the physical placement of bytes on the CD-ROM so that, at a read speed of 300 KB/s, the PS1 could load the data for each part of a level by the time Crash got there.

I wrote the packer that took resources (sounds, graphics, Lisp code for controlling enemies, and so on) and packed them into 64 KB pages for Andy's system. (By the way, packing objects of arbitrary size optimally into fixed-size pages is an NP-complete problem, so an optimal solution in polynomial, that is, reasonable, time is most likely impossible.)

Some levels barely fit, and my packer used a variety of algorithms (first fit, best fit and so on) to try to find the best packing. This included a stochastic search, akin to the gradient descent process used in simulated annealing. In effect, I had a whole set of packing strategies; the program tried them all and kept the best result.
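The "try several strategies and keep the best" idea can be sketched as follows. This is not the original packer: it uses plain first fit and best fit over a handful of orderings, with random shuffles standing in for the stochastic search (random restarts rather than simulated annealing). The function names, the number of tries and the seed are assumptions.

```python
import random

PAGE_SIZE = 64 * 1024  # page size mentioned in the text


def first_fit(sizes):
    """Put each item into the first page that still has room."""
    pages = []  # free bytes remaining in each open page
    for size in sizes:
        for i, free in enumerate(pages):
            if size <= free:
                pages[i] -= size
                break
        else:
            pages.append(PAGE_SIZE - size)
    return len(pages)


def best_fit(sizes):
    """Put each item into the page that would be left with the least room."""
    pages = []
    for size in sizes:
        candidates = [i for i, free in enumerate(pages) if size <= free]
        if candidates:
            tightest = min(candidates, key=lambda i: pages[i])
            pages[tightest] -= size
        else:
            pages.append(PAGE_SIZE - size)
    return len(pages)


def pack(sizes, tries=200, seed=0):
    """Run every strategy over several orderings; return the fewest pages."""
    if any(size > PAGE_SIZE for size in sizes):
        raise ValueError("an item is larger than a page")
    rng = random.Random(seed)  # fixed seed keeps runs repeatable
    orders = [list(sizes), sorted(sizes, reverse=True)]
    for _ in range(tries):
        shuffled = list(sizes)
        rng.shuffle(shuffled)
        orders.append(shuffled)
    return min(strategy(order)
               for order in orders
               for strategy in (first_fit, best_fit))
```

For example, `first_fit` needs three pages for items of 24, 24, 40 and 40 KB in that order, while `pack` finds the two-page arrangement by also trying the largest-first ordering.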

The problem with such a randomly guided search, however, is that you never know whether you will get the same result again. Some Crash levels fit into the maximum allowed number of pages (21, I think) only when the stochastic packer got “very lucky”. This meant that once a level was packed, you could change the code of a single turtle and never again find a packing that fit into 21 pages.

Sometimes an artist needed to change something, which pushed us over the page limit, and we had to change other resources almost at random until the packer found a packing that fit (try explaining that to an irritated artist at three in the morning).

But the best part in retrospect, and the worst at the time, was getting the core C/assembly code to fit. We were literally days away from the “gold master” deadline, our last chance to ship for the holiday season; missing it would have cost us a whole year. So we were randomly rewriting C code into semantically identical but syntactically different forms to get the compiler to produce code that was 200, 125, 50 and then 8 bytes smaller. The changes were along the lines of: what if we replace “for (i = 0; i < x; i++)” with a while loop that reuses a variable already used elsewhere? This was after we had exhausted all our usual tricks, such as stuffing data into the lower two bits of pointers (which worked only because all addresses on the R3000 were 4-byte aligned).
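The pointer trick relies on one fact: a 4-byte-aligned address always has zeros in its two lowest bits, so those bits can carry a small tag. A minimal sketch with plain integers standing in for addresses (the function names and the example address are made up):

```python
TAG_BITS = 2
TAG_MASK = (1 << TAG_BITS) - 1  # 0b11


def tag_pointer(address, tag):
    """Store a 2-bit tag in the unused low bits of an aligned address."""
    if address & TAG_MASK:
        raise ValueError("address must be 4-byte aligned")
    if not 0 <= tag <= TAG_MASK:
        raise ValueError("tag must fit in two bits")
    return address | tag


def untag_pointer(word):
    """Split a tagged word back into (address, tag)."""
    return word & ~TAG_MASK, word & TAG_MASK
```

Every read through such a pointer has to mask the tag off first, which is the price paid for the saved bytes.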

In the end, we managed to fit Crash into the PS1's memory with four bytes to spare. Yes, four out of 2,097,152. Good old times.

- Dave Baggett, inky.com (and Naughty Dog employee # 1)

Attention to detail

This happened ten years ago. At the time I was working at a small studio on a PC-exclusive RTS. The team was medium-sized (about 35 people), we had been working for about a year, and we were in the middle of production.

The RTS was divided into levels: finishing one level unlocked the next, and so on. Like any other PC game, it had to run on machines with very different configurations, so we shipped it with three sets of textures: low, medium and high resolution.

That is, each level had two additional texture packs, one with medium-resolution textures and one with high-resolution textures (the low-resolution textures were packed directly into the main data file).

Production went quite well, and we had almost finished the game. Performance was good, the game was stable, and most of the bugs were fixed. The last day before the deadline arrived: we had to burn the final gold master CDs so they could be sent to the factory the next morning.

So we built the ISO images, burned the English, French and Spanish versions and tested them. No problems. Then it was the turn of the German disc. We started burning the ISO, and the message “The image does not fit on the media” appeared on the screen.

What? How was that possible? We had just burned three other versions, and it was already eight o'clock. The CDs had to go out at seven the next morning. We started digging and found that the German voice-over ran longer and took up more disc space than the other languages. All our size budgets had been set with the other languages in mind. Now we had about 10 hours to fix the problem, burn the CD and test it. There was no time to recompress the audio or make other clever changes to save disc space.

Then we had a great idea: pick one of the levels, remove its high-resolution texture pack and replace it with a copy of the medium-resolution pack. Bam! 50 MB saved, and the ISO fit on the CD. So our German friends with powerful PCs played one of the levels with the same texture detail as people with mid-range machines. Yes, I admit it, it was a long and tense night.

- Remy Kenin, architect of Far Cry engine

Size is not important ...

In 2008 we were working on a downloadable XBLA game. Back then, not every Xbox 360 shipped with a hard drive, so downloadable games had to work when installed on memory cards. Our game was fairly small, about 240 MB. This meant we had to test it on both 256 MB and 512 MB memory cards.

On a 512 MB card the game ran perfectly, but on a 256 MB card it suffered from intermittent, unpredictable stalls. We thought about a fix for a while, but in the end decided it was better to spend our effort on improving the game than on chasing ghosts.

So we added a 20 MB music file to the game data, bringing the total size to about 260 MB, more than a 256 MB card can hold. Thanks to this, we no longer had to test the game on 256 MB memory cards for certification. It was a good game, and we shipped it on time. Neither Microsoft nor our customers ever noticed.

- Anonymous

A lot of torment

Porting a good action game from PC to PS2 gave us plenty of "fun" moments: a PC game that used 256 MB and relied heavily on dynamic allocation had to fit into 32 MB. Even after many optimizations and the addition of level streaming it still took up too much memory, so:

- The build machine loaded each level right after startup and tracked every memory allocation. It noted which allocations survived until the start of the level; on the next run, the game used this sequence to place every persistent allocation linearly and put everything else on the heap or in temporary memory. This saved about 15% (5 MB) of memory, made allocation much faster and greatly reduced fragmentation. Even so, after about three levels fragmentation became a problem again, therefore:

- The build machine performed a second pass: it restarted, loaded the level (with the optimizations from the first pass) and, at the start of the level, dumped the entire heap to disk. When loading each level, the finished game simply loaded these memory images straight over the heap (temporarily saving the local profile settings on the stack).

Result: very fast level loading and zero fragmentation at the start of every level, at the cost of a longer build for every release candidate.
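The first pass described above can be sketched as a small simulation. It assumes the game makes the same allocations in the same order on every run, so an allocation can be identified by its sequence number; alignment is ignored, and the class and method names are invented for illustration.

```python
class RecordingAllocator:
    """Profiling run: log every allocation and track which are still live."""

    def __init__(self):
        self.log = []      # requested sizes, in allocation order
        self.live = set()  # sequence numbers not yet freed

    def alloc(self, size):
        index = len(self.log)
        self.log.append(size)
        self.live.add(index)
        return index

    def free(self, index):
        self.live.discard(index)

    def persistent_plan(self):
        """Map each surviving allocation to an offset in one packed block."""
        plan, offset = {}, 0
        for index in sorted(self.live):
            plan[index] = offset
            offset += self.log[index]
        return plan, offset


class PlannedAllocator:
    """Shipping run: replay the same sequence using the recorded plan."""

    def __init__(self, plan, linear_size):
        self.plan = plan
        self.count = 0
        self.heap_top = linear_size  # everything else goes after the block

    def alloc(self, size):
        index = self.count
        self.count += 1
        if index in self.plan:
            return self.plan[index]  # persistent: fixed, gap-free slot
        offset = self.heap_top       # stand-in for a real general heap
        self.heap_top += size
        return offset
```

Calling `persistent_plan()` at the moment the level starts yields the layout for the persistent block; short-lived allocations never interleave with it, which is where the reduction in fragmentation comes from.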

- Anonymous

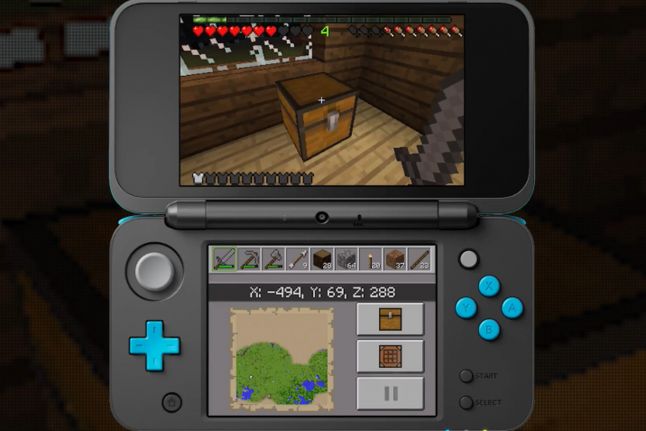

Pack the pixels

While developing Minecraft for 3DS we were short of memory, even on the more powerful New 3DS, so we wanted to experiment with the texture formats the 3DS supported. The internal texture format on the 3DS was very strange. It was based on tiles laid out in a zigzag pattern of zigzag patterns, which were then ordered linearly at the top level.
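One way to read "a zigzag pattern of zigzag patterns" is as a Z-order (Morton) curve inside each tile, with the tiles themselves stored row by row. The sketch below computes a pixel's position under that reading; the 8x8 tile size and the function names are assumptions, not something the text specifies.

```python
TILE = 8  # assumed tile edge in pixels
TILE_BITS = TILE.bit_length() - 1  # 3 bits address 0..7


def morton(x, y):
    """Interleave the bits of x and y, with x in the even positions."""
    index = 0
    for bit in range(TILE_BITS):
        index |= ((x >> bit) & 1) << (2 * bit)
        index |= ((y >> bit) & 1) << (2 * bit + 1)
    return index


def swizzled_offset(x, y, width):
    """Pixel index of (x, y) in a tiled image; width must be a multiple of TILE."""
    tiles_per_row = width // TILE
    tile_index = (y // TILE) * tiles_per_row + (x // TILE)
    return tile_index * TILE * TILE + morton(x % TILE, y % TILE)
```

Within a tile, the first four pixels visited are (0,0), (1,0), (0,1), (1,1), which forms the small "Z" that then repeats at larger scales.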

Unfortunately, nobody on our team was familiar enough with compressed formats to write a conversion utility. One programmer, Ian, had worked on a texture converter for the Mega Man Legacy Collection, but it dealt mostly with uncompressed pixel data.

That texture converter took a .png and produced a ".3dst" file in a format of Ian's own invention: essentially a small header followed by raw data that we could copy straight into memory ("3dst" stands for "3DS Texture"; logical, right?).
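A "small header plus raw data" container is easy to sketch. The real .3dst layout is not described in the text, so the header below (magic, width, height, payload size) is invented purely for illustration.

```python
import struct

# Invented layout: 4-byte magic, width, height (uint16), payload size (uint32).
HEADER = struct.Struct("<4sHHI")
MAGIC = b"3DST"


def pack_texture(width, height, pixels):
    """Prefix raw pixel bytes with a fixed-size little-endian header."""
    return HEADER.pack(MAGIC, width, height, len(pixels)) + pixels


def unpack_texture(blob):
    """Validate the header and return (width, height, raw pixel bytes)."""
    magic, width, height, size = HEADER.unpack_from(blob)
    if magic != MAGIC:
        raise ValueError("not a texture blob")
    payload = blob[HEADER.size:HEADER.size + size]
    if len(payload) != size:
        raise ValueError("truncated payload")
    return width, height, payload
```

Because the payload is stored exactly as it will sit in memory, loading needs no parsing beyond reading the header.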

Nintendo provided its own conversion utility, but it only exported images into package files that had to be parsed and loaded at runtime with Nintendo's library. That was too expensive for us. And here we were unlucky again: Nintendo did not document this file format, and it seemed to be the only way to get images compressed into a format the 3DS supported.

So I decided to dive in with a hex editor. I fed images of various sizes and formats to the Nintendo utility and watched how the file headers changed, until I had identified enough fields to quickly produce the data I needed. I threw together a small utility to extract the raw data from Nintendo's package files, then wrote a batch script to run the process on every texture we needed. The solution was not quick, and it certainly was not elegant, but it did the job.
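The comparison step can be automated in a few lines: convert two inputs that differ in one known property, then list which header bytes changed. This is a generic helper, not the author's tool, and the 64-byte window is an arbitrary assumption.

```python
def differing_offsets(a, b, limit=64):
    """Offsets within the first `limit` bytes where two files differ."""
    n = min(len(a), len(b), limit)
    return [i for i in range(n) if a[i] != b[i]]


def group_runs(offsets):
    """Collapse consecutive offsets into (start, length) candidate fields."""
    runs = []
    for offset in offsets:
        if runs and offset == runs[-1][0] + runs[-1][1]:
            runs[-1] = (runs[-1][0], runs[-1][1] + 1)
        else:
            runs.append((offset, 1))
    return runs
```

If only the image width changed between the two inputs, the runs that come back are good candidates for the width field and anything derived from it, such as a data size.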

- Keith Keisershot, Digital Eclipse Programmer

Just brew it

When I was working on a 3D racing game for a certain platform, the heap was contained inside the data section of the executable file. The executable format, called mod, was similar to ELF: it contained a huge zero-filled region in which the application allocated memory once the file had been loaded.

As a result, we often ran out of dynamic memory. Instead of implementing memory management properly (resources on this system were very limited), I decided to write a tool that inserted more zeros and patched the .exe. The solution worked perfectly, and I did not even feel guilty, because it was a terrible platform with a terrible executable format.
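The text gives no details of the file format, so the following is only a toy illustration of the idea: grow a zero-filled region inside a binary image and update a size field that describes it. The image layout, the 32-bit little-endian size field and every parameter name are invented; a real executable would also need any offsets that point past the insertion fixed up.

```python
import struct


def grow_heap(image, heap_offset, heap_size, extra, size_field_offset):
    """Insert `extra` zero bytes after the heap region and patch its size."""
    heap_end = heap_offset + heap_size
    if size_field_offset + 4 > heap_offset:
        raise ValueError("size field must lie before the heap region")
    if any(image[heap_offset:heap_end]):
        raise ValueError("heap region is not zero-filled")
    patched = bytearray(image[:heap_end]) + bytes(extra) + image[heap_end:]
    # Record the new heap size in the (hypothetical) header field.
    struct.pack_into("<I", patched, size_field_offset, heap_size + extra)
    return bytes(patched)
```

For instance, a 20-byte toy image with an 8-byte heap at offset 8 becomes 24 bytes long after `grow_heap(image, 8, 8, 4, 0)`, with the size field now reading 12.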

- Andrew Hayning

Source: https://habr.com/ru/post/344822/
