Introducing Functions as a Service - OpenFaaS

Translator's note: OpenFaaS is a serverless framework that was formally introduced in August, but it first appeared about a year ago and quickly rose to the top of GitHub projects tagged kubernetes. Below is a translation of the technical part of the official project announcement by its author, Alex Ellis, who is well known in the community for his enthusiasm for Docker (he holds the Docker Captain title).

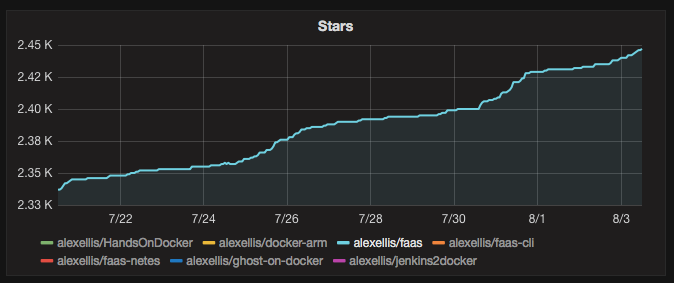

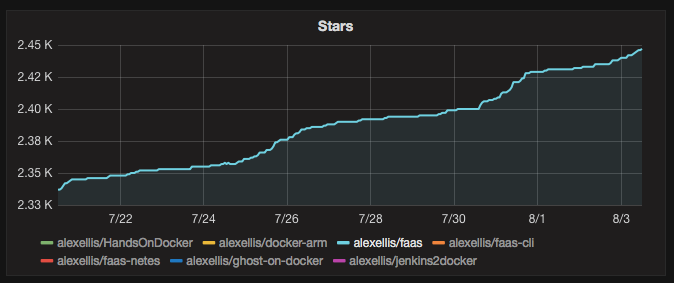

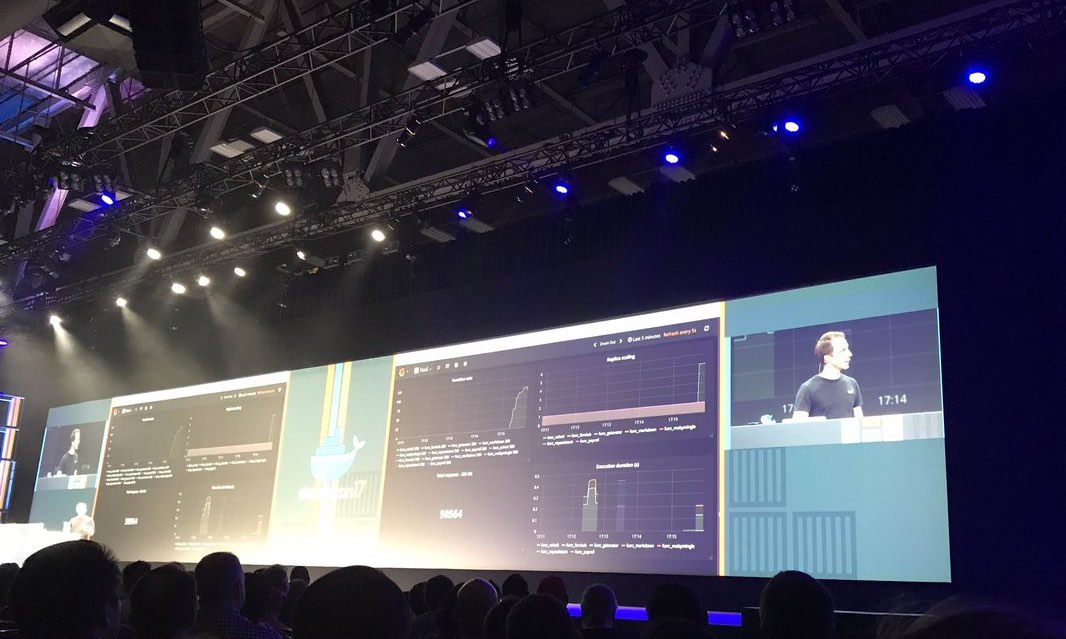

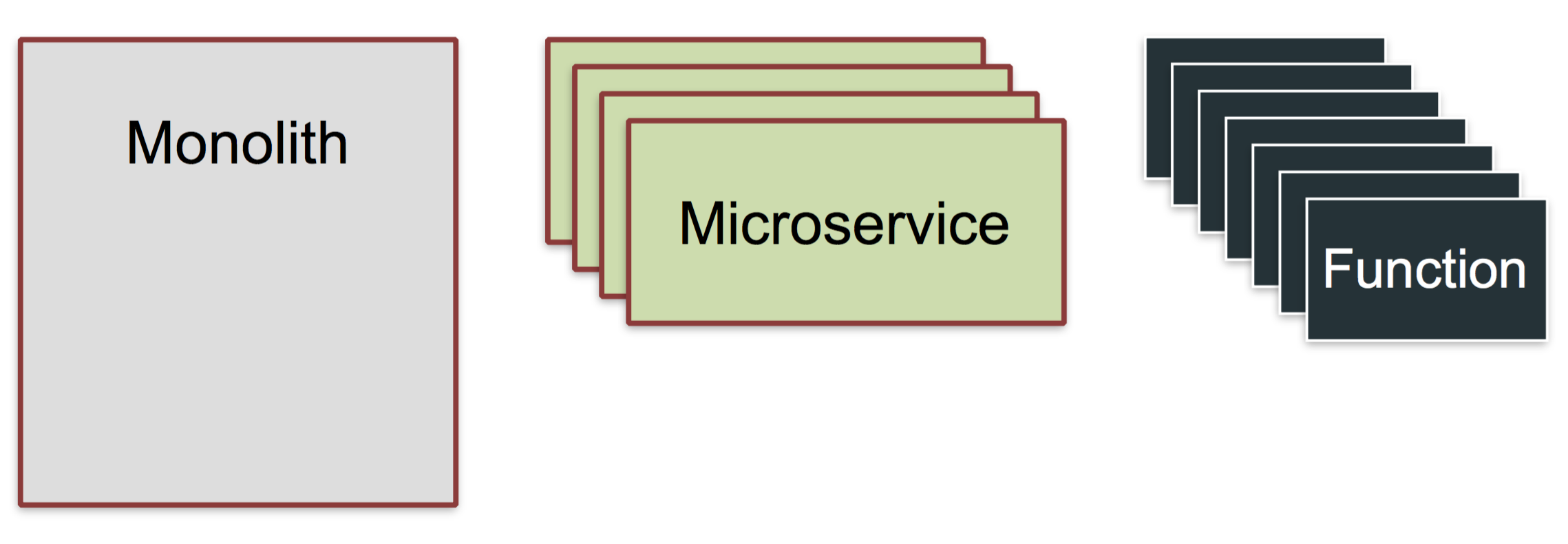

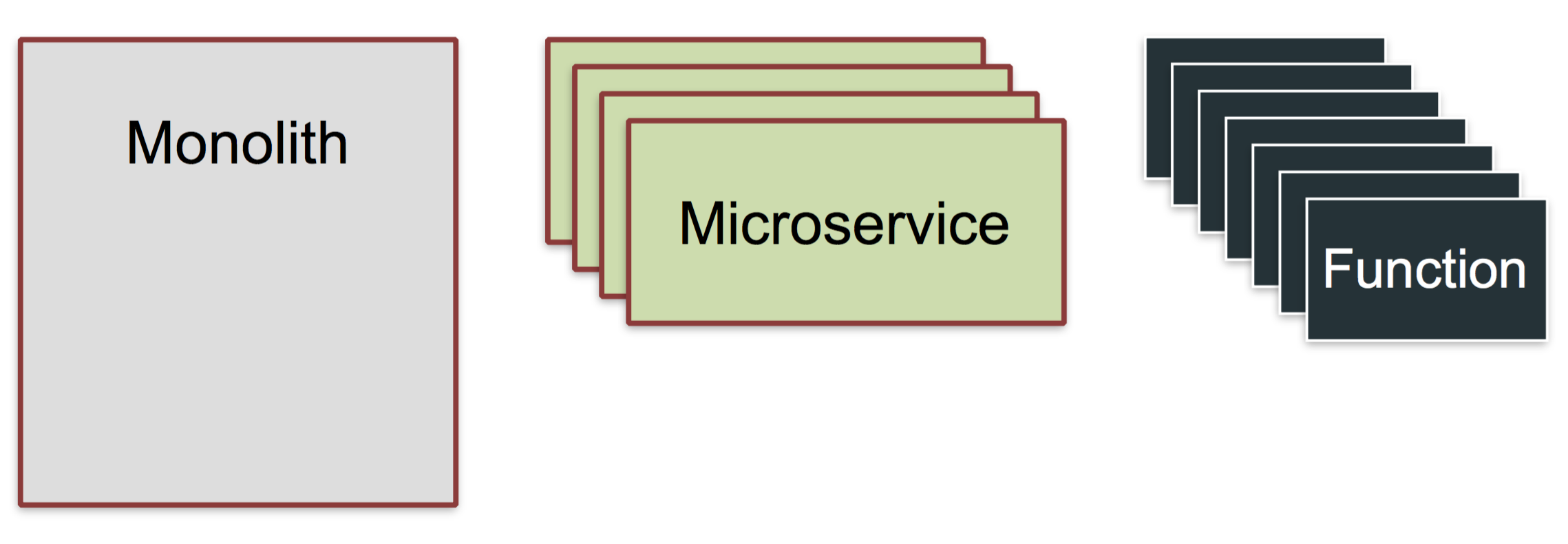

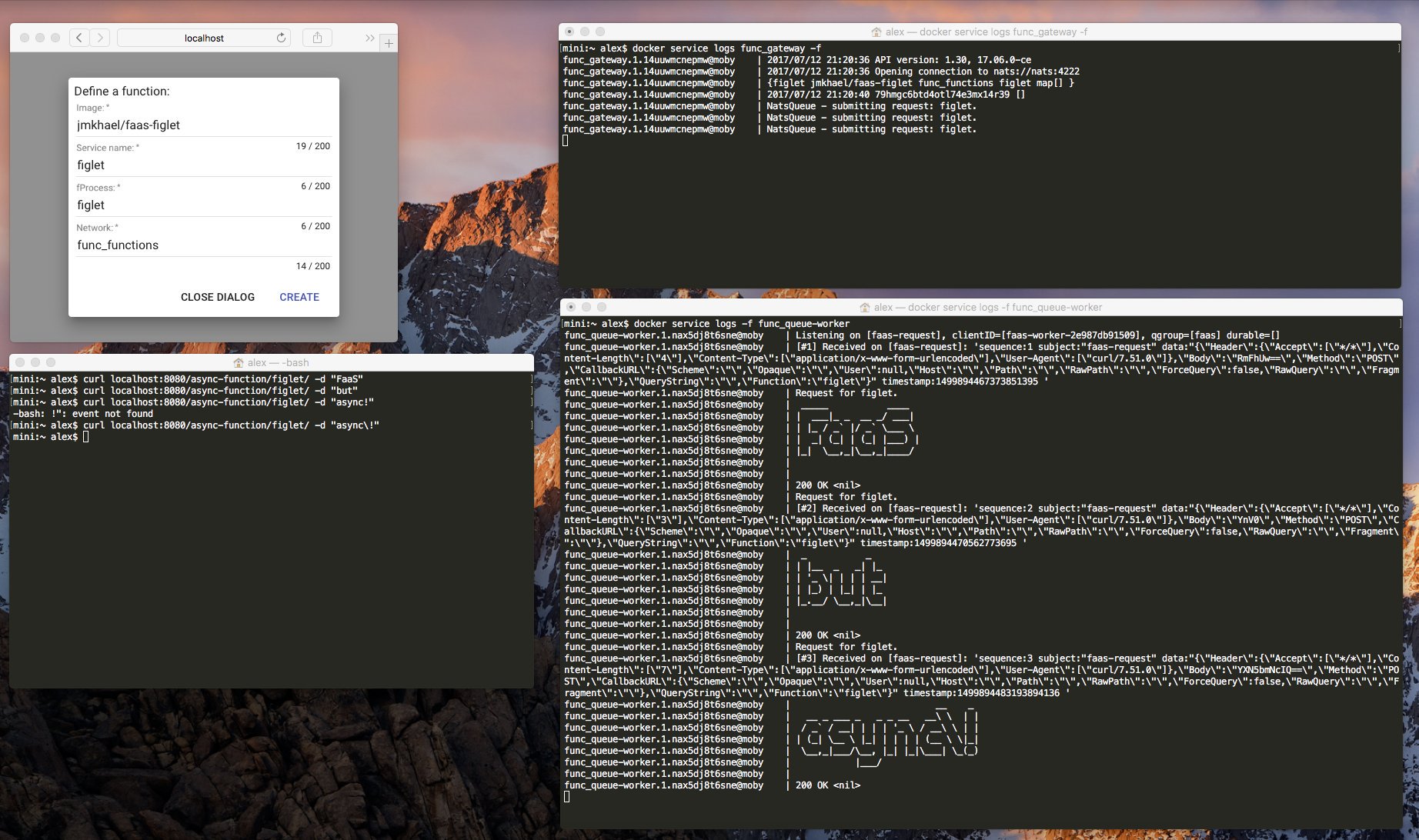

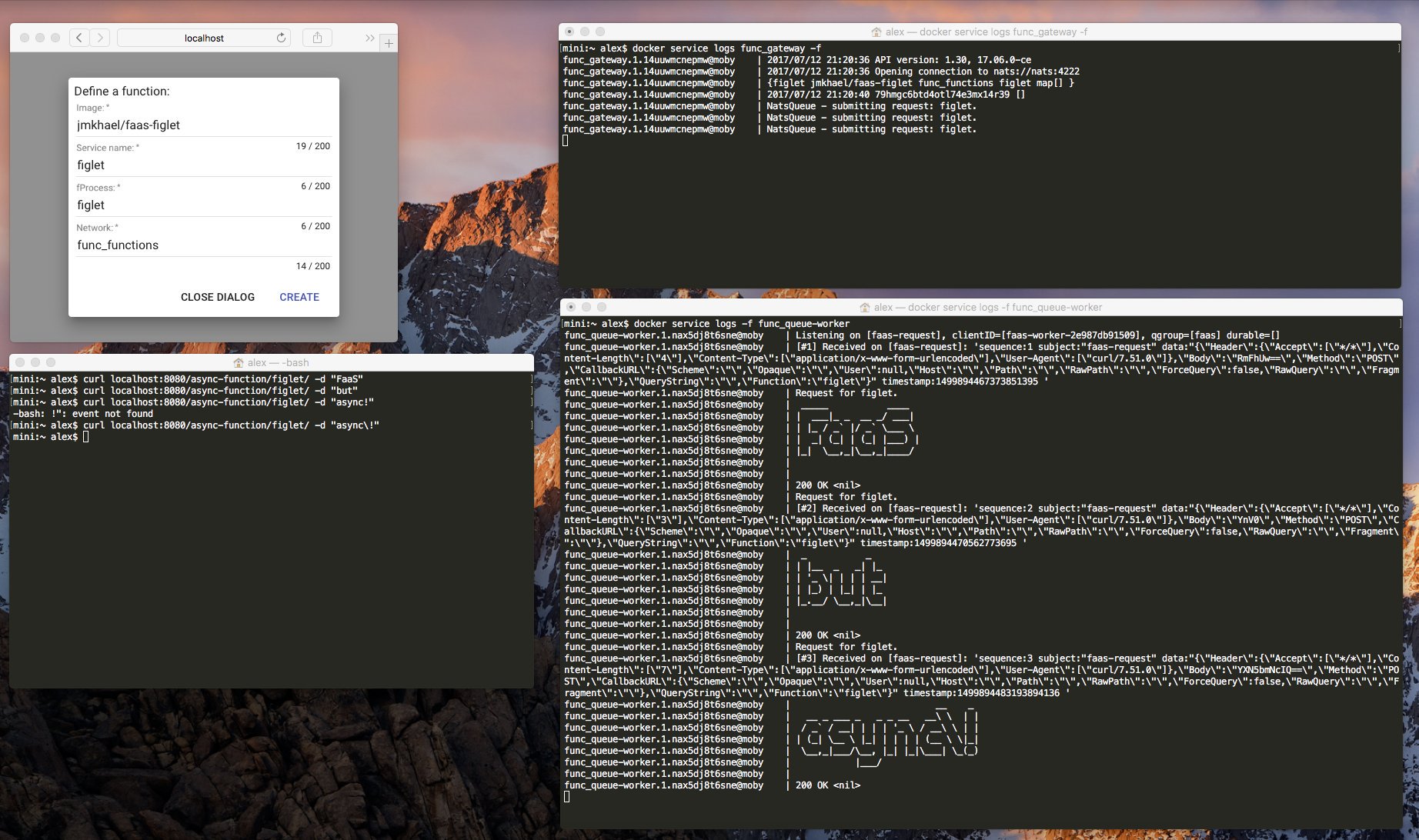


Functions as a Service (OpenFaaS) is a framework for building serverless functions on top of containers. I started the project as a proof of concept last October, when I wanted to find out whether Alexa skills or AWS Lambda functions could run on Docker Swarm. That early success led me to publish the first version of the Golang code on GitHub in December of the same year.

This post gives a quick introduction to serverless computing and covers the three main features that have landed in FaaS over the last 500 commits.


Since the first commit, FaaS has gained popularity: it has received more than 4,000 stars on GitHub (more than 7,000 today; translator's note) and attracted a small community of developers and hackers who have talked about it at meetups, written their own functions and contributed code. A highlight for me was getting a slot in the main-stage Moby Cool Hacks sessions at DockerCon in Austin in April. The brief for those talks was to push the boundaries of what Docker was created for.

What is serverless?

Architecture evolves

Serverless is a poor name. What we are really talking about is a new architectural pattern for event-driven systems. Serverless functions are often used as glue between other services or within an event-driven architecture. We used to call this a service bus.

Serverless is evolution

Serverless functions

A serverless function is a small, discrete and reusable piece of code that:

- is short-lived;

- is not a daemon (long-running);

- does not start TCP services;

- is stateless;

- makes use of your existing services or third-party resources;

- executes in a few seconds (the default in AWS Lambda).

It is also important to distinguish between serverless products offered by IaaS providers and open-source software projects.

On one side are the serverless offerings from IaaS providers, such as AWS Lambda, Google Cloud Functions and Azure Functions; on the other, frameworks such as OpenFaaS let an orchestration platform like Docker Swarm or Kubernetes do the heavy lifting.

Cloud native: use your favorite cluster.

A serverless product from an IaaS vendor is fully managed, so it offers a high degree of convenience and per-second or per-minute billing. The flip side is that you are closely tied to the vendor's release and support cycle. Open-source FaaS exists to provide diversity and choice.

What makes OpenFaaS different?

OpenFaaS is built on technologies that are industry standards in the cloud-native world:

OpenFaaS stack

What sets OpenFaaS apart is that any process can become a serverless function by means of the watchdog component and a Docker container. This means three things:

- you can run code in any programming language;

- for as long as it needs to run;

- anywhere.

Moving to serverless should not mean rewriting your code in another programming language. Just keep using whatever your business and team need.

For example, the `cat` or `sha512sum` binaries can become functions without any changes, since functions communicate through stdin/stdout. Windows functions are also supported in Docker CE.

This is the main difference between FaaS and other open-source serverless frameworks, which depend on a specific runtime for each supported language.
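The stdin/stdout contract is what lets an unmodified binary act as a function. The following is a minimal Python sketch of the watchdog idea: an HTTP server that, for every POST, starts the configured process, writes the request body to its stdin and returns its stdout. It is only an illustration; the real watchdog is a separate Go program. The `fprocess` variable name is assumed to mirror the watchdog's setting, and port 8080 and the 5-second limit are also assumptions.

```python
import os
import shlex
import subprocess
from http.server import BaseHTTPRequestHandler, HTTPServer

# Assumption: the command to run comes from an env var, e.g. fprocess="sha512sum".
FPROCESS = os.environ.get("fprocess", "cat")
EXEC_TIMEOUT = 5  # seconds; assumed limit for a short-lived function


def run_function(cmd, body, timeout=EXEC_TIMEOUT):
    """Start a fresh process, pipe the request body to stdin, return its stdout."""
    result = subprocess.run(
        cmd,
        input=body,
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        timeout=timeout,  # the child is killed and TimeoutExpired raised if exceeded
        check=True,       # a non-zero exit status raises CalledProcessError
    )
    return result.stdout


class WatchdogHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            out = run_function(shlex.split(FPROCESS), body)
            status = 200
        except (subprocess.CalledProcessError, subprocess.TimeoutExpired) as err:
            out = str(err).encode()
            status = 500
        self.send_response(status)
        self.send_header("Content-Length", str(len(out)))
        self.end_headers()
        self.wfile.write(out)


if __name__ == "__main__":
    HTTPServer(("0.0.0.0", 8080), WatchdogHandler).serve_forever()
```

Started with `fprocess=sha512sum python3 watchdog.py`, a request such as `curl -d "hello" http://localhost:8080/` would return the unmodified `sha512sum` binary's output for `hello`. Each request gets a fresh process, which keeps the function stateless.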

Let's look at the three most significant features that have appeared since DockerCon (i.e. from April to August 2017; translator's note): a CLI and function templates, Kubernetes support, and asynchronous processing.

1. New CLI

Simple deployment

A command-line interface (CLI) was added to the FaaS project to simplify deploying functions and to make deployment scriptable. Previously, you could use the API Gateway's user interface (UI) or curl for this. The new CLI lets you define functions in a YAML file and deploy them to the same API Gateway.

Finnian Anderson has written a great introduction to the FaaS CLI on Practical Dev / dev.to.

Utility script and brew

There is a utility script for installing the CLI, and John McCabe helped with the brew recipe:

```shell
$ brew install faas-cli
```

or:

```shell
$ curl -sL https://cli.get-faas.com/ | sudo sh
```

Templates

With templates, you only need to write a handler in your favorite programming language. The CLI then uses a template to build that handler into a Docker container with all the FaaS magic.

Two templates are available, for Python and Node.js, but it is easy to create your own.

The CLI supports three actions:

- `-action build` builds Docker images locally from the templates;
- `-action push` pushes the images to the chosen registry or Docker Hub;
- `-action deploy` deploys the FaaS functions.

If you have a single-node cluster, there is no need to push the images before deploying them.
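The three actions and the single-node shortcut fit naturally into a small wrapper script. This sketch assumes the 2017-era `-action` flag syntax used in this article; later faas-cli releases moved to subcommands such as `faas-cli build`. The `plan` and `run` helper names are hypothetical.

```python
import subprocess


def plan(stack_file, single_node):
    """Return the faas-cli invocations needed to ship the functions in stack_file."""
    actions = ["build", "push", "deploy"]
    if single_node:
        # On a one-node cluster the locally built image is already available,
        # so the push to a registry can be skipped.
        actions.remove("push")
    return [["faas-cli", "-action", action, "-f", stack_file] for action in actions]


def run(stack_file, single_node=False):
    for cmd in plan(stack_file, single_node):
        print("$ " + " ".join(cmd))
        subprocess.run(cmd, check=True)  # stop at the first failing step


if __name__ == "__main__":
    run("./sample.yml", single_node=True)
```

Keeping the plan separate from its execution makes it easy to print or test the sequence without invoking faas-cli.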

Here is an example of a CLI configuration file in YAML format (`sample.yml`):

```yaml
provider:
  name: faas
  gateway: http://localhost:8080

functions:
  url_ping:
    lang: python
    handler: ./sample/url_ping
    image: alexellis2/faas-urlping
```

And here is a minimal (empty) handler for a Python function:

```python
def handle(req):
    print(req)
```

An example that checks the HTTP status code for a URL (`./sample/url_ping/handler.py`):

```python
import requests


def print_url(url):
    try:
        r = requests.get(url, timeout=1)
        print(url + " => " + str(r.status_code))
    except requests.exceptions.Timeout:
        print("Timed out trying to reach URL.")
    except requests.exceptions.RequestException as err:
        # DNS failures, refused connections, invalid URLs, etc.
        print("Could not reach URL: " + str(err))


def handle(req):
    # The request body arrives via stdin and may end with a newline.
    print_url(req.strip())
```

If additional pip modules are required, add a `requirements.txt` file alongside your handler (`handler.py`).

```shell
$ faas-cli -action build -f ./sample.yml
```

After this command, a Docker image named `alexellis2/faas-urlping` will appear. You can push it to Docker Hub with `-action push` and deploy it with `-action deploy`.

The FaaS CLI is available in this repository.
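Before building an image, you can exercise a handler locally by feeding it stdin the way the watchdog does. The sketch below is a stand-in for the template's entry point, assumed to read the whole body from stdin and pass it to `handle()`. It is not the template's actual file, and the echoing `handle` here is a hypothetical placeholder for the `url_ping` handler.

```python
import sys


def handle(req):
    # Hypothetical handler standing in for ./sample/url_ping/handler.py.
    print("received: " + req.strip())


def main(stdin=None):
    """Read the whole request body from stdin and pass it to handle()."""
    stream = stdin if stdin is not None else sys.stdin
    handle(stream.read())


if __name__ == "__main__":
    main()
```

For example, `echo "https://example.com" | python3 local_run.py` prints the trimmed request, which is enough to check the handler's input parsing without Docker.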

2. Kubernetes support

As a Docker Captain, I mainly work with Docker Swarm and write articles about it, but I have always been interested in Kubernetes. Since I started learning how to set up Kubernetes on Linux and Mac, I have written three guides on it, and they were well received by the community.

Designing Kubernetes Support

With a good understanding of how Docker Swarm concepts map to Kubernetes, I built a prototype and ported all the code in a few days. I chose to create a new microservice daemon that talks to Kubernetes rather than add extra dependencies to the main FaaS code base.

FaaS proxies calls to the new daemon through a standard RESTful interface for operations such as Deploy, List, Delete, Invoke and Scale.

This approach means the user interface, CLI and autoscaling work out of the box without any changes. The resulting microservice is maintained in a new GitHub repository called FaaS-netes and is available on Docker Hub. Setting it up on a cluster takes about 60 seconds.
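The split between the gateway and the Kubernetes daemon amounts to an interface: the gateway only knows a handful of operations, and each back end implements them. The sketch below models that contract in Python with an in-memory provider. The class and method names are hypothetical, and this is not the actual FaaS-netes REST API.

```python
from abc import ABC, abstractmethod


class Provider(ABC):
    """Operations the gateway forwards to a back end (Swarm, Kubernetes, ...)."""

    @abstractmethod
    def deploy(self, name, image): ...

    @abstractmethod
    def list_functions(self): ...

    @abstractmethod
    def delete(self, name): ...

    @abstractmethod
    def invoke(self, name, body): ...

    @abstractmethod
    def scale(self, name, replicas): ...


class InMemoryProvider(Provider):
    """Toy back end that keeps state in a dict; a real one would call an orchestrator API."""

    def __init__(self):
        self.functions = {}

    def deploy(self, name, image):
        self.functions[name] = {"image": image, "replicas": 1}

    def list_functions(self):
        return sorted(self.functions)

    def delete(self, name):
        self.functions.pop(name, None)

    def invoke(self, name, body):
        if name not in self.functions:
            raise KeyError(name)
        return body.upper()  # stand-in for actually running the container

    def scale(self, name, replicas):
        self.functions[name]["replicas"] = replicas


class Gateway:
    """The gateway (and so the UI and CLI) depends only on the Provider contract."""

    def __init__(self, provider):
        self.provider = provider

    def handle(self, op, *args):
        return getattr(self.provider, op)(*args)
```

Swapping `InMemoryProvider` for a Swarm- or Kubernetes-backed class leaves `Gateway` untouched. That is the property that lets the UI, CLI and autoscaling keep working unchanged.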

Kubernetes Support Demonstration

In this video, FaaS is deployed to an empty cluster, and the demo then shows how to work with the user interface, Prometheus and autoscaling.

But wait... aren't there other frameworks that work on Kubernetes?

There are probably two categories of serverless frameworks for Kubernetes: those that rely on a highly specific runtime for each supported programming language, and those that, like FaaS, allow any container to become a function.

FaaS has bindings to the native APIs of Docker Swarm and Kubernetes, meaning it uses objects you already know how to manage, such as Deployments and Services. This means there is less magic, and less code to decipher, when you get down to writing new applications.

When choosing a framework, consider whether you want to contribute new features or fixes to the project. For example, OpenWhisk is written in Scala, while most of the others are written in Golang.
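Relying on native objects means a function can map onto an ordinary Deployment plus a Service. The sketch below builds both as Python dicts that could be serialized to JSON or YAML for `kubectl`. The `faas_function` label and port 8080 (where the watchdog is assumed to listen) are illustrative assumptions, not a copy of what FaaS-netes generates. The dicts use the `apps/v1` API group of current Kubernetes releases.

```python
import json


def function_manifests(name, image, replicas=1, port=8080):
    """Return a (Deployment, Service) pair for one function, as plain dicts."""
    labels = {"faas_function": name}  # assumed label tying the objects together
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "selector": labels,  # routes traffic to the Deployment's pods
            "ports": [{"port": port, "targetPort": port, "protocol": "TCP"}],
        },
    }
    return deployment, service


if __name__ == "__main__":
    # Kubernetes object names may not contain underscores, hence "url-ping".
    for obj in function_manifests("url-ping", "alexellis2/faas-urlping"):
        print(json.dumps(obj, indent=2))
```

Because these are the same objects `kubectl get deployments` and `kubectl get services` already show, the usual Kubernetes tooling applies to functions without extra concepts.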

3. Asynchronous processing

One of the defining traits of a serverless function is that it is small and fast: it runs synchronously, usually within a few seconds. But there are several reasons why you might want to process functions asynchronously:

- it is an event and the caller does not need the result;

- execution or initialization takes a long time, e.g. TensorFlow/machine learning;

- a large number of requests are accepted as part of a batch job;

- you want to apply rate limiting.

The asynchronous processing prototype started with a distributed queue. The implementation uses NATS Streaming but could be extended to Kafka or any other queue-like abstraction.
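The queue-based design can be sketched with an in-process queue standing in for NATS Streaming. The caller immediately gets an "accepted" status and a request ID, while worker threads pull requests off the queue and invoke the function later. `AsyncGateway`, `submit` and the use of `queue.Queue` are assumptions for illustration; the actual implementation publishes to NATS Streaming.

```python
import queue
import threading
import uuid


class AsyncGateway:
    """Accept requests immediately and process them later on worker threads."""

    def __init__(self, function, workers=1):
        self.function = function
        self.requests = queue.Queue()  # stands in for NATS Streaming
        self.results = {}
        self.lock = threading.Lock()
        for _ in range(workers):
            threading.Thread(target=self._worker, daemon=True).start()

    def submit(self, body):
        """Enqueue the request and return at once, like an HTTP 202 Accepted."""
        request_id = str(uuid.uuid4())
        self.requests.put((request_id, body))
        return 202, request_id

    def _worker(self):
        while True:
            request_id, body = self.requests.get()
            try:
                result = self.function(body)
            except Exception as err:  # keep the worker alive if the function fails
                result = err
            with self.lock:
                self.results[request_id] = result
            self.requests.task_done()

    def wait(self):
        """Block until every queued request has been processed."""
        self.requests.join()
```

The `workers` count caps how many requests run at once, which is one simple way to get the rate limiting mentioned in the list of motivations. Swapping the in-process queue for a durable broker is what makes the approach survive restarts.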

Illustration from Twitter announcement of asynchronous mode in FaaS

Asynchronous invocation with NATS Streaming as the back end is included in the project's code base. Instructions for using it are available here.

Contributions are welcome

...and it doesn't matter whether you want to help with issues, code features, project releases, scripting, tests, benchmarking, documentation, updating samples, or even writing a blog post about the project.

There is always something for everyone, and all of it helps move the project forward.

Send any feedback, ideas or suggestions to @alexellisuk or via one of the GitHub repositories.

Not sure where to start?

Get inspired by discussions and community function samples, which include machine learning with TensorFlow, ASCII art and simple integrations.

P.S. from the translator

A month ago, the author of this material also published instructions for getting started with OpenFaaS on Kubernetes 1.8 using Minikube.

If you are interested in serverless on Kubernetes, you should also look at (at least) the Kubeless and Fission projects; the author of the article above gives a more complete list. We will probably write more about them on our blog, but for now, see our earlier posts:

Source: https://habr.com/ru/post/344656/
