Sean Barrett: How I Got into the Video Game Industry

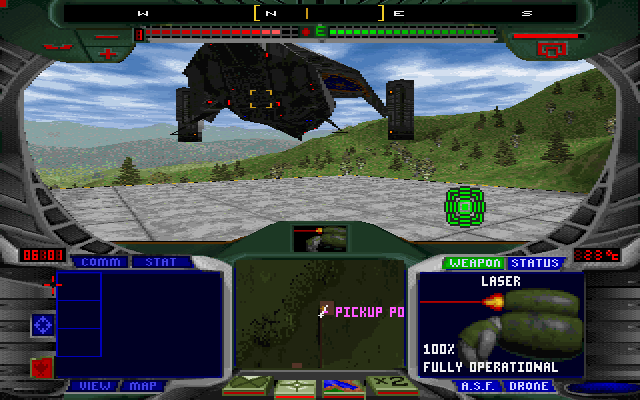

Sean Barrett, a programmer on Terra Nova: Strike Force Centauri, System Shock 2 and the Thief series, talks about what led him to three-dimensional graphics programming and to working on games.

One of Mike Abrash’s posts opens with a discussion of how his written work shaped his career. It reminded me of how his work shaped mine. I'm not sure I ever told him that I may owe my entry into the game industry to him. I thought about writing him a letter, but then decided to make it all public and do a little digging into my own past.


When I was a teenager in the 80s, I had an Atari 800, on which I did the usual things (a few games, mostly BASIC). (Technically, at least at first, it belonged to my family, but I was its only user.) Even though 3D was nearly hopeless on the 800, I became interested in 3D graphics. I learned Pascal and 3D at the same time by deciphering a wireframe graphics program from Byte magazine, and made hacky attempts to reproduce the same effect on the 800. (Eventually I managed to ray trace a single Lambert-lit sphere on the 800, and later checkered and reflective spheres on a friend's Atari ST.)

Looking at Infocom games, each written by a single person, I thought I wanted to do the same “when I grew up”. But by then the industry had started moving toward large teams and Infocom was in decline, so it no longer seemed interesting or viable, and once I went to college I never considered it again.

I stayed interested in computer graphics, and in college I regularly read SIGGRAPH's Computer Graphics and IEEE Computer Graphics and Applications. But I did no more real-time graphics programming, even though I had a personal computer that could display graphics. I didn't play PC games either. When I programmed, I mostly wrote things like text adventure engines and IOCCC entries, and the only games I played were MUDs.

I graduated from college in 1992, when I was 25, and moved to Texas, where I started working at a startup that made software for printers. (This experience may seem of little relevance to the game industry, but in August 1993 I became, as far as I remember, the only programmer writing our new PostScript engine in C++; our previous engine had been written in Forth and 68000 assembly, and I also had to support PostScript Level 2. So I was the sole programmer on a language interpreter and 2D graphics engine built to an Adobe specification. Fifteen years later, in 2008, I started working on Iggy, a Flash-based UI library, which was basically a language interpreter and 2D graphics engine built to an Adobe specification. (This time, though, I went back to C.)) There I joined two friends, John Price and John Davis. I call them friends, but before moving to Texas I knew them only from an LPMud (Darker Realms) where we were administrators together. (To finish off these odd recollections of past acquaintances: we created that LPMud after meeting on another LPMud, Wintermute. One of the Wintermute administrators we emulated (and who visited our MUD a few times) was Tim Kane.)

John, John and I were junior staff and quickly formed a small clique. After work, we played Ultima Underworld, Ultima 7, Ultima Underworld 2 and Ultima 7 Part 2 on the most powerful 386 and 486 machines in the office (I think in 1993 the most powerful was a 486/50). Around the same time, we discovered Mike Abrash’s 1992 graphics programming columns in Dr. Dobb's Journal. John Price and I started writing 3D polygon renderers for the PC, competing with each other (and with Abrash) to see who could make theirs faster. My renderer was pretty fast until I tried to add texture mapping: the first implementation needed two divisions per pixel, which was very slow.
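Why two divisions per pixel? Under perspective, u/z, v/z and 1/z vary linearly across the screen, but u and v do not, so a straightforward perspective-correct texture mapper interpolates the three linear quantities and divides per pixel to recover u and v. The sketch below shows that naive scheme for one horizontal span; the function name and parameters are hypothetical, and this is a reconstruction of the general technique, not the lost 1993 code.

```python
def perspective_span(x0, x1, u0, v0, z0, u1, v1, z1):
    """Texture coordinates (u, v) for every pixel of a span from x0 to x1
    (inclusive), computed the naive way: two divisions per pixel."""
    n = x1 - x0
    # u/z, v/z and 1/z are linear in screen space; u and v are not.
    a0, b0, w0 = u0 / z0, v0 / z0, 1.0 / z0
    a1, b1, w1 = u1 / z1, v1 / z1, 1.0 / z1
    coords = []
    for i in range(n + 1):
        t = i / n if n else 0.0
        a = a0 + (a1 - a0) * t        # u/z at this pixel
        b = b0 + (b1 - b0) * t        # v/z at this pixel
        w = w0 + (w1 - w0) * t        # 1/z at this pixel
        coords.append((a / w, b / w))  # the two per-pixel divisions
    return coords
```

For a span whose depth goes from 1 to 3, the middle pixel gets u = 0.25 rather than the 0.5 a plain linear (affine) interpolation would give. A common way to cut the cost is to compute 1/w once per pixel and multiply twice, or to do the exact divide only every few pixels and interpolate linearly in between.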

After the startup went under in 1994, all three of us got into the game industry, by different routes: John Price's MobyGames page; John Davis's MobyGames page; my MobyGames page, and this one (in fact, I have three pages there, but they are mostly not my projects, just "thanks" credits for my contributions).

As for me, since both Origin and I were in Texas, I decided to look into working there, so I emailed someone who worked at Looking Glass (I knew him only from a Usenet group devoted to strange text essays), trying to find contact information for Origin, since Origin published LGS's games. Instead, he invited me to interview at LGS, and that is essentially the whole story of how I got into the game industry (apart from the demos below).

Would I have started programming graphics on a PC and ended up in the game industry if I hadn't found those Abrash columns at the right moment and gotten into that game design / graphics programming competition? (My co-administrators, including John Price, and I broke away from Wintermute because we strongly believed that a good MUD should strive to entertain its players, and many LPMuds didn't. So our group didn't form entirely by chance, and it's not so strange that we all ended up as game industry programmers.) Maybe yes, maybe no. (And, for that matter, would I have ended up at LGS without a casual acquaintance there? That's harder to judge: the Underworld series was my favorite, so maybe I would have found a way to contact them anyway.)

Reflecting on this, I thought about the Abrash-inspired 3D polygon renderers I wrote. I wondered whether I could find them, so I did some archaeology in my old archived “home” directories. I couldn't find them. However, I did turn up the zip of demos I sent to LGS, and they still run in DOSBox, so I'll share them. (I couldn't find their source code, so some of what follows is guesswork.)

First, for reference, here is Tim Clark's 5 KB “Mars” demo, released in 1993. Sorry for the dropped frames: I don't know how to get Fraps to work properly with DOSBox.

I decided to implement it myself and add new features, so I wrote my own version. I don't remember exactly when; the EXE is dated 1994.

Looking at the readme, I see that I forgot to mention that it is a remake of the Mars demo. Perhaps I assumed the people at LGS knew it. As it turned out, they didn't, so perhaps they were more impressed than they should have been! Besides, they were developing Terra Nova: Strike Force Centauri at the time, which may also have fed their interest.

Note that my demo extends Mars with several different worlds. The first world has two different terrain colors. The second (0:50) has clouds casting shadows on the ground, an effect I never managed to reproduce as well in any other game or engine I wrote. The third world has fog, and the fourth a strange lighting model and swirling clouds. The fifth has a demoscene-style plasma sky that lights the terrain (it's just the inverse of the shadows), while the sixth tries to mimic a lunar landscape.

The banking (sideways tilt) is faked: I think the sky uses a hard-coded routine that draws lines of constant z, and the terrain's baseline is tilted, but the vertical elements don't lean at all. Still, the sky and the overall tilt make the effect quite convincing.
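A faked bank of this kind can be thought of as a shear rather than a rotation: each screen column's baseline is shifted up or down in proportion to its distance from the screen center, while the column is still drawn straight up. The sketch below assumes a column-based terrain renderer with a per-column horizon row; the function and its parameters are hypothetical, not recovered from the demo.

```python
import math

def banked_horizon(width, horizon_y, roll_radians):
    """Per-column horizon row for a faked roll. The baseline tilts, but each
    column is still drawn vertically, so this is a shear, not a rotation."""
    cx = (width - 1) / 2.0
    slope = math.tan(roll_radians)
    return [round(horizon_y + (x - cx) * slope) for x in range(width)]
```

With zero roll every column shares the same horizon row; with a nonzero roll the center column stays put and the edges move in opposite directions. Because vertical features are never rotated, the illusion holds best at small roll angles.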

The slight flicker when moving forward happens because forward movement is quantized to map squares (though sideways movement apparently isn't). That was just a design mistake. Another flicker happens because I start skipping map rows as they recede. Which rows get skipped depends on your position, instead of, say, always skipping odd rows regardless of the parity of the row the player is currently on, which would have made sense. Another design mistake. When I was assigned Terra Nova's terrain renderer (I was already the third person to work on it), I fixed exactly the same mistake there.
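The difference between the two row-skipping rules is easy to see in a small sketch. `SKIP_START` and both functions are hypothetical names for illustration: the first rule thins rows by their distance from the player, the second by their absolute parity in the map.

```python
SKIP_START = 8  # hypothetical: rows at least this far away get thinned out

def draw_row_relative(player_row, world_row):
    """Flawed: thins rows by distance from the player, so the set of drawn
    world rows shifts every time the player moves forward one row."""
    d = world_row - player_row
    return d < SKIP_START or (d - SKIP_START) % 2 == 0

def draw_row_absolute(player_row, world_row):
    """Stable: thins rows by world-space parity, so a distant row is either
    always drawn or always skipped, wherever the player stands."""
    d = world_row - player_row
    return d < SKIP_START or world_row % 2 == 0
```

Stepping the player from row 0 to row 1 flips the relative rule from drawing rows 10, 12, 14 to drawing rows 11, 13, 15, which shows up as flicker; the absolute rule draws rows 10, 12, 14 in both cases.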

I also sent LGS the first real-time texture-mapping engine I wrote. Its map file and textures date from February 1993, about a month after the release of Ultima Underworld 2 and ten months before the release of DOOM.

Underworld had sloped floors and the ability to look up and down, so it needed perspective-correct texture mapping, but it didn't have it. UW1 did all its texture mapping by drawing affine horizontal spans, so walls got no perspective correction, but floors and ceilings were drawn correctly. I didn't realize it then, but UW2 split quadrilaterals into triangles and drew affine triangles, so quads that needed perspective no longer looked stretched and strangely warped; lines stayed straight, but with a visible diagonal seam.
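Why were affine horizontal spans enough for floors but not for walls? As long as the camera doesn't roll, a horizontal screen line crosses a horizontal floor at a single depth, and when depth is constant, affine and perspective-correct interpolation agree. Across a wall, depth changes along the span, and they diverge. A minimal sketch of that comparison; both helper names are hypothetical.

```python
def affine_u(u0, u1, t):
    """Affine: interpolate the texture coordinate directly in screen space."""
    return u0 + (u1 - u0) * t

def perspective_u(u0, z0, u1, z1, t):
    """Perspective-correct: interpolate u/z and 1/z linearly, then divide."""
    num = u0 / z0 + (u1 / z1 - u0 / z0) * t
    den = 1.0 / z0 + (1.0 / z1 - 1.0 / z0) * t
    return num / den
```

For a floor span at constant depth 4, both give u = 4.0 halfway along a span from u = 0 to u = 8. For a wall span whose depth goes from 1 to 3, halfway along a span from u = 0 to u = 1 the affine result is 0.5 but the correct value is 0.25, which is the stretching UW1's walls showed.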

In the demo above, I (I think; at the time all I had were Abrash's columns and Usenet, so maybe someone on Usenet had discussed it?) came up with the idea of texture-mapped walls and floors, which DOOM later demonstrated much better. I think I rendered front to back with a fill buffer to reduce the per-pixel fill cost. As you can see, when I rise above the height of the walls, back faces aren't culled, but the fill buffer still kept the cost down. At some point I tried to rewrite the fill buffer and introduced a bug you can spot: polygons sometimes disappear at the right edge or are sorted incorrectly, although the original engine didn't have this problem.
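One way a front-to-back fill buffer can work, as a minimal sketch assuming polygons are already sorted nearest first and split into horizontal spans: each scanline keeps a list of x-ranges that are already covered, and a new span draws only the parts outside them, so each pixel is shaded at most once. The interval-list representation and the function names are my assumptions, not a description of the lost source.

```python
def uncovered_parts(covered, x0, x1):
    """Pieces of [x0, x1) not already in `covered`, a sorted list of
    disjoint half-open (start, end) intervals."""
    pieces, cur = [], x0
    for c0, c1 in covered:
        if c1 <= cur:
            continue              # interval lies left of what remains
        if c0 >= x1:
            break                 # interval lies right of the span
        if c0 > cur:
            pieces.append((cur, c0))
        cur = max(cur, c1)
        if cur >= x1:
            break
    if cur < x1:
        pieces.append((cur, x1))
    return pieces

def merge(intervals):
    """Coalesce overlapping or touching half-open intervals."""
    out = []
    for a, b in sorted(intervals):
        if out and a <= out[-1][1]:
            out[-1] = (out[-1][0], max(out[-1][1], b))
        else:
            out.append((a, b))
    return out

def render_scanline(width, spans):
    """Draw spans (x0, x1, color) given nearest first. Each pixel is written
    at most once; fully hidden spans cost only the interval test."""
    line = [None] * width
    covered = []
    for x0, x1, color in spans:
        x0, x1 = max(x0, 0), min(x1, width)
        if x0 >= x1:
            continue
        pieces = uncovered_parts(covered, x0, x1)
        for a, b in pieces:
            for x in range(a, b):
                line[x] = color   # stand-in for texturing the pixel
        covered = merge(covered + pieces)
        if covered == [(0, width)]:
            break                 # scanline full: everything else is hidden
    return line
```

Bookkeeping like `uncovered_parts` and `merge` is exactly where subtle mistakes surface as spans vanishing at one edge or the wrong polygon winning, symptoms like those the rewritten fill buffer showed.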

Finally, I had what might be called the first deferred renderer, although it is really neither deferred shading nor deferred lighting. I'll describe it in more detail below.

In this demo, I applied dynamic real-time lighting to a static scene. I imagined it could be used for a game with pre-rendered graphics, à la Alone in the Dark or BioForge. (BioForge wasn't released until 1995, but I definitely saw screenshots of it before I joined LGS.)

First, during startup, it renders a complex scene with non-real-time texture mapping into an offscreen buffer (a g-buffer, if you like), using exact perspective correction. Each g-buffer pixel holds diffuse albedo, specular color and emissive color, but all of it is packed into just 8 bits per pixel (using a palette, distinct from the 8-bit display palette).

In real time, the engine re-renders the same polygons, but without textures, computing only lighting. Diffuse and specular lighting for a white surface are computed at the polygon vertices, and then (I assume) each is interpolated separately across the polygon. For each pixel, the engine loads the value from the g-buffer, packs the diffuse and specular lighting into 8 bits (again, my assumption), and then looks up the display color in a 256x256 table.
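A minimal sketch of how such a 256x256 table could be built and used. Everything concrete here is an assumption for illustration: the 4-bit diffuse plus 4-bit specular packing, the RGB material entries, the additive lighting formula, and the nearest-color search are my choices, not recovered from the demo. In the real scheme the per-vertex diffuse and specular values would be interpolated across the polygon before being packed per pixel.

```python
def pack_light(diffuse, specular):
    """Quantize diffuse and specular intensities in [0, 1] to 4 bits each
    and pack them into one byte (hypothetical 4+4 split)."""
    d = min(15, max(0, int(diffuse * 15 + 0.5)))
    s = min(15, max(0, int(specular * 15 + 0.5)))
    return (d << 4) | s

def nearest(palette, rgb):
    """Index of the display-palette entry closest to rgb."""
    return min(range(len(palette)),
               key=lambda i: sum((p - c) ** 2 for p, c in zip(palette[i], rgb)))

def build_table(materials, display_palette):
    """table[m][l]: display index for material m under packed light l.
    materials: (albedo_rgb, specular_rgb, emissive_rgb) tuples, 0..255.
    With a full 256-entry material palette this is the 256x256 table."""
    table = []
    for albedo, spec, emit in materials:
        row = []
        for light in range(256):
            d = (light >> 4) / 15.0
            s = (light & 15) / 15.0
            rgb = tuple(min(255, a * d + sp * s + e)
                        for a, sp, e in zip(albedo, spec, emit))
            row.append(nearest(display_palette, rgb))
        table.append(row)
    return table

def shade(gbuffer, lightbuf, table):
    """Per pixel, one lookup combines the stored material and the lighting."""
    return [table[m][l] for m, l in zip(gbuffer, lightbuf)]
```

All the expensive work (lighting math and palette matching) is paid once when the table is built; per pixel the cost is a single two-index lookup, which is what makes dynamic lighting over an 8-bit pre-rendered scene affordable.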

Note that since lighting is computed only at the vertices, its quality isn't very high. I think I figured we could dynamically subdivide polygons that needed better lighting if it came to that (some polygons are subdivided in advance to improve their lighting).
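A small 1D sketch of why subdivision helps: with a point light just above the middle of a large floor polygon, lighting computed only at the corners and interpolated misses the bright spot entirely, while adding one vertex under the light recovers it. The floor setup, the lack of distance falloff and both function names are illustrative assumptions.

```python
import math

def lambert_at(x, light_x, light_h):
    """Diffuse intensity on a flat floor (normal pointing up) at position x,
    lit by a point light at (light_x, light_h); distance falloff ignored."""
    dx, dy = light_x - x, light_h
    return dy / math.hypot(dx, dy)   # cosine of the angle of incidence

def vertex_lit(xs, light_x, light_h, x):
    """Light only at the sorted vertex positions xs, then interpolate
    linearly to x, as per-vertex (Gouraud-style) lighting does."""
    vals = [lambert_at(v, light_x, light_h) for v in xs]
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return vals[i] + (vals[i + 1] - vals[i]) * t
    raise ValueError("x lies outside the polygon")
```

With vertices only at -4 and 4 and the light one unit above 0, the interpolated value at the center is about 0.24 instead of the true 1.0; adding a vertex at 0 makes it exact there.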

At LGS I eventually returned to this idea, creating something like an 8-bit sprite combined with a normal map: each sprite pixel had an 8-bit color and an 8-bit “lighting index”. Conceptually, the lighting index indexed into a palette of normals, but in practice the index produced directional lighting, both diffuse and specular, as well as self-shadowing. (For example, pre-render the lighting with these effects from N light directions; now treat the result as a sprite with N 8-bit color channels, one per lighting angle, and then compute an 8-bit palette for this “deep color” sprite, for example with vector quantization. For real-time rendering, blend between the k closest light directions. The high-resolution detail and 8-bit output hide most of the artifacts.)
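The real-time half of that scheme might look roughly like the sketch below: find the k pre-rendered directions closest to the current light and blend the corresponding channels of the pixel's palette entry. Weighting by the dot product and storing a single grayscale intensity per direction are my simplifying assumptions; the text does not say how the blend was weighted, and the helper names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def closest_directions(dirs, light, k):
    """The k pre-rendered light directions most aligned with `light`
    (all unit vectors), with weights proportional to their alignment."""
    nearest = sorted(range(len(dirs)), key=lambda i: -dot(dirs[i], light))[:k]
    weights = [max(0.0, dot(dirs[i], light)) for i in nearest]
    total = sum(weights) or 1.0   # avoid dividing by zero if none face the light
    return [(i, w / total) for i, w in zip(nearest, weights)]

def shade_pixel(palette, lighting_index, picks):
    """palette[lighting_index] holds one pre-rendered intensity per light
    direction (the 'deep color' entry); blend the picked directions."""
    entry = palette[lighting_index]
    return sum(w * entry[i] for i, w in picks)
```

The direction search and weights depend only on the light, so they can be computed once per sprite per frame; the per-pixel work is then a handful of multiply-adds on the palette entry, and the result would still be mapped back to 8-bit output.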

However, after Terra Nova I never worked on a game with sprite characters, so this became yet another of my dozen or so real-time graphics inventions that never got used.

Source: https://habr.com/ru/post/344004/
