Analyze it - Lenta.ru

Analyze it. Lenta.ru (part 1)

What, How, Why

For those who are too lazy to read - link to datasets below the article.

What - analysis of articles news resource Lenta.ru over the past 18 years (from September 1, 1999). How - by means of the R language (with the involvement of the MySterm program from Yandex on a separate site). Why ... In my case, the short answer to the question “why” will be “gaining experience” in Big Data. A more detailed explanation would be "the fulfillment of a real task, within which I can apply the skills acquired during training, as well as get a result that I could show as confirmation of my skills."

My background is 15 years as a 1C programmer and the first 5 courses in the Data Science specialization from Coursera.org, which basically gave a basis and work with R. Well, you can add experience writing small procedures on Basic, Pascal and Java 17-18 years backwards Despite the fact that in the current profession 80lvl is almost reached, no job has yet been sent from Google. Therefore, it was decided to put on the mainstream and touch the bigdate, since the threshold of entry is relatively lower compared to the same Java.

Of course, having decided within myself that I need practice and a portfolio, I would have to grab a couple of datasets, of which the sea is now, and analyze, analyze, analyze ... But having figured my skills in my head by directly analyzing and remembering that analysis is only 20-30% time and the rest is a search-collection-survey-data preparation, decided to take on the second. Yes, and I wanted something special, perhaps even interesting, in contrast to the analysis of air tickets sold in the USA over the past 30 years or the statistics of arrests.

Lenta.ru was chosen as an object of research. In part, because I am a long-time reader of it, although I regularly spit on the slag that slips past the editors (if there are any at all). In part, because it seemed relatively easy for data mining. However, to be honest, when approaching the choice of an object, I practically did not take into account the questions "and what will I do with this date" and "what questions will I ask." And this is due to the fact that at the moment I have more or less mastered only Getting and Cleaning Data and my knowledge of analysis is very poor. Of course, I imagined that at a minimum I could answer the question "how the average daily amount of news published has changed over the last 5-10 years," but I did not think beyond that.

And so, this article will be devoted to the extraction and cleaning of data that will be suitable for the analysis of Lenta.ru.

Grabbing

First of all, I needed to decide how to grab and parse the contents of the resource pages. Google suggested that the best option would be to use the rvest package, which at the same time allows you to get the text of the page by its address and using xPath to pull the contents of the fields I need. Of course, moving on, I had to split this task into two - getting pages and direct parsing, but I realized this later, but for now the first step was to get a list of links to the articles themselves.

After a brief study, the site had an Archive section that, using a simple script, redirected me to a page containing links to all the news for a specific date and the path to this page looked like https://lenta.ru/2017/07/01 / or https://lenta.ru/2017/03/09/ . It only remained to go through all these pages and get these latest news links.

For these purposes (grabbing and parsing) one way or another I used the following packages:

require(lubridate) require(rvest) require(dplyr) require(tidyr) require(purrr) require(XML) require(data.table) require(stringr) require(jsonlite) require(reshape2) Not a tricky code that allowed to get all the links to all articles in the last 8 years:

articlesStartDate <- as.Date("2010-01-01") articlesEndDate <- as.Date("2017-06-30") ## STEP 1. Prepare articles links list # Dowload list of pages with archived articles. # Takes about 40 minutes GetNewsListForPeriod <- function() { timestamp() # Prepare vector of links of archive pages in https://lenta.ru//yyyy/mm/dd/ format dayArray <- seq(as.Date(articlesStartDate), as.Date(articlesEndDate), by="days") archivePagesLinks <- paste0(baseURL, "/", year(dayArray), "/", formatC(month(dayArray), width = 2, format = "d", flag = "0"), "/", formatC(day(dayArray), width = 2, format = "d", flag = "0"), "/") # Go through all pages and extract all news links articlesLinks <- c() for (i in 1:length(archivePagesLinks)) { pg <- read_html(archivePagesLinks[i], encoding = "UTF-8") linksOnPage <- html_nodes(pg, xpath=".//section[@class='b-longgrid-column']//div[@class='titles']//a") %>% html_attr("href") articlesLinks <- c(articlesLinks, linksOnPage) saveRDS(articlesLinks, file.path(tempDataFolder, "tempArticlesLinks.rds")) } # Add root and write down all the news links articlesLinks <- paste0(baseURL, articlesLinks) writeLines(articlesLinks, file.path(tempDataFolder, "articles.urls")) timestamp() } 2017-06-30 generated an array of dates from 2010-01-01 to 2017-06-30 and transforming archivePagesLinks , I received links to all the so-called "archive pages":

> head(archivePagesLinks) [1] "https://lenta.ru/2010/01/01/" [2] "https://lenta.ru/2010/01/02/" [3] "https://lenta.ru/2010/01/03/" [4] "https://lenta.ru/2010/01/04/" [5] "https://lenta.ru/2010/01/05/" [6] "https://lenta.ru/2010/01/06/" > length(archivePagesLinks) [1] 2738 Using the read_html method read_html I “downloaded” the contents of the pages into the buffer in a loop, and with the help of the html_nodes and html_attr methods html_attr received direct links to articles, of which almost 400 were published:

> head(articlesLinks) [1] "https://lenta.ru/news/2009/12/31/kids/" [2] "https://lenta.ru/news/2009/12/31/silvio/" [3] "https://lenta.ru/news/2009/12/31/postpone/" [4] "https://lenta.ru/photo/2009/12/31/meeting/" [5] "https://lenta.ru/news/2009/12/31/boeviks/" [6] "https://lenta.ru/news/2010/01/01/celebrate/" > length(articlesLinks) [1] 379862 After getting the first results, I realized the problem. The code above was about 40 . Considering the fact that during this time 2738 links were processed, it can be calculated that it 379862 5550 minutes to process 379862 links, or 92 , that you will agree to no gate ... Built-in methods readLines {base} and download.file {utils} , which allowed just to get the text, gave similar results. The htmlParse {XML} method, which, like read_html , allowed to download and continue content parsing, did not improve the situation either. The same result using getURL {RCurl} . Dead end.

In search of a solution to the problem, I and Google decided to look in the direction of the "parallel" execution of my requests, so at the time the code worked, neither the network, nor the memory, nor the processor were loaded. Google prompted to dig in the direction of parallel-package {parallel} . A few hours of study and tests have shown that there is no profit with running even in two "parallel" threads. Scanty information in Google said that using this package you can parallelize any calculations or data manipulations, but when working with a disk or an external source, all requests are performed within a single process and are lined up (I could be mistaken in understanding the situation). Yes, and as I understood, even if the topic had taken off, it was worth only expecting an increase in performance multiple of the existing cores, i.e even with 8 pieces (which I didn't have) and real parallelism, I had to smoke for about 690 minutes.

The next idea was to run in parallel several processes R, in which their own part of a large list of links would be processed. However, Google didn’t say anything to the question “how to start several new R sessions from session R”. I also thought about the option of launching the R-script via the command line, but the experience with CMD was at the level of "type dir and get a list of files in the folder". I was again at an impasse.

When Google stopped giving me new results, with a clear conscience I decided to ask for help from the audience. Since Google quite often gave out stackoverflow , I decided to try my luck there. Having experience in thematic forums and knowing the reaction to newcomers' questions, I tried to state the problem as clearly and clearly as possible. And about a miracle, after a few hours I received from Bob Rudis a more than detailed answer, which, after being inserted into my code, almost completely solved my problem. True with a caveat: I absolutely did not understand how it works. The first time I heard about wget , I didn’t understand what the code does with the WARC and why they transfer the function to the method (I repeat, I didn’t finish the seminaries and didn’t use tricks in my previous language). However, if you look at the code for a long, long time, then enlightenment still comes. And by adding attempts to perform it piece by piece, examining the function by function, you can achieve certain results. Well, the same Google helped me with wget .

In spite of the fact that all development was conducted in the macOS environment, the need to use wget (and later MyStem, which worked only on Windows), had to narrow the runtime to Windows. I have an idea that curl can do something like that, and maybe I will take the time and try to implement it with it, but for now I decided not to stop at the place and move on.

As a result, the essence of the decision was reduced to the following - a previously prepared file containing links to articles was slipped to the wget command:

wget --warc-file=lenta -i lenta.urls Directly the execution code looked like this:

system("wget --warc-file=lenta -i lenta.urls", intern = FALSE) After execution, I received a bunch of files (one for each transmitted link) with html content of web pages. Also at my disposal was a packed WARC , which contained a log of communication with the resource, as well as the very contents of the web pages. That WARC and offered to parse Bob Rudis . Exactly what is needed. And I left copies of the read pages, which made it possible for them to re-read.

The first measurements of performance showed that 10 were spent for downloading 2000 links, and an extrapolation method (I wanted to use this word for a long time) received 1890 for all articles - “Olmost three times fast baht music notes” - almost three times faster, but still not enough. Returning a couple of steps back and testing a new mechanism with regard to parallel-package {parallel} , I realized that there is no profit here either.

There was a knight's turn. Run several truly parallel processes. Taking into account the fact that I already took a step aside from "reproducible research" (the principle we talked about in the courses) (using the external program wget and actually tying the implementation to the Windows environment), I decided to take another step and return to the idea running parallel processes, however, already outside R. How to make the CMD file execute several consecutive commands, without waiting for the previous one (read in parallel), the same stackoverflow told. It turned out that the excellent START command allows you to run a command in a separate window. Armed with this knowledge, I delivered the following code:

## STEP 2. Prepare wget CMD files for parallel downloading # Create CMD file. # Downloading process for 400K pages takes about 3 hours. Expect about 70GB # in html files and 12GB in compressed WARC files CreateWgetCMDFiles <- function() { timestamp() articlesLinks <- readLines(file.path(tempDataFolder, "articles.urls")) dir.create(warcFolder, showWarnings = FALSE) # Split up articles links array by 10K links numberOfLinks <- length(articlesLinks) digitNumber <- nchar(numberOfLinks) groupSize <- 10000 filesGroup <- seq(from = 1, to = numberOfLinks, by = groupSize) cmdCodeAll <- c() for (i in 1:length(filesGroup)) { # Prepare folder name as 00001-10000, 10001-20000 etc firstFileInGroup <- filesGroup[i] lastFileInGroup <- min(firstFileInGroup + groupSize - 1, numberOfLinks) leftPartFolderName <- formatC(firstFileInGroup, width = digitNumber, format = "d", flag = "0") rigthPartFolderName <- formatC(lastFileInGroup, width = digitNumber, format = "d", flag = "0") subFolderName <- paste0(leftPartFolderName, "-", rigthPartFolderName) subFolderPath <- file.path(downloadedArticlesFolder, subFolderName) dir.create(subFolderPath) # Write articles.urls for each 10K folders that contains 10K articles urls writeLines(articlesLinks[firstFileInGroup:lastFileInGroup], file.path(subFolderPath, "articles.urls")) # Add command line in CMD file that will looks like: # 'START wget --warc-file=warc\000001-010000 -i 000001-010000\list.urls -P 000001-010000' cmdCode <-paste0("START ..\\wget -i ", subFolderName, "\\", "articles.urls -P ", subFolderName) # Use commented code below for downloading with WARC files: #cmdCode <-paste0("START ..\\wget --warc-file=warc\\", subFolderName," -i ", # subFolderName, "\\", "articles.urls -P ", subFolderName) cmdCodeAll <- c(cmdCodeAll, cmdCode) } # Write down command file cmdFile <- file.path(downloadedArticlesFolder, "start.cmd") writeLines(cmdCodeAll, cmdFile) print(paste0("Run ", cmdFile, " to start downloading.")) print("wget.exe should be placed in working directory.") timestamp() } This code breaks an array of links to blocks of 10,000 pieces (in my case, 38 blocks turned out). For each block in the cycle, a folder of the type 00001-10000 , 10001-20000 , etc. is created, into which your own "articles.urls" file (with its 10,000 links) will be added and the downloaded files will also be there. In the same cycle, a CMD file is collected, which must simultaneously run 38 windows:

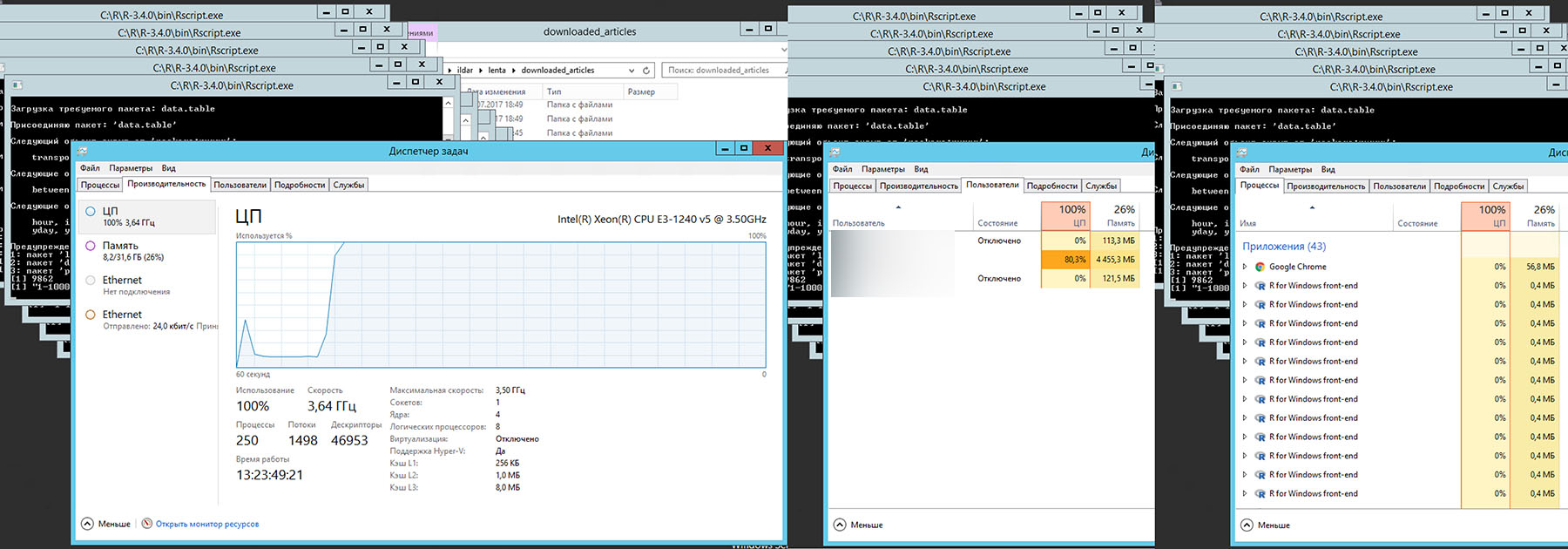

START ..\wget --warc-file=warc\000001-010000 -i 000001-010000\articles.urls -P 000001-010000 START ..\wget --warc-file=warc\010001-020000 -i 010001-020000\articles.urls -P 010001-020000 START ..\wget --warc-file=warc\020001-030000 -i 020001-030000\articles.urls -P 020001-030000 ... START ..\wget --warc-file=warc\350001-360000 -i 350001-360000\articles.urls -P 350001-360000 START ..\wget --warc-file=warc\360001-370000 -i 360001-370000\articles.urls -P 360001-370000 START ..\wget --warc-file=warc\370001-379862 -i 370001-379862\articles.urls -P 370001-379862 Launching the generated CMD file launches the expected 38 windows with the wget command and gives the following computer boot with the following characteristics 3.5GHz Xeon E-1240 v5, 32Gb, SSD, Windows Server 2012

The total time is 180 or 3 . The “crutch” parallelism yielded almost 10- gain compared to single-threaded execution of wget and 30- gain relative to the initial use read_html {rvest} . It was the first small victory and I had to use a similar “crutch” approach several more times later.

The result of the execution on the hard disk was as follows:

> indexFiles <- list.files(downloadedArticlesFolder, full.names = TRUE, recursive = TRUE, pattern = "index") > length(indexFiles) [1] 379703 > sum(file.size(indexFiles))/1024/1024 [1] 66713.61 > warcFiles <- list.files(downloadedArticlesFolder, full.names = TRUE, recursive = TRUE, pattern = "warc") > length(warcFiles) [1] 38 > sum(file.size(warcFiles))/1024/1024 [1] 18770.4 Which means 379703 downloaded web pages with a total size of 66713.61MB and 38 compressed WARC files with a total size of 18770.40MB . A simple calculation showed that I had “lost” 159 pages. Perhaps their fate can be learned by parsing the WARC files, following the example of Bob Rudis , but I decided to write them off for error and go my own way, directly by 379703 files.

Parsing

Before pulling something from the downloaded page, I had to determine what exactly to pull out, what kind of information I might be interested in. After a long study of the contents of the pages, prepared the following code:

# Parse srecific file # Parse srecific file ReadFile <- function(filename) { pg <- read_html(filename, encoding = "UTF-8") # Extract Title, Type, Description metaTitle <- html_nodes(pg, xpath=".//meta[@property='og:title']") %>% html_attr("content") %>% SetNAIfZeroLength() metaType <- html_nodes(pg, xpath=".//meta[@property='og:type']") %>% html_attr("content") %>% SetNAIfZeroLength() metaDescription <- html_nodes(pg, xpath=".//meta[@property='og:description']") %>% html_attr("content") %>% SetNAIfZeroLength() # Extract script contect that contains rubric and subrubric data scriptContent <- html_nodes(pg, xpath=".//script[contains(text(),'chapters: [')]") %>% html_text() %>% strsplit("\n") %>% unlist() if (is.null(scriptContent[1])) { chapters <- NA } else if (is.na(scriptContent[1])) { chapters <- NA } else { chapters <- scriptContent[grep("chapters: ", scriptContent)] %>% unique() } articleBodyNode <- html_nodes(pg, xpath=".//div[@itemprop='articleBody']") # Extract articles body plaintext <- html_nodes(articleBodyNode, xpath=".//p") %>% html_text() %>% paste0(collapse="") if (plaintext == "") { plaintext <- NA } # Extract links from articles body plaintextLinks <- html_nodes(articleBodyNode, xpath=".//a") %>% html_attr("href") %>% unique() %>% paste0(collapse=" ") if (plaintextLinks == "") { plaintextLinks <- NA } # Extract links related to articles additionalLinks <- html_nodes(pg, xpath=".//section/div[@class='item']/div/..//a") %>% html_attr("href") %>% unique() %>% paste0(collapse=" ") if (additionalLinks == "") { additionalLinks <- NA } # Extract image Description and Credits imageNodes <- html_nodes(pg, xpath=".//div[@class='b-topic__title-image']") imageDescription <- html_nodes(imageNodes, xpath="div//div[@class='b-label__caption']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() imageCredits <- html_nodes(imageNodes, xpath="div//div[@class='b-label__credits']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() # Extract video Description and Credits if (is.na(imageDescription)&is.na(imageCredits)) { videoNodes <- html_nodes(pg, xpath=".//div[@class='b-video-box__info']") videoDescription <- html_nodes(videoNodes, xpath="div[@class='b-video-box__caption']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() videoCredits <- html_nodes(videoNodes, xpath="div[@class='b-video-box__credits']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() } else { videoDescription <- NA videoCredits <- NA } # Extract articles url url <- html_nodes(pg, xpath=".//head/link[@rel='canonical']") %>% html_attr("href") %>% SetNAIfZeroLength() # Extract authors authorSection <- html_nodes(pg, xpath=".//p[@class='b-topic__content__author']") authors <- html_nodes(authorSection, xpath="//span[@class='name']") %>% html_text() %>% SetNAIfZeroLength() if (length(authors) > 1) { authors <- paste0(authors, collapse = "|") } authorLinks <- html_nodes(authorSection, xpath="a") %>% html_attr("href") %>% SetNAIfZeroLength() if (length(authorLinks) > 1) { authorLinks <- paste0(authorLinks, collapse = "|") } # Extract publish date and time datetimeString <- html_nodes(pg, xpath=".//div[@class='b-topic__info']/time[@class='g-date']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() datetime <- html_nodes(pg, xpath=".//div[@class='b-topic__info']/time[@class='g-date']") %>% html_attr("datetime") %>% unique() %>% SetNAIfZeroLength() if (is.na(datetimeString)) { datetimeString <- html_nodes(pg, xpath=".//div[@class='b-topic__date']") %>% html_text() %>% unique() %>% SetNAIfZeroLength() } data.frame(url = url, filename = filename, metaTitle= metaTitle, metaType= metaType, metaDescription= metaDescription, chapters = chapters, datetime = datetime, datetimeString = datetimeString, plaintext = plaintext, authors = authors, authorLinks = authorLinks, plaintextLinks = plaintextLinks, additionalLinks = additionalLinks, imageDescription = imageDescription, imageCredits = imageCredits, videoDescription = videoDescription, videoCredits = videoCredits, stringsAsFactors=FALSE) } To start the title. Initially, I received from the title something like : : : Lenta.ru , hoping to split the title into a direct title and into a rubric with a subheading. However, then I decided to tighten up the title in pure form from the page metadata under the safety net.

The date and time I get from <time class="g-date" datetime="2017-07-10T12:35:00Z" itemprop="datePublished" pubdate=""> 15:35, 10 2017</time> , and I decided to get for safety not only 2017-07-10T12:35:00Z , but also the text presentation 15:35, 10 2017 and as it turned out not in vain. This textual representation allowed to get article time for cases when time[@class='g-date'] for some reason was missing on the page.

Authorship in the articles is extremely rare, but I still decided to pull this information, just in case. Also, it seemed to me interesting links that appeared in the texts of the articles themselves and under them in the section "Related Links". I spent a little more time than I would like to correctly parse information about pictures and videos at the beginning of the article, but also just in case.

To get the heading and subheadings (I originally wanted to pull it out of the headline) I decided to play it safe and save the line chapters: ["","","lenta.ru:_:_:_______"], // Chapters and she pull out the "World" and "Society" will be slightly easier than from the title.

Of particular interest to me was the number of shareings, the number of comments on the article, and of course the comments themselves (dictionary content, temporary activity), since that was the only information about how readers reacted to the article. But it was the most interesting thing that didn’t work for me. Counters of the number of ball and cam are set by a script that executes after the page loads. And all my super-tricky code was pumping the page up to this point, leaving the corresponding fields empty. Comments are also loaded by the script, in addition, they are disabled for articles after some time and it is not possible to get them. But I still work on this issue, since I still want to see the dependence of the presence of the words Ukraine / Putin / Candolysis and the number of sracha in the kammenta.

In fact, thanks to the help of the former colleague, I did manage to get to this information, but this will be discussed below.

That's basically all the information that I found useful.

The following code allowed me to start the parsing of files located in the first folder (out of 38):

folderNumber <- 1 # Read and parse files in folder with provided number ReadFilesInFolder <- function(folderNumber) { timestamp() # Get name of folder that have to be parsed folders <- list.files(downloadedArticlesFolder, full.names = FALSE, recursive = FALSE, pattern = "-") folderName <- folders[folderNumber] currentFolder <- file.path(downloadedArticlesFolder, folderName) files <- list.files(currentFolder, full.names = TRUE, recursive = FALSE, pattern = "index") # Split files in folder in 1000 chunks and parse them using ReadFile numberOfFiles <- length(files) print(numberOfFiles) groupSize <- 1000 filesGroup <- seq(from = 1, to = numberOfFiles, by = groupSize) dfList <- list() for (i in 1:length(filesGroup)) { firstFileInGroup <- filesGroup[i] lastFileInGroup <- min(firstFileInGroup + groupSize - 1, numberOfFiles) print(paste0(firstFileInGroup, "-", lastFileInGroup)) dfList[[i]] <- map_df(files[firstFileInGroup:lastFileInGroup], ReadFile) } # combine rows in data frame and write down df <- bind_rows(dfList) write.csv(df, file.path(parsedArticlesFolder, paste0(folderName, ".csv")), fileEncoding = "UTF-8") } Having received the address of a folder of the type 00001-10000 and its contents, I broke the array of files into blocks of 1000 and in a cycle using map_df started my ReadFile function for each such block.

The measurements showed that it took about 8 to process 10000 articles (and the read_html method was 95% time). All the same extrapolation received 300 or 5 .

And here is the promised second move of the knight. Running a truly parallel sessions R (good experience with CMD already had). Therefore, using this script, I got the required CMD file:

## STEP 3. Parse downloaded articles # Create CMD file for parallel articles parsing. # Parsing process takes about 1 hour. Expect about 1.7Gb in parsed files CreateCMDForParsing <- function() { timestamp() # Get list of folders that contain downloaded articles folders <- list.files(downloadedArticlesFolder, full.names = FALSE, recursive = FALSE, pattern = "-") # Create CMD contains commands to run parse.R script with specified folder number nn <- 1:length(folders) cmdCodeAll <- paste0("start C:/R/R-3.4.0/bin/Rscript.exe ", file.path(getwd(), "parse.R "), nn) cmdFile <- file.path(downloadedArticlesFolder, "parsing.cmd") writeLines(cmdCodeAll, cmdFile) print(paste0("Run ", cmdFile, " to start parsing.")) timestamp() } View:

start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 1 start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 2 start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 3 ... start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 36 start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 37 start C:/R/R-3.4.0/bin/Rscript.exe C:/Users/ildar/lenta/parse.R 38 Which in turn launched 38 scripts for execution:

args <- commandArgs(TRUE) n <- as.integer(args[1]) # Set workling directory and locale for macOS and Windows if (Sys.info()['sysname'] == "Windows") { workingDirectory <- paste0(Sys.getenv("HOMEPATH"), "\\lenta") Sys.setlocale("LC_ALL", "Russian") } else { workingDirectory <- ("~/lenta") Sys.setlocale("LC_ALL", "ru_RU.UTF-8") } setwd(workingDirectory) source("get_lenta_articles_list.R") ReadFilesInFolder(n)

Such parallelization allowed us to load the server 100% and complete the task in 30 . Another 10- win.

It remains only to execute a script that will merge 38 files just created:

## STEP 4. Prepare combined articles data # Read all parsed csv and combine them in one. # Expect about 1.7Gb in combined file UnionData <- function() { timestamp() files <- list.files(parsedArticlesFolder, full.names = TRUE, recursive = FALSE) dfList <- c() for (i in 1:length(files)) { file <- files[i] print(file) dfList[[i]] <- read.csv(file, stringsAsFactors = FALSE, encoding = "UTF-8") } df <- bind_rows(dfList) write.csv(df, file.path(parsedArticlesFolder, "untidy_articles_data.csv"), fileEncoding = "UTF-8") timestamp() } And in the end, we have on the 1.739MB disk a crude and unreduced date:

> file.size(file.path(parsedArticlesFolder, "untidy_articles_data.csv"))/1024/1024 [1] 1739.047 What is inside?

> str(dfM, vec.len = 1) 'data.frame': 379746 obs. of 21 variables: $ X.1 : int 1 2 ... $ X : int 1 2 ... $ url : chr "https://lenta.ru/news/2009/12/31/kids/" ... $ filename : chr "C:/Users/ildar/lenta/downloaded_articles/000001-010000/index.html" ... $ metaTitle : chr " " ... $ metaType : chr "article" ... $ metaDescription : chr " . "| __truncated__ ... $ rubric : logi NA ... $ chapters : chr " chapters: [\"\",\"_\",\"lenta.ru:_:_____"| __truncated__ ... $ datetime : chr "2009-12-31T21:24:33Z" ... $ datetimeString : chr " 00:24, 1 2010" ... $ title : chr " : : Lenta.ru" ... $ plaintext : chr " . "| __truncated__ ... $ authors : chr NA ... $ authorLinks : chr NA ... $ plaintextLinks : chr "http://www.interfax.ru/" ... $ additionalLinks : chr "https://lenta.ru/news/2009/12/29/golovan/ https://lenta.ru/news/2009/09/01/children/" ... $ imageDescription: chr NA ... $ imageCredits : chr NA ... $ videoDescription: chr NA ... $ videoCredits : chr NA ... . , . .

SOCIAL MEDIA

, ( ). , - , . , Google Chrome Network :

https://graph.facebook.com/?id=https%3A%2F%2Flenta.ru%2Fnews%2F2017%2F08%2F10%2Fudostov%2F :

{ "share": { "comment_count": 0, "share_count": 243 }, "og_object": { "id": "1959067174107041", "description": ..., "title": ..., "type": "article", "updated_time": "2017-08-10T09:21:29+0000" }, "id": "https://lenta.ru/news/2017/08/10/udostov/" } , , ( ). , . 4 , "".

, 4 CMD , :

## STEP 5. Prepare wget CMD files for parallel downloading social # Create CMD file. CreateWgetCMDFilesForSocial <- function() { timestamp() articlesLinks <- readLines(file.path(tempDataFolder, "articles.urls")) dir.create(warcFolder, showWarnings = FALSE) dir.create(warcFolderForFB, showWarnings = FALSE) dir.create(warcFolderForVK, showWarnings = FALSE) dir.create(warcFolderForOK, showWarnings = FALSE) dir.create(warcFolderForCom, showWarnings = FALSE) # split up articles links array by 10K links numberOfLinks <- length(articlesLinks) digitNumber <- nchar(numberOfLinks) groupSize <- 10000 filesGroup <- seq(from = 1, to = numberOfLinks, by = groupSize) cmdCodeAll <- c() cmdCodeAllFB <- c() cmdCodeAllVK <- c() cmdCodeAllOK <- c() cmdCodeAllCom <- c() for (i in 1:length(filesGroup)) { # Prepare folder name as 00001-10000, 10001-20000 etc firstFileInGroup <- filesGroup[i] lastFileInGroup <- min(firstFileInGroup + groupSize - 1, numberOfLinks) leftPartFolderName <- formatC(firstFileInGroup, width = digitNumber, format = "d", flag = "0") rigthPartFolderName <- formatC(lastFileInGroup, width = digitNumber, format = "d", flag = "0") subFolderName <- paste0(leftPartFolderName, "-", rigthPartFolderName) subFolderPathFB <- file.path(downloadedArticlesFolderForFB, subFolderName) dir.create(subFolderPathFB) subFolderPathVK <- file.path(downloadedArticlesFolderForVK, subFolderName) dir.create(subFolderPathVK) subFolderPathOK <- file.path(downloadedArticlesFolderForOK, subFolderName) dir.create(subFolderPathOK) subFolderPathCom <- file.path(downloadedArticlesFolderForCom, subFolderName) dir.create(subFolderPathCom) # Encode and write down articles.urls for each 10K folders that contains # 10K articles urls. # For FB it has to be done in a bit different way because FB allows to pass # up to 50 links as a request parameter. articlesLinksFB <- articlesLinks[firstFileInGroup:lastFileInGroup] numberOfLinksFB <- length(articlesLinksFB) digitNumberFB <- nchar(numberOfLinksFB) groupSizeFB <- 50 filesGroupFB <- seq(from = 1, to = numberOfLinksFB, by = groupSizeFB) articlesLinksFBEncoded <- c() for (k in 1:length(filesGroupFB )) { firstFileInGroupFB <- filesGroupFB[k] lastFileInGroupFB <- min(firstFileInGroupFB + groupSizeFB - 1, numberOfLinksFB) articlesLinksFBGroup <- paste0(articlesLinksFB[firstFileInGroupFB:lastFileInGroupFB], collapse = ",") articlesLinksFBGroup <- URLencode(articlesLinksFBGroup , reserved = TRUE) articlesLinksFBGroup <- paste0("https://graph.facebook.com/?fields=engagement&access_token=PlaceYourTokenHere&ids=", articlesLinksFBGroup) articlesLinksFBEncoded <- c(articlesLinksFBEncoded, articlesLinksFBGroup) } articlesLinksVK <- paste0("https://vk.com/share.php?act=count&index=1&url=", sapply(articlesLinks[firstFileInGroup:lastFileInGroup], URLencode, reserved = TRUE), "&format=json") articlesLinksOK <- paste0("https://connect.ok.ru/dk?st.cmd=extLike&uid=okLenta&ref=", sapply(articlesLinks[firstFileInGroup:lastFileInGroup], URLencode, reserved = TRUE), "") articlesLinksCom <- paste0("https://c.rambler.ru/api/app/126/comments-count?xid=", sapply(articlesLinks[firstFileInGroup:lastFileInGroup], URLencode, reserved = TRUE), "") writeLines(articlesLinksFBEncoded, file.path(subFolderPathFB, "articles.urls")) writeLines(articlesLinksVK, file.path(subFolderPathVK, "articles.urls")) writeLines(articlesLinksOK, file.path(subFolderPathOK, "articles.urls")) writeLines(articlesLinksCom, file.path(subFolderPathCom, "articles.urls")) # Add command line in CMD file cmdCode <-paste0("START ..\\..\\wget --warc-file=warc\\", subFolderName," -i ", subFolderName, "\\", "articles.urls -P ", subFolderName, " --output-document=", subFolderName, "\\", "index") cmdCodeAll <- c(cmdCodeAll, cmdCode) } cmdFile <- file.path(downloadedArticlesFolderForFB, "start.cmd") print(paste0("Run ", cmdFile, " to start downloading.")) writeLines(cmdCodeAll, cmdFile) cmdFile <- file.path(downloadedArticlesFolderForVK, "start.cmd") writeLines(cmdCodeAll, cmdFile) print(paste0("Run ", cmdFile, " to start downloading.")) cmdFile <- file.path(downloadedArticlesFolderForOK, "start.cmd") writeLines(cmdCodeAll, cmdFile) print(paste0("Run ", cmdFile, " to start downloading.")) cmdFile <- file.path(downloadedArticlesFolderForCom, "start.cmd") writeLines(cmdCodeAll, cmdFile) print(paste0("Run ", cmdFile, " to start downloading.")) print("wget.exe should be placed in working directory.") timestamp() } , 10, ( ), . , WARC , . , 100 . , , , . 50 , .

:

## Parse downloaded articles social ReadSocial <- function() { timestamp() # Read and parse all warc files in FB folder dfList <- list() dfN <- 0 warcs <- list.files(file.path(downloadedArticlesFolderForFB, "warc"), full.names = TRUE, recursive = FALSE) for (i in 1:length(warcs)) { filename <- warcs[i] print(filename) res <- readLines(filename, warn = FALSE, encoding = "UTF-8") anchorPositions <- which(res == "WARC-Type: response") responsesJSON <- res[anchorPositions + 28] getID <- function(responses) { links <- sapply(responses, function(x){x$id}, USE.NAMES = FALSE) %>% unname() links} getQuantity <- function(responses) { links <- sapply(responses, function(x){x$engagement$share_count}, USE.NAMES = FALSE) %>% unname() links} for(k in 1:length(responsesJSON)) { if(responsesJSON[k]==""){ next } responses <- fromJSON(responsesJSON[k]) if(!is.null(responses$error)) { next } links <- sapply(responses, function(x){x$id}, USE.NAMES = FALSE) %>% unname() %>% unlist() quantities <- sapply(responses, function(x){x$engagement$share_count}, USE.NAMES = FALSE) %>% unname() %>% unlist() df <- data.frame(link = links, quantity = quantities, social = "FB", stringsAsFactors = FALSE) dfN <- dfN + 1 dfList[[dfN]] <- df } } dfFB <- bind_rows(dfList) # Read and parse all warc files in VK folder dfList <- list() dfN <- 0 warcs <- list.files(file.path(downloadedArticlesFolderForVK, "warc"), full.names = TRUE, recursive = FALSE) for (i in 1:length(warcs)) { filename <- warcs[i] print(filename) res <- readLines(filename, warn = FALSE, encoding = "UTF-8") anchorPositions <- which(res == "WARC-Type: response") links <- res[anchorPositions + 4] %>% str_replace_all("WARC-Target-URI: https://vk.com/share.php\\?act=count&index=1&url=|&format=json", "") %>% sapply(URLdecode) %>% unname() quantities <- res[anchorPositions + 24] %>% str_replace_all(" |.*\\,|\\);", "") %>% as.integer() df <- data.frame(link = links, quantity = quantities, social = "VK", stringsAsFactors = FALSE) dfN <- dfN + 1 dfList[[dfN]] <- df } dfVK <- bind_rows(dfList) # Read and parse all warc files in OK folder dfList <- list() dfN <- 0 warcs <- list.files(file.path(downloadedArticlesFolderForOK, "warc"), full.names = TRUE, recursive = FALSE) for (i in 1:length(warcs)) { filename <- warcs[i] print(filename) res <- readLines(filename, warn = FALSE, encoding = "UTF-8") anchorPositions <- which(res == "WARC-Type: response") links <- res[anchorPositions + 4] %>% str_replace_all("WARC-Target-URI: https://connect.ok.ru/dk\\?st.cmd=extLike&uid=okLenta&ref=", "") %>% sapply(URLdecode) %>% unname() quantities <- res[anchorPositions + 22] %>% str_replace_all(" |.*\\,|\\);|'", "") %>% as.integer() df <- data.frame(link = links, quantity = quantities, social = "OK", stringsAsFactors = FALSE) dfN <- dfN + 1 dfList[[dfN]] <- df } dfOK <- bind_rows(dfList) # Read and parse all warc files in Com folder dfList <- list() dfN <- 0 warcs <- list.files(file.path(downloadedArticlesFolderForCom, "warc"), full.names = TRUE, recursive = FALSE) x <- c() for (i in 1:length(warcs)) { filename <- warcs[i] print(filename) res <- readLines(filename, warn = FALSE, encoding = "UTF-8") anchorPositions <- which(str_sub(res, start = 1, end = 9) == '{"xids":{') x <- c(x, res[anchorPositions]) } for (i in 1:length(warcs)) { filename <- warcs[i] print(filename) res <- readLines(filename, warn = FALSE, encoding = "UTF-8") anchorPositions <- which(str_sub(res, start = 1, end = 9) == '{"xids":{') x <- c(x, res[anchorPositions]) responses <- res[anchorPositions] %>% str_replace_all('\\{\\"xids\\":\\{|\\}', "") if(responses==""){ next } links <- str_replace_all(responses, "(\":[^ ]+)|\"", "") quantities <- str_replace_all(responses, ".*:", "") %>% as.integer() df <- data.frame(link = links, quantity = quantities, social = "Com", stringsAsFactors = FALSE) dfN <- dfN + 1 dfList[[dfN]] <- df } dfCom <- bind_rows(dfList) dfCom <- dfCom[dfCom$link!="",] # Combine dfs and reshape them into "link", "FB", "VK", "OK", "Com" dfList <- list() dfList[[1]] <- dfFB dfList[[2]] <- dfVK dfList[[3]] <- dfOK dfList[[4]] <- dfCom df <- bind_rows(dfList) dfCasted <- dcast(df, link ~ social, value.var = "quantity") dfCasted <- dfCasted[order(dfCasted$link),] write.csv(dfCasted, file.path(parsedArticlesFolder, "social_articles.csv"), fileEncoding = "UTF-8") timestamp() } . .

Cleaning

, 379746 obs. of 21 variables and size of 1.739MB , . fread {data.table} . :

> system.time(dfM <- read.csv(untidyDataFile, stringsAsFactors = FALSE, encoding = "UTF-8")) 133.17 1.50 134.67 > system.time(dfM <- fread(untidyDataFile, stringsAsFactors = FALSE, encoding = "UTF-8")) Read 379746 rows and 21 (of 21) columns from 1.698 GB file in 00:00:18 17.67 0.54 18.22 , - NA . - — - ( Parsing). , , :

# Load required packages require(lubridate) require(dplyr) require(tidyr) require(data.table) require(tldextract) require(XML) require(stringr) require(tm) # Set workling directory and locale for macOS and Windows if (Sys.info()['sysname'] == "Windows") { workingDirectory <- paste0(Sys.getenv("HOMEPATH"), "\\lenta") Sys.setlocale("LC_ALL", "Russian") } else { workingDirectory <- ("~/lenta") Sys.setlocale("LC_ALL", "ru_RU.UTF-8") } setwd(workingDirectory) # Set common variables parsedArticlesFolder <- file.path(getwd(), "parsed_articles") tidyArticlesFolder <- file.path(getwd(), "tidy_articles") # Creare required folders if not exist dir.create(tidyArticlesFolder, showWarnings = FALSE) ## STEP 5. Clear and tidy data # Section 7 takes about 2-4 hours TityData <- function() { dfM <- fread(file.path(parsedArticlesFolder, "untidy_articles_data.csv"), stringsAsFactors = FALSE, encoding = "UTF-8") # SECTION 1 print(paste0("1 ",Sys.time())) # Remove duplicate rows, remove rows with url = NA, create urlKey column as a key dtD <- dfM %>% select(-V1,-X) %>% distinct(url, .keep_all = TRUE) %>% na.omit(cols="url") %>% mutate(urlKey = gsub(":|\\.|/", "", url)) # Function SplitChapters is used to process formatted chapter column and retrive rubric # and subrubric SplitChapters <- function(x) { splitOne <- strsplit(x, "lenta.ru:_")[[1]] splitLeft <- strsplit(splitOne[1], ",")[[1]] splitLeft <- unlist(strsplit(splitLeft, ":_")) splitRight <- strsplit(splitOne[2], ":_")[[1]] splitRight <- splitRight[splitRight %in% splitLeft] splitRight <- gsub("_", " ", splitRight) paste0(splitRight, collapse = "|") } # SECTION 2 print(paste0("2 ",Sys.time())) # Process chapter column to retrive rubric and subrubric # Column value such as: # chapters: ["_","","lenta.ru:__:_:______"], // Chapters # should be represented as rubric value " " # and subrubric value "" dtD <- dtD %>% mutate(chapters = gsub('\"|\\[|\\]| |chapters:', "", chapters)) %>% mutate(chaptersFormatted = as.character(sapply(chapters, SplitChapters))) %>% separate(col = "chaptersFormatted", into = c("rubric", "subrubric") , sep = "\\|", extra = "drop", fill = "right", remove = FALSE) %>% filter(!rubric == "NA") %>% select(-chapters, -chaptersFormatted) # SECTION 3 print(paste0("3 ",Sys.time())) # Process imageCredits column and split into imageCreditsPerson # and imageCreditsCompany # Column value such as: ": / " should be represented # as imageCreditsPerson value " " and # imageCreditsCompany value " " pattern <- ': | |: |: |, |()||«|»|\\(|)|\"' dtD <- dtD %>% mutate(imageCredits = gsub(pattern, "", imageCredits)) %>% separate(col = "imageCredits", into = c("imageCreditsPerson", "imageCreditsCompany") , sep = "/", extra = "drop", fill = "left", remove = FALSE) %>% mutate(imageCreditsPerson = as.character(sapply(imageCreditsPerson, trimws))) %>% mutate(imageCreditsCompany = as.character(sapply(imageCreditsCompany, trimws))) %>% select(-imageCredits) # SECTION 4 print(paste0("4 ",Sys.time())) # Function UpdateDatetime is used to process missed values in datetime column # and fill them up with date and time retrived from string presentation # such as "13:47, 18 2017" or from url such # as https://lenta.ru/news/2017/07/18/frg/. Hours and Minutes set randomly # from 8 to 21 in last case months <- c("", "", "", "", "", "", "", "", "", "", "", "") UpdateDatetime <- function (datetime, datetimeString, url) { datetimeNew <- datetime if (is.na(datetime)) { if (is.na(datetimeString)) { parsedURL <- strsplit(url, "/")[[1]] parsedURLLength <- length(parsedURL) d <- parsedURL[parsedURLLength-1] m <- parsedURL[parsedURLLength-2] y <- parsedURL[parsedURLLength-3] H <- round(runif(1, 8, 21)) M <- round(runif(1, 1, 59)) S <- 0 datetimeString <- paste0(paste0(c(y, m, d), collapse = "-"), " ", paste0(c(H, M, S), collapse = ":")) datetimeNew <- ymd_hms(datetimeString, tz = "Europe/Moscow", quiet = TRUE) } else { parsedDatetimeString <- unlist(strsplit(datetimeString, ",")) %>% trimws %>% strsplit(" ") %>% unlist() monthNumber <- which(grepl(parsedDatetimeString[3], months)) dateString <- paste0(c(parsedDatetimeString[4], monthNumber, parsedDatetimeString[2]), collapse = "-") datetimeString <- paste0(dateString, " ", parsedDatetimeString[1], ":00") datetimeNew <- ymd_hms(datetimeString, tz = "Europe/Moscow", quiet = TRUE) } } datetimeNew } # Process datetime and fill up missed values dtD <- dtD %>% mutate(datetime = ymd_hms(datetime, tz = "Europe/Moscow", quiet = TRUE)) %>% mutate(datetimeNew = mapply(UpdateDatetime, datetime, datetimeString, url)) %>% mutate(datetime = as.POSIXct(datetimeNew, tz = "Europe/Moscow",origin = "1970-01-01")) # SECTION 5 print(paste0("5 ",Sys.time())) # Remove rows with missed datetime values, rename metaTitle to title, # remove columns that we do not need anymore dtD <- dtD %>% as.data.table() %>% na.omit(cols="datetime") %>% select(-filename, -metaType, -datetimeString, -datetimeNew) %>% rename(title = metaTitle) %>% select(url, urlKey, datetime, rubric, subrubric, title, metaDescription, plaintext, authorLinks, additionalLinks, plaintextLinks, imageDescription, imageCreditsPerson, imageCreditsCompany, videoDescription, videoCredits) # SECTION 6 print(paste0("6 ",Sys.time())) # Clean additionalLinks and plaintextLinks symbolsToRemove <- "href=|-–-|«|»|…|,|•|“|”|\n|\"|,|[|]|<a|<br" symbolsHttp <- "http:\\\\\\\\|:http://|-http://|.http://" symbolsHttp2 <- "http://http://|https://https://" symbolsReplace <- "[-|-|#!]" dtD <- dtD %>% mutate(plaintextLinks = gsub(symbolsToRemove,"", plaintextLinks)) %>% mutate(plaintextLinks = gsub(symbolsHttp, "http://", plaintextLinks)) %>% mutate(plaintextLinks = gsub(symbolsReplace, "e", plaintextLinks)) %>% mutate(plaintextLinks = gsub(symbolsHttp2, "http://", plaintextLinks)) %>% mutate(additionalLinks = gsub(symbolsToRemove,"", additionalLinks)) %>% mutate(additionalLinks = gsub(symbolsHttp, "http://", additionalLinks)) %>% mutate(additionalLinks = gsub(symbolsReplace, "e", additionalLinks)) %>% mutate(additionalLinks = gsub(symbolsHttp2, "http://", additionalLinks)) # SECTION 7 print(paste0("7 ",Sys.time())) # Clean additionalLinks and plaintextLinks using UpdateAdditionalLinks # function. Links such as: # "http://www.dw.com/ru/../B2 https://www.welt.de/politik/.../de/" # should be represented as "dw.com welt.de" # Function UpdateAdditionalLinks is used to process and clean additionalLinks # and plaintextLinks UpdateAdditionalLinks <- function(additionalLinks, url) { if (is.na(additionalLinks)) { return(NA) } additionalLinksSplitted <- gsub("http://|https://|http:///|https:///"," ", additionalLinks) additionalLinksSplitted <- gsub("http:/|https:/|htt://","", additionalLinksSplitted) additionalLinksSplitted <- trimws(additionalLinksSplitted) additionalLinksSplitted <- unlist(strsplit(additionalLinksSplitted, " ")) additionalLinksSplitted <- additionalLinksSplitted[!additionalLinksSplitted==""] additionalLinksSplitted <- additionalLinksSplitted[!grepl("lenta.", additionalLinksSplitted)] additionalLinksSplitted <- unlist(strsplit(additionalLinksSplitted, "/[^/]*$")) additionalLinksSplitted <- paste0("http://", additionalLinksSplitted) if (!length(additionalLinksSplitted) == 0) { URLSplitted <- c() for(i in 1:length(additionalLinksSplitted)) { parsed <- tryCatch(parseURI(additionalLinksSplitted[i]), error = function(x) {return(NA)}) parsedURL <- parsed["server"] if (!is.na(parsedURL)) { URLSplitted <- c(URLSplitted, parsedURL) } } if (length(URLSplitted)==0){ NA } else { URLSplitted <- URLSplitted[!is.na(URLSplitted)] paste0(URLSplitted, collapse = " ") } } else { NA } } # Function UpdateAdditionalLinksDomain is used to process additionalLinks # and plaintextLinks and retrive source domain name UpdateAdditionalLinksDomain <- function(additionalLinks, url) { if (is.na(additionalLinks)|(additionalLinks=="NA")) { return(NA) } additionalLinksSplitted <- unlist(strsplit(additionalLinks, " ")) if (!length(additionalLinksSplitted) == 0) { parsedDomain <- tryCatch(tldextract(additionalLinksSplitted), error = function(x) {data_frame(domain = NA, tld = NA)}) parsedDomain <- parsedDomain[!is.na(parsedDomain$domain), ] if (nrow(parsedDomain)==0) { #print("--------") #print(additionalLinks) return(NA) } domain <- paste0(parsedDomain$domain, ".", parsedDomain$tld) domain <- unique(domain) domain <- paste0(domain, collapse = " ") return(domain) } else { return(NA) } } dtD <- dtD %>% mutate(plaintextLinks = mapply(UpdateAdditionalLinks, plaintextLinks, url)) %>% mutate(additionalLinks = mapply(UpdateAdditionalLinks, additionalLinks, url)) # Retrive domain from external links using updateAdditionalLinksDomain # function. Links such as: # "http://www.dw.com/ru/../B2 https://www.welt.de/politik/.../de/" # should be represented as "dw.com welt.de" numberOfLinks <- nrow(dtD) groupSize <- 10000 groupsN <- seq(from = 1, to = numberOfLinks, by = groupSize) for (i in 1:length(groupsN)) { n1 <- groupsN[i] n2 <- min(n1 + groupSize - 1, numberOfLinks) dtD$additionalLinks[n1:n2] <- mapply(UpdateAdditionalLinksDomain, dtD$additionalLinks[n1:n2], dtD$url[n1:n2]) dtD$plaintextLinks[n1:n2] <- mapply(UpdateAdditionalLinksDomain, dtD$plaintextLinks[n1:n2], dtD$url[n1:n2]) } # SECTION 8 print(paste0("8 ",Sys.time())) # Clean title, descriprion and plain text. Remove puntuation and stop words. # Prepare for the stem step stopWords <- readLines("stop_words.txt", warn = FALSE, encoding = "UTF-8") dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = tolower(title), stemMetaDescription = tolower(metaDescription), stemPlaintext = tolower(plaintext)) dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = enc2utf8(stemTitle), stemMetaDescription = enc2utf8(stemMetaDescription), stemPlaintext = enc2utf8(stemPlaintext)) dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = removeWords(stemTitle, stopWords), stemMetaDescription = removeWords(stemMetaDescription, stopWords), stemPlaintext = removeWords(stemPlaintext, stopWords)) dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = removePunctuation(stemTitle), stemMetaDescription = removePunctuation(stemMetaDescription), stemPlaintext = removePunctuation(stemPlaintext)) dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = str_replace_all(stemTitle, "\\s+", " "), stemMetaDescription = str_replace_all(stemMetaDescription, "\\s+", " "), stemPlaintext = str_replace_all(stemPlaintext, "\\s+", " ")) dtD <- dtD %>% as.tbl() %>% mutate(stemTitle = str_trim(stemTitle, side = "both"), stemMetaDescription = str_trim(stemMetaDescription, side = "both"), stemPlaintext = str_trim(stemPlaintext, side = "both")) # SECTION 9 print(paste0("9 ",Sys.time())) write.csv(dtD, file.path(tidyArticlesFolder, "tidy_articles_data.csv"), fileEncoding = "UTF-8") # SECTION 10 Finish print(paste0("10 ",Sys.time())) # SECTION 11 Adding social dfM <- read.csv(file.path(tidyArticlesFolder, "tidy_articles_data.csv"), stringsAsFactors = FALSE, encoding = "UTF-8") dfS <- read.csv(file.path(parsedArticlesFolder, "social_articles.csv"), stringsAsFactors = FALSE, encoding = "UTF-8") dt <- as.tbl(dfM) dtS <- as.tbl(dfS) %>% rename(url = link) %>% select(url, FB, VK, OK, Com) dtG <- left_join(dt, dtS, by = "url") write.csv(dtG, file.path(tidyArticlesFolder, "tidy_articles_data.csv"), fileEncoding = "UTF-8") } , time stamp print(paste0("1 ",Sys.time())) . 2.7GHz i5, 16Gb Ram, SSD, macOS 10.12, R version 3.4.0 :

[1] "1 2017-07-21 16:36:59" [1] "2 2017-07-21 16:37:13" [1] "3 2017-07-21 16:38:15" [1] "4 2017-07-21 16:39:11" [1] "5 2017-07-21 16:42:58" [1] "6 2017-07-21 16:42:58" [1] "7 2017-07-21 16:43:35" [1] "8 2017-07-21 18:41:25" [1] "9 2017-07-21 19:00:32" [1] "10 2017-07-21 19:01:04" 3.5GHz Xeon E-1240 v5, 32Gb, SSD, Windows Server 2012 :

[1] "1 2017-07-21 14:36:44" [1] "2 2017-07-21 14:37:08" [1] "3 2017-07-21 14:38:23" [1] "4 2017-07-21 14:41:24" [1] "5 2017-07-21 14:46:02" [1] "6 2017-07-21 14:46:02" [1] "7 2017-07-21 14:46:57" [1] "8 2017-07-21 18:58:04" [1] "9 2017-07-21 19:30:27" [1] "10 2017-07-21 19:35:18" ( ), UpdateAdditionalLinksDomain ( ) . tldextract {tldextract} . - — .

— 2 ? 7 4 2 .

, . Result:

> str(dfM, vec.len = 1) 'data.frame': 379746 obs. of 21 variables: $ X.1 : int 1 2 ... $ X : int 1 2 ... $ url : chr "https://lenta.ru/news/2009/12/31/kids/" ... $ filename : chr "C:/Users/ildar/lenta/downloaded_articles/000001-010000/index.html" ... > str(dfM, vec.len = 1) Classes 'data.table' and 'data.frame': 376913 obs. of 19 variables: $ url : chr "https://lenta.ru/news/2009/12/31/kids/" ... $ urlKey : chr "httpslentarunews20091231kids" ... $ datetime : chr "2010-01-01 00:24:33" ... $ rubric : chr "" ... $ subrubric : chr NA ... $ title : chr " " ... $ metaDescription : chr " . "| __truncated__ ... $ plaintext : chr " . "| __truncated__ ... $ authorLinks : chr NA ... $ additionalLinks : chr NA ... $ plaintextLinks : chr "interfax.ru" ... $ imageDescription : chr NA ... $ imageCreditsPerson : chr NA ... $ imageCreditsCompany: chr NA ... $ videoDescription : chr NA ... $ videoCredits : chr NA ... $ stemTitle : chr " " ... $ stemMetaDescription: chr " "| __truncated__ ... $ stemPlaintext : chr " "| __truncated__ ... .

, :

> file.size(file.path(tidyArticlesFolder, "tidy_articles_data.csv"))/1024/1024 [1] 2741.01 REPRODUCIBLE RESEARCH

( STEMMING) , - . - ( STEMMING) . 1 1999 .

700 .

2 700 :

> head(articlesLinks) [1] "https://lenta.ru/news/1999/08/31/stancia_mir/" [2] "https://lenta.ru/news/1999/08/31/vzriv/" [3] "https://lenta.ru/news/1999/08/31/credit_japs/" [4] "https://lenta.ru/news/1999/08/31/fsb/" [5] "https://lenta.ru/news/1999/09/01/dagestan/" [6] "https://lenta.ru/news/1999/09/01/kirgiz1/" > length(articlesLinks) [1] 702246 700000 ( 18 ) 70 4.5 . Result:

> indexFiles <- list.files(downloadedArticlesFolder, full.names = TRUE, recursive = TRUE, pattern = "index") > length(indexFiles) [1] 702246 > sum(file.size(indexFiles))/1024/1024 [1] 123682.1 123GB - 70 60 ( ). 2.831MB :

> file.size(file.path(parsedArticlesFolder, "untidy_articles_data.csv"))/1024/1024 [1] 3001.875 8 ( , 7). 3.5GHz Xeon E-1240 v5, 32Gb, SSD, Windows Server 2012 :

[1] "1 2017-07-27 08:21:46" [1] "2 2017-07-27 08:22:44" [1] "3 2017-07-27 08:25:21" [1] "4 2017-07-27 08:30:56" [1] "5 2017-07-27 08:38:29" [1] "6 2017-07-27 08:38:29" [1] "7 2017-07-27 08:40:01" [1] "8 2017-07-27 15:55:18" [1] "9 2017-07-27 16:44:49" [1] "10 2017-07-27 16:53:02" 4.5GB , :

> file.size(file.path(tidyArticlesFolder, "tidy_articles_data.csv"))/1024/1024 [1] 4534.328 STEMMING

. , , /// ( ) ( ). MyStem . :

# Load required packages require(data.table) require(dplyr) require(tidyr) require(stringr) require(gdata) # Set workling directory and locale for macOS and Windows if (Sys.info()['sysname'] == "Windows") { workingDirectory <- paste0(Sys.getenv("HOMEPATH"), "\\lenta") Sys.setlocale("LC_ALL", "Russian") } else { workingDirectory <- ("~/lenta") Sys.setlocale("LC_ALL", "ru_RU.UTF-8") } setwd(workingDirectory) # Load library that helps to chunk vectors source("chunk.R") # Set common variables tidyArticlesFolder <- file.path(getwd(), "tidy_articles") stemedArticlesFolder <- file.path(getwd(), "stemed_articles") # Create required folders if not exist dir.create(stemedArticlesFolder, showWarnings = FALSE) ## STEP 6. Stem title, description and plain text # Write columns on disk, run mystem, read stemed data and add to data.table StemArticlesData <- function() { # Read tidy data and keep only column that have to be stemed. # Add === separate rows in stem output. # dt that takes about 5GB RAM for 700000 obs. of 25 variables # and 2.2GB for 700000 obs. of 5 variables as tbl timestamp(prefix = "## START reading file ") tidyDataFile <- file.path(tidyArticlesFolder, "tidy_articles_data.csv") dt <- fread(tidyDataFile, stringsAsFactors = FALSE, encoding = "UTF-8") %>% as.tbl() dt <- dt %>% mutate(sep = "===") %>% select(sep, X, stemTitle, stemMetaDescription, stemPlaintext) # Check memory usage print(ll(unit = "MB")) # Prepare the list that helps us to stem 3 column sectionList <- list() sectionList[[1]] <- list(columnToStem = "stemTitle", stemedColumn = "stemedTitle", sourceFile = file.path(stemedArticlesFolder, "stem_titles.txt"), stemedFile = file.path(stemedArticlesFolder, "stemed_titles.txt")) sectionList[[2]] <- list(columnToStem = "stemMetaDescription", stemedColumn = "stemedMetaDescription", sourceFile = file.path(stemedArticlesFolder, "stem_metadescriptions.txt"), stemedFile = file.path(stemedArticlesFolder, "stemed_metadescriptions.txt")) sectionList[[3]] <- list(columnToStem = "stemPlaintext", stemedColumn = "stemedPlaintext", sourceFile = file.path(stemedArticlesFolder, "stem_plaintext.txt"), stemedFile = file.path(stemedArticlesFolder, "stemed_plaintext.txt")) timestamp(prefix = "## steming file ") # Write the table with sep, X, columnToStem columns and run mystem. # It takes about 30 min to process Title, MetaDescription and Plaintext # in 700K rows table. # https://tech.yandex.ru/mystem/ for (i in 1:length(sectionList)) { write.table(dt[, c("sep","X", sectionList[[i]]$columnToStem)], sectionList[[i]]$sourceFile, fileEncoding = "UTF-8", sep = ",", quote = FALSE, row.names = FALSE, col.names = FALSE) system(paste0("mystem -nlc ", sectionList[[i]]$sourceFile, " ", sectionList[[i]]$stemedFile), intern = FALSE) } # Remove dt from memory and call garbage collection rm(dt) gc() # Check memory usage print(ll(unit = "MB")) timestamp(prefix = "## process file ") # Process stemed files. it takes about 60 min to process 3 stemed files for (i in 1:length(sectionList)) { stemedText <- readLines(sectionList[[i]]$stemedFile, warn = FALSE, encoding = "UTF-8") # Split stemed text in chunks chunkList <- chunk(stemedText, chunk.size = 10000000) # Clean chunks one by one and remove characters that were added by mystem resLines <- c() for (j in 1:length(chunkList)) { resTemp <- chunkList[[j]] %>% str_replace_all("===,", "===") %>% strsplit(split = "\\\\n|,") %>% unlist() %>% str_replace_all("(\\|[^ ]+)|(\\\\[^ ]+)|\\?|,|_", "") resLines <- c(resLines, resTemp[resTemp!=""]) } # Split processed text in rows using === added at the beginnig chunkedRes <- chunk(resLines, chunk.delimiter = "===", fixed.delimiter = FALSE, keep.delimiter = TRUE) # Process each row and extract key (row number) and stemed content stemedList <- lapply(chunkedRes, function(x) { data.frame(key = as.integer(str_replace_all(x[1], "===", "")), content = paste0(x[2:length(x)], collapse = " "), stringsAsFactors = FALSE)}) # Combine all rows in data frame with key and content colums sectionList[[i]]$dt <- bind_rows(stemedList) colnames(sectionList[[i]]$dt) <- c("key", sectionList[[i]]$stemedColumn) } # Remove variables used in loop and call garbage collection rm(stemedText, chunkList, resLines, chunkedRes, stemedList) gc() # Check memory usage print(ll(unit = "MB")) # read tidy data again timestamp(prefix = "## reading file (again)") dt <- fread(tidyDataFile, stringsAsFactors = FALSE, encoding = "UTF-8") %>% as.tbl() # add key column as a key and add tables with stemed data to tidy data timestamp(prefix = paste0("## combining tables ")) dt <- dt %>% mutate(key = X) dt <- left_join(dt, sectionList[[1]]$dt, by = "key") dt <- left_join(dt, sectionList[[2]]$dt, by = "key") dt <- left_join(dt, sectionList[[3]]$dt, by = "key") sectionList[[1]]$dt <- "" sectionList[[2]]$dt <- "" sectionList[[3]]$dt <- "" dt <- dt %>% select(-V1, -X, -urlKey, -metaDescription, -plaintext, -stemTitle, -stemMetaDescription, -stemPlaintext, - key) write.csv(dt, file.path(stemedArticlesFolder, "stemed_articles_data.csv"), fileEncoding = "UTF-8") file.remove(sectionList[[1]]$sourceFile) file.remove(sectionList[[2]]$sourceFile) file.remove(sectionList[[3]]$sourceFile) file.remove(sectionList[[1]]$stemedFile) file.remove(sectionList[[2]]$stemedFile) file.remove(sectionList[[3]]$stemedFile) # Remove dt, sectionList and call garbage collection rm(dt) gc() # Check memory usage print(ll(unit = "MB")) timestamp(prefix = "## END ") } , :

> file.size(file.path(stemedArticlesFolder, "stemed_articles_data.csv"))/1024/1024 [1] 2273.52 > str(x, vec.len = 1) Classes 'data.table' and 'data.frame': 697601 obs. of 21 variables: $ V1 : chr "1" ... $ url : chr "https://lenta.ru/news/1999/08/31/stancia_mir/" ... $ datetime : chr "1999-09-01 01:58:40" ... $ rubric : chr "" ... $ subrubric : chr NA ... $ title : chr " \"\"\"\"\"\"\"\"\"\"\"\"\"\"\"\"" ... $ authorLinks : chr NA ... $ additionalLinks : chr NA ... $ plaintextLinks : chr NA ... $ imageDescription : chr NA ... $ imageCreditsPerson : chr NA ... $ imageCreditsCompany : chr NA ... $ videoDescription : chr NA ... $ videoCredits : chr NA ... $ FB : int 0 0 ... $ VK : int NA 0 ... $ OK : int 0 0 ... $ Com : int NA NA ... $ stemedTitle : chr " " ... $ stemedMetaDescription: chr " "| __truncated__ ... $ stemedPlaintext : chr " "| __truncated__ ... - attr(*, ".internal.selfref")=<externalptr> PS

:

- " " ? macOS, Linux, Windows?

- timestamp' print(" N")?

2.7GHz i5, 16Gb Ram, SSD, macOS 10.12, R version 3.4.03.5GHz Xeon E-1240 v5, 32Gb, SSD, Windows Server 2012?- ?

- "code smells"?

PPS - , , , , , . … .

Analyze it. Lenta-anal.ru ( 2)

Data Engineering

- ( ) , . , Linux Foundation Certified System Administrator (LFCS) Sander van Vugt (, ). 15 ( , Kernel ). , , . , - " -, , , .".

. 2 2 , RStudio Server . , , . , ( ).

4 :

DefCollections <- function() { collections <- c("c01_daytobeprocessed", "c02_linkstobeprocessed", "c03_pagestobeprocessed", "c04_articlestobeprocessed") return(collections) } 4 :

DefScripts <- function() { scripts <- c("01_days_process.R", "02_links_process.R", "03_pages_process.R", "04_articles_process.R") return(scripts) } :

c01_daytobeprocessed, ,2010-01-10 - 2010-01-10.01_days_process.R,c01_daytobeprocessed, ,c02_linkstobeprocessed.02_links_process.R,c02_linkstobeprocessed, , , ,c03_pagestobeprocessed.03_pages_process.R,c03_pagestobeprocessed, , , ( )c04_articlestobeprocessed.

, ( ) , . , cron , . … . ( c("1999-09-01", as.Date(Sys.time()) ) .

, :

- 5 cron, . , 80%,

c01_daytobeprocessed, 1001_days_process.R. - , 5 , 80%, 100 .

, c("1999-09-01", as.Date(Sys.time()) . , 2 2 , 16, 8. , 700 , .

— , .

. , CSV, , , .

( 01-09-1999 — 04-12-2017) lenta-ru-data-set_19990901_20171204.zip .

*:

[ { "_id": { "$oid": "5a0b067b537f258f034ffc51" }, "link": "https://lenta.ru/news/2017/11/14/cazino/", "linkDate": "20171114", "status": 0, "updated_at": "20171204154248 ICT", "process": "", "page": [ { "urlKey": "httpslentarunews20171114cazino", "url": "https://lenta.ru/news/2017/11/14/cazino/", "metaTitle": " ", "metaType": "article", "metaDescription": "17 , — , . 260 « », . .", "datetime": "2017-11-14 17:40:00", "datetimeString": " 17:40, 14 2017", "plaintext": " (), . , 14 , «.» () . , 2015 , 51- 33- . 15 , . , , , 66 . , , , 260 , , , , . 12 , , . «.», , . , 200 . « , , » (171.2 ) « » (210 ). «.» , . . 31 , . . 1 2009 .", "imageCreditsPerson": " ", "imageCreditsCompany": " ", "dateToUse": "20171114", "rubric": " ", "subrubric": " ", "stemedTitle": " ", "stemedMetaDescription": "17 260 ", "stemedPlaintext": " 14 2015 51 33 15 66 260 12 200 1712 210 31 1 2009 " } ], "social": [ { "FB": 1, "VK": 0, "OK": 1, "Com": 1 } ], "comments": [ { "id": 30154446, "hasLink": false, "hasGreyWord": false, "text": " . , , . , . , , , . , ; , — . , , , , , , ...\n\n — ", "moderation": "approved", "createdAt": "2017-11-14 23:10:31", "sessionSourceIcon": "livejournal", "userId": 2577791, "userpic": "https://avatars-c.rambler.ru/ca/v3/c/av/6d3dcf4b71dfde1edcfe149198747a48099f867369dfdb4ad8e2be69e41e2276b288a819cb98d3f3dafd72e1a2d46bf6529d74e4d245e4ada673fc057a48bfd5ba3c2f1d1f39fc57c9ec3d3f3a0ea8d1", "displayName": "vladtmb", "level": 0, "childrenCount": 0, "hasChild": false, "stemedText": " " } ] } ] * , sample (100 )

, - . , .

')

Source: https://habr.com/ru/post/343838/

All Articles