Greenplum 5: first steps in open source

For two years now, as one of the best distributed analytic enterprise-level DBMSs, it has been released into open source. What has changed during this time? What gave the opening source of the project? How will Greenplum evolve?

For two years now, as one of the best distributed analytic enterprise-level DBMSs, it has been released into open source. What has changed during this time? What gave the opening source of the project? How will Greenplum evolve?Under the cut, I will talk about what's new in the first major open source release of the DBMS, how the project is developing in the current minor versions and what innovations should be expected in the future.

If you are not familiar with the Greenplum DBMS, you can begin your acquaintance with this review article .

Release 5.0.0 was held on September 7th. This is the first release that includes improvements made by third-party developers (community). Releases of version 4.3, although laid out in an open repository, were developed only by Pivotal specialists.

')

The release brought a lot of innovations, as it seems to me, the main reason for this is that users working with Greenplum for a long time, finally got the opportunity to implement all their Wishlist, which the Pivotal company could not realize and which were accumulated for so long. I will give a brief description, in my opinion, of the most important changes in the new major release and in subsequent minor updates, as there are too many changes to tell about everything. At the end of the article I will provide links to the Release Notes of the new release and its minor updates.

Conventionally, all innovations can be divided into three groups:

- New features ported from fresh versions of PostgreSQL

- Greenplum innovations

- New additional services and extensions

Let's start in order.

1. New features ported from fresh versions of PostgreSQL

- Rebase on PostgreSQL 8.3

Unlike many other PostgreSQL-based projects, Greenplum does not seek to have the most recent version of PostgreSQL at its core — Greenplum was based on PostgreSQL 8.2, up to version 5.0.0, raised to 8.3 in the current major release. At the same time, the capabilities of newer versions of PostgreSQL are actively transferred to the project; - Heap-tables can now have checksum

Greenplum allows you to create two types of internal tables — heap tables and append-optimized tables. If for the second the function of calculating the checksum of files on the disk was always available, for heap-tables it appeared in the current release. The function is enabled by the parameter; - Anonymous blocks

This innovation was dragged from PostgreSQL unchanged. Not the most important (the code block could always be wrapped in a function), but the refinement so long awaited by administrators and developers.DO $$DECLARE r record; BEGIN FOR r IN SELECT table_schema, table_name FROM information_schema.tables WHERE table_type = 'VIEW' AND table_schema = 'public' LOOP EXECUTE 'GRANT ALL ON ' || quote_ident(r.table_schema) || '.' || quote_ident(r.table_name) || ' TO webuser'; END LOOP; END$$; - DBlink

The mechanism allows you to perform queries in external third-party DBMS and pick up the result. It would seem that this mechanism greatly expands the capabilities of Greenplum, allowing you to take data into an analytical database directly from sources, but DBlink's applicability is very limited - due to the Greenplum architecture, data transfer using DBlink is not performed in parallel segments, but through a single thread through the master. This fact makes DBlink use only for control requests to third-party databases, avoiding the transfer of data directly. For the sake of fairness, it is worth noting that with the parallel collection of data from third-party DBMS, one more innovation of 5 will help to cope, which we will discuss in the third part of the review of new functions.SELECT * FROM dblink('host=remotehost port=5432 dbname=postgres', 'SELECT * FROM testdblink') AS dbltab(id int, product text); - ORDER BY perception control of null values

Now with a SELECT query, it is possible to set the block [NULLS {FIRST | LAST}], which controls how NULL values are displayed - at the beginning or end of sorted values.SELECT * from my_table_with_nulls ORDER BY 1 NULLS FIRST; - Extensions

Also ported from PostgreSQL unchanged. Now this mechanism is used to create, delete and update various third-party extensions. In essence, the CREATE EXTENSION statement executes the specified SQL script.

2. Greenplum innovations

- Query Optimizer Improvements - ORCA

An alternative cost query optimizer existed in version 4.3, but it was optionally included there. In the new release, the optimizer was significantly improved, in particular, the performance of short light queries, queries with a very large number of join, and a number of other cases improved. The mechanism for cutting off unnecessary partitions was also refined, if the request contains a condition using the partitioning key. Now this optimizer is used by default; - Resource Groups

Greenplum already has a load management mechanism - Resource queues (resource queues), but it only allows you to limit the launch of requests based on their cost. The new mechanism allows you to limit requests for memory consumption and CPU (but, alas, not the load on the disk subsystem);CREATE RESOURCE GROUP rgroup1 WITH (CPU_RATE_LIMIT=20, MEMORY_LIMIT=25); - PL / Python 2.6 -> 2.7

The built-in version of Python is now 2.7; - COPY improvements

In the not so small shelf of parallel downloads and uploads of data from Greenplum arrived - now the standard command of uploading data from a table to a flat local file supports ON SEGMENT construction - with it the data is unloaded on all database segments into the local file system. Also appeared PROGRAM construction - pick up and send data to an external bash-command. By the way, these two options can be used together:COPY mydata FROM PROGRAM 'cat /tmp/mydata_<SEGID>.csv' ON SEGMENT CSV;

3. New services and extensions

- PXF support

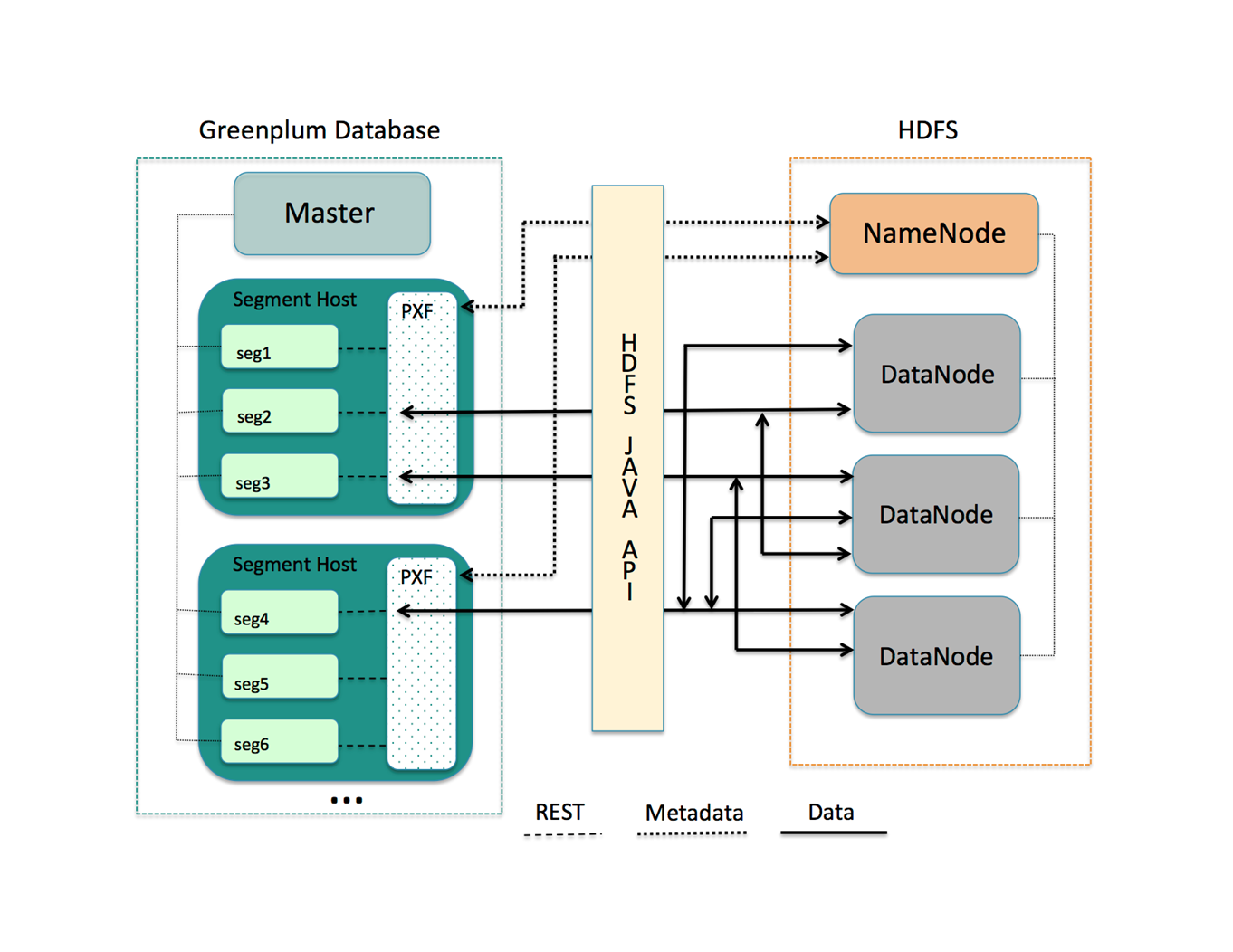

In my opinion, this is the most important revision of Greenplum in the new release. PXF is a framework that allows Greenplum to exchange data in parallel with third-party systems. This is not a new technology, it was originally developed for the fork Greenplum - HAWQ, working on top of the Hadoop cluster. In Greenplum, there was already a parallel implementation of the connector for the Hadoop cluster, while PXF also introduces much more flexibility and the ability to connect arbitrary third-party systems to the integration box, writing its own connector.

The framework is written in Java and is a separate process on the server segment Greenplum, on the one hand communicating with the Greenplum segments via the REST API, on the other - using third-party Java clients and libraries. For example, there is now support for the main services of the Hadoop stack (HDFS, Hive, Hbase) and parallel upload of data from third-party DBMS via JDBC.

However, the PXF service must be running on every server in the Greenplum cluster.

PXF interfacing with HDFS

As it seems to me, the most interesting is the possibility of integrating Greenplum with third-party DBMS through JDBC. So, for example, by adding a JDBC-thin-driver for Oracle Database to CLASSPATH, we will be able to request data from the tables of the same name DBMS, with each segment of Greenplum parallel inquiring its data shard based on the logic specified in the external table:CREATE EXTERNAL TABLE public.insurance_sample_jdbc_ora_ro( policyid bigint, statecode text, ... point_granularity int ) LOCATION ('pxf://myoraschema.insurance_test?PROFILE=JDBC&JDBC_DRIVER=oracle.jdbc.driver.OracleDriver&DB_URL=jdbc:oracle:thin:@//ora-host:1521/XE&USER=pxf_user&PASS=passoword&PARTITION_BY=policyid:int&RANGE=100000:999999&INTERVAL=10000') FORMAT 'CUSTOM' (FORMATTER='pxfwritable_import');

Taking into account the possibility of using partitions (sections) as external tables, PXF allows you to build surprisingly flexible and productive data processing platforms on the basis of Greenplum - for example, to store hot, fresh data in Oracle, warm - in Greenplum itself and cold, archived - in the Hadoop cluster, while the user will see all the data in one table; - Passwordcheck module

This module allows you to restrict the setting of weak passwords when creating or changing a role (CREATE ROLE or ALTER ROLE); - PGAdmin 4

The popular PostgreSQL client now supports enhanced interaction with Greenplum. Onboard support for DDL partitioned tables, AO and Heap tables. External table DDL is not yet supported.

To summarize the innovations of a two-year stay in open source, you can:

- The Greenplum architecture remains true to itself. There were no significant changes (such as inhomogeneous segments or a variable number of mirrors),

and thank God; - The development of the PostgreSQL component of the DBMS remains the same - porting new functions instead of constantly upgrading the version by rebase;

- One can see development towards integration with third-party systems, and this, I think, is very, very correct;

- Greenplum gains modularity and flexibility, old, inflexible functionalities are slowly removed from the system (GPHDFS, Legacy Optimizer).

What's next?

Not so long ago, the release 6.0.0 was tagged in the official repository. This release should be released in September next year, and here are some (at least) innovations in it for sure:

- PXF pushdown - transfer to the side of the DBMS source data selection conditions (where-filters). This will allow to transfer part of the load to third-party systems and take the finished result from them;

- PXF passing user identity - in the future, PXF will forward the user name Greenplum, under which the request works, to an external system. Security, everything. Perhaps this revision will be implemented in one of the minor updates of the "five";

- A new kind of compression - Zstd. According to the results of the first tests, Zstd in Greenplum works 4 times faster, while compressing data by 10% more efficiently than Zlib. Special pride is added by the fact that this feature was developed by our team (Arenadata);

- Further refinement of the new optimizer ORCA.

As it seems to me, the output to open source definitely went to Greenplum for the benefit. The development of the project, remaining true to the previous course, greatly accelerated and expanded. I think in the near future we will see a lot of completely new functionality for Greenplum.

Related Links:

Official repository

5.0.0 Release notes

5.1.0 Release notes

5.2.0 Release notes

5.3.0 Release notes

A little about us: the Arenadata project was founded by people from Pivotal (the developer Greenplum and Pivotal Hadoop) in 2015, its goal was to create their own distributions of the Greenplum and Hadoop enterprise-level to build modern data storage and processing platforms.

In early 2017, the project was acquired by IBS.

Now there are three own distributions and all necessary services in the project portfolio. In particular, in the direction of Greenplum we:

- We provide technical support;

- We provide consulting services;

- We are migrating data and processes from third-party DBMS to Greenplum.

In the comments I will try to answer any questions about the project Arenadata and Greenplum as a whole. We will also be glad to see you in the Greenplum users channel in Telegram. You are welcome!

Source: https://habr.com/ru/post/343640/

All Articles