Nontrivial cases of working with servers

Any equipment, including server hardware, sometimes starts to work unpredictably. It doesn’t matter whether the equipment is new or has been working at full load for several years.

There are many cases of failure and incorrect work, and the diagnosis of the problem often turns into an exciting puzzle.

')

Below we describe some interesting and non-trivial cases.

Fault detection

The registration of the problem most often occurs after the customer contacts the technical support service through the ticket system.

In the case of a client who rents dedicated servers of a fixed configuration from us, we carry out diagnostics in order to find out that the problem is not programmatic.

Clients usually solve software problems on their own, however, in any case, we try to offer assistance to our system administrators.

If it becomes clear that the problem is hardware (for example, the server does not see part of the RAM), then we always have a similar server platform in reserve.

If a hardware problem is detected, we transfer the disks from the failed server to the backup one and, after a small reconfiguration of the network equipment, the server is started up. Thus, the data is not lost, and the idle time does not exceed 20 minutes from the time of the call.

Examples of problems and solutions

Network failure on the server

There is a possibility that after transferring disks from a failed server to a backup server, the network on the server will stop working. This usually happens when using Linux-based operating systems such as Debian or Ubuntu.

The fact is that during the initial installation of the operating system, the MAC addresses of the network cards are written into a special file located at the address /etc/udev/rules.d/70-persistent-net.rules.

When the operating system starts, this file maps the interface names to the MAC addresses. When replacing the server with a backup, the MAC addresses of the network interfaces no longer match, which results in the inoperability of the network on the server.

To solve the problem, you must delete the specified file and restart the network service, or restart the server.

The operating system, not finding this file, will automatically generate a similar one and match the interfaces already with the new MAC addresses of the network cards.

Reconfiguring IP addresses is not required after this, the network will immediately start working.

Floating hang problem

Once a server came to us for diagnostics with the problem of random hangs in the process of work. Checked the BIOS and IPMI logs - empty, no errors. We put it on stress testing, loading all the processor cores by 100%, while simultaneously controlling the temperature - it hovered tight after 30 minutes of operation.

In this case, the processor worked properly, the temperature values did not exceed the standard under load, all the coolers were OK. It became clear that it was not overheating.

Further, it was necessary to exclude the possible failures of the RAM modules, so they put the server on the test memory with the help of the rather popular Memtest86 +. After 20 minutes, the server is expected to freeze, producing errors for one of the RAM modules.

Replacing the module with a new one, we put the server to the test again, but a fiasco was waiting for us - the server hung up again, producing errors on another RAM module. Replaced and his. Another test - once again hung, re-issuing errors in RAM. A close inspection of the RAM slots did not reveal any defects.

There remained one possible cause of the problem - the central processor. The fact is that the memory controller is located exactly inside the processor and that it could fail.

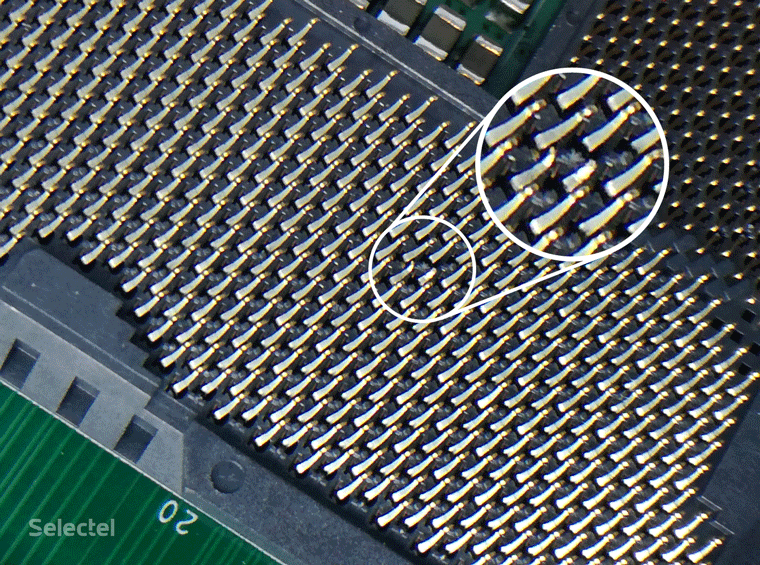

After removing the processor, they discovered a catastrophe - one pin of the socket was broken in the upper part, the broken tip of the pin literally stuck with the contact pad of the processor. As a result, when there was no load on the server, everything worked adequately, but when the CPU temperature increased, the contact was broken, thereby stopping the normal operation of the RAM controller, which caused the hangs.

Finally, the problem was solved by replacing the motherboard, since, alas, we can’t afford to repair a broken pin socket, and this is a task for the service center.

Imaginary server hangup during OS installation

Enough funny cases arise when hardware manufacturers begin to change the architecture of the hardware, refusing to support old technologies in favor of new ones.

A user complained to us about server hangs while trying to install the Windows Server 2008 R2 operating system. After successfully launching the installer, the server stopped responding to the mouse and keyboard in the KVM console. To localize the problem, a physical mouse and keyboard were connected to the server — the same thing, the installer starts and stops responding to input devices.

At that time, this server was one of the first on the basis of the Supermicro X11SSL-f motherboard. In the BIOS settings there was one interesting item Windows 7 install, exhibited in Disable. Since Windows 7, 2008 and 2008 R2 are deployed on the same installer, they set this parameter to Enable and miraculously the mouse and keyboard finally work. But this was only the beginning of the epic with the installation of the operating system.

At the time of selecting the disk to install, no disks were displayed, moreover, an error was generated by the need to install additional drivers. The operating system was installed from a USB flash drive and a quick search on the Internet showed that this effect occurs if the installation program cannot find drivers for a USB 3.0 controller.

Wikipedia reported that the problem is solved by turning off USB 3.0 support in the BIOS (XHCI controller). When we opened the documentation for the motherboard, we were in for a surprise - the developers decided to completely abandon the EHCI (Enhanced Host Controller Interface) controller in favor of the XHCI (eXtensible Host Controller Interface). In other words, all the USB ports on this motherboard are USB 3.0 ports. And if you disable the XHCI controller, then we will also disable input devices, making it impossible to work with the server and, accordingly, install the operating system.

Since the server platforms were not equipped with CD / DVD drives, the only solution was to integrate the drivers directly into the operating system distribution. Only by integrating the USB 3.0 controller drivers and rebuilding the installation image, we were able to install Windows Server 2008 R2 on this server, and this case entered our knowledge base so that engineers would not waste too much time on fruitless attempts.

An interesting feature of Dell PowerVault

Even more fun there are times when customers bring us equipment for accommodation, but it does not behave as expected. This is exactly what happened with the Dell PowerVault disk shelf.

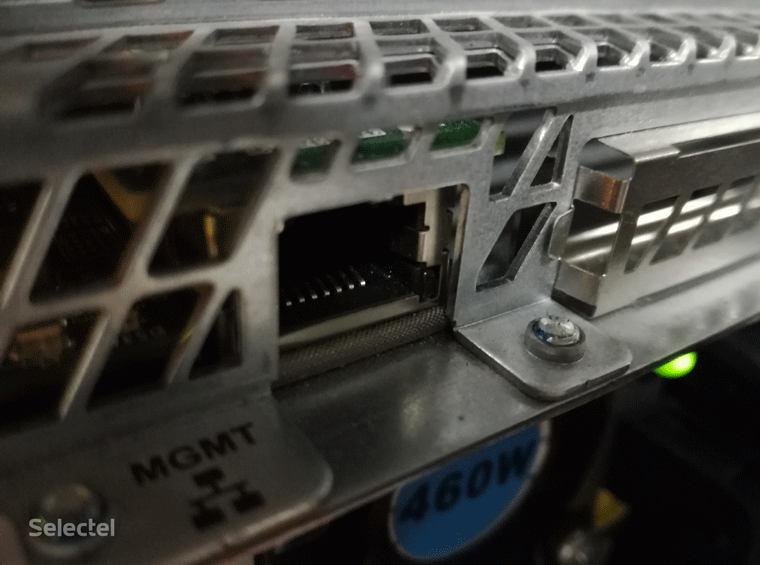

The device is a storage system with two disk controllers and network interfaces for work under the iSCSI protocol. In addition to these interfaces, there is a MGMT port for remote management.

Among our services for hosted equipment there is just a special service “Additional port 10 Mbit / s”, which is ordered when it is necessary to connect remote server management tools. These funds have different names:

- “ILO” from Hewlett-Packard;

- Dell's “iDrac”;

- IPMI from Supermicro.

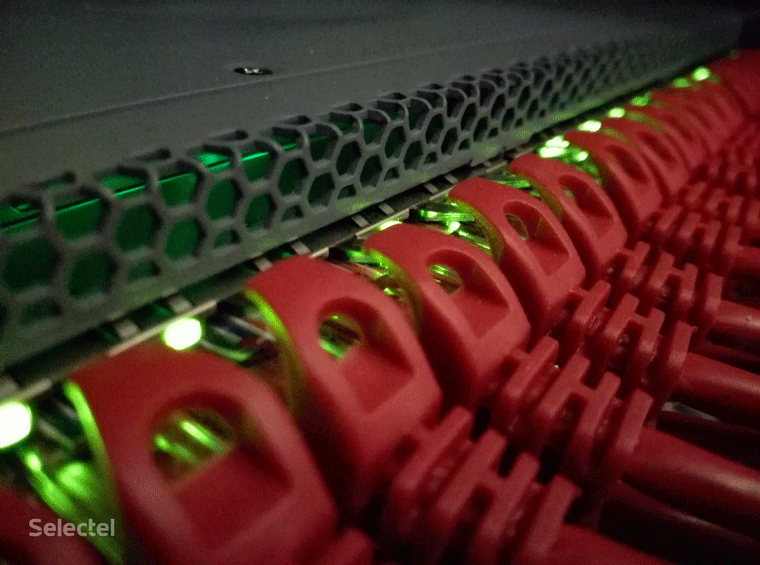

Their functionality is approximately the same - monitoring server status and accessing a remote console. Accordingly, they do not need high channel speed - 10 Mbps is quite enough for comfortable work. This service was ordered by the client. We paved the appropriate copper cross, and set up the port of our network equipment.

To limit the speed, the port is simply configured as 10BASE-T and is included in the work, having a maximum speed of 10 Mbps. After everything was ready - we connected the MGMT port of the disk shelf, but the client almost immediately said that nothing was working for him.

Checking the status of the switch port, we found the unpleasant inscription “Physical link is down”. Such an inscription says that there are problems with the physical connection between the switch and the client equipment connected to it.

A badly crimped connector, a broken connector, broken wires in a cable - this is a small list of problems that lead to the absence of a link. Of course, our engineers immediately took a twisted pair tester and checked the connection. All the wires were ideally called, both ends of the cable were crimped perfectly. In addition, by including a test laptop in this cable, we received as it should be a connection with a speed of 10 Mbps. It became clear that the problem was on the side of the client’s equipment.

Since we are always trying to help our clients in solving problems, we decided to find out what exactly is the absence of a link. Carefully studied the port connector MGMT - everything is in order.

Found on the manufacturer's website the original operating instructions, to clarify - is it possible from the software to "pay off" this port. However, this possibility was not foreseen - in any case the port was automatically raised. Despite the fact that such equipment should always support Auto-MDI (X) - in other words, it is correct to determine which cable is included: normal or crossover, we experimentally compressed the crossover and included it in the same switch port. We tried to force the duplex parameter on the switch port. The effect was zero - there was no link and the ideas were already running out.

Then one of the engineers made an absolutely contrary to the common sense assumption that the equipment does not support 10BASE-T and will work only on 100BASE-TX or even 1000BASE-X. Usually any port, even on the cheapest device, is compatible with 10BASE-T and at first the engineer’s suggestion of a shallow as “fiction”, but because of hopelessness they decided to try switching the port to 100BASE-TX.

There was no limit to our surprise, the link instantly rose. What exactly caused the lack of support for 10BASE-T on the MGMT port remains a mystery. Such a case is a very big rarity, but there is a place to be.

The client was surprised no less than ours and was very grateful for solving the problem. Accordingly, he was left the port in 100BASE-TX, limiting the speed on the port directly using the built-in speed limiting mechanism.

Cooling turbine failure

Once a client came to us, asked to remove the server and bring it to the service area. Engineers did everything and left him alone with the equipment. An hour passed, the second, the third - the client all the time started / stopped the server and we asked what the problem was.

It turns out that the server production Hewlett-Packard failed two impeller cooling of the six. The server turns on, gives a cooling error and immediately turns off. In this case, the server is a hypervisor with critical services. To restore the normal operation of services, it was necessary to perform an urgent migration of virtual machines to another physical node.

We decided to help the client as follows. Usually the server understands that everything is fine with the cooling fan, simply by reading the number of revolutions. At the same time, of course, the engineers of Hewlett-Packard did everything so that the original impeller could not be replaced with an analogue - a non-standard connector, a non-standard pinout.

The original of such a part costs about $ 100 and it is impossible to just go and buy it - you need to order from abroad. The benefit of the Internet found the circuit with the original pinout and found out that one of the pins is just responsible for reading the number of engine revolutions per second.

The rest was a matter of technology - they took a couple of wires for prototyping (by chance they were at hand - some of our engineers are addicted to Arduino) and simply connected pins from neighboring working impellers to connectors that failed. The server started and the client finally managed to migrate the virtual machines and start services to work.

Of course, all this was done solely under the responsibility of the client, however, in the end, such a non-standard move made it possible to reduce idle time to a minimum.

And where are the wheels?

In some cases, the cause of the problem is sometimes so nontrivial that it takes a very large amount of time to search for it. And so it happened when one of our clients complained about the accidental dump of disks and server hang. Hardware platform - Supermicro in case 847 (4U form factor) with baskets for connecting 36 disks. Three identical Adaptec RAID controllers were installed in the server, each with 12 disks connected. At the time of the problem, the server stopped seeing a random number of disks and hung up. The server was taken out of production and started to diagnose.

The first thing that I managed to find out was that the disks fell off on only one controller. At the same time, the “fallen disks” disappeared from the list in the native management utility Adaptec and re-appeared there only when the server was completely turned off and then connected. The first thing that came to mind was the controller software. All three controllers were slightly different firmware, so it was decided to install one version of the firmware on all controllers. Completed, chased the server in maximum load modes - everything works as expected. Having marked the problem as resolved, the server was given back to the client in production.

Two weeks later, again with the same problem. It was decided to replace the controller with a similar one. Completed, flashed, hooked up, put on the tests. The problem remained - after a couple of days, all the disks fell out on the new controller and the server safely hung up.

Reinstalled the controller in another slot, replaced the backplane and SATA cables from the controller to the backplane. A week of tests and disks again fell out - the server again hung. Appeal in support of Adaptec did not bring results - they checked all three controllers and found no problems. Replaced the motherboard, rebuilding the platform almost from scratch. Everything that caused the slightest doubt was replaced by a new one. And the problem reappeared. Mysticism and only.

The problem was solved by chance, when they began to check each disk separately. At a certain load, one of the disks began to knock heads and gave a short circuit to the SATA port, while there was no alarm indication. At the same time, the controller stopped seeing a part of the disks and started to recognize them again only when the power was reconnected. This is how a single failed disk disabled the entire server platform.

Conclusion

Of course, this is only a small part of the interesting situations that have been solved by our engineers. It is quite difficult to “catch” some problems, especially when there are no hints in the logs. But any such situations stimulate engineers to thoroughly understand the server hardware device and find a wide variety of solutions to problems.

These are the funny things that happened in our practice.

And what were you faced? Welcome to the comments.

Source: https://habr.com/ru/post/343416/

All Articles