Streaming h264 video without transcoding or delay

It is no secret that piloting an unmanned aircraft often relies on video transmitted from the vehicle to the ground. Usually UAV manufacturers provide this capability themselves. But what do you do if you built the drone yourself?

We and our Swiss partners from Helvetis were faced with the task of streaming live video from a webcam from a low-powered embedded device on the drone via WiFi to a Windows tablet. Ideally, we would like:

It would seem that could go wrong?

')

So, we stopped at the following list of equipment:

First, we tried using a simple Python script that, using OpenCV, received frames from the camera, compressed them using JPEG, and sent them over HTTP to the client application.

This approach turned out to be (almost) working. As a viewing application, you could use any web browser. However, we immediately noticed that the frame rate was lower than expected, and the CPU utilization level on Minnowboard was constantly at 100%. The embedded device simply could not cope with real-time frame encoding. From the advantages of this solution, it is worth noting a very small delay in the transmission of 480p video with a frequency of no more than 10 frames per second.

During the search, a webcam was detected, which, in addition to uncompressed YUV frames, could produce frames in MJPEG format. It was decided to use this useful feature to reduce the load on the CPU and find a way to transfer video without transcoding.

First of all, we tried everybody’s favorite open-source ffmpeg combine, which allows, among other things, to read a video stream from a UVC device, encode it and transmit. After a short dive into the manual, the command line keys were found, which allowed to receive and transmit a compressed MJPEG video stream without transcoding.

CPU utilization was low. Delighted, we eagerly opened the stream in the ffplay player ... To our disappointment, the video delay level was absolutely unacceptable (about 2 - 3 seconds). After trying everything from here and going through the Internet, we still could not achieve a positive result and decided to abandon ffmpeg.

After the failure of ffmpeg, it was the turn of the VLC media player, or rather the console utility cvlc. VLC by default uses a bunch of buffers that, on the one hand, help to achieve a smooth image, but on the other hand, give a serious delay of a few seconds. Having suffered a great deal, we selected the parameters with which the streaming looked tolerable enough, i.e. the delay was not very long (about 0.5 s), there was no transcoding, and the client showed the video fairly smoothly (it was necessary, however, the client left a small buffer of 150 ms).

This is the summary line for cvlc:

Unfortunately, the video did not work quite stably, and a delay of 0.5 s was unacceptable for us.

Having come across an article about practically our task, we decided to try mjpg-streamer. Tried, liked it! Absolutely no change turned out to use mjpg-streamer for our needs without significant delay in video at 480p resolution.

Against the background of previous failures, we were happy for quite a long time, but then we wanted more. Namely: a little less to hammer the channel and improve the quality of the video up to 720p.

To reduce the download of the channel, we decided to change the used codec to h264 (having found a suitable web-camera in our stocks). Mjpg-streamer did not have h264 support, so it was decided to modify it. During development, we used two cameras with a built-in h264 codec, manufactured by Logitech and ELP. As it turned out, the content of the h264 stream in these cameras was significantly different.

The h264 stream consists of several types of network abstraction layer packets. Our cameras generated 5 types of packages:

IDR (Instantaneous decoding refresh) - package containing the encoded image. At the same time all the necessary data for decoding the image are in this package. This package is necessary for the decoder to start generating an image. Usually the first frame of any video compressed h264 - IDR picture.

Non-IDR is a packet containing an encoded image containing references to other frames. The decoder is not able to recover the image on one Non-IDR frame without the presence of other packets.

In addition to the IDR frame, the decoder needs PPS and SPS packets to decode the image. These packages contain metadata about the image and frame stream.

Based on the mjpg-streamer code, we used the API V4L2 (video4linux2) to read the data from the cameras. As it turned out, one “frame” of the video contained several NAL packets.

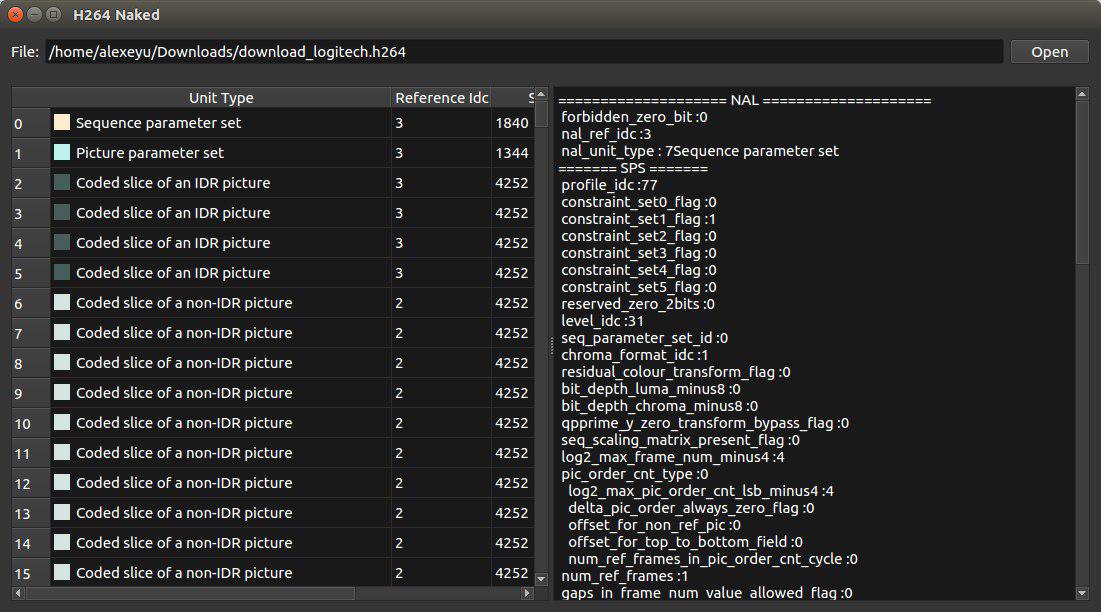

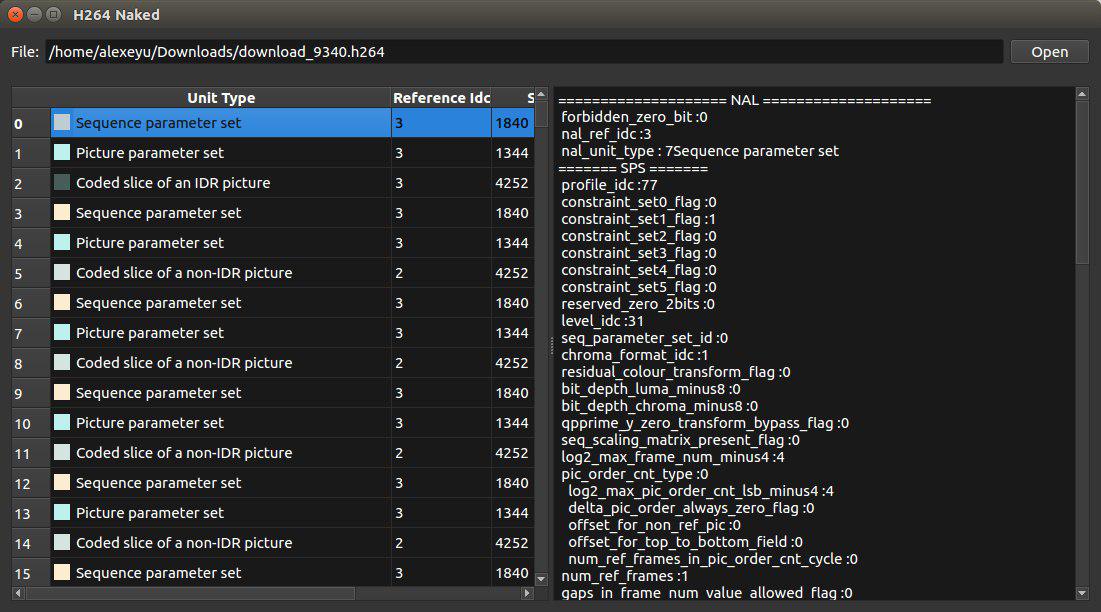

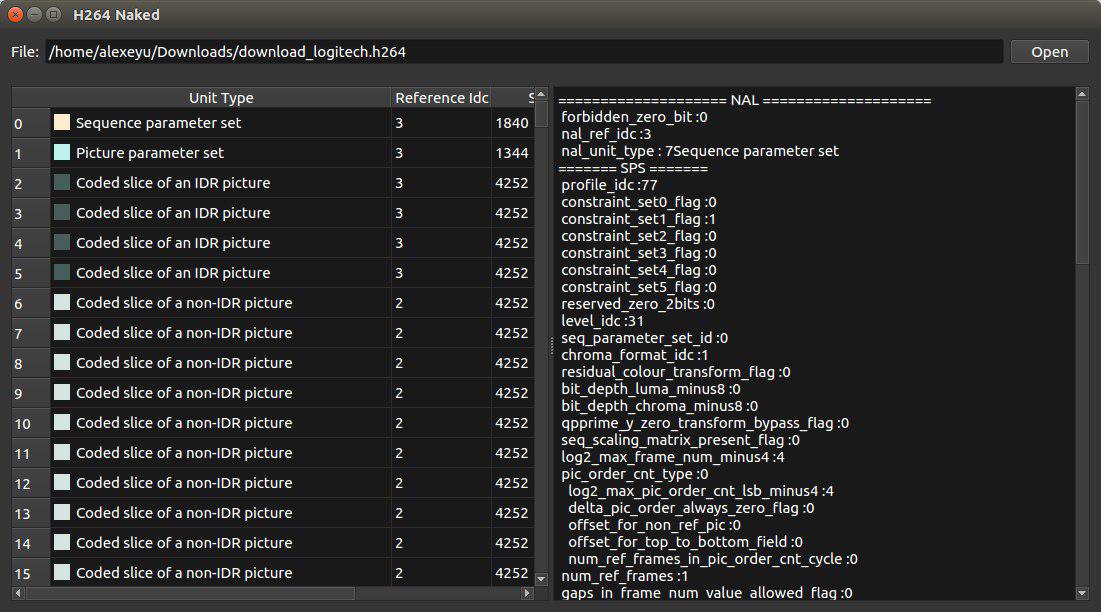

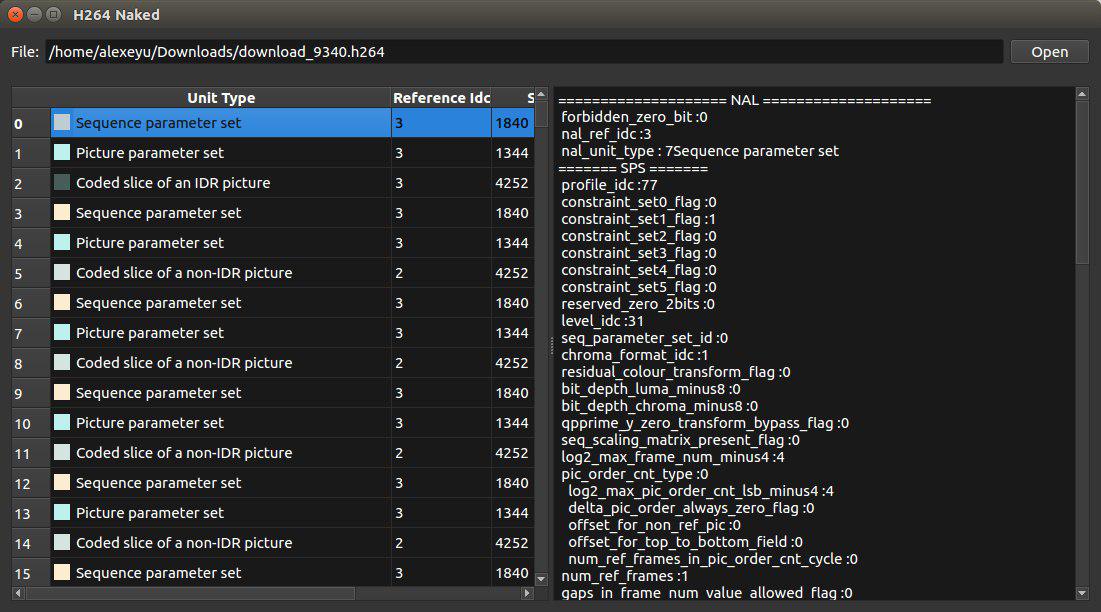

It is in the content of the “frames” that the essential difference between the cameras was found. We used the h264bitstream library to parse the stream. There are standalone utilities that allow you to view the contents of a stream.

The Logitech camera frame stream consisted mainly of non-IDR frames, besides being divided into several data partitions. Every 30 seconds, the camera generated a packet containing an IDR picture, SPS and PPS. Since the decoder needs an IDR package in order to start decoding the video, this situation did not suit us right away. Unfortunately, it turned out that there is no adequate way to establish the period with which the camera generates IDR packets. Therefore, we had to abandon the use of this camera.

The ELP camera was much more convenient. Each frame we received contained PPS and SPS packets. In addition, the camera generated an IDR packet every 30 frames (~ 1s period). It suited us quite well and we opted for this camera.

The basis of the server part, it was decided to take the above mjpg-streamer. Its architecture made it easy to add new input and output plugins. We started by adding a plug-in to read the h264 stream from the device. An existing http plugin was chosen as the output plugin.

In V4L2, it was enough to indicate that we want to receive frames in the V4L2_PIX_FMT_H264 format in order to start receiving the h264 stream. Since an IDR frame is needed for decoding a stream, we parsed the stream and waited for the IDR frame. The client application stream was sent via HTTP starting from this frame.

On the client side, we decided to use libavformat and libavcodec from the ffmpeg project to read and decode the h264 stream. In the first test prototype, streaming over the network, splitting it into frames and decoding was assigned to ffmpeg, converting the resulting decoded image from NV12 format to RGB and displaying was done on OpenCV.

The first tests showed that this method of video broadcasting is efficient, but there is a significant delay (about 1 second). Our suspicion fell on the http protocol, so it was decided to use UDP to transmit packets.

Since we did not need to support existing protocols like RTP, we implemented our simplestbicycle protocol, in which the NAL packets of the h264 stream were transmitted within the UDP datagram. After a little refinement of the receiving part, we were pleasantly surprised by the low latency of the video on the desktop PC. However, the very first tests on a mobile device showed that software decoding of h264 is not a fad of mobile processors. The tablet just did not have time to process frames in real time.

Since the Atom Z3740 processor used on our tablet supports Quick Sync Video (QSV) technology, we tried using the QSV h264 decoder from libavcodec. To our surprise, he not only did not improve the situation, but also increased the delay to 1.5 seconds even on a powerful desktop PC! However, this approach did significantly reduce the load on the CPU.

Having tried various decoder configuration options in ffmpeg, it was decided to abandon libavcodec and use the Intel Media SDK directly.

The first surprise for us was the horror, which is proposed to immerse the person who decided to develop using the Media SDK. The official example offered to developers is a powerful combine that can do everything, but which is difficult to understand. Fortunately, we found like-minded people on the Intel forums who were also dissatisfied with an example. They found old, but more easily digestible tutorials . Based on the simple_2_decode example, we got the following code.

After implementing video decoding using the Media SDK, we faced a similar situation - the video delay was 1.5 seconds. Desperate, we turned to the forums and found tips that were supposed to reduce the delay in decoding video.

The h264 decoder from the Media SDK accumulates frames before issuing the decoded image. It was found that if the “end of stream” flag is set in the data structure transmitted to the decoder (mfxBitstream), the delay is reduced to ~ 0.5 seconds:

Further experimentally, it was found that the decoder holds 5 frames in the queue, even if the end of stream flag is set. As a result, we had to add the code that simulated the “final end of the stream” and forced the decoder to output frames from this queue:

After that, the delay level went down to the acceptable, i.e. imperceptible gaze.

Starting the task of broadcasting video in real time, we very much hoped to use existing solutions and do without our bicycles.

Our main hope was such video giants as FFmpeg and VLC. Despite the fact that they seem to be able to do what we need (to transmit video without transcoding), we were not able to remove the delay resulting from the transmission of video.

Almost accidentally stumbling upon the mjpg-streamer project, we were fascinated by its simplicity and precise work in translating video in the MJPG format. If you suddenly need to transfer this particular format, then we strongly recommend using it. It is no coincidence that it was on its basis that we implemented our decision.

As a result of the development, we got a fairly lightweight solution for transmitting video without delay, not demanding to the resources of either the transmitting or the receiving parties. In the task of decoding video, the Intel Media Media SDK helped us a lot, even if we had to use a bit of force to make it give frames without buffering.

Together with our Swiss partners at Helvetis, we needed to stream live video from a webcam attached to a low-power embedded device on the drone to a Windows tablet over WiFi. Ideally, we wanted:

- latency under 0.3 s;

- low CPU utilization on the embedded system (less than 10% per core);

- resolution of at least 480p (preferably 720p).

What could possibly go wrong?

We settled on the following hardware:

- Minnowboard 2-core, Atom E3826 @ 1.4 GHz, OS: Ubuntu 16.04

- ELP USB100W04H webcam that supports multiple formats (YUV, MJPEG, H264)

- Windows tablet ASUS VivoTab Note 8

Trying to get by with standard solutions

Simple solution with Python + OpenCV

First, we tried a simple Python script that grabbed frames from the camera with OpenCV, compressed them to JPEG, and sent them to the client application over HTTP.

HTTP MJPEG streaming in Python

```python
from flask import Flask, render_template, Response
import cv2


class VideoCamera(object):
    def __init__(self):
        # Open the first video capture device
        self.video = cv2.VideoCapture(0)

    def __del__(self):
        self.video.release()

    def get_frame(self):
        # Grab a frame and compress it to JPEG on the CPU
        success, image = self.video.read()
        ret, jpeg = cv2.imencode('.jpg', image)
        return jpeg.tobytes()


app = Flask(__name__)


@app.route('/')
def index():
    # Expects templates/index.html with an <img> pointing at /video_feed
    return render_template('index.html')


def gen(camera):
    # Endless multipart stream: one JPEG image per part
    while True:
        frame = camera.get_frame()
        yield (b'--frame\r\n'
               b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n\r\n')


@app.route('/video_feed')
def video_feed():
    return Response(gen(VideoCamera()),
                    mimetype='multipart/x-mixed-replace; boundary=frame')


if __name__ == '__main__':
    app.run(host='0.0.0.0', debug=True)
```

This approach (almost) worked. Any web browser could serve as the viewer. However, we immediately noticed that the frame rate was lower than expected and that CPU utilization on the MinnowBoard stayed at 100%. The embedded device simply could not keep up with real-time frame encoding. On the plus side, latency was very low when transmitting 480p video at no more than 10 frames per second.

While looking for alternatives, we found a webcam that could output MJPEG frames in addition to uncompressed YUV. We decided to use this feature to reduce the CPU load and to look for a way to transmit video without transcoding.

FFmpeg / VLC

First, we tried everyone's favorite open-source Swiss Army knife, ffmpeg, which can, among other things, read a video stream from a UVC device, encode it, and transmit it. After a short dive into the manual, we found the command-line options that let us receive and transmit a compressed MJPEG stream without transcoding.

```
ffmpeg -f v4l2 -s 640x480 -input_format mjpeg -i /dev/video0 -c:v copy -f mjpeg udp://ip:port
```

CPU utilization was low. Delighted, we eagerly opened the stream in the ffplay player... To our disappointment, the video latency was completely unacceptable (about 2 to 3 seconds). After trying all the tips we could find on the Internet, we still could not get a good result and decided to abandon ffmpeg.

After the ffmpeg failure came the turn of the VLC media player, or rather its console utility cvlc. By default VLC uses a number of buffers that help keep the picture smooth but also introduce a serious delay of several seconds. After much suffering, we found parameters with which streaming looked tolerable: the delay was moderate (about 0.5 s), there was no transcoding, and the client showed the video fairly smoothly (although the client did need to keep a small buffer of 150 ms).

This is the final command line for cvlc:

```
cvlc -v v4l2:///dev/video0:chroma="MJPG":width=640:height=480:fps=30 --sout="#rtp{sdp=rtsp://:port/live,caching=0}" --rtsp-timeout=-1 --sout-udp-caching=0 --network-caching=0 --live-caching=0
```

Unfortunately, the video was not entirely stable, and a latency of 0.5 s was unacceptable for us.

Mjpg-streamer

Having come across an article about almost exactly our task, we decided to try mjpg-streamer. We tried it and liked it! Without any modifications at all, mjpg-streamer met our needs, streaming 480p video with no significant delay.

After all the previous failures, we were happy for quite a while, but then we wanted more: to load the channel a little less and to raise the video quality to 720p.

H264 streaming

To reduce the load on the channel, we decided to switch the codec to h264 (having found a suitable webcam in our stock). mjpg-streamer had no h264 support, so we decided to extend it. During development we used two cameras with built-in h264 encoders, made by Logitech and ELP. As it turned out, the h264 streams produced by these cameras differed significantly.

Cameras and stream structure

An h264 stream consists of several types of NAL (Network Abstraction Layer) packets. Our cameras generated five types of packets:

- Picture parameter set (PPS)

- Sequence parameter set (SPS)

- Coded slice layer without partitioning, IDR picture

- Coded slice layer without partitioning, non-IDR picture

- Coded slice data partition

IDR (Instantaneous Decoding Refresh) is a packet containing an encoded image together with all the data needed to decode it. The decoder needs this packet before it can start producing images. The first frame of any h264-compressed video is usually an IDR picture.

Non-IDR is a packet containing an encoded image that references other frames. The decoder cannot reconstruct the image from a single non-IDR frame without the other packets.

In addition to the IDR frame, the decoder needs the PPS and SPS packets to decode the image. These packets contain metadata about the picture and about the frame sequence.
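As a rough illustration of the packet types listed above, the following Python sketch splits a buffer into NAL packets and reads their type codes. It assumes the camera delivers the stream in Annex B byte-stream form (packets separated by 00 00 01 start codes), which the article does not state explicitly; the function names are ours, and the numeric codes are the `nal_unit_type` values from the H.264 specification.

```python
# nal_unit_type values from the H.264 specification for the packet types above
NAL_TYPE_NAMES = {
    1: "non-IDR slice",
    2: "slice data partition A",
    3: "slice data partition B",
    4: "slice data partition C",
    5: "IDR slice",
    7: "SPS",
    8: "PPS",
}

START_CODE = b"\x00\x00\x01"


def split_nal_units(data: bytes) -> list:
    """Split an Annex B byte stream into NAL packets (start codes removed)."""
    units = []
    pos = data.find(START_CODE)
    while pos != -1:
        start = pos + len(START_CODE)
        nxt = data.find(START_CODE, start)
        end = len(data) if nxt == -1 else nxt
        # A NAL packet never ends with a zero byte, so trailing zeros belong
        # to the next (4-byte) start code or to padding.
        unit = data[start:end].rstrip(b"\x00")
        if unit:
            units.append(unit)
        pos = nxt
    return units


def nal_type(unit: bytes) -> int:
    """The packet type is stored in the low 5 bits of the first byte."""
    return unit[0] & 0x1F


def describe(data: bytes) -> list:
    """Return human-readable names of the NAL packets found in a buffer."""
    return [NAL_TYPE_NAMES.get(nal_type(u), "other") for u in split_nal_units(data)]
```

Calling `describe()` on one V4L2 buffer gives a quick picture of what a camera puts into a single "frame".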

Based on the mjpg-streamer code, we used the API V4L2 (video4linux2) to read the data from the cameras. As it turned out, one “frame” of the video contained several NAL packets.

It was in the contents of these “frames” that the essential difference between the cameras lay. We used the h264bitstream library to parse the stream. Standalone utilities for viewing the contents of a stream also exist.

The Logitech camera's stream consisted mainly of non-IDR frames, which were additionally split into several data partitions. Once every 30 seconds the camera generated a packet containing an IDR picture, SPS and PPS. Since the decoder needs an IDR packet to start decoding video, this ruled the camera out for us immediately. Unfortunately, we found no reasonable way to set the interval at which the camera generates IDR packets, so we had to give up on this camera.

The ELP camera turned out to be much more convenient. Every frame we received contained PPS and SPS packets, and the camera generated an IDR packet every 30 frames (a period of about 1 s). This suited us well, so we chose this camera.

Implementing a broadcast server based on mjpg-streamer

For the server side, we decided to build on mjpg-streamer, mentioned above. Its architecture makes it easy to add new input and output plugins. We started by adding an input plugin that reads the h264 stream from the device, and used the existing HTTP plugin for output.

With V4L2, requesting frames in the V4L2_PIX_FMT_H264 format was enough to start receiving the h264 stream. Since decoding has to start from an IDR frame, we parsed the stream and waited for one. The stream was sent to the client application over HTTP starting from that frame.
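The "wait for an IDR frame" step might be sketched in Python as follows. This is not the plugin's actual code (which is written in C on top of mjpg-streamer); it assumes each buffer is an Annex B byte stream and that a buffer is a safe starting point when it carries SPS, PPS and an IDR slice together. The function names are hypothetical.

```python
START_CODE = b"\x00\x00\x01"
NAL_IDR, NAL_SPS, NAL_PPS = 5, 7, 8  # nal_unit_type values from the H.264 spec


def nal_types(frame: bytes) -> set:
    """Return the set of NAL packet types found in one camera buffer."""
    types = set()
    pos = frame.find(START_CODE)
    while pos != -1:
        header = pos + len(START_CODE)
        if header < len(frame):
            # The type is stored in the low 5 bits of the byte after the start code
            types.add(frame[header] & 0x1F)
        pos = frame.find(START_CODE, header)
    return types


def frames_from_first_idr(frames):
    """Yield camera buffers, dropping everything before the first decodable one."""
    started = False
    for frame in frames:
        if not started:
            # Assumption: start only on a buffer with SPS, PPS and an IDR slice
            started = {NAL_IDR, NAL_SPS, NAL_PPS} <= nal_types(frame)
        if started:
            yield frame
```

A server loop would wrap the camera's buffer source in `frames_from_first_idr()` for every newly connected client, so that the first thing each client receives is something its decoder can start from.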

On the client side, we decided to use libavformat and libavcodec from the ffmpeg project to read and decode the h264 stream. In the first test prototype, ffmpeg received the stream over the network, split it into frames and decoded it, while OpenCV converted the decoded image from NV12 to RGB and displayed it.
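For readers unfamiliar with NV12, here is a minimal pure-Python sketch of the layout and of a per-pixel conversion to RGB. It assumes a tightly packed buffer (row pitch equal to the width, even dimensions) and full-range BT.601 coefficients; real camera streams often use limited-range values, so treat the colour math as illustrative only. The function name is ours.

```python
def nv12_pixel_to_rgb(buf, width, height, x, y):
    """Convert one pixel of a tightly packed NV12 buffer to an (R, G, B) tuple."""
    # Plane 1: width * height bytes of luma (Y), one byte per pixel
    luma = buf[y * width + x]
    # Plane 2: interleaved U,V pairs; each pair is shared by a 2x2 block of pixels
    uv = width * height + (y // 2) * width + (x // 2) * 2
    u = buf[uv] - 128
    v = buf[uv + 1] - 128
    # Full-range BT.601 conversion (an assumption, see the text above)
    r = luma + 1.402 * v
    g = luma - 0.344136 * u - 0.714136 * v
    b = luma + 1.772 * u
    return tuple(max(0, min(255, round(c))) for c in (r, g, b))
```

The whole frame occupies `width * height * 12 / 8` bytes, hence "12 bits per pixel". In practice the conversion is done for the whole image at once, for example with OpenCV's `cv2.cvtColor` and the `cv2.COLOR_YUV2BGR_NV12` code.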

The first tests showed that this way of streaming video works, but with a significant delay (about 1 second). Our suspicion fell on HTTP, so we decided to transmit the packets over UDP instead.

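Since we did not need to support existing protocols like RTP, we reinvented the wheel and implemented the simplest possible protocol of our own, in which the NAL packets of the h264 stream were transmitted inside UDP datagrams. After a little refinement of the receiving side, we were pleasantly surprised by the low video latency on a desktop PC. However, the very first tests on a mobile device showed that software h264 decoding is not a strong suit of mobile processors. The tablet simply could not process frames in real time.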
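The article does not describe the wire format of this homegrown protocol, so the following Python sketch is only one plausible shape for it: each datagram carries a 4-byte sequence number (our assumption, useful for spotting lost or reordered datagrams) followed by one NAL packet. All names here are hypothetical.

```python
import socket
import struct

HEADER = struct.Struct("!I")       # hypothetical header: 32-bit sequence number
MAX_PAYLOAD = 65507 - HEADER.size  # largest UDP payload over IPv4, minus our header


def pack_datagram(seq: int, nal: bytes) -> bytes:
    """Build one datagram: sequence number followed by a single NAL packet."""
    if len(nal) > MAX_PAYLOAD:
        raise ValueError("NAL packet does not fit into one datagram")
    return HEADER.pack(seq & 0xFFFFFFFF) + nal


def unpack_datagram(datagram: bytes):
    """Split a received datagram into (sequence number, NAL packet bytes)."""
    (seq,) = HEADER.unpack_from(datagram)
    return seq, datagram[HEADER.size:]


def send_nals(nals, host: str, port: int) -> None:
    """Send each NAL packet in its own datagram, numbering them from zero."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for seq, nal in enumerate(nals):
            sock.sendto(pack_datagram(seq, nal), (host, port))
```

The receiver calls `recvfrom()` on a bound UDP socket and passes each datagram to `unpack_datagram()`; a gap in the sequence numbers tells it to wait for the next IDR frame before decoding further. In a real deployment the datagram size would also have to be reconciled with the link MTU to avoid IP fragmentation.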

Since the Atom Z3740 processor in our tablet supports Quick Sync Video (QSV), we tried the QSV h264 decoder from libavcodec. To our surprise, it not only failed to improve the situation but also increased the delay to 1.5 seconds, even on a powerful desktop PC! It did, however, significantly reduce the CPU load.

After trying various decoder configuration options in ffmpeg, we decided to abandon libavcodec and use the Intel Media SDK directly.

The first surprise was the horror that awaits anyone who decides to develop with the Media SDK. The official sample offered to developers is a powerful do-everything application that is hard to make sense of. Fortunately, on the Intel forums we found like-minded people who were also unhappy with the sample. They pointed to older but more digestible tutorials. Based on the simple_2_decode example, we arrived at the following code.

Stream Decoding with Intel Media SDK

```cpp
mfxStatus sts = MFX_ERR_NONE;

// Buffer for the incoming h264 bitstream
mfxBitstream mfx_bitstream;
memset(&mfx_bitstream, 0, sizeof(mfx_bitstream));
mfx_bitstream.MaxLength = 1 * 1024 * 1024; // 1MB
mfx_bitstream.Data = new mfxU8[mfx_bitstream.MaxLength];

// Our own helper class that receives the stream over UDP
StreamReader *reader = new StreamReader(/*...*/);

MFXVideoDECODE *mfx_dec;
mfxVideoParam mfx_video_params;
MFXVideoSession session;
mfxFrameAllocator *mfx_allocator;

// Initialize the MFX session
mfxIMPL impl = MFX_IMPL_AUTO;
mfxVersion ver = { { 0, 1 } };
sts = session.Init(impl, &ver);
if (sts < MFX_ERR_NONE)
    return 0; // :(

// Create the decoder and select the AVC (h.264) codec
mfx_dec = new MFXVideoDECODE(session);
memset(&mfx_video_params, 0, sizeof(mfx_video_params));
mfx_video_params.mfx.CodecId = MFX_CODEC_AVC;

// Decode into system memory
mfx_video_params.IOPattern = MFX_IOPATTERN_OUT_SYSTEM_MEMORY;

// Keep the asynchronous queue as short as possible
mfx_video_params.AsyncDepth = 1;

// Read the stream headers to fill in the video parameters
reader->ReadToBitstream(&mfx_bitstream);
sts = mfx_dec->DecodeHeader(&mfx_bitstream, &mfx_video_params);
if (sts < MFX_ERR_NONE)
    return 0; // :(

// Ask the decoder how many surfaces it needs
mfxFrameAllocRequest request;
memset(&request, 0, sizeof(request));
sts = mfx_dec->QueryIOSurf(&mfx_video_params, &request);
if (sts < MFX_ERR_NONE)
    return 0; // :(

mfxU16 numSurfaces = request.NumFrameSuggested;

// Width and height must be aligned to 32 (MSDK_ALIGN32 comes from the tutorial utilities)
mfxU16 width = (mfxU16)MSDK_ALIGN32(request.Info.Width);
mfxU16 height = (mfxU16)MSDK_ALIGN32(request.Info.Height);

// NV12 is a YUV 4:2:0 format, 12 bits per pixel
mfxU8 bitsPerPixel = 12;
mfxU32 surfaceSize = width * height * bitsPerPixel / 8;

// Allocate memory for all surfaces in one block
mfxU8* surfaceBuffers = new mfxU8[surfaceSize * numSurfaces];

// Describe each surface for the decoder
mfxFrameSurface1** pmfxSurfaces = new mfxFrameSurface1*[numSurfaces];
for (int i = 0; i < numSurfaces; i++)
{
    pmfxSurfaces[i] = new mfxFrameSurface1;
    memset(pmfxSurfaces[i], 0, sizeof(mfxFrameSurface1));
    memcpy(&(pmfxSurfaces[i]->Info), &(mfx_video_params.mfx.FrameInfo), sizeof(mfxFrameInfo));
    pmfxSurfaces[i]->Data.Y = &surfaceBuffers[surfaceSize * i];
    pmfxSurfaces[i]->Data.U = pmfxSurfaces[i]->Data.Y + width * height;
    pmfxSurfaces[i]->Data.V = pmfxSurfaces[i]->Data.U + 1;
    pmfxSurfaces[i]->Data.Pitch = width;
}

sts = mfx_dec->Init(&mfx_video_params);
if (sts < MFX_ERR_NONE)
    return 0; // :(

mfxSyncPoint syncp;
mfxFrameSurface1* pmfxOutSurface = NULL;
mfxU32 nFrame = 0;
int nIndex = 0;

// Main decoding loop
while (reader->IsActive() &&
       (MFX_ERR_NONE <= sts || MFX_ERR_MORE_DATA == sts || MFX_ERR_MORE_SURFACE == sts))
{
    // Wait if the device is busy
    if (MFX_WRN_DEVICE_BUSY == sts)
        Sleep(1);

    // The decoder needs more input: read it from the network
    if (MFX_ERR_MORE_DATA == sts)
        reader->ReadToBitstream(&mfx_bitstream);

    // The decoder needs a free surface (GetFreeSurfaceIndex comes from the tutorial utilities)
    if (MFX_ERR_MORE_SURFACE == sts || MFX_ERR_NONE == sts)
    {
        nIndex = GetFreeSurfaceIndex(pmfxSurfaces, numSurfaces);
        if (nIndex == MFX_ERR_NOT_FOUND)
            break;
    }

    // Decode a frame; the bitstream may contain several NAL packets
    sts = mfx_dec->DecodeFrameAsync(&mfx_bitstream, pmfxSurfaces[nIndex], &pmfxOutSurface, &syncp);

    // Ignore warnings if output is available
    if (MFX_ERR_NONE < sts && syncp)
        sts = MFX_ERR_NONE;

    // Wait until decoding of the frame completes
    if (MFX_ERR_NONE == sts)
        sts = session.SyncOperation(syncp, 60000);

    if (MFX_ERR_NONE == sts)
    {
        // The frame is ready!
        mfxFrameInfo* pInfo = &pmfxOutSurface->Info;
        mfxFrameData* pData = &pmfxOutSurface->Data;
        // The image is in NV12 format:
        //   Y plane:  pData->Y
        //   UV plane: pData->UV, half the size of the Y plane
    }
}
```

After implementing video decoding with the Media SDK, we ran into the same situation: the video latency was 1.5 seconds. In desperation, we turned to the forums and found tips that were supposed to reduce decoding latency.

The Media SDK h264 decoder accumulates frames before it outputs a decoded image. We found that setting the “end of stream” flag in the data structure passed to the decoder (mfxBitstream) reduces the latency to about 0.5 seconds:

```cpp
mfx_bitstream.DataFlag = MFX_BITSTREAM_EOS;
```

Further experiments showed that the decoder keeps 5 frames in its queue even when the end-of-stream flag is set. As a result, we had to add code that simulated the “final end of the stream” and forced the decoder to output the frames from this queue:

```cpp
if (no_frames_in_queue)
    // The queue is empty: feed the decoder new data
    sts = mfx_dec->DecodeFrameAsync(&mfx_bitstream, pmfxSurfaces[nIndex], &pmfxOutSurface, &syncp);
else
    // A null bitstream tells the decoder to drain the frames it is holding
    sts = mfx_dec->DecodeFrameAsync(NULL, pmfxSurfaces[nIndex], &pmfxOutSurface, &syncp);

if (sts == MFX_ERR_MORE_DATA)
{
    no_frames_in_queue = true;
}
```

After that, the latency dropped to an acceptable level, that is, it became imperceptible to the eye.

Conclusions

When we started on real-time video streaming, we very much hoped to use existing solutions and avoid reinventing the wheel.

Our main hopes rested on such video giants as FFmpeg and VLC. Although they appear able to do what we need (transmit video without transcoding), we could not get rid of the delay they introduce.

Having stumbled upon the mjpg-streamer project almost by accident, we were charmed by its simplicity and its reliable streaming of video in MJPG format. If you ever need to transmit this particular format, we strongly recommend it. It is no coincidence that we built our solution on top of it.

As a result, we ended up with a fairly lightweight solution for streaming video without noticeable delay that is undemanding of resources on both the transmitting and the receiving side. The Intel Media SDK helped us a great deal with decoding, even though we had to apply a bit of force to make it hand over frames without buffering.

Source: https://habr.com/ru/post/343362/
