3 Unusual Linux Networking Cases

This article presents three small stories that occurred in our practice: at different times and in different projects. What unites them is that they are related to the Linux network subsystem (Reverse Path Filter, TIME_WAIT, multicast) and illustrate how deeply one often has to analyze an incident that one encounters for the first time to solve the problem ... and, of course, what joy one can experience as a result received solution.

First story: about Reverse Path Filter

A client with a large corporate network decided to pass through some of its Internet traffic through a single corporate firewall located behind the central unit’s router. Using iproute2, traffic going to the Internet was sent to the central unit, where several routing tables were already configured. By adding an additional routing table and setting up redirection routes to the firewall in it, we enabled traffic redirection from other branches and ... traffic did not go.

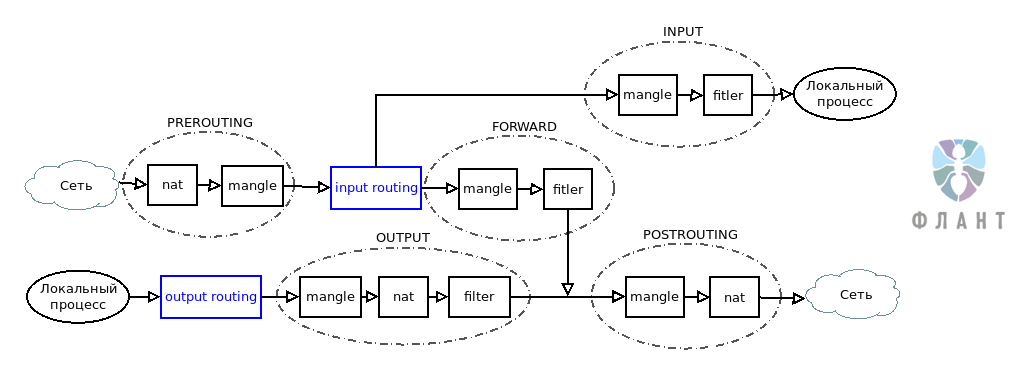

Traffic flow through Netfilter tables and chains

')

Began to find out why configured routing does not work. At the incoming tunnel interface of the router traffic was detected:

$ sudo tcpdump -ni tap0 -p icmp and host 192.168.7.3 tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on tap0, link-type EN10MB (Ethernet), capture size 262144 bytes 22:41:27.088531 IP 192.168.7.3 > 8.8.8.8: ICMP echo request, id 46899, seq 40, length 64 22:41:28.088853 IP 192.168.7.3 > 8.8.8.8: ICMP echo request, id 46899, seq 41, length 64 22:41:29.091044 IP 192.168.7.3 > 8.8.8.8: ICMP echo request, id 46899, seq 42, length 64 However, there were no packets on the outgoing interface. It became clear that they were filtered on the router, however, there were no explicit rules for dropping packets in iptables. Therefore, we began to consistently, as traffic progressed, set rules that drop our packets and, after installation, look at the counters

$ sudo iptables -A PREROUTING -t nat -s 192.168.7.3 -d 8.8.8.8 -j DROP $ sudo sudo iptables -vL -t nat | grep 192.168.7.3 45 2744 DROP all -- any any 192.168.7.3 8.8.8.8 Checked in succession nat PREROUTING, mangle PREROUTING. In mangle FORWARD, the counter has not increased, which means that packets are lost during the routing stage. After checking the routes and rules again, they began to study what exactly is happening at this stage.

In the Linux kernel, for each interface, the Reverse Path Filtering (

rp_filter ) parameter is enabled by default. In the case when you use complex, asymmetric routing and the response packet will not be returned to the source by the route that the request packet came from, Linux will filter out such traffic. To solve this problem, you must disable Reverse Path Filtering for all your network devices that participate in routing. Below is a simple and fast way to do this for all your network devices: #!/bin/bash for DEV in /proc/sys/net/ipv4/conf/*/rp_filter do echo 0 > $DEV done Returning to the case, we solved the problem by disabling the Reverse Path Filter for the tap0 interface and now consider switching off

rp_filter for all devices involved in asymmetric routing as a good practice on routers.The second story: about TIME_WAIT

An unusual problem arose in the high-load web project we served: from 1 to 3 percent of users could not access the site. When studying the problem, we found out that inaccessibility did not correlate in any way with the loading of any system resources (disk, memory, network, etc.), did not depend on the location of the user or his telecom operator. The only thing that united all users who had problems was that they went online via NAT.

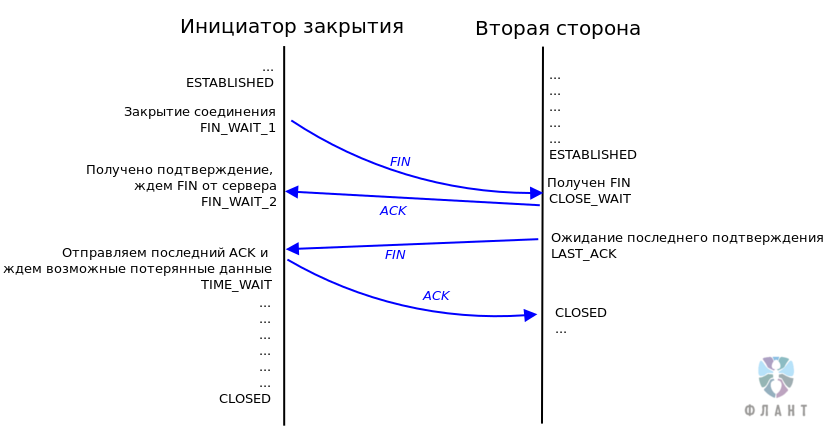

The TCP

TIME_WAIT state allows the system to verify that data transfer has indeed been stopped on this TCP connection and that no data has been lost. But the possible number of simultaneously open sockets is a finite value, which means it is a resource that is spent, including on the TIME_WAIT state, in which the client is not serviced.

TCP closure mechanism

The answer, as expected, was found in the kernel documentation. The natural desire of a highload system administrator is to reduce idle resource consumption. A quick googling will show us a lot of tips that encourage you to enable the Linux kernel options

tcp_tw_reuse and tcp_tw_recycle . But with tcp_tw_recycle not everything is as simple as it seemed.We will deal with these parameters in more detail:

- The

tcp_tw_reuseparametertcp_tw_reuseuseful to include in the fight for resources held byTIME_WAIT. A TCP connection is identified by itsIP1_Port1_IP2_Port2parameterIP1_Port1_IP2_Port2. When the socket enters theTIME_WAITstate, withtcp_tw_reusedisabledtcp_tw_reusea new outgoing connection will be established with the selection of a new localIP1_Port1. Old values can only be used when the TCP connection is in theCLOSEDstate. If your server creates a lot of outgoing connections, settcp_tw_reuse = 1and your system will be able to use theTIME_WAITports in case of running out of free ones. To install, type in/etc/sysctl.conf:net.ipv4.tcp_tw_reuse = 1

And execute the command:sudo sysctl -p - The

tcp_tw_recycleparametertcp_tw_recycledesigned to reduce the time the socket is in theTIME_WAITstate. By default, this time is 2 * MSL (Maximum Segment Lifetime), and MSL, according to RFC 793 , is recommended to be set to 2 minutes. Turning ontcp_tw_recycle, you tell the Linux kernel to use not a constant as MSL, but to calculate it based on the features of your network. As a rule (if you do not have dial-up), turning ontcp_tw_recyclesignificantly reduces the time the connection is in theTIME_WAITstate. But there is a pitfall: going to theTIME_WAITstate, your network stack withtcp_tw_recycleenabled will reject all packets from the IP of the second party that participated in the connection. This can cause a number of accessibility problems during operation due to NAT, which we encountered in the case above. The problem is extremely difficult to diagnose and does not have a simple reproducing / reproducibility procedure, so we recommend extreme caution when usingtcp_tw_recycle. If you decide to enable it, add one line to/etc/sysctl.confand (do not forget to runsysctl -p):net.ipv4.tcp_tw_recycle = 1

Story Three: OSPF and multicast traffic

The serviced corporate network was built on the basis of tinc VPN and the adjacent rays of IPSec and OVPN connections. We used OSPF to route this whole L3 address space. At one of the nodes where a large number of channels aggregated, we found that a small part of the networks, despite the correct configuration of OSPF, periodically disappears from the route table on this node.

Simplified device VPN network used in the project described

First of all, they checked connection with routers of problem networks. Communication was stable:

Router 40 $ sudo ping 172.24.0.1 -c 1000 -f PING 172.24.0.1 (172.24.0.1) 56(84) bytes of data. --- 172.24.0.1 ping statistics --- 1000 packets transmitted, 1000 received, 0% packet loss, time 3755ms rtt min/avg/max/mdev = 2.443/3.723/15.396/1.470 ms, pipe 2, ipg/ewma 3.758/3.488 ms Having diagnosed OSPF, we were even more surprised. On the node where problems were observed, the routers of problem networks were absent in the list of neighbors. On the other side, the problematic router in the list of neighbors was present:

Router 40 # vtysh -c 'show ip ospf neighbor' | grep 172.24.0.1 Router 1 # vtysh -c 'show ip ospf neighbor' | grep 172.24.0.40 255.0.77.148 10 Init 14.285s 172.24.0.40 tap0:172.24.0.1 0 0 0 The next step eliminated possible problems with the delivery of ospf hello from 172.24.0.1. Requests from him came, but the answers did not leave:

Router 40 $ sudo tcpdump -ni tap0 proto ospf tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on tap0, link-type EN10MB (Ethernet), capture size 262144 bytes 09:34:28.004159 IP 172.24.0.1 > 224.0.0.5: OSPFv2, Hello, length 132 09:34:48.446522 IP 172.24.0.1 > 224.0.0.5: OSPFv2, Hello, length 132 No restrictions were installed in iptables - they found out that the packet was dropped after all the tables passed through Netfilter. Again we went deep into reading the documentation, where the kernel parameter

igmp_max_memberships was found, which limits the number of multicast connections for one socket. By default, this number is 20. We, for a round number, increased it to 42 - OSPF work normalized: Router 40 # echo 'net.ipv4.igmp_max_memberships=42' >> /etc/sysctl.conf Router 40 # sysctl -p Router 40 # vtysh -c 'show ip ospf neighbor' | grep 172.24.0.1 255.0.77.1 0 Full/DROther 1.719s 172.24.0.1 tap0:172.24.0.40 0 0 0 Conclusion

No matter how difficult the problem, it is always solvable and often - through the study of documentation. I would be glad to see in the comments a description of your experience in finding solutions to complex and unusual problems.

PS

Read also in our blog:

Source: https://habr.com/ru/post/343348/

All Articles