Biomes painter: fill the world with content

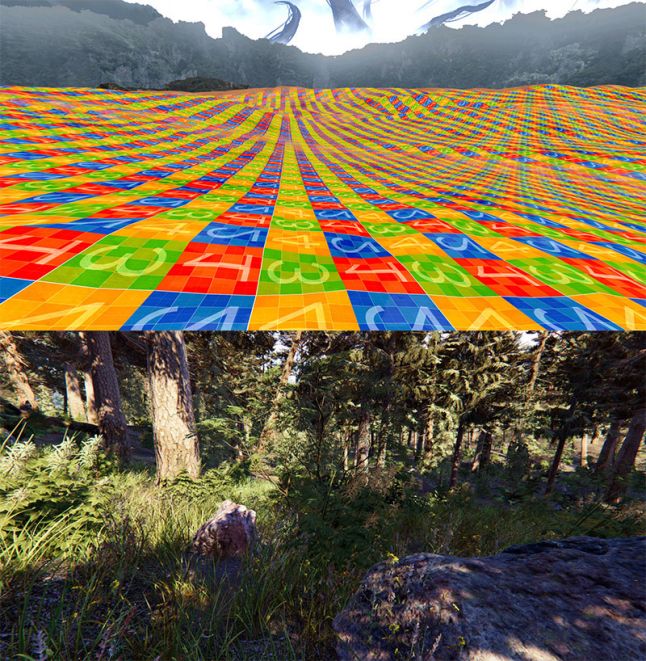

How do you turn the height map in the top image into the forest in the bottom one?

Open world games are steadily gaining popularity and regularly top the bestseller lists. Each new game raises the bar for the size and complexity of its world. Just by watching the trailers of recent open world games, it is clear that their goal is to convey a sense of immense scale.

Building such worlds raises a big question for developers: how do you fill such vast spaces efficiently? Nobody wants to place every tree by hand, especially on a small team. After all, game development is always about smart trade-offs.


If you look at a typical open world game, you can see the Pareto principle at work: 20% of the content is the player's main path and 80% is background. The main path has to be high quality and artistically crafted, because that is where players spend most of their time. Backgrounds, such as the vast forests or deserts around major cities, do not need the same attention to detail. This 80% is a great target for smart content placement tools that trade a little quality and polish for speed and ease of content creation.

After shipping our latest game, “Shadow Warrior 2”, we had a chance to experiment a bit with new ideas while our design team was busy preparing the next game. We decided to spend that time prototyping an improved placement tool, working closely with our level artists. We are very grateful to our employer, Flying Wild Hog, for allowing us to write about it this early, and to everyone who took part in building this prototype.

We already knew how to generate a simple height map in World Machine. The question was how to quickly turn that map into a beautiful scene without working our level artists to exhaustion.

Analysis of possible solutions

There are several approaches to this problem, including procedural placement, physics-based placement and color-map-based placement.

Procedural placement generates content from a set of rules and a random seed. Such methods can be divided into those that simulate a physical process (teleological) and those that simply try to imitate the end result (ontogenetic). An example of a teleological method is the forest generation in The Witcher 3, driven by water accumulation and sunlight distribution. Another example is the procedural foliage tool in UE4, which simulates the growth of successive generations of vegetation. Examples of ontogenetic methods include Houdini-based procedural generation, where technical artists write the rules themselves, as in "Ghost Recon Wildlands".

Physics-based placement is an interesting way to place objects. It relies on a physics simulation: you drop a number of objects from a height and wait until they scatter across the level. This approach is implemented, for example, in the Object Placement Tool for Unity.

Color-map-based placement relies on hand-painted color maps, which are then converted into assets according to a given set of rules. A recent example of this approach is the tooling behind Horizon Zero Dawn, which was a strong source of inspiration for us.

Starting point

We are a fairly small studio with limited resources, so we are always looking for ways to speed up our work, including through better entity placement tools.

Our first placement tool was physics-based and was built for our first game, Hard Reset (2011). The game was set in dark cyberpunk cities, so we created a tool for quickly scattering all kinds of debris. We could simply hang objects in the air and run a physics simulation. Once everything had fallen to the ground and stopped moving, we just saved the result if we liked it. Using this tool was a real pleasure, but in practice it turned out to be of rather limited use. The results were hard to control, and re-running the simulation was often slower than placing objects by hand, so in the end we dropped the idea.

We took a close look at procedural solutions but never managed to adopt them, mainly because our level artists had not mastered Houdini and similar packages.

In our second game, Shadow Warrior (2013), we had outdoor areas with various kinds of vegetation, so we created a painting-based placement tool. Our level workflow was built around base meshes created in 3ds Max. Level artists painted the vertices of these level meshes, and on level import this vertex painting was converted into a set of spawn points.

Shadow Warrior painted level mesh: grass and bush density is stored in vertex colors

In our game editor, level artists could select any painted area and configure which entity types spawned there, with a given density and properties (for example, aligning to the mesh or color variation). Then, at runtime, we spawned these entities according to the rules set by the artists and the game's runtime settings (for example, LOD settings). The level artists liked this tool a lot and often asked us to extend it further.

Requirements

We began by writing down the characteristics expected from the new system:

- Rapid prototyping. We wanted to prototype worlds quickly from high-level input provided by level artists, so that they could define the look of the world in broad strokes. At the very least, a level artist should be able to mark where the forest is, where the desert is, and so on: for example, paint a 2D map of the world and then convert it into the in-game world. It is also very important to get a prototype of the world running in the game quickly, so that the whole development team can start working.

- Simple and safe iteration. We need a way to make safe last-minute changes that do not require rebuilding the whole world or locking areas (converting placement tool data into manually placed entities). Locking an area allows arbitrary changes to entity placement, but it defeats the purpose of the placement tool: after locking, you can no longer tweak the placement rules without destroying the manual changes. In other words, lowering a parameter such as tree density should remove only a few tree instances, not rebuild the whole forest from scratch.

- Extensibility. For small teams, the ability to add new features gradually is important. We cannot spend the first year of development planning, the second creating assets, the third placing them, and then ship the game. We need to be able to work on assets throughout production and add them to the existing world without trouble. For example, we need a simple way to replace one tree type with two tree types without changing where the trees are.

- Seamless integration with hand-placed content. Obviously, we need a way to place a military base inside a generated forest, or to lay a road through that forest by hand, without worrying about generated trees sticking out of the buildings or the paved road.

We were willing to sacrifice some quality and manual control in exchange for more efficient content placement.

Biomes painter

Watching how our level artists used the previous painting tool, we noticed that they were doing the same work twice. For example, they would first place grass instances and later paint the ground under that grass with a matching grass texture. We decided to generate both the terrain texturing and the entity placement with a single system. This not only sped up the work but also produced a coherent world in which every asset sits on a matching terrain texture.

We wanted to be able to reuse biome color maps to speed up prototyping. To achieve this, we based our system on two color maps: biome type (for example, forest, desert, water) and biome weight (how lush the vegetation is). We also added some rules for painting the weight map: low values should mean almost empty terrain, and high values should mean lush vegetation or many obstacles.
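As a minimal sketch of the data this implies, assuming each map is stored as 8 bits per texel at one texel per square meter (the resolution is our assumption, chosen to match the "two bytes per square meter" mentioned later in the LOD section), the two maps could be held like this. The class `BiomeMaps` and the biome IDs are hypothetical, for illustration only.

```python
# Hypothetical biome IDs; the article only names forest, desert and water as examples.
FOREST, DESERT, WATER = 0, 1, 2


class BiomeMaps:
    """Two 8-bit maps: biome type and biome weight (vegetation lushness).

    Assumes one texel per square meter, i.e. two bytes per square meter in total.
    """

    def __init__(self, width: int, height: int) -> None:
        self.width = width
        self.height = height
        self.biome_type = bytearray(width * height)    # biome ID per texel
        self.biome_weight = bytearray(width * height)  # 0 = almost empty, 255 = lush

    def _index(self, x: int, y: int) -> int:
        # Clamp to the map edges so queries just outside the map stay valid.
        x = min(max(x, 0), self.width - 1)
        y = min(max(y, 0), self.height - 1)
        return y * self.width + x

    def paint(self, x: int, y: int, biome: int, weight: int) -> None:
        i = self._index(x, y)
        self.biome_type[i] = biome
        self.biome_weight[i] = weight

    def sample(self, x: int, y: int) -> tuple:
        """Return (biome ID, weight normalized to [0, 1])."""
        i = self._index(x, y)
        return self.biome_type[i], self.biome_weight[i] / 255.0


maps = BiomeMaps(256, 256)
maps.paint(10, 20, FOREST, 255)  # dense forest
maps.paint(11, 20, DESERT, 30)   # sparse desert
```

Keeping the weight as a single byte is what lets the same painted map drive both texturing and entity density.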

With the previous painting tool, whenever a new set of prefabs was finished, we often had to go back to old areas and repaint them. To simplify iteration, we decided to build the system around richer rules, namely a list of spawn rules evaluated in order of importance, from the most important to the least important. This let us add new prefabs to existing areas without a headache.

In addition, to support iteration, we needed to minimize the impact of changing the rules. To solve this, we base everything on precomputed spawn points and precomputed random numbers. For example, if you need to tweak where trees spawn and you increase their density, new instances appear, but most of the forest stays unchanged.
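To illustrate why precomputed random numbers keep iteration stable, here is a small sketch under our own assumptions: every spawn point stores a fixed random threshold in [0, 1), and a point gets an instance only when the rule's density exceeds that threshold. The helper names `make_spawn_points` and `spawned` are hypothetical.

```python
import random


def make_spawn_points(count: int, tile_size: float = 64.0, seed: int = 1234):
    """Precompute spawn points once, each with its own fixed random threshold in [0, 1)."""
    rng = random.Random(seed)
    return [(rng.uniform(0.0, tile_size), rng.uniform(0.0, tile_size), rng.random())
            for _ in range(count)]


def spawned(points, density: float) -> set:
    """Indices of points that get an instance: the rule density must exceed the threshold."""
    return {i for i, (_, _, threshold) in enumerate(points) if density > threshold}


points = make_spawn_points(1000)
forest_before = spawned(points, 0.30)
forest_after = spawned(points, 0.35)  # the artist nudges tree density up
# Every tree from the sparser forest is still there; only new ones were added.
print(len(forest_before), len(forest_after), forest_before <= forest_after)
```

Because the thresholds never change, raising the density can only add instances and lowering it can only remove some, which is the property the paragraph above relies on.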

Finally, after the first tests, we decided that we would still need some procedural generation to break up repeating patterns. We solved this by placing special objects (for example, a fallen tree in a forest) with a very low density, that is, a low spawn probability.

Biome rules

Now that we have a biome type map and a biome weight map, we need rules that describe how to turn these maps into entities and terrain textures.

The rules for terrain textures are quite simple:

- Biome weight range with a specified falloff

- Terrain height range with a specified falloff

- Terrain slope range with a specified falloff

- Density

Each rule is assigned a specific terrain texture, and the rules are applied bottom-up. First, we fill the entire biome with a base texture. Then we evaluate the subsequent rules and, wherever a rule's conditions are met, assign its texture, replacing the previous texture at that point.
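A minimal sketch of how such texture rules might be evaluated for a single terrain point. The linear falloff, treating density as a multiplier, and the 0.5 pass threshold are our assumptions, since the article does not say how the ranges are combined; all names, including the texture names, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Range:
    """Interval [lo, hi] whose weight fades linearly to zero over `falloff` outside it."""
    lo: float
    hi: float
    falloff: float = 0.0

    def weight(self, value: float) -> float:
        if self.lo <= value <= self.hi:
            return 1.0
        if self.falloff <= 0.0:
            return 0.0
        distance = self.lo - value if value < self.lo else value - self.hi
        return max(0.0, 1.0 - distance / self.falloff)


@dataclass
class TextureRule:
    texture: str
    biome_weight: Range  # biome weight in [0, 1]
    height: Range        # terrain height in meters
    slope: Range         # terrain slope in degrees
    density: float = 1.0

    def score(self, weight: float, height: float, slope: float) -> float:
        return (self.biome_weight.weight(weight) * self.height.weight(height)
                * self.slope.weight(slope) * self.density)


def pick_texture(base: str, rules, weight, height, slope, threshold=0.5) -> str:
    """Fill with the base texture, then let each later rule overwrite it where it passes."""
    texture = base
    for rule in rules:
        if rule.score(weight, height, slope) > threshold:
            texture = rule.texture
    return texture


forest_rules = [
    TextureRule("forest_grass", Range(0.5, 1.0, 0.2), Range(0.0, 500.0, 50.0), Range(0.0, 25.0, 10.0)),
    TextureRule("forest_rock", Range(0.0, 1.0), Range(0.0, 2000.0), Range(35.0, 90.0, 10.0)),
]
print(pick_texture("forest_dirt", forest_rules, weight=0.8, height=100.0, slope=5.0))  # forest_grass
```

Because later rules overwrite earlier ones, appending a new rule only changes the points where that rule passes. Each point is independent, which is also why this step maps well onto a GPU shader, as the Implementation section notes.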

Entity rules are a bit more complex:

- All of the texture rules above

- Alignment to the terrain or to the world "up" axis: for example, trees are aligned to world up because they usually grow upward, while rocks are aligned to the terrain

- Random angular deviation from the alignment axis: breaks up monotony, for example for growing bamboo

- Random rotation around the alignment axis

- Random scale range

- Offset along the alignment axis

- Influence (entity collision radius)
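To make the alignment, tilt, rotation, scale and offset parameters concrete, here is a sketch of computing one entity's placement from such a rule. The math (tilting the alignment axis toward a random perpendicular direction) is our own choice, not necessarily what the engine does, and `EntityRule` and `place` are hypothetical names. The influence radius is carried along for collision tests and is not used here.

```python
import math
import random
from dataclasses import dataclass

WORLD_UP = (0.0, 0.0, 1.0)


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(v, s):
    return tuple(x * s for x in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(v):
    return scale(v, 1.0 / math.sqrt(sum(x * x for x in v)))


@dataclass
class EntityRule:
    align_to_terrain: bool  # False: world up (trees), True: terrain normal (rocks)
    max_tilt_deg: float     # random angular deviation from the alignment axis
    scale_range: tuple      # (min, max) uniform scale
    axis_offset: float      # offset along the alignment axis, e.g. to sink rocks
    radius: float           # influence, i.e. collision radius


def place(rule, position, terrain_normal, rng):
    axis = normalize(terrain_normal) if rule.align_to_terrain else WORLD_UP
    # Build two unit vectors perpendicular to the axis.
    helper = (1.0, 0.0, 0.0) if abs(axis[0]) < 0.9 else (0.0, 1.0, 0.0)
    side1 = normalize(cross(axis, helper))
    side2 = cross(axis, side1)
    # Tilt the axis by a random angle toward a random perpendicular direction.
    azimuth = rng.uniform(0.0, 2.0 * math.pi)
    side = add(scale(side1, math.cos(azimuth)), scale(side2, math.sin(azimuth)))
    tilt = math.radians(rng.uniform(0.0, rule.max_tilt_deg))
    axis = add(scale(axis, math.cos(tilt)), scale(side, math.sin(tilt)))
    return {
        "position": add(position, scale(axis, rule.axis_offset)),
        "up_axis": axis,
        "spin_deg": rng.uniform(0.0, 360.0),  # random rotation around the up axis
        "scale": rng.uniform(*rule.scale_range),
    }


rng = random.Random(42)
bamboo = EntityRule(False, 8.0, (0.9, 1.3), 0.0, 0.4)
rock = EntityRule(True, 0.0, (0.5, 2.0), -0.2, 1.0)
print(place(bamboo, (10.0, 5.0, 3.0), (0.3, 0.0, 0.95), rng))
print(place(rock, (12.0, 5.0, 3.0), (0.3, 0.0, 0.95), rng))
```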

As with texture rules, each entity rule is assigned a specific prefab. Entity rules are applied top-down: first we spawn large entities such as rocks or trees, then, where there is still room, bushes, grass and so on. In addition, each entity checks for collisions between itself and the entities already placed.
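The ordering and collision checks can be sketched as follows, as an illustration only. This version uses a simple pairwise circle-overlap test for clarity; the Implementation section describes the actual approach as a 2D collision bitmap. `Candidate` and `place_in_order` are hypothetical names, and in practice the candidate positions would come from the precomputed spawn points.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    prefab: str
    x: float
    y: float
    radius: float  # the rule's influence (collision) radius


def place_in_order(candidates_per_rule):
    """Rules are ordered from most to least important, e.g. trees, then bushes, then grass.

    A candidate is skipped if its circle overlaps any entity placed before it.
    """
    placed = []
    for candidates in candidates_per_rule:
        for c in candidates:
            if all(math.hypot(c.x - p.x, c.y - p.y) >= c.radius + p.radius for p in placed):
                placed.append(c)
    return placed


trees = [Candidate("tree", 0.0, 0.0, 2.0)]
bushes = [Candidate("bush", 1.0, 0.0, 0.5), Candidate("bush", 5.0, 0.0, 0.5)]
grass = [Candidate("grass", 5.4, 0.0, 0.1), Candidate("grass", 8.0, 0.0, 0.1)]
for e in place_in_order([trees, bushes, grass]):
    print(e.prefab, e.x)
# tree 0.0
# bush 5.0
# grass 8.0
```

Placing large entities first means they are never pushed out by grass; small entities simply fill whatever space remains.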

With such rules you can build a sample biome, for example this forest:

Scale assignment example for forest biome

Other potentially interesting rules include checking the distance to other entities, for example, spawning small trees around large ones. We decided not to implement them yet, to keep procedural generation to a minimum.

Biome LOD

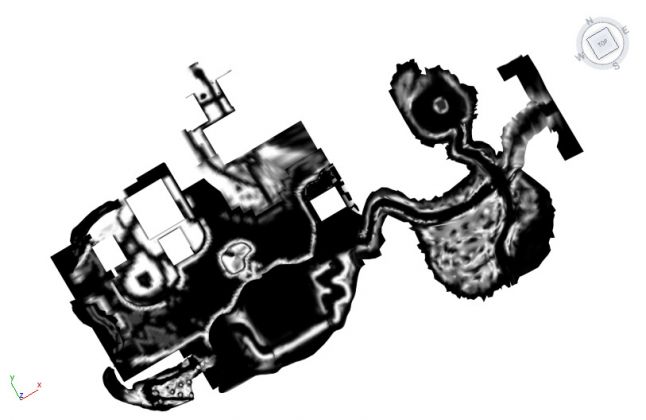

This is where the system really shines. Because all entities are generated from color maps, LOD and streaming become much simpler. We spawn entities at runtime, so from the streaming system's point of view we only need to load two bytes per square meter instead of all the entity placement data.
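To put two bytes per square meter in perspective, here is a quick worked calculation. It assumes one byte of biome type plus one byte of biome weight per square meter (our reading of the two color maps), the 64 m x 64 m tile size mentioned in the Implementation section, and, purely as an example, an 8 km x 8 km world:

$$
\begin{aligned}
2~\tfrac{\text{B}}{\text{m}^2} \times (64~\text{m})^2 &= 8192~\text{B} = 8~\text{KiB per tile} \\
2~\tfrac{\text{B}}{\text{m}^2} \times 10^6~\tfrac{\text{m}^2}{\text{km}^2} &= 2 \times 10^6~\tfrac{\text{B}}{\text{km}^2} = 2~\tfrac{\text{MB}}{\text{km}^2} \\
2~\tfrac{\text{MB}}{\text{km}^2} \times (8~\text{km})^2 &= 128~\text{MB}
\end{aligned}
$$

In this model the streamed size does not depend on how many trees, rocks or grass clumps end up spawned.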

For different graphics settings on PC, we simply control the density of small objects such as debris or grass. World LOD is driven by the entity spawn rules. We spawn everything near the player. Beyond a certain distance we spawn only larger objects. Even farther out we spawn only the largest objects. Finally, beyond a certain distance from the camera, we spawn nothing at all. This helps not only rendering but also all the other CPU-side work, because we don't have to simulate or track changes to entities far away.
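A sketch of distance-based spawning by size class, plus a graphics preset that only scales the density of small objects. The three size classes, the distances and the preset factors are invented for illustration; the article does not give any numbers.

```python
# Hypothetical size classes and cut-off distances in meters; the article gives no numbers.
MAX_SPAWN_DISTANCE = {
    "small": 60.0,     # grass, debris
    "large": 250.0,    # bushes, rocks
    "largest": 800.0,  # big trees, cliffs
}
# Hypothetical PC graphics presets; they only thin out small objects.
SMALL_OBJECT_DENSITY = {"low": 0.25, "medium": 0.5, "high": 1.0}


def should_spawn(size_class: str, distance_to_camera: float) -> bool:
    """Near the player everything spawns, farther out only bigger classes, beyond 800 m nothing."""
    return distance_to_camera <= MAX_SPAWN_DISTANCE[size_class]


def effective_density(base_density: float, size_class: str, preset: str) -> float:
    # Only small objects are affected by the graphics preset.
    if size_class == "small":
        return base_density * SMALL_OBJECT_DENSITY[preset]
    return base_density


for d in (30.0, 150.0, 500.0, 1000.0):
    print(d, [c for c in MAX_SPAWN_DISTANCE if should_spawn(c, d)])
```

Combined with fixed per-point thresholds, scaling the density down for a lower preset removes a subset of the small objects rather than reshuffling them.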

Biome Integration

We wanted to integrate our solution with hand-placed entities and other tools. For spline tools, such as the river or road tools, we can compute the distance to the spline analytically. Based on this distance, we automatically remove all biome painter entities from roads and rivers. Moreover, we reduce the biome weight around the spline. So if we lay a road through a forest, the vegetation next to the road becomes less lush.

An example of how the river tool automatically interacts with biomes.
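The road and river integration described above can be sketched like this, assuming the spline has been sampled into a polyline. The article computes the distance to the spline analytically; the polyline is a simplification on our part. `apply_spline`, the half width and the linear falloff are hypothetical.

```python
import math


def distance_to_segment(px, py, ax, ay, bx, by):
    abx, aby = bx - ax, by - ay
    length_sq = abx * abx + aby * aby
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project the point onto the segment and clamp to its end points.
    t = max(0.0, min(1.0, ((px - ax) * abx + (py - ay) * aby) / length_sq))
    return math.hypot(px - (ax + t * abx), py - (ay + t * aby))


def distance_to_polyline(px, py, points):
    return min(distance_to_segment(px, py, *points[i], *points[i + 1])
               for i in range(len(points) - 1))


def apply_spline(px, py, biome_weight, spline, half_width, falloff):
    """Return (adjusted biome weight, whether biome entities may spawn here)."""
    d = distance_to_polyline(px, py, spline)
    if d <= half_width:
        return 0.0, False  # on the road or river itself
    # Weight ramps from 0 at the edge back to the painted value over `falloff` meters.
    return biome_weight * min(1.0, (d - half_width) / falloff), True


road = [(0.0, 0.0), (10.0, 0.0), (20.0, 5.0)]
print(apply_spline(5.0, 1.0, 0.9, road, half_width=2.0, falloff=4.0))   # (0.0, False)
print(apply_spline(5.0, 4.0, 0.9, road, half_width=2.0, falloff=4.0))   # thinned vegetation
print(apply_spline(5.0, 10.0, 0.9, road, half_width=2.0, falloff=4.0))  # (0.9, True)
```

Because only the evaluated weight changes, the painted biome map stays untouched, so moving the road later restores the original vegetation along its old path.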

We handle hand-placed entities the same way. Our prefabs can contain special biome blockers. Biome blockers are simple shapes (for example, spheres or convex shapes) that remove biome entities and reduce the biome weight around them with a specified falloff. This not only prevents trees from appearing inside hand-placed houses, but also lets us move buildings freely without repainting color maps by hand, because everything around a building adapts to its new position without destroying the painted biome data.
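A minimal sketch of sphere-shaped biome blockers attached to a prefab, assuming a linear weight falloff outside the sphere (convex shapes are left out). The names are hypothetical. Because the blocker offsets are stored relative to the prefab, moving the house changes which spawn points are blocked without touching the painted maps.

```python
import math
from dataclasses import dataclass


@dataclass
class SphereBlocker:
    offset: tuple   # sphere center relative to the owning prefab
    radius: float   # biome entities inside the sphere are removed
    falloff: float  # outside, biome weight ramps back up over this distance


def apply_blockers(point, biome_weight, prefab_position, blockers):
    """Return (adjusted biome weight, whether biome entities may spawn at `point`)."""
    weight = biome_weight
    for b in blockers:
        # Blockers live in prefab space, so moving the building moves them too.
        center = tuple(p + o for p, o in zip(prefab_position, b.offset))
        d = math.dist(point, center)
        if d <= b.radius:
            return 0.0, False
        weight *= min(1.0, (d - b.radius) / b.falloff)
    return weight, True


house = [SphereBlocker((0.0, 0.0, 0.0), radius=5.0, falloff=5.0)]
tree_spot = (3.0, 0.0, 0.0)
print(apply_blockers(tree_spot, 0.8, (0.0, 0.0, 0.0), house))   # (0.0, False): inside the house
print(apply_blockers(tree_spot, 0.8, (20.0, 0.0, 0.0), house))  # (0.8, True): house moved away
```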

Workflow

Our workflow starts in World Machine, where we generate an initial height map. Next, we iterate on rough biome color maps in Substance Designer. We added support for automatic re-import of biome maps, so when an artist saves them in Substance Designer, the new biome map is imported and the changes are immediately visible in the game editor.

This lets us quickly create a world filled with assets, terrain textures and so on. Obviously, it won't match the quality of the finished game, but at this stage the game is already playable, and the gameplay team can start working on player movement speed, vehicles and combat.

Finally, once we are happy with the rough layout of the world, we start placing assets by hand and making minor changes to the biome color maps with brushes in the game editor.

Implementation

Entity placement boils down to looping over precomputed spawn points, fetching world data at each point (for example, height or slope), computing a density from the spawn rules, and comparing that density with the point's precomputed minimum density to decide whether to spawn an entity there. Entities are prefab instances, so we can spawn, for example, trees with triggers, sounds, particle effects (such as fireflies) and terrain decals.
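The loop described above might look roughly like this for one tile. This is a simplified sketch: the world sampler, the single density rule and the returned tuple are hypothetical stand-ins, and a real implementation would evaluate the full rule list, transforms and collisions.

```python
from dataclasses import dataclass

TILE_SIZE = 64.0  # meters; the Implementation section processes 64 m x 64 m tiles


@dataclass
class SpawnPoint:
    x: float
    y: float
    min_density: float  # precomputed per point: spawn only if rule density exceeds it


def spawn_tile(points, sample_world, rule_density, prefab):
    """Walk the precomputed points of one tile and decide, point by point, what spawns."""
    spawned = []
    for p in points:
        height, slope = sample_world(p.x, p.y)  # world data at the spawn point
        if rule_density(height, slope) > p.min_density:
            spawned.append((prefab, p.x, p.y, height))
    return spawned


# Hypothetical world: a gentle slope in the west half of the tile, a cliff in the east half.
def sample_world(x, y):
    return 0.5 * x, (5.0 if x < TILE_SIZE / 2 else 40.0)


# Hypothetical tree rule: full density on slopes under 30 degrees, none above.
def tree_density(height, slope):
    return 1.0 if slope < 30.0 else 0.0


points = [SpawnPoint(10.0, 10.0, 0.5), SpawnPoint(50.0, 10.0, 0.5), SpawnPoint(12.0, 40.0, 0.2)]
print(spawn_tile(points, sample_world, tree_density, "pine_prefab"))
```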

Precomputing a good set of spawn points turned out to be surprisingly difficult. We wanted to precompute a pattern with the following properties:

- As dense as possible

- A specified minimum distance between points

- Nearby entities should not line up along a straight line, because that would break the illusion of natural placement (for details, see the excellent series of posts about grass placement in The Witness)

- These properties must still hold as density decreases (points are dropped depending on the computed density)

- The pattern must tile seamlessly to cover a large world

We tried generating a Poisson-disk-like point set, with the additional requirement that nearby points must not line up along a straight line. In the end, we settled on a regular grid distorted by several sin and cos functions. We also assigned each point a weight using a simple dithering algorithm, so that the above properties are preserved when some points are dropped because of a lower spawn density.
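One way to realize these properties, following the description above, is sketched below: a regular grid distorted by a few sine and cosine terms, with each point assigned a threshold from a 4x4 Bayer matrix. Bayer ordered dithering is our stand-in for the article's unnamed "simple dithering algorithm", and the frequencies and amplitude are arbitrary choices. Using integer multiples of the base frequency keeps the distortion periodic, so the pattern tiles seamlessly, and capping the displacement guarantees a minimum distance of at least spacing minus 2√2 times the amplitude.

```python
import math

# Standard 4x4 ordered-dithering (Bayer) matrix, values 0..15.
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def make_pattern(cells=16, spacing=1.0, amplitude=0.15):
    """Distorted regular grid that tiles every cells * spacing meters.

    cells must be a multiple of 4 so the dither matrix tiles as well.
    """
    k = 2.0 * math.pi / (cells * spacing)
    points = []
    for j in range(cells):
        for i in range(cells):
            gx, gy = i * spacing, j * spacing
            # Integer multiples of k keep the distortion periodic, hence seamless tiling.
            dx = amplitude * (0.6 * math.sin(3 * k * gy) + 0.4 * math.cos(5 * k * gx + 1.0))
            dy = amplitude * (0.6 * math.cos(2 * k * gx) + 0.4 * math.sin(7 * k * gy + 2.0))
            threshold = (BAYER_4X4[j % 4][i % 4] + 0.5) / 16.0
            points.append((gx + dx, gy + dy, threshold))
    return points


def thin(points, density):
    """Keep points whose dither threshold is below the density; lower density keeps a subset."""
    return [(x, y) for x, y, t in points if t < density]


pattern = make_pattern()
print(len(thin(pattern, 1.0)), len(thin(pattern, 0.5)), len(thin(pattern, 0.25)))  # 256 128 64
```

Ordered dithering spreads the surviving points evenly across each 4x4 block, so a thinned pattern stays roughly uniform instead of clumping.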

When spawning entities on the terrain, it is important to use not the original height map but one that also includes the hand-placed terrain meshes. Fortunately, we already had this data: we build this combined height map with ray tracing in order to render large-scale terrain shadows.

To handle collisions between entities, we use a 2D collision bitmap: before spawning an entity, we rasterize its shape into it.
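A sketch of such a collision bitmap for one tile, under our own assumptions: 0.5 m cells, circular footprints, and conservative rasterization so that even tiny entities occupy at least the cell under them. The footprint is tested first and, if free, rasterized into the bitmap. `CollisionBitmap` and `try_place` are hypothetical names.

```python
import math


class CollisionBitmap:
    """2D occupancy grid over one tile; each cell is 0 (free) or 1 (taken)."""

    def __init__(self, size_m=64.0, cell_m=0.5):
        self.cell = cell_m
        self.cells = int(size_m / cell_m)
        self.bits = bytearray(self.cells * self.cells)

    def _disc_cells(self, x, y, radius):
        # Conservative rasterization: take every cell whose center lies within
        # radius + half a cell diagonal, so even tiny discs cover the cell under them.
        limit = radius + 0.5 * math.sqrt(2.0) * self.cell
        i0 = max(0, math.floor((x - limit) / self.cell))
        i1 = min(self.cells - 1, math.floor((x + limit) / self.cell))
        j0 = max(0, math.floor((y - limit) / self.cell))
        j1 = min(self.cells - 1, math.floor((y + limit) / self.cell))
        for j in range(j0, j1 + 1):
            for i in range(i0, i1 + 1):
                cx, cy = (i + 0.5) * self.cell, (j + 0.5) * self.cell
                if (cx - x) ** 2 + (cy - y) ** 2 <= limit * limit:
                    yield j * self.cells + i

    def try_place(self, x, y, radius):
        """Place an entity if its footprint is free, marking the footprint as taken."""
        cells = list(self._disc_cells(x, y, radius))
        if any(self.bits[c] for c in cells):
            return False
        for c in cells:
            self.bits[c] = 1
        return True


bitmap = CollisionBitmap()
print(bitmap.try_place(10.0, 10.0, 2.0))  # True: a tree
print(bitmap.try_place(11.0, 10.0, 0.5))  # False: a bush would overlap the tree
print(bitmap.try_place(20.0, 10.0, 1.0))  # True: far enough away
```

Conservative rasterization makes footprints slightly larger than the true circles, which errs on the side of fewer overlaps. Unlike pairwise tests, the cost of a query depends only on the footprint size, not on how many entities are already placed.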

Entity placement might seem like a natural fit for a GPU shader, but once we started implementing more complex rules, such as collisions between entities of different sizes, the code became very convoluted. In the end, we decided to simply spawn entities in a CPU function. The function takes a new 64 m x 64 m tile, spawns its entities and exits, and then we run it again for the next tile.

Generating terrain textures, on the other hand, works well on the GPU, because each texel can be computed in parallel with no dependencies. We simply run one shader per terrain clipmap level to generate its texture maps. The only drawback is that collision responses (bullets, footprints and so on) need this data in main memory on the CPU side, so we had to copy these texture maps from GPU memory to main memory.

Summary

Who knows what the future holds, but in interviews with game industry visionaries the Metaverse comes up often (for example, in this interview with Tim Sweeney). I have no idea what the Metaverse will look like, but it will certainly need smarter tools that can build and lay out huge amounts of content. I believe that such tools will one day become standard for level artists.

Source: https://habr.com/ru/post/343234/
