How does “transcribing” differ from “voice recognition”?

Voice assistants are everywhere now. They are built into phones, headphones and stand-alone speakers; they answer us when we call customer support and replace “press 1 to reach an operator” in voice menus. Our “lego for telephony”, Voximplant, has offered several voice recognition options for many years. In this post I’ll use a few lines of JavaScript to show the main options, how they differ from each other, and why “stream recognition from Google” is not always the best choice.

Stream recognition

The idea is that live audio is streamed in real time to a service such as the Google Speech API, which does its best to recognize it and returns the recognized words, phrases and sentences as it goes. The main difficulty for the service is deciding whether a stretch of sound is a complete word or phrase rather than the beginning of a longer word. With Voximplant, stream recognition can be tried with a couple of lines of code:

If we quickly say “testing the connection, one two three”, we get a single event:

If you enunciate the phrase word by word, the result is completely different:


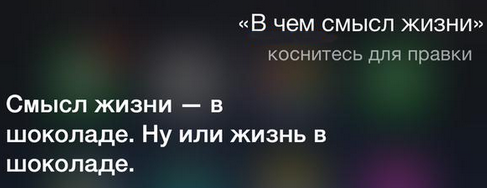

As you can see, the confidence values are no longer the same, some of the numbers have turned into words and some into other text, and the nonsense word “kreazim” suddenly appeared. In practice, every human-machine dialogue scenario is thoroughly tested and debugged: the ASR module is given a dictionary of “expected” words to increase their chances of being recognized, and JS code is added for synonym search, fuzzy matching, waiting for pauses and other homegrown tricks. All of this aims to strike a balance between “quickly recognized as kreazim” and “nothing recognized for two minutes because of background noise and the caller breathing loudly into the phone”.
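
The synonym search and fuzzy matching mentioned above can be sketched in plain JavaScript. This is a minimal illustration, not Voximplant’s API: it slides each expected phrase from a hypothetical intent dictionary over the recognized words and accepts the closest one by Levenshtein edit distance. The intent names, phrases and the `maxRatio` threshold of 0.34 are assumptions chosen for the example.

```javascript
// Levenshtein edit distance, computed with a single rolling DP row.
function editDistance(a, b) {
  const row = Array.from({ length: b.length + 1 }, (_, j) => j);
  for (let i = 1; i <= a.length; i++) {
    let diag = row[0]; // D[i-1][j-1] for j = 1
    row[0] = i;
    for (let j = 1; j <= b.length; j++) {
      const above = row[j]; // D[i-1][j]
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      row[j] = Math.min(above + 1, row[j - 1] + 1, diag + cost);
      diag = above;
    }
  }
  return row[b.length];
}

// Hypothetical dictionary: intent name -> expected phrases and synonyms.
const INTENTS = {
  operator: ["operator", "agent", "human", "person"],
  delivery: ["delivery", "shipping", "order status"],
};

// Lowercase, replace punctuation with spaces, split into words.
function normalize(text) {
  return text
    .toLowerCase()
    .replace(/[^\p{L}\p{N}\s]/gu, " ")
    .split(/\s+/)
    .filter(Boolean);
}

// Slide each expected phrase over the recognized words and keep the closest match
// whose edit distance, relative to the phrase length, is within maxRatio.
function matchIntent(recognized, intents = INTENTS, maxRatio = 0.34) {
  const words = normalize(recognized);
  let best = null;
  for (const [intent, phrases] of Object.entries(intents)) {
    for (const phrase of phrases) {
      const n = phrase.split(" ").length;
      for (let i = 0; i + n <= words.length; i++) {
        const candidate = words.slice(i, i + n).join(" ");
        const ratio = editDistance(candidate, phrase) / phrase.length;
        if (ratio <= maxRatio && (best === null || ratio < best.ratio)) {
          best = { intent, phrase, candidate, ratio };
        }
      }
    }
  }
  return best; // null when nothing is close enough
}
```

With this sketch, a misrecognized “connect me with the operater” still maps to the `operator` intent, while unrelated speech returns `null` so the scenario can keep listening. A relative threshold is used so that short words tolerate fewer typos than long ones.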

Stream recognition is well suited as a replacement for the traditional voice menu. For complex services, saying “connect me with an operator” or “what’s with my delivery” is much more convenient than “press one to hear nine more menu items.”

But voice menus are not the only use case. For example, you can recognize all of your operators’ conversations for a month, analyze them and draw interesting conclusions. Big Data works wonders: even with 80% recognition quality, you can easily find the best contact center operator or work out which “typical” answers to suggest to operators for certain questions. For this kind of use, recognition quality matters and speed does not. Such recognition is traditionally called “transcribing”: slow, high-quality recognition performed after the conversation ends.

JSON markup transcription

It takes the same few lines of JS code, but with some nuances. Since transcribing happens after the conversation ends, simply handing out a URL to the resulting JSON file would have a side effect: a request made right after the call ends could return an empty file. The API therefore works differently: each JS session has an identifier, and with it you can get a list of all the “artifacts” of that session: the log, recorded audio streams and recognized audio streams. You can pass the identifier to your backend during the call, or use the same HTTP API to search for the calls you need by time, custom data or other parameters. Enabling transcription looks like this:
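
A sketch of the backend side (rather than the scenario code) may help here: because the transcript is produced after the call, a client should expect an empty result at first and retry. The plain JavaScript below assumes a hypothetical `fetchTranscript(sessionId)` that you would implement on top of the HTTP API; it is not a Voximplant function, and the attempt count and delays are arbitrary.

```javascript
// Poll for a transcript until it is non-empty, with exponential backoff.
// fetchTranscript(sessionId) is a hypothetical async function you supply;
// it should resolve to the transcript text ("" or null if not ready yet).
async function waitForTranscript(fetchTranscript, sessionId, {
  attempts = 10,
  initialDelayMs = 1000,
  maxDelayMs = 60000,
  sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms)),
} = {}) {
  let delay = initialDelayMs;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    const body = await fetchTranscript(sessionId);
    // An empty or missing body means transcription has not finished yet.
    if (body && body.trim().length > 0) {
      return body;
    }
    if (attempt < attempts) {
      await sleep(delay);
      delay = Math.min(delay * 2, maxDelayMs); // double the wait, up to a cap
    }
  }
  throw new Error(`Transcript for session ${sessionId} not ready after ${attempts} attempts`);
}
```

Backoff keeps the number of requests small for long calls, and the injectable `sleep` makes the function easy to test without real delays.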

Pay attention to the format: if you set it to “json”, the output is marked-up text in which each word (or, if you are unlucky, each phrase) is annotated with the time interval of the recording where it was spoken. This is convenient for building search interfaces over logs and then playing back the relevant fragments. And no matter how carefully I enunciated each word, transcribing produces a sensible result:
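
On the backend, word-level timings make the search-and-play idea straightforward. The JavaScript below is a minimal sketch that builds an inverted index over the markup and returns padded time ranges for a query word. The markup shape (an array of `{ word, start, end }` with times in milliseconds) and the 500 ms padding are assumptions for illustration; check the actual JSON the transcription produces before relying on field names or units.

```javascript
// Assumed markup shape (hypothetical): [{ word, start, end }], times in milliseconds.

// Normalize a word for lookup: lowercase and strip punctuation.
function normalizeWord(word) {
  return word.toLowerCase().replace(/[^\p{L}\p{N}]/gu, "");
}

// Build an inverted index: normalized word -> list of { start, end } intervals.
function buildIndex(markup) {
  const index = new Map();
  for (const { word, start, end } of markup) {
    const key = normalizeWord(word);
    if (!key) continue;
    if (!index.has(key)) index.set(key, []);
    index.get(key).push({ start, end });
  }
  return index;
}

// Return playback fragments for a query word, padded so the word is not clipped.
function fragmentsFor(index, query, paddingMs = 500) {
  const hits = index.get(normalizeWord(query)) || [];
  return hits.map(({ start, end }) => ({
    from: Math.max(0, start - paddingMs),
    to: end + paddingMs,
  }));
}
```

In a search UI, each `{ from, to }` pair could be used to seek an audio player to the matching fragment of the recording.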

Source: https://habr.com/ru/post/343196/
