On fractals, martingales and random integrals. Part one

In my opinion, stochastic calculus is one of those magnificent sections of higher mathematics (along with topology and complex analysis), where formulas are found with poetry; this is the place where they acquire beauty, the place where the scope for artistic creativity begins. Many of those who read the article Wiener Chaos or Another way to flip a coin , even if they understood little, could still appreciate the magnificence of this theory. Today we will continue our mathematical journey, we will plunge into the world of random processes, non-trivial integration, financial mathematics and even touch on functional programming a little. I warn you, keep your brains ready, as we have a serious conversation.

By the way, if you are interested in reading in a separate article, it suddenly turned out that

d X 2 n e q 2 X c d o t d X ,

Hidden threat of fractals

Imagine some simple function. f ( t ) at some interval [ t , t + D e l t a t ] . Submitted? In standard mathematical analysis, we are accustomed to the fact that if the dimensions of this interval are reduced long enough, then the function given to us starts to behave rather smoothly, and we can bring it closer to a straight line, in a pinch, with a parabola. All thanks to the theorem on the decomposition of the function formulated by the English scientist Brooke Taylor in 1715:

f(t+ Deltat)=f(t)+f′(t) cdot Deltat+ frac12f″(t) cdot( Deltat)2+ cdots

By the time Benoit Mandelbrot invented the very concept of "fractals", they had been preventing many world scientists from sleeping for a hundred years. One of the first to whom fractals tarnished his reputation was Andre-Marie Ampère. In 1806, the scientist put forward a rather convincing "proof" of the fact that any function can be divided into intervals of continuous functions, and these, in turn, due to continuity, must have a derivative. And many of his contemporary mathematicians agreed with him. In general, Ampere cannot be blamed for this, seemingly rather gross error. Mathematicians of the beginning of the 19th century had a rather vague definition of such nowadays as basic concepts of mathematical analysis as continuity and differentiability, and, as a result, they could be greatly mistaken in their evidence. Even such eminent scientists as Gauss, Fourier, Cauchy, Legendre, Galois, not to mention much less well-known mathematicians of those times, were no exception.

')

Nevertheless, in 1872, Karl Weierstrass refuted the hypothesis of the existence of derivatives of continuous functions, presenting on display the world's first fractal monster, everywhere continuous and never differentiable.

Here, for example, how this function is defined:

f(t)= sum n=0infty frac sin(2nt)2n

The Brownian movement has the same property (by the way, for some reason, not Br, but Unov, the surname is British, though). Brownian motion is a continuous, random process. B(t) such that all its increments are independent and have a normal distribution:

B(T)−B(t) sim mathcalN(0,T−t), quadt<T.

Infinite variation of the Brownian motion

Let's imagine the function again f(t) on the interval t in[0,T] and divide this interval into a set of n non-intersecting subintervals:

\ Pi = \ {0 = t_0 <t_1 <\ dots <t_n = T \}.

Vf[0,T]= lim | Pi | rightarrow0 sumni=1|f(ti)−f(ti−1)|

will be the ultimate. In which case are we not so sure? Well, for example, the function will not have a finite variation.

f(t)=1 mathbbQ(t),

VX[0,T]( omega)= lim | Pi | rightarrow0 sumni=1|X(ti)−X(ti−1)|( omega).

This is how a fairly simple implementation of a variation on Haskell will look like:

-- | 'totalVariation' calculates total variation of the sample process X(t) totalVariation :: [Double] -- process trajectory X(t) -> Double -- V(X(t)) totalVariation x@(_:tx) = sum $ zipWith (\x xm1 -> abs $ x - xm1) tx x Where do random processes occur in the real world? One of the most famous examples today is financial markets, namely the movement of prices for currency, stocks, options, and various securities. Let him X(t) - this is Apple stock value. At some point you decided to buy f(t0) stock by price X(t0) and after a certain period of time the price changed and became equal X(t1) . As a result, you have f(t0) cdot DeltaX(t1) net profit / loss, where DeltaX(ti)=X(ti)−X(ti−1) . You have decided to buy / sell part of the shares and now you are holding f(t1) valuable papers. After some time, your income / losses amount to f(t0) cdot DeltaX(t1)+f(t1) cdot DeltaX(t2) , etc. In the end, you will earn / lose

I(T)= sumni=1f(ti−1) cdot DeltaX(ti).

muX(a,b]:=X(b)−X(a).

intT0f(t)dX(t)= int[0,T]f(t) muX(dt).

intT0f(t)dX(t)= int[0,T]f(t) muX+(dt)− int[0,T]f(t) muX−(dt).

\ begin {aligned} V _ {[0, T]} ^ X & = \ lim _ {\ | \ Pi \ | \ rightarrow 0} \ sum_ {i = 1} ^ n | X (t_ {i}) - X (t_ {i-1}) | \\ & \ leq \ lim _ {\ | \ Pi \ | \ rightarrow 0} \ Big (\ sum_ {i = 1} ^ n | X ^ + (t_ {i}) - X ^ + (t_ {i-1}) | + \ sum_ {i = 1} ^ n | X ^ - (t_ {i}) - X ^ - (t_ {i-1}) | \ Big) \\ & = X ^ + (T) + X ^ - (T) <\ infty. \ end {aligned}

Now we can easily integrate something. Take the trajectory of some continuous random process and call it g(t) . Using the Lagrange mean theorem, we can show that for a continuous function g(t) the variation will be equal Vg[0,T]= intT0|g′(t)|dt . If this integral exists, then under the above mentioned conditions of boundedness and measurability f(t) we can count

intT0f(t)dg(t)= intT0f(t)g′(t)dt.

So the question is: is it possible to take the integral in the sense of Lebesgue from the Brownian motion? We calculate the variation, and if it is finite, then we should not have any problems. Let's go from the side of the so-called quadratic variation :

[B](T)= lim | Pi || rightarrow0 sumni=1 big(B(ti+1)−B(ti) big)2

\ begin {aligned} \ sum_ {i = 1} ^ n \ big (B (t_ {i + 1}) - B (t_i) \ big) ^ 2-T & = \ sum_ {i = 1} ^ n \ Big (\ big (B (t_ {i + 1}) - B (t_i) \ big) ^ 2- (t_ {i + 1} -t_i) \ Big) \\ & = \ sum_ {i = 1} ^ n (Z_i ^ 2-1) (t_ {i + 1} -t_i), \ end {aligned}

Zi= fracB(ti+1)−B(ti) sqrtti+1−ti sim mathcalN(0,1)

mathbbE[(Z2i−1)2]= operatornameVar(Z2i)=2.

\ begin {aligned} \ mathbb {E} \ bigg [\ Big (\ sum_ {i = 1} ^ n \ big (B (t_ {i + 1})) - B (t_i) \ big) ^ 2 -T \ Big) ^ 2 \ bigg] & = \ sum_ {i = 1} ^ n \ mathbb {E} [(Z_i ^ 2-1) ^ 2 (t_ {i + 1} -t_i) ^ 2] \\ & = 2 \ sum_ {i = 1} ^ n (t_ {i + 1} -t_i) ^ 2 \\ & \ leq \ | \ Pi \ | \ cdot 2 \ sum_ {i = 1} ^ n (t_ {i + 1} -t_i) \ rightarrow 0. \ end {aligned}

[B](T)=T.

Notice that [B](T)= operatornameVar(B(T)) however, the methods for calculating these two values are completely different. The dispersion of the Brownian motion is considered to be the averaging of all its trajectories, given their probabilities. The quadratic variation is taken from a single random trajectory along which the movement took place, and the probabilities do not play a role here. While the variance can only be calculated theoretically, since it requires averaging of all implementations, the quadratic implementation can be calculated explicitly (with a sufficient data sampling value). The above formula is interesting in particular because, by definition, the quadratic variation, like the variation of the first kind VX[0,T] is a random variable. However, the feature of the Brownian movement is that its quadratic variation degenerates and takes only one single value corresponding to the length of the interval, regardless of the trajectory along which the movement took place.

We write a code that considers the quadratic variation of the implementation of a random process X(t) :

-- | 'quadraticVariation' calculates quadratic variation of the sample process X(t) quadraticVariation :: [Double] -- process trajectory X(t) -> Double -- [X(t)] quadraticVariation x@(_:tx) = sum $ zipWith (\x xm1 -> (x - xm1) ^ 2) tx x So now we are ready to return to calculating the variation of interest. VB[0,T] . We found out that

lim | Pi | rightarrow0 sumni=1(B(ti+1)−B(ti))2=T.

\ begin {aligned} \ sum_ {i = 1} ^ n (B (t_ {i + 1}) - B (t_i)) ^ 2 \ leq \ max \ big \ {| B (t_ {i + 1} ) - B (t_i) | \ big | (t_ {i}, t_ {i + 1}) \ subset \ Pi \ big \} \ cdot \ sum_ {i = 1} ^ n | B (t_ {i + 1}) - B (t_i) | . \ end {aligned}

We know that the Brownian motion is a continuous process, which means

\ max \ big \ {| B (t_ {i + 1}) - B (t_i) | \ big | (t_ {i}, t_ {i + 1}) \ subset \ Pi \ big \} \ rightarrow 0.

VB[0,T]= infty.

Y-yes ...

Ito integral or New Hope

In 1827, the English botanist Robert Brown, observing pollen grains in water through a microscope, noticed that the particles inside their cavity move in a certain way; however, he was then unable to identify the mechanisms causing this movement. In 1905, Albert Einstein published an article explaining in detail how the movement observed by Brown was the result of the pollen moving by individual water molecules. In 1923, the child prodigy and “father of cybernetics,” Norbert Wiener, wrote an article about differentiable spaces, where he approached this movement from mathematics, determined probability measures in the trajectory space, and using the concept of Lebesgue integrals, laid the foundation for stochastic analysis. Since then, in the mathematical community, the Brownian motion is also referred to as the Wiener process.

In 1938, Kiyoshi Ito, a graduate of Tokyo University, began working at the Bureau of Statistics, where in his free time he got acquainted with the works of Andrei Kolmogorov, the founder of modern probability theory, and Paul Levy, a French scientist, who studies various properties of Brownian motion at that time. He tries to combine Levi’s intuitive visions with Kolmogorov’s exact logic, and in 1942 he wrote the paper On Stochastic Processes and Infinitely Divisible Distribution Laws, in which he reconstructs from scratch the concept of stochastic integrals and the theory of stochastic differential equations describing motions caused by random events.

Ito integral for simple processes

Ito has developed a fairly simple method for constructing an integral. To begin, we define the Ito integral for a simple piecewise-constant process. Delta(t) and then we extend this concept to more complex integrands. So let us have a split again. \ Pi = \ {0 = t_0 <t_1 <\ dots <t_n = T \} . We believe the values Delta(t) are constant at each interval [tj,tj+1)

Imagine that B(t) - is the value of any financial instrument at the time t (Brownian motion can take negative values, so this is not a very good model for price movement, but for now we will ignore this). Also let t0,t1, dots,tn−1 - these are trading days (hours / seconds, not important), but Delta(t0), Delta(t1,), dots, Delta(tn−1) - these are our positions (the number of financial instruments on hand). We believe that Delta(t) depends only on information known at the time t . By information, I mean the trajectory of the Brownian motion up to this point in time. Then our income will be:

I(t)= sumkj=0 Delta(tj)[B(tj+1−B(tj)]+ Delta(tk)[B(t)−B(tk)].

I(t)= intt0 Delta(s)dB(s).

This integral has several interesting properties. The first and rather obvious: at the initial moment, his expectation at any point is zero:

mathbbE[I(t)]=0.

The calculation of the second moment / variance already requires additional calculations. Let him Dj=B(tj+1)−B(tj) for j=1, dots,k−1 and Dk=B(t)−B(tk) . Then we can rewrite the Ito integral in the form

I(t)= sumkj=0 Delta(tj)Dj.

\ begin {aligned} \ mathbb {E} [I ^ 2 (t)] & = \ mathbb {E} \ Big [\ sum_ {j = 0} ^ k \ Delta ^ 2 (t_j) D_j ^ 2 + 2 \ sum_ {0 \ leq i <j \ leq k} \ Delta (t_i) \ Delta (t_j) D_iD_j \ Big] \\ & = \ sum_ {j = 0} ^ k \ mathbb {E} \ Big [\ Delta ^ 2 (t_j) D_j ^ 2 \ Big] + 2 \ sum_ {0 \ leq i <j \ leq k} \ mathbb {E} [\ Delta (t_i) \ Delta (t_j) D_iD_j] \\ & = \ sum_ {j = 0} ^ k \ mathbb {E} \ Big [\ Delta ^ 2 (t_j) \ Big] \ cdot \ mathbb {E} [D_j ^ 2] + 2 \ sum_ {0 \ leq i <j \ leq k} \ mathbb {E} [\ Delta (t_i) \ Delta (t_j) D_i] \ cdot \ mathbb {E} [D_j] \\ & = \ sum_ {j = 0} ^ {k-1} \ mathbb { E} \ Big [\ Delta ^ 2 (t_j) \ Big] (t_ {j + 1} -t_j) + \ mathbb {E} [\ Delta ^ 2 (t_k)] (t-t_k) \\ & = \ sum_ {j = 0} ^ k \ mathbb {E} \ Big [\ int_ {t_j} ^ {t_ {j + 1}} \ Delta ^ 2 (s) ds \ Big] + \ mathbb {E} \ Big [ \ int_ {t_k} ^ t \ Delta ^ 2 (s) ds \ Big] \\ & = \ mathbb {E} \ Big [\ int_ {0} ^ t \ Delta ^ 2 (s) ds \ Big]. \ end {aligned}

mathbbE[I2(t)]= mathbbE Big[ intt0 Delta2(s)ds Big]

And finally, the quadratic variation. Let's first calculate the variation on each interval. [tj,tj+1] where values Delta(t) are constant, and then add them all together. Take a split interval tj=s0<s1< cdots<sm=tj+1 and consider

\ begin {aligned} \ sum_ {i = 0} ^ {m-1} [I (s_ {i + 1}) - I (s_i)] ^ 2 & = \ sum_ {i = 0} ^ {m- 1} [\ Delta (t_j) (B (s_ {i + 1}) - B (s_i)) ^ 2 \\ & = \ Delta ^ 2 (t_j) \ sum_ {t = 0} ^ {m-1} (B (s_ {i + 1}) - B (s_i)) ^ 2 \\ & \ xrightarrow [m \ rightarrow \ infty] {} \ Delta ^ 2 (t_j) (t_ {j + 1} -t_j) \ \ & = \ int_ {t_j} ^ {t_ {j + 1}} \ Delta ^ 2 (s) ds. \ end {aligned}

[I(t)]= intt0 Delta2(s)ds.

Now we can clearly see the difference between variance and variation. If the process Delta(t) is deterministic, they coincide. But if we decide to change our strategy depending on the trajectory along which the Brownian motion passed, then the variation will begin to change, while the variance is always equal to a certain value.

Ito integral for complex processes

Now we are ready to extend the concept of Ito integral to more complex processes. Let us have a function h(t) which can continuously change in time and even have breaks. The only assumptions we want to preserve are the quadratic integrability of the process:

mathbbE Big[ intT0h2(t)dt Big]< infty

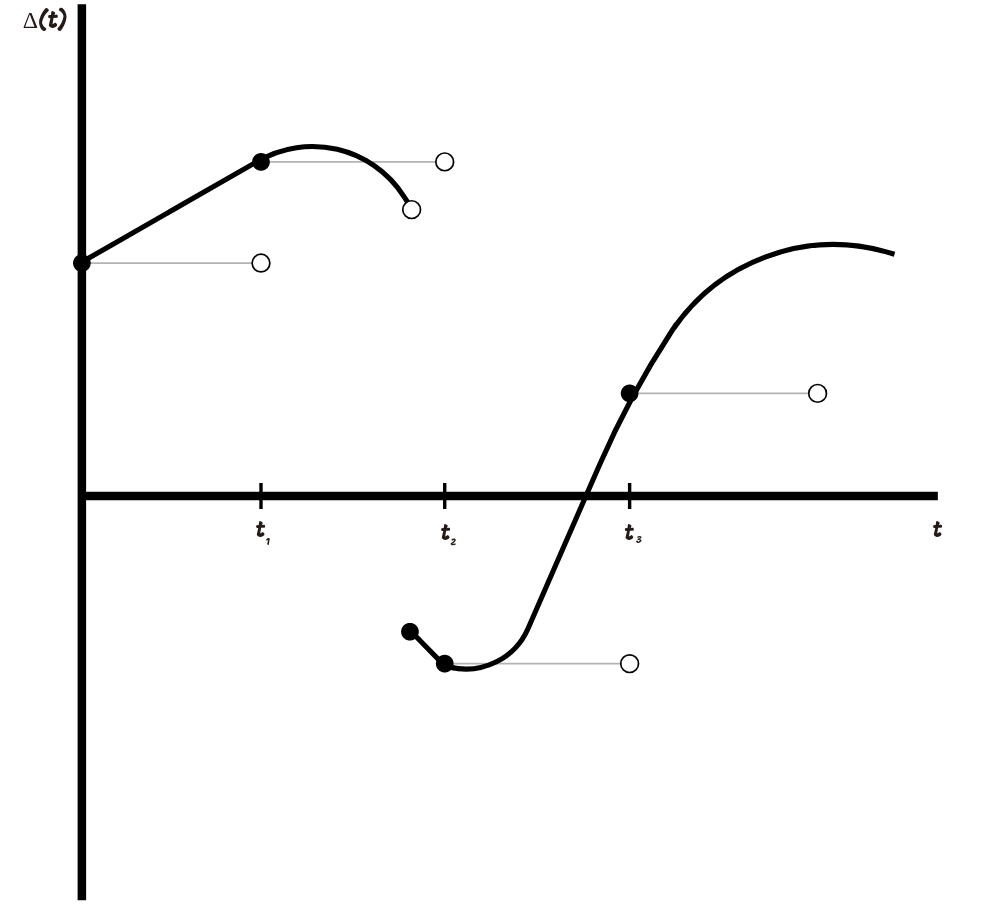

In order to determine intT0h(t)dB(t) we approximate h(t) simple processes. The graph below shows how this can be done.

The approximation is as follows: we choose a partition 0=t0<t1<t2<t3 then create a simple process whose values are equal h(tj) for each tj and do not change on the segment [tj,tj+1) . The smaller the maximum step of our partition becomes, the closer the approximating simple process becomes to our integrand.

In general, it is possible to select a sequence of simple processes. hn(t) such that it will converge to h(t) . By convergence we mean

limn rightarrow infty mathbbE Big[ intT0|hn(t)−h(t)|2dt Big]=0.

intt0h(s)dB(s)= limn rightarrow infty intt0hn(s)dB(s), quad0 leqt leqT.

- His trajectories are continuous.

- It is adapted. It is logical, because at every moment we must know our value of income.

- It is linear: intt0h(s)dB(s)+ intt0f(s)dB(s)= intt0(h(s)+f(s))dB(s).

- He is a martingale, but more on that towards the end of the article.

- Ito’s isometry is preserved: mathbbE[I2(t)]= mathbbE Big[ intt0h2(s)ds Big] .

- Its quadratic variation: [I(t)]= intt0h2(s)ds.

A small remark: for those who want to study in more detail the construction of the Ito integral or in general is interested in financial mathematics, I strongly advise the book by Steven Shreve “Stochastic Calculus for Finance. Volume II.

How about?

For the following reasoning, we will denote the Ito integral in a slightly different form:

I(h)= intT0h(s)dB(s),

I(t)= intT0h(s) cdot1[0,t](s)ds=I(h cdot1[0,t]).

In the previous article, we implemented the Gaussian process: W:H rightarrowL2( Omega, mathcalF, mathbbP) . We know that the Gaussian process is a mapping from a Hilbert space into a probabilistic one, which is essentially a generalized case of the Ito integral, a mapping from a space of functions into a space of random processes. Really,

data L2Map = L2Map {norm_l2 :: Double -> Double} type ItoIntegral = Seed -- ω, random state -> Int -- n, sample size -> Double -- T, end of the time interval -> L2Map -- h, L2-function -> [Double] -- list of values sampled from Ito integral -- | 'itoIntegral'' trajectory of Ito integral on the time interval [0, T] itoIntegral' :: ItoIntegral itoIntegral' seed n endT h = 0 : (toList $ gp !! 0) where gp = gaussianProcess seed 1 (n-1) (\(i, j) -> norm_l2 h $ fromIntegral (min ij + 1) * t) t = endT / fromIntegral n The function itoIntegral ' takes as input the seed is a parameter for the random variable generator, n is the dimension of the output vector, endT is the parameter T and the function h from the class L2Map, for which the function norm_l2 is defined , which returns the integrand rate: t mapsto intt0h2(s)ds . At the output, itoIntegral issues an Ito integral implementation on the interval [0,T] with a sampling rate corresponding to the parameter n . Naturally, a similar way of implementing the Ito integral is in a sense overkill, but it allows you to tune in to functional thinking, so necessary for further reasoning. We will write a faster implementation that does not require working with giant matrices.

-- | 'mapOverInterval' map function f over the interval [0, T] mapOverInterval :: (Fractional a) => Int -- n, size of the output list -> a -- T, end of the time interval -> (a -> b) -- f, function that maps from fractional numbers to some abstract space -> [b] -- list of values f(t), t \in [0, T] mapOverInterval n endT fn = [fn $ (endT * fromIntegral i) / fromIntegral (n - 1) | i <- [0..(n-1)]] -- | 'itoIntegral' faster implementation of itoIntegral' function itoIntegral :: ItoIntegral itoIntegral seed 0 _ _ = [] itoIntegral seed n endT h = scanl (+) 0 increments where increments = toList $ (sigmas hnorms) * gaussianVector gaussianVector = flatten $ gaussianSample seed (n-1) (vector [0]) (H.sym $ matrix 1 [1]) sigmas s@(_:ts) = fromList $ zipWith (\xy -> sqrt(xy)) ts s hnorms = mapOverInterval n endT $ norm_l2 h Now using this function, we can implement, for example, the ordinary Brownian motion: B(t)= intt0dB(s) . The function h is a function whose norm_l2 norm will be t mapsto intt0ds=t , identical function.

l2_1 = L2Map {norm_l2 = id} -- | 'brownianMotion' trajectory of Brownian motion aka Wiener process on the time interval [0, T] brownianMotion :: Seed -- ω, random state -> Int -- n, sample size -> Double -- T, end of the time interval -> [Double] -- list of values sampled from Brownian motion brownianMotion seed n endT = itoIntegral seed n endT l2_1 Let's try to draw different versions of the trajectories of the Brownian motion.

import Graphics.Plot let endT = 1 let n = 500 let b1 = brownianMotion 1 n endT let b2 = brownianMotion 2 n endT let b3 = brownianMotion 3 n endT mplot [linspace n (0, lastT), b1, b2, b3]

It's time to double-check our previous calculations. Let's calculate the variation of the trajectory of Brownian motion on the interval [0,1] .

Prelude> totalVariation (brownianMotion 1 100 1) 8.167687948236862 Prelude> totalVariation (brownianMotion 1 10000 1) 80.5450335433388 Prelude> totalVariation (brownianMotion 1 1000000 1) 798.2689137110999 We can observe that with increasing accuracy, variation takes on more and more large values, while quadratic variation tends to T=1 :

Prelude> quadraticVariation (brownianMotion 1 100 1) 0.9984487348804609 Prelude> quadraticVariation (brownianMotion 1 10000 1) 1.0136583395467458 Prelude> quadraticVariation (brownianMotion 1 1000000 1) 1.0010717246843375 Try running a variation calculation for arbitrary Ito integrals to make sure that [I](h)= intT0h2(s)ds regardless of the trajectory of movement.

Martingales

There is such a class of random processes - martingales . In order to X(t) was a martingale, you must fulfill three conditions:

- It is adapted

- X(t) almost everywhere of course:

mathbbE[|X(t)|]< infty

- Conditional expectation of future values X(t) equals its last known value:

mathbbE[X(T)|X(t)]=X(t), quadt<T.

Brownian motion - martingale. The fulfillment of the first condition is obvious; the fulfillment of the second condition follows from the properties of the normal distribution. The third condition is also easily verified:

mathbbE[B(T)|B(t)]= mathbbE[(B(T)−B(t))+B(t)|B(t)]=B(t).

There is another interesting theorem, the so-called martingale representation theorem : if we have a martingale M(t) whose values are determined by the Brownian motion, that is, the only process that introduces uncertainty in the values M(t) , - this B(t) then this martingale can be represented as

M(t)=M(0)+∫t0H(s)dB(s),

And that's it for today.

Source: https://habr.com/ru/post/343178/

All Articles