The battlefield is augmented reality. Part I: The Basics of Object Recognition

We plan to publish a series of articles on object recognition technologies. As the cherry on top, we will walk through a real case in which we used a cross-platform AR engine, but more on that later.


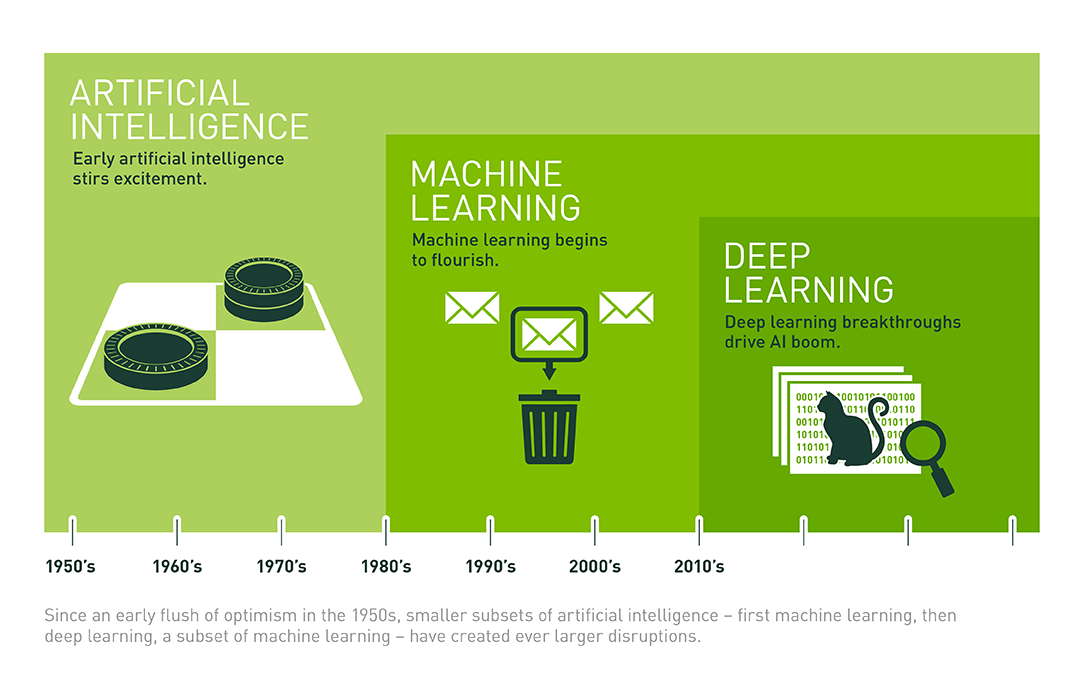

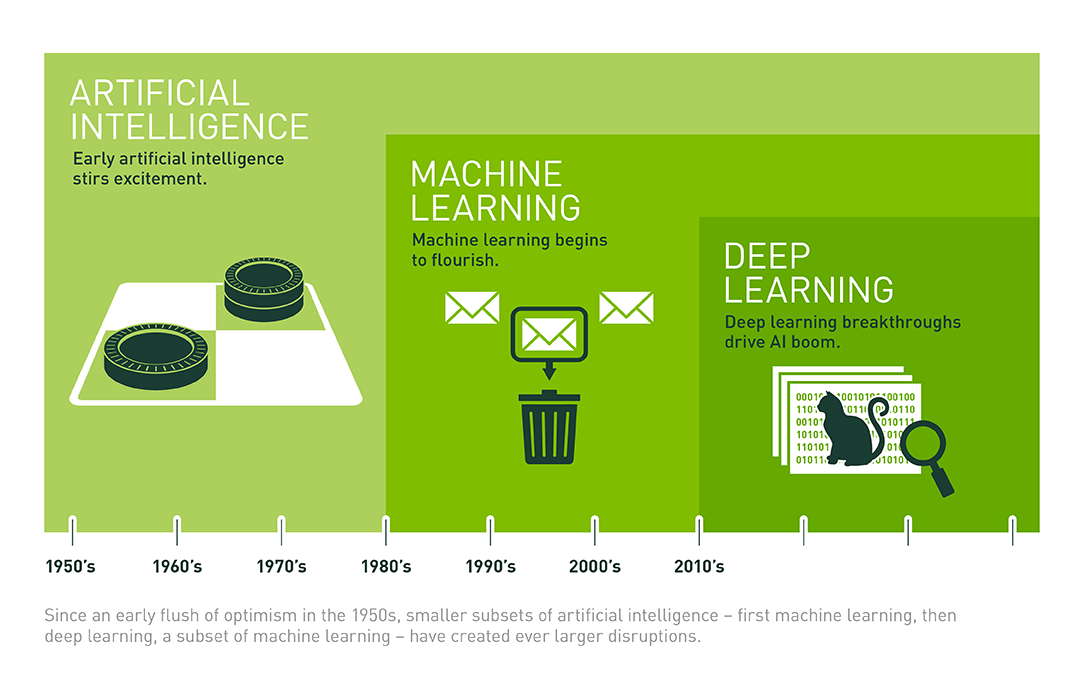


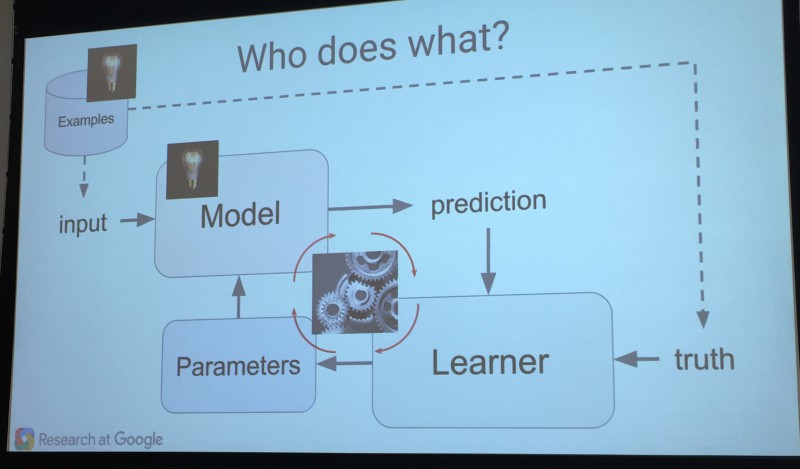

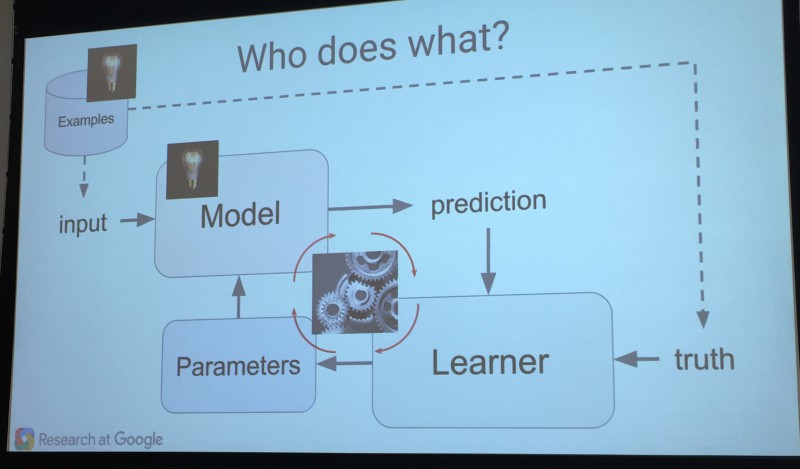


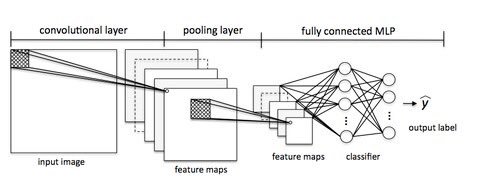

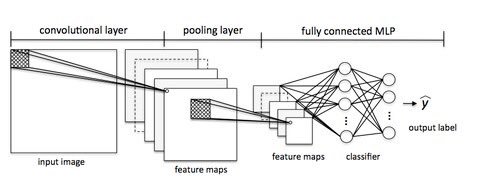


In this article we will cover the basics, get acquainted with all the players in the process, have some fun, recall your favorite memes and, along the way, build up the necessary picture of the field.

So:


- OpenCV library.

- Principles of recognition.

- The history of computer vision.

+ a breakdown of a real case to show how it all fits together.

One important fact

Computer vision as a scientific discipline appeared quite a long time ago, in the 1950s. Back then nobody was even talking about OpenCV, whose first official release came only in 2000.

In the 1950s, researchers developed simple two-dimensional algorithms for recognizing statistical characteristics.

Then, in the 1980s, building on J. Gibson's theory, researchers developed mathematical models for computing optical flow pixel by pixel.

So, step by step, people found ways to recognize objects better. At some point they learned to recognize letters in images, and then they taught computers to do the same.

So, it all started a long time ago, in a galaxy far, far away... with OpenCV

In a nutshell, OpenCV is a low-level library that can recognize colors and shapes with the help of blood-magic algorithms.
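The following sketch makes "recognizing colors and shapes" a bit more concrete. It is a minimal, pure-Python version of the simplest case. First it marks every pixel whose color falls within a range, which is roughly what OpenCV's `cv2.inRange` does on a real image. Then it takes the bounding rectangle of the marked pixels, in the spirit of `cv2.boundingRect`. The toy image, the (R, G, B) tuple format and the helper names are assumptions for illustration. They are not OpenCV's API.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), 0..255; the channel order is an assumption
Image = List[List[Pixel]]


def in_range(image: Image, lower: Pixel, upper: Pixel) -> List[List[bool]]:
    """Per-pixel mask: True where every channel lies within [lower, upper]."""
    return [
        [all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) for px in row]
        for row in image
    ]


def bounding_rect(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Smallest (x, y, width, height) rectangle covering all True pixels, or None."""
    points = [(x, y) for y, row in enumerate(mask) for x, on in enumerate(row) if on]
    if not points:
        return None
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1


# A 4x5 toy "frame": white background with a 2x2 red blob.
W, R = (255, 255, 255), (220, 20, 30)
frame = [
    [W, W, W, W, W],
    [W, R, R, W, W],
    [W, R, R, W, W],
    [W, W, W, W, W],
]
red_mask = in_range(frame, lower=(150, 0, 0), upper=(255, 80, 80))
print(bounding_rect(red_mask))  # (1, 1, 2, 2)
```

Real detectors are far more robust than a fixed color range. Still, the two-step pattern of building a mask first and then describing its shape is a common starting point.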

The library has been adapted to many platforms, so it is universal and used everywhere: from mobile devices to robots on the “Arduino”. OpenCV is as old as Zarathustra’s notes, yet it keeps evolving and breathing life into new projects. To avoid going too deep here, I suggest you look here.

People taught computers to recognize objects, and that is machine learning. It then smoothly flowed into deep learning, a smart rebranding of machine learning, something like a “neural network with several layers of correspondence”. One of today's deep learning giants is Google.

As the picture shows, it all started with artificial intelligence, moved on to machine learning, and ended with deep learning.

A couple of words about neural networks

Neural networks are the next milestone in the development of machine learning, a step up to a new level. Although my roommate nicknamed "40YearOldVirgin" understands the whole mathematical background perfectly, we will stick to the best traditions of development approaches.

Namely: keep it simple, stupid.

Take a look at the picture: it shows how a neural network is structured and how it works.

The input is an example. The network estimates how well it fits, relying on its experience and the data it has accumulated.
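Here is a minimal sketch of what "the network estimates the input" means in practice. The input vector passes through a hidden layer and then an output layer. Each layer computes a weighted sum of its inputs plus a bias and applies an activation. The layer sizes, the hand-picked weights and the sigmoid activation are made-up assumptions for illustration. A real network learns its weights during training.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per neuron


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: weighted sum of the inputs plus bias, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs: Vector) -> float:
    # Hypothetical weights: 3 inputs -> 2 hidden neurons -> 1 output neuron.
    hidden = dense(inputs, [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]], [0.0, -0.1])
    (score,) = dense(hidden, [[1.2, -0.7]], [0.05])
    return score  # in (0, 1): how strongly the network "believes" the input matches


print(forward([1.0, 0.0, 0.5]))
```

Each call to `dense` corresponds to one layer in the picture. Stacking more such calls is, in essence, what makes a network "deep".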

The basic principle of prediction, “the larger the sample, the more accurate the result”, takes on a new meaning here: the longer the training, the more accurate the prediction. For hardcore fans, I recommend reading the blog.
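This sketch illustrates "the longer the training, the more accurate the prediction" on a toy scale. It trains a single sigmoid neuron (logistic regression) with plain batch gradient descent on a made-up, linearly separable dataset. It then compares the training loss after a few epochs with the loss after many. The dataset, learning rate and epoch counts are assumptions. This is not how a road-sign network is actually trained. On real data, accuracy should also be checked on examples the network has not seen during training.

```python
import math
from typing import List, Tuple

# Toy dataset (an assumption): two features per example, label 1 = "looks like the target".
DATA: List[Tuple[Tuple[float, float], int]] = [
    ((0.9, 0.8), 1), ((0.8, 0.9), 1), ((0.7, 0.6), 1),
    ((0.1, 0.2), 0), ((0.2, 0.1), 0), ((0.3, 0.3), 0),
]


def predict(w: List[float], b: float, x: Tuple[float, float]) -> float:
    z = w[0] * x[0] + w[1] * x[1] + b
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(w: List[float], b: float) -> float:
    """Mean cross-entropy over the dataset; lower is better."""
    eps = 1e-12  # guards against log(0)
    return -sum(
        y * math.log(predict(w, b, x) + eps) + (1 - y) * math.log(1 - predict(w, b, x) + eps)
        for x, y in DATA
    ) / len(DATA)


def train(epochs: int, lr: float = 0.5) -> Tuple[List[float], float]:
    """Batch gradient descent on the mean log loss, starting from zero weights."""
    w, b = [0.0, 0.0], 0.0
    n = len(DATA)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in DATA:
            err = predict(w, b, x) - y  # gradient of the loss w.r.t. z for sigmoid + log loss
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


for epochs in (5, 50, 500):
    w, b = train(epochs)
    print(epochs, round(log_loss(w, b), 4))
```

The printed loss shrinks as the number of epochs grows. That is the toy version of "more training, better prediction".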

The picture shows the sequence of operations performed on the different layers of the neural network.

“So what's the point?” the reader will ask.

The point is that when deep learning took the stage, everything got mixed into one huge pile, and the boundaries blurred so much that no one understands how it works.

To sum up:

- OpenCV recognizes objects.

- These objects are fed into the neural network.

- The network checks them for matches layer by layer and produces a result at the output.

- The neural network itself learns from the experience it accumulates.

Of course, you can also train without third-party ML (machine learning) tools, but it is harder. We, or rather our iOS developer, used Haar cascades. Here is a great example of how they work.
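Haar cascades (the Viola-Jones approach) are built on very cheap rectangle features. Each feature is the difference between the pixel sums of adjacent rectangles. An integral image lets each of these sums be computed in constant time. Below is a minimal pure-Python sketch of just that building block: a two-rectangle (left minus right) feature on a grayscale image stored as nested lists. A real cascade evaluates thousands of such features, selected with AdaBoost and arranged in stages. The function names and the toy image are assumptions for illustration. They are not OpenCV's `CascadeClassifier` API.

```python
from typing import List

Gray = List[List[int]]


def integral_image(img: Gray) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded first row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the pixels in the w x h rectangle at (x, y), using only four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Left half minus right half of a (2*w) x h window: responds to vertical edges."""
    return rect_sum(ii, x, y, w, h) - rect_sum(ii, x + w, y, w, h)


img = [
    [9, 9, 1, 1],
    [9, 9, 1, 1],
    [9, 9, 1, 1],
]
ii = integral_image(img)
print(rect_sum(ii, 0, 0, 4, 3))          # 60: the whole image
print(two_rect_feature(ii, 0, 0, 2, 3))  # 48: bright left half, dark right half
```

The integral image is computed once per frame. After that, every feature costs a handful of additions regardless of rectangle size, which is why this approach is fast enough for live video.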

Let's take a case

We need clever algorithms to recognize the "speed limit" road sign.

A walkthrough for the lazy. We will not go into implementation details. Instead, we will do a simple decomposition of the task to see the whole picture.

Object recognition:

- Create an object that works with OpenCV. More specifically, it converts a CVPixelBufferRef to a cv::Mat and processes it. Let's call it CV Manager;

- Using the GPUImage library, we write our own filter that pulls CVPixelBufferRef frames from the video stream at set intervals (so as not to overload the processor) and passes them to our CV Manager;

- To detect objects in the frame, we use Haar cascades;

- The output is an array of rectangles.
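The steps above can be sketched as a small pipeline. The sketch is written in Python rather than the Objective-C++/Swift the iOS app would actually use. Every name in it (`FrameThrottle`, `CVManager`, `bgra_to_gray`, `dummy_detect`) is hypothetical. It shows two details implied by the list:

- Frames are processed only after a minimum interval has passed.
- A camera buffer's rows may be padded, so conversion must use the row stride rather than assume `width * 4`. On iOS, `CVPixelBufferGetBytesPerRow` reports this stride.

The packed BGRA pixel layout is an assumption. The `detect` callback stands in for a Haar cascade and only has to return rectangles.

```python
from typing import Callable, List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height)
GrayFrame = List[List[int]]


class FrameThrottle:
    """Lets a frame through only if at least `min_interval` seconds passed since the last one."""

    def __init__(self, min_interval: float) -> None:
        self.min_interval = min_interval
        self._last: Optional[float] = None

    def should_process(self, timestamp: float) -> bool:
        if self._last is None or timestamp - self._last >= self.min_interval:
            self._last = timestamp
            return True
        return False


def bgra_to_gray(buf: bytes, width: int, height: int, bytes_per_row: int) -> GrayFrame:
    """Convert a packed BGRA buffer (assumed layout) to grayscale, honoring row padding."""
    gray = []
    for y in range(height):
        row_start = y * bytes_per_row  # NOT y * width * 4: rows may be padded
        row = []
        for x in range(width):
            b, g, r = buf[row_start + 4 * x: row_start + 4 * x + 3]
            row.append((299 * r + 587 * g + 114 * b) // 1000)  # integer BT.601 luma
        gray.append(row)
    return gray


class CVManager:
    """Hypothetical stand-in for the app's CV Manager: throttle, convert, detect."""

    def __init__(self, detect: Callable[[GrayFrame], List[Rect]], min_interval: float) -> None:
        self.detect = detect
        self.throttle = FrameThrottle(min_interval)

    def on_frame(self, timestamp: float, buf: bytes, width: int, height: int,
                 bytes_per_row: int) -> Optional[List[Rect]]:
        if not self.throttle.should_process(timestamp):
            return None  # frame skipped to keep the CPU load down
        return self.detect(bgra_to_gray(buf, width, height, bytes_per_row))


# Usage with a dummy detector that "finds" one rectangle when the frame is bright.
def dummy_detect(frame: GrayFrame) -> List[Rect]:
    return [(0, 0, 2, 1)] if frame[0][0] > 128 else []


mgr = CVManager(dummy_detect, min_interval=0.2)
white_row = bytes([255, 255, 255, 255] * 2) + bytes(8)  # 2 white pixels, row padded to 16 bytes
print(mgr.on_frame(0.00, white_row, 2, 1, 16))  # [(0, 0, 2, 1)]
print(mgr.on_frame(0.05, white_row, 2, 1, 16))  # None (throttled)
```

Throttling by timestamp rather than by frame count keeps the processing rate stable even if the camera's frame rate changes.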

Understanding the object:

Let's consider the following cases.

- To recognize the “speed limit” road sign, you can either train the system yourself or find one that has already been trained for this;

- To recognize a face, for example, you can use a service like FindFace: we pass the rectangle from the previous step to its API and get back a face ID.

In both situations we turn to a neural network that can produce a result based on its experience. For example, a network trained on road signs has seen many variants of them: both speed-limit signs and signs that are unsuitable for our case (without a number, or even the “brick”, i.e. the no-entry sign).
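Those "unsuitable" signs are what make the classification step useful. The network scores every class it knows. We accept a detection only if the top class is a speed-limit sign and the network is confident enough. Here is a minimal sketch, assuming the network returns raw scores (logits) per class. The class names, the scores and the 0.8 threshold are hypothetical.

```python
import math
from typing import Dict, Optional


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw class scores into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def accept_speed_limit(scores: Dict[str, float], threshold: float = 0.8) -> Optional[str]:
    """Return the top label if it is a confident speed-limit class, otherwise None."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    if label.startswith("speed_limit") and probs[label] >= threshold:
        return label
    return None


# Hypothetical network outputs for two detected rectangles.
print(accept_speed_limit({"speed_limit_60": 4.0, "no_entry": 0.5, "other": 0.2}))  # speed_limit_60
print(accept_speed_limit({"speed_limit_60": 0.3, "no_entry": 3.5, "other": 0.1}))  # None
```

The threshold trades missed signs against false alarms. Where to set it depends on the data, so 0.8 is only a placeholder.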

To avoid sending requests to the network too often, we assume that if a rectangle persists across 5 frames without shifting much from one frame to the next, it is the same object.
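The "same object across 5 frames" rule can be sketched as a tiny tracker. It compares each new rectangle with the one from the previous frame and counts consecutive frames in which the rectangle barely moved. It asks the network only once that count reaches 5. Several details are assumptions in this minimal sketch:

- "Has not shifted much" is measured as the distance between rectangle centers; intersection over union would be an equally reasonable choice.
- The tolerance is 10 pixels.
- The class name `StableRectTracker` is hypothetical.

```python
import math
from typing import Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height)


def center(r: Rect) -> Tuple[float, float]:
    x, y, w, h = r
    return x + w / 2, y + h / 2


class StableRectTracker:
    """Signals when a rectangle has stayed roughly in place for `frames_needed` frames."""

    def __init__(self, frames_needed: int = 5, max_shift: float = 10.0) -> None:
        self.frames_needed = frames_needed
        self.max_shift = max_shift
        self.prev: Optional[Rect] = None
        self.count = 0
        self.reported = False

    def update(self, rect: Optional[Rect]) -> bool:
        """Feed the rectangle found in the current frame (None if nothing was found).

        Returns True exactly once per stable object: the moment to query the network.
        """
        if rect is None:
            self.prev, self.count, self.reported = None, 0, False
            return False
        if self.prev is not None and math.dist(center(rect), center(self.prev)) <= self.max_shift:
            self.count += 1  # treated as the same object as in the previous frame
        else:
            self.count, self.reported = 1, False  # a new object, or it jumped too far
        self.prev = rect
        if self.count >= self.frames_needed and not self.reported:
            self.reported = True
            return True
        return False


tracker = StableRectTracker()
frames = [(100, 50, 40, 40), (102, 51, 40, 40), (103, 52, 40, 40),
          (104, 52, 40, 40), (105, 53, 40, 40), (106, 53, 40, 40)]
print([tracker.update(r) for r in frames])  # [False, False, False, False, True, False]
```

Reporting only once per stable object means the network is queried a single time while the sign stays in view, instead of on every frame.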

Cons of the approach:

- Low accuracy.

- Reliance on the network for recognition.

- Problems when the screen is rotated (Haar cascades cannot detect objects at an angle).

- As you can see, everything is rather primitive: for each element we take a ready-made solution, then vzhuh-vzhuh, and it's in production.

This is all fine when it comes to simple recognition on a single platform. But the customer's ways are inscrutable.

In the next issue we will plunge into the fascinating world of 3D.

written by Vitaly Zarubin, Senior Software Engineer Reksoft

Source: https://habr.com/ru/post/342950/
