ENOG'14 - the impact of content blocking on Internet infrastructure

Qrator Labs thanks the ENOG program committee for permission to publish on Habr a transcript of the roundtable on blocking prohibited content. The event took place in Minsk on October 9-10. Please note: the text is long and the topic is sensitive, so think carefully about any comment you want to leave under the publication.

ENOG (the “Eurasian group of network operators”, originally European Network Operators Group) is a regional forum of Internet professionals dealing with the most important aspects of the Internet. Within the forum, they exchange experience and knowledge on issues specific to the Russian Federation, the CIS countries and Eastern Europe.


Order of presentations and talk topics:

- The technical side of blocking - Alexey Semenyaka, RIPE NCC

- Overview of the technical situation with blocking in Russia - Philip Schors Kulin, DIPHOST

- Deep packet inspection problems in transport networks - Artem ximaera Gavrichenkov, Qrator Labs

- Prospects for content blocking in the context of further development of Internet technologies - Anton Baskov, AB Architecture Bureau

- Administrative blocking issues - Yuri Kargapolov, UANIC

Content blocking, introduction

Yuri Kargapolov (moderator of the discussion):

Let's begin our panel discussion, a very unusual one for ENOG. This panel is about what we have come to call “blocking”, which, as we can all feel, affects the quality of our networks and of the service we provide. Ultimately, blocking affects the architecture of the networks we build. There is a set of issues that is particularly relevant for Russia but, as it turns out, it is gaining momentum in Ukraine, in Belarus and in many other countries of the region. We still do not understand how to respond adequately, so this discussion is an attempt to understand how to react to blocking, which strategies to choose and how we can adequately answer this challenge.

The technical side of blocking, Alexey Semenyaka, RIPE NCC

Presentation video and presentation slides

Our task is to understand how to implement blocking, or how to live with blocking, so that the technical harm is minimal. I must say that this is not a new problem. It is new only for our region; in the world at large this ground has been gone over many times. The first discussion at a sufficiently high level took place more than 15 years ago, and a blocking system in the UK, say, began operating on a large scale around 2003.

Here is a list of international organizations that issued certain documents: reports, recommendations, reviews on how traffic is blocked on the Internet. You see that the list begins with the UN General Assembly - indeed, there is a document of the General Assembly on blocking in different countries of the world. There is the European Court of Human Rights, there is the Council of Europe, there is the OSCE.

There are also Internet organizations: ISOC and the IETF, which published a whole RFC on this, RFC 7754, with another draft on the way. To put it mildly, the problem is not being ignored. The EFF, of course, has not stood aside either; it also has important and interesting documents.

When preparing for the session I read the documents of the organizations listed on the slide, about 800 pages in total. This is a huge amount of information accumulated to date. A huge number of problems have already been discussed in the world, and now our turn has come.

My task now is to state the agreements on which we will base this discussion: some starting axioms that we take for granted from here on.

The terminology more or less accepted worldwide contains three concepts: blocking, filtering and censoring.

We will talk about blocking, that is, restriction by static attributes. This differs from filtering, where traffic (for example, that of P2P networks) is filtered by signatures to detect copyrighted content. It also differs from censoring, which, by its legal definition, is “prior approval of information before making it public”, that is, a restriction on the freedom to disseminate information.

What are the reasons for this discussion?

Blocking is common practice worldwide. There are few countries where it is absent, and not a single more or less developed country where it is absent entirely. In one form or another, countries around the world restrict illegal content hosted online.

Accordingly, a common set of grounds has been formulated that international law treats as universal and that really do permit blocking content. Enemy number one is the spread of child pornography; this is why content is blocked almost everywhere. Then there is incitement to hatred and discord, calls for genocide and so on. There is also defamation. And in Europe, along with some other countries but mainly in Europe, copyright infringement is policed at the level of the European Court.

If we formulate the best practice, the best situation that can be described at the moment (and this is the premise from which we proceed in this discussion), it is:

- A system in which blocking is decided not behind closed doors but openly, as the result of an adversarial procedure, for example in court, where the other party can contest the decision and argue why the block should not be imposed.

- The criteria for blocking are formulated in advance and correspond to the consensus that exists in society. This is not pressure on society; it is what society has formulated for itself as an acceptable standard.

- The court relies on competent expertise from the professional community in order to prevent technically dangerous actions, those with overly serious consequences, and proceeds from the professional competence of the network community. Everything that can be resolved before trial is resolved: all pre-trial settlement measures are taken, and there is a system of agreements between the potential offender and the potentially offended party.

This is a system in which we believe blocking can be legitimate, reasonable and explainable in some cases; in such a system it can be discussed and has a right to exist. Once again: we would like to see the Internet completely unfiltered and transparent, but we live in the world as it is today, and we are trying to find the point of minimal harm. That point is what is now shown on the screen: the situation we should strive for.

Consider the case law of the ECHR, which regularly hears blocking cases. This is little known in our region, although there have been claims even from the former USSR, including from Russia. A good illustration is two claims brought by Turkish citizens against Turkey itself over blocking.

One case concerned what is aptly called collateral censorship: when a certain illegal resource was blocked, some Google resources were blocked along with it. The court sided with the citizen and asked Turkey not to do that. This is a very recent case, and I have not yet seen its published results.

In the second case, resources containing copyrighted content, namely music, were blocked. The court sided with the state and declared that this was not a violation of human rights. In other words, the court took a differentiated approach, and this is the reality we have to live with.

The European Court of Justice (ECJ) ruled in 2001 that resources are to be blocked unconditionally for copyright infringement. Over the 16 years since, it has issued a huge number of individual rulings stating explicitly that excessive blocking is unacceptable because it violates human rights.

At the same time, the language the ECJ uses towards Europe's national courts is quite soft, which creates problems of interpretation: it is not very clear what the criteria are and how to stay within the limits. Here are the wordings used: “take reasonable measures to block”, “sufficiently impede access to prohibited resources” and “not unnecessarily deny access to legal information”. So the wording is rather vague, although there are quite a few individual rulings. Let's just say that the problem of clear wording has not been solved even at this level.

What should the network community do? That is not something we can decide today, nor something we can fully discuss today, if only because most of those present in the hall cannot officially represent their organizations: they have no vote (in the board-of-directors sense) and no signing authority.

But it is certainly necessary to look for forms of interaction with the state wherever this is possible. It is not always possible, but where it is, it must be done.

We should form professional associations so that the pressure is shared and the position is a unified one, and take part both in the legislative process and in court proceedings.

Where possible, that is. I understand that countries differ and situations differ, so there can be no absolute obligations.

Now I will briefly go through world practice and explain what it looks like. There are three axes that form a coordinate system:

- How is the decision to block made?

- How is the block implemented?

- What is the scope of the block, that is, for whom is the content blocked?

Decision-making.

The mildest situation is when blocking is not described in legislation, activity on the Internet is treated as a direct analogue of offline activity, and a decision is made case by case.

Three quite different countries where everything works this way are Japan, Latvia and Croatia. It must be said that in Latvia there have been very few cases of blocking: as far as I know, a single court decision to block a film, which led to nothing, because the film was blocked in one place while by then it was already available in about a million others.

Operators in these countries do, however, have some trouble executing court decisions, because there are no uniform rules on how to do it, neither at the state level nor at the industry level.

The second situation is when blocking is not described in legislation, but the matter is settled at the level of professional associations of operators. The operators agree among themselves how to do it and block content on a voluntary basis at the request of government agencies or courts. This is practically the whole of Europe.

The most advanced situation with blocking, in the bad sense, in what we might call traditional Europe is in Great Britain. But even there the decision is made by an association created specifically for this purpose: a non-state association of telecom operators.

The next type of decision-making is when blocking exists and is described in legislation, but is carried out by court decision, that is, as the result of a public and adversarial procedure. Examples are given on the slide. Here, too, there are quirks: in Belgium, for example, a decision binds not only the end operator but also transit operators. A transit operator is obliged not to carry any prohibited content, which causes problems. For several years now the Belgian network community has been trying to explain to the state that tracking the transfer of a copyrighted film in transit is almost impossible; it does not work. But the rule exists and it causes a certain tension, even though Belgium would seem to be a fairly technologically developed country with a healthy civil society.

And the last type is when blocking is described in legislation and carried out without any adversarial procedure. The list of countries is long, and I deliberately chose varied ones: as you can see, there is Turkmenistan, but there are also Estonia, Turkey, Ukraine and India. Unfortunately, this too is quite a popular practice, and it constantly causes technical problems.

How do blocking platforms work? In some cases the state requires the use of a centralized state platform, with traffic driven through a central point. Vivid examples are China, Saudi Arabia and Turkmenistan.

Then there is the standard solution used by the main operators in a country. An unexpected example here is the United Kingdom with the Cleanfeed system, which was first developed and debugged by British Telecom and is now used by all major UK operators. As I said, although this is a standard solution that has become a de facto standard, resources are added to it on the submission of a public organization that includes telecom operators. That is, the final decision on inclusion in the blacklist is made not by the state but by a public organization.

Or operators implement blocking themselves, or there are many solutions on the market, and so on.

What mechanisms are there?

The heaviest mechanism, one that was recently used on a massive scale in Ukraine, where most operators blocked prohibited resources this way, is blocking by autonomous system. That is, all resources of the autonomous system are sent to a black hole.

The next method is IP blocking. It is very popular in Russia, where a lot of small operators work this way. This is how Turkey and Pakistan work, along with many others; various examples can be given, such as Burundi, Congo and so on. The poorer countries of Africa are also very fond of this method.
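
To make the difference in granularity between these two mechanisms concrete, here is a minimal sketch that treats both as prefix matching. The addresses, the prefix list and the function name are assumptions made up for illustration (documentation address ranges); real operators do this with null routes on routers, not in Python.

```python
import ipaddress

# Hypothetical data: documentation ranges stand in for real networks.
# IP blocking: individual hosts taken from a block list.
BLOCKED_IPS = {ipaddress.ip_address("192.0.2.10")}
# AS blocking: every prefix announced by the blocked autonomous system.
BLOCKED_AS_PREFIXES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def is_blackholed(dst: str) -> bool:
    """Return True if traffic to dst would be dropped under either scheme."""
    addr = ipaddress.ip_address(dst)
    if addr in BLOCKED_IPS:
        return True
    return any(addr in net for net in BLOCKED_AS_PREFIXES)


print(is_blackholed("192.0.2.10"))     # the single blocked host
print(is_blackholed("192.0.2.11"))     # its neighbour is unaffected
print(is_blackholed("198.51.100.77"))  # any host inside the blocked AS is hit
```

The sketch shows why AS-level blocking is the heaviest option: every unrelated service inside the listed prefixes is cut off together with the target.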

Then there is blocking at the DPI level. Different depths of analysis are possible here, from simply inspecting the Host header in HTTP or the SNI field in HTTPS to quite sophisticated packet analysis. Naturally, the deeper you go, the harder it gets; this is the war of sword and shield, because more and more traffic is encrypted. Nevertheless, the United Kingdom and China are following this path.

And quite an interesting example is blocking by DNS. What does that mean? It means that, note, only the provider's DNS sends the user to a stub page. Everyone understands that the user can enter the four eights (8.8.8.8) as the DNS server on their host and everything will work; this is regarded as an acceptable compromise. Virtually the whole of continental Europe implements blocking in just this soft way.
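
The DNS approach can be illustrated with a small simulation. This is only a sketch under stated assumptions: the domain names, the addresses and both resolver functions are hypothetical, and no real DNS traffic is involved.

```python
# Hypothetical address of the provider's "this resource is blocked" page.
STUB_IP = "192.0.2.1"

# Hypothetical block list and "real" records that any resolver would return.
BLOCKED_DOMAINS = {"blocked.example"}
REAL_RECORDS = {
    "blocked.example": "203.0.113.5",
    "allowed.example": "203.0.113.6",
}


def provider_resolver(name: str):
    """The provider's resolver answers with the stub for listed names."""
    name = name.rstrip(".").lower()
    if name in BLOCKED_DOMAINS:
        return STUB_IP
    return REAL_RECORDS.get(name)


def public_resolver(name: str):
    """Any third-party resolver is unaffected by the provider's list."""
    return REAL_RECORDS.get(name.rstrip(".").lower())


print(provider_resolver("blocked.example"))  # stub address
print(public_resolver("blocked.example"))    # real address
```

Nothing on the data path is touched, which is why this method causes the least collateral damage and is also the easiest one to bypass.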

And the last axis is the scope of blocking.

This is either all users of the network or some separate groups. You can see how these groups are described: in the UK, for example, these are “default” user groups. That is, the user can ask for blocking to be lifted from groups of resources, which include, for example, all pornography, but by default access is disabled. This system is currently being implemented.

In the United States it is libraries and schools; filtering there is quite strict. In Croatia, Lithuania and Poland it is schools only. And France had a law on filtering in public places, on public Internet access: at home you can get unfiltered Internet, apart from the things I mentioned earlier, but in public places the Internet must be filtered.

That concludes my part.

Overview of common blocking methods

Yuri Kargapolov :

Alexey, thank you for setting out a coordinate system for what is happening with blocking around the world. In addition, we would like to present the situation in one particular country, the Russian Federation. We will look at it with the help of Philip Kulin, who will describe his experience in detail.

Overview of the technical situation with blocking in Russia, Philip Kulin, DIPHOST

Presentation video and presentation slides

The law establishes a register of prohibited sites, which is filled at the request of various bodies: the prosecutor's office, Consortium, the courts, right holders and so on. The law is constantly being extended; at almost every session where it is discussed, new state bodies appear that are allowed to propose entries. All of this goes to the supervisory authority, Roskomnadzor, which maintains the register of prohibited resources. Once a decision is made, Roskomnadzor provides the register to telecom operators (providers), and they use it for blocking. I will note that the law is not technical at all: it does not define the object of blocking. Providers effectively do it themselves; there are some recommendations, and beyond that you are free to improvise.

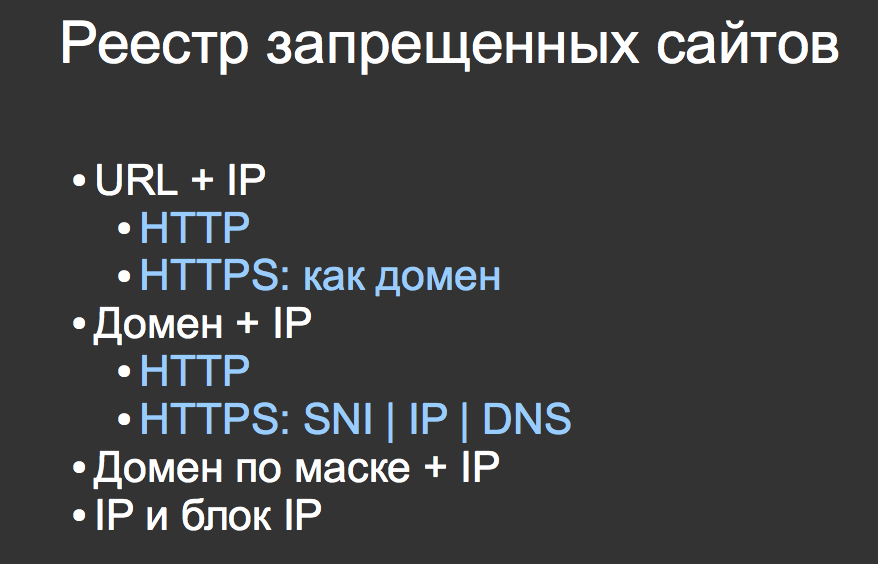

What is the registry of prohibited sites? It is a set of instructions that providers must carry out, at their own discretion or following the regulator's recommendations. Accordingly, what is blocked is a URL, a domain or a mask, that is, a pattern. There are also static entries that give both a domain name and an IP address. If the protocol is encrypted, there are options: either the provider understands Server Name Indication, that is, the TLS header, or it blocks by IP (there are such providers, and many of them), or it uses DNS. Here again I note the assumption that DNS is fully intercepted and the response is substituted when the name is in the registry.

There are also entries that block an IP address outright and entries that block whole IP ranges, though there are not many of those.

How do telecom operators usually filter against the registry today? By following the recommendations and for some reasons of their own.

The options are selective filtering of IP addresses from the registry, full interception of DNS with substitution of the response, or filtering the whole channel inline, “in the gap”, where the entire channel is visible and filtered. The first two methods can be combined for various reasons.

How is filtering controlled?

Since the end of 2016 the state has supplied telecom operators, free of charge, with a device called the “Auditor”. It is a box that strikingly resembles a RIPE Atlas probe and is built on exactly the same base. It is installed on the subscriber side, that is, it imitates a subscriber, and measures the quality of blocking by the number of passes. In other words, it simply counts how many times it managed to reach a banned site.

A side effect of this kind of control is that the probe obtains IP addresses itself. Before checking, it resolves the addresses of the resources in the registry, and these may or may not coincide with what is written in the registry. Again, DNS is under no obligation to give everyone the same IP address, and this is a problem for telecom operators. The second problem is that the operator is liable for letting access through, while anyone who controls a domain on the prohibited list can choose which IP addresses will suffer degraded connectivity if the blocking is not by IP.

At the beginning of the summer there were a lot of related problems, including blocked bank payments, because someone had pointed their domains at the IP addresses of processing centres.
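
The mechanism behind the bank payment incident can be sketched in a few lines. This is a simplified model, assuming an operator that resolves registry domains itself and blocks (or diverts for filtering) whatever addresses come back; the zone contents, the addresses and the function names are hypothetical.

```python
def build_ip_blocklist(registry_domains, resolve):
    """Resolve every registry domain and block whatever addresses come back."""
    blocked = set()
    for domain in registry_domains:
        blocked.update(resolve(domain))
    return blocked


# Hypothetical zone controlled by the owner of a domain listed in the registry.
zone = {"blocked.example": ["203.0.113.5"]}


def resolve(domain):
    return zone.get(domain, [])


PAYMENT_GATEWAY_IP = "198.51.100.20"  # hypothetical third-party service

before = build_ip_blocklist(["blocked.example"], resolve)

# The domain owner adds someone else's address to their own A records.
zone["blocked.example"] = ["203.0.113.5", PAYMENT_GATEWAY_IP]
after = build_ip_blocklist(["blocked.example"], resolve)

print(PAYMENT_GATEWAY_IP in before, PAYMENT_GATEWAY_IP in after)
```

The operator never made a decision about the payment gateway; the decision was effectively delegated to whoever edits the DNS zone of a listed domain.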

What do we see in the registry in numbers?

There are a lot of numbers, but the main point of interest is that there are more than 90,000 entries today. They change every day: when I looked last night there were 92,000, and today there are probably 93,000-94,000. That is all registry entries.

Of these, blocking by mask is the most interesting: this is when the provider has to chase each domain and match it against a pattern. About 1,000 domains are on the run; Roskomnadzor noticed this and applied mask blocking to them. Blocking by IP range accounts for eight entries, and those entries block Blackberry services.
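
Matching a hostname against a mask can be sketched as follows. The mask syntax (`*.example.com`) and the rule that a mask does not cover the bare domain are assumptions made for illustration; the talk does not specify the exact format used in the registry.

```python
def matches_mask(hostname: str, mask: str) -> bool:
    """Return True if hostname falls under a mask such as '*.example.com'.

    Assumed semantics: '*.' matches one or more leading labels; a mask
    without a wildcard is an exact match.
    """
    hostname = hostname.rstrip(".").lower()
    mask = mask.rstrip(".").lower()
    if not mask.startswith("*."):
        return hostname == mask
    suffix = mask[1:]  # ".example.com"
    return hostname.endswith(suffix) and len(hostname) > len(suffix)


print(matches_mask("mirror42.example.com", "*.example.com"))
print(matches_mask("example.com", "*.example.com"))
print(matches_mask("notexample.com", "*.example.com"))
```

Comparing against the suffix with its leading dot is what keeps look-alike names such as `notexample.com` from being caught by accident.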

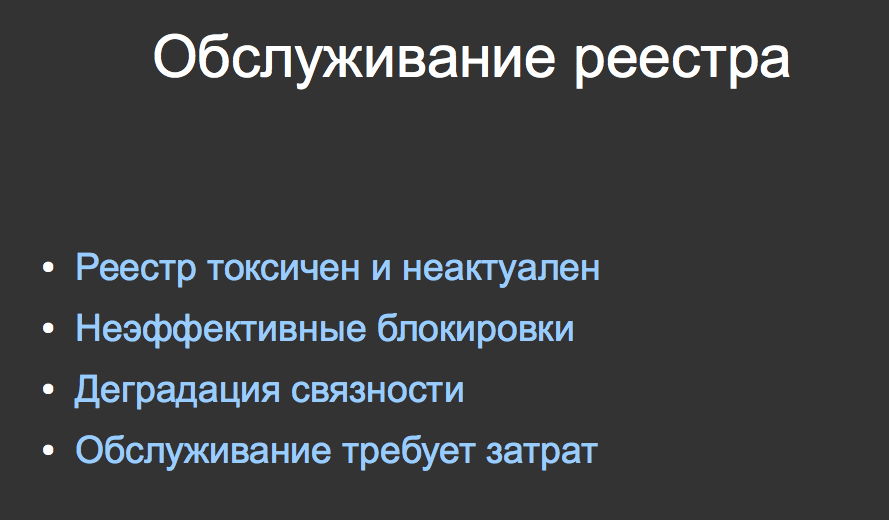

Registry toxicity is a measure of how much nonsense it contains. Take the registry redundancy of 13%: what is that? There are blocks on domains and there are blocks on URLs. If a domain is blocked, keeping URL entries for it is pointless, because the provider will no longer look at them; it will block the whole domain. So 13% of the entries in the registry are superfluous, and it is plumper than it needs to be.
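
One way to compute such a redundancy figure is sketched below. The entry format (plain domain strings and full URLs) and the function name are assumptions; the speaker does not describe his actual tooling, and the sample data is invented.

```python
from urllib.parse import urlsplit


def redundancy(domain_entries, url_entries):
    """Share of all entries that are URL entries already covered by a domain entry."""
    domains = {d.lower() for d in domain_entries}
    # urlsplit(...).hostname is already lower-cased by the standard library.
    redundant = [u for u in url_entries if (urlsplit(u).hostname or "") in domains]
    total = len(domains) + len(url_entries)
    return len(redundant) / total if total else 0.0


# Invented sample: one domain entry and three URL entries, two of them covered.
sample_domains = ["blocked.example"]
sample_urls = [
    "http://blocked.example/page-a",
    "http://BLOCKED.example/page-b",
    "http://other.example/page-c",
]
print(redundancy(sample_domains, sample_urls))
```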

There are 60,000 unique IP addresses in the registry, but the truly unique addresses that the domains actually resolve to number 35,000. The registry is maintained extremely carelessly in this respect, and it contains a lot of unnecessary information that does not correspond to reality.

Another interesting fact is that the registry contains URLs that do not conform to any standard. Someone typed in a URL by hand, and it now has to be interpreted somehow, or not at all. There are two such URLs in the registry, but they have been sitting there for half a year and, I think, have taken up permanent residence.

The relevance of the registry: 51% of it is untrue. The IP addresses do not correspond to anything, so more than half of the registry is pure padding.

Any of you, by bribing me, stealing my laptop or making me an offer I cannot refuse, can get a list of registry domains that are available for registration and use it for your own purposes, blocking arbitrary resources in Russia. There are more than two thousand such domains; I counted them just yesterday, especially for this talk.

Are there domains that escape blocking? Yes, there are, more than a thousand of them. But when I looked at what these domains are and why they run away, it turned out that 30% of them are CNAME records pointing to names in certain CDNs with a TTL of 60 seconds. So this is not deliberate evasion; some CDNs simply work this way for their own reasons.

And, again, this creates problems for telecom operators, who are forced to chase these domains. Nobody knows about it; I am the first person to talk about it publicly.

So, the conclusions. The registry is toxic and out of date. If anyone wants blocking to be efficient, efficiency suffers. There is a problem with degraded connectivity, as the experience with bank payments shows. And maintaining the registry takes effort, time and money, which, unfortunately, the supervisory authority apparently does not have.

That was the situation as it stands; how is it developing right now? A few days before ENOG, a special department was created under the supervisory authority to deal with blocking and access restriction, so funds are being spent on it. And there is now an active recommendation to intercept all DNS traffic and substitute the response whenever the name matches the registry.

I will say straight away that this decision is doubtful: it will simply lead to a different method being used. Everyone is aware of that, including the supervisory authority, so DPI across the full width of the channel is being pushed, so that the list of IP addresses in the registry does not have to be maintained and updated. That is, they consider it difficult and shift the maintenance burden onto providers. This has already been stated in the published drafts of the regulatory document.

On that note, I pass the microphone to the next speaker.

Yuri Kargapolov :


Deep packet inspection problems in transport networks, Artem ximaera Gavrichenkov, Qrator Labs

What we will discuss in this talk are the questions that arise when developing and deploying DPI solutions. The aim is to arrive at something like a checklist for DPI equipment that is to be deployed in a transit or transport network.

This talk is built on experience. What experience? For eight years now my team and I have been building a global anycast network whose task, a typical task for deep packet inspection, is protection against DDoS attacks.

The global network is a set of points of presence around the world, from North America to Southeast Asia via the CIS and Europe, where each point of presence consists of hardware like this, hardware that can be bought on the market. There is nothing custom in the hardware.

What is custom is the software, the DPI solution itself, which is developed entirely by us. This process has been going on for eight years: eight years of design, from choosing hardware to tuning software. That is, design, research and deployment, including on operators' networks and for enterprise customers.

The main purpose of the solution is availability, in every sense: traffic analysis, and monitoring of the availability and performance of various services. Ultimately, it is a solution for protection against DDoS attacks.

What does a DDoS protection solution look like today?

It is analysis, to return to the term deep packet inspection, of traffic at all levels: from primitive analysis by, say, IP addresses and ports, through tracking traffic flows, sessions and connections, up to analysing the behaviour of each individual user with various big data tools.

What have we learned from building such a solution?

Many of you have probably heard the term in the title of this slide. It goes “packet filtering at the seventh level”, and you can hear it in this form in many places. The phrase embeds a certain hypothesis, one that is also built into the architecture of some solutions.

The hypothesis is this: per-packet analysis, that is, analysis of each individual packet, is sufficient to detect problems with traffic at any level, from the third to the seventh. This hypothesis arose, first of all, because it is very convenient to build on.

You need to understand that when you build DPI solutions you run into a number of problems. First, if you want to reassemble a TCP session, or parse a TLS session and analyse something inside it, you need to allocate a lot of RAM, and looking up the connection for each packet is a hard task in itself. Computational complexity grows, and this has to be done for every packet passing by.
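
The memory cost of reassembly can be seen even in a toy model. The sketch below is a deliberately simplified illustration, not how any particular DPI product works: it ignores retransmissions, overlapping segments and sequence number wraparound, and the class and method names are hypothetical.

```python
from collections import defaultdict


class StreamReassembler:
    """Keeps out-of-order TCP payloads per flow until they can be stitched together."""

    def __init__(self):
        # flow key -> {relative offset: payload}; this is the per-connection
        # state that has to live in RAM for every tracked flow.
        self.pending = defaultdict(dict)
        self.next_offset = defaultdict(int)
        self.streams = defaultdict(bytes)

    def add_segment(self, flow, offset, payload):
        """Store a segment and return the contiguous stream assembled so far."""
        self.pending[flow][offset] = payload
        # Drain every buffered segment that is now contiguous with the stream.
        while self.next_offset[flow] in self.pending[flow]:
            chunk = self.pending[flow].pop(self.next_offset[flow])
            self.streams[flow] += chunk
            self.next_offset[flow] += len(chunk)
        return self.streams[flow]

    def buffered_bytes(self):
        """Bytes held only because earlier segments have not arrived yet."""
        return sum(len(p) for segs in self.pending.values() for p in segs.values())


reassembler = StreamReassembler()
flow = ("10.0.0.1", 12345, "192.0.2.1", 80)  # hypothetical 4-tuple
reassembler.add_segment(flow, 5, b"WORLD")   # arrives first, must be buffered
print(reassembler.buffered_bytes())
print(reassembler.add_segment(flow, 0, b"HELLO"))
```

Every tracked flow needs a dictionary lookup and some buffered state per packet, which is exactly the cost that a purely per-packet design avoids.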

Further, network engineers, of whom there are many in the hall and who will keep me honest, do not like solutions that are placed in the network inline, “in the gap” as they say, where all traffic is forced through a security solution. Network engineers know very well that such solutions are unreliable in most cases. The network engineer's main job is continuous availability: there is an SLA, a responsibility to customers for uninterrupted service, and a security solution hurts the availability of the service while it reboots, while it has problems, and so on.

Therefore, in general, a security solution, and a DPI solution in particular, works on a copy of the traffic somewhere on a mirror port. And ideally it would limit itself to NetFlow/IPFIX and do nothing else.

This approach is very convenient to build on. The problem is that the network is layered for a reason. It is not someone's whim; the layered structure is crucial to how the network operates. The network works layer by layer, and the packet is the data unit of the third layer, the IP layer. Already at the TCP layer there is no concept of a “packet”; there is the “segment”, and these are different things, as anyone who has dealt with MSS clamping knows.

In fact, at the TCP layer we have sessions, that is, connections; above them are TLS sessions; and higher still is simply a layer-seven data stream. So ignoring the layered structure of the network makes a solution theoretically vulnerable. It made such solutions vulnerable even when everything on the Internet was transmitted in clear text. But why only “theoretically”?

Three months ago a repository with the telling name GoodbyeDPI appeared on GitHub. It contains software intended for installation on a client Windows machine, that is, on the machine of any network user, and it uses a number of clever but ultimately simple techniques, such as:

- Analysis of the IP Identification field

- TCP fragmentation

- Some tricks with HTTP headers, including playing with case, that is, changing the case of letters in the headers, and the like.

That is, these are very simple techniques, and they turned out to be enough, not even the whole set but one or two in combination, to circumvent the absolute majority of DPI solutions already deployed on operators' networks.
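
Why such simple tricks defeat per-packet inspection can be shown with a toy filter. This is an illustrative sketch, not GoodbyeDPI's code: the blocked host, the filter functions and the split point are assumptions chosen for the example.

```python
BLOCKED_HOST = b"blocked.example"  # hypothetical registry entry


def naive_packet_filter(packet: bytes) -> bool:
    """Per-packet check: fires only if one packet carries the exact Host line."""
    return b"Host: " + BLOCKED_HOST in packet


def stream_aware_filter(segments) -> bool:
    """Session-level check: reassemble first, then parse headers case-insensitively."""
    stream = b"".join(segments)
    for line in stream.split(b"\r\n")[1:]:
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"host" and value.strip().lower() == BLOCKED_HOST:
            return True
    return False


request = b"GET / HTTP/1.1\r\nHost: blocked.example\r\n\r\n"

# Technique 1: split the request so the Host header straddles two TCP segments.
segments = [request[:20], request[20:]]

# Technique 2: change the case of the header name; HTTP header names are
# case-insensitive, so a server still accepts the request.
mixed_case = request.replace(b"Host:", b"hoSt:")

print(naive_packet_filter(request))                   # caught
print(any(naive_packet_filter(s) for s in segments))  # missed
print(naive_packet_filter(mixed_case))                # missed
print(stream_aware_filter(segments), stream_aware_filter([mixed_case]))
```

The session-level variant catches both cases, but only because it keeps per-connection state and actually parses the protocol, which is the expensive part.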

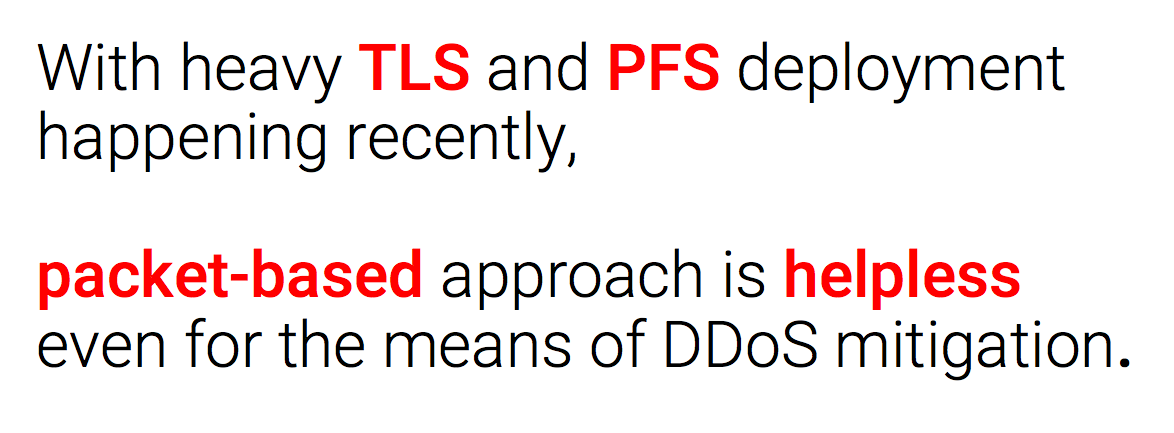

And this is when we are talking about something sent in cleartext, such as HTTP headers. But it is now 2017, and we have HTTPS.

According to statistics, since the end of 2015 the free TLS certificate provider Let's Encrypt has issued about 60 million certificates.

According to the Firefox telemetry published by the Mozilla Foundation, more than 60%, close to two-thirds, of all pages visited by users of this browser are loaded exclusively over HTTPS.

So the concept of packet filtering at the seventh level was vulnerable even when we had cleartext. With the current degree of TLS deployment, and with mechanisms like perfect forward secrecy, per-packet traffic analysis is useless even for the purpose we deal with, protection against DDoS attacks.

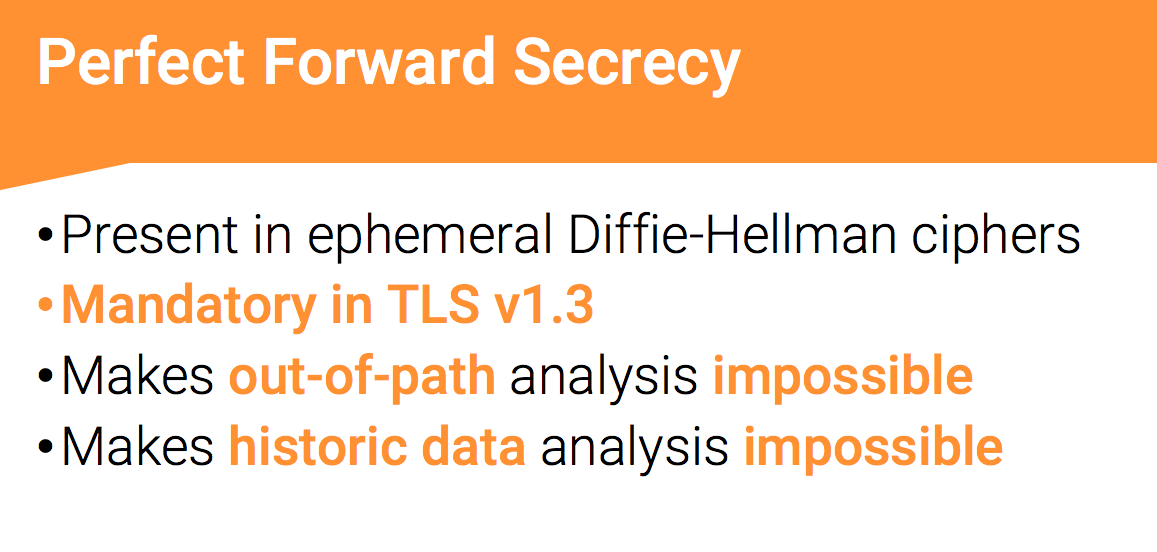

By the way, what is PFS? Perfect forward secrecy is a property provided by the ephemeral variants of Diffie-Hellman key exchange. It works today; it worked in SSL, a protocol that is no longer with us; it works in TLS; and it is mandatory in the new version, TLS 1.3, which is now being prepared for release.

The essence of perfect forward secrecy is that an established TLS connection that uses, for example, ephemeral Diffie-Hellman cannot be decrypted even with the server's private key. It cannot be done by looking at a copy of the traffic: the client and the server negotiate the session keys between themselves, and the private key is used only to sign that exchange, so nothing is actually encrypted with it and nothing can be decrypted with it. This rules out the attractive scenario in which we record traffic for three months or three years and later decide to decrypt it and see what was there. Thanks to perfect forward secrecy, that is impossible.
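
The idea can be illustrated with a toy ephemeral Diffie-Hellman exchange. This is a sketch for intuition only: the modulus, the generator and the function names are assumptions, the parameters are far too weak for real use, and real TLS uses standardized groups or elliptic curves plus a signature over the exchange.

```python
import hashlib
import secrets

# Toy parameters, for illustration only.
P = 2**127 - 1  # a Mersenne prime, much too small for real cryptography
G = 3


def ephemeral_keypair():
    """Generate a fresh secret that exists only for one session."""
    private = secrets.randbelow(P - 2) + 1
    return private, pow(G, private, P)


def session_key(own_private, peer_public):
    """Derive the session key from our ephemeral secret and the peer's public value."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).hexdigest()


def handshake():
    client_private, client_public = ephemeral_keypair()
    server_private, server_public = ephemeral_keypair()
    # In TLS the server's long-term private key would only sign server_public
    # at this point; it never enters the key derivation below.
    client_key = session_key(client_private, server_public)
    server_key = session_key(server_private, client_public)
    return client_key, server_key


client_key, server_key = handshake()
print(client_key == server_key)
```

An observer with a copy of the traffic sees only the two public values, and once both sides discard their ephemeral secrets, the long-term private key is of no help in recovering the session key.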

And along the way, perfect forward secrecy breaks a number of DPI solutions that are used for various security purposes, including at the enterprise level.

According to our statistics, which I collected a year ago, 70% of HTTPS requests in our network use PFS. So in networks where the DPI does not take this into account, 70% of HTTPS requests pass without analysis. And it is not just 70% of requests: what matters is that 60% of legitimate users use perfect forward secrecy and Diffie-Hellman, and so do 90% of bots, because attackers know perfectly well that ciphers with PFS support greatly increase the chance of getting around a poorly or incorrectly implemented DPI solution.

What tasks of DPI-solutions exist at the moment?

Well, I have already said enough about DDoS protection and will not return to it anymore. What other tasks are there? This is, in general, quality of service, that is, prioritization of one or another traffic flow, based on their parameters, from Inspection. In some form of shaping. Next is parental control, that is, a consensus restriction of access to content. Further, this is the introduction of advertising on the networks of operators for their subscribers, instead of the advertising that would be available to them if they were not customers of this operator. Next is the enforcement of copyright, that is, blocking of content that is protected by copyright and is illegally distributed. And finally, Lawful Interception.

All of this has to be done on the transport network, and it has to be taken into account that analysis at the session level is now required. As a result, a DPI solution has to reckon with computational complexity, and for it that complexity is extremely high.

A simple example that I will not dwell on; I will just give a link. Very many DPI solutions offer such convenient functionality as matching every packet against a regular expression. Very convenient: you write a regular expression and everything is blocked, everything works.

Regular expressions have a number of problem areas. One of them is shown on the slide along with a link, and the scale of the problem is clear from its name: catastrophic backtracking. With a certain kind of regular expression and a specific input string, matching takes quadratic time: the longer the string, the longer the matching runs, growing quadratically.

Naturally, a number of the DPI solutions that use this do not issue any warning when a complex regular expression is entered. And at run time we face the following dilemma: either we spend up to a second of CPU time matching individual packets or flows, which means the connection speed, the traffic speed, drops dramatically, or at some point we stop analyzing the packet and forward it. It depends on the task in front of us.
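
The quadratic growth described above can be seen in a minimal sketch. It assumes a hand-written naive backtracking search for the hypothetical pattern `a*b` and counts character checks instead of measuring time; it is not the engine of any particular DPI product, and other patterns (for example, nested quantifiers) can degrade far worse than quadratically.

```python
def naive_search_steps(text):
    """Count the character checks a naive backtracking search for a*b performs."""
    steps = 0
    for start in range(len(text) + 1):            # the search retries at every offset
        i = start
        while i < len(text) and text[i] == "a":   # greedy a* consumes the run of 'a'
            steps += 1
            i += 1
        for j in range(i, start - 1, -1):         # then backtrack, looking for 'b'
            steps += 1
            if j < len(text) and text[j] == "b":
                return steps                      # match found, stop early
    return steps                                  # no match at any offset

# A payload of n letters 'a' with no 'b' never matches and costs (n + 1)**2 checks.
for n in (10, 100, 1000):
    print(n, naive_search_steps("a" * n))
```

A tenfold longer payload costs roughly a hundred times more work, which is exactly the choice between slowing traffic down and giving up on the packet.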

What does this lead us to? To the fact that many believe a DPI solution is a kind of silver bullet. It sounds very good: deep packet inspection. Like a refrigerator: you buy it, install it, and it solves the problem; maybe it needs some tuning, but in general it is able to do its job. In fact, it is not so.

Today, DPI is not a product line, not a classification, but only a general description of a whole range of solutions that solve completely diverse tasks, each of which is intended to solve one, maximum two tasks. The more tasks you put on it, the worse it copes with them.

And, of course, no single solution copes well with every DPI task. If it works well for parental control, it protects poorly against DDoS attacks.

Moreover, even if it is designed to solve one problem, there is always a trade-off between the speed of packet processing (as in the case of catastrophic backtracking) and functionality. Either we get the functionality and the speed suffers, or we prioritize the speed and the functionality suffers.

Another problem with DPI solutions is that the network, especially a transit network, is currently assumed to be transparent for applications. Applications count on this transparency: voice and video applications, game protocols, of which three new ones appear every month, overlay networks, data transfer within enterprise networks. The modern web also expects the network to work as written in the RFCs, without any strange, non-standardized analysis inside the network. The protocols HTTP/2, MPTCP and QUIC all count on it.

Moreover, the modern network deploys cryptographic protocols, TLS 1.3, DNSSEC and others, which all rely on the transit network being transparent. Once DPI is deployed, this is no longer the case, and that does not pass without consequences.

The result is that a number of applications suffer from these problems. This is an Eric Rescorla slide from the IETF meeting in Prague. During the test deployment of TLS 1.3 on the Cloudflare network, if I am not mistaken, serious problems arose: from 1% to 10% of TLS 1.3 traffic was lost because of "some" middleboxes that do not know the protocol and do not support it. So TLS 1.3 is badly hurt by network opacity, which affects the security of the network and of users in general. TLS 1.3 is still being finalized; some applications are affected, while others will adapt.

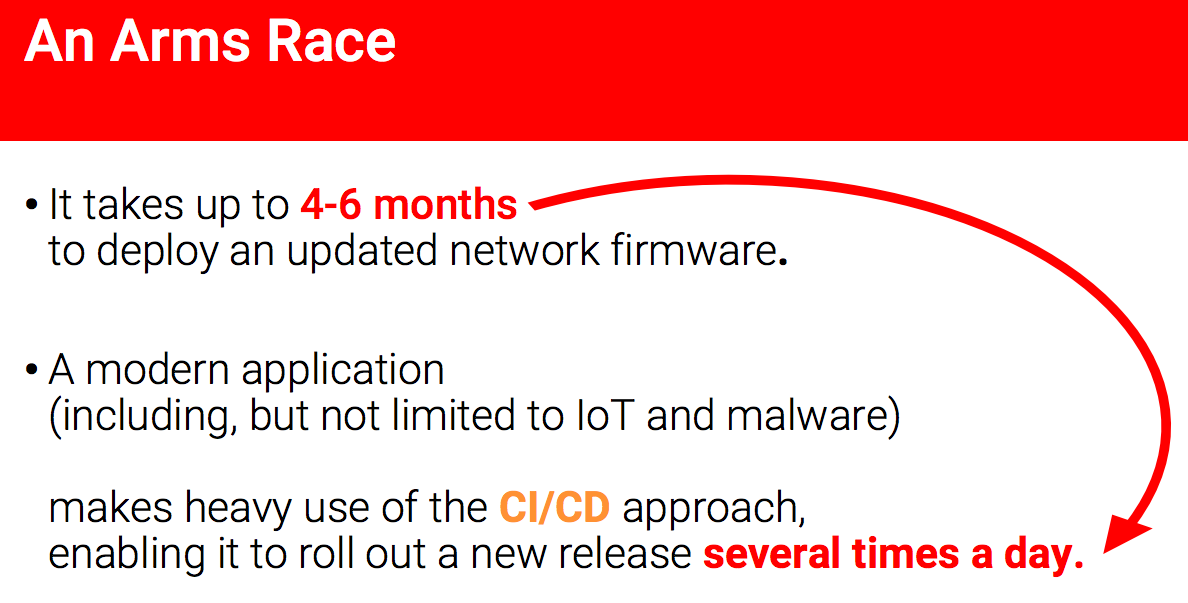

How will this adaptation happen? At the security roundtable held at the previous ENOG in St. Petersburg, we learned that deploying updated firmware, even when it is a security update, takes 4 to 6 months from the moment the vulnerability is discovered to the moment the patch is deployed. What is that time spent on?

First, the vendor (Cisco, in the example from Digital Security) spends 2 to 3 months writing the fix. In practice this cannot be accelerated: I have taken part in such a process and, unfortunately, it cannot be brought below two months. Then it takes another 2 to 3 months, up to six in total, to deploy the fix on the network, because, as I have already said, the main thing for the operator is continuity of service. Any update can adversely affect the equipment; it needs a reboot and a maintenance window, and nobody wants to get involved with that. In total we have six months.

At the same time, if we have a DPI solution, it must adapt to how the network evolves at layer seven. A modern application, whether gaming, voice over IP, a mobile application, IoT or, for that matter, malware, uses modern CI and CD practices, which allow a new release to be rolled out several times a day.

What does this lead to? To the fact that, for a DPI solution, failing to support some malware or some type of traffic may be treated not as a vulnerability but as new functionality, and may require an equipment upgrade. Meanwhile, the resources that try to bypass the blocking will roll out updates not once every 6 months but several times a day.

What is this? It is an arms race that the vendor or the deployment of a DPI solution will inevitably lose.

What do we arrive at? At the fact that a DPI solution that examines each packet separately is not enough to solve these problems in general. What it does work well for is satisfying the Pareto principle, the 80/20 law. If you accept that you will spend 20% of the effort on solving 80% of the problem, this is the solution for you. Its areas of application are parental control, quality of service, the same advertising and, in general, blocking traffic by the kind of patterns that Alexey spoke about earlier.

Accordingly, this comes with the full understanding that a user who wants to get around it will get around it. If the task is to block 100% of certain content, then in a situation where we control neither the client (in terms of network interaction) nor the server, we need to understand that the client and the server can play an arbitrary game. In particular, they can pretend to establish a connection, making the DPI solution allocate resources to a session that they then forget about. Neither the client nor the server spends resources on it, but the DPI solution does not know how they behave, so it keeps memory allocated; with a large number of such clients the DPI solution can simply fail. If it is installed inline, the failure at that moment hits the entire network, which many engineers would like to avoid.
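
A minimal sketch of why per-session state is a liability, assuming a hypothetical `SessionTable` class that is not taken from any vendor's design. Bounding the table and expiring idle entries keeps memory under control, but a flood of phantom sessions then pushes out the state of legitimate flows:

```python
from collections import OrderedDict

class SessionTable:
    """Bounded flow-state table: flow id -> last-seen time, oldest entry first."""

    def __init__(self, max_sessions, idle_timeout):
        self.max_sessions = max_sessions
        self.idle_timeout = idle_timeout
        self.sessions = OrderedDict()

    def expire(self, now):
        """Drop flows that have been idle for idle_timeout or longer."""
        while self.sessions:
            flow_id, last_seen = next(iter(self.sessions.items()))
            if now - last_seen < self.idle_timeout:
                break
            self.sessions.popitem(last=False)

    def touch(self, flow_id, now):
        """Record a packet for flow_id, evicting the stalest flow when full."""
        self.expire(now)
        if flow_id in self.sessions:
            self.sessions.move_to_end(flow_id)
        elif len(self.sessions) >= self.max_sessions:
            self.sessions.popitem(last=False)
        self.sessions[flow_id] = now

table = SessionTable(max_sessions=3, idle_timeout=10)
table.touch("legitimate-flow", now=0)
for i in range(5):                      # five connections that are opened and abandoned
    table.touch(("phantom", i), now=1)

legitimate_survived = "legitimate-flow" in table.sessions
```

Here memory stays bounded at three entries, but `legitimate_survived` is false: the inspection state of the real flow was evicted to make room for sessions nobody is using. Without the bound, the table would simply grow until the device runs out of memory.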

On the question of security: another point to consider when choosing and deploying a DPI solution is that such a solution is complex. It is difficult to design, difficult to build, and not easy to deploy either. As for how different vendors approach security, I am not ready to talk about that now; I do not know how vendors, for example, update their solutions or carry out security audits and analysis.

What we can say for sure is that there are solutions in the world, one of which is, for example, FinFisher, whose datasheet states that it is intended to be installed on an operator's network through various “technological holes”, without the operator's knowledge, in order to analyze passing traffic. This solution already exists. I do not have details on how it works, but what matters is that people involved in such things, from intruders to certain companies, know perfectly well that there can be complex things on the operator's network.

And if these things begin to be deployed across the whole network, people will look at them and look for holes in them. If it is just some kind of surveillance, that may not matter to the operator, but if intruders gain control over a DPI solution deployed inline across the operator's entire network, the consequences are hard to imagine. These are consequences on the scale of a Hollywood film or a futurological congress: what happens, what they can do, is most likely almost anything.

What does all this lead us to? To the fact that network transparency and security are fundamental in this context. And if a network community or a network organization wants to install non-transparent solutions on an IP transport network, the main task in deploying such a solution is, first of all, standardization: going to the bodies, communities and committees that do standardization, so that all participants in the network clearly understand what is happening in the middle of it, in transit. These are RIPE, IETF, ICANN, IEEE and other communities that reconcile the requirements of all network participants, from game protocols to telecom operators, whose requirements are often not taken into account when DPI solutions are deployed. They need to be taken into account; if you want to do this, you need to do it seriously.

Because the only alternative is unreliable IP transport, applications that bypass unreliable IP transport, breaking it along the way, and the instability of the critical network infrastructure as a whole.

Prospects for using content blocking methods

Yuri Kargapolov:

Thank you, Artem. Your report added optimism to our discussion. We planned the panel so that Anton Baskov would now give us a vision of the future: what technological solutions may be deployed. Let's try to look at that future together.

Prospects for content blocking in the context of further development of Internet technologies - Anton Baskov, AB Architecture Bureau

Presentation video and presentation slides

Suppose that the situation has become better over time. The registry is no longer 100,000 entries, and its support is at a more or less normal level. Suppose we have found some kind of solution that conditionally works in the current situation. What's next? How will the situation change over time?

When developing approaches to blocking illegal content, we always need to choose the right balance between efficiency, cost, and the additional collateral damage that blockages bring on the network. What remains outside of the conditionally simple methods for implementing locks? What situations should we consider?

For example, we have to take into account cases when content can hide, that is, change addresses and identifiers or do strange redirects; there are many options. The target may be hidden, access may go through hidden networks, or the content may be distributed, with part of it sitting inside a single operator's network. In that case such traffic is difficult to block dynamically: it has to be sent to a filtering point and back.

We also need to consider the risks associated with creating global solutions within a country. For example, false positives, sometimes specifically induced. For example, you can take from Philip Kulin a list of these wonderful 2000 domains that he talked about and use them to block critical infrastructure. You can also, using the fact that the number of solutions that deal with locks is limited, find vulnerabilities in one or several solutions and get terrifying problems on individual network segments, or in principle block critical infrastructure.

I would also like to note separately that what matters is not only how we block content, but what exactly we block, what exactly we mean by the target. To understand the target of blocking, we need to look at live traffic and user behavior, and also to have a distributed Internet monitoring infrastructure, for example, one similar in architecture to RIPE Atlas.

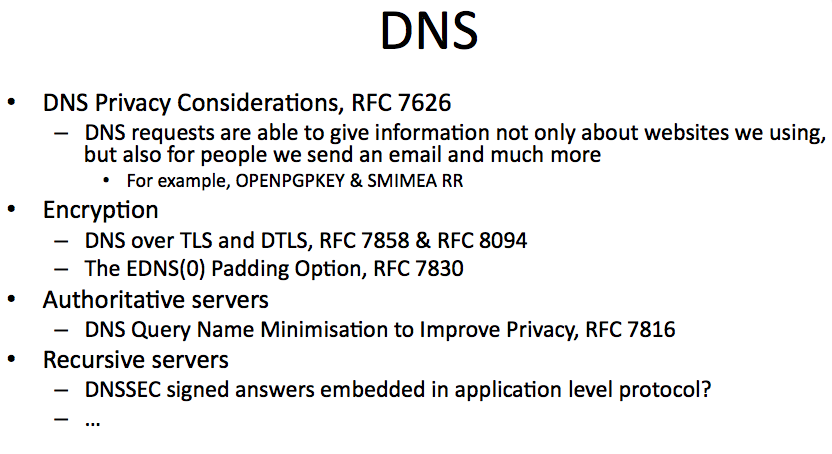

Privacy and pervasive monitoring is a long-standing topic within the IETF, and it is inextricably linked with blocking. At the same time, while there are currently one and a half documents about blocking, the topic of privacy and monitoring, judging by the number of documents, is considered more relevant. This trend appeared around 2011 and has been actively developing up to the present.

I would like to give a brief overview of individual documents and decisions in this area.

Many solutions that block traffic over HTTPS are based on SNI (Server Name Indication). Currently, SNI means the host name is transferred in plaintext, after which the appropriate key is selected based on the certificates presented. At this level, we can determine which particular resource is being accessed. It is on the basis of SNI that targeted filtering of resources working on top of TLS is performed. The above document describes two methods for encrypting SNI in order to rule out its analysis, including after the fact: having recorded the traffic, we will not be able to find out which resource the user of interest was accessing.
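
To show how little a filter needs in order to read a plaintext SNI, here is a minimal sketch of a parser. It assumes the whole ClientHello arrives in a single, unfragmented TLS record; `extract_sni` and `build_client_hello` are hypothetical names, and the builder exists only to produce sample input.

```python
import struct

def extract_sni(record):
    """Return the host name from a plaintext ClientHello, or None if absent."""
    try:
        if len(record) < 5 or record[0] != 0x16:   # 0x16 = TLS handshake record
            return None
        pos = 5                                    # skip the 5-byte record header
        if record[pos] != 0x01:                    # 0x01 = ClientHello
            return None
        pos += 4                                   # handshake type + 3-byte length
        pos += 2 + 32                              # client version + random
        pos += 1 + record[pos]                     # session id
        (suites_len,) = struct.unpack_from("!H", record, pos)
        pos += 2 + suites_len                      # cipher suites
        pos += 1 + record[pos]                     # compression methods
        (ext_total,) = struct.unpack_from("!H", record, pos)
        pos += 2
        end = pos + ext_total
        while pos + 4 <= end:
            ext_type, ext_len = struct.unpack_from("!HH", record, pos)
            pos += 4
            if ext_type == 0:                      # extension 0 = server_name
                # 2-byte list length, 1-byte name type, 2-byte name length, name
                (name_len,) = struct.unpack_from("!H", record, pos + 3)
                if record[pos + 2] == 0:           # name type 0 = host_name
                    return record[pos + 5:pos + 5 + name_len].decode("ascii")
            pos += ext_len
    except (IndexError, struct.error, UnicodeDecodeError):
        return None                                # truncated or malformed input
    return None

def build_client_hello(hostname):
    """Build a minimal ClientHello carrying only an SNI extension (test input)."""
    name = hostname.encode("ascii")
    entry = b"\x00" + struct.pack("!H", len(name)) + name
    sni = struct.pack("!H", len(entry)) + entry
    extensions = struct.pack("!HH", 0, len(sni)) + sni
    body = (b"\x03\x03" + bytes(32) + b"\x00" + struct.pack("!H", 2) + b"\x13\x01"
            + b"\x01\x00" + struct.pack("!H", len(extensions)) + extensions)
    handshake = b"\x01" + len(body).to_bytes(3, "big") + body
    return b"\x16\x03\x01" + struct.pack("!H", len(handshake)) + handshake

print(extract_sni(build_client_hello("example.com")))
```

Once the name is encrypted, a parser of this kind has nothing to read, which is why SNI-based filtering stops working.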

Interestingly, the document considers an approach in which to access the target resource we must turn to the specified intermediate node, which will then transfer the encrypted traffic to the required host and, in fact, serves only as one of the links in the chain, the traffic transmission point. Thus, without additional analysis using indirect data, we will not be able to find out what resources are actually hidden behind the intermediate node.

If we talk about DNS, then, despite the "image" of an open database, information about user requests can also be extremely useful for pervasive monitoring. It is not only easily accessible information about the resources we visit, but also information about who we communicate with. When you send a letter, individual modern clients try to get additional information about the recipient, for example, by sending a request with a hash of an email address.

What has been done to rule out DNS analysis where possible? First, there is DNS encryption over TLS and DTLS, so that it is impossible to tell, even indirectly from individual patterns, which resource was accessed. Second, there is the Query Name Minimisation approach, whose main task is to minimize the amount of information the resolver sends to intermediate authoritative servers. Currently, recursive servers pass the user's query on to intermediate authoritative servers, including the root servers, without any changes, which reveals which names users ask about and with what queries. At the moment this information is readily available for analysis.
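
The difference can be sketched as follows. The function name `names_sent` is hypothetical, and the sketch assumes a zone cut at every label, which real DNS does not guarantee; it only models which name each authoritative server gets to see.

```python
def names_sent(qname, minimise):
    """List (zone asked, name revealed to it) pairs while resolving qname."""
    labels = qname.rstrip(".").split(".")
    full_name = ".".join(labels) + "."
    seen = []
    for depth in range(len(labels)):
        # Zone answering at this step: ".", then "com.", then "example.com.", ...
        zone = "." if depth == 0 else ".".join(labels[-depth:]) + "."
        if minimise:
            # Reveal only one label more than the zone already knows about.
            asked = ".".join(labels[-(depth + 1):]) + "."
        else:
            # Classic behaviour: every server sees the full query name.
            asked = full_name
        seen.append((zone, asked))
    return seen

for zone, asked in names_sent("www.example.com", minimise=True):
    print(f"{zone!r} is asked about {asked!r}")
```

With minimisation the root servers learn only that someone is interested in `com.`, while without it they see `www.example.com.` in full.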

At the level of the recursive server, everything remains the same for now: although the channel is encrypted, the recursive server itself knows about all user requests. But this is also changing, as there are plans to include DNS information signed with DNSSEC in some application-level protocols. Suppose we open a page of a site, and the server, knowing that requests for external resources will follow, offers us signed answers in advance. We do not have to go to the DNS; we can get the necessary information within the current connection and use it further, thereby minimizing calls to the recursive server. Accordingly, the load on the recursive server is reduced, and part of the requests from which one could tell where the user went is served at the application level instead.

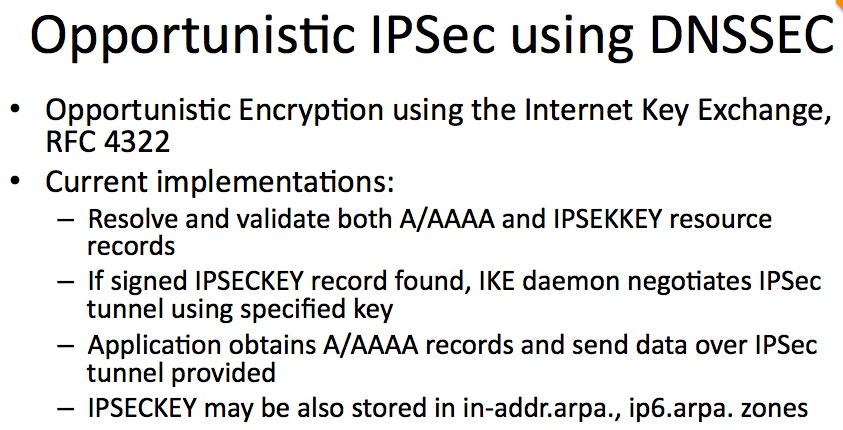

There is an absolutely awesome thing called Opportunistic IPSec. It was developed fairly long ago, and modern implementations already differ from the original RFC. Here a DNSSEC-signed record containing a key acts as the trigger, and all connections between network nodes are encrypted at the transport level. Accordingly, intermediate nodes will be unable not only to get access to the data, but even to tell directly which protocols we use with the end node. In addition, this mechanism makes it possible to protect unencrypted connections of legacy protocols.

If earlier we thought that “good resources” that have nothing to hide, including those that do not contain prohibited content, work over HTTP, that is, in plaintext, this is no longer the case. With software updates for browsers and HTTP servers, a mechanism called opportunistic security appears. Using the alternative services mechanism, the user is more or less forcibly redirected to HTTP/2 with TLS support. And the traffic that was originally transmitted as plaintext HTTP will soon become encrypted. Firefox has supported this technology since 2015. Judging by the bugtracker, Chrome will also support opportunistic security in the near future (within 1-2 months). It remains to wait for servers to support this mechanism “by default”, and suddenly most of the unencrypted traffic will be encrypted.

So far we have talked about technologies that in one way or another affect the ability to block access to information, but you need to understand that changes that prevent spying on users are also happening in other protocols. E-mail is one example. Previously, connections between mail servers used the opportunistic security mechanism; now there is a trigger in the DNS that states explicitly when a TLS connection must be used and which certificates should be trusted.

The same applies to the messages transmitted via SMTP servers: now you can easily find out when an encrypted message can be sent to the recipient. Special filters have already been developed that can be installed on an organization's mail servers and that, invisibly to the user, will encrypt all outgoing mail where possible.

What will be the effect of the implementation of all the above protocols related to encryption? The effectiveness of locks, that is, the ability to detect access to forbidden content, will decrease, the accuracy of locks will also fall due to the inability to distinguish good content from bad. Collateral damage will only increase.

The approach that is now popular, for example, in Russia, where we block content and then forget about it, no longer works. It is necessary to deal not only with blocking the content, but also with the source of the illegal information, so that such content does not exist in the first place.

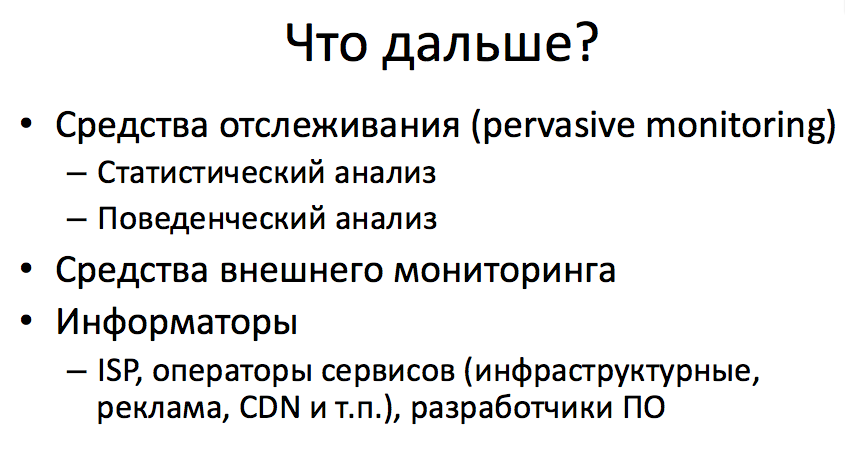

What methods at the same time remain at the disposal of law enforcement agencies?

Although the amount of metadata available for traffic analysis is shrinking, the methods of statistical and behavioral analysis remain: monitoring individual indicators or user behavior in aggregate makes it possible to predict the appearance of new sources of illegal content. Let me give an example: a person with a gambling addiction will keep trying to circumvent blocking in order to get into an online casino. Behavioral and statistical methods are much less sensitive to blocking-bypass techniques, although they require significant resources, which, given the protocol changes described above, will not allow these approaches to be used for pervasive surveillance.

There are also external monitoring tools that will be improved in order to determine what needs to be done to block illegal content, i.e. determine where this content is located and how to block it correctly in order to minimize indirect damage.

Do not forget what the RFC predicted a few years ago, and what most likely awaits the industry in the future: informants, i.e. cooperation between major infrastructure services and law enforcement agencies. These may be telecom operators, large analytics and advertising services such as Google Analytics, Google Adwords and Yandex Metrika, CDN operators, and possibly software developers, who have access to even more metadata. Unfortunately, the data that these services have access to is more than enough both to combat illegal content and to continue pervasive surveillance.

If we talk about the current moment, simple mechanisms for restricting access (including those of a legal nature) are in most cases sufficient to make it harder for users to reach illegal content. It makes no sense to violate the Pareto principle that Artem spoke about. Blocking everything is an unrealistic task.

In cases where simple access restriction mechanisms do not work, operational work will be much more efficient, provided, of course, that it is given the necessary tools, which we discussed above. It will be much more effective than vain attempts to block information and to organize a system of total filtering on the network.

It should also be borne in mind that crime will increasingly use the network to coordinate illegal actions, and most of them will not be directly connected with conditionally publicly available illegal content, against which the blocking system is designed. So the conclusion from the foregoing is simple - an approach where locks are the only way to combat illegal content is futile. You should not invest billions in useless infrastructure, but rather focus on developing solutions that will help law enforcement agencies work efficiently in the world of modern technologies.

Administrative blocking issues, Yuri Kargapolov, UANIC

Video report

What I wanted to say, without slides.

The fact is that I came from Ukraine. We have started implementing a blocking strategy in the country. We started implementing it on the basis of two presidential decrees, that is, we have no legislative basis in the sense of an existing law. Moreover, we have an interesting provision in the law on telecommunications, which says that the operator is not responsible for the content of the information transmitted through its networks.

Meanwhile, those who do not comply are punished, and here is a glaring example: Yandex was blocked in our country, and we were told that all Yandex-related services had to be blocked. Two days later Yandex moved to Cloudflare, and some clever comrades null-routed all of Cloudflare and announced that to the world via BGP. The world accepted it without checking and propagated it further.

When we realized what had happened, we started rolling back, but it turned out that this Ukrainian uplink had been disconnected by everyone, and for a third day we sat with a large number of channels down.

I would like to say one more thing. Who worked with IP telephony in the 90s? Do you remember the situation: there is one subscriber, there is a second subscriber, they call each other, something gets through and something does not, because not all routers had the right software and codecs, and this zoo of everything imaginable did not work? What will happen now is an artificial repetition of that situation with IP telephony. It is not a direct analogy, but it does take place, and we should realize the point we are at.

If we understand where we are, then the next step is to create a working group that could discuss the issue properly, without haste and professionally, and develop approaches that will save us from the rakes we have already stepped on repeatedly.

When we prepared this panel discussion and rehearsed it, bringing in other experts who listened to us, we came to the conclusion that we simply had to finish with a proposal to create a working group. With RIPE, ICANN, ISOC: in general, a working group that would start to discuss these issues carefully.

Therefore, we also appeal to the leadership of the relevant organizations to keep this in mind: we will be coming to them.

Thank you.

Source: https://habr.com/ru/post/342846/
