How we built a software-defined data center in a desk drawer

One day I woke up early and thought: why not build a data center? My own, on Intel NUC mini-PCs, which half of our Intel technology center runs on.

Our office is on the way back from the canteen, and someone let slip what I was up to. So colleagues started dropping by for a laugh: they would look in, ask where I was hiding the data center, and laugh.

On the third day the laughter suddenly stopped, and many began scratching their heads, because what had come out of it was a mobile data center for demos and training that can be brought to a customer in a suitcase. Or mounted on a tank.


The saga of the data center builder follows.

Task

We often need whole data centers to train our server engineers and to trial new software. Server time is very expensive, so we usually have to practice on whatever cheap hardware is at hand. While booking yet another time slot, we suddenly realized that right under our feet (literally) we had plenty of Intel NUCs. Intel gave them to us for the solutions center we opened at the beginning of the year, and they turned out to be a perfect fit. Ordinary consumer computers behave rather badly under constant load and need elaborate cooling. NUCs with an i7 inside, however, are designed for 90 percent load over their entire service life (months and years) and have a very well-thought-out heat sink. I remembered how Apple wanted to build data-center racks by packing four Mac minis into one rack unit, and decided: why not?

All it takes to turn machines into a data center is a switch and good orchestration software, and we always have VMware partner licenses for demos. So I set out to build an SDDC from them: a software-defined data center in which all of the compute power and all virtual machines can serve both as payload and as parts of the infrastructure.

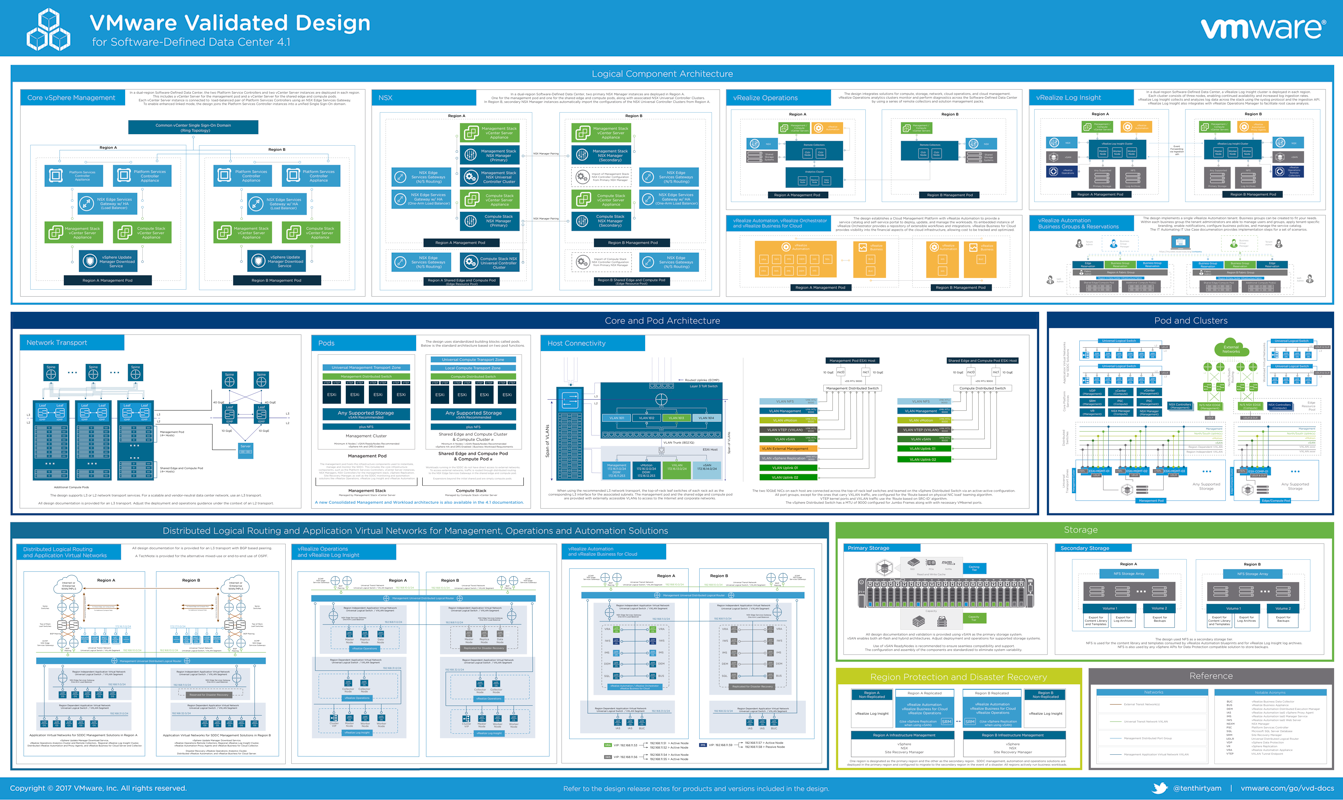

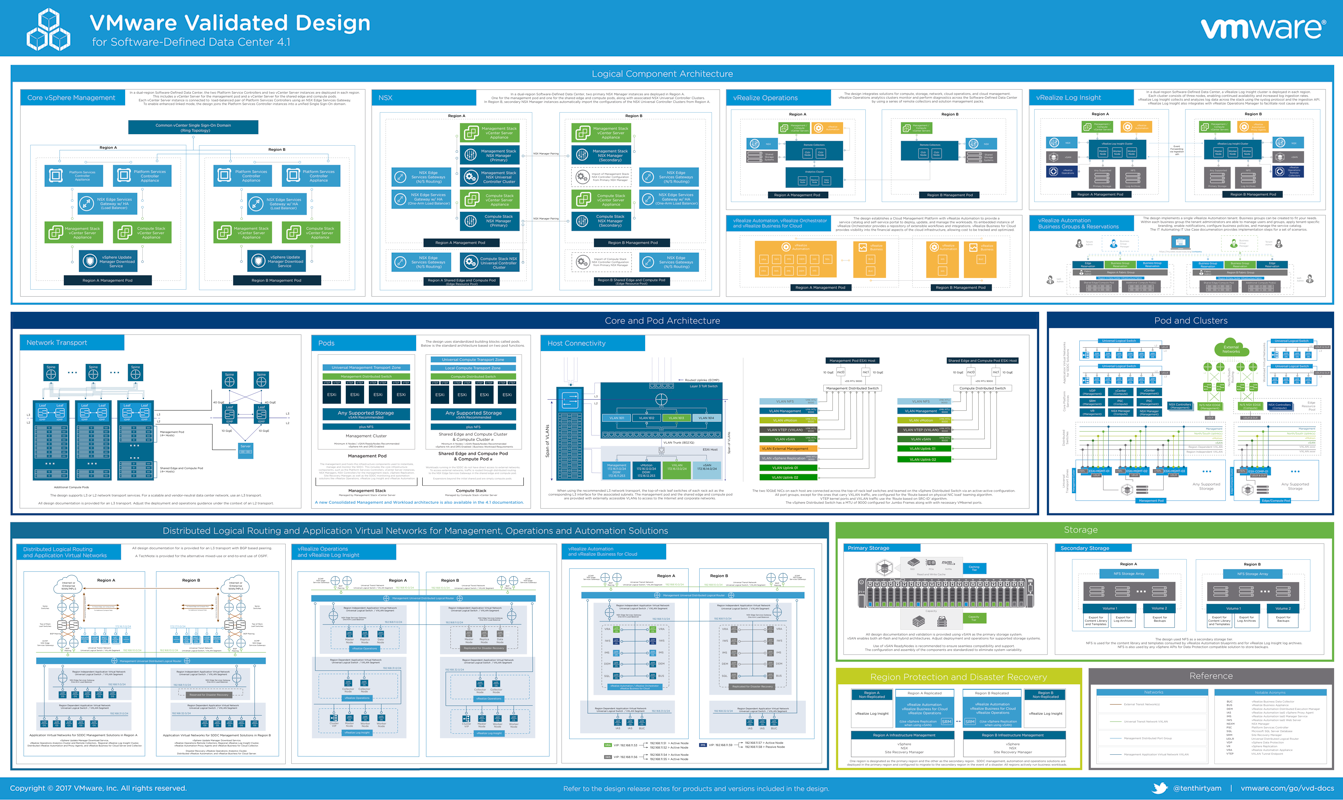

Here’s how it looks in the VMware reference architecture:

Of course, it was not all smooth sailing: at one point the cleaning lady brought my data center down, and I had to bring it back up.

1. Hypervisors

The full software-defined data center stack starts with hypervisors, which let us run virtual machines on the physical ones. These virtual machines are the basic units of the future software-defined data center.

We took a 10-port gigabit switch and five NUCs, and connected to the switch and the machines from a laptop.

Once everything was connected, we started the installation. ESXi itself went on easily. But VMware never expected it to be installed on hardware this wild, so the NUC's network card dropped out of the supported hardware list (if it was ever on it). In our data center that is critical, because here the computers are an add-on to the network card, not the other way around.

We found the right driver in an older version of the distribution and added it to the new ESXi 6.5 Update 1. After reinstalling, the network came up. For the same reason, a missing driver, we could not get the SD card reader working, so we had to boot from external USB flash drives.

2. Management

That completed the basic setup. Next we deployed vCenter, the management software that joins our NUCs into a highly available, dynamically balanced cluster and lets you manage its resources. We put it on one of the boxes, and it was time to deploy hyperconverged infrastructure in all its glory.
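
A rough sketch of what "highly available" costs in capacity: vSphere HA admission control holds back enough resources to restart VMs if a host fails. The Python below is a simplified model under loudly stated assumptions (memory only, the largest hosts are the ones that fail, no per-VM overhead), not vSphere's actual algorithm. The host names are hypothetical; the 32 GB per host comes from the stand configuration (2 × 16 GB).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str       # hypothetical host name
    memory_gb: int


def failover_reserve_gb(hosts, failures_to_tolerate=1):
    """Memory held back so the largest `failures_to_tolerate` hosts can fail.

    Simplified assumption: memory only, no CPU or per-VM overhead.
    """
    sizes = sorted((h.memory_gb for h in hosts), reverse=True)
    return sum(sizes[:failures_to_tolerate])


def admits(hosts, reserved_gb, new_vm_gb, failures_to_tolerate=1):
    """Would a VM with this memory reservation still leave failover room?"""
    total = sum(h.memory_gb for h in hosts)
    usable = total - failover_reserve_gb(hosts, failures_to_tolerate)
    return reserved_gb + new_vm_gb <= usable


# Five NUCs with 2 x 16 GB each, as in the stand described in the text.
cluster = [Host(f"nuc{i}", 32) for i in range(1, 6)]
total = sum(h.memory_gb for h in cluster)
reserve = failover_reserve_gb(cluster)
print(f"{reserve} of {total} GB ({100 * reserve / total:.0f}%) held for failover")
print(admits(cluster, reserved_gb=120, new_vm_gb=8))   # 128 <= 128 GB: admitted
print(admits(cluster, reserved_gb=120, new_vm_gb=16))  # 136 > 128 GB: refused
```

With five identical hosts, tolerating one failure means keeping a fifth of the cluster (20%) in reserve.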

3. Software-defined storage system (SDS)

At this stage we have virtual machines on the virtualization servers, but no shared storage. We need to pool the servers' disks (or attach external disk shelves) and make sure no data is lost when any one of the servers is switched off. In other words, we need software-defined storage, because a separate all-flash array costing 20-50 million obviously would not fit in the drawer.

In the VMware stack, software-defined storage means vSAN. It configured smoothly, “next-next-next-done” style, and even correctly picked the NVMe drives for the cache tier (yes, believe it or not, we had them). The switch configuration, however, caused problems.

We knew from the start that the switch would limit us to one gigabit, while the recommended vSAN configuration calls for 10 Gbit, or better still two 10 Gbit links: vSAN needs to exchange data between the nodes very quickly. vSAN also benefits from jumbo frames, that is, a large MTU of 9000 bytes: the larger the frame, the lower the overhead and the faster it runs. At first our modest 10-port gigabit switch stubbornly refused to apply the MTU settings. A few cups of coffee later it gave in, and vSAN even surprised us pleasantly: it ran quite fast despite a single gigabit interface in the back end.
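
The overhead argument can be made concrete with a little arithmetic. This sketch assumes standard Ethernet framing (14-byte header, 4-byte FCS, 8-byte preamble, 12-byte inter-frame gap) and plain IPv4 and TCP headers without options. It models header overhead only, not vSAN's own protocol. Under these assumptions the on-wire efficiency gain from MTU 9000 is modest (about 4 percentage points), while the number of frames per gigabyte drops roughly sixfold, which is where per-packet processing savings come from.

```python
# Assumed per-frame costs on the wire for standard Ethernet, in bytes.
ETH_HEADER = 14      # destination MAC, source MAC, EtherType
ETH_FCS = 4          # frame check sequence
PREAMBLE_SFD = 8     # preamble + start-of-frame delimiter
INTERFRAME_GAP = 12  # minimum idle time between frames
WIRE_OVERHEAD = ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTERFRAME_GAP

# Assumed IPv4 and TCP headers without options; both sit inside the MTU.
IP_TCP_HEADERS = 20 + 20


def tcp_payload_per_frame(mtu: int) -> int:
    """Application bytes carried by one full-size frame."""
    return mtu - IP_TCP_HEADERS


def payload_efficiency(mtu: int) -> float:
    """Share of link time spent carrying TCP payload with full-size frames."""
    return tcp_payload_per_frame(mtu) / (mtu + WIRE_OVERHEAD)


def frames_needed(num_bytes: int, mtu: int) -> int:
    """Full-size frames needed to move num_bytes of payload (integer ceiling)."""
    return -(-num_bytes // tcp_payload_per_frame(mtu))


def goodput_mb_per_s(link_bits_per_s: float, mtu: int) -> float:
    """Best-case payload throughput in megabytes per second."""
    return link_bits_per_s * payload_efficiency(mtu) / 8 / 1e6


for mtu in (1500, 9000):
    print(f"MTU {mtu}: {payload_efficiency(mtu):.2%} efficiency, "
          f"{frames_needed(2**30, mtu)} frames per GiB, "
          f"~{goodput_mb_per_s(1e9, mtu):.1f} MB/s on 1 GbE")
```

Jumbo frames only help if every hop agrees on the MTU. On ESXi the usual end-to-end check is `vmkping -d -s 8972` to a peer's vSAN address: 8972 is 9000 minus the 20-byte IP and 8-byte ICMP headers, and `-d` forbids fragmentation.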

Next, vSAN needs at least two drives per node, and a NUC has room for exactly two. The configuration: a USB flash drive to boot the hypervisor, one SATA 3.0 SSD (Intel SSD DC S3520, 480 GB) and a second, M.2 drive (Intel SSD Pro 6000p, 128 GB) that became the vSAN cache. It all came together easily.
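
A back-of-the-envelope estimate of usable vSAN space for this stand, using the drive sizes from the text: five nodes, each with a 480 GB capacity SSD and a 128 GB NVMe cache drive. The cache tier does not add usable capacity. Two things are assumptions, because the text does not specify them: the storage policy, and keeping 30% of raw space free as slack for rebuilds and rebalancing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    cache_gb: int     # cache tier (NVMe): does not add usable capacity
    capacity_gb: int  # capacity tier (SATA SSD)


# Raw-space multiplier and minimum host count for "failures to tolerate = 1".
POLICIES = {
    "raid1": (2.0, 3),    # mirroring: two replicas plus a witness component
    "raid5": (4 / 3, 4),  # erasure coding 3+1, all-flash clusters only
}


def usable_capacity_gb(nodes, policy="raid1", slack=0.30):
    """Rough usable space. Assumption: `slack` share of raw space stays free."""
    multiplier, min_hosts = POLICIES[policy]
    if len(nodes) < min_hosts:
        raise ValueError(f"{policy} with FTT=1 needs at least {min_hosts} hosts")
    raw = sum(n.capacity_gb for n in nodes)
    return raw * (1 - slack) / multiplier


cluster = [Node(cache_gb=128, capacity_gb=480) for _ in range(5)]
print(sum(n.capacity_gb for n in cluster), "GB raw capacity")
print(round(usable_capacity_gb(cluster, "raid1")), "GB usable with RAID-1")
print(round(usable_capacity_gb(cluster, "raid5")), "GB usable with RAID-5")
```

Five hosts are enough for RAID-5 erasure coding, which needs at least four hosts and an all-flash configuration. This stand qualifies, since both tiers are SSDs.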

If until then the colleagues peeking in had warmly wished me luck and left laughing, now many became genuinely interested. They kept coming back to ask how the patient was doing.

Ahead lay the next level: SDN, a software-defined network. This is where the same virtualization servers (NUCs, in our case) become components of the network infrastructure.

Somewhere around this point the cleaning lady brought my data center down: she simply cut power to the whole bundle and put the NUCs back in their place in the Intel solutions center. I was very worried, because SDS solutions really do not like having all nodes switched off at once, let alone under load, but after power-on everything came up fine. Looking ahead, I will say that at the very end a colleague who needed a power socket did the same thing once more. Again everything came back up fine, this time with the full software stack.

4. Software-defined network (SDN)

We started deploying VMware NSX. It went more easily, though not without effort: a bit of packet analysis showed problems with the proxy in our network (which we used to reach the NUCs).

Unexpectedly, NUCs turn out to be ideal as firewalls, routers, and load balancers. Offices used to press old Pentium II machines into this role, giving an old computer a second life.

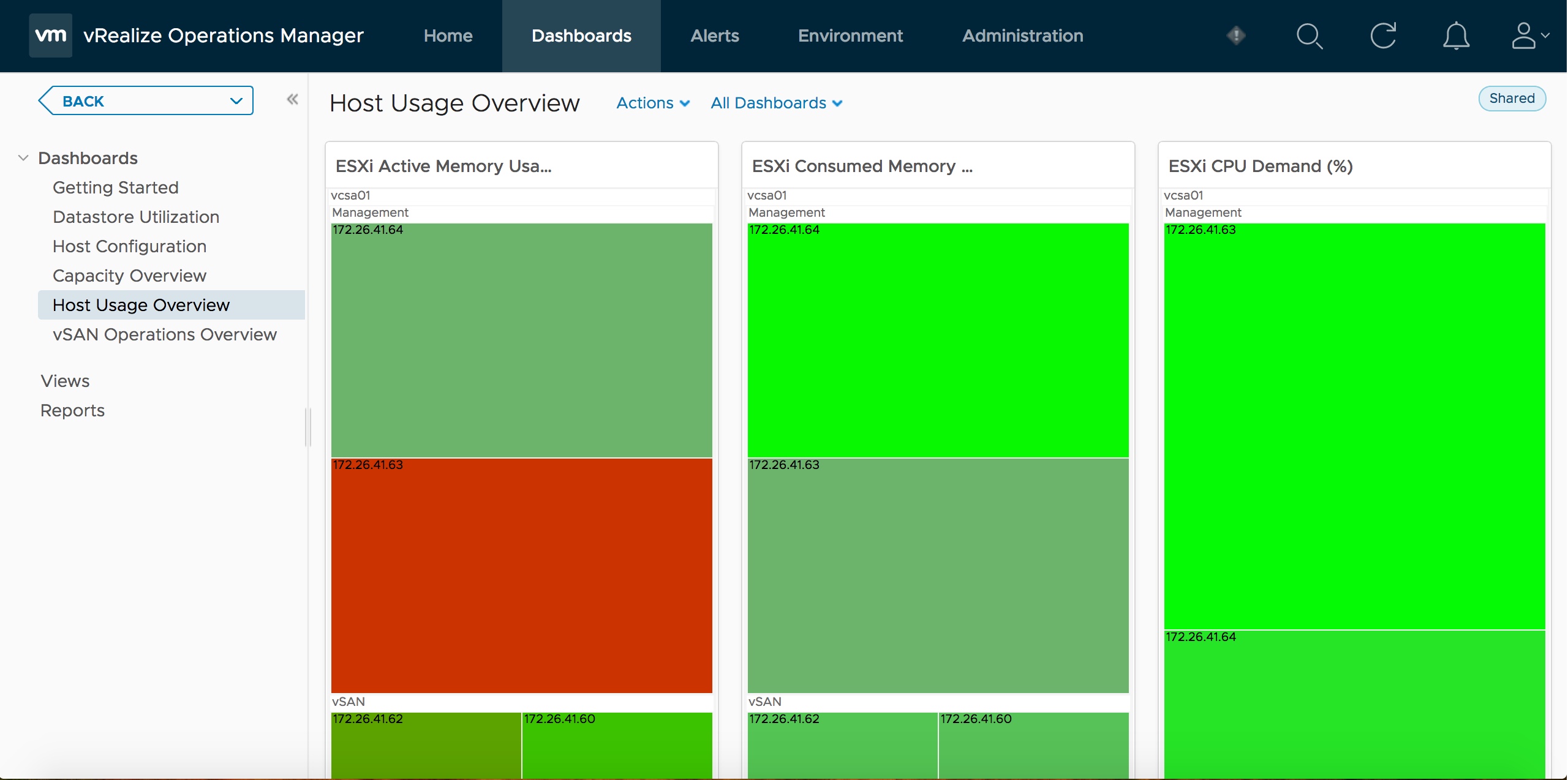

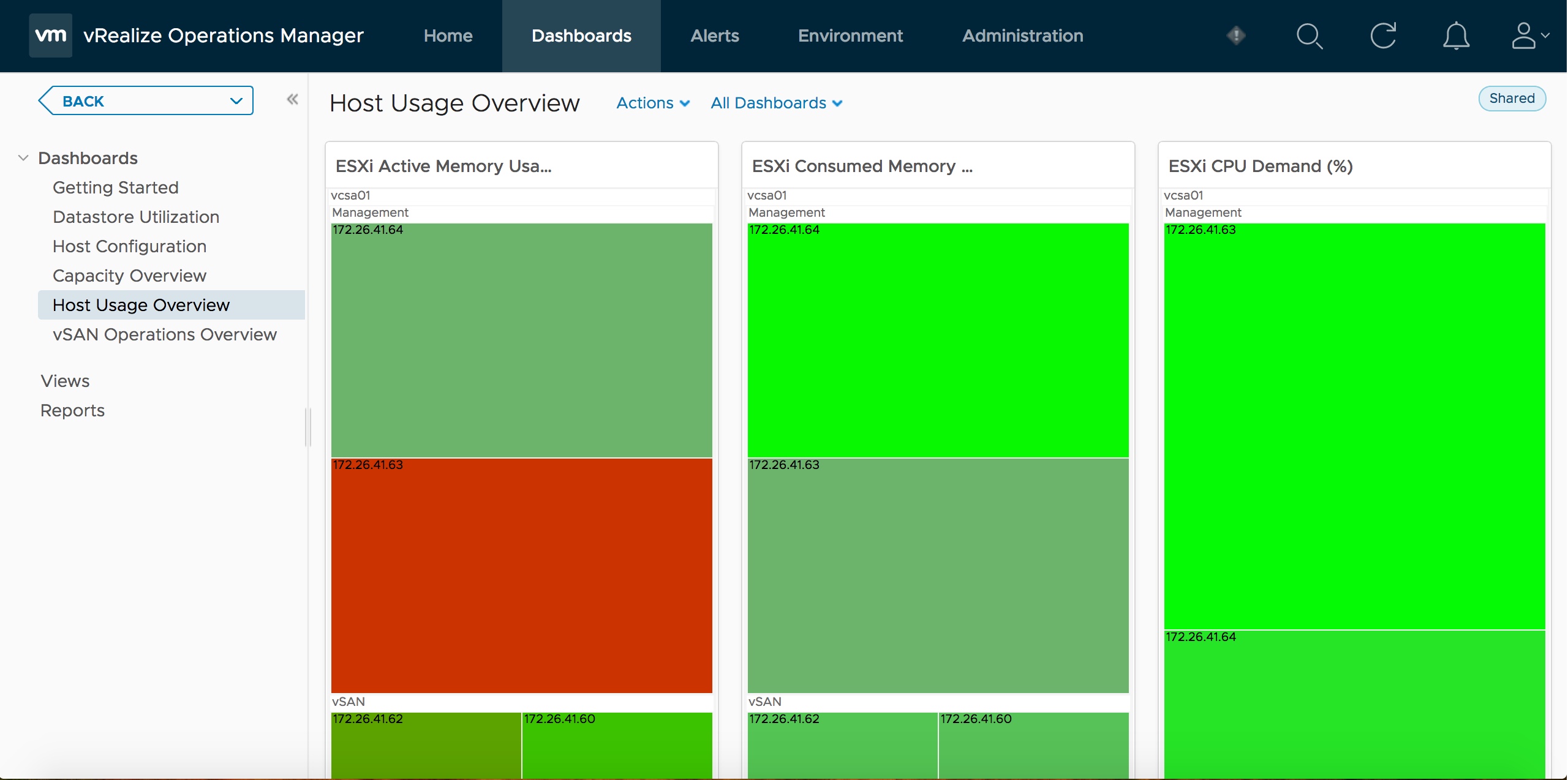
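
NSX's distributed firewall runs inside the hypervisor on each host, which is why the NUCs themselves can act as firewalls. Its rule table is evaluated top to bottom, and the first matching rule wins. This hypothetical sketch illustrates only that first-match logic. The subnets and rule names are made up, it is not NSX's API, and the catch-all default action is simply a parameter here.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    name: str            # hypothetical rule name
    src: str             # source network in CIDR notation
    dst: str             # destination network in CIDR notation
    port: Optional[int]  # destination port; None matches any port
    action: str          # "allow" or "block"


def evaluate(rules, src_ip, dst_ip, port, default_action="block"):
    """Return (action, rule name) for the first matching rule, top to bottom."""
    src = ipaddress.ip_address(src_ip)
    dst = ipaddress.ip_address(dst_ip)
    for rule in rules:
        if (src in ipaddress.ip_network(rule.src)
                and dst in ipaddress.ip_network(rule.dst)
                and (rule.port is None or rule.port == port)):
            return rule.action, rule.name
    return default_action, "default"


# Hypothetical lab segments: admin, web tier, database tier.
RULES = [
    Rule("admin-any", "10.0.0.0/24", "10.0.0.0/16", None, "allow"),
    Rule("web-to-db-mysql", "10.0.10.0/24", "10.0.20.0/24", 3306, "allow"),
    Rule("web-to-db-rest", "10.0.10.0/24", "10.0.20.0/24", None, "block"),
]

print(evaluate(RULES, "10.0.10.5", "10.0.20.7", 3306))  # ('allow', 'web-to-db-mysql')
print(evaluate(RULES, "10.0.10.5", "10.0.20.7", 22))    # ('block', 'web-to-db-rest')
print(evaluate(RULES, "10.0.0.9", "10.0.20.7", 22))     # ('allow', 'admin-any')
```

Rule order matters. If "web-to-db-rest" were moved above "web-to-db-mysql", the broad block rule would match first and shadow the specific allow rule.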

5. Monitoring and reporting

Next comes monitoring: what is a data center without monitoring, right? We started installing vRealize Operations. It, too, is designed, ahem, for other tasks and other hardware, so in its default configuration it devoured half of my data center's resources. I scaled it down (not recommended under normal circumstances). It now collects data on how efficiently our data center is used, which virtual machines have been allocated more resources than they need (none, in our case), and what is happening where. It watches host load, keeps an inventory, and suggests what to change. It also receives hardware data through a CIM provider: fans, temperature, disk status, write latency, and so on. Before a hardware failure, it can migrate services and data off a dying server in the background; this feature is called Proactive HA. In fairness, we could not configure the latter: the hardware is the wrong class, this is not a Dell or HP server.
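
vRealize Operations' rightsizing analysis is far more involved. This hypothetical sketch shows only the basic idea behind "which VMs have been allocated more than they need": compare each allocation with observed peak demand plus headroom, and flag the VMs whose allocation is larger. The VM names, metrics, and the 20% headroom are made-up assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class VmStats:
    name: str            # hypothetical VM name
    vcpus: int
    memory_gb: int
    peak_cpu_pct: float  # peak CPU demand, % of allocated vCPUs
    peak_mem_pct: float  # peak active memory, % of allocated memory


def recommend(vm, headroom=1.2):
    """Allocation covering the observed peak plus headroom (at least 1 vCPU / 1 GB)."""
    cpus = max(1, math.ceil(vm.vcpus * vm.peak_cpu_pct / 100 * headroom))
    mem = max(1, math.ceil(vm.memory_gb * vm.peak_mem_pct / 100 * headroom))
    return cpus, mem


def oversized(vms, headroom=1.2):
    """List VMs whose allocation exceeds the recommendation in CPU or memory."""
    report = []
    for vm in vms:
        cpus, mem = recommend(vm, headroom)
        if cpus < vm.vcpus or mem < vm.memory_gb:
            report.append((vm.name, (vm.vcpus, vm.memory_gb), (cpus, mem)))
    return report


# Hypothetical measurements for two lab VMs.
vms = [
    VmStats("test-app-01", vcpus=4, memory_gb=16, peak_cpu_pct=30, peak_mem_pct=30),
    VmStats("log-collector", vcpus=2, memory_gb=8, peak_cpu_pct=90, peak_mem_pct=85),
]
for name, current, suggested in oversized(vms):
    print(f"{name}: {current} -> {suggested}")  # test-app-01: (4, 16) -> (2, 6)
```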

I should say that I started leaving the data center running under load overnight. The NUCs got warm, and at first this scared me: I came in several times a day to touch them. At home, a heater like that would definitely have made me nervous. But colleagues from Intel said the whole bundle was fine (heh!), and I carried on with the experiments.

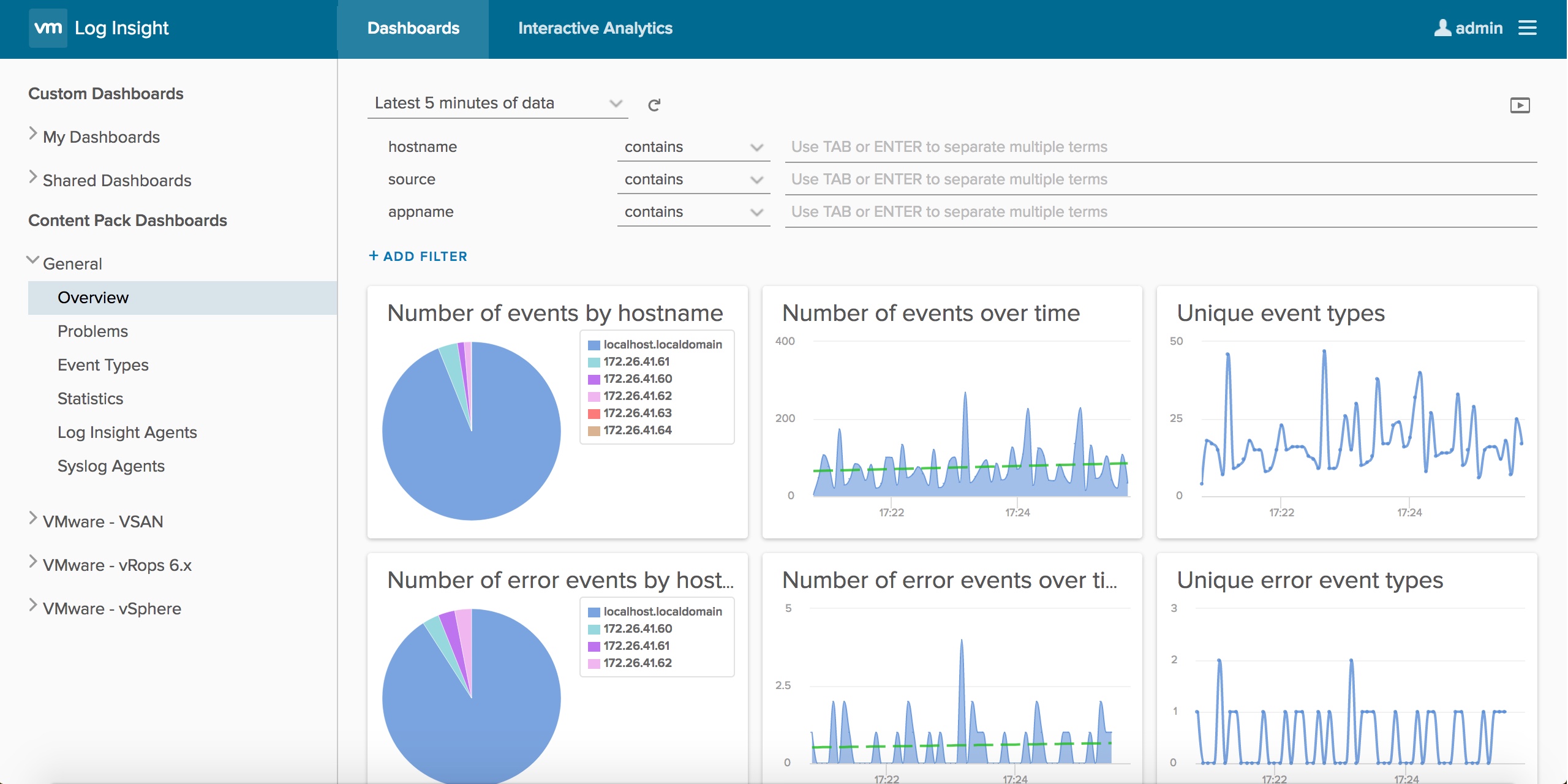

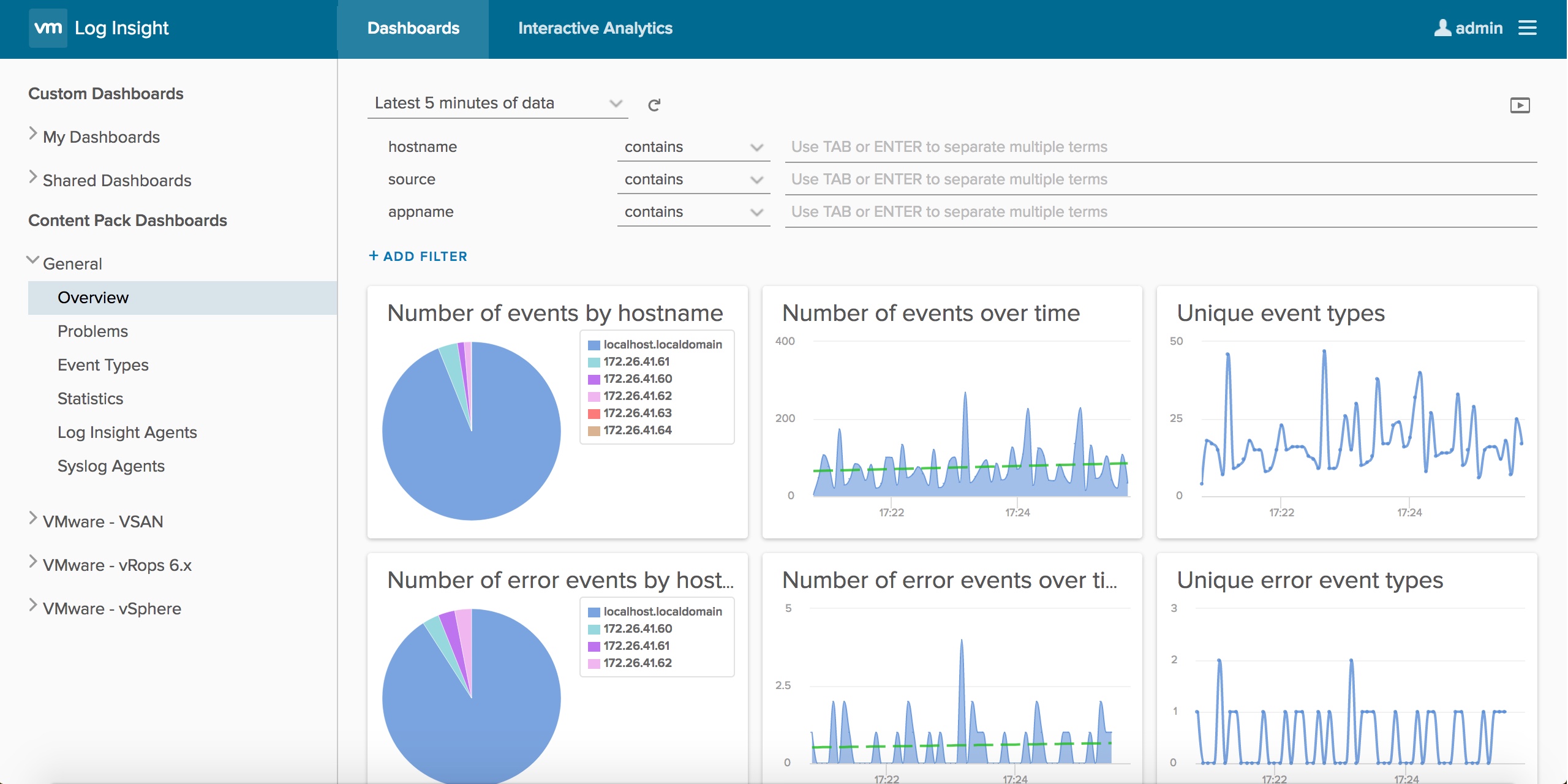

6. Analysis of logs

The next link is a system for log analysis and all kinds of “smart analytics”: vRealize Log Insight. There is not much to add: the product came up and started working right away. We only needed it as a syslog server for all components of our software-defined data center.
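
Using Log Insight as a syslog server means every component just sends syslog messages to its address. As a minimal sketch of what such a sender emits, this uses Python's standard `logging.handlers.SysLogHandler` over UDP. A local socket on a free port stands in for the Log Insight collector, which in the lab would listen on its own address (typically port 514). ESXi hosts are pointed at a collector through their own setting (`Syslog.global.logHost`), not through code like this.

```python
import logging
import logging.handlers
import socket

# Stand-in for the Log Insight collector: a local UDP socket on a free port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)
collector_address = receiver.getsockname()

# SysLogHandler sends over UDP by default when given a (host, port) tuple.
handler = logging.handlers.SysLogHandler(address=collector_address)
handler.setFormatter(logging.Formatter("sddc-demo: %(message)s"))
logger = logging.getLogger("sddc-demo")  # hypothetical application name
logger.setLevel(logging.INFO)
logger.addHandler(handler)

logger.info("vsan: resync finished")

data, _ = receiver.recvfrom(4096)
print(data)  # b'<14>sddc-demo: vsan: resync finished\x00'

logger.removeHandler(handler)
handler.close()
receiver.close()
```

The `<14>` prefix is the syslog priority: facility `user` (1) times 8 plus severity `info` (6).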

7. Self-service portal with GUI

The next step is vRealize Automation. All I need to say about it is that it, too, grabbed a pile of resources. It deployed fine, but loaded our five-NUC data center to 90%. I trimmed it down a bit as well and deployed the basic portal on it.
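
Behind a self-service portal sits some bookkeeping. A user picks a catalog item, and the request is approved only if it fits into the capacity set aside for users. This is a hypothetical sketch loosely inspired by that idea, not vRealize Automation's data model or API. The catalog item names are invented. The 16 GB limit assumes, purely for illustration, that the 90% load applies to the cluster's 160 GB of RAM (5 × 2 × 16 GB).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CatalogItem:
    name: str       # hypothetical catalog item
    vcpus: int
    memory_gb: int


@dataclass
class Reservation:
    """Share of cluster memory that portal users may consume."""
    memory_limit_gb: int
    allocated_gb: int = 0
    deployments: list = field(default_factory=list)

    def request(self, user: str, item: CatalogItem) -> bool:
        """Approve and record the request only if it fits in what is left."""
        if self.allocated_gb + item.memory_gb > self.memory_limit_gb:
            return False
        self.allocated_gb += item.memory_gb
        self.deployments.append((user, item.name))
        return True


SMALL = CatalogItem("linux-small", vcpus=2, memory_gb=4)
MEDIUM = CatalogItem("windows-medium", vcpus=4, memory_gb=8)

# Assumption: 160 GB in the cluster, 90% taken by the stack, 16 GB left for users.
portal = Reservation(memory_limit_gb=16)
print(portal.request("alice", MEDIUM))  # True  (8 of 16 GB used)
print(portal.request("bob", MEDIUM))    # True  (16 of 16 GB used)
print(portal.request("carol", SMALL))   # False (would need 20 GB)
```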

Done!

In short, it worked. It is now a training stand, a demo unit, and a source of endless jokes (“Where's the data center?” “In your pocket!”, “Why no backup?” “It would eat 100% of the resources!”, “When are we joining the grid?”). You won't take a half-rack to a customer, but this you can bring along easily.

Let me remind you that NUCs also have good built-in vibration protection (unlike regular servers) and are generally very well built, so they will survive:

By the way, I did set up backup in the end, and it works fine.

Why does this matter? In practice, with the same software set (swapping a couple of components for cheaper licenses) and different hardware, you can fit a private data center into a half-rack. That suits companies of around 100 people, such as investment funds, that do not want their data to leave the building. Today this is often solved with expensive hardware-software appliances (PAKs), or with a soundproofed rack right in the office, crammed with servers, switches, and UPSes.

Oh yes! I also earned a great achievement, “Data Center Builder”, and won a beer.

For researchers

If you want to repeat our experiment, below is the configuration of our stand, which has been tested and definitely works.

Five Intel NUC Kit NUC7i7BNH mini-PCs, each with the following components:

- 2 Kingston HyperX Impact 16GB 2133MHz DDR4 CL13 SODIMM RAM modules

- 128GB M.2 SSD Intel SSD Pro 6000p Series for cache

- SATA 3.0 SSD 480 GB Intel SSD DC S3520 Series for storage

- 32GB SanDisk Ultra Fit USB Drive for hypervisor installation

Links

- VMware vCloud Suite SDDC Management Platform. The suite includes the following components: vSphere, vRealize Operations, vRealize Log Insight, vRealize Automation, vRealize Business for Cloud.

- SDS VMware vSAN

- SDN VMware NSX

- Intel NUC Mini PC

- About the software-defined data center

- My email: SSkryl@croc.ru

Source: https://habr.com/ru/post/342820/
