6 lines of deep learning

Hi, Habr! The term “deep learning” has existed since 1986, when Rina Dechter first used it. The technology took off in 2006, after Geoffrey Hinton published his work on efficient pre-training of multilayer neural networks. Today, deep learning goes hand in hand with speech recognition, language understanding and computer vision. Below the cut you will learn how deep learning algorithms can be used in SQL Server. Come on in!

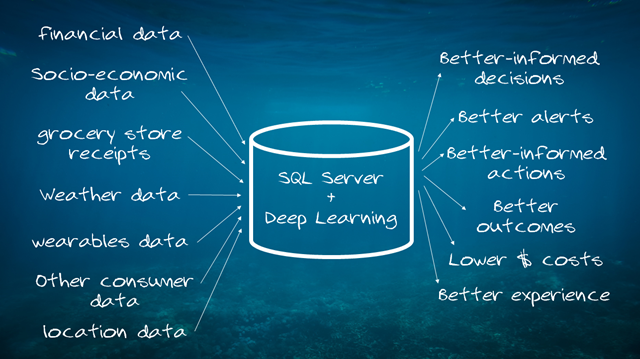

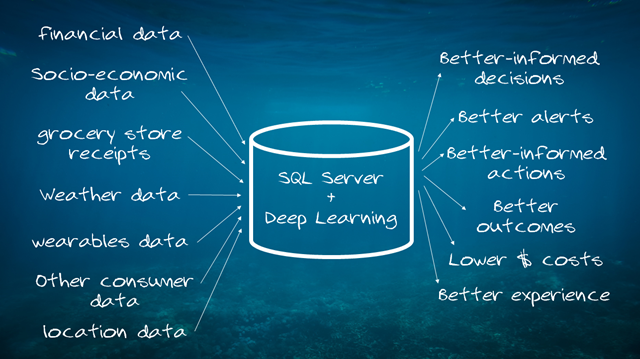


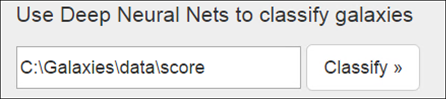

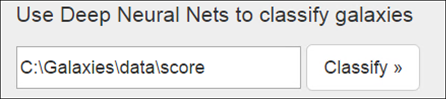


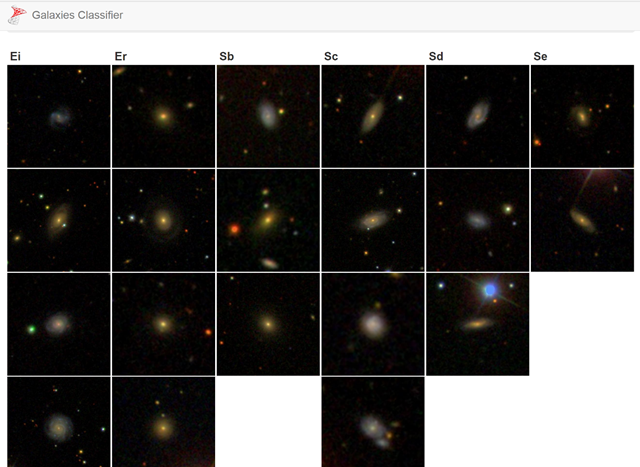

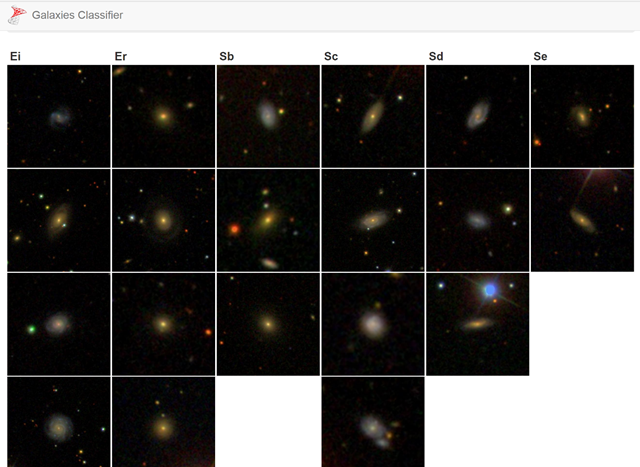


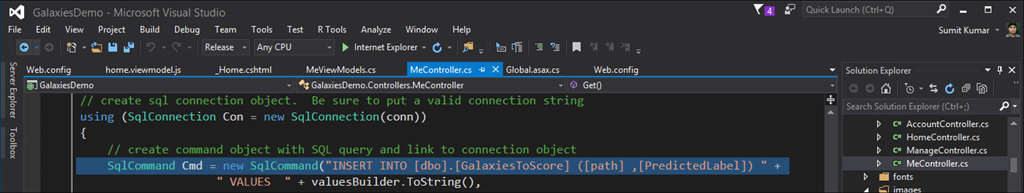

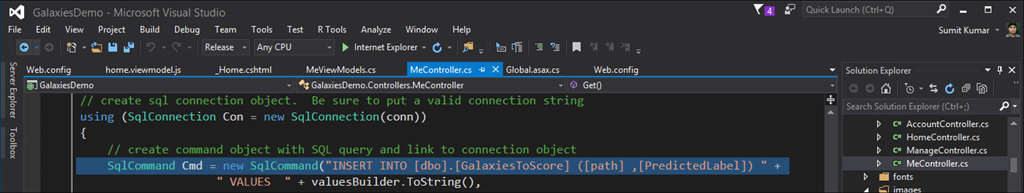


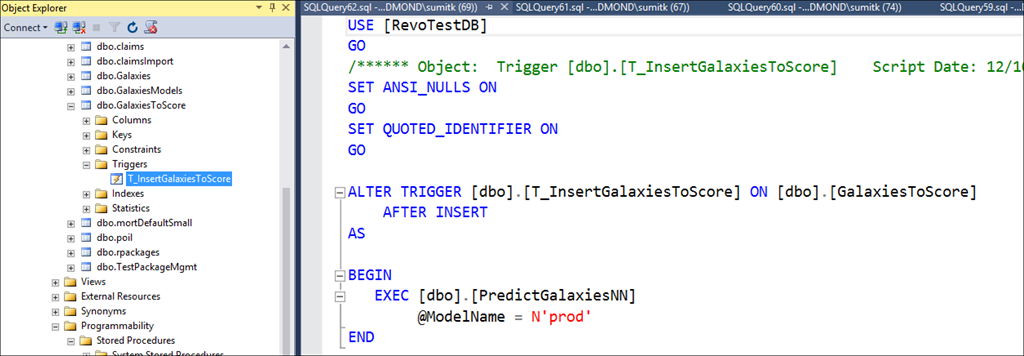

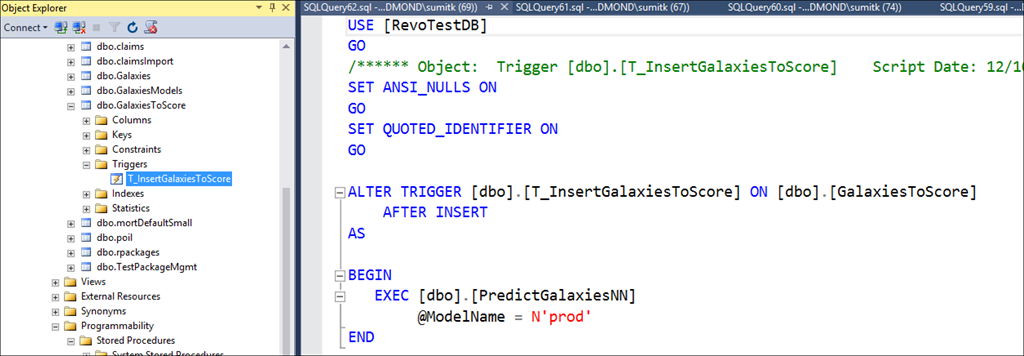


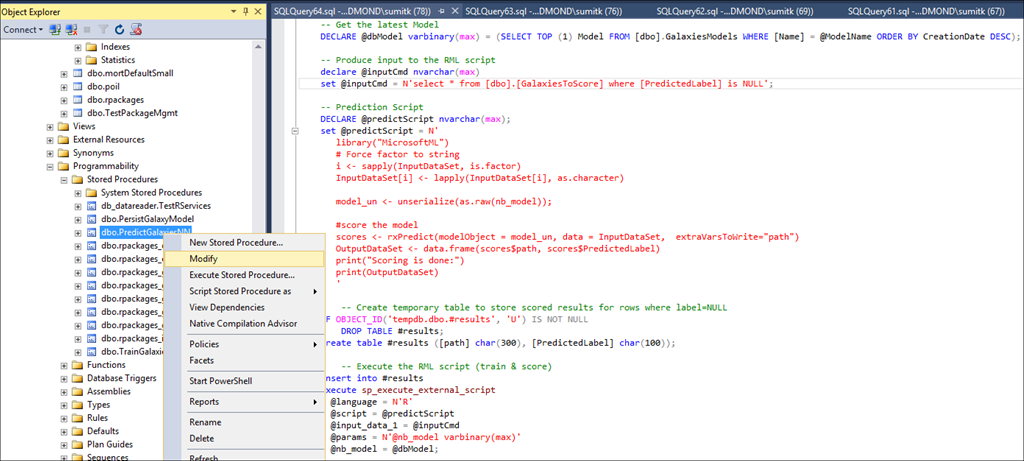

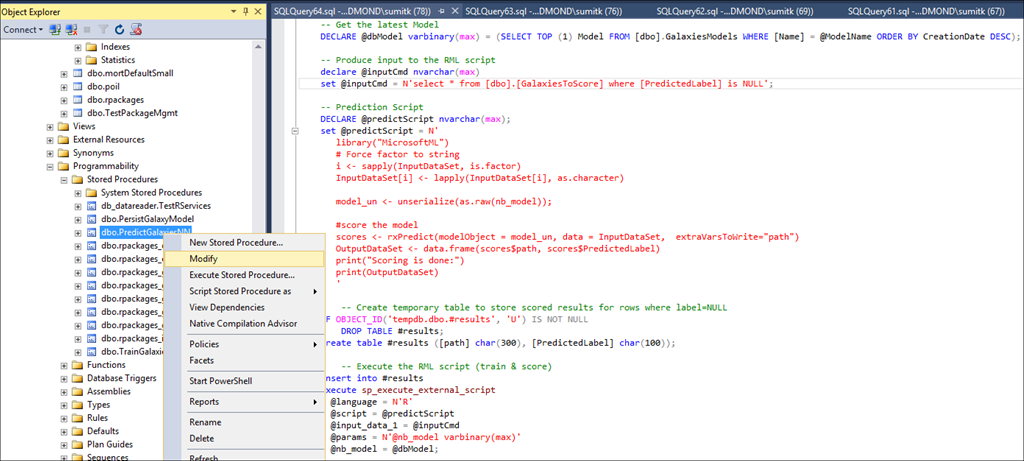


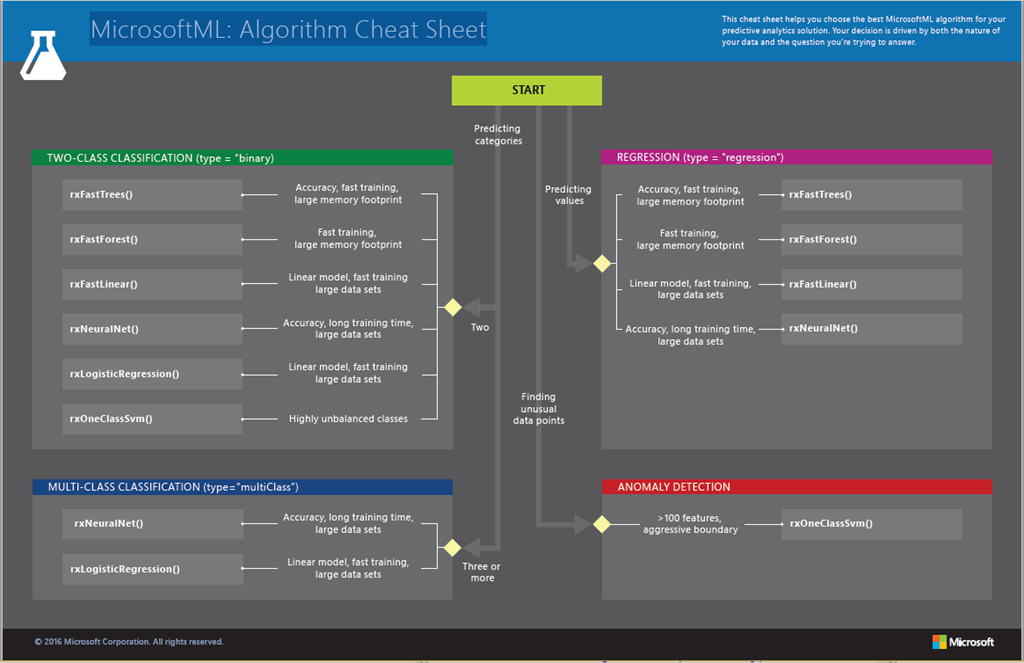

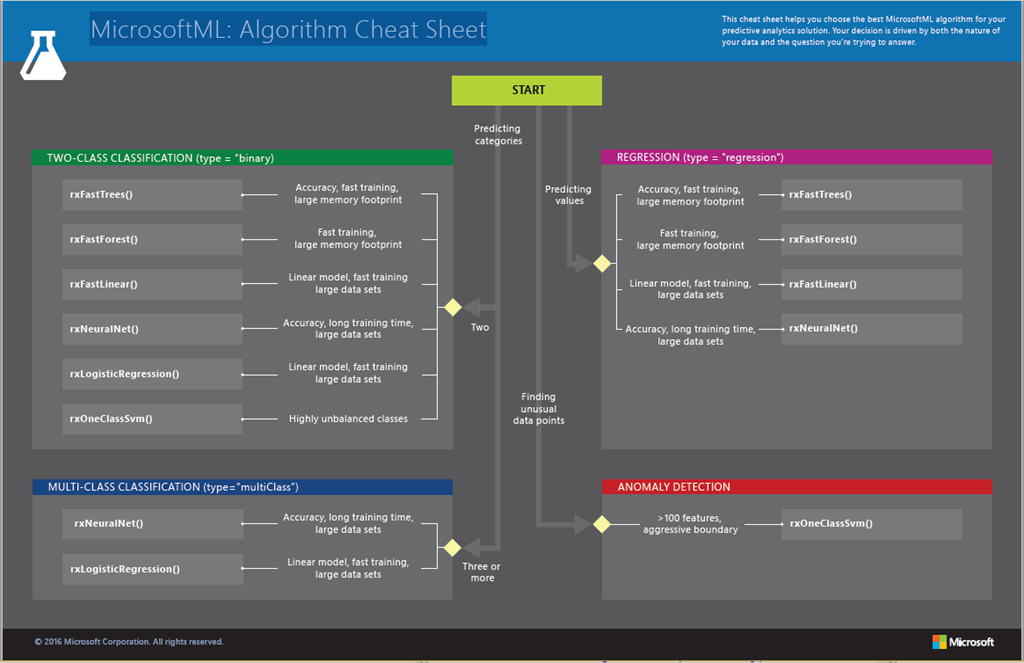


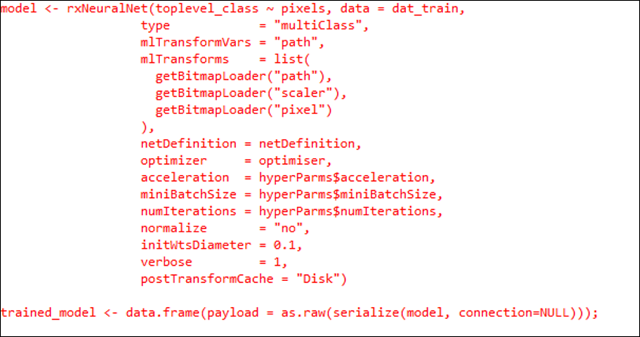

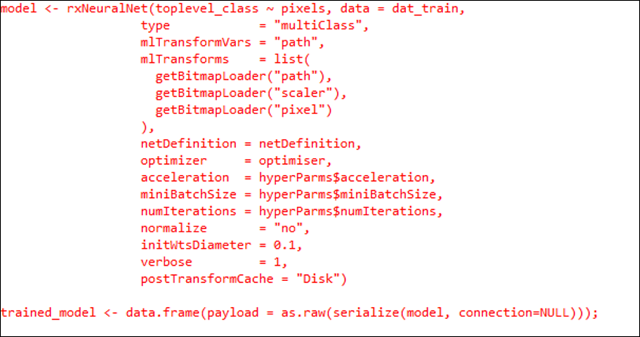


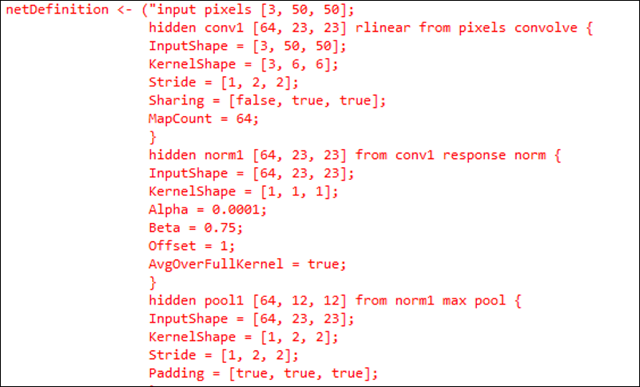

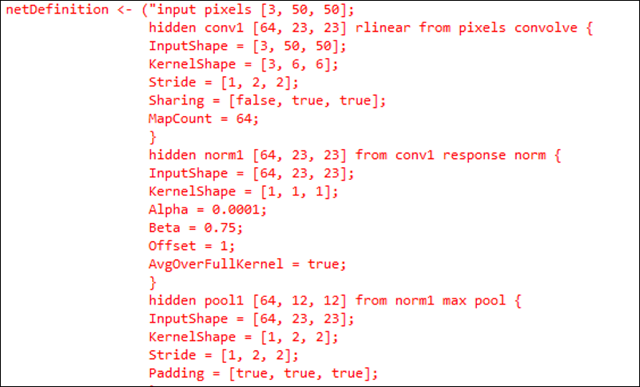


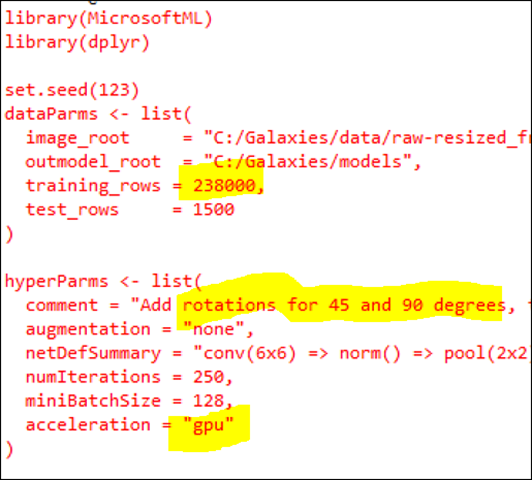


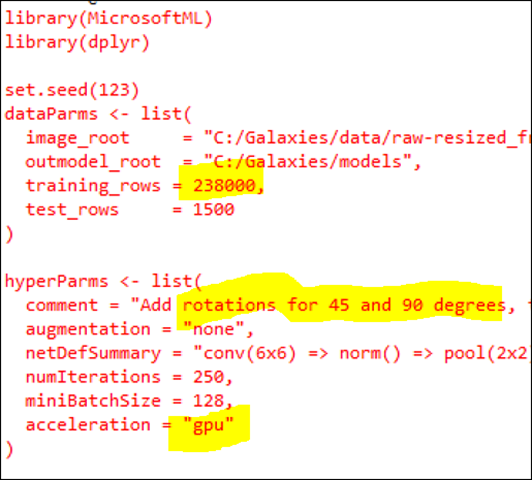


What to add?

Deep learning has become so widespread that the new mantra “deep learning in every application” may well become reality within the coming decade. The venerable SQL Server DBMS has not stood aside. To the question “Can deep learning algorithms be used in SQL Server?” we confidently answer “Yes!” In the public preview of the next SQL Server release, we significantly improved R Services inside SQL Server and added a powerful set of machine learning tools that are used by developers and by Microsoft itself. As a result, database applications built on SQL Server gain speed, performance and scalability, as well as access to new machine learning features and deep neural networks. Most recently, we demonstrated how SQL Server performs over a million R predictions per second when used as a machine learning model management system, and now we invite you to try the R code examples and machine learning templates for SQL Server on GitHub.


In this post, I would like to elaborate on the what, why, and how of deep learning in SQL Server. With these questions answered, it will be easier to see the prospects of data-driven artificial intelligence on a data platform as powerful as SQL Server.

Why add?

Today, every company and every application works with data.

Any application can be called intelligent if it combines a data store with intelligence (be it artificial intelligence, a machine learning system or any other intelligent system). SQL Server lets developers and users bring deep learning into their applications by writing only a few lines of code. In addition, database developers can use it to deploy mission-critical operational systems with embedded deep learning models. Below we have gathered ten reasons why SQL Server needs deep learning capabilities.

Ten reasons why SQL Server needs deep learning

- By using machine intelligence together with a data store (for example, SQL Server), you get security, compliance, privacy, encryption, core data management services, availability groups, advanced business intelligence tools, in-memory data processing, virtualization, geospatial, temporal and graph capabilities, and other great features.

- You can work in "real time" or in "batch" mode, by analogy with online transaction processing (OLTP) and online analytical processing (OLAP), but applied to deep learning and machine intelligence.

- To take advantage of deep learning, you do not have to change your SQL Server based applications. In addition, many mobile, IoT, and web applications can use the same deep learning model without duplicating code.

- You can use the functions of machine learning libraries (for example, MicrosoftML) to make data scientists, developers, database administrators and your entire business more productive. This is much faster and more efficient than building the same functionality yourself.

- You can develop predictive solutions and deploy or scale them according to current requirements. In the latest SQL Server service pack, many features previously available only in the Enterprise edition are now supported in other editions of SQL Server, including Standard, Express and Web. This means that you can use deep learning even in the Standard edition of SQL Server at no additional cost.

- You can use disparate external data sources (via PolyBase) to train deep models and to run predictions with them.

- You can simulate different situations and create what-if scenarios inside SQL Server, and then use them with a variety of deep learning models. This way you can get an intelligent solution even with a very limited amount of training data.

- You can put deep learning models into operation quickly and easily using stored procedures and triggers.

- You get all the necessary tooling, monitoring and debugging facilities, as well as the SQL Server ecosystem, applied to machine intelligence. SQL Server can truly become your machine learning management system that tracks the entire life cycle of DNN models along with the data.

- You can generate new data and obtain analytics from stored data without increasing the transactional workload (using the HTAP pattern).

In truth, hardly anyone buys a DBMS just for the sake of managing a database. What matters to buyers is the capabilities it provides. By endowing SQL Server with deep learning capabilities, we can scale AI and machine learning both in the traditional sense (data scaling, throughput, latency) and in terms of productivity (simplified implementation and a flatter learning curve). This brings valuable results in many ways at once: time, user experience, productivity, lower costs and higher profit, new opportunities, business prospects, thought leadership in the industry, and so on.

In practice, deep learning in SQL Server can be used in banking and finance, as well as in healthcare, manufacturing, retail, e-commerce, and Internet of Things (IoT) systems. Using these technologies to detect fraud, predict disease, predict energy consumption or analyze personal information will improve existing industries and applications. It also means that whatever workloads run on SQL Server, be it customer relationship management (CRM), enterprise resource planning (ERP), data warehousing (DW), online transaction processing (OLTP) or others, you can effortlessly use deep learning in them. The point is to use it not in isolation, but in combination with all the kinds of data and analytics that SQL Server is famous for (for example, processing structured, geospatial, graph, external and temporal data, as well as JSON data). All that is left to add is your ideas.

How to add?

Here I will show how to put all this into practice. As an example, let's take an experiment on predicting classes of galaxies from images using Microsoft R and its new MicrosoftML package for machine learning (created by our specialists in algorithm development, data processing and analysis). We will do this in SQL Server with R Services enabled, on a publicly available Azure NC virtual machine. I am going to sort images of galaxies and other space objects into 13 classes based on the classification adopted in astronomy, mostly elliptical and spiral galaxies and their varieties. The shape and other visual characteristics of galaxies change as they evolve. Studying the shapes of galaxies and classifying them helps scientists better understand how the Universe develops. A person looking at these images would have no trouble sorting them into the correct classes. But doing this for 2 trillion known galaxies is hardly possible without machine learning and intelligent technologies such as deep neural networks, so that is what I am going to use. It is easy to imagine that instead of astronomical data we have medical, financial or IoT data, and that we need to make predictions from it.

The application

Imagine a simple web application that loads images of galaxies from a folder and sorts them into classes, spiral and elliptical, and then sorts them again into subclasses within these classes (for example, whether it is a regular spiral galaxy or a galaxy with a handle-like structure).

And it can classify a huge number of images incredibly fast. Sample output:

The first two columns contain elliptical-type galaxies, and the rest contain various spiral galaxies.

So how can a simple application make such a complex classification?

The application's code actually does very little: it only writes the paths of new files to be classified into a database table (the rest of the code handles passing the resulting data around, page layout and so on).

SqlCommand Cmd = new SqlCommand("INSERT INTO [dbo].[GalaxiesToScore] ([path] ,[PredictedLabel]) "

What happens in the database?

The part responsible for prediction and operationalization

Take a look at the table to which the application writes the paths of the image files. It contains a column with the paths to the galaxy images and a column for storing the predicted galaxy classes. As soon as a new row is written to the table, a trigger fires.

In turn, the trigger calls the stored procedure PredictGalaxiesNN, which has an R script embedded in it.

It is here, in a few lines of R code, that the magic happens. The R script accepts the new rows (which have not been scored yet) and a model stored in a table as varbinary(max). A little later, I will come back to how the model got there. Inside the script, the model is deserialized and then, in the next line, used by the familiar scoring function rxPredict to score the new rows and write the final output data.

scores <- rxPredict(modelObject = model_un, data = InputDataSet, extraVarsToWrite="path")

This is a new version of the rxPredict function that understands the machine learning algorithms included in the MicrosoftML package. The next line loads the package that contains the new MicrosoftML machine learning algorithms.

library("MicrosoftML")

In addition to deep neural networks (DNN), the main topic of this post, the package contains five more powerful machine learning algorithms: fast linear, fast trees, fast forest, one-class SVM for anomaly detection, and regularized logistic regression (with support for L1 and L2 regularization). Thus, with just 6–7 lines of R, any application can become intelligent thanks to a model based on a deep neural network. The application only needs to connect to SQL Server. By the way, the sqlrutils package now makes it easy to create a stored procedure from R code.
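The deserialize-and-score step described above can be tried outside SQL Server with base R alone. The sketch below is only an illustration under stated assumptions: a small `lm` model on the built-in `mtcars` data stands in for the MicrosoftML network, a raw vector stands in for the varbinary(max) column, and base `predict` stands in for `rxPredict`. The file names and input values are hypothetical.

```r
# Stand-in for the trained network: a small linear model on a built-in data set.
# (Assumption: the real model is a MicrosoftML object; lm is used here only so
# that the sketch runs with base R.)
model <- lm(mpg ~ wt + hp, data = mtcars)

# serialize() with connection = NULL returns a raw vector, which is the kind of
# value that can be stored in a varbinary(max) column.
model_bin <- serialize(model, connection = NULL)

# Inside the scoring script, the raw bytes are turned back into a model object.
model_un <- unserialize(model_bin)

# New rows that have not been scored yet (hypothetical values).
InputDataSet <- data.frame(path = c("img1.jpg", "img2.jpg"),
                           wt = c(2.5, 3.2),
                           hp = c(110, 150))

# Score the rows and carry the identifying column through to the output,
# similar in spirit to extraVarsToWrite = "path".
scores <- data.frame(path = InputDataSet$path,
                     Score = predict(model_un, newdata = InputDataSet))
print(scores)
```

Keeping the model as bytes in a table is what lets the stored procedure load it next to the data it scores, without a separate model file on disk.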

What about training the model?

Where was the model trained? It was also trained in SQL Server. However, it did not have to be trained in SQL Server: this can also be done on a separate machine with a standalone R Server, running locally or in the cloud. Today, all these new machine learning algorithms are available in R Server for Windows, and support for other platforms is coming soon. It was more convenient for me to do the training inside SQL Server, but I could have done it outside as well. Take a look at the stored procedure with the training code.

Training code

The model is trained in these lines of code:

This is rxNeuralNet, a new function from MicrosoftML for training deep neural networks. The call looks like other R and rx functions: it takes a formula, an input data set and some other parameters. One of the parameters we see here is the line netDefinition = netDefinition. This is where the neural network is defined.
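To give a feel for the shape of such a call, here is a minimal sketch rather than the article's actual training code. It assumes the MicrosoftML package is installed (it ships with Microsoft R Server and SQL Server R Services), uses the built-in `iris` data instead of galaxy images, and relies on the default network instead of a custom `netDefinition`. The variable name `train_formula` and the parameter values are illustrative only.

```r
# Formula: the label on the left, the input features on the right.
train_formula <- Species ~ Sepal.Length + Sepal.Width + Petal.Length + Petal.Width

# The guard keeps the sketch runnable on machines without MicrosoftML.
if (requireNamespace("MicrosoftML", quietly = TRUE)) {
  library("MicrosoftML")

  # Train a multi-class neural network. A custom architecture would be passed
  # as a Net# string through the netDefinition parameter; here the default
  # network is used. The article's run used acceleration = "gpu".
  model <- rxNeuralNet(formula = train_formula,
                       data = iris,
                       type = "multiClass",
                       numIterations = 50,
                       acceleration = "sse")

  # Score the same rows and keep the true label next to the prediction.
  scores <- rxPredict(model, data = iris, extraVarsToWrite = "Species")
  print(head(scores))
} else {
  message("MicrosoftML is not installed; skipping the training sketch.")
}
```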

Network definition

The deep neural network is defined in this part of the code:

Here is the definition of a deep neural network in Net#, a specification language created specifically for this purpose. The neural network contains 1 input layer, 1 output layer and 8 hidden layers. It starts with an input layer of 50 × 50 pixels with image data in RGB format (3 color channels). The first hidden layer is a convolutional layer, where we specify the kernel size (a small part of the image) and how many times the kernel should be mapped onto other kernels (convolved). There are further layers for other convolutions, as well as normalization and pooling layers that help stabilize the network. Finally, the output layer maps the result to one of the 13 classes. With about 50 lines of Net# specification, I defined a complex neural network. The Net# guide is available on MSDN.
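The layer sizes in such a network follow from simple arithmetic. The sketch below works through it in base R, assuming the standard formula for the output size of a convolution. The input size and channel count come from the text above; the kernel size, stride and padding are hypothetical values chosen only for illustration, since the actual Net# definition is not reproduced here.

```r
# Output width (or height) of a convolution along one dimension:
# floor((input + 2 * padding - kernel) / stride) + 1
conv_output_size <- function(input, kernel, stride = 1, padding = 0) {
  stopifnot(kernel <= input + 2 * padding, stride >= 1)
  floor((input + 2 * padding - kernel) / stride) + 1
}

input_side <- 50   # from the article: 50 x 50 pixel images
channels   <- 3    # from the article: RGB

# Number of values in the input layer.
input_values <- input_side * input_side * channels
print(input_values)      # 7500

# Hypothetical 5 x 5 kernel moved one pixel at a time, no padding.
side_after_conv <- conv_output_size(input_side, kernel = 5, stride = 1)
print(side_after_conv)   # 46
```

Each convolutional or pooling layer shrinks the feature map in this way, which is why the spatial size of the layers must be tracked when writing a network definition by hand.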

Training data size / GPU

Here is the R code for training the model:

One more line deserves a comment: training_rows = 238000. The model was trained on 238,000 images obtained from the Sloan Digital Sky Survey database. We then created two variants of each image by rotating it 45 and 90 degrees, which gave about 700,000 training images in total. That is a lot of training data, so how long did it take to process? We trained the model in 4 hours on a fairly modest machine with a 6-core processor and 56 GB of RAM, plus a powerful Nvidia Tesla K80 GPU. This is the new NC-series GPU virtual machine in Azure, available to anyone with an Azure subscription. GPU acceleration was enabled by setting one simple parameter: acceleration = "gpu". Without a GPU, training takes about 10 times longer.
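The figures quoted above can be checked with a few lines of arithmetic. This is a small worked example using only the numbers given in the text; the variable names are illustrative.

```r
original_images  <- 238000   # training_rows from the article
rotated_variants <- 2        # 45 and 90 degree rotations of each image

# Every original image contributes itself plus its rotated variants.
total_images <- original_images * (1 + rotated_variants)
print(total_images)          # 714000, i.e. "about 700,000"

gpu_hours    <- 4            # training time reported with the GPU
cpu_slowdown <- 10           # "about 10 times longer" without a GPU

# Rough estimate of the training time without GPU acceleration.
cpu_hours <- gpu_hours * cpu_slowdown
print(cpu_hours)             # 40
```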

Summary

With just a few lines of R code and the MicrosoftML algorithms, I managed to train a deep neural network on an impressive amount of image data and to operationalize the trained model in SQL Server through R Services, so that any application connected to SQL Server can easily get this kind of intelligence. This is the power of Microsoft R and MicrosoftML combined with SQL Server. And this is only the beginning: we are working on more algorithms so that the power of artificial intelligence and machine learning becomes available to everyone. To choose a suitable machine learning algorithm for your predictive analytics model, you can download the MicrosoftML Algorithm Cheat Sheet.

Source: https://habr.com/ru/post/342788/
